Abstract

Random events make multiobjective programming solutions vulnerable to changes in input data. In many cases statistically quantifiable information on variability of relevant parameters may not be available for decision making. This situation gives rise to the problem of obtaining solutions based on subjective beliefs and a priori risk aversion to random changes. To solve this problem, we propose to replace the traditional weighted goal programming achievement function with a new function that considers the decision maker’s perception of the randomness associated with implementing the solution through the use of a penalty term. This new function also implements the level of a priori risk aversion based around the decision maker’s beliefs and perceptions. The proposed new formulation is illustrated by means of a variant of the mean absolute deviation portfolio selection model. As a result, difficulties imposed by the absence of statistical information about random events can be encompassed by a modification of the achievement function to pragmatically consider subjective beliefs.

1 Introduction

Weighted goal programming (WGP) is one of the major goal programming variants; it allows normalised, unwanted deviations from goals to be traded off directly (Jones and Tamiz 2010). Typically, a linear programming solution algorithm will be used to solve linear WGP models, resulting in an extreme point solution. This solution is, by its nature, vulnerable to small changes in the model parameters that can leave the proposed solution either infeasible or sub-optimal (Ignizio 1999). In many real-life applications random events prevent the planned solution from being implemented exactly, so the WGP deterministic solution should be viewed as a desirable average affected by random variability.

Previous approaches to dealing with random variability of model parameters in WGP usually imply the existence of some statistically quantifiable knowledge by assuming either an uncertainty set or a given probability distribution. Robust goal programming approaches (Kuchta 2004; Ghahtarani and Najafi 2013; Hanks et al. 2017) are usually based on probabilistic guarantees (e.g., a symmetric bounded distribution) for random parameters within well-defined uncertainty sets (Matthews et al. 2018). Stochastic programming relies on the conversion of a stochastic program into a deterministic one by considering the expected value of the objective function and by imposing that restrictions are fulfilled with a minimum probability threshold (Abdelaziz et al. 2007, 2009; Masri 2017). Stochastic multiobjective programming problems are also addressed by interactive procedures based on reference points (Muñoz and Ruiz 2009; Muñoz et al. 2010). Finally, fuzzy goal programming proposals (Díaz-Madroñero et al. 2014; Messaoudi et al. 2017; Jiménez et al. 2018) are based on the concept of fuzzy numbers to express the uncertainty levels due to lack of knowledge of input data.

However, statistical information on variability of relevant parameters is not usually available to the decision maker (DM). This gives rise to the problem of how to obtain a prudent WGP deterministic solution that takes into account the DM’s beliefs and risk aversion relating to the random changes that may occur in the implementation process. In this case, a ‘prudent solution’ is defined as one with which the DM would be satisfied as being more likely to be implementable in practice, although potentially slightly worse with respect to objectives than the deterministic solution without randomness taken into account.

In this paper, we propose a deterministic WGP model whose objective function (or achievement function) is established by the DM from his/her beliefs on the variability of goals in the day-to-day implementation process as well as his/her a priori risk aversion for this variability. It should be pointed out that the proposed model is not stochastic. In fact, the model does not rely on statistically observable data but on subjective values (beliefs, psychological aversion) disclosed by the DM. The main purpose of the paper is to obtain prudent deterministic solutions influenced by these subjective values. To this end, we replace the traditional WGP achievement function by a new function that takes into account the decision maker’s perception of the randomness associated with implementing the solution, by means of a penalty term bounded by a maximum value. This penalty term is ultimately related to the concept of absolute risk aversion introduced by Arrow (1965) and to the risk premium through the Pratt (1964) approximation and a power utility function (Ballestero 1997). In summary, two main contributions can be highlighted:

1. We propose a pragmatic approach to WGP based on a subjective penalty parameter as a proxy for believed variability when statistically quantifiable information is not available.

2. We establish a link between this penalty parameter and risk premium through the concept of Arrow’s absolute risk aversion coefficient.

To illustrate our proposal, we consider a portfolio selection problem where the DM aims to find the percentage of a given budget that is allocated to each one of a set of available assets. More precisely, we reformulate the mean-absolute-deviation portfolio selection model proposed by Konno and Yamazaki (1991) to incorporate subjective beliefs in the objective function. The main implication that can be derived from our proposal and the case study is that the difficulties imposed by the absence of statistical information about random events can be alleviated by the modification of the achievement function within the context of WGP.

The remainder of this paper is organised as follows. In Sect. 2, the state of the art is reviewed. Section 3 deals with the proposed method. Section 4 provides a discussion through some illustrative portfolio selection examples, this being completed with sensitivity analyses and comparisons of results with respect to the classical WGP model. The paper closes with concluding remarks.

2 State of the art

2.1 On goal programming

Various extensions to the original Charnes et al. (1955) goal programming model have been proposed to account for the fact that there may be a level of uncertainty or imprecision around the parameters of the goal programming model in some applications. These have mainly concentrated on uncertainty or imprecision regarding the set of weights associated with the penalisation of the unwanted deviations and of the target values. A model is proposed by Charnes and Collomb (1972) that allows interval targets for goals for which deviations on both sides of the target are unwanted. Another proposal by Gass (1986) suggests the use of the analytic hierarchy process to determine a weight set.

More recently, stochastic goal programming gives a formal methodology for handling randomness when the probabilities associated with the uncertain parameters are known or can be estimated. Recent proposals on stochastic goal programming include generalised models (Aouni and La Torre 2010) and several applications in the fields of portfolio selection, resource allocation, project selection, healthcare management, transportation and marketing (Aouni et al. 2012; Masri 2017). Fuzzy goal programming formulates imprecision in the relevant data by using the concept of fuzzy numbers (Zadeh 1965). Relevant applications can be found in materials requirement planning (Díaz-Madroñero et al. 2014), group decision methods (Bilbao-Terol et al. 2016) and portfolio selection (Messaoudi et al. 2017; Jiménez et al. 2018). Other extensions of goal programming consider the presence of strict priority levels under a lexicographical structure (Choobineh and Mohagheghi 2016) and the use of goal programming as a method to combine alternative perspectives in voting systems (González-Pachón and Romero 2016; González-Pachón et al. 2019).

In summary, various methods have been proposed to deal with the uncertain and sometimes subjective nature of weight determination in goal programming. The above are methods for handling imprecision around specific sets of parameters which can be statistically quantified and hence managed via specific techniques. Thus, we can classify them as formal methods. However, Jones and Tamiz (2010) conclude that the process of setting weights in a goal programming model is either a formal or informal process of interaction with DMs, and Jones (2011) proposes a pragmatic algorithm that explores the weighting space to aid in this process. As a departure from existing approaches, we here propose a method to deal with uncertainty of a more general nature that cannot be statistically quantified; hence, an approach based on the beliefs and risk aversion levels of the DM is proposed.

2.2 On risk aversion

This concept was introduced by Arrow (1965) and Pratt (1964) with the following meaning: (a) it is not a measure of risk, but a psychological parameter to characterise how much the DM fears risk of changes in a result, which is of interest to the DM; (b) it derives from the DM’s utility function concerning that result. In Arrow’s theory the DM’s utility function relies on certain topological assumptions (Debreu 1960). These assumptions are largely unrelated to concepts such as value function, additive value theory, multi-attribute value theory and multi-attribute utility theory (MAUT), which appeared later, from 1970 onwards. To avoid confusion, it should be pointed out that Arrow’s utility function is a departure from value functions and the other concepts cited above, although relationships between topological assumptions and solvability assumptions have been discussed by Krantz et al. (1971).

Approaches to balanced scorecard problems such as those proposed, e.g., by Grigoroudis et al. (2012) and Xu and Yeh (2012) based on additive utility functions and multiattribute decision making models are a departure from the present paper, which relies on Arrow’s utility theory and risk aversion. There is a critical advantage of using Arrow’s utility and risk aversion to underpin GP. Arrow’s theory is a paradigm in economic analysis and therefore, GP based on Arrow’s theory could become a convincing method for economists. Risk aversion is sometimes related to expected utility (see, e.g. Schechter (2007)), but the problem of estimating risk aversion and risk premium without the expected utility assumption is also developed in decision theory (Langlais 2005).

Determining Pratt’s coefficient when the DM utility function is virtually unknown is a critical issue addressed by McCarl and Bessler (1989). There is a wide range of literature on risk aversion (related to utility). These papers deal with a range of managerial and economic applications, e.g. dynamic portfolios and consumption (Bhamra and Uppal 2006; Kihlstrom 2009), cost-effectiveness (Elbasha 2005), one-period models (Cheridito and Summer 2006), pricing and options (Ewald and Yang 2008), water use planning (Bravo and Gonzalez 2009) and consumption over time (Johansson-Stenman 2010), to cite a few examples. Expected value-stochastic goal programming by Ballestero (2001) and socially responsible investment methods by Ballestero et al. (2012) also rely on Arrow-Pratt utility, but in a different way from the model proposed in this paper.

3 Reformulating the objective function from the DM’s beliefs and risk aversion

Let us start with a classical WGP formulation (Charnes and Cooper 1957):

with \(w_j^+ + w_j^- =1\), for all \(j=1,2, \ldots , n\) and \(\sum _{j=1}^n w_j =1\), and subject to the following constraints:

together with the non-negativity conditions \(x_i, d_j^+, d_j^- \ge 0\). Note that if we make \(W_j^+ = w_j w_j^+\) and \(W_j^- = w_j w_j^-\), then objective function (1) becomes

where the sum of weights turns out to be equal to one. In the previous equations the symbols have the following meaning:

- \(x_i\) is the i-th output (decision variable).

- \(a_{ij}\) is the per unit cost of the i-th output for the j-th goal.

- \(b_j\) is the target or aspiration level for the j-th goal.

- \(d_j^+\) and \(d_j^-\) are the j-th positive and negative deviations, respectively, from the j-th target.

- \(w_j\), \(w_j^+\) and \(w_j^-\), or equivalently, \(W_j^+\) and \(W_j^-\), are the preference weights to be attached to the j-th deviation. They satisfy the above standard relationships.

In the previous formulation, we do not use percentage normalisation by means of targets \(b_j\), to avoid the limitation of the model when targets are zero. However, it is important to note that normalisation will be required if goals measured in different units are summed directly.
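As an illustration of the classical formulation, the following minimal sketch solves a small linear WGP instance with SciPy's `linprog`. It assumes the usual textbook form, in which the achievement function is \(\sum_j w_j (w_j^+ d_j^+ + w_j^- d_j^-)\) and each goal constraint reads \(\sum_i a_{ij} x_i + d_j^- - d_j^+ = b_j\). The function name `solve_wgp` and the three-goal data are hypothetical and chosen only for illustration.

```python
import numpy as np
from scipy.optimize import linprog


def solve_wgp(A, b, w, w_plus, w_minus):
    """Solve a linear weighted goal programme (illustrative sketch).

    A[j, i] is the coefficient a_ij of output i in goal j, b[j] is the
    target of goal j, and w, w_plus, w_minus are the preference weights.
    Assumed goal constraints: A @ x + d_minus - d_plus = b.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    w = np.asarray(w, dtype=float)
    n_goals, n_vars = A.shape

    # Variable order: x (n_vars), d_plus (n_goals), d_minus (n_goals).
    cost = np.concatenate([
        np.zeros(n_vars),
        w * np.asarray(w_plus, dtype=float),
        w * np.asarray(w_minus, dtype=float),
    ])
    A_eq = np.hstack([A, -np.eye(n_goals), np.eye(n_goals)])

    # All variables are non-negative.
    bounds = [(0, None)] * (n_vars + 2 * n_goals)
    res = linprog(cost, A_eq=A_eq, b_eq=b, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)

    x = res.x[:n_vars]
    d_plus = res.x[n_vars:n_vars + n_goals]
    d_minus = res.x[n_vars + n_goals:]
    return x, d_plus, d_minus, res.fun


# Hypothetical data: three goals that cannot all be met at once.
A = [[1, 1], [1, 0], [0, 1]]
b = [10, 8, 8]
x, d_plus, d_minus, value = solve_wgp(
    A, b, w=[1 / 3] * 3, w_plus=[0.5] * 3, w_minus=[0.5] * 3
)
print(x, value)
```

Because the three goals conflict, at least one deviation variable is positive at the optimum, and the solver returns an extreme point of the feasible region, which is the kind of solution the Introduction describes as vulnerable to small parameter changes.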

For mathematical convenience (see below) we will use objective function (1) instead of its equivalent form (3). Equation (1) can be viewed as a disutility function D whose arguments are deviations behaving “the more the worse”. Disutility D can be converted into utility U by converting its arguments into “the more the better” variables. There are different procedures to carry out this conversion, one of them being the following:

where \(M_j\) is a sufficiently large number, and \(k_j\) is some normalisation constant, together with \(q_j \ge 0\). Some kind of normalisation is required if goals measured in different units are summed directly. Percentage normalisation is a particular case of Eq. (4) when \(M_j=1\) and \(k_j\) equals the target value for the j-th goal:

Note that percentage normalisation requires non-zero targets \(b_j\). This requirement may be an issue when, e.g., decision-makers aim to minimise cost deviations above a target that is set to zero. In addition, the conversion of disutility to utility requires that deviations are limited to 100% of the target value to avoid negative utility when \((w_j^+ d_j^+ + w_j^- d_j^-)>b_j\). In what follows, we use the general case described in Eq. (4) to overcome these numerical limitations. A further advantage of this choice is its generality, since Eq. (4) can also be used in conjunction with different normalisation schemes. As a result, we transform the minimisation objective function (1) into the following maximisation expression:

Let \((x_{10}, x_{20}, \ldots , x_{i0}, \ldots , x_{m0})\) be the solution vector obtained from the deterministic model encoded in Eqs. (1) and (2). This solution should be implemented in the day-to-day routine of the activity. For example, suppose that \(x_{10} = 45\) units, \(x_{20} = 32\) units, and so on are the quantities of products in an agricultural planning model to be produced and sold on the market weekly, as recommended by the model in Eqs. (1) and (2). Obviously, the farm cannot produce and sell exactly these units every week, as random events will affect the implementation process, giving rise to sales variability for an assumed perishable product. Faced with this implementation process, the farm fears the consequences of these random changes on its utility. Then, achievement \(q_j\) given by Eq. (6) is reduced by a penalty term \(V_j\) as follows:

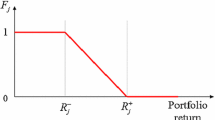

where \(Q_j\) is the achievement after penalty. Penalty term \(V_j\) is defined as the believed variability (%) in the achievement of the j-th goal, due to random events affecting the day-to-day implementation process. This value is assumed by the DM from his/her beliefs. Penalty term \(V_j\) is the subjective expression of some statistically unquantifiable randomness. It is the way that a DM can encompass either uncertainty or randomness when he/she is not able to define it in statistical terms by means of a given probability distribution, an uncertainty set or fuzzy numbers. To overcome this limitation, the DM’s beliefs with respect to the random changes that may occur are represented by a penalty term for each goal. This penalty term is the way to incorporate the consequences of these random changes in the resulting utility. In addition, penalty terms can be determined by means of the common interactive process in multiple criteria decision making in which the penalty term selection and optimisation stages are repeated until a satisfactory solution is obtained (Miettinen et al. 2008). Continuing with the agricultural planning example of this section, the farm can periodically adjust its utility achievement function according to its beliefs regarding weather forecasts, price expectations and many other factors. Finally, \(V_{max}\) is an upper limit (less than 100%) for penalty term \(V_j\). Then, for all j, we have:

In the language of Arrow’s (1965) utility theory, the DM has risk aversion for such variability in the implementation stage. A classical measurement of risk aversion is given by Arrow’s absolute risk aversion (ARA) coefficient \(r_{Aj}\) (Arrow 1965, p. 94), namely:

where U is the DM’s utility function.
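For reference, the standard Arrow-Pratt definition of the absolute risk aversion coefficient, written here in the notation of this section as an assumption about the intended form, is

\[
r_{Aj} = -\frac{\partial ^2 U / \partial Q_j^2}{\partial U / \partial Q_j},
\]

so that a positive value indicates risk aversion with respect to the j-th achievement, and a zero value indicates risk neutrality.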

Remark 1

Using the WGP objective function with achievement (7) involves using a linear utility function. Then, in Eq. (9), the second derivative equals zero. Therefore, from Eq. (9) we have \(r_{Aj} = 0\). This means that the DM is not a risk averter at all, but risk neutral. As most DMs are risk averters, a non-linear utility function with non-zero ARA should be used in our context instead of a linear objective function. By using additive power utility, we have:

where exponent \(\beta _j\) is a parameter characterising the power utility function (\(0< \beta _j <1\)). An exponent \(\beta _j \le 0\) makes no sense, as utility should increase with achievement \(Q_j\) (see, e.g., Kallberg and Ziemba (1983)). Non-linearity in Eq. (10) may impose some limitations due to the computational burden in large-scale problems. The use of evolutionary algorithms (Branke et al. 2008) is a potentially suitable option to overcome this limitation as long as suboptimality in the calculation of solutions is of the same order of magnitude as possible errors in the input data.

One may ask whether any other type of utility function with a non-zero second derivative, such as a logarithmic or exponential function, can be used instead of the power utility function described in Eq. (10). It is shown elsewhere (Ballestero 1997; Ballestero and Romero 1998) that the n-th derivative of usual utility functions such as the exponential, logarithmic and power functions presents the same mathematical structure. By setting the appropriate parameters in this structure, one may derive the same results that we derive next for power utility functions, which we choose for the simplicity of their mathematical notation.

Our problem now is to specify each power utility function by determining the respective \(V_j\) and \(\beta _j\), so that the DM’s utility reflects their risk aversion for random changes in the day-to-day implementation process.

3.1 Specifying power utility in our context

To this end, a relationship between each power utility function and the DM’s risk aversion for randomness in the implementation process is needed. From Eq. (9), Arrow’s relative risk aversion \(R_{Rj}\) (Arrow 1965) is calculated for each power utility function as follows:

Remark 2

From Eq. (11), the lower the \(R_{Rj}\) relative risk aversion the higher the \(\beta _j\) exponent. Minimum \(R_{Rj}=0\) corresponds to \(\beta _j=1\). Highest levels of relative risk aversion \(R_{Rj}\) close to 1 correspond to lowest \(\beta _j\) exponents close to zero.
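The relationship stated in Remark 2 can be checked with a short derivation. As a sketch, assume that the j-th term of the additive power utility has the form \(u_j(Q_j) = Q_j^{\beta _j}\) (any positive multiplicative weight cancels in the ratio) and that relative risk aversion is the standard product of achievement and absolute risk aversion:

\[
u_j' = \beta _j Q_j^{\beta _j - 1}, \qquad
u_j'' = \beta _j (\beta _j - 1) Q_j^{\beta _j - 2}, \qquad
r_{Aj} = -\frac{u_j''}{u_j'} = \frac{1-\beta _j}{Q_j}, \qquad
R_{Rj} = Q_j \, r_{Aj} = 1 - \beta _j .
\]

Under these assumptions, \(\beta _j = 1\) gives \(R_{Rj} = 0\) (risk neutrality), and \(\beta _j\) close to zero gives \(R_{Rj}\) close to one.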

To elicit the relative risk aversion coefficient (11), the concept of risk premium, which is defined as “the maximum amount of money \(\pi\) that one is ready to pay to escape a pure risk” (Gollier 2001) is used. More generally, it is the amount of achievement that a risk averse DM is willing to give up in exchange for zero randomness, namely, in exchange for a risk-free scenario. Risk premium \(\pi _j\) is obtained by the following Pratt (1964) approximation:

Equation (12) is characterised by saying that the risk premium is proportional to the following factors:

(a) A statistical measure of randomness, namely, the variance \(\sigma _j^2\) of random changes affecting the j-th goal in the day-to-day implementation process. This variance is unknown in our context, as no statistical information on random changes is generally available to the DM.

(b) A psychological factor, namely, the risk aversion coefficient, which is established by the DM.

As variance \(\sigma _j^2\) is unknown, Eq. (12) should be converted into an equivalent equation where a proxy for variability such as \(V_j\) (based on beliefs) is used instead of the variance. To this end, Eq. (12) is written as follows:

It is sensible to assume that the DM uses their beliefs on percentage variability (namely, \(V_j\)) instead of the unknown \(\sigma _j/Q_j\) coefficient of variation. Thus, Eq. (13) turns into:

From Eqs. (11) and (14), we get:
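Assuming the standard form of the Pratt approximation and \(R_{Rj} = 1 - \beta _j\) for power utility, the chain of steps in this subsection can be sketched as follows. Dividing the approximation by \(Q_j\) gives

\[
\pi _j \approx \frac{1}{2}\, \sigma _j^2 \, r_{Aj}
\quad \Longrightarrow \quad
\frac{\pi _j}{Q_j} \approx \frac{1}{2} \left( \frac{\sigma _j}{Q_j} \right) ^2 Q_j \, r_{Aj}
= \frac{1}{2} \left( \frac{\sigma _j}{Q_j} \right) ^2 R_{Rj} .
\]

Replacing the unknown coefficient of variation \(\sigma _j / Q_j\) by the believed variability \(V_j\) and solving for the exponent yields, under these assumptions,

\[
\frac{\pi _j}{Q_j} \approx \frac{1}{2}\, V_j^2 \, R_{Rj},
\qquad
\beta _j = 1 - R_{Rj} = 1 - \frac{2 \pi _j}{Q_j V_j^2} .
\]

In this form, \(\beta _j > 0\) requires \(\pi _j / Q_j < V_j^2 / 2\), which acts as an upper limit on the risk premium for a given believed variability.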

3.2 Eliciting risk premium and computing the \(\beta _j\) exponents

In the context of this paper, the risk premium is established by the DM from their beliefs on percentage variability in the j-th goal due to random changes in the day-to-day implementation process of the deterministic WGP solution. A sensible rule is as follows: the greater the believed variability the larger the risk premium. If zero variability is assumed, there is no reason to establish a risk premium. To help the DM elicit the risk premium for each believed variability concerning the j-th goal and compute the respective \(\beta _j\) exponent of the power function, Table 1 is constructed for each goal. Columns of the table have the following meaning and application.

- First column: A wide sample of potential levels of believed variability is displayed. These levels are ordered from 0% to 90%.

- Second column: For each believed variability \(V_j\), the DM is invited to disclose their risk premium in percentage terms with respect to utility \(Q_j\).

- Third column: For each believed variability and the respective risk premium, the \(R_{Rj}\) relative risk aversion coefficient is computed by Eq. (14). For the sake of coherence, the risk premium succession in the second column should lead to a succession of increasing \(R_{Rj}\) in the third column. If not, the DM should be invited to reconsider their risk premium values.

- Fourth column: From the third column, the \(\beta _j\) exponent for each row is computed by Eq. (11).

- Fifth column: For each row, the upper limit for the risk premium (%) is recorded here. If the DM has disclosed a risk premium value greater than (or equal to) the respective upper limit, then the \(\beta _j\) exponent turns out to be less than (or equal to) zero, which is inappropriate (see Remark 2). In this case, the analyst may invite the DM to reconsider their risk premium values. Alternatively, the analyst may take a very low \(\beta _j\) level, such as \(\beta _j=0.01\).
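The row-by-row computation described for the table can be sketched in a few lines of Python. The sketch assumes the relations \(\pi _j/Q_j = \tfrac{1}{2} V_j^2 R_{Rj}\) and \(\beta _j = 1 - R_{Rj}\), which follow from the standard Pratt approximation with \(V_j\) in place of the coefficient of variation. The function name `beta_from_beliefs`, the treatment of zero variability as linear utility, and the numerical inputs are hypothetical choices made for illustration only.

```python
def beta_from_beliefs(variability, premium, floor=0.01):
    """Return (R_R, beta, premium upper limit) for one row of the table.

    variability: believed variability V_j as a fraction (0.05 for 5%).
    premium: disclosed risk premium pi_j / Q_j as a fraction.
    Assumes pi_j / Q_j = 0.5 * V_j**2 * R_Rj and beta_j = 1 - R_Rj.
    """
    if variability <= 0:
        # Assumption: zero believed variability means no risk premium
        # and hence a linear (beta = 1) utility term.
        return 0.0, 1.0, 0.0

    upper_limit = 0.5 * variability ** 2          # fifth column
    rel_risk_aversion = premium / upper_limit     # third column
    beta = 1.0 - rel_risk_aversion                # fourth column

    if beta <= 0:
        # Premium at or above its upper limit: fall back to a very low
        # exponent, one of the two options mentioned in the text.
        beta = floor
    return rel_risk_aversion, beta, upper_limit


# Hypothetical row: 5% believed variability, 0.03% risk premium.
print(beta_from_beliefs(0.05, 0.0003))
```

In practice the analyst might prefer to return to the DM rather than apply the fallback exponent silently; the `floor` argument only mirrors the second option described for the fifth column.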

4 Case study

To illustrate our proposal, we consider a portfolio selection problem where the DM aims to find the percentage of a given budget that is allocated to each one of a set of available assets. The classical bicriteria approach, as proposed by Markowitz (1952), includes the joint maximisation of returns and the minimisation of risk through the well-known mean-variance portfolio selection model. The portfolio optimisation problem is still an ongoing issue and different approaches such as fuzzy goal programming (Messaoudi et al. 2017; Jiménez et al. 2018), stochastic goal programming (Masri 2017), and generalised data envelopment analysis and statistical models (Tsionas 2019) have been recently proposed. In this case study, we reformulate a variant of the classical portfolio selection model proposed by Konno and Yamazaki (1991), which is known as the mean-absolute-deviation (MAD) model to incorporate subjective beliefs as described in the previous section. Instead of variance as a measure of risk, the MAD model uses the absolute deviation of returns with respect to the mean. This fact motivates the selection of the MAD model in our WGP context. A further advantage of the use of the MAD model with respect to the mean-variance model is that the assumption of normality of returns is not required.

In order to analyse the impact of market trends in the performance of the MAD model, we use three different data sets for weekly returns in the Spanish Stock Exchange (IBEX 35) as summarised in Table 2. More precisely, we identify three different trends: 1) bull market (from 2005 to 2008) with an average weekly return of 0.062%; 2) sideways trend (from 2014 to 2019) with an average weekly return of \(-\)0.001%; and 3) bear market (from 2008 to 2012) with an average weekly return of \(-\)0.189%. Interested readers can obtain the data themselves at http://es.finance.yahoo.com.

To solve these portfolio selection optimisation models, we use the open-source library SciPy in Python (version 3.6.4) and Jupyter Notebooks (Kluyver et al. 2016), together with the SLSQP (Sequential Least Squares Programming) method originally implemented by Kraft (1988).

4.1 Performance of the MAD model

The MAD formulation is based on T previous observations of returns from a set of m available assets. A formulation of the MAD model adjusted to the approach described in this paper is as follows:

where \(\rho\) is the desired return for the portfolio, \(\overline{r}_i\) is the average return for the i-th asset, \(r_{it}\) is the return of asset i in time period t, and \(d_{2t}^+\) and \(d_{2t}^-\) are, respectively, the positive and negative deviation of returns from target \(\rho\). Then, \(Q_1\) measures the average return of the portfolio and \(Q_2\) measures the mean absolute deviation of returns from the desired return.
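For readers who want a concrete starting point, the following minimal sketch solves the classical Konno and Yamazaki (1991) MAD model as a linear programme with SciPy's `linprog`. It is not the adjusted formulation of this section: it minimises the mean absolute deviation of portfolio returns from their mean, subject to a minimum average return \(\rho\), a budget constraint and no short selling. The function name `solve_classical_mad` and the synthetic returns are assumptions made only for illustration.

```python
import numpy as np
from scipy.optimize import linprog


def solve_classical_mad(returns, rho):
    """Classical MAD portfolio as a linear programme (illustrative sketch).

    returns: array of shape (T, m) with the return of asset i in period t.
    rho: minimum required average portfolio return.
    """
    returns = np.asarray(returns, dtype=float)
    T, m = returns.shape
    mean_returns = returns.mean(axis=0)
    centred = returns - mean_returns  # r_it minus the mean return of asset i

    # Variables: weights x (m) followed by y (T), with y_t >= |centred_t @ x|.
    cost = np.concatenate([np.zeros(m), np.full(T, 1.0 / T)])
    A_ub = np.vstack([
        np.hstack([centred, -np.eye(T)]),     #  centred_t @ x - y_t <= 0
        np.hstack([-centred, -np.eye(T)]),    # -centred_t @ x - y_t <= 0
        np.concatenate([-mean_returns, np.zeros(T)])[None, :],  # return >= rho
    ])
    b_ub = np.concatenate([np.zeros(2 * T), [-rho]])
    A_eq = np.concatenate([np.ones(m), np.zeros(T)])[None, :]   # budget = 1

    res = linprog(cost, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=[1.0],
                  bounds=[(0, None)] * (m + T), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:m], res.fun, mean_returns


# Synthetic weekly returns for four hypothetical assets (not IBEX 35 data).
rng = np.random.default_rng(0)
synthetic_returns = rng.normal(0.001, 0.02, size=(52, 4))
rho = synthetic_returns.mean(axis=0).mean()
weights, mad, mean_returns = solve_classical_mad(synthetic_returns, rho)
print(weights.round(3), mad)
```

Sweeping `rho` over a grid of desired returns and recording the pair (average return, mean absolute deviation) for each solution would trace a return-deviation curve of the kind discussed in this subsection.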

Using normalised returns and deviations for the three data sets described in Table 2, we first obtain optimal portfolios for 20 different levels of returns between the minimum and maximum returns among all assets and data sets. Then, we compare performances in terms of returns and absolute deviations by solving the classical MAD model encoded between Eqs. (16) and (23) as shown in Fig. 1. In all three cases, we identify a zone of dominated portfolios where low returns are not rewarded with low deviations. In these cases, there is a better alternative (higher return for a similar deviation) available for investors. This behaviour is similar to the well-known bullet-shape in the mean-variance model (Ballestero and Pla-Santamaria 2004).

As expected, the performance of the MAD model in the bear market is poorer than in the bull market and the sideways trend for all portfolios except for one. Interestingly, the best performance of the MAD model does not correspond to the bull market case but to the sideways trend. A reasonable explanation for this behaviour is that bull market trends are expected to be characterised by larger deviations than in the case of a sideways trend.

4.2 Performance of the adjusted MAD model

Since it is based on past data, the MAD model is deterministic. However, investors can express their beliefs and risk aversion by means of penalty term \(V_j\) and exponent \(\beta _j\) for each goal, namely, returns and mean absolute deviations from average returns. For instance, an investor may reason as follows: “Due to economic uncertainty derived from political instability in Spain, my belief on percentage variability with respect to the average returns is 2% and with respect to mean absolute deviations of returns is 5%”. For each believed variability, the investor is invited to disclose their risk premium in percentage terms with respect to achievement \(Q_j\) for each goal j. Let us assume that the investor’s assessment of risk for each believed variability is common to all goals, as summarised in Table 3. As a result, exponent \(\beta _j\) for each goal j can be computed using Eq. (15) for a given range of believed variabilities. Note that the higher the believed variability, the higher the risk premium attached to an account and the lower exponent \(\beta _j\), hence reducing achievement \(Q_j\).

Then, we can reformulate the MAD model according to the subjective beliefs of our investor as follows. From Table 3, we obtain \(V_{max}=15\%\) and exponents \(\beta _1 = 0.90\) and \(\beta _2=0.76\) for a believed variability of \(V_1 = 2\%\) for returns and \(V_2= 5\%\) for mean absolute deviations. As a result of the investor’s beliefs and risk aversion, the utility derived from returns in \(Q_1\) is reduced and the disutility produced by deviations in \(Q_2\) is increased. Then, the adjusted MAD model to be solved is as follows:

It is well known that the optimal solution of a GP model might be Pareto inefficient when DMs are too pessimistic when setting target levels. In our portfolio selection context, both the MAD and the adjusted MAD model are built with the ultimate goal of maximising the difference between returns and the absolute deviations of returns. As a result, target \(\rho\) is only used as a reference to compute absolute deviations of returns. However, we must recall that if Pareto inefficiency is detected, several restoration techniques have been proposed in the literature (Romero 1991; Tamiz and Jones 1996; Jones and Tamiz 2010).

The adjusted MAD model provides a formulation that implements subjective beliefs about uncertainty without increasing complexity. With respect to stochastic goal programming, there is no need to assume a probability distribution for returns. Fuzzy goal programming goes one step further in the search for a better way of implementing beliefs about the level of satisfaction by means of fuzzy goals and fuzzy restrictions. This implementation is achieved using fuzzy numbers and membership functions as mathematical representation of the linguistic description of returns and risk targets imprecision made by the DM. Our approach goes even further in reducing complexity by relying on subjective beliefs implemented in the portfolio selection model by means of a single penalty term for each of the goals under consideration.

Using again our data sets with weekly returns from the Spanish Stock Exchange (IBEX 35) in three different market trends, we derive a new set of optimal portfolios by solving the adjusted MAD model encoded between Eqs. (24) and (31). The combined bicriteria performance in terms of expected return and deviations is shown in Fig. 2. By comparing the portfolios derived from the MAD model in Fig. 1 with those obtained from the adjusted MAD model in Fig. 2, we note that returns are limited by the beliefs and risk aversion of the decision maker. Consequently, she/he has to accept more risk to achieve the same desired level of returns.

On average, a similar level of performance, measured in terms of the features of non-dominated portfolios, is observed for the MAD model and the adjusted MAD model, as shown in Table 4. However, this level of performance is achieved in exchange for diversification, particularly in the sideways market scenario. Here, we measure diversification by means of the complement \((1-H)\) of the Herfindahl concentration index (H), computed as the sum of the squares of the shares allocated to each asset (Woerheide and Persson 1993). The index H ranges in the interval [0, 1]: the higher the value of H, the less diversified the portfolio. Then, \(1-H\) is a measure of diversification.
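The diversification measure can be computed directly from the vector of portfolio shares. The short sketch below uses hypothetical function names and example portfolios; it assumes shares are expressed as fractions that sum to one.

```python
import numpy as np


def herfindahl(shares):
    """Herfindahl concentration index H: sum of squared portfolio shares."""
    shares = np.asarray(shares, dtype=float)
    return float(np.sum(shares ** 2))


def diversification(shares):
    """Diversification measure 1 - H (higher means more diversified)."""
    return 1.0 - herfindahl(shares)


# Hypothetical portfolios: fully concentrated versus equally weighted.
print(diversification([1.0, 0.0, 0.0, 0.0]))
print(diversification([0.25, 0.25, 0.25, 0.25]))
```

For m assets the equally weighted portfolio attains the largest possible value of \(1-H\), namely \(1 - 1/m\), while a single-asset portfolio gives zero.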

However, the internal structure of the non-dominated portfolios from the adjusted MAD model is different from that of the portfolios obtained using the MAD model for all three market trends. To evaluate the impact of the adjusted MAD model on the internal construction of portfolios, we computed: 1) the average sum of absolute changes in the percentages of each asset; and 2) the average number of changes in the percentages of assets observed. For instance, when comparing two portfolios \(p_1=(0.4, 0.3, 0.3)\) and \(p_2=(0.5, 0.2, 0.3)\), the sum of absolute changes is \(|0.4-0.5|+|0.3-0.2|+|0.3-0.3|=0.2\), and the number of changes is 2 since the percentages of the first and the second asset have changed. The results of the comparison of the MAD model and the adjusted MAD model for all non-dominated portfolios and the three market scenarios are summarised in Table 5. More precisely, we find more important changes in the case of the bull market. The average number of changes in assets is slightly lower than two in both the bull and the bear market case, and above two in the sideways trend case. This feature shows the utility of our adjusted model: if beliefs and risk aversion have an impact on the optimal solutions, investors should have a tool to obtain portfolios adjusted to those beliefs. The adjusted GP model described in this paper is an example of such a tool.
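The two comparison measures can be reproduced for the example portfolios \(p_1\) and \(p_2\) with a short sketch. The function name `portfolio_changes` and the tolerance `tol`, used to decide whether a share has changed despite floating-point rounding, are assumptions introduced for illustration.

```python
import numpy as np


def portfolio_changes(p1, p2, tol=1e-9):
    """Return (sum of absolute share changes, number of changed assets)."""
    diff = np.abs(np.asarray(p1, dtype=float) - np.asarray(p2, dtype=float))
    # An asset counts as changed only if its share moves by more than tol.
    return float(diff.sum()), int(np.count_nonzero(diff > tol))


total, count = portfolio_changes((0.4, 0.3, 0.3), (0.5, 0.2, 0.3))
print(round(total, 10), count)
```

Averaging these two quantities over all pairs of corresponding non-dominated portfolios gives summary figures of the kind reported for each market scenario.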

4.3 Sensitivity analysis

In this subsection, we perform a sensitivity analysis to explore the impact that the variation of the two key parameters of the adjusted MAD model has on the structure of non-dominated portfolios. More precisely, we compute average values of non-dominated portfolios for both the minimum and maximum share allocated to each asset and the Herfindahl concentration index (Woerheide and Persson 1993). Along the lines of our case study, we consider three different scenarios represented by the data sets summarised in Table 2, namely, bull market, sideways trend and bear market.
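As a rough illustration of the summary statistics used in this subsection, the sketch below averages the minimum share, the maximum share and the diversification measure \(1-H\) over a set of portfolios. It reflects one possible reading of these averages; the function name, the dictionary keys and the two example portfolios are hypothetical and do not come from the data sets in Table 2.

```python
def summarise_portfolios(portfolios):
    """Average min share, max share and 1 - H over a list of portfolios."""
    n = len(portfolios)
    if n == 0:
        raise ValueError("at least one portfolio is required")
    avg_min = sum(min(p) for p in portfolios) / n
    avg_max = sum(max(p) for p in portfolios) / n
    # Herfindahl index of each portfolio, then averaged.
    avg_h = sum(sum(s * s for s in p) for p in portfolios) / n
    return {
        "avg_min_share": avg_min,
        "avg_max_share": avg_max,
        "avg_diversification": 1.0 - avg_h,
    }


# Hypothetical non-dominated portfolios, for illustration only.
example = [(0.5, 0.3, 0.2), (0.6, 0.4, 0.0)]
summary = summarise_portfolios(example)
print({k: round(v, 4) for k, v in summary.items()})
```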

Recall from Sect. 3 that, for each believed variability \(V_j\), the DM is invited to disclose their risk premium in percentage terms \(100\pi _j/Q_j\) with respect to utility \(Q_j\). Then, from \(V_j\) and \(\pi _j/Q_j\), the \(\beta _j\) exponent is computed through Eq. (15) to build the modified objective function (24). It can reasonably be assumed that the greater the believed variability, the larger the risk premium. As a result, a balance must be found between parameters \(V_j\) and \(\pi _j/Q_j\), since some areas of the parameter space may lead to inconsistent solutions from the DM’s point of view (Jones 2011). As an example, consider the extreme case when the choice of \(V_j\) and \(\pi _j/Q_j\) results in a value of \(\beta _j\) close to zero. Then the power utility function (24) remains almost invariant to changes in achievement \(Q_j\). Similarly, when \(\beta _j\) is close to one, the power utility function (24) becomes linear.
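The two limiting cases can be checked numerically. The sketch below assumes a generic power utility of the form \(Q^{\beta}\) for positive achievements; this is a stand-in chosen for illustration and may differ in detail from function (24). The achievement values and exponents are likewise hypothetical.

```python
def power_utility(q, beta):
    """Generic power utility q**beta for q > 0 (assumed form, not Eq. (24) itself)."""
    if q <= 0:
        raise ValueError("achievement is assumed to be positive")
    return q ** beta


achievements = [0.5, 1.0, 2.0, 4.0]  # hypothetical achievement levels
for beta in (0.01, 0.5, 1.0):
    values = [round(power_utility(q, beta), 3) for q in achievements]
    print(f"beta={beta}: {values}")
```

With an exponent near zero the utilities are all close to one regardless of the achievement level, so the optimiser has little signal to work with, whereas an exponent of one reproduces the achievements themselves, that is, a linear function.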

This sensitivity analysis follows two alternative means of experimentation. In the first one, we study the impact on the non-dominated portfolio structure of variations of the believed variability for returns \(V_1\) and for deviations \(V_2\). In Table 6, we summarise the minimum share, the maximum share and the complement of the Herfindahl concentration index for the three groups of believed variability. Risk premiums are established following the rule: the greater the believed variability, the larger the risk premium. Otherwise, the extreme case mentioned above may lead to an inconsistent solution. By analysing Table 6, we conclude that an increase in variability leads in general to an increase in the minimum share in all three scenarios. No significant changes were observed in the maximum share or in the diversification index.

In the second set of sensitivity results, given in Table 7, we explore the impact on the non-dominated portfolio structure of variations of the risk premium disclosed by the DM. Again, there are different groups of experiments that increase the respective risk premium percentages \(100\pi _1/Q_1\) and \(100\pi _2/Q_2\) while keeping the believed variabilities \(V_1\) and \(V_2\) fixed. On average, we also observe a general increase in the minimum share and no significant changes in the maximum share or the diversification index. However, in this case there is a remarkable change in the minimum share in the bull market case in the third group of experiments. A closer look at the experimental results shows that in this case the number of non-dominated portfolios is lower than in the other groups, which explains this numerical change. This occurrence may be caused by a low \(\beta _2\) value that leads to numerical issues in the power utility function, as mentioned above.

The sensitivity analysis described in this section provides further insights into the expected pros and cons of the adjusted MAD model. In general, we observe that the structure of the optimal portfolios is not remarkably affected by changes in believed variabilities and risk premiums. Even though there are some changes when focusing on an extreme measure such as the minimum share, a more synthetic measure such as the Herfindahl concentration index shows almost no change. As a result, we conclude that the stability of the portfolio structure is not affected by the reasonable changes considered in our experiments. On the other hand, both analysts and DMs must be cautious in the parameter setting process, because extreme values of the \(\beta _j\) exponents may give rise to inconsistencies. In this sense, an interactive process between the analyst and the DM, in which the parameter setting and optimisation stages are repeated until a satisfactory solution is obtained, may be of help.

Summarising, one of the main points of this paper is to show the advantages of the adjusted MAD model when incorporating subjective beliefs to deal with statistically unquantifiable randomness. The classic MAD portfolio selection model deals with uncertainty by considering expected returns and deviations. By contrast, the adjusted MAD model allows decision makers to obtain solutions according to their beliefs by means of a subjective penalty term. The trade-off for the more accurate modelling of the decision maker’s subjective beliefs in the adjusted MAD model is the need for a non-linear objective function, which may prove more computationally challenging to solve. However, the case study in Sect. 4 of this paper has demonstrated that reasonably sized models can be solved without difficulty.

An alternative way to deal with uncertainty in portfolio selection is to consider fuzzy goal targets. Our method deals with uncertainty on a much more fundamental level than simply making the goal target value fuzzy. We replace the traditional weighted goal programming achievement function with a new function that considers the decision maker’s perception of the randomness associated with implementing the solution through the use of a penalty term. Furthermore, a fuzzy goal, by its nature, is quantifiable by means of a fuzzy number, whereas our method deals with unquantifiable randomness. As a result, difficulties imposed by the absence of statistical information about random events can be encompassed by a modification of the achievement function to pragmatically incorporate subjective beliefs.

5 Conclusions

The problem of adapting a weighted goal programming solution to account for the presence of randomness has been addressed in this paper. A pragmatic methodology has been adopted that has assumed that the randomness cannot be quantified statistically. Therefore, the method proposed has relied upon the decision maker’s beliefs and risk aversion for variability as well as his/her preferences in order to produce solutions that take into account both the need to reach the goals as closely as possible and the need to have a solution that is implementable in practice.

The application of this methodology has been illustrated by means of a variant of the mean absolute deviation portfolio selection model in three different contexts for the Spanish Stock Exchange IBEX35: bull market, bear market and sideways trend. The utility of our adjusted model is demonstrated by the finding that the internal structure of optimal portfolios varies when beliefs and risk aversion are integrated in a portfolio selection model. By implementing the adjusted model described in this paper, decision makers now have the possibility to derive optimal solutions according to their beliefs and a priori risk aversion.

The methodology proposed in this paper should be applicable to the wide range of goal programming applications that have some form of statistically unquantifiable randomness affecting their solutions. In addition to the agricultural planning and portfolio selection examples described above, our proposal is particularly interesting in contexts where forecasts are based on beliefs about the near future. An example of this application is the use of beliefs about the economic context to derive cash management policies (Salas-Molina et al. 2018) or to evaluate corporate social responsibility by means of goal programming (Oliveira et al. 2019). In addition, this paper has discussed the linear weighted goal programming case but there is the potential for future research to extend the methodology to other goal programming variants.

Since weight and parameter setting may provoke some difficulties in the application of any multiple objective programming variant, we believe that an interactive process is a suitable way to deal with this topic. As a result, the design of any type of algorithm or interactive process to facilitate the task of setting the parameters of our model is an interesting future line of work.

References

Abdelaziz FB, Aouni B, El Fayedh R (2007) Multi-objective stochastic programming for portfolio selection. Eur J Op Res 177(3):1811–1823

Abdelaziz FB, El Fayedh R, Rao A (2009) A discrete stochastic goal program for portfolio selection: the case of United Arab Emirates equity market. INFOR Inf Syst Op Res 47(1):5–13

Aouni B, La Torre D (2010) A generalized stochastic goal programming model. Appl Math Comput 215(12):4347–4357

Aouni B, Ben Abdelaziz F, La Torre D (2012) The stochastic goal programming model: theory and applications. J Multi-Criteria Decis Anal 19(5–6):185–200

Arrow KJ (1965) Aspects of the theory of risk-bearing. Academic Bookstore, Helsinki

Ballestero E (1997) Utility functions: a compromise programming approach to specification and optimization. J Multi-Criteria Decis Anal 6(1):11–16

Ballestero E (2001) Stochastic goal programming: a mean-variance approach. Eur J Op Res 131(3):476–481

Ballestero E, Pla-Santamaria D (2004) Selecting portfolios for mutual funds. Omega 32(5):385–394

Ballestero E, Romero C (1998) Multiple criteria decision making and its applications to economic problems. Kluwer Academic Publishers, Dordrecht

Ballestero E, Bravo M, Pérez-Gladish B, Arenas-Parra M, Pla-Santamaria D (2012) Socially responsible investment: a multicriteria approach to portfolio selection combining ethical and financial objectives. Eur J Op Res 216(2):487–494

Bhamra HS, Uppal R (2006) The role of risk aversion and intertemporal substitution in dynamic consumption-portfolio choice with recursive utility. J Econ Dyn Control 30(6):967–991

Bilbao-Terol A, Jiménez M, Arenas-Parra M (2016) A group decision making model based on goal programming with fuzzy hierarchy: an application to regional forest planning. Ann Op Res 245(1–2):137–162

Branke J, Deb K, Miettinen K, Slowiński R (2008) Multiobjective optimization: interactive and evolutionary approaches. Springer Science & Business Media, Berlin

Bravo M, Gonzalez I (2009) Applying stochastic goal programming: a case study on water use planning. Eur J Op Res 196(3):1123–1129

Charnes A, Collomb B (1972) Optimal economic stabilization policy: linear goal-programming models. Soc-Econ Plan Sci 6:431–435

Charnes A, Cooper WW (1957) Management models and industrial applications of linear programming. Manag Sci 4(1):38–91

Charnes A, Cooper WW, Ferguson RO (1955) Optimal estimation of executive compensation by linear programming. Manag Sci 1(2):138–151

Cheridito P, Summer C (2006) Utility maximization under increasing risk aversion in one-period models. Finance Stoch 10(1):147–158

Choobineh M, Mohagheghi S (2016) A multi-objective optimization framework for energy and asset management in an industrial microgrid. J Clean Prod 139:1326–1338

Debreu G (1960) Topological methods in cardinal utility theory. In: Mathematical Methods in the Social Sciences. Stanford University Press, Stanford

Díaz-Madroñero M, Mula J, Jiménez M (2014) Fuzzy goal programming for material requirements planning under uncertainty and integrity conditions. Int J Prod Res 52(23):6971–6988

Elbasha EH (2005) Risk aversion and uncertainty in cost-effectiveness analysis: the expected-utility, moment-generating function approach. Health Econ 14(5):457–470

Ewald CO, Yang Z (2008) Utility based pricing and exercising of real options under geometric mean reversion and risk aversion toward idiosyncratic risk. Math Methods Op Res 68(1):97–123

Gass SI (1986) A process for determining priorities and weights for large-scale linear goal programmes. J Op Res Soc 37(8):779–785

Ghahtarani A, Najafi AA (2013) Robust goal programming for multi-objective portfolio selection problem. Econ Model 33:588–592

Gollier C (2001) The economics of risk and time. MIT Press, Cambridge

González-Pachón J, Romero C (2016) Bentham, Marx and Rawls ethical principles: in search for a compromise. Omega 62:47–51

González-Pachón J, Diaz-Balteiro L, Romero C (2019) A multi-criteria approach for assigning weights in voting systems. Soft Comput 23(17):8181–8186

Grigoroudis E, Orfanoudaki E, Zopounidis C (2012) Strategic performance measurement in a healthcare organisation: a multiple criteria approach based on balanced scorecard. Omega 40(1):104–119

Hanks RW, Weir JD, Lunday BJ (2017) Robust goal programming using different robustness echelons via norm-based and ellipsoidal uncertainty sets. Eur J Op Res 262(2):636–646

Ignizio JP (1999) Illusions of optimality. Eng Optim 31(6):749–761

Jiménez M, Bilbao-Terol A, Arenas-Parra M (2018) A model for solving incompatible fuzzy goal programming: an application to portfolio selection. Int Trans Op Res 25(3):887–912

Johansson-Stenman O (2010) Risk aversion and expected utility of consumption over time. Games Econ Behav 68(1):208–219

Jones D (2011) A practical weight sensitivity algorithm for goal and multiple objective programming. Eur J Op Res 213(1):238–245

Jones D, Tamiz M (2010) Practical goal programming. Springer, New York

Kallberg JG, Ziemba WT (1983) Comparison of alternative utility functions in portfolio selection problems. Manag Sci 29(11):1257–1276

Kihlstrom R (2009) Risk aversion and the elasticity of substitution in general dynamic portfolio theory: consistent planning by forward looking, expected utility maximizing investors. J Math Econ 45(9–10):634–663

Kluyver T, Ragan-Kelley B, Pérez F, Granger BE, Bussonnier M, Frederic J, Kelley K, Hamrick JB, Grout J, Corlay S, et al (2016) Jupyter notebooks: a publishing format for reproducible computational workflows. In: ELPUB, pp 87–90

Konno H, Yamazaki H (1991) Mean-absolute deviation portfolio optimization model and its applications to Tokyo stock market. Manag Sci 37(5):519–531

Kraft D (1988) A software package for sequential quadratic programming. Forschungsbericht, Deutsche Forschungs- und Versuchsanstalt für Luft- und Raumfahrt 28

Krantz D, Luce D, Suppes P, Tversky A (1971) Foundations of measurement: geometrical, threshold, and probabilistic representations. Academic Press, New York

Kuchta D (2004) Robust goal programming. Control Cybern 33(3):501–510

Langlais E (2005) Willingness to pay for risk reduction and risk aversion without the expected utility assumption. Theory Decis 59(1):43–50

Markowitz H (1952) Portfolio selection. J Financ 7(1):77–91

Masri H (2017) A multiple stochastic goal programming approach for the agent portfolio selection problem. Ann Op Res 251(1–2):179–192

Matthews LR, Guzman YA, Floudas CA (2018) Generalized robust counterparts for constraints with bounded and unbounded uncertain parameters. Comput Chem Eng 116:451–467

McCarl BA, Bessler DA (1989) Estimating an upper bound on the Pratt risk aversion coefficient when the utility function is unknown. Aust J Agric Econ 33:56

Messaoudi L, Aouni B, Rebai A (2017) Fuzzy chance-constrained goal programming model for multi-attribute financial portfolio selection. Ann Op Res 251(1–2):193–204

Miettinen K, Ruiz F, Wierzbicki AP (2008) Introduction to multiobjective optimization: interactive approaches. In: Multiobjective Optimization. Springer, Berlin, pp 27–57

Muñoz MM, Ruiz F (2009) ISTMO: an interval reference point-based method for stochastic multiobjective programming problems. Eur J Op Res 197(1):25–35

Muñoz MM, Luque M, Ruiz F (2010) INTEREST: a reference-point-based interactive procedure for stochastic multiobjective programming problems. OR Spectr 32(1):195–210

Oliveira R, Zanella A, Camanho AS (2019) The assessment of corporate social responsibility: the construction of an industry ranking and identification of potential for improvement. Eur J Op Res 278(2):498–513

Pratt JW (1964) Risk aversion in the small and in the large. Econometrica 32(1–2):122–136

Romero C (1991) Handbook of critical issues in goal programming. Pergamon Press, Oxford

Salas-Molina F, Rodríguez-Aguilar JA, Pla-Santamaria D (2018) Boundless multiobjective models for cash management. Eng Econ 63(4):363–381

Schechter L (2007) Risk aversion and expected-utility theory: a calibration exercise. J Risk Uncertain 35(1):67–76

Tamiz M, Jones D (1996) Goal programming and Pareto efficiency. J Inf Optim Sci 17(2):291–307

Tsionas MG (2019) Multi-objective optimization using statistical models. Eur J Op Res 276(1):364–378

Woerheide W, Persson D (1993) An index of portfolio diversification. Financ Serv Rev 2(2):73–85

Xu Y, Yeh CH (2012) An integrated approach to evaluation and planning of best practices. Omega 40(1):65–78

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353

Acknowledgements

This work is devoted to the memory of Professor Enrique Ballestero for his selfless dedication to it.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bravo, M., Jones, D., Pla-Santamaria, D. et al. Encompassing statistically unquantifiable randomness in goal programming: an application to portfolio selection. Oper Res Int J 22, 5685–5706 (2022). https://doi.org/10.1007/s12351-022-00713-1

DOI: https://doi.org/10.1007/s12351-022-00713-1