Abstract

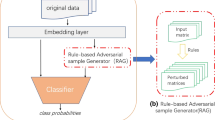

Recent years have seen the wide application of natural language processing (NLP) models in crucial areas such as finance, medical treatment, and news media, raising concerns about model robustness and vulnerabilities. We find that the prompt paradigm can probe particular robustness defects of pre-trained language models. Malicious prompt texts are first constructed for inputs, and a pre-trained language model then generates adversarial examples for victim models via mask-filling. Experimental results show that the prompt paradigm can efficiently generate more diverse adversarial examples, going beyond synonym substitution. We then propose a novel robust training approach based on the prompt paradigm, which incorporates prompt texts as alternatives to adversarial examples and enhances robustness under a lightweight minimax-style optimization framework. Experiments on three real-world tasks and two deep neural models show that our approach can significantly improve the robustness of models against adversarial attacks.
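The abstract outlines two steps: wrapping an input in a prompt that contains a mask slot and letting a masked language model fill it to produce candidate adversarial inputs, and then training under a minimax-style objective (minimize, over model parameters, the worst-case loss over each input's candidate set). The following is a toy, pure-Python sketch of that general pattern under stated assumptions; it is not the authors' implementation. The insertion template `build_prompts`, the `fill_mask` stub (standing in for a pre-trained masked LM such as BERT or RoBERTa), the bag-of-words logistic-regression victim `BowModel`, and all hyperparameters are hypothetical choices made only for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

MASK = "[MASK]"
Tokens = List[str]
FillMask = Callable[[Tokens], List[str]]  # hypothetical stand-in for a masked LM


def build_prompts(tokens: Tokens) -> List[Tokens]:
    """Hypothetical template: insert one [MASK] slot at every position."""
    return [tokens[:i] + [MASK] + tokens[i:] for i in range(len(tokens) + 1)]


def generate_candidates(tokens: Tokens, fill_mask: FillMask, k: int) -> List[Tokens]:
    """Original input first, then each prompt with its [MASK] filled by the top-k words."""
    candidates = [list(tokens)]
    for prompt in build_prompts(tokens):
        i = prompt.index(MASK)
        for word in fill_mask(prompt)[:k]:
            candidates.append(prompt[:i] + [word] + prompt[i + 1:])
    return candidates


@dataclass
class BowModel:
    """Toy victim: bag-of-words logistic regression, not a deep neural model."""
    w: Dict[str, float] = field(default_factory=dict)
    b: float = 0.0

    def prob(self, tokens: Tokens) -> float:
        score = self.b + sum(self.w.get(t, 0.0) for t in tokens)
        return 1.0 / (1.0 + math.exp(-score))

    def loss(self, tokens: Tokens, y: int) -> float:
        p = min(max(self.prob(tokens), 1e-12), 1.0 - 1e-12)
        return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

    def sgd_step(self, tokens: Tokens, y: int, lr: float) -> None:
        g = self.prob(tokens) - y  # d(loss)/d(score) for the logistic loss
        for t in tokens:
            self.w[t] = self.w.get(t, 0.0) - lr * g
        self.b -= lr * g


def find_adversarial(model: BowModel, tokens: Tokens, y: int,
                     fill_mask: FillMask, k: int) -> List[Tokens]:
    """Attack: keep filled prompts whose predicted label differs from y."""
    return [c for c in generate_candidates(tokens, fill_mask, k)[1:]
            if int(model.prob(c) > 0.5) != y]


def robust_loss(model: BowModel, data: List[Tuple[Tokens, int]],
                fill_mask: FillMask, k: int) -> float:
    """Mean over examples of the worst-case loss among each input's candidates."""
    return sum(max(model.loss(c, y) for c in generate_candidates(x, fill_mask, k))
               for x, y in data) / len(data)


def minimax_train(model: BowModel, data: List[Tuple[Tokens, int]], fill_mask: FillMask,
                  k: int = 2, lr: float = 0.5, epochs: int = 20) -> BowModel:
    for _ in range(epochs):
        for x, y in data:
            candidates = generate_candidates(x, fill_mask, k)
            worst = max(candidates, key=lambda c: model.loss(c, y))  # inner maximization
            model.sgd_step(worst, y, lr)  # outer minimization on the worst case
    return model


if __name__ == "__main__":
    toy_fill_mask: FillMask = lambda prompt: ["really", "just"]  # hypothetical stub
    victim = BowModel(w={"good": 1.0, "just": -2.0})
    print(len(find_adversarial(victim, ["good", "movie"], 1, toy_fill_mask, k=2)))  # 3

    data = [(["good", "movie"], 1), (["bad", "movie"], 0)]
    model = BowModel()
    print("robust loss before:", robust_loss(model, data, toy_fill_mask, k=2))
    minimax_train(model, data, toy_fill_mask)
    print("robust loss after:", robust_loss(model, data, toy_fill_mask, k=2))
```

In this sketch the inner maximization simply enumerates a handful of filled prompts; with a real masked LM the candidate set would be much larger, so a practical setup would likely rank or sample candidates rather than enumerate them all.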



Acknowledgements

This work has been supported by the National Key R&D Program of China (No. 2021AAA0140203), the Zhejiang Provincial Key Research and Development Program of China (No. 2021C01164), and the National Natural Science Foundation of China (Nos. 61972384, 62132020, and 62203425).

Author information


Yuting Yang is a PhD candidate at the Institute of Computing Technology, Chinese Academy of Sciences, China. Her research interests include trustworthy AI, dialogue systems, and text generation.

Pei Huang received his PhD from the Institute of Software, Chinese Academy of Sciences, China. He is a postdoctoral scholar at the Department of Computer Science, Stanford University, USA. His research interests include automated reasoning and trustworthy AI.

Juan Cao is a research professor at the Institute of Computing Technology, Chinese Academy of Sciences, China. Her research interests include multimedia analysis.

Jintao Li is a research professor at the Institute of Computing Technology, Chinese Academy of Sciences, China. His research interests include multimedia analysis and pervasive computing.

Yun Lin is a research assistant professor at the School of Computing, National University of Singapore, Singapore. His research interests include explainable and interactive root cause inference techniques.

Feifei Ma is a research professor at the Institute of Software, Chinese Academy of Sciences, China. Her research interests include automated reasoning, constraint solving, and computer mathematics.


About this article

Cite this article

Yang, Y., Huang, P., Cao, J. et al. A prompt-based approach to adversarial example generation and robustness enhancement. Front. Comput. Sci. 18, 184318 (2024). https://doi.org/10.1007/s11704-023-2639-2


DOI: https://doi.org/10.1007/s11704-023-2639-2