Abstract

Purpose

Surface reconstructions from laryngoscopic videos have the potential to assist clinicians in diagnosing, quantifying, and monitoring airway diseases using minimally invasive techniques. However, tissue motion and deformation make such reconstructions difficult to obtain with conventional pipelines.

Methods

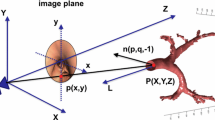

To facilitate such reconstructions, we developed video frame pre-filtering and featureless dense matching steps to enhance the AliceVision Meshroom structure-from-motion (SfM) pipeline. Time and the anterior glottic angle were used to approximate the rigid state of the airway and to collect frames with different camera poses. Featureless dense matches were tracked with a correspondence transformer across subsets of images to extract matched points, which were then used to estimate the point cloud and reconstruct the surface. The proposed pipeline was tested on a simulated dataset under varying conditions, such as illumination and resolution, as well as on real laryngoscopic videos.
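The two Methods steps can be illustrated with a minimal Python sketch. It assumes that an anterior glottic angle has already been estimated for every frame (for example, by a separate vocal fold tracking tool) and that a dense matcher such as a correspondence transformer has already produced pairwise point matches between the selected frames. The function names `select_frames` and `build_tracks`, the thresholds, and the use of union-find to merge pairwise matches into multi-view tracks are illustrative assumptions, not the authors' implementation.

```python
# Hypothetical sketch of frame pre-filtering and match tracking.
# select_frames and build_tracks are illustrative names, not the authors' code.


def select_frames(angles, fps, target_angle, angle_tol, min_gap_s, max_frames):
    """Return indices of frames whose anterior glottic angle (degrees) lies
    within angle_tol of target_angle and that are at least min_gap_s seconds
    apart, so the airway is in a similar state while the camera has moved."""
    min_gap = int(round(min_gap_s * fps))  # minimum spacing, in frames
    selected = []
    for idx, angle in enumerate(angles):
        if angle is None or abs(angle - target_angle) > angle_tol:
            continue  # no angle estimate, or airway in a different state
        if selected and idx - selected[-1] < min_gap:
            continue  # too close in time to the last kept frame
        selected.append(idx)
        if len(selected) == max_frames:
            break
    return selected


def build_tracks(pairwise_matches):
    """Merge pairwise matches into multi-view tracks with union-find.

    pairwise_matches maps (image_a, image_b) to a list of
    ((xa, ya), (xb, yb)) correspondences. Coordinates are rounded to the
    nearest pixel so that one image point shared by several image pairs
    maps to the same node. Returns a list of {image: (x, y)} tracks.
    """
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    def key(image, point):
        return (image, (round(point[0]), round(point[1])))

    for (img_a, img_b), matches in pairwise_matches.items():
        for pt_a, pt_b in matches:
            union(key(img_a, pt_a), key(img_b, pt_b))

    # Group every observed (image, point) node by its union-find root.
    groups = {}
    for node in list(parent):
        groups.setdefault(find(node), []).append(node)

    tracks = []
    for nodes in groups.values():
        images = [image for image, _ in nodes]
        if len(images) < 2 or len(set(images)) != len(images):
            continue  # singleton, or two different points in one image
        tracks.append(dict(nodes))
    return tracks


angles = [30.0, 31.0, 45.0, 29.5, 30.2, None, 30.1, 60.0, 30.0]
print(select_frames(angles, fps=10, target_angle=30.0, angle_tol=1.0,
                    min_gap_s=0.2, max_frames=4))  # [0, 3, 6, 8]
```

Rounding matched coordinates to the nearest pixel is what lets the same image point, matched against different partner frames, fall into a single track. Tracks that claim two different points in the same image are discarded as inconsistent rather than resolved, which is one simple policy among several possible ones.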

Results

Our pipeline reconstructed the laryngeal region from 4, 8, and 16 images obtained from simulated data and real patient examinations. It was robust to sparse inputs, blur, and extreme lighting conditions, unlike the baseline Meshroom pipeline, which failed to produce a point cloud for 6 of the 15 simulated datasets.

Conclusion

The pre-filtering and featureless dense matching modules adapt the conventional SfM pipeline to handle challenging laryngoscopic examinations directly from patient videos. The resulting 3D visualizations have the potential to improve spatial understanding of airway conditions.

Funding

We would like to thank the following sources of funding: (1) Department Research Award, Department of Otolaryngology – Head & Neck Surgery, Temerty Faculty of Medicine, University of Toronto, and (2) University of Toronto Mississauga Undergraduate Research Grant.

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

Ethical approval

This study was approved by the Unity Health Toronto Research Ethics Board (REB#: 20–235).

Informed consent

Informed consent was obtained from all individual participants included in the study. Patients signed informed consent regarding their data and photographs.

Supplementary Information

Electronic supplementary material:

Supplementary file 2 (MP4, 20233 KB)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Regef, J., Talasila, L., Wiercigroch, J. et al. Laryngeal surface reconstructions from monocular endoscopic videos: a structure from motion pipeline for periodic deformations. Int J CARS (2024). https://doi.org/10.1007/s11548-024-03118-x

DOI: https://doi.org/10.1007/s11548-024-03118-x