Abstract

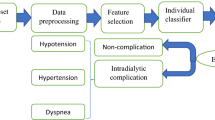

The high prevalence and incidence of severe renal diseases strain the limited medical resources available for treating uremia patients. Hemodialysis is the most common treatment for uremia because the supply of donated organs available for transplantation is limited. This study focuses on assessing the adequacy of hemodialysis. A lack of available information is the primary obstacle to evaluating adequacy, and it takes four forms: (1) the imbalanced-class problem in a given dataset, which degrades classifier learning algorithms; (2) the requirement that a given dataset obey particular mathematical distributions; (3) a lack of effective methods for identifying determinant attributes; and (4) the difficulty of developing effective decision rules to explain a given dataset. To address these issues when determining the therapeutic effects of hemodialysis in uremia patients, this study proposes a hybrid imbalanced-class decision tree-rough set model that integrates the knowledge of expert physicians, a feature selection method, imbalanced sampling techniques, a rough set classifier, and a rule filter. The method was assessed using the medical records of uremia patients from a medical center in Taiwan. According to the evaluation criteria, the proposed method performs better than previously reported methods.

References

Abellán J, Baker RM, Coolen FPA, Crossman RJ, Masegosa AR (2014) Classification with decision trees from a nonparametric predictive inference perspective. Comput Stat Data Anal 71:789–802

Aggarwal HK, Jain D, Sahney A, Bansal T, Yadav RK, Kathuria KL (2012) Effect of dialyser reuse on the efficacy of haemodialysis in patients of chronic kidney disease in developing world. J Int Med Sci Acad 25(2):81–83

Ayu MA, Ismail SA, Matin AFA, Mantoro T (2012) A comparison study of classifier algorithms for mobile-phone’s accelerometer based activity recognition. Proc Eng 41:224–229

Batista G, Monard MC, Prati RC (2004) A study of the behavior of several methods for balancing machine learning training data. SIGKDD Explor 6(1):20–29

Becker W, Rowson J, Oakley JE, Yoxall A, Manson G, Worden K (2011) Bayesian sensitivity analysis of a model of the aortic valve. J Biomech 44(8):1499–1506

Breiman L (1996) Bagging predictors. Mach Learn 24(2):123–140

Chawla NV, Japkowicz N, Kotcz A (2004) Editorial: special issue on learning from imbalanced data sets. SIGKDD Explor 6(1):1–6

Chen Y-S (2012) Classifying credit ratings for Asian banks using integrating feature selection and the CPDA-based rough sets approach. Knowl-Based Syst 26:259–270

Chen Y-S (2013) Modeling hybrid rough set-based classification procedures to identify hemodialysis adequacy for end-stage renal disease patients. Comput Biol Med 43(10):1590–1605

Chen Y-S, Cheng C-H (2013) Hybrid models based on rough set classifiers for setting credit rating decision rules in the global banking industry. Knowl-Based Syst 39:224–239

Chen Y-S, Cheng C-H (2013) Application of rough set classifiers for determining hemodialysis adequacy in ESRD patients. Knowl Inf Syst 34(2):453–482

Chen LS, Su CT, Yih Y (2006) Knowledge acquisition through information granulation for imbalanced data. Expert Syst Appl 31:531–541

Chen F, Li X, Liu L (2013) Improved C4.5 decision tree algorithm based on sample selection. In: Proceedings of the IEEE international conference on software engineering and service sciences (ICSESS) art. no. 6615421, pp 779–782

Chiranjeevi P, Sengupta S (2012) Robust detection of moving objects in video sequences through rough set theory framework. Image Vis Comput 30(11):829–842

Cleary JG, Trigg LE (1995) K*: an instance-based learner using an entropic distance measure. In: 12th international conference on machine learning, pp 108–114

Cleofas-Sánchez L, García V, Martín-Félez R, Valdovinos RM, Sánchez JS, Camacho-Nieto O (2013) Hybrid associative memories for imbalanced data classification: an experimental study. Lect Notes Comput Sci 7914:325–334

Combe C, McCullough K, Asano Y, Ginsberg N, Maroni B, Pifer T (2004) Kidney disease outcomes quality initiative (K/DOQI) and the dialysis outcomes and practice patterns study (DOPPS): nutrition guidelines, indicators and practices. Am J Kidney Dis 44(3):39–46

Culp KR, Flanigan M, Hayajneh Y (1999) An analysis of body weight and hemodialysis adequacy based on the urea reduction ratio. ANNA J 26(4):391–400

Drummond C, Holte RC (2003) C4.5, class imbalance, and cost sensitivity: why under-sampling beats over-sampling. In: Workshop on learning from imbalanced data sets II. ICML, Washington

Dubey R, Zhou J, Wang Y, Thompson PM, Ye J (2014) Analysis of sampling techniques for imbalanced data: an n = 648 ADNI study. NeuroImage 87:220–241

Durai MAS, Acharjya DP, Kannan A, Iyengar NCSN (2012) An intelligent knowledge mining model for kidney cancer using rough set theory. Int J Bioinform Res Appl 8(5–6):417–435

Estabrooks A, Japkowicz N, Jo T (2004) A multiple resampling method for learning from imbalanced data sets. Comput Intell 20(1):18–36

Fernandez A, Garcia S, del Jesus MJ, Herrera F (2008) A study of the behavior of linguistic fuzzy rule based classification systems in the framework of imbalanced data-sets. Fuzzy Sets Syst 159(18):2378–2398

Fernández A, López V, Galar M, del Jesus MJ, Herrera F (2013) Analysing the classification of imbalanced data-sets with multiple classes: Binarization techniques and adhoc approaches. Knowl-Based Syst 42:97–110

Frank E, Witten IH (1998) Generating accurate rule sets without global optimization. In: Proceedings of the 15th international conference on machine learning (ICML-98). Madison, Wisconsin, pp 144–151

Frank E, Hall M, Pfahringer B (2003) Locally weighted naive Bayes. In: 19th conference in uncertainty in artificial intelligence, pp 249–256

Freund Y, Schapire RE (1996) Experiments with a new boosting algorithm. In: Thirteenth international conference on machine learning, San Francisco, pp 148–156

Greco S, Matarazzo B, Slowinski R (2001) Rough sets theory for multicriteria decision analysis. Eur J Oper Res 129(1):1–47

Grzymala-Busse JW (1992) LERS—a system for learning from examples based on rough sets. In: Slowinski R (ed) Intelligent decision support. Kluwer Academic Publishers, Dordrecht, pp 3–18

Grzymala-Busse JW (1997) A new version of the rule induction system LERS. Fundam Inf 31(1):27–39

Grzymala-Busse JW (2008) MLEM2 rule induction algorithms: with and without merging intervals. Stud Comput Intell 118:153–164

Grzymala-Busse JW, Stefanowski J, Wilk S (2005) A comparison of two approaches to data mining from imbalanced data. J Intell Manuf 16:565–573

Hall MA, Holmes G (2003) Benchmarking attribute selection techniques for discrete class data mining. IEEE Trans Knowl Data Eng 15(3):1–16

Han J, Kamber M (2001) Data mining: concepts and techniques. Morgan Kaufmann Publishers, San Francisco

Hanley JA, McNeil BJ (1983) A method of comparing the areas under receiver operating characteristic curves derived from the same cases. Radiology 148(3):839–843

Holte RC, Kubat M, Matwin S (1998) Machine learning for the detection of oil spills in satellite radar images. Mach Learn 30(2–3):195–215

Hwang J-C, Weng S-F, Weng R-H (2012) High incidence of hepatocellular carcinoma in ESRD patients: caused by high hepatitis rate or ‘uremia’? A population-based study. Jpn J Clin Oncol 42(9):780–786

Ibarra DA, Fennel K, Cullen JJ (2014) Coupling 3-D Eulerian bio-physics (ROMS) with individual-based shellfish ecophysiology (SHELL-E): a hybrid model for carrying capacity and environmental impacts of bivalve aquaculture. Ecol Model 273:63–78

Japkowicz N, Jo T (2004) Class imbalances versus small disjuncts. SIGKDD Explor 6(1):40–49

Jing S-Y (2013) A hybrid genetic algorithm for feature subset selection in rough set theory. Soft Comput 1–10, Article in Press

John GH, Langley P (1995) Estimating continuous distributions in Bayesian classifiers. In: Eleventh conference on uncertainty in artificial intelligence, San Mateo, pp 338–345

Kattan MW, Cooper RB (2000) A simulation of factors affecting machine learning techniques: an examination of partitioning and class proportions. Omega Int J Manag Sci 28:501–512

Keerthi SS, Shevade SK, Bhattacharyya C, Murthy KRK (2001) Improvements to Platt’s SMO algorithm for SVM classifier design. Neural Comput 13(3):637–649

Kim KA, Choi JY, Yoo TK, Kim SK, Chung K, Kim DW (2013) Mortality prediction of rats in acute hemorrhagic shock using machine learning techniques. Med Biol Eng Comput 51(9):1059–1067

Kohavi R (1995) The power of decision tables. In: 8th European conference on machine learning, pp 174–189

Krawczyk B, Woźniak M, Schaefer G (2014) Cost-sensitive decision tree ensembles for effective imbalanced classification. Appl Soft Comput 14 (PART C):554–562

le Cessie S, van Houwelingen JC (1992) Ridge estimators in logistic regression. Appl Stat 41(1):191–201

Lin S-W, Chen S-C (2012) Parameter determination and feature selection for C4.5 algorithm using scatter search approach. Soft Comput 16(1):63–75

Liu J, Hu Q, Yu D (2008) A weighted rough set based method developed for class imbalance learning. Inf Sci 178(4):1235–1256

Liu J, Hu Q, Yu D (2008) A comparative study on rough set based class imbalance learning. Knowl-Based Syst 21(8):753–763

Liu H-T, Sheu TWH, Chang H-H (2013) Automatic segmentation of brain MR images using an adaptive balloon snake model with fuzzy classification. Med Biol Eng Comput 51(10):1091–1104

Liu NT, Holcomb JB, Wade CE, Batchinsky AI, Cancio LC, Darrah MI, Salinas J (2014) Development and validation of a machine learning algorithm and hybrid system to predict the need for life-saving interventions in trauma patients. Med Biol Eng Comput 52(2):193–203

López V, Fernández A, García S, Palade V, Herrera F (2013) An insight into classification with imbalanced data: empirical results and current trends on using data intrinsic characteristics. Inf Sci 250:113–141

Lu P, Wang X-H, Xiao J-M (2013) Method of fault diagnosis in power system based on rough set theory and graph theory. Kongzhi yu Juece/Control and Decision 28(4):511–516 + 524

McClellan WM, Frankenfield DL, Frederick PR, Flanders WD, Alfaro-Correa A, Rocco M, Helgerson SD (1999) Can dialysis therapy be improved? A report from the ESRD Core Indicators Project. Am J Kidney Dis 34(6):1075–1082

Merkx MAG, Bode AS, Huberts W, Oliván Bescós J, Tordoir JHM, Breeuwer M, van de Vosse FN, Bosboom EMH (2013) Assisting vascular access surgery planning for hemodialysis by using MR, image segmentation techniques, and computer simulations. Med Biol Eng Comput 51(8):879–889

Meyer TW, Hostetter TH (2007) Uremia. N Engl J Med 357(13):1316–1325

Murphy KP (2002) Bayes Net ToolBox, Technical Report, MIT Artificial Intelligence Laboratory, http://www.ai.mit.edu/~murphyk/

National Health Insurance Administration, Ministry of Health and Welfare (2015) Retrieved from http://www.nhi.gov.tw/webdata/webdata.aspx?menu=17&menu_id=1027&webdata_id=4565, on 28 Oct 2015

Nava R, Escalante-Ramírez B, Cristóbal G, Estépar RSJ (2014) Extended Gabor approach applied to classification of emphysematous patterns in computed tomography. Med Biol Eng Comput 52(4):393–403

Nguyen HS, Nguyen SH (2003) Analysis of stulong data by rough set exploration system (RSES). In: Berka P (ed) Proceedings of the ECML/PKDD workshop 2003 discovery challenge, pp 71–82

Olatunji SO, Selamat A, Abdulraheem A (2014) A hybrid model through the fusion of type-2 fuzzy logic systems and extreme learning machines for modeling permeability prediction. Inf Fusion 16(1):29–45

Ozaki M, Hori J, Okabayashi T (2013) Evaluation of urea reduction ratio estimated from the integrated value of urea concentrations in spent dialysate. Ther Apher Dial. doi:10.1111/1744-9987.12069

Park S-A, Hwang H-J, Lim J-H, Choi J-H, Jung H-K, Im C-H (2013) Evaluation of feature extraction methods for EEG-based brain–computer interfaces in terms of robustness to slight changes in electrode locations. Med Biol Eng Comput 51(5):571–579

Parra E, Ramos R, Betriu A, Paniagua J, Belart M, Martín F, Martínez T (2006) Multicenter prospective study on hemodialysis quality. NEFROLOGÍA 26:688–694

Pawlak Z (1982) Rough sets. Int J Comput Inf Sci 11(5):341–356

Pawlak Z (1991) Rough sets, theoretical aspects of reasoning about data. Kluwer, Dordrecht

Peng L, Niu R, Huang B, Wu X, Zhao Y, Ye R (2014) Landslide susceptibility mapping based on rough set theory and support vector machines: a case of the Three Gorges area, China. Geomorphology 204:287–301

Platt J (1998) Fast training of support vector machines using sequential minimal optimization. In: Schoelkopf B, Burges C, Smola A (eds) Advances in kernel methods—support vector learning

Provost FJ, Weiss GM (2003) Learning when training data are costly: the effect of class distribution on tree induction. J Artif Intell Res 19:315–354

Quinlan JR (1986) Induction of decision trees. Mach Learn 1(1):81–106

Quinlan JR (1993) C4.5: programs for machine learning. Morgan Kaufmann, San Mateo

Raich D, Kulkarni PS (2014) Application of artificial neural networks and rough set theory for the analysis of various medical problems and nephritis disease diagnosis. Adv Intell Syst Comput 247:83–90

Rajesh T, Malar RSM (2013) Rough set theory and feed forward neural network based brain tumor detection in magnetic resonance images. In: 2013 Proceedings of the international conference on advanced nanomaterials and emerging engineering technologies (ICANMEET) art. no. 6609287, pp 240–244

Ravi Kumar P, Ravi V (2007) Bankruptcy prediction in banks and firms via statistical and intelligent techniques—a review. Eur J Oper Res 180(1):1–28

Ravi V, Kurniawan H, Thai PNK, Ravi Kumar P (2008) Soft computing system for bank performance prediction. Appl Soft Comput 8(1):305–315

Roozitalab M, Mohammadi B, Najafi S, Mehrabi S, Fararouei M (2013) KT/V and URR and the adequacy of Hemodialysis in Iranian provincial hospitals: an evaluation study. Life Sci J 10(12):13–16

Roumani YF, May JH, Strum DP, Vargas LG (2013) Classifying highly imbalanced ICU data. Health Care Manag Sci 16(2):119–128

Rutkowski L, Jaworski M, Pietruczuk L, Duda P (2014) Decision trees for mining data streams based on the gaussian approximation. IEEE Trans Knowl Data Eng 26(1), art. no. 6466324:108–119

Shen M, Dong B, Xu L (2013) An improved method for the feature extraction of Chinese text by combining rough set theory with automatic abstracting technology. Commun Comput Inf Sci 332:496–509

Shi C (2013) Model of financial crisis early-warning system based on rough set theory and artificial neural networks. ICIC Express Lett Part B Appl 4(3):647–653

Sobol AB, Kaminska M, Walczynska M, Walkowiak B (2013) Effect of uremia and hemodialysis on platelet apoptosis. Clin Appl Thromb Hemost 19(3):320–323

Sridhar NR, Josyula S (2013) Hypoalbuminemia in hemodialyzed end stage renal disease patients: risk factors and relationships—a 2 year single center study. BMC Nephrol 14(1) Article number 242:1–9 doi:10.1186/1471-2369-14-242

Su J, Zhang H (2006) A fast decision tree learning algorithm. In: Proceedings of the 21st AAAI conference on artificial intelligence, Boston, MA, July 16–20, pp 500–505

Sunanda V, Santosh B, Jusmita D, Rao BP (2012) Achieving the urea reduction ratio (URR) as a predictor of the adequacy and the NKF-K/DOQI target for calcium, phosphorus and Ca × P product in esrd patients who undergo haemodialysis. J Clin Diagn Res 6(2):169–172

Tatsis VA, Tjortjis C, Tzirakis P (2013) Evaluating data mining algorithms using molecular dynamics trajectories. Int J Data Mining Bioinf 8(2):169–187

Tsumoto S (2011) Incremental rule induction based on rough set theory. In: ISMIS’11 Proceedings of the 19th international conference on foundations of intelligent systems, LNAI 6804. Springer, Berlin, pp 70–79

United States Renal Data System (USRDS) (2015) Retrieved from http://www.usrds.org/2014/download/V1_Ch_i_Intro_14.pdf, on 28 Oct 2015

Vapnik V (1995) The nature of statistical learning theory. Springer, New York

Vlachokosta AA, Asvestas PA, Gkrozou F, Lavasidis L, Matsopoulos GK, Paschopoulos M (2013) Classification of hysteroscopical images using texture and vessel descriptors. Med Biol Eng Comput 51(8):859–867

Walters RW, Kier KL (2012) Chapter 8: the application of statistical analysis in the biomedical Sciences. In: Kier KL, Malone PM, Stanovich JE (eds) Drug information: a guide for pharmacists, 4th edn. McGraw-Hill, New York

Wang K-J, Makond B, Wang K-M (2013) An improved survivability prognosis of breast cancer by using sampling and feature selection technique to solve imbalanced patient classification data. BMC Med Inf Decis Making 13(1) art. no. 124

Weren ER, Kauer AU, Mizusaki L, Moreira VP, de Oliveira JPM, Wives LK (2014) Examining multiple features for author profiling. J Inf Data Manag 5(3):266–279

Winston PH (1992) Artificial intelligence, 3rd edn. Addison-Wesley, Boston

Wu P, Liu C (2013) Financial distress study based on PSO k-means clustering algorithm and rough set theory. Appl Mech Mater 411–414:2377–2383

Ye M, Wu X, Hu X, Hu D (2013) Anonymizing classification data using rough set theory. Knowl-Based Syst 43:82–94

Yeung CK, Shen DD, Thummel KE, Himmelfarb J (2013) Effects of chronic kidney disease and uremia on hepatic drug metabolism and transport. Kidney Int. doi:10.1038/ki.2013.399

Yin L, Ge Y, Xiao K, Wang X, Quan X (2013) Feature selection for high-dimensional imbalanced data. Neurocomputing 105:3–11

Yuan Z, Wang L-N, Ji X (2014) Prediction of concrete compressive strength: research on hybrid models genetic based algorithms and ANFIS. Adv Eng Softw 67:156–163

Zhang H, Jiang S (2004) Naive bayesian classifiers for ranking. In: Proceedings of the European conference on machine learning (ECML-2004), ITALIE 3201:501–512, Lecture notes in computer science. Springer, Berlin

Zięba M, Tomczak JM, Lubicz M, Świa̧tek J (2014) Boosted SVM for extracting rules from imbalanced data in application to prediction of the post-operative life expectancy in the lung cancer patients. Appl Soft Comput J 14 (PART A):99–108

Zmijewski ME (1984) Methodological issues related to the estimation of financial distress prediction models. J Account Res 22:59–82

Acknowledgments

The author sincerely thanks Dr. Cheng-Yi Hsu (a medical doctor), whose background knowledge, assistance, and support significantly improved this study. The author also thanks the National Science Council of the Republic of China, Taiwan, for financially supporting this study under Contract Nos. MOST 103-2221-E-146-003-MY2 and 102-2410-H-146-003. The Editor-in-Chief and Managing Editor of Medical & Biological Engineering & Computing (MBEC) and the anonymous referees are also gratefully acknowledged for their invaluable comments and suggestions in preparing the revised version of this study.

Ethics declarations

Conflict of interest

No conflict of interest reported.

Appendices

Appendix 1: Definitions of rough set theory

Definition 1

An information system (or an attribute–value system) uses an information table to represent a basic knowledge framework in which the columns contain “attributes” and the rows contain “objects.” U is the defined universe, a non-empty finite set of objects, and A is a non-empty finite set of attributes, \(\varvec{C} \cup \varvec{D}\), where C and D are finite sets of condition and decision attributes, respectively. Each attribute a is a mapping \(a:\varvec{U} \to V_{a}\), where \(V_{a}\) is the set of values that attribute a may take. Objects x and y are compared through these mappings, that is, through a(x) = a(y) for each condition attribute a and d(x) = d(y) for the decision attribute d. Furthermore, \(B \subseteq A\) is a reduced set of attributes, and \(X \subseteq U\) is a subset of objects in the approximation space.

Definition 2

Each \(B \subseteq A\) has an associated equivalence relation, denoted IND(B) and termed the B-indiscernibility relation. The equivalence classes of the B-indiscernibility relation are denoted \([x]_{B}\), as follows:
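In the usual rough set notation, which is assumed here rather than taken from the original typeset equation, the relation and its classes can be written as

\[
IND(B) = \{ (x, y) \in U \times U : a(x) = a(y) \ \text{for all } a \in B \}, \qquad [x]_{B} = \{ y \in U : (x, y) \in IND(B) \}
\]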

The equivalence class of IND(B) is termed the elementary set in B because it represents the smallest discernible objects.
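As an illustration of Definition 2, the following minimal sketch groups objects into the elementary sets of IND(B). The table, the attribute names (`albumin`, `age`, `adequacy`), and the function name are hypothetical and are not taken from the study's data.

```python
from collections import defaultdict


def indiscernibility_classes(table, attributes):
    """Group object ids that share the same values on every attribute in B."""
    classes = defaultdict(set)
    for obj, row in table.items():
        key = tuple(row[a] for a in attributes)
        classes[key].add(obj)
    return list(classes.values())


# Hypothetical toy information table (illustration only, not study data).
TABLE = {
    "x1": {"albumin": "low", "age": "old", "adequacy": "no"},
    "x2": {"albumin": "low", "age": "old", "adequacy": "yes"},
    "x3": {"albumin": "high", "age": "old", "adequacy": "yes"},
    "x4": {"albumin": "high", "age": "young", "adequacy": "yes"},
    "x5": {"albumin": "low", "age": "young", "adequacy": "no"},
}

# B = {albumin, age}: x1 and x2 cannot be told apart using B alone.
classes = indiscernibility_classes(TABLE, ["albumin", "age"])
```

Here x1 and x2 fall into the same elementary set because they agree on both attributes in B, even though their decision values differ.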

Definition 3

The set X is approximated using the information table contained in B by constructing the B-lower and B-upper approximation sets as follows:
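Following the verbal description given for these two sets, and assuming the standard rough set formulation, the approximations can be written as

\[
\underline{B}(X) = \{ x \in U : [x]_{B} \subseteq X \}, \qquad \overline{B}(X) = \{ x \in U : [x]_{B} \cap X \neq \emptyset \}
\]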

where x denotes an object in the universe U. The B-lower approximation is the union of all of the equivalence classes \([x]_{B}\) that are contained in the target set, whereas the B-upper approximation is the union of all of the equivalence classes \([x]_{B}\) that have non-empty intersections with the target set. Given the lower and upper approximations of a set \(X \subseteq U\), the universe is divided into boundary, positive, and negative regions, as follows:
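Under the standard definitions, which are an assumption about the form of the original equations, the three regions are

\[
BND(X) = \overline{B}(X) - \underline{B}(X), \qquad POS(X) = \underline{B}(X), \qquad NEG(X) = U - \overline{B}(X)
\]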

The elements in \(\underline{B}(X)\) (lower approximation) can be classified with certainty as members of target set X using the knowledge in B, but the elements in \(\overline{B}(X)\) (upper approximation) can be classified only as possible members of target set X based on the knowledge in B. The boundary region set, BND(X), contains objects that cannot be clearly classified as members of X using the knowledge in B. That is, they can neither be ruled in nor be ruled out as members of the target set X. The target set X is termed “rough” (or “roughly definable”) with respect to the knowledge in B provided that the boundary region is non-empty. The positive region, POS(X), contains all of the objects of U that can be classified into classes using the knowledge in B. The negative region, NEG(X), contains the set of objects that can be definitely ruled out as members of the target set.
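The regions in Definition 3 can be illustrated with a short sketch. It assumes the standard set-based definitions (lower approximation as the union of elementary sets inside X, upper approximation as the union of those that intersect X); the table, attribute names, and function names are hypothetical.

```python
from collections import defaultdict


def equivalence_classes(table, attributes):
    """Elementary sets of IND(B) for the attribute subset B."""
    classes = defaultdict(set)
    for obj, row in table.items():
        classes[tuple(row[a] for a in attributes)].add(obj)
    return list(classes.values())


def approximations(table, attributes, target):
    """Return the B-lower and B-upper approximations of the target set."""
    lower, upper = set(), set()
    for cls in equivalence_classes(table, attributes):
        if cls <= target:   # class lies entirely inside X
            lower |= cls
        if cls & target:    # class overlaps X
            upper |= cls
    return lower, upper


# Hypothetical toy information table (illustration only, not study data).
TABLE = {
    "x1": {"albumin": "low", "age": "old", "adequacy": "no"},
    "x2": {"albumin": "low", "age": "old", "adequacy": "yes"},
    "x3": {"albumin": "high", "age": "old", "adequacy": "yes"},
    "x4": {"albumin": "high", "age": "young", "adequacy": "yes"},
    "x5": {"albumin": "low", "age": "young", "adequacy": "no"},
}

universe = set(TABLE)
target = {obj for obj, row in TABLE.items() if row["adequacy"] == "yes"}

lower, upper = approximations(TABLE, ["albumin", "age"], target)
positive = lower              # certain members of X
boundary = upper - lower      # neither ruled in nor ruled out
negative = universe - upper   # definitely not members of X
```

In this toy table the boundary region is non-empty (x1 and x2 are indiscernible but have different decisions), so the target set is rough with respect to B.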

Additionally, the quality of approximation of the classification X by B, denoted \(\gamma_{B} (X)\), describes the percentage of objects that can be correctly classified [67]. If \(\gamma_{B} (X) = 1\), the decision table is consistent; otherwise, it is inconsistent. The accuracy of the rough set representation of the target set X is denoted \(\alpha_{B} (X)\) and is given in Eq. (8), and the quality of approximation of the classification X by B is given in Eq. (9) [67].
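The usual rough set forms of these two measures are given below as an assumption about Eqs. (8) and (9), where \(|\cdot|\) denotes cardinality and, for the quality measure, the classification is taken to be the family of decision classes \(X_{1}, \ldots, X_{n}\):

\[
\alpha_{B}(X) = \frac{|\underline{B}(X)|}{|\overline{B}(X)|}, \qquad \gamma_{B}(X) = \frac{\sum_{i=1}^{n} |\underline{B}(X_{i})|}{|U|}
\]

With these forms, \(0 \le \alpha_{B}(X) \le 1\), and \(\alpha_{B}(X) = 1\) exactly when the boundary region is empty.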

Definition 4

Condition attributes that do not provide any additional information about the objects in U can be removed in the decision process of an information system. A subset of attributes that can, by itself, fully characterize the knowledge in the database is called a reduct. Given a set of condition attributes C and a set of decision attributes D, a reduct is defined as any \(R \subseteq C\) such that \(\gamma \left( {R,D} \right) = \gamma \left( {C,D} \right)\), and the reduct set is defined as the power set \(R(C)\). A reduct is a minimal non-redundant subset of attributes from the original dataset, with IND(B) = IND(A), that provides the same classification quality of the universe of discourse. That is, the reduct set collects the possible reducts of the equivalence relation denoted by C and D, and the minimal reduct is the reduct of least cardinality for the equivalence relation.

Definition 5

The set of attributes that are common to all reducts is termed the core. The core is composed of the most relevant attributes in the original dataset and cannot be removed from an information system without causing collapse of the equivalence-class structure. There is no indispensable attribute if the core is empty. Let RED(A) denote all reducts of A. The intersection of RED(A) is labeled a core of A, shown as Eq. (10).
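Definitions 4 and 5 can be sketched with a brute-force search on a small table. The sketch assumes that \(\gamma(R, D)\) is the fraction of objects whose elementary set under R has a single decision value, and that a reduct is a minimal subset preserving \(\gamma(C, D)\); the attribute names `a`, `b`, `c`, `d` and the table are hypothetical, and exhaustive search is only practical for a handful of attributes.

```python
from itertools import combinations


def partition(table, attributes):
    """Elementary sets of the indiscernibility relation on the given attributes."""
    groups = {}
    for obj, row in table.items():
        groups.setdefault(tuple(row[a] for a in attributes), set()).add(obj)
    return list(groups.values())


def quality(table, condition, decision):
    """gamma(condition, decision): share of objects in decision-pure classes."""
    consistent = 0
    for cls in partition(table, condition):
        if len({table[obj][decision] for obj in cls}) == 1:
            consistent += len(cls)
    return consistent / len(table)


def reducts(table, condition, decision):
    """All minimal subsets R of C with gamma(R, D) == gamma(C, D)."""
    full = quality(table, condition, decision)
    found = []
    for size in range(1, len(condition) + 1):
        for subset in combinations(condition, size):
            is_superset = any(set(r) <= set(subset) for r in found)
            if not is_superset and quality(table, subset, decision) == full:
                found.append(subset)
    return found


def core(all_reducts):
    """Attributes common to every reduct."""
    if not all_reducts:
        return set()
    return set.intersection(*(set(r) for r in all_reducts))


# Hypothetical table: d depends on a together with b, and c duplicates b.
TABLE = {
    "x1": {"a": 0, "b": 0, "c": 0, "d": 0},
    "x2": {"a": 0, "b": 1, "c": 1, "d": 1},
    "x3": {"a": 1, "b": 0, "c": 0, "d": 1},
    "x4": {"a": 1, "b": 1, "c": 1, "d": 0},
}

all_reducts = reducts(TABLE, ["a", "b", "c"], "d")
core_attributes = core(all_reducts)
```

Because `b` and `c` carry the same information, either can be dropped, giving two reducts; only `a` appears in both and is therefore the core.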

Appendix 2: Evaluation standard for imbalanced-class data

Although the overall accuracy rate is the most common evaluation measure, this criterion alone cannot assess classification performance: accuracy does not reliably reflect the real performance of a classifier and yields misleading results when the dataset is imbalanced. Thus, other evaluation standards should be used for imbalanced-class data problems. This study therefore adopts several criteria to measure the performance of classifiers from various aspects: accuracy (in %), precision, recall, F-measure, G-mean, true-positive (TP) rate, true-negative (TN) rate, false-positive (FP) rate, false-negative (FN) rate, receiver operating characteristic area (ROCA) [35], and the number of rules. Furthermore, the mean, standard deviation, number of generated rules, and run time (in seconds) of each of the 14 methods were calculated for additional verification. TP denotes the number of correct predictions that a sample is positive, FP the number of incorrect predictions that a sample is positive, FN the number of incorrect predictions that a sample is negative, and TN the number of correct predictions that a sample is negative. Precision is the fraction of retrieved instances that are relevant, whereas recall is the fraction of relevant instances that are retrieved. High recall indicates that an algorithm returned most of the relevant results, whereas high precision means that an algorithm returned substantially more relevant results than irrelevant ones. The F-measure combines precision and recall for given samples, and the G-mean measures how well a learning algorithm balances the TP rate and the TN rate. For imbalanced-class data problems in particular, higher accuracy, TP rate, TN rate, precision, recall, F-measure, G-mean, and ROCA indicate better classification performance, whereas lower FP rate, FN rate, and standard deviation indicate better performance.
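The point about accuracy being misleading on imbalanced data can be made concrete with a small sketch. It uses the standard confusion-matrix definitions of the criteria; the function names and the counts are hypothetical, and returning 0.0 for an undefined ratio is a convention chosen here for illustration.

```python
import math


def safe_div(numerator, denominator):
    """Return 0.0 instead of raising when a ratio is undefined."""
    return numerator / denominator if denominator else 0.0


def imbalance_metrics(tp, fp, fn, tn):
    """Confusion-matrix criteria commonly used for imbalanced-class data."""
    tp_rate = safe_div(tp, tp + fn)   # identical to recall
    tn_rate = safe_div(tn, tn + fp)
    precision = safe_div(tp, tp + fp)
    return {
        "accuracy": safe_div(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": tp_rate,
        "f_measure": safe_div(2 * precision * tp_rate, precision + tp_rate),
        "g_mean": math.sqrt(tp_rate * tn_rate),
        "tp_rate": tp_rate,
        "tn_rate": tn_rate,
        "fp_rate": safe_div(fp, fp + tn),
        "fn_rate": safe_div(fn, fn + tp),
    }


# Hypothetical counts: 10 positive and 90 negative samples.
# A classifier that always predicts the majority (negative) class:
majority_only = imbalance_metrics(tp=0, fp=0, fn=10, tn=90)
# A classifier that finds most positives at the cost of some false alarms:
balanced = imbalance_metrics(tp=8, fp=10, fn=2, tn=80)
```

The majority-only classifier reaches a higher accuracy (0.90 versus 0.88) while its recall and G-mean are both zero, which is why the additional criteria are needed.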

The related evaluation criteria are given as Eqs. (11–19).
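In terms of the TP, FP, FN, and TN counts defined above, the standard forms of the nine confusion-matrix criteria are as follows. The ordering and the balanced form of the F-measure are assumptions, not a transcription of the original equations.

\[
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + FP + FN + TN}, &
\text{Precision} &= \frac{TP}{TP + FP}, &
\text{Recall} &= \frac{TP}{TP + FN}, \\
\text{F-measure} &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, &
\text{G-mean} &= \sqrt{\text{TP rate} \times \text{TN rate}}, &
\text{TP rate} &= \frac{TP}{TP + FN}, \\
\text{TN rate} &= \frac{TN}{TN + FP}, &
\text{FP rate} &= \frac{FP}{FP + TN}, &
\text{FN rate} &= \frac{FN}{FN + TP}
\end{aligned}
\]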

Cite this article

Chen, YS. An empirical study of a hybrid imbalanced-class DT-RST classification procedure to elucidate therapeutic effects in uremia patients. Med Biol Eng Comput 54, 983–1001 (2016). https://doi.org/10.1007/s11517-016-1482-0

DOI: https://doi.org/10.1007/s11517-016-1482-0