Abstract

Learning from texts means acquiring and applying knowledge, which requires students to judge their text comprehension accurately. However, students usually overestimate their comprehension, which can be caused by a misalignment between the cues used to judge one’s comprehension and the cognitive requirements of future test questions. Therefore, reading instructions might help students to use more valid cues and hence to make more accurate judgments. In two randomized experiments, we investigated the effect of application instructions (in contrast to general and memory instructions) on judgment bias regarding memory test performance and application test performance. In Experiment 1, 131 pre-service teacher students read two texts: For the first text (pretest phase), all participants received general reading instructions. For the second text (testing phase), they received one of the three reading instructions. The main results were that the general reading instructions in the pretest phase resulted in underestimation of memory test performance and overestimation of application test performance. Results from the testing phase yielded mixed effects and, overall, no strong evidence that reading instructions, and in particular application instructions, are beneficial for debiasing judgments of comprehension. Experiment 2 (N = 164 pre-service teachers) re-examined the effects with the same texts but a different study design. Results replicated the effects found in the testing phase of Experiment 1. Overall, the results indicated that reading instructions without further support are not sufficient to help students accurately judge their comprehension and suggested that text characteristics might influence the effect of reading instructions on judgment bias.


Studying and understanding texts is a typical learning activity in higher education. In teacher education, text learning can help students not only to gain professional knowledge but also to use this knowledge for educational practice (Csanadi et al., 2021). However, pre-service teachers, that is, students pursuing a degree to teach in schools, have trouble applying scientific theories or empirical evidence to educational situations (Klein et al., 2015). One possible explanation is that they have difficulty understanding texts in a way that enables them to apply this information to educational situations (Callender & McDaniel, 2007). Successful text comprehension depends not only on cognitive abilities and reading skills but also on the ability to accurately judge one’s own comprehension, called metacomprehension (Dunlosky & Rawson, 2012). Metacomprehension enables students to adapt their reading process when necessary (Ackerman & Goldsmith, 2011). The accuracy of metacomprehension is defined as the agreement between personal judgments of one’s own comprehension and one’s actual comprehension performance (Soto et al., 2019). Judgment bias is a frequently used measure that indicates whether students’ judgments match their actual comprehension performance or instead reflect over- or underconfidence (Hacker et al., 2000; Maki et al., 2005). Judgment bias is especially important when students must acquire knowledge from a single text or use a text for problem solving. In such learning situations, students must understand a single text independently of other texts and, therefore, need to be able to judge their comprehension of it independently of other texts.

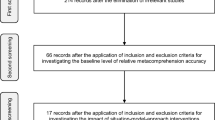

In general, students show overconfidence in their text comprehension (Chen, 2022; Hacker et al., 2000, 2008; Huff & Nietfeld, 2009; Maki et al., 2005; Nietfeld et al., 2006). Therefore, interventions that improve metacomprehension accuracy are of major importance. Studies on test expectancy have shown that informing students about the demands of the future test improves their ability to discriminate texts they understood well from those they understood less well. This discrimination ability, called relative metacomprehension accuracy, represents another accuracy measure in addition to judgment bias (Dunlosky & Lipko, 2007; Griffin et al., 2019; Thiede et al., 2011; Wiley et al., 2016a). However, relative metacomprehension accuracy and judgment bias are not necessarily related (Maki et al., 2005). For example, high relative metacomprehension accuracy can be accompanied by low absolute metacomprehension accuracy, that is, a large judgment bias. Thus, the two measures represent different aspects of metacognitive monitoring. Two previous studies (Chen, 2022; Wiley et al., 2016a) used instructions to examine the effect of test expectancy on students’ judgment bias. However, their results were inconsistent: Chen (2022) did not find a significant effect of test expectancy on students’ overconfidence, whereas Wiley et al. (2016a) did. One major difference between these studies is that Wiley et al. (2016a) supplemented test expectancy with additional cues about processing and used text contents from the participants’ field of study. Therefore, in the present study, we examined whether test expectancy provided by reading instructions without further instructional support would reduce students’ overconfidence.

Theory of multiple mental text representations

According to Kintsch’s (1998) construction-integration model, text comprehension means constructing a mental text representation on multiple levels: the surface level, the text-base level, and the situation-model level. Whereas the surface level primarily enables the literal repetition of sentences without understanding, the text-base and situation-model levels are crucial for a deeper understanding. The text-base level describes the propositional level of representation and comprises constructing a hierarchically structured network of propositions and the relationships between them. Because, in most cases, texts do not contain all the propositions necessary to form a coherent mental representation of the text, the construction of the propositional text base is often insufficient for a complete understanding (Kintsch, 1998). The mental representation of a text at the level of the text base contains coherence gaps, which readers can close by integrating their prior knowledge. Integrating the text base and prior knowledge results in the situation model, which is defined as the reader’s mental image of a text (Kintsch, 1998, 2018). According to Kintsch (1998, 2018), not only should readers reproduce explicitly stated text information, but they should also apply it to new contexts. This application requires them to construct a situation model. To gain a comprehensive text representation, readers also need to monitor their comprehension, which is known as metacomprehension (Koriat & Levy-Sadot, 1999).

Metacomprehension: cue utilization and judgment bias

Metacognitive judgments reflect students’ awareness of their internal thinking and processing, including what they do and do not know (Pieschl, 2009). According to the cue-utilization framework (Koriat, 1997), students do not have direct access to the quality of their own cognitive information processing. Hence, they must infer their comprehension from cues. Whether students’ judgments are accurate depends on the validity of the cues they use to predict their future performance (Bruin et al., 2017; Brunswik, 1955). According to the dual-process model (Koriat & Levy-Sadot, 1999), cues can be divided into information-based and experience-based cues. Information-based cues are independent of the actual learning task and can be described as performance expectations (e.g., prior knowledge, interest). By contrast, experience-based cues are related to students’ experiences during the actual learning task (e.g., ease of processing, accessibility; Zhao & Linderholm, 2008). Experience-based cues are usually found to be more predictive of future performance than information-based cues (Griffin et al., 2008; Thiede et al., 2010; Wiley et al., 2016b). Moreover, the validity of experience-based cues depends on the match between the specific cue a reader uses and the requirements of the test (Rawson et al., 2000; Wiley et al., 2005). This implies that experience-based cues that result from the construction of the text base (e.g., recall of explicit textual information) are appropriate for predicting performance on questions that assess the text base (i.e., detail questions). However, these cues are less suitable for predicting performance on test questions that refer to the situation model, such as inference questions (Griffin et al., 2019; Wiley et al., 2016b). One reason for this disparity is that text-base questions are usually easier to answer than inference or transfer questions. Therefore, when readers use text-base cues to judge their performance on situation-model questions, they are prone to overestimation.

Hence, a misalignment between the experience-based cues used to judge comprehension and the requirements of the future test questions can be understood as a cause of overconfidence. One approach to reduce this misalignment is to provide students with an appropriate level of test expectancy by informing them about the demands of the future test, for example, by providing them with reading goals (Chen, 2022; Griffin et al., 2019; Thiede et al., 2011; Wiley et al., 2016a).

Reading goals and judgment bias

Reading is a goal-directed process, and readers can pursue various goals during reading, for example, extracting single facts from a text or applying it to new situations (Jiang & Elen, 2011). The reading goal a learner pursues during reading (e.g., reading to gather facts) does not necessarily match the demands underlying the test questions (e.g., understanding the theme of a text). According to the goal-focusing model by McCrudden et al. (2010), students’ reading goals can be operationalized as an interaction between personal intentions and external instructions. Reading instructions can help students to understand the demands of a learning task (Locke & Latham, 1990; McCrudden & Schraw, 2007) and, therefore, to select appropriate cues that they can use to judge their comprehension (Duchastel & Merrill, 1973; Wiley et al., 2005).

Previous studies have shown that metacomprehension accuracy increases when students are supported in selecting appropriate cues (Dinsmore & Parkinson, 2013; Winne & Hadwin, 1998). More specifically, findings have revealed that providing situation-model-based test expectancies (e.g., informing students that the future test consists of inference questions) increases relative metacomprehension accuracy (Chen, 2022; Griffin et al., 2019; Thiede et al., 2011), that is, the ability to differentiate between well-understood and less-well-understood texts. Regarding judgment bias, previous findings have been mixed. Chen (2022) found no effect of test expectancy for either detail questions or inference questions, whereas Wiley et al. (2016a) showed that test expectancy reduced overconfidence regarding performance on inference questions. The two studies differed in their text materials. Wiley et al. used texts on topics drawn from the participants’ field of study. In contrast, Chen used texts with lower ecological validity because their topics were unrelated to the participants’ field of study. Additionally, the instructions used by Wiley et al. were twofold: They informed students that the future test questions would “assess [their] ability to make connections between the different parts of a text” (p. 398), and they explained how to use self-explanation during reading. Hence, it is unclear whether test expectancy itself (without self-explanation as an additional instructional aid) can reduce judgment bias. Furthermore, previous studies used only inference questions to investigate comprehension at the situation-model level. Application questions are another, more complex type of task addressing the situation model; they represent a measure of successful learning from text because they require students to transfer text information to realistic problems (Kintsch, 1998, 2018). Moreover, application questions are especially important in teacher education programs, as (pre-service) teachers are expected to use their professional knowledge (e.g., knowledge about pedagogical theories) to make pedagogical decisions.

We conducted two experiments to examine whether reading instructions without additional instructional aids are effective in helping students to accurately judge their comprehension. To circumvent a potential limitation of previous work, we used texts that were related to the participants’ field of study. Furthermore, we aimed to extend previous findings by using application questions (instead of inference questions).

Experiment 1

The primary aim of Experiment 1 was to investigate the unique effect of reading instructions (without any further instructional aid) on metacomprehension accuracy in terms of judgment bias. To extend previous research, we examined metacomprehension with respect to not only memory-based test questions but also application-based test questions. The latter assessed students’ ability to apply text information to educational practice situations. Moreover, following Wiley et al. (2016a), who, contrary to Chen (2022), found an effect of test expectancy on judgment bias, we used text materials with high ecological validity for our sample of pre-service teachers. A secondary aim of the present experiment was to replicate previous findings that students tend to use text-base-level cues rather than situation-model-level cues to judge their text comprehension when they receive only general reading instructions (e.g., Griffin et al., 2019; Thiede et al., 2010).

All participants read two texts. For the first text, all participants received general reading instructions with no specific information about what questions the future test would consist of (i.e., read carefully). For the second text, participants were given either one of two specific instructions (read to memorize single facts vs. read to apply the text information) or, in the control condition, the general reading instructions as given for the first text.

With the general reading instructions given to all participants for the first text, we aimed to test whether students’ judgments of comprehension correspond better to their memory test performance or to their application test performance (i.e., secondary research aim). Previous studies indicated that students base their comprehension judgments on text-base-level cues rather than situation-model cues (e.g., Griffin et al., 2019; Thiede et al., 2010). As text-base-level cues are suitable for memory test questions but less valid for more difficult questions that require a higher level of text comprehension, such as application questions, we hypothesized that:

- H1.1: Students who receive general reading instructions show an accurate judgment or slight overconfidence regarding their memory test performance.
- H1.2: Students who receive general reading instructions show overconfidence regarding their application test performance.

The aim of the two specific reading instructions for the second text was to investigate their effect on judgment bias compared to a control group that received general reading instructions (i.e., primary research aim). Overestimation can be understood as the result of a misalignment between the cues used to judge one’s comprehension and the requirements of future test questions. Griffin et al. (2019) found that a comprehension test expectancy resulted in higher relative accuracy regarding students’ comprehension test performance than a memory test expectancy or a control condition. Furthermore, Wiley et al. (2016a) showed that a comprehension test expectancy combined with additional instructions for processing the text resulted in lower overconfidence than in a control group. Therefore, we hypothesized that:

- H2.1: Students provided with memory instructions or general instructions show accurate judgments or a slight overestimation regarding their memory performance.
- H2.2: Students provided with application instructions are underconfident regarding their memory performance.
- H2.3: Students provided with memory instructions do not differ in their overestimation regarding their application performance from students provided with general instructions.
- H2.4: Students provided with application instructions show less overestimation regarding their application performance than students provided with memory or general instructions.

Method

Participants

Participants were 131 pre-service teachers (69.9% women) from a German university. All students were enrolled in a master’s teacher education program and had already attended at least one class in educational science. Participants were between 24 and 29 years old (see Footnote 1). The post hoc statistical power for all analyses ranged from 0.71 to 1.00, which can be considered sufficient according to Cohen (2013).

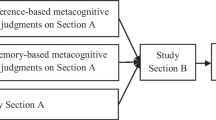

Design and procedure

To test the hypotheses, we conducted an experiment with the three-level factor instruction (general, memory, application). All participants read two texts, the first in the pretest phase and the second in the testing phase. The texts covered two different topics. To avoid position effects, we counterbalanced the texts: Half of the students read Text 1 in the pretest phase and Text 2 in the testing phase. For the other half of the sample, the order was reversed. As dependent variables, we examined memory performance bias and application performance bias.

The study was conducted as an online experiment during the COVID-19 pandemic. Students read the texts and took the tests individually at home. At the beginning of the experiment, students were informed of the importance of reading and comprehending scientific texts for their academic performance. To increase their motivation to participate, they were also informed that they would receive a book voucher worth 10 euros upon full participation in the study. The experiment lasted about 2 h on average, so it can be assumed that participants who completed it took part seriously.

Pretest phase: General reading instruction

In the pretest phase (average duration: 51 min), students first answered prior knowledge questions about the topics presented in the two texts. Second, to determine participants’ baseline metacomprehension accuracy, we asked them to read the first text, judge their comprehension of this text, and answer the comprehension questions (memory and application questions) about this text. In this phase, all students were given the same general reading instructions:

You will now be given a text on [topic]. Read the text carefully so that you understand it. Take as much time as you need to read the text. You get to decide when you have read the text sufficiently and think you are ready to answer questions about the text. Afterwards, you will be given questions about the text.

Immediately after reading (but before testing), participants judged their comprehension. Afterwards, students answered the memory and application questions.

Testing phase: Manipulation of reading instruction

After the students finished the pretest, they were randomly assigned to one of the three groups representing the different kinds of instructions (general, memory, application). In addition to the general instruction (see pretest phase), students in the memory and the application instruction groups were given further information about what the test would require.

Memory instruction group: After reading the text, you should be able to answer questions that pertain to information that was explicitly presented in the text, for example, questions about terms and connections described in the text.

Application instruction group: After reading the text, you should be able to answer questions that require you to apply the information presented in the text and use it for explanations, for example, questions about using the terms and relationships described in the text to explain and solve a pedagogical problem.

Participants then proceeded in the same order as for the pretest phase: (a) reading the text, (b) judging their comprehension of the text, and (c) answering the test questions (memory and application). The testing phase lasted on average 56 min.

Text material

To promote ecological validity, we used texts on two different topics from the field of educational science. The first text addressed cognitive load theory (CLT; 658 words and 31 sentences). It was extracted from an established German textbook (Brünken et al., 2019) and modified slightly (i.e., references and additional information were removed). The second text was about the control-value theory (CVT) of achievement emotions (586 words and 34 sentences) and was also taken from an established German textbook (Wild & Möller, 2009). This original text was shortened and modified slightly (i.e., references and additional information were removed) to increase readability. According to the LIX index (see Footnote 2; CLT: 64.7, CVT: 64.2), the two texts were very similar in their linguistic parameters; both had a high degree of difficulty and could be classified as academic (cf. Lenhard & Lenhard, 2022). In addition to the LIX index, the two texts were tested for comprehensibility on a small pilot sample of pre-service teacher students (N = 48). The questionnaire by Friedrich (2017) was used to assess the comprehensibility of the two texts in terms of word difficulty, clarity of conception, sentence difficulty, argument density, reorganization effort, language, and perceived comprehensibility on a 5-point Likert scale. An independent-samples t-test showed no significant difference in students’ comprehensibility ratings between the two texts, t(46) < 1, p = .591 (CLT: M = 2.55, SD = 0.39; CVT: M = 2.60, SD = 0.34). Thus, both texts were rated as being of average comprehensibility (see Footnote 3).
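The LIX values above can, in principle, be reproduced from simple text counts. The sketch below assumes the standard LIX definition (mean sentence length in words plus the percentage of words longer than six letters); its tokenization rules (runs of letters as words; ".", "!", "?" and ":" as sentence ends) are our simplifying assumptions and may differ from the tool behind the reported values, and both helper names are hypothetical.

```python
import re


def lix(text: str) -> float:
    """LIX = words / sentences + 100 * long_words / words.

    Assumption: a word is a run of letters (Unicode-aware, so German
    umlauts count), a long word has more than six letters, and a
    sentence ends at '.', '!', '?' or ':'.
    """
    words = re.findall(r"[^\W\d_]+", text)
    sentences = [s for s in re.split(r"[.!?:]+", text) if s.strip()]
    if not words or not sentences:
        raise ValueError("text needs at least one word and one sentence")
    long_words = sum(1 for w in words if len(w) > 6)
    return len(words) / len(sentences) + 100 * long_words / len(words)


def implied_long_word_percent(lix_value: float, n_words: int, n_sentences: int) -> float:
    """Back out the percentage of long words from a reported LIX and counts."""
    return lix_value - n_words / n_sentences


print(round(lix("The cat sat. Elephants wandered everywhere."), 1))  # 53.0
print(round(implied_long_word_percent(64.7, 658, 31), 1))  # 43.5 (CLT text)
```

With the reported counts, a LIX of 64.7 for the CLT text implies that roughly 43.5% of its words are long words; for the CVT text (586 words, 34 sentences, LIX 64.2), the implied share is roughly 47.0%. The similar overall values thus arise from somewhat different mixes of sentence length and word length.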

Measures

Prior knowledge

For each text, we constructed a prior knowledge test with six multiple-choice (MC) items, each with four answer options. We used the multiple true/false scoring method to score “both selection of relevant information and nonselection of irrelevant information” (Betts et al., 2022, p. 162). This procedure was chosen to minimize the probability of guessing. Students received one point for each correctly checked answer and each correctly unchecked answer. The total score per text was the sum of all points achieved across the six items, with a maximum of 24 points.
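The multiple true/false rule can be stated compactly: an answer option earns a point whenever the student’s decision (checked or unchecked) agrees with the answer key. The sketch below assumes options are identified by letters; the function names are hypothetical and not taken from the study materials.

```python
from __future__ import annotations


def score_mtf_item(checked: set[str], keyed: set[str], options: list[str]) -> int:
    """Multiple true/false scoring: one point per option classified correctly,
    i.e., keyed options that were checked plus non-keyed options left unchecked."""
    return sum(1 for opt in options if (opt in checked) == (opt in keyed))


def score_test(items: list[tuple[set[str], set[str], list[str]]]) -> int:
    """Sum the item scores; each item is given as (checked, keyed, options)."""
    return sum(score_mtf_item(c, k, o) for c, k, o in items)


OPTIONS = ["A", "B", "C", "D"]
# Key {A, C}; the student checks A and B, so only A and D are correct decisions.
print(score_mtf_item({"A", "B"}, {"A", "C"}, OPTIONS))  # 2
# Six perfectly answered four-option items reach the maximum of 24 points.
print(score_test([({"A"}, {"A"}, OPTIONS)] * 6))  # 24
```

Applied to four items with three options each, the same rule yields a maximum of 12 points.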

Comprehension performance

For each text, we developed one memory test and one application test. The memory test focused on students’ ability to remember original or paraphrased sentences. The memory test consisted of four MC items for each text. Each MC item contained three answer options. As in the prior knowledge test, students received one point for each correctly checked answer and each correctly unchecked answer. The maximum score per text was 12 points (maximum score of 3 points for each item).

The application test questions addressed students’ ability to apply text information to solve pedagogical problems. For each text, the application test included two pedagogical vignettes with three questions each. An example vignette of the application test is:

Leon attends 6th grade. Mathematics in particular causes him difficulties. In the morning, he woke up and did not feel well. When his mother asked him what was wrong, he replied, “I’m writing a math exam today and I’m scared. I am sure that I will do everything wrong because I couldn’t solve a single assignment correctly while studying.”

One of the three questions was open-ended; the other two were MC items (with four or five answer options as well as an additional open answer field in which students were asked to justify their answer). An example of an open-ended item and an MC item is shown in Table 1.

Learning from text means that “text information be integrated with the reader’s prior knowledge and become part of it” (Kintsch, 1998, p. 290). We therefore chose both open-ended tasks and multiple-response tasks with an additional open response field to examine whether students had gained a deep comprehension and were able to relate the text information to the vignettes. The MC items were scored in the same manner as the memory test questions. For the open-ended items, we developed a coding scheme that differentiated four levels of students’ application of text information to explain the pedagogical cases. Items that were not answered were scored with 0 points. Two independent raters coded students’ answers to the open-ended items and showed good agreement (CLT text: Cohen’s κ = 0.85; CVT text: κ = 0.82). The total score on the application test was the sum of the points achieved on the multiple-choice items and the open-ended items for each text, with a maximum of 42 points.
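Cohen’s κ corrects the observed agreement p_o for the agreement p_e expected by chance, κ = (p_o - p_e) / (1 - p_e). The sketch below computes the unweighted κ; whether an unweighted or weighted variant was used for the four-level coding scheme is not stated here, so this is an assumption, and the ratings are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a: list, rater_b: list) -> float:
    """Unweighted Cohen's kappa for two raters coding the same answers."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same, non-empty set of answers")
    n = len(rater_a)
    # Observed agreement: share of answers given the same code by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: products of both raters' marginal proportions per code.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1.0:  # both raters used one identical code throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes (levels 0-3) assigned by two raters to eight answers.
a = [0, 1, 2, 3, 3, 2, 1, 0]
b = [0, 1, 2, 3, 2, 2, 1, 0]
print(round(cohens_kappa(a, b), 3))  # 0.833
```

For ordinal codes, a weighted κ (e.g., scikit-learn’s `cohen_kappa_score` with `weights="linear"`) would penalize adjacent-level disagreements less than distant ones.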

The memory test questions asked about different facets of the topics covered in the texts to capture the breadth of acquired knowledge and were deliberately not optimized for high homogeneity (Klauer, 1987; Streiner, 2003). Accordingly, McDonald’s omega was low for the memory test (CLT: ω = 0.54, CVT: ω = 0.44), whereas it was acceptable for the application test (CLT: ω = 0.75, CVT: ω = 0.80).
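For a unidimensional test, McDonald’s ω can be computed from factor loadings λ_i and residual variances ψ_i as ω = (Σλ_i)² / ((Σλ_i)² + Σψ_i). The sketch below assumes a single-factor model with standardized loadings (so ψ_i = 1 - λ_i²); the loadings are hypothetical and only illustrate why items that deliberately cover different facets, and therefore load weakly on a common factor, yield a low ω.

```python
from __future__ import annotations


def mcdonalds_omega(loadings: list[float], residuals: list[float] | None = None) -> float:
    """Omega total for a one-factor model.

    If residual variances are not given, standardized loadings are assumed,
    so each residual variance is 1 - loading**2.
    """
    if residuals is None:
        residuals = [1 - lam**2 for lam in loadings]
    common = sum(loadings) ** 2
    return common / (common + sum(residuals))


# Hypothetical standardized loadings for four items.
heterogeneous = [0.5, 0.5, 0.5, 0.5]  # items tapping different facets
homogeneous = [0.8, 0.8, 0.8, 0.8]    # items tapping one narrow construct
print(round(mcdonalds_omega(heterogeneous), 2))  # 0.57
print(round(mcdonalds_omega(homogeneous), 2))    # 0.88
```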

Judgment of comprehension

In line with Chen (2022) and Wiley et al. (2016a), students’ comprehension judgments were assessed on a continuous rating scale. Students were asked to indicate how well they understood the text, ranging from 0% (not at all) to 100% (very well). Because we used a single judgment of comprehension (instead of separate judgments for the memory and application tasks), we were able to examine whether students’ judgments were better aligned with their performance on the memory test or with their performance on the application test.

Judgment bias

Judgment bias was calculated as the signed difference between a student’s judgment of their comprehension and their performance (expressed as a percentage). We calculated one bias score for memory test performance and one for application test performance. Positive values indicate overconfidence, and negative values indicate underconfidence.
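A minimal sketch of this computation, assuming performance is expressed as a percentage of the maximum test score (12 points for the memory test and 42 for the application test); the student values below are hypothetical.

```python
def percent_score(points: float, max_points: float) -> float:
    """Test performance as a percentage of the maximum score."""
    return 100 * points / max_points


def judgment_bias(judgment_pct: float, points: float, max_points: float) -> float:
    """Signed bias: > 0 means overconfidence, < 0 means underconfidence."""
    return judgment_pct - percent_score(points, max_points)


# Hypothetical student: judges comprehension at 70%,
# scores 9/12 on the memory test and 21/42 on the application test.
memory_bias = judgment_bias(70, 9, 12)        # 70 - 75 = -5 (underconfident)
application_bias = judgment_bias(70, 21, 42)  # 70 - 50 = 20 (overconfident)
print(memory_bias, application_bias)  # -5.0 20.0
```

Because the same single judgment enters both scores, one rating can be underconfident with respect to one test and overconfident with respect to the other.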

Manipulation check

To check whether students followed the given instructions, we assessed after reading which reading goals they had pursued. To do so, we used a questionnaire with two scales of four items each: (a) memorization processing goals (adapted from Richter, 2003; sample item: “While reading, I mainly wanted to remember the facts mentioned in the text.”; Cronbach’s α = 0.75) and (b) application processing goals (based on the LIST questionnaire by Wild & Schiefele, 1994; sample item: “While reading, I was particularly interested in whether I could apply the text information to examples.”; α = 0.72).

From a theoretical perspective, reading goals shape how a text is processed. Therefore, the different instructions should affect students’ reading process. Reading time, as an indicator of processing effort during reading, was recorded by the computer and represents the time spent on the text pages in seconds per word.

Data handling and data analyses

The assumption of normality was tested for the examined variables in the total sample using the Shapiro-Wilk test. Although a few of the variables were not normally distributed, we used parametric tests (Blanca Mena et al., 2017). We treated values more than 2 SD above or below the mean as outliers. Because analyses with the outliers replaced yielded the same pattern of results as the original data, we used the original data for the analyses. Homogeneity of variance was tested as part of the hypothesis tests, and violations are flagged in the “Results” section.
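The outlier criterion can be sketched as follows. This assumes the sample standard deviation (n - 1 in the denominator) and only flags values; how flagged values were replaced in the robustness analysis is not specified here, so no replacement rule is implemented. The bias scores are hypothetical.

```python
from __future__ import annotations

from statistics import mean, stdev


def flag_outliers(values: list[float], k: float = 2.0) -> list[bool]:
    """Flag values outside mean +/- k * SD (sample SD)."""
    m, sd = mean(values), stdev(values)
    lower, upper = m - k * sd, m + k * sd
    return [not (lower <= v <= upper) for v in values]


bias_scores = [10, 11, 9, 10, 12, 8, 10, 11, 9, 40]  # hypothetical
flags = flag_outliers(bias_scores)
print([v for v, f in zip(bias_scores, flags) if f])  # [40]
```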

To investigate H1.1, we conducted a one-sample t-test against zero with memory performance bias as the dependent variable. Thereby, we investigated whether the judgment of memory performance was significantly biased toward overestimation (significantly positive value) or underestimation (significantly negative value). Likewise, to test H1.2, we conducted a one-sample t-test against zero with application performance bias as the dependent variable to investigate whether application performance bias was greater than zero.
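The test statistic behind these one-sample t-tests, together with an effect size d, can be sketched as follows, assuming d is computed as the mean bias divided by its sample standard deviation (so that d = t/√n). The bias values are hypothetical; a p-value additionally requires the t distribution, for example via `scipy.stats.ttest_1samp(bias, 0.0)`.

```python
from __future__ import annotations

import math
from statistics import mean, stdev


def one_sample_t(values: list[float], mu: float = 0.0) -> tuple[float, int, float]:
    """Return (t, df, d) for a one-sample t-test of the mean against mu."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    diff = mean(values) - mu
    sd = stdev(values)              # sample SD (n - 1 denominator)
    t = diff / (sd / math.sqrt(n))
    d = diff / sd                   # one-sample Cohen's d
    return t, n - 1, d


bias = [10, -5, 20, 15, 0]  # hypothetical bias scores (judgment - performance)
t, df, d = one_sample_t(bias)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")  # t(4) = 1.73, d = 0.77
```

Under this definition, for instance, t(63) = 3.41 with n = 64 corresponds to d = 3.41/8 ≈ 0.43.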

Before examining the effects of reading instruction on judgment bias, we conducted two univariate ANOVAs with instruction group as the independent variable and, respectively, the average importance rating for memorization processing goals and the average importance rating for application processing goals as the dependent variable. In doing so, we tested whether the reading instructions were successful, that is, whether students had adopted the reading goals that they had been instructed to pursue during reading.

To investigate H2.1 and H2.2, we conducted one-sample t-tests against zero on memory performance bias separately for each instruction group. Thereby, we investigated whether the judgment of memory performance was significantly biased toward overestimation (significantly positive value) or underestimation (significantly negative value) in each instruction group. In addition, we conducted contrast analyses to investigate differences in memory performance bias between the three instruction groups. Likewise, to test H2.3 and H2.4, we conducted one-sample t-tests against zero on application performance bias separately for each instruction group, as well as contrast analyses to investigate differences in application performance bias between the three instruction groups. All analyses were performed using IBM SPSS Statistics 29.

Results

Table 2 shows the descriptive statistics for the performance measures, the comprehension judgment, and the judgment bias measures from the pretest phase with the general reading instruction.

Preparatory analyses

Regarding prior knowledge, participants answered, on average, 65.48% (SD = 16.28) of the questions concerning the CLT text and 69.78% (SD = 19.15) of the questions concerning the CVT text correctly. Prior knowledge for the CVT text was significantly higher than for the CLT text, t(129) = 3.15, p = .002, Cohen’s d = 0.28. Furthermore, we tested for a priori differences in prior knowledge between the three instruction groups. An ANOVA showed that prior knowledge regarding the CVT text did not differ significantly between the instruction groups, F(2, 127) = 1.20, p = .304. However, prior knowledge concerning the CLT text differed significantly between instruction groups, F(2, 127) = 4.07, p = .019, η² = 0.06, as students in the memory instruction group (M = 60.65, SD = 17.17) had significantly lower prior knowledge than students in the application instruction group (M = 70.27, SD = 12.79), ΔM = 9.62 (SD = 3.37), 95% CI [1.43, 17.80], p = .015. Students in the control group (M = 65.65, SD = 17.42) did not differ significantly in their prior knowledge for the CLT text from students in the memory instruction group, ΔM = 5.00 (SD = 3.44), 95% CI [−3.33, 13.34], p = .443, or from students in the application instruction group, ΔM = 4.62 (SD = 3.45), 95% CI [−3.76, 12.99], p = .552. To check whether the a priori difference in prior knowledge for the CLT text influenced the effect of the reading instructions on the dependent measures, we calculated two ANCOVAs, each with instruction group as the independent variable and prior knowledge as the covariate, and with memory performance bias and application performance bias, respectively, as the dependent variable.
The results showed that the interaction term between instruction group and prior knowledge was nonsignificant for both memory performance bias, F(2, 60) < 1, p = .410, and application performance bias, F(2, 60) < 1, p = .602, which suggested that the existing difference in prior knowledge did not distort the results for the two bias measures.
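The interaction check above tests the homogeneity-of-regression-slopes assumption of ANCOVA. The sketch below is a dependency-free illustration of that test, not the SPSS routine used in the study: assuming ordinary least squares with dummy-coded groups, it compares the model with the group × prior-knowledge interaction against the model without it via an F test on the change in residual sum of squares. With three groups and one covariate, the full model has six parameters, so the test has 2 and n − 6 degrees of freedom. Function names and data are hypothetical.

```python
def ols_rss(X, y):
    """Residual sum of squares of an OLS fit, solving (X'X) b = X'y by
    Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    # Augmented normal-equation matrix [X'X | X'y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))]
         for i in range(k)]
    for c in range(k):
        pivot = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[pivot] = A[pivot], A[c]
        for r in range(k):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    beta = [A[i][k] / A[i][i] for i in range(k)]
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    return sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))


def slope_homogeneity_test(groups, covariate, outcome):
    """F test for the group x covariate interaction (groups coded 0, 1, 2)."""
    # Reduced model: intercept, two group dummies, common covariate slope
    reduced = [[1.0, float(g == 1), float(g == 2), x]
               for g, x in zip(groups, covariate)]
    # Full model adds the products of each group dummy with the covariate
    full = [row + [row[1] * row[3], row[2] * row[3]] for row in reduced]
    rss_reduced = ols_rss(reduced, outcome)
    rss_full = ols_rss(full, outcome)
    df1, df2 = 2, len(outcome) - len(full[0])
    F = ((rss_reduced - rss_full) / df1) / (rss_full / df2)
    return F, df1, df2


# Hypothetical data: 4 students per group, prior knowledge scores 1-4
groups = [0] * 4 + [1] * 4 + [2] * 4
prior = [1, 2, 3, 4] * 3
noise = [0.1, -0.1, -0.1, 0.1] * 3
parallel = [10 * (g + 1) + 2 * x + e for g, x, e in zip(groups, prior, noise)]
diverging = [10 * (g + 1) + (g + 1) * x + e
             for g, x, e in zip(groups, prior, noise)]

print(slope_homogeneity_test(groups, prior, parallel))   # F close to 0
print(slope_homogeneity_test(groups, prior, diverging))  # F close to 250
```

A nonsignificant F, as in the parallel case, indicates that the covariate relates to the outcome in the same way in every group, which is the condition under which the ANCOVA adjustment is interpretable.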

Moreover, we examined potential effects of the text topic. Compared with the CVT text, the CLT text resulted in significantly higher memory test performance, ΔM = 8.42, 95% CI [2.84, 14.00], t(128) = 2.99, p = .003, d = 0.52, a higher judgment of comprehension, ΔM = 7.07, 95% CI [0.99, 13.16], Welch’s t(123.89) = 2.31, p = .023, d = 0.40 (Footnote 4), and higher overconfidence regarding application test performance, ΔM = 10.10, 95% CI [3.60, 16.61], t(128) = 3.07, p = .003, d = 0.54. The two texts did not differ in application test performance, t(128) = 1.86, p = .065, or in memory performance bias, t(128) < 1, p = .571. Thus, all of the following analyses were conducted separately for each text.
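The between-text comparisons above report Cohen's d alongside independent-samples t statistics. A minimal sketch, assuming d was obtained as d = t·√(1/n1 + 1/n2) with n1 = 64 (CLT) and n2 = 66 (CVT), the group sizes implied by the degrees of freedom of the pretest-phase one-sample tests; under that assumption, the reported d values for memory test performance and application performance bias are reproduced. The Welch statistic and its Welch-Satterthwaite degrees of freedom, the standard alternative when Levene's test rejects equal variances, are shown with hypothetical summary statistics. Function names are illustrative.

```python
import math


def d_from_independent_t(t, n1, n2):
    """Cohen's d implied by a pooled-variance independent-samples t statistic."""
    return t * math.sqrt(1 / n1 + 1 / n2)


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    a, b = s1 ** 2 / n1, s2 ** 2 / n2  # squared standard error of each mean
    t = (m1 - m2) / math.sqrt(a + b)
    df = (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))
    return t, df


# Assumed group sizes: 64 participants read the CLT text, 66 the CVT text
print(round(d_from_independent_t(2.99, 64, 66), 2))  # memory test performance: 0.52
print(round(d_from_independent_t(3.07, 64, 66), 2))  # application bias: 0.54

# Hypothetical summary statistics with unequal variances
t, df = welch_t(m1=10.0, s1=2.0, n1=10, m2=8.0, s2=4.0, n2=10)
print(f"Welch t({df:.2f}) = {t:.2f}")
```

The Welch degrees of freedom are generally non-integer, which is why the judgment-of-comprehension comparison is reported with df = 123.89 rather than 128.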

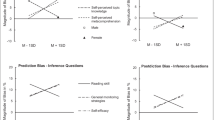

Memory performance bias and application performance bias (pretest phase)

H1.1 stated that students would show accurate judgments or slight overconfidence regarding their memory test performance. However, one-sample t tests against zero revealed statistically significant underconfidence regarding memory performance for both texts, CLT: t(63) = 3.41, p = .001, d = 0.43, and CVT: t(65) = 3.05, p = .003, d = 0.38. Thus, H1.1 was not supported.

H1.2 proposed that students would show overconfidence regarding their application test performance. Results showed statistically significant overconfidence regarding application performance for both texts, CLT: t(63) = 8.96, p < .001, d = 1.12, and CVT: t(65) = 4.03, p < .001, d = 0.49. Thus, H1.2 was supported.

The influence of reading instruction on memory performance bias and application performance bias (testing phase)

Manipulation check

We found no significant difference between the reading instruction groups in the average rating of importance for the memorization processing goals, F(2, 98) = 1.43, p = .245. This finding is consistent with the theory of multiple mental text representations, according to which the situation model is built on the propositional text base; memorizing the text content should therefore be relevant regardless of the reading instruction. As intended, the average rating of importance for the application processing goals differed significantly between instruction groups, F(2, 98) = 3.79, p = .026, η² = 0.07. Planned contrasts showed that students in the application instruction group gave higher ratings than students in the other two groups, t(98) = 2.61, p = .010, d = 0.60. The latter two groups did not differ significantly, t(98) < 1, p = .460.
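The planned contrasts in the manipulation check compare the application instruction group with the two other groups and then the memory instruction group with the control group. A minimal sketch of such a contrast test from group summary statistics, assuming the usual one-way ANOVA error term (pooled within-group variance) and the weights (−1, −1, 2) and (−1, 1, 0) for the groups in the order control, memory, application; the weights are one conventional choice, and the means, SDs, and group sizes are invented for illustration.

```python
import math


def contrast_t(weights, means, sds, ns):
    """t test of a linear contrast among independent group means.

    Uses the pooled within-group variance (ANOVA mean square error) with
    N - k degrees of freedom.
    """
    if abs(sum(weights)) > 1e-12:
        raise ValueError("contrast weights must sum to zero")
    df_error = sum(n - 1 for n in ns)
    mse = sum((n - 1) * s ** 2 for n, s in zip(ns, sds)) / df_error
    estimate = sum(w * m for w, m in zip(weights, means))
    se = math.sqrt(mse * sum(w ** 2 / n for w, n in zip(weights, ns)))
    return estimate / se, df_error


# Hypothetical importance ratings, in the order control, memory, application
means, sds, ns = [3.0, 3.2, 3.8], [1.0, 1.0, 1.0], [10, 10, 10]

t_app, df = contrast_t([-1, -1, 2], means, sds, ns)  # application vs. others
t_mem, _ = contrast_t([-1, 1, 0], means, sds, ns)    # memory vs. control
print(f"application vs. others: t({df}) = {t_app:.2f}")
print(f"memory vs. control:     t({df}) = {t_mem:.2f}")
```

Because the two weight vectors are orthogonal for equal group sizes, the two contrasts partition the between-group variation without overlap.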

In addition, a univariate ANOVA revealed a significant effect of the reading instructions on the time spent reading the text, F(2, 127) = 13.63, p < .001, η² = 0.18 (Footnote 5). Planned contrasts showed that students in the application instruction group had significantly longer reading times than students in the other two groups, t(127) = 4.99, p < .001, d = 0.93. The latter two groups did not differ significantly from each other in their reading time, t(127) = 1.67, p = .098. Hence, students seem to have used the reading instructions to adjust their reading time (see Table 3).

Memory performance bias

Regarding memory performance bias, we hypothesized that students provided with a memory instruction and students provided with a general instruction would make accurate or slightly overconfident judgments (H2.1), and that students provided with an application instruction would underestimate their memory performance (H2.2; see Table 4).

CLT text

The mean judgment bias for memory test performance (see Table 4) showed that, for the CLT text, all three instruction conditions tended toward underconfidence. However, this tendency was statistically significant only for the control group, t(24) = 1.93, p = .033, d = 0.39. The memory instruction group, t(17) < 1, p = .244, and the application instruction group, t(22) < 1, p = .285, did not show a significant tendency toward underconfidence (or overconfidence) for their memory test performance. Thus, H2.1 was partly supported and H2.2 was not confirmed.

CVT text

For the CVT text, participants in the control group, t(15) = 3.13, p = .003, d = 0.78, the memory instruction group, t(26) = 4.99, p < .001, d = 0.96, and the application instruction group, t(20) = 5.31, p < .001, d = 1.16, showed a significant tendency toward underestimation regarding their memory test performance (see Table 4). Hence, regarding the CVT text, H2.1 was not confirmed and H2.2 was supported.

Application performance bias

We assumed that students provided with a memory instruction do not differ in their overestimation regarding their application test performance from students provided with a general instruction (H2.3). We further proposed that students provided with the application instruction would show an accurate judgment or less overestimation compared to students in the memory instruction group or the control group (H2.4).

CLT text

For the CLT text, students in the control group, t(24) = 3.21, p = .002, d = 0.64, the memory instruction group, t(17) = 2.31, p = .017, d = 0.54, and the application instruction group, t(22) = 3.88, p < .001, d = 0.81, showed a considerable and significant extent of overestimation regarding their application performance (see Table 4). Moreover, overestimation did not differ between the memory instruction group and the control group, t(63) < 1, p = .521, which is in line with H2.3. However, contrary to H2.4, participants in the application instruction group did not differ in their overestimation from students in the other two conditions, t(63) < 1, p = .521. Thus, H2.3 was supported while H2.4 was not confirmed.

CVT text

Participants in the control group, t(15) < 1, p = .220, and the memory instruction group, t(26) < 1, p = .452, showed no statistically significant overestimation (or underestimation) for the CVT text (see Table 4). This result contradicted H2.3, although one aspect of H2.3, namely that both groups would not differ in their application performance bias, was supported, t(61) < 1, p = .391. Furthermore, as expected in H2.4, the application instruction group showed no significant bias toward overestimation (or underestimation), t(20) < 1, p = .410. However, these students did not judge their application performance more accurately than students in the other two conditions, t(61) < 1, p = .534, which partly contradicts H2.4. Thus, neither H2.3 nor H2.4 was fully supported.

Discussion of Experiment 1

The primary aim of Experiment 1 was to examine the effects of reading instructions without further instructional support on judgment bias when learning from text. In particular, we were interested in whether an application instruction would reduce overestimation of performance on application test questions. Overall, the results were mixed, with some hypotheses supported and others not. A main finding was that the application reading instruction had no debiasing effect on metacomprehension. With regard to our secondary research aim, we found that, when given only general instructions (i.e., “read carefully”) in the pretest phase, students overestimated their performance on the application test, which supported our hypothesis, but also tended to underestimate their performance on the memory test, which contradicted our hypothesis.

Memory performance bias and application performance bias (pretest phase)

The results from the pretest phase, in which participants received only general reading instructions (“read carefully”), showed that participants tended to overestimate their application test performance to a moderate to strong extent. This finding was consistent with our hypothesis and previous research (e.g., Hacker et al., 2000; Maki et al., 2005). Application tasks are complex tasks that go beyond the text base and require the construction of a situation model (Kintsch, 1998, 2018). However, students tend to use text-base-level cues to judge their comprehension (e.g., Thiede et al., 2010). Because text-based cues are inadequate for judging one’s application performance, students overestimate their application performance. The results are also in line with Schraw and Roedel (1994), who showed that difficult items (in this experiment, the application test) rather than easy items (the memory test) provoke overestimation. As overconfidence very likely leads to deficits in future learning activities (e.g., due to premature termination of learning; Dunlosky & Rawson, 2012), the present finding underlines the need for interventions that can effectively reduce such inaccurate judgments for application tests.

Regarding memory performance bias, results from the pretest phase suggested that the general reading instructions led students to be underconfident rather than, as we expected, accurate or slightly overconfident. However, this significant underestimation most likely resulted from the very high average performance on the memory test items. As judgment bias is mathematically dependent on the performance level (e.g., the higher the performance score, the higher the chance of underestimation; Hacker et al., 2000), the underestimation for the memory test most likely reflects a statistical artifact rather than an actual metacognitive effect (Schraw & Roedel, 1994).
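The dependency of bias on performance level can be illustrated with a short sketch, assuming that judgments and scores are both percentages and that bias is judgment minus performance. A student who scores 90% can overestimate by at most 10 points but underestimate by up to 90, so even judgments unrelated to comprehension yield a negative mean bias when performance is high. The "uninformative judge", whose judgments are spread evenly over 0 to 100, is a hypothetical baseline for illustration, not a model used in the study.

```python
def bias_bounds(performance):
    """Smallest and largest possible bias (judgment - performance), 0-100 scale."""
    return -performance, 100 - performance


def expected_bias_uninformative(performance):
    """Mean bias if judgments carried no information about comprehension,
    modeled (hypothetically) as judgments spread evenly over 0, 1, ..., 100."""
    judgments = range(101)
    return sum(j - performance for j in judgments) / len(judgments)


for score in (50, 70, 90):
    low, high = bias_bounds(score)
    print(score, (low, high), expected_bias_uninformative(score))
# prints:
# 50 (-50, 50) 0.0
# 70 (-70, 30) -20.0
# 90 (-90, 10) -40.0
```

The asymmetry of the possible range at high scores is what makes underestimation on an easy memory test partly a ceiling artifact rather than evidence of a metacognitive deficit.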

Effect of reading instructions on students’ memory performance bias and application performance bias (testing phase)

In general, students use memory-based cues instead of comprehension-related cues to judge their text understanding (Thiede et al., 2010). This usually results in a rather accurate judgment of memory performance but an overestimation of performance that taps comprehension, such as application performance. Therefore, reading instructions should support students in using judgment cues that are diagnostic for the requirements of the actual test. Specifically, we assumed that instructing learners to reach a level of understanding that enables them to apply the knowledge from the text to educational situations would reduce their overestimation of their actual application performance.

We found such a debiasing effect for the CVT text, in that the application instruction group showed no significant bias; however, the other two instruction groups showed no significant bias for this text either. For the CLT text, on the other hand, the application instruction had no significant debiasing effect. Thus, there was no clear evidence that the application instruction improved metacomprehension accuracy with regard to application performance.

However, students in the application instruction group spent significantly more time reading the text than students who received either general or memory instructions. Thus, the application instruction had some effect on reading behavior. Generally, longer reading times can occur when students monitor their text understanding more extensively or engage in more reading processes (e.g., Narvaez et al., 1999). It remains unclear why the longer reading time in the application instruction group did not translate into a less overconfident judgment of application performance for the CLT text, and why the two other reading instructions also yielded no significant overestimation of application performance for the CVT text.

The pattern of results might indicate that reading instructions, in particular application instructions, without further instructional support are not a reliable means to reduce judgment bias, which would be consistent with Chen’s (2022) findings. An alternative explanation could be that the design of the experiment overshadowed the effects of the reading instructions. More concretely, by reading the text and taking the comprehension test (both memory and application) in the pretest phase, students had already gained experience with the requirements of the comprehension test. Participants from all three conditions could have used this experience, deliberately or not, as an anchor for their comprehension judgment for the second text in the testing phase. Such anchoring effects have been found, for example, by Österholm (2015) and Zhao and Linderholm (2008), who showed that students draw on previous reading experiences when judging their comprehension of a current text (see also Foster et al., 2017). To rule out this explanation, we reexamined the impact of the reading instructions on judgment bias with a different study design.

Experiment 2

The aim of Experiment 2 was to restudy the effects of reading instructions without further instructional aid on memory performance bias and application performance bias with a different study design. Experiment 2 differed from Experiment 1 in that students read only one text for which they received one of three reading instructions (general vs. memory vs. application instruction). The tested hypotheses were the same as those in the testing phase of Experiment 1 (see H2.1 to H2.4).

Method

Participants

Participants were N = 164 pre-service teachers (70% female) from a German university. They were between 24 and 29 years old (Footnote 6). All students took part in a master’s teacher education program and had already attended at least one class in educational science. None of them participated in Experiment 1.

Design and procedure

The experiment followed a one-factor design with the three-level factor instruction (general, memory, application). Participants were randomly assigned to one of the three reading instructions. As in Experiment 1, the dependent variables were memory performance bias and application performance bias.

At the beginning of the experiment, participants completed the prior knowledge test. Afterwards, they received the reading instruction according to their experimental condition (i.e., “general,” “memory,” or “application”; see Experiment 1 for the instructions). They then read one text (either the CLT text or the CVT text, counterbalanced across participants). After reading, participants judged their text comprehension and answered the memory and application test questions.

Experiment 2 was also conducted as an online experiment due to the COVID-19 pandemic. Students were informed that they would receive a book voucher worth 10 euros upon full participation in the study, which was intended to increase their motivation to participate.

Text materials and measures

The text material, measures, and manipulation check were the same as in Experiment 1.

Results

Table 5 shows the descriptive statistics per group for the memory and application performance bias as well as for the performance measures and the judgment of comprehension.

Preparatory analyses

Regarding prior knowledge, participants answered on average 61% (SD = 16.79) of the questions in the prior knowledge test for the CLT text and 60% (SD = 24.82) of the questions in the prior knowledge test for the CVT text correctly. There was neither a significant interaction effect between text and instructional condition, F(2, 158) < 1, p = .630, η² = 0.01, nor a significant main effect of text, F(1, 158) < 1, p = .930, η² = 0.00, or of instructional condition, F(2, 158) < 1, p = .919, η² = 0.00.

The influence of reading instruction on memory performance bias and application performance bias

Because of the text topic effects found in Experiment 1, the hypotheses were tested separately for the two texts.

Manipulation check

We found no significant difference between the instruction groups for the average rating of importance for the memorization processing goals, F(2, 158) < 1, p = .919, η² = 0.01. As expected, the average rating of importance for the application processing goals yielded a significant difference between instruction groups, F(2, 158) = 8.73, p < .001, η² = 0.16. Planned contrasts showed that students in the application instruction group rated the application processing goals as more relevant to their reading than students in the other two groups, t(161) = 4.04, p < .001, d = 0.95. The latter two groups did not significantly differ from each other, t(161) < 1, p = .401.

In addition, a univariate ANOVA revealed a significant effect of instruction on the time spent reading the text, F(2, 161) = 9.59, p < .001, η² = 0.11 (Footnote 7). Planned contrasts showed that students in the application instruction group had significantly longer reading times than students in the other two groups, t(161) = 4.36, p < .001, d = 0.75. The latter two groups did not differ significantly from each other in their reading time, t(161) < 1, p = .576. Hence, students seem to have used the reading instructions to adjust their reading time (see Table 3).

Memory performance bias

Regarding memory performance bias, we hypothesized that students provided with a memory instruction and students provided with a general instruction would make accurate or slightly overconfident judgments (H2.1), and students provided with an application instruction would underestimate their memory performance (H2.2).

CLT text

The mean judgment bias for memory test performance (see Table 5) showed a significant underestimation for the control group, t(27) = 4.34, p < .001, d = 0.82, and the memory instruction group, t(31) = 4.63, p < .001, d = 0.82. The application instruction group, t(24) < 1, p = .706, did not show a significant tendency toward underconfidence (or overconfidence) for their memory test performance. Thus, H2.1 and H2.2 were not confirmed.

CVT text

For the CVT text, participants in the control group, t(27) = 3.13, p = .004, d = 0.59, the memory instruction group, t(28) = 5.65, p < .001, d = 1.05, and the application instruction group, t(21) = 5.49, p < .001, d = 1.17, showed a significant tendency toward underestimation regarding their memory test performance (see Table 5). Hence, regarding the CVT text, H2.1 was not confirmed and H2.2 was supported.

Application performance bias

We assumed that students provided with a memory instruction do not differ in their overestimation regarding their application test performance from students provided with a general instruction (H2.3). We further proposed that students provided with the application instruction would show an accurate judgment or less overestimation compared to students in the memory instruction group or the control group (H2.4).

CLT text

For the CLT text, students in the control group, t(27) = 10.62, p < .001, d = 2.01, the memory instruction group, t(31) = 8.21, p < .001, d = 1.45, and the application instruction group, t(24) = 6.52, p < .001, d = 1.30, showed a considerable and significant extent of overestimation regarding their application performance (see Table 5). Moreover, overestimation did not differ between the memory instruction group and the control group, t(82) < 1, p = .483, which is in line with H2.3. However, contrary to H2.4, participants in the application instruction group did not differ in their overestimation from students in the other two conditions, t(82) = 0.127, p = .209. Thus, H2.3 was supported while H2.4 was not confirmed.

CVT text

Students in the control group, t(27) = 3.91, p < .001, d = 0.74, showed, as expected in H2.3, statistically significant overestimation for the CVT text, whereas students in the memory instruction group, t(28) = 1.23, p = .114, d = 0.23, showed no significantly biased judgment, which contradicted H2.3. However, one aspect of H2.3, namely that both groups would not differ in their application performance bias, was supported, t(76) = 1.56, p = .123. Moreover, the application instruction group, t(21) < 1, p = .290, d = 0.12, did not show a significant tendency toward underconfidence or overconfidence for their application test performance (see Table 5), which was in line with H2.4. However, these students did not judge their application performance more accurately than students in the other two conditions, t(76) = 0.171, p = .245, which partly contradicted H2.4. Thus, neither H2.3 nor H2.4 was fully supported.

Discussion of Experiment 2 and general discussion

The aim of Experiment 2 was to restudy the findings from Experiment 1. The results from Experiment 2 were consistent with the findings from Experiment 1. For the CVT text, the application instruction group, but also the other two reading instruction groups, showed no significant judgment bias. For the CLT text, the application instruction had no debiasing effect and all instruction groups tended toward overestimation.

As in Experiment 1, students in the application instruction group had longer reading times than students in the other two experimental groups. Thus, the application instruction seemed to affect reading behavior. It remains unclear why this did not result in significantly higher application performance than under the other reading instructions, nor in a debiased judgment for the CLT text.

Furthermore, Experiment 2 showed the same pattern of results regarding application performance bias as Experiment 1. While all experimental groups overestimated their performance on the application test for the CLT text, their judgments for the CVT text were less biased. Importantly, the higher overestimation of application test performance for the CLT text resulted from higher judgments compared to the CVT text, while the level of performance was comparable. This text-related effect on the comprehension judgment might be due to different reading experiences, in particular the ease of processing. Ease of processing refers to the subjective feeling of error-free processing. A higher ease of processing usually results in higher judgments of comprehension (Forster et al., 2016; Rawson & Dunlosky, 2002) but not necessarily in higher performance (Rawson & Dunlosky, 2002, Experiment 3). Differences in ease of processing between the two texts in the present experiments might have been caused by differences in content complexity (other features were highly comparable between the CLT and CVT text; see the Method section of Experiment 1): The content of the CLT text referred to three concepts (i.e., intrinsic, extrinsic, and germane cognitive load) that did not need to be related to each other to explain the development of cognitive load during learning. In contrast, the CVT text contained two concepts (i.e., subjective control and value) that must be considered together to explain the origin of emotions. Therefore, the CLT text might have encouraged rather superficial text processing (see Graesser & Otero, 2013) and, hence, might have resulted in a higher ease of processing (see Dunlosky et al., 2006) than the CVT text. Thus, the present findings might partly reflect the fact that text characteristics are important for metacomprehension accuracy.

Moreover, these text-specific differences suggest that students rely more on experience-based cues, such as ease of processing, than on external cues, such as the reading instruction, when judging their comprehension. Future studies could therefore investigate whether processing ease affects the effectiveness of reading instructions.

General limitations and directions for future research

One limitation relates to the variety of cues students use when generating their judgments (Koriat & Levy-Sadot, 1999). Reading instructions should guide students’ reading processes, increase their attention, and support their selection of appropriate cues for judging their comprehension (Duchastel & Merrill, 1973; Wiley et al., 2005). In our study, the instructions informed students about the requirements of the future comprehension test. Although students understood the instructions, the instructions alone might not have been sufficient to help them select the most appropriate cues for judging their comprehension (Prinz et al., 2020). This is in line with the transfer-appropriate monitoring view (Son & Metcalfe, 2005). Further research is needed to examine (a) whether reading instructions informing students about the requirements of the future comprehension test have an impact on students’ judgments of their comprehension, and (b) whether reading instructions that more specifically address the required reading processes have such an impact. Further studies should also examine whether the impact of reading instructions could be increased by reminding students of the instructions and encouraging them to consider the instructions while generating their judgments.

A second limitation refers to the online setting of the study. Participants read the texts and took the tests individually at home. Compared with previous studies in laboratory settings, our participants’ learning environments were more diverse, and potential confounding variables could not be controlled. For example, we could not control for side activities, interruptions during participation, or the use of additional tools while reading and answering the test questions. Furthermore, the online setting may have resulted in differences in motivation and reading effort, which in turn may have influenced the outcome of the study. In addition, students could end the experiment prematurely without this being noticed. Moreover, because of the online setting, students could not mark the text or take notes while reading. This restriction may have reduced or prevented in-depth processing and should be addressed in future studies. Nevertheless, individual learning at home reflects the typical learning setting in higher education, and online instruction in general is becoming more prevalent. Therefore, despite the limitations of the online setting, the present results can be considered to have high ecological validity.

Conclusion

According to the cue-utilization framework, the accuracy of students’ judgments of learning depends on the validity of the cues they use to judge their learning. In the current two experiments, we focused on the misalignment between the experience-based cues used to judge comprehension and the requirements of the future test questions as a cause of overconfidence. Our results showed that judging one’s comprehension is a complex process and that reading instructions alone do not seem sufficient to help students accurately judge their comprehension. Finally, our results indicated that text characteristics should be considered in the investigation of metacomprehension accuracy.

Open practices statement

The materials of the experiment are available from the first author upon request. The experiment was not preregistered.

Data availability

The data is available on request.

Notes

1. Age had to be indicated in age categories.

2. The LIX index (Lenhard & Lenhard, 2014–2022) measures the readability of texts regarding text characteristics, such as the number of words, sentences, and difficult words (i.e., words with more than six letters).

3. The maximum value was five.

4. Variance homogeneity was tested with Levene’s test, according to which equality of variances cannot be assumed for the variable judgment of comprehension (p = .038).

5. Reading time was operationalized as seconds per word.

6. Age had to be indicated in age categories.

7. Reading time was operationalized as seconds per word.

References

Ackerman, R., & Goldsmith, M. (2011). Metacognitive regulation of text learning: On screen versus on paper. Journal of Experimental Psychology: Applied, 17(1), 18–32. https://doi.org/10.1037/a0022086

Betts, J., Muntean, W., Kim, D., & Kao, S. C. (2022). Evaluating different scoring methods for multiple response items providing partial credit. Educational and Psychological Measurement, 82(1), 151–176. https://doi.org/10.1177/0013164421994636

Blanca Mena, M. J., Alarcón Postigo, R., Arnau Gras, J., Bono Cabré, R., & Bendayan, R. (2017). Non-normal data: Is ANOVA still a valid option? Psicothema, 29(4), 552–557. https://doi.org/10.7334/psicothema2016.383

Brünken, R., Münzer, S., & Spinath, B. (2019). Pädagogische Psychologie: Lernen und Lehren. Bachelorstudium Psychologie [Educational psychology: Learning and teaching. Bachelor’s programme psychology]. Hogrefe.

Brunswik, E. (1955). Representative design and probabilistic theory in a functional psychology. Psychological Review, 62(3), 193–217. https://doi.org/10.1037/h0047470

Callender, A. A., & McDaniel, M. A. (2007). The benefits of embedded question adjuncts for low and high structure builders. Journal of Educational Psychology, 99(2), 339–348. https://doi.org/10.1037/0022-0663.99.2.339

Chen, Q. (2022). Metacomprehension monitoring accuracy: Effects of judgment frames, cues and criteria. Journal of Psycholinguistic Research, 51(3), 1–16. https://doi.org/10.1007/s10936-022-09837-z

Cohen, J. (2013). Statistical power analysis for the behavioral sciences. Elsevier Science.

Csanadi, A., Kollar, I., & Fischer, F. (2021). Pre-service teachers’ evidence-based reasoning during pedagogical problem-solving: Better together? European Journal of Psychology of Education, 36, 147–168. https://doi.org/10.1007/s10212-020-00467-4

de Bruin, A. B. H., Dunlosky, J., & Cavalcanti, R. B. (2017). Monitoring and regulation of learning in medical education: The need for predictive cues. Medical Education, 51(6), 575–584. https://doi.org/10.1111/medu.13267

Dinsmore, D. L., & Parkinson, M. M. (2013). What are confidence judgments made of? Students’ explanations for their confidence ratings and what that means for calibration. Learning and Instruction, 24, 4–14. https://doi.org/10.1016/j.learninstruc.2012.06.001

Duchastel, P. C., & Merrill, P. F. (1973). The effects of behavioral objectives on learning: A review of empirical studies. Review of Educational Research, 43(1), 53–69. https://doi.org/10.2307/1170121

Dunlosky, J., & Lipko, A. R. (2007). Metacomprehension: A brief history and how to improve its accuracy. Current Directions in Psychological Science, 16, 228–232. https://doi.org/10.1111/j.1467-8721.2007.00509.x

Dunlosky, J., & Rawson, K. A. (2012). Overconfidence produces underachievement: Inaccurate self evaluations undermine students’ learning and retention. Learning and Instruction, 22(4), 271–280. https://doi.org/10.1016/j.learninstruc.2011.08.003

Dunlosky, J., Baker, J. M. C., Rawson, K. A., & Hertzog, C. (2006). Does aging influence people’s metacomprehension? Effects of processing ease on judgments of text learning. Psychology and Aging, 21(2), 390–400. https://doi.org/10.1037/0882-7974.21.2.390

Forster, M., Leder, H., & Ansorge, U. (2016). Exploring the subjective feeling of fluency. Experimental Psychology, 63(1), 45–58. https://doi.org/10.1027/1618-3169/a000311

Foster, N. L., Was, C. A., Dunlosky, J., & Isaacson, R. (2017). Even after thirteen class exams, students are still overconfident: The role of memory for past exam performance in student predictions. Metacognition and Learning, 12, 1–19. https://doi.org/10.1007/s11409-016-9158-6

Friedrich, M. (2017). Textverständlichkeit und ihre Messung: Entwicklung und Erprobung eines Fragebogens zur Textverständlichkeit [Text comprehensibility and its measurement: Development and testing of a questionnaire on text comprehensibility]. Waxmann.

Graesser, A. C., & Otero, J. (2013). Introduction to the psychology of science text comprehension. In J. Otero, J. A. León, & A. C. Graesser (Eds.), The psychology of science text comprehension (pp. 1–15). Lawrence Erlbaum Associates.

Griffin, T. D., Wiley, J., & Thiede, K. W. (2008). Individual differences, rereading, and self-explanation: Concurrent processing and cue validity as constraints on metacomprehension accuracy. Memory & Cognition, 36(1), 93–103. https://doi.org/10.3758/MC.36.1.93

Griffin, T. D., Wiley, J., & Thiede, K. W. (2019). The effects of comprehension-test expectancies on metacomprehension accuracy. Journal of Experimental Psychology: Learning, Memory, and Cognition, 45(6), 1066–1092. https://doi.org/10.1037/xlm0000634

Hacker, D. J., Bol, L., & Bahbahani, K. (2008). Explaining calibration accuracy in classroom contexts: The effects of incentives, reflection, and explanatory style. Metacognition and Learning, 3(2), 101–121. https://doi.org/10.1007/s11409-008-9021-5

Hacker, D. J., Bol, L., Horgan, D. D., & Rakow, E. A. (2000). Test prediction and performance in a classroom context. Journal of Educational Psychology, 92(1), 160–170. https://doi.org/10.1037//0022-0663.92.1.160

Huff, J. D., & Nietfeld, J. L. (2009). Using strategy instruction and confidence judgments to improve metacognitive monitoring. Metacognition and Learning, 4(2), 161–176. https://doi.org/10.1007/s11409-009-9042-8

Jiang, L., & Elen, J. (2011). Why do learning goals (not) work: A reexamination of the hypothesized effectiveness of learning goals based on students’ behaviour and cognitive processes. Educational Technology Research and Development, 59(4), 553–573. https://doi.org/10.1007/s11423-011-9200-y

Kintsch, W. (1998). Comprehension: A paradigm for cognition. Cambridge University Press.

Kintsch, W. (2018). Revisiting the construction–integration model of text comprehension and its implications for instruction. In D. E. Alvermann, N. Unrau, M. Sailors, & R. B. Ruddell (Eds.), Theoretical models and processes of literacy (pp. 178–203). Routledge.

Klauer, K. J. (1987). Kriteriumsorientierte Tests [Criterion-oriented tests]. Hogrefe.

Klein, M., Wagner, K., Klopp, E., & Stark, R. (2015). Förderung anwendbaren bildungswissenschaftlichen Wissens bei Lehramtsstudierenden anhand fehlerbasierten kollaborativen Lernens: Eine Studie zur Replikation bisheriger Befunde sowie zur Nachhaltigkeit und Erweiterung der Trainingsmaßnahmen [Fostering applicable educational knowledge in student teachers through error-based collaborative learning: A study to replicate previous findings and to sustain and extend training interventions]. Unterrichtswissenschaft, 43(3), 225–244.

Koriat, A. (1997). Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126(4), 349–370. https://doi.org/10.1037/0096-3445.126.4.349

Koriat, A., & Levy-Sadot, R. (1999). Processes underlying metacognitive judgments: Information-based and experience-based monitoring of one’s own knowledge. In S. Chaiken & Y. Trope (Eds.), Dual-process theories in social psychology (pp. 483–502). Guilford Press.

Lenhard, W., & Lenhard, A. (2022). Berechnung des Lesbarkeitsindex LIX nach Björnson [Calculation of the readability index LIX according to Björnson]. https://doi.org/10.13140/RG.2.1.1512.3447

Locke, E. A., & Latham, G. P. (1990). A theory of goal setting & task performance. Prentice-Hall, Inc. https://doi.org/10.2307/258875

Maki, R. H., Shields, M., Wheeler, A. E., & Zacchilli, T. L. (2005). Individual differences in absolute and relative metacomprehension accuracy. Journal of Educational Psychology, 97(4), 723–731. https://doi.org/10.1037/0022-0663.97.4.723

McCrudden, M. T., & Schraw, G. (2007). Relevance and goal-focusing in text processing. Educational Psychology Review, 19(2), 113–139. https://doi.org/10.1007/s10648-006-9010-7

McCrudden, M. T., Magliano, J. P., & Schraw, G. (2010). Exploring how relevance instructions affect personal reading intentions, reading goals and text processing: A mixed methods study. Contemporary Educational Psychology, 35(4), 229–241. https://doi.org/10.1016/j.cedpsych.2009.12.001

Narvaez, D., van den Broek, P., & Ruiz, A. B. (1999). The influence of reading purpose on inference generation and comprehension in reading. Journal of Educational Psychology, 91(3), 488–496. https://doi.org/10.1037/0022-0663.91.3.488

Nietfeld, J. L., Cao, L., & Osborne, J. W. (2006). The effect of distributed monitoring exercises and feedback on performance and monitoring accuracy. Metacognition and Learning, 1(2), 159–179. https://doi.org/10.1007/s11409-006-9595-6

Österholm, M. (2015). What is the basis for self-assessment of comprehension when reading mathematical expository texts? Reading Psychology, 36(8), 673–699. https://doi.org/10.1080/02702711.2014.949018

Pieschl, S. (2009). Metacognitive calibration—an extended conceptualization and potential applications. Metacognition and Learning, 4(1), 3–31. https://doi.org/10.1007/s11409-008-9030-4

Prinz, A., Golke, S., & Wittwer, J. (2020). To what extent do situation-model-approach interventions improve relative metacomprehension accuracy? Meta-analytic insights. Educational Psychology Review, 32(4), 917–949.

Rawson, K. A., & Dunlosky, J. (2002). Are performance predictions for text based on ease of processing? Journal of Experimental Psychology: Learning, Memory, and Cognition, 28(1), 69–80. https://doi.org/10.1037//0278-7393.28.1.69

Rawson, K. A., Dunlosky, J., & Thiede, K. W. (2000). The rereading effect: Metacomprehension accuracy improves across reading trials. Memory & Cognition, 28, 1004–1010. https://doi.org/10.3758/bf03209348

Richter, T. (2003). Epistemologische Einschätzungen beim Textverstehen [Epistemological beliefs in text comprehension] (Doctoral dissertation).

Schraw, G., & Roedel, T. D. (1994). Test difficulty and judgment bias. Memory & Cognition, 22(1), 63–69. https://doi.org/10.3758/BF03202762

Son, L. K., & Metcalfe, J. (2005). Judgments of learning: Evidence for a two-stage process. Memory & Cognition, 33(6), 1116–1129. https://doi.org/10.3758/BF03193217

Soto, C., Gutiérrez de Blume, A. P., Jacovina, M., McNamara, D., Benson, N., & Riffo, B. (2019). Reading comprehension and metacognition: The importance of inferential skills. Cogent Education, 6(1), 1565067. https://doi.org/10.1080/2331186X.2019.1565067

Streiner, D. L. (2003). Starting at the beginning: An introduction to coefficient alpha and internal consistency. Journal of Personality Assessment, 80(1), 99–103. https://doi.org/10.1207/S15327752JPA8001_18

Thiede, K. W., Griffin, T. D., Wiley, J., & Anderson, M. C. M. (2010). Poor metacomprehension accuracy as a result of inappropriate cue use. Discourse Processes, 47(4), 331–362. https://doi.org/10.1080/01638530902959927

Thiede, K. W., Wiley, J., & Griffin, T. D. (2011). Test expectancy affects metacomprehension accuracy. British Journal of Educational Psychology, 81(2), 264–273. https://doi.org/10.1348/135910710X510494

Wild, E., & Möller, J. (Eds.). (2009). Pädagogische Psychologie [Educational psychology]. Springer. https://doi.org/10.1007/978-3-540-88573-3

Wild, K. P., & Schiefele, U. (1994). Lernstrategien im Studium: Ergebnisse zur Faktorenstruktur und Reliabilität eines neuen Fragebogens [Learning strategies in higher education: Results on the factor structure and reliability of a new questionnaire]. Zeitschrift für Differentielle und Diagnostische Psychologie, 15, 185–200.

Wiley, J., Griffin, T. D., & Thiede, K. W. (2005). Putting the comprehension in metacomprehension. Journal of General Psychology, 132, 408–428. https://doi.org/10.3200/GENP.132.4.408-428

Wiley, J., Griffin, T. D., Jaeger, A. J., Jarosz, A. F., Cushen, P. J., & Thiede, K. W. (2016a). Improving metacomprehension accuracy in an undergraduate course context. Journal of Experimental Psychology: Applied, 22(4), 393–405. https://doi.org/10.1037/xap0000096

Wiley, J., Griffin, T. D., Thiede, K. W., Jaeger, A. J., Jarosz, A. F., & Cushen, P. J. (2016b). Improving metacomprehension with the situation-model approach. In K. Mokhtari (Ed.), Improving reading comprehension through metacognitive reading strategies instruction (pp. 93–110). Rowman and Littlefield.

Winne, P. H., & Hadwin, A. F. (1998). Studying as self-regulated learning. In D. J. Hacker, J. Dunlosky, & A. C. Graesser (Eds.), Metacognition in educational theory and practice (pp. 277–304). Erlbaum.

Zhao, Q., & Linderholm, T. (2008). Adult metacomprehension: Judgment processes and accuracy constraints. Educational Psychology Review, 20(2), 191–206. https://doi.org/10.1007/s10648-008-9073-8

Funding

Open Access funding enabled and organized by Projekt DEAL. No funding was received for conducting this study.

Ethics declarations

All subjects participated voluntarily and received a small compensation for their participation. The Declaration of Helsinki as well as the joint Ethical Guidelines of the German Psychological Society (DGPs) and the Professional Association of German Psychologists (BdP) were adequately addressed.

Informed consent

The participants provided their written informed consent to participate in this study.

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Marion Händel and Stefanie Golke shared authorship.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Knellesen, J., Händel, M. & Golke, S. Effects of reading instructions on pre-service teachers’ judgment bias when learning from texts. Metacognition Learning 19, 319–343 (2024). https://doi.org/10.1007/s11409-023-09371-w

DOI: https://doi.org/10.1007/s11409-023-09371-w