Abstract

Metacognitive skills are often considered domain-general; therefore, they have the potential to transfer across domains, subjects, and tasks. However, transfer of metacognitive skills seldom occurs spontaneously. Schuster et al., (2020) showed that training can have beneficial effects on spontaneous near and far transfer of metacognitive skills. However, evidence that metacognitive skill transfer results in superior acquisition of content knowledge is still lacking. In the present study, we set out to extend prior findings by investigating whether students benefit from metacognitive skill training not only in terms of metacognitive skill application but also in terms of content knowledge acquisition in learning tasks of different transfer distances. A total of 243 fifth-grade students were randomly assigned to one of three conditions for the first 15 weeks of a school year: two hybrid metacognitive skill training conditions (metacognitive skills combined with one of two cognitive strategies) and one non-hybrid training condition (cognitive strategies or motivation regulation only). For the second 15 weeks of the school year, all students received non-hybrid training involving a new cognitive strategy. Spontaneous metacognitive skill transfer at different transfer distances (near and far) was tested after the first and after the second 15 weeks of training. The effect of hybrid metacognitive skill training on the acquisition of content knowledge was measured once, directly after the first 15 weeks. Results show that hybrid metacognitive skill training supported spontaneous transfer of metacognitive skills to learning scenarios of both near and far transfer distance. However, hybrid metacognitive skill training had a positive effect on content knowledge acquisition only if metacognitive skill transfer was near.


Introduction

Self-regulated learning plays a crucial role in lifelong learning. Therefore, we should help learners to organize their learning consciously by fostering self-regulated learning (SRL) in schools and universities (Schleicher, 2019). Learners need knowledge about cognitive, motivational, and metacognitive strategies; they need to be able to apply these strategies skillfully (Wirth et al., 2020); and they need to be able to apply them across different learning tasks and domains. However, whether SRL strategies are transferable between tasks and domains depends on how domain-specific the respective strategy is. The degree to which certain SRL strategies are domain-general or domain-specific has been discussed repeatedly without clear and consensual results (e.g., Greene et al., 2015; Meijer et al., 2006; Neuenhaus et al., 2011; Poitras & Lajoie, 2013; Raaijmakers et al., 2018; Rotgans & Schmidt, 2009; van Meeuwen et al., 2018; Veenman et al., 2006). Moreover, we are not aware of studies that have addressed the question of domain specificity experimentally (Raaijmakers et al., 2018). Based on theoretical considerations and our own previous empirical evidence, we assume that SRL strategies differ in their domain specificity. Cognitive strategies (such as text highlighting or summarizing) directly guide the processing of a specific learning task and of the content information to be learned. Therefore, we argue that cognitive strategies are rather task- and domain-specific. In contrast, research has shown that the skillful application of metacognitive strategies (such as planning, monitoring, evaluating, and regulating) can improve learning in many different domains (e.g., Aghaie & Zhang 2012; Biwer et al., 2020; Desoete et al., 2003; Meijer et al., 2006). Metacognitive strategies are higher-order strategies that do not directly guide the processing of a learning task or of the content knowledge to be learned.
They are applicable in every domain (Veenman et al., 2006). Thus, we consider metacognitive strategies to be domain-general (e.g., Donker et al., 2014; Schuster et al., 2020), and we assume that learners might transfer metacognitive strategies between tasks and domains. However, transfer seldom occurs spontaneously, especially if transfer is far, which raises the question of how to support metacognitive transfer. In our study, we examined whether training of metacognitive strategy application can be designed in a way that increases the likelihood of metacognitive transfer. We compared hybrid training (combined training of cognitive and metacognitive strategy application) with non-hybrid training (training of cognitive strategy application only) with regard to content knowledge acquisition and transfer of metacognitive strategies. Additionally, we varied transfer distance and investigated whether transfer of metacognitive strategies occurs not only under near but also under far transfer conditions.

The domain-general nature of metacognitive skills in self-regulated learning

Models of self-regulated learning and metacognition (e.g., Boekaerts 1999; Nelson & Narens, 1990; Winne & Hadwin, 1998; Zimmerman, 2013) describe learning as a dynamic process in which learners actively and constructively engage with three essential components of SRL: cognition, motivation, and metacognition (Schraw et al., 2006; Veenman et al., 2006). Within cognition, the cognitive strategies of rehearsing, organizing, and elaborating information play a crucial role in information processing (Weinstein et al., 2000), whereas motivation concerns the beliefs and attitudes that motivate the application of cognitive and metacognitive strategies (Schraw et al., 2006). According to Flavell (1979), metacognition refers to the monitoring and regulation of cognitive processes. The models cited above share the core idea of metacognition as the continuous monitoring of various aspects of the current state of a learning process. For example, according to Nelson & Narens (1990), learning is monitored at the object-level and, in line with the COPES model (Winne & Hadwin, 1998), evaluated against standards at the meta-level. Either the learner is on target, or the learner has to regulate (“control” sensu Nelson & Narens 1990; Winne & Hadwin, 1998). However, assumptions about metacognition differ in what the subject of monitoring and regulation at the object-level is (Veenman et al., 2006). For example, theories of metacognitive judgment accuracy and metacomprehension (Dunlosky & Lipko, 2007; Thiede et al., 2010) place acquired knowledge at the object-level. Learners generate metacognitive judgments of comprehension and regulate their learning according to the quality and quantity of acquired content knowledge. Other theories, such as the COPES model (Winne & Hadwin, 1998), additionally place cognitive operations at the object-level. Study tactics and (cognitive) learning strategies become the target of monitoring and evaluation, and learners regulate their learning process by toggling or editing their application of these tactics and strategies (for further theoretical elaboration on the interplay of monitoring and regulation in the context of metacognitive regulation, see Wirth et al., 2020). In our own studies, we place learning strategies at the object-level, and we consider metacognition a means of ensuring the quality of the application of cognitive learning strategies.

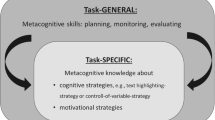

Veenman et al., (2006) divide metacognition into two main components: metacognitive knowledge and metacognitive skills. Metacognitive knowledge includes declarative knowledge about interactions between person, task, and strategy characteristics (Flavell, 1979). According to Paris et al., (1983), metacognitive knowledge comprises knowledge that a learning strategy exists (“knowing that”), knowledge about the operations that make up a learning strategy as well as the standards needed for monitoring the quality of strategy application (“knowing how”), and knowledge that helps to decide whether a learning strategy fits the specific learning task (“knowing when and why”). In a nutshell, metacognitive knowledge is knowledge about learning strategies that directly affect the processing of a learning task and of the content information to be learned. Therefore, metacognitive knowledge is needed to adjust the learning process to the specific situational demands a learner faces in a specific learning task (Wirth et al., 2020). Since metacognitive knowledge is closely tied to task-specific demands, we assume that it is rather task- or domain-specific. Metacognitive skills, in contrast, are skills for generating feedback about the current learning process. This feedback is generated by applying metacognitive strategies, so metacognitive skills can be considered the ability to apply metacognitive strategies. Metacognitive strategies involve planning, monitoring, evaluating, and regulating cognitive processes (Flavell et al., 2002). Planning refers to the forethought phase of the cyclical model of self-regulated learning (Schunk & Zimmerman, 1998), whereas monitoring, evaluating, and regulating refer to the performance phase and the self-reflection phase. Metacognitive skills act on learning strategies whose purpose is to process the learning task and the content information to be learned.
Accordingly, metacognitive strategies contribute to learning not directly but indirectly, since their application ensures that cognitive learning strategies are carried out with high quality (Leopold & Leutner, 2015). In contrast to metacognitive processes such as feeling of knowing or judgment of learning (Nelson & Narens, 1990), we focus on metacognitive skills that regulate the quality of the application of learning strategies. To do so, these skills draw on standards stored in metacognitive knowledge, especially when monitoring and evaluating the application of learning strategies. However, since they are not directly involved in processing the specific content information of a learning task, we assume that metacognitive skills are rather domain-general (Veenman et al., 2006).

This assumption of metacognitive skills being domain-general is supported by several training studies that showed positive effects of metacognitive skill training on learning performance and motivation (e.g., Koedinger et al., 2009; Veenman et al., 2006; Zepeda et al., 2015) in various domains, such as reading comprehension (e.g., Aghaie & Zhang 2012; Carretti et al., 2014), science and mathematics (Desoete et al., 2003; Leopold & Leutner, 2015; Zepeda et al., 2015), history (Meijer et al., 2006; Poitras & Lajoie, 2013), or even air traffic control (van Meeuwen et al., 2018). Accordingly, metacognitive skills are effective in different domains. However, in these studies, metacognitive skills were trained with tasks from one particular domain, and whether the trained skills had positive effects on learning was tested with learning tasks from the same or a similar domain. From an instructional point of view, the question arises whether metacognitive skills have to be trained separately in each domain or whether learners are able to transfer them more or less automatically between domains (Raaijmakers et al., 2018). Since transfer seldom occurs spontaneously, a further question is how to design metacognitive skill training to increase the likelihood that metacognitive skills transfer to new learning scenarios with positive effects on content knowledge acquisition.

Transfer of metacognitive skills

Just as metacognition is considered highly important in the context of learning, so is transfer. And, just as with metacognition, the term transfer is defined inconsistently. A brief definition of transfer comes from Lobato (2012): “Past experiences carry over from one context to the next.” (p. 232). From a theoretical point of view, there are different perspectives on transfer, such as actor-oriented transfer (e.g., Lobato 2012; Roorda et al., 2015), transfer of situated learning (e.g., Greeno et al., 1993; Gruber et al., 1996), or analogical transfer (Gentner, 1983, 1989). We use the idea of analogical transfer to understand the transfer of metacognitive skills in more detail, applying principles that have been studied in the context of analogical problem solving (e.g., Gick & Holyoak 1983) to SRL.

Analogical problem solving involves transferring a solution learned with one problem to another problem. To describe analogical problem solving, Gentner (1983, 1989) developed the structure-mapping theory (SMT) of analogical transfer. SMT distinguishes the source problem, from which an analogy originates, and the target problem, to which the solution is to be transferred. According to SMT, analogical transfer of the solution includes four steps (Holyoak & Thagard, 1989). The first step is retrieval: the problem solver searches prior knowledge for an already solved source problem that might be similar to the target problem. The retrieval of potential source problems strongly depends on their surface similarity to the target problem. That is, in this first step, potential source problems are retrieved from prior knowledge if they “look” similar to the target problem at first glance, e.g., because they share the same context or domain (Forbus et al., 1994; Reeves & Weisberg, 1994). Whether they are really analogous to the target problem is tested in the second step, when the deep structures of the source and target problems are mapped. The deep structure of a problem is defined by its problem space, which includes every problem state into which the problem can be transformed as well as the operations that transform one problem state into another. A problem’s solution is defined as a path through that problem space, that is, a series of operations that transform the initial state via intermediate states into the goal state. Thus, the deep structure of a problem defines its solution. Problems have a high deep structure similarity if their deep structures can be mapped onto each other (homomorphic or isomorphic problems). In that case, the source problem’s solution can be transferred directly to the target problem in the third step, and transfer is near.
If the deep structure of the source problem maps only partially onto the target problem’s deep structure, the source solution needs to be adapted, if possible, before being transferred to the target problem, and transfer is far. In the last step, problem solvers learn from analogical problem solving and integrate their problem-solving experience into a schema.
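The four steps of analogical transfer can be made concrete with a toy model. The sketch below illustrates the logic under simplifying assumptions and is not an implementation of SMT: each problem is reduced to a hypothetical set of surface features and a solution path (a sequence of operations), retrieval uses the Jaccard overlap of surface features with an arbitrary threshold, and mapping is approximated by the share of the target's operations that also occur in the source. All names (`Problem`, `analogical_transfer`, the threshold of 0.3) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    """Hypothetical problem representation (not part of SMT itself)."""
    name: str
    surface: frozenset   # surface features, e.g., domain or context
    operations: tuple    # solution path: operations from initial to goal state


def surface_similarity(a: Problem, b: Problem) -> float:
    """Jaccard overlap of surface features; cheap to compute, used for retrieval."""
    union = a.surface | b.surface
    return len(a.surface & b.surface) / len(union) if union else 0.0


def structural_fit(source: Problem, target: Problem) -> float:
    """Crude stand-in for mapping: share of target operations also found in the source."""
    if not target.operations:
        return 0.0
    shared = sum(op in source.operations for op in target.operations)
    return shared / len(target.operations)


def analogical_transfer(target: Problem, memory: list, threshold: float = 0.3):
    """Steps 1-3 of analogical transfer; step 4 (schema induction) is not modeled."""
    # Step 1: retrieval, driven by surface similarity only.
    candidates = [p for p in memory if surface_similarity(p, target) >= threshold]
    if not candidates:
        return None, "no source retrieved"
    # Step 2: mapping, choosing the retrieved source whose deep structure fits best.
    source = max(candidates, key=lambda p: structural_fit(p, target))
    fit = structural_fit(source, target)
    # Step 3: transfer, direct (near) if the solution maps fully, adapted (far) if partially.
    if fit == 1.0:
        return source, "near transfer: apply source solution directly"
    if fit > 0.0:
        return source, "far transfer: adapt source solution"
    return source, "mapping failed: no usable analogy"


memory = [
    Problem("chemistry reading", frozenset({"chemistry", "text"}), ("read", "ask", "underline")),
    Problem("chemistry experiment", frozenset({"chemistry", "experiment"}),
            ("hypothesize", "vary one variable", "conclude")),
]
history_text = Problem("history reading", frozenset({"history", "text"}), ("read", "ask", "underline"))
new_experiment = Problem("chemistry observation", frozenset({"chemistry", "experiment"}),
                         ("hypothesize", "observe", "conclude"))

for target in (history_text, new_experiment):
    source, verdict = analogical_transfer(target, memory)
    print(target.name, "<-", source.name if source else None, ":", verdict)
```

With these made-up inputs, the history reading task retrieves the chemistry reading task and is classified as near transfer, whereas the new chemistry experiment maps only partially onto the stored experiment and is classified as far transfer.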

Forbus and colleagues (1994) point out that two kinds of similarity are relevant for different steps of analogical transfer. Retrieval is primarily influenced by surface similarity, which derives from correspondences between object descriptions, independent of the relations between objects. For example, two problems can have a high surface similarity simply because they are presented in the same context: two chemistry tasks seem more similar than a chemistry task and a history task because they share the same domain. Surface similarity is easily recognized, and retrieval relies on it to detect potential sources in prior knowledge at low cost. However, just because two tasks are presented in the same context does not mean that they also have a high deep structure similarity. Deep structure similarity is determined during the mapping process and relies on relations, that is, in the case of problem solving, on the operations between problem states. If two problems can be solved by the same series of operations, their deep structure is the same. If two problems differ completely in their deep structure, they have to be solved in completely different ways.

The distinction between surface and deep structure similarity is crucial not only for analogical problem solving but also for SRL and metacognitive skill transfer. For example, imagine two texts, one from chemistry and one from history. Surface similarity is low, since the two texts seem to have nothing in common. However, deep structure similarity might be high. If, for both texts, the learners’ task were to identify relevant information, learners could apply a text-highlighting strategy when reading either text. In that case, deep structure similarity would be high, and the cognitive strategy applied to one text could easily be transferred to the other. In the same way, metacognitive skills applied to the text-highlighting strategy when reading the chemistry text could easily be transferred to the text-highlighting strategy when reading the history text, since planning, monitoring, evaluating, and regulating concern the same cognitive strategy and the same standards, and metacognitive knowledge about knowing that, how, when, and why is nearly the same. Thus, this would be an example of near transfer of metacognitive skills. In contrast, imagine two chemistry tasks, a reading task and an experiment. On a surface level, both tasks might share a lot of content since they are both chemistry tasks. However, the experimental task cannot be solved by any cognitive reading strategy, just as the reading task cannot be solved by experimental strategies. That is, the states and operations of the two tasks differ substantially, and deep structure similarity is low. Likewise, the metacognitive skills of planning, monitoring, evaluating, and regulating applied to the reading strategy rely on metacognitive knowledge and standards that differ greatly from metacognitive knowledge about experimental tasks and strategies. Thus, this would be an example of far transfer of metacognitive skills.
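The two examples above can be summarized in a small lookup sketch. As a deliberate simplification, it assumes that a task's surface is captured by its domain and its deep structure by the cognitive strategy it requires; metacognitive transfer distance then follows from the deep structure alone. The task labels and the function `transfer_profile` are hypothetical.

```python
# Hypothetical task descriptions: domain = surface feature, strategy = deep structure.
TASKS = {
    "chemistry text": {"domain": "chemistry", "strategy": "text highlighting"},
    "history text": {"domain": "history", "strategy": "text highlighting"},
    "chemistry experiment": {"domain": "chemistry", "strategy": "experimentation"},
}


def transfer_profile(source: str, target: str) -> dict:
    """Rate surface and deep structure similarity and derive metacognitive transfer distance."""
    s, t = TASKS[source], TASKS[target]
    surface = "high" if s["domain"] == t["domain"] else "low"
    deep = "high" if s["strategy"] == t["strategy"] else "low"
    # Only deep structure decides the distance: same strategy, same standards -> near.
    distance = "near" if deep == "high" else "far"
    return {"surface": surface, "deep": deep, "distance": distance}


print(transfer_profile("chemistry text", "history text"))
# {'surface': 'low', 'deep': 'high', 'distance': 'near'}
print(transfer_profile("chemistry text", "chemistry experiment"))
# {'surface': 'high', 'deep': 'low', 'distance': 'far'}
```

The two calls reproduce the dissociation described in the text: low surface but high deep structure similarity yields near transfer, while high surface but low deep structure similarity yields far transfer.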

As we assume that metacognitive skills are domain-general, one might argue that metacognitive skills are not transferred from one task to another but simply applied to different tasks and domains. However, whether and to what extent metacognitive skills of planning, monitoring, evaluating, and regulating are transferred or merely applied depends on the learners’ strategic maturity. For example, Leopold et al., (2007) showed that younger students might be cognitively overloaded when asked to reflect on their strategy use. Accordingly, it can be assumed that younger learners (e.g., at the end of primary school or the beginning of secondary school) do not yet possess well-developed metacognitive skills of planning, monitoring, evaluating, and regulating but need teaching and training. This assumption is confirmed by the findings of Schuster et al., (2020), who showed that, before training, fifth graders possessed none of the metacognitive skills trained afterwards. At this stage, metacognitive skills are not yet domain-general. They first have to be learned by applying them to a specific learning task and are therefore task-specific at the beginning. By transferring metacognitive skills learned with one learning task to one or more other learning tasks, learners make these skills increasingly domain-general. That is, metacognitive skills become domain-general only by being transferred to different cognitive strategies. Once they are domain-general, they are “just” applied to different learning strategies, tasks, and domains.

The effect of a hybrid-training approach on transfer of metacognitive skills

From an instructional point of view, the question arises how to design metacognitive skill training to increase the likelihood of both near and far metacognitive skill transfer. Within a hybrid-training approach (Schuster et al., 2020), students learn to use metacognitive skills to regulate the application of a cognitive strategy. Compared with non-hybrid training, which addresses cognitive or motivational strategies without fostering metacognitive regulation, such training increases the quality of strategy application (Leopold & Leutner, 2015). The advantage of hybrid metacognitive skill training is that learners learn both the criteria of high-quality strategy application (metacognitive knowledge) and how to use these criteria when planning, monitoring, evaluating, and regulating (metacognitive skills). Hybrid training consists of a series of training tasks that require the repeated and consistent application of a certain cognitive strategy. The repeated and consistent use of the same quality criteria therefore supports the automatization of metacognitive skill execution (Anderson, 1982). As a consequence, metacognitive skills require less working memory capacity and leave more capacity for direct information processing, that is, content knowledge acquisition (Wirth et al., 2020). To give an example, in hybrid metacognitive skill training, students learn the steps of a high-quality application of a cognitive strategy, such as a three-step reading strategy (reading the paragraph, formulating a question about the content of the paragraph, and underlining the answer), in combination with the metacognitive skills of planning, monitoring, evaluating, and regulating.
In this example, reading in hybrid metacognitive skill training would therefore consist of at least six steps: (1) planning the use of the three-step reading strategy, (2) reading the paragraph, (3) formulating a question about the content of the paragraph, (4) underlining the answer, (5) monitoring the use of the three-step reading strategy, and (6) evaluating and regulating its use. In comparison, non-hybrid training would consist only of the three-step reading strategy (reading the paragraph, formulating a question about the content of the paragraph, underlining the answer) without metacognitive skills.
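The contrast between hybrid and non-hybrid training can be expressed as a simple wrapper: hybrid training surrounds the steps of a cognitive strategy with metacognitive steps, whereas non-hybrid training presents the strategy steps alone. The sketch below, with the hypothetical helper `training_sequence`, reproduces the six-step and three-step sequences of the reading example; the experimentation strategy described in the Method section is included to show that the metacognitive wrapper stays the same while the inner, task-specific steps change.

```python
READING_STRATEGY = (
    "read the paragraph",
    "formulate a question about the content of the paragraph",
    "underline the answer",
)
EXPERIMENT_STRATEGY = (
    "derive a hypothesis",
    "check the hypothesis with the control-of-variables strategy",
    "draw a conclusion",
)


def training_sequence(strategy: tuple, hybrid: bool) -> list:
    """Return the steps practiced in one training task (hypothetical helper)."""
    steps = list(strategy)
    if not hybrid:
        return steps  # non-hybrid: cognitive strategy only
    # hybrid: the same metacognitive steps wrap whichever cognitive strategy is trained
    return (["plan the use of the strategy"] + steps
            + ["monitor the use of the strategy",
               "evaluate and regulate the use of the strategy"])


for step_no, step in enumerate(training_sequence(READING_STRATEGY, hybrid=True), start=1):
    print(step_no, step)
```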

Previous research showed that hybrid training enhances near transfer, that is, transfer when the deep structure similarity between training and transfer task is high (e.g., both tasks involving a reading strategy: Aghaie & Zhang 2012; Souvignier & Mokhlesgerami, 2006; or both involving a problem-solving strategy: Raaijmakers et al., 2018). In contrast, research on far transfer, that is, transfer when deep structure similarity between training and transfer task is low, is scarce. Schuster et al., (2020) investigated students’ near and far transfer of metacognitive skills by comparing hybrid training, non-hybrid training, and control group training. In their study, fictive learning scenarios were used to measure near and far transfer: in scenario-based vignettes, students were asked to describe how they intended to approach a given learning task. In the near transfer learning tasks, students were to apply the same cognitive strategy as learned in the hybrid training (high deep structure similarity). In the far transfer learning task, students were to apply a different cognitive strategy from the one learned in the hybrid training (low deep structure similarity). Students’ open-response descriptions were analyzed with a category system to determine how many metacognitive strategies and how many steps of cognitive strategies had been mentioned. The more metacognitive strategies a student mentioned, the better that student’s intended transfer of metacognitive skills was rated. Results showed that hybrid training improved both near and far intended transfer of metacognitive skills. Furthermore, intended cognitive strategy application was partially improved as a result of metacognitive skill transfer. Overall, however, reports of intended metacognitive skill application remained comparatively low.
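The quantitative logic of this coding ("the more categories mentioned, the better") can be illustrated with a toy scorer. The keyword lists below are purely hypothetical stand-ins for the category system of Schuster et al., (2020); they only show how a count-based score can rate a vague list of metacognitive terms above a specific, strategy-related plan.

```python
# Hypothetical keyword stand-ins for the categories of a coding scheme.
CATEGORIES = {
    "planning": ("plan", "first i will"),
    "monitoring": ("check", "pay attention"),
    "evaluating": ("evaluate", "was it good"),
    "regulating": ("change", "do it again"),
}


def count_score(response: str) -> int:
    """Number of distinct metacognitive categories mentioned at least once."""
    text = response.lower()
    return sum(any(keyword in text for keyword in keywords) for keywords in CATEGORIES.values())


vague = "I plan, check, evaluate and change."
specific = "First I will read each paragraph, then check whether my question really fits its content."
print(count_score(vague), count_score(specific))  # 4 2
```

A quality-oriented coding, as used in the reanalysis described below, would instead ask whether each mentioned step refers to concrete standards of the strategy being applied.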

In the study of Schuster et al., (2020), the scenario-based vignettes made it possible, for the first time, to observe near and far transfer of metacognitive skills within a hybrid-training approach. However, the results are limited for several reasons. First, metacognitive skills were measured in a quantitative rather than qualitative manner (“The more…, the better…”; Wirth & Leutner 2008). Second, students’ answers were coded in a very training-specific manner. Most importantly, Schuster et al., (2020) did not include any measure of content knowledge acquisition in their analyses. It therefore remains unclear whether metacognitive skills transfer to actual learning processes and increase the quality of the application of cognitive strategies as well as content knowledge acquisition in the performance and reflection phases. Consequently, it is still an open question whether metacognitive skills can be transferred in a way that improves content knowledge acquisition and learning in both near and far transfer learning tasks.

Authors such as Sweller & Paas (2017) call for empirical evidence that hybrid metacognitive skill training in one domain supports content knowledge acquisition in another domain. They argue that implementing hybrid metacognitive skill training instead of non-hybrid training is only reasonable if transfer to content knowledge acquisition is demonstrated. Sweller & Paas (2017) discuss the question of transfer, or general trainability, of metacognitive skills on the basis of the concepts of biologically primary and biologically secondary knowledge. Biologically primary knowledge is characterized as domain-general and not trainable; it develops automatically without instruction or training. Biologically secondary knowledge is domain-specific and trainable, and it does not develop automatically. Sweller & Paas (2017) assume that metacognitive skills belong to biologically primary knowledge and that transfer has to be demonstrated in order to clarify whether metacognitive skills can be taught at all and whether SRL training is generally useful. Implementing hybrid metacognitive skill training requires much more effort than implementing non-hybrid training. This effort only pays off if hybrid training supports metacognitive skills in a way that allows them to be applied in different domains. Non-hybrid training is domain-specific; that is, each domain needs its own non-hybrid training. The costs of implementing non-hybrid training therefore increase with the number of domains and exceed the costs of hybrid training once a certain number of domains is reached.
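The cost argument can be written as a break-even calculation. Assume, as the argument above does (a simplification), that hybrid training is implemented once at cost C_H and that the trained metacognitive skills then transfer to all domains, whereas non-hybrid training costs C_N in each of n domains. Hybrid training pays off once n * C_N > C_H. The hypothetical function below computes the smallest such n; the cost values are made-up integer units (e.g., preparation hours) chosen only for illustration.

```python
def break_even_domains(cost_hybrid: int, cost_nonhybrid_per_domain: int) -> int:
    """Smallest number of domains n with n * cost_nonhybrid_per_domain > cost_hybrid.

    Costs are integers in arbitrary units, which avoids floating-point rounding at the boundary.
    """
    if cost_nonhybrid_per_domain <= 0:
        raise ValueError("per-domain cost must be positive")
    return cost_hybrid // cost_nonhybrid_per_domain + 1


# Hypothetical example: hybrid training costs three times a single domain's non-hybrid training.
print(break_even_domains(30, 10))  # 4
```

At exactly three domains the two options cost the same in this example, so the hybrid option becomes cheaper only from the fourth domain on.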

The present study

The present study aimed to answer two questions: Do students spontaneously transfer metacognitive skills to learning scenarios of different transfer distances (RQ 1)? We expected that students receiving hybrid metacognitive skill training would report more and better intended metacognitive skill application in learning scenarios of different transfer distances than students receiving non-hybrid training without support of metacognitive skills (Hypothesis 1). Do students profit from metacognitive skill training with respect to content knowledge acquisition in learning scenarios of different transfer distances (RQ 2)? We expected that students receiving hybrid metacognitive skill training would outperform students receiving non-hybrid training without support of metacognitive skills (Hypothesis 2). However, we expected this effect to be stronger for near than for far transfer of metacognitive skills. If metacognitive skill transfer is near, the use of specific criteria for planning, monitoring, evaluating, and regulating the quality of strategy application has been practiced repeatedly in training. Therefore, applying metacognitive skills is more automatized (Wirth et al., 2020). Near transfer of metacognitive skills should therefore not only lead to a higher quality of strategy application but also leave more free working memory capacity for content knowledge acquisition. If metacognitive skill transfer is far, different specific criteria for planning, monitoring, evaluating, and regulating the quality of the actual strategy application have to be retrieved from metacognitive knowledge and used for metacognitive regulation. Therefore, applying metacognitive skills is more cognitively demanding. We assume that metacognitive skills increase the quality of cognitive strategy application in far transfer tasks in the same way as in near transfer tasks.
However, since metacognitive skill application is more cognitively demanding in far transfer tasks, it leaves less free working memory capacity for content knowledge acquisition. In far transfer tasks, learners might therefore not profit from a high-quality execution of a cognitive learning strategy in the same way as in near transfer tasks. Additionally, we expected the effect of hybrid metacognitive skill training on content knowledge acquisition to be stronger the more often learners apply a cognitive learning strategy: metacognitive skills affect the quality of the application of a cognitive learning strategy, and the more often learners apply a cognitive learning strategy with high quality, the more and the better they should learn.
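The reasoning behind Hypothesis 2 can be expressed as a toy multiplicative model: learning gain grows with the number of strategy applications, the quality of each application, and the working memory capacity left over after metacognitive regulation. All parameter values below are arbitrary and hypothetical; they encode only the assumed ordering (hybrid training raises quality equally for near and far transfer, and far transfer consumes more capacity) and are not estimates from this study.

```python
def predicted_gain(hybrid: bool, distance: str, applications: int, capacity: float = 1.0) -> float:
    """Toy model of the hypothesized mechanism; all parameter values are arbitrary."""
    quality = 0.8 if hybrid else 0.5  # metacognition raises strategy quality, near and far alike
    if hybrid:
        # automatized regulation (near) costs little capacity, effortful regulation (far) costs more
        load = 0.1 if distance == "near" else 0.35
    else:
        load = 0.0  # no metacognitive regulation, no extra load
    free_capacity = max(capacity - load, 0.0)
    return applications * quality * free_capacity


def hybrid_advantage(distance: str, applications: int) -> float:
    """Predicted gain of hybrid over non-hybrid training at one transfer distance."""
    return (predicted_gain(True, distance, applications)
            - predicted_gain(False, distance, applications))


for n in (1, 5, 10):
    print(n, round(hybrid_advantage("near", n), 2), round(hybrid_advantage("far", n), 2))
```

Under these assumptions, the hybrid advantage is larger for near than for far transfer and grows with the number of strategy applications, which is the pattern the hypotheses predict.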

To test Hypothesis 1, we reanalyzed the vignette data of Schuster et al., (2020) using a new coding scheme that focuses on the quality (rather than the quantity) of intended strategy application, since applying metacognitive skills was expected to increase the quality of cognitive strategy application (Leopold & Leutner, 2015). Additionally, students’ answers were coded in a less training-specific manner by including more general metacognitive processes that were not directly fostered in the hybrid metacognitive skill training. In this way, we reanalyzed students’ answers to the near and far transfer tasks initially analyzed by Schuster et al., (2020). Furthermore, we extended the analyses of Schuster and colleagues by including an additional far transfer task.

To test Hypothesis 2, we used a learning scenario that was not included in the analyses of Schuster et al., (2020). The scenario (MicroDYN; Greiff et al., 2012; Schweizer et al., 2013) is an authentic, computer-based learning scenario in which the students’ task is to acquire content knowledge by conducting experiments. Students have to explore a dynamic system by manipulating input variables, observing the subsequent changes in the output variables, and inferring the system’s underlying structure from the observed changes. In this learning task, learners not only plan how to learn but also need to engage in all three phases of an SRL process, which involve planning, monitoring, evaluating, and regulating.
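To make the structure of such a task concrete, the sketch below assumes the linear structural equation formalism commonly used to describe MicroDYN systems, in which the outputs in the next round depend on the current outputs and on the inputs set by the learner (y_{t+1} = A y_t + B x_t). For simplicity, A is assumed to be the identity (outputs persist, no eigendynamics), and the coefficient matrix `B_TRUE` is hypothetical. Varying one input at a time from a zero baseline, in the spirit of the control-of-variables strategy, lets a learner read each input's effect directly from the output changes.

```python
# Hypothetical system with 3 inputs and 3 outputs.
# B_TRUE[i][j] is the effect of input j on output i (0 = no causal link).
B_TRUE = [
    [2, 0, 0],
    [0, 0, -1],
    [0, 3, 0],
]


def simulate_round(y: list, x: list, b: list = B_TRUE) -> list:
    """One round of y_{t+1} = y_t + B x_t (A assumed to be the identity matrix)."""
    return [y[i] + sum(b[i][j] * x[j] for j in range(len(x))) for i in range(len(y))]


def infer_effects(n_inputs: int, n_outputs: int, system=simulate_round) -> list:
    """Vary one input at a time from a zero baseline and record each output change."""
    estimate = [[0] * n_inputs for _ in range(n_outputs)]
    for j in range(n_inputs):
        x = [0] * n_inputs
        x[j] = 1                       # hypothesis: input j affects some outputs
        y_before = [0] * n_outputs
        y_after = system(y_before, x)  # controlled experiment: only input j varies
        for i in range(n_outputs):     # conclusion: attribute each change to input j
            estimate[i][j] = y_after[i] - y_before[i]
    return estimate


print(infer_effects(3, 3) == B_TRUE)  # True
```

In this sketch, if A were not the identity (e.g., an output that changes on its own), a round with all inputs set to zero would be needed first to separate such eigendynamics from the effects of the inputs.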

Method

Participants

The sample consisted of 243 fifth-grade students from two German secondary schools (122 females; M = 10.5 years, SD = 0.48; Min = 9, Max = 13) who participated in a school development project for all-day schools. Not all students were present at all measurement occasions. Missing data occurred randomly due to student absence because of illness, school organizational incidents, or a fire alarm during data collection.

Overall, 159 participants (65.4%) provided complete data on all measurement scales for RQ 1 and were included in the analysis for RQ 1; 183 participants (75.3%) provided complete data on all measurement scales for RQ 2 and were included in the analysis for RQ 2. An analysis of the missing values indicated that most scale means of participants included in the two analysis samples did not differ from those of participants excluded from the two analysis samples because of missing values on one of the measurement scales. Furthermore, participants’ gender was not related to missingness in either sample, and missingness in one sample was not related to missingness in the other sample. To analyze the missing values, 12 significance tests in total (nine t tests on nine measurement scales, two chi-square tests for gender and sample membership, and one chi-square test for membership in the samples) were conducted, each at a Bonferroni-corrected significance level of alpha = 0.05/12 = 0.004. Only three significance tests (tests on measurement scales) reached significance. In a last step, we checked that missingness in the analysis sample for RQ 1 was unrelated to pretest scores (reported in the study of Schuster et al., 2020, and collected before the start of the training programs) on the measurement scales used in the analyses (t tests, all p values ≥ 0.367). Accordingly, we concluded that missing data in our analyses occurred largely at random.
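The sample proportions and the Bonferroni-corrected significance level reported above can be checked with a few lines of arithmetic. The helper names are hypothetical, and `flag_significant` is shown with made-up p values only to illustrate the decision rule; it does not reproduce the study's tests.

```python
def percent(part: int, total: int) -> float:
    """Share of the full sample in percent, rounded to one decimal."""
    return round(100 * part / total, 1)


def bonferroni_alpha(alpha: float, n_tests: int) -> float:
    """Per-test significance level that keeps the familywise error rate at or below alpha."""
    return alpha / n_tests


def flag_significant(p_values: list, alpha: float = 0.05) -> list:
    """Mark each p value as significant at the Bonferroni-corrected level."""
    corrected = bonferroni_alpha(alpha, len(p_values))
    return [p < corrected for p in p_values]


N_TOTAL = 243
print(percent(159, N_TOTAL), percent(183, N_TOTAL))  # 65.4 75.3
n_tests = 9 + 2 + 1  # t tests on scales + chi-square tests for gender + sample-membership test
print(round(bonferroni_alpha(0.05, n_tests), 4))     # 0.0042, reported above as 0.004
print(flag_significant([0.001, 0.03, 0.3]))         # made-up p values: [True, False, False]
```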

Participating students did not receive any reward for their participation since the training study was part of everyday school life. Written informed consent was obtained from all students’ parents.

The sample was taken from the study by Schuster et al. (2020). For the present analyses, additional variables were included, some open-response data were recoded as described in the Instruments section below, and students were aggregated into different groups.

Design and training

The experimental study lasted one full school year. For the first half of the school year (15 weeks of 90 min training), all students were randomly assigned to one of three conditions in a between-subjects design: two hybrid-training groups and one non-hybrid-training group (see Fig. 1).

Group 1 (ME; N = 40, 21 participants in the RQ 1 analysis sample and 34 participants in the RQ 2 analysis sample) received hybrid metacognitive skill training, that is, training on both metacognitive skills and the cognitive strategy of conducting experiments. Accordingly, students were taught to apply metacognitive skills in combination with the cognitive strategy of conducting experiments. Metacognitive skills included planning, monitoring, evaluating, and regulating (e.g., Winne & Hadwin 1998). In line with Klahr & Dunbar (1988), the cognitive strategy for conducting an experiment consisted of three steps: (1) deriving a hypothesis, (2) checking the hypothesis by using the control-of-variables strategy (CVS; Tschirgi 1980), and (3) drawing a conclusion.

Group 2 (MT; N = 42, 21 participants in the RQ 1 analysis sample and 32 participants in the RQ 2 analysis sample) received hybrid metacognitive skill training, that is, training on both metacognitive skills and the cognitive strategy of text highlighting. Accordingly, students were taught to apply metacognitive skills in combination with the cognitive strategy of text highlighting. Metacognitive skills included planning, monitoring, evaluating, and regulating (e.g., Winne & Hadwin 1998). In line with Leopold & Leutner (2015), the cognitive strategy for text highlighting consisted of three steps: (1) reading a section, (2) formulating questions about the content of the section, and (3) highlighting parts of the text that answer the questions.

In the first 15 weeks, students learned how to apply metacognitive skills at a high level of quality when conducting experiments (Group 1) or when reading science texts using text highlighting (Group 2). At the beginning of this training, students learned the basics of learning ("Why is self-regulated learning important?"), classroom rules ("How do we behave when conducting experiments or reading science texts?"), and cooperative learning methods ("Which roles and responsibilities exist in group work?"). Subsequently, students of Groups 1 and 2 were introduced to metacognitive skills. They were directly instructed to use, for example, goal setting to plan the experiment (Group 1) or the reading process (Group 2), monitoring to observe the correct application of CVS (Group 1) or of the text-highlighting strategy (Group 2), and evaluating to check whether they were satisfied with how they had conducted the experiment (Group 1) or read the science text (Group 2). Both hybrid training conditions (Groups 1 and 2) ended with 90 min of transfer training showing students where else they could use metacognitive skills in everyday life situations. At the end of each lesson, students in both Groups 1 and 2 used a rubric to reflect on their learning processes during the training lessons.

Group 3 (0 M; N = 161, 117 participants in both the RQ 1 and the RQ 2 analysis samples) received non-hybrid training, that is, training of specific cognitive or motivational strategies without any metacognitive skill training. This group was aggregated from four different groups of the Schuster et al. (2020) study, which had received either training of a cognitive strategy (conducting experiments or text highlighting) or training of reading motivation, but no training of metacognitive skills (see Fig. 1).

Students receiving direct training on conducting experiments (E) or text highlighting (T) received the same training as Groups 1 or 2, respectively, but no training of metacognitive skills. The three steps of conducting experiments or reading a science text were identical to the three steps in the hybrid training. However, in both non-hybrid training groups, the cognitive strategies were taught without reference to metacognitive skills, which left more time to practice the cognitive strategy. At the beginning of this non-hybrid training, as in the hybrid training, the basics of learning, classroom rules, and cooperative learning were taught. The transfer session of the hybrid training was replaced by additional time for training the cognitive strategies.

Students in the reading motivation training (R) received various opportunities to read and present their favorite books in order to foster reading motivation. However, they were not taught any specific motivation regulation strategy. As in the aforementioned groups, students learned the classroom rules and the basics of cooperative learning. The basics of learning were not part of this reading motivation training. In this training, students were not instructed or trained in any metacognitive skill or cognitive strategy.

Because none of these non-hybrid training groups received metacognitive skill training, transfer of metacognitive skills (as expected for the hybrid training groups) could not occur in any of them. Therefore, these four groups were aggregated for our statistical comparisons of hybrid and non-hybrid training groups. Furthermore, a multivariate test showed that these four non-hybrid training groups did not differ significantly on the dependent variables of the present analyses, λ = 0.69, F(24, 244) = 1.37, p = .124.
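As a quick consistency check, the group sizes reported for Groups 1 to 3 can be tallied against the totals given in the Participants section. The short Python sketch below only re-adds the reported numbers; the dictionary layout and the function name are our own illustration.

```python
# Reported group sizes: (total N, N in RQ 1 analysis sample, N in RQ 2 analysis sample)
GROUP_SIZES = {
    "ME": (40, 21, 34),
    "MT": (42, 21, 32),
    "0 M": (161, 117, 117),
}

def column_totals(sizes):
    """Sum each column (total, RQ 1, RQ 2) over all training groups."""
    return tuple(sum(column) for column in zip(*sizes.values()))

total, rq1, rq2 = column_totals(GROUP_SIZES)
print(total, rq1, rq2)                          # 243 159 183
print(f"{rq1 / total:.1%}, {rq2 / total:.1%}")  # 65.4%, 75.3%
```

The sums reproduce the sample of 243 students and the analysis samples of 159 (65.4%) and 183 (75.3%) participants.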

In the second half of the school year (again 15 weeks of 90 min training), all groups received non-hybrid training in the cognitive strategy that had not been trained in the first half of the school year. That is, if text highlighting had been trained in the first half of the school year, conducting experiments was trained in the second half, and vice versa. Accordingly, the cognitive strategy of the second half of the school year was new for all participants. Metacognitive skills were not trained. This experimental variation allowed us to investigate transfer of metacognitive skills.

Instruments

Metacognitive skills (MST). Concerning Hypothesis 1, spontaneous recall and intended application of metacognitive skills were assessed using an economical, scenario-based measure for strategies, the Multi-Strategy Test (MST; Stebner et al., 2015). In the form of a vignette, the MST outlines a fictitious learning scenario in which students are asked to describe their intended approach to processing the learning task. Students' descriptions, provided in an open-response format, were analyzed using a category system to detect how many metacognitive skills (of high quality) had been mentioned. In contrast to the MST version used in the study by Schuster et al. (2020), we developed a new category system that focuses not only on the quantity of recalled metacognitive skills but also on the quality of metacognitive skill application. We included in our analyses the same MST versions (regarding the instruction) as Schuster et al. (2020), that is, MST_E, which requires students to describe the learning process when conducting an experiment, and MST_T, which requires students to describe the learning process when reading a science text with text highlighting. In addition, we included a third MST version (MST_P) representing a fictitious learning scenario that was not addressed in the training in either the first or the second half of the school year. In this new learning scenario, students were placed in the situation of preparing for a class exam. This scenario was not practiced in any training condition but represented a familiar, everyday learning scenario for fifth-grade students.

The three MST versions were administered at all three measurement points. Regarding the transfer of metacognitive skills, the MST performance of Group 1 (ME) and Group 2 (MT) at T2 and T3 was crucial, since these students had learned metacognitive skills in hybrid training. For Group 1 (ME), MST_E served as a near-transfer task at T2 and T3, since the deep-structure similarity between training and learning tasks was high. That is, the task could be successfully processed with the same metacognitive skills and cognitive strategy that students had previously learned within hybrid training. In contrast, MST_T served as a far-transfer task for Group 1 (ME), since the deep-structure similarity between training and learning task was low. Accordingly, solving MST_T required the application of another cognitive strategy that students of Group 1 (ME) had not learned in the hybrid training. For Group 2 (MT), MST_T served as a near-transfer task at T2 and T3, while MST_E served as a far-transfer task. For both Group 1 (ME) and Group 2 (MT), MST_P served as a far-transfer task at T2 and T3, since the task was not domain-specific but required flexible (cross-domain) strategy application. Group 3 (0 M) was never trained in metacognitive skills and their application. Therefore, for this group, none of the MST versions had potential for transfer of metacognitive skills at any measurement point.
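The assignment of transfer distances described above follows a simple rule: a scale is near transfer if it requires the strategy that was trained together with metacognitive skills, and far transfer otherwise. The Python sketch below merely restates that rule; the names `HYBRID_STRATEGY` and `transfer_distance` are hypothetical.

```python
# Cognitive strategy combined with metacognitive skills in each hybrid group
# (E = conducting experiments, T = text highlighting). Group 3 (0 M) is absent
# because it received no metacognitive skill training.
HYBRID_STRATEGY = {"ME": "E", "MT": "T"}

def transfer_distance(group, scale):
    """Classify an MST version (MST_E, MST_T, MST_P) at T2/T3 for a training group.

    near: the scale requires the strategy trained together with metacognitive skills
    far:  the scale requires another strategy (MST_P never matches a trained strategy)
    none: the group received no metacognitive skill training
    """
    strategy = HYBRID_STRATEGY.get(group)
    if strategy is None:
        return "none"
    return "near" if scale == f"MST_{strategy}" else "far"

for group in ("ME", "MT", "0 M"):
    print(group, {s: transfer_distance(group, s) for s in ("MST_E", "MST_T", "MST_P")})
```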

The task formulations for the three MST versions (originally in German) were as follows. For MST_E: “At school, you are dealing with plants. With the help of an experiment, you want to find out what plants need to grow. What possibilities do you have to work on this task?”, for MST_T: “For homework, you have to read a multi-page article from a magazine. Tomorrow, you have to tell your classmates what the article is about. How do you proceed to solve the task?”, and for MST_P: “You are preparing yourself for a class exam. Which ways can you consider to make sure you will have learned enough?”. Metacognitive skills were coded identically for all three versions.

Scoring. Going beyond Schuster et al. (2020) and in line with Schunk & Zimmerman (1998), we considered the whole learning process, which resulted in a new coding manual with a total of seven categories: goal setting, planning, monitoring (observing), monitoring (assessing), reacting, reflecting, and evaluating. Only metacognitive skills were coded; cognitive strategies like text highlighting or CVS were not coded because they were not relevant to our research questions. Students could receive zero, one, or two points per category. Zero points were awarded if the answer did not refer to the metacognitive skill of the category at all, one point if the metacognitive skill was only mentioned, and two points if the metacognitive skill was mentioned and correctly applied to the content of the task. An overview of the categories and examples for MST_P can be found in Table 1. Note that students could mention the application of metacognitive skills at a micro level, for example, using goal setting to organize the use of a certain cognitive learning strategy (e.g., restudy). Students could also use metacognitive skills at a macro level, for example, using goal setting to organize the whole learning process including several cognitive learning strategies. Because various metacognitive skills can be used at either level in all scenarios, the coding procedure credited any mention or application of a metacognitive skill. However, once a learner had received two points for applying a metacognitive skill, no further points could be awarded in this category. With seven categories of at most two points each, this results in a maximum score of 14 points. All students' answers were coded by two staff members.
After an initial phase of discussing the answers and potential conflicts (which were resolved using the underlying theoretical model), 25 answers were coded by both raters, resulting in good inter-rater reliability, with an average Cohen's κ = 0.98 (0.89 ≤ κ ≤ 1.0; n = 25). Afterwards, all of the students' answers were randomly assigned to one of the two raters.
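The scoring rule and the reliability index can be sketched as follows. This minimal Python sketch assumes that each mention of a skill in an answer has already been coded 0, 1, or 2 by a rater; the data layout and function names are hypothetical. Taking the maximum code per category implements the rule that no further points can be earned in a category once two points have been awarded. How the reported average κ was aggregated (e.g., across categories) is not specified, so the sketch computes only a single κ for one set of codes.

```python
from collections import Counter

CATEGORIES = (
    "goal setting", "planning", "monitoring (observing)", "monitoring (assessing)",
    "reacting", "reflecting", "evaluating",
)

def mst_score(codes_per_category):
    """Total MST score from per-mention codes (0 = absent, 1 = mentioned,
    2 = mentioned and correctly applied to the task content).

    Each category contributes only its best code, so repeated mentions of a
    skill cannot add points beyond 2; the maximum is 7 * 2 = 14.
    """
    total = 0
    for category in CATEGORIES:
        codes = codes_per_category.get(category, [])
        if any(code not in (0, 1, 2) for code in codes):
            raise ValueError(f"invalid code in {category!r}: {codes}")
        total += max(codes, default=0)
    return total

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' codes on the same answers."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a.keys() | freq_b.keys()) / n ** 2
    if expected == 1.0:
        # Both raters used one and the same code throughout: kappa is formally
        # undefined; returning 1.0 is a convention assumed by this sketch.
        return 1.0
    return (observed - expected) / (1 - expected)

print(mst_score({"goal setting": [1, 2], "planning": [1], "evaluating": [0]}))  # 3
print(round(cohens_kappa([0, 1, 2, 2], [0, 1, 2, 1]), 3))                        # 0.636
```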

Content knowledge acquisition (MicroDYN). Concerning Hypothesis 2, students' exploration behavior and resulting content knowledge acquisition were assessed in a computer-based learning scenario (MicroDYN; Greiff et al., 2012; Schweizer et al., 2013). MicroDYN is an established and validated instrument, originally designed to measure complex problem solving, in which three computer-simulated experiments had to be carried out in different domains and with different cover stories (including sports, household, and school). The scenarios were designed so that they could be solved without relying on prior declarative knowledge about the cover stories. In each experiment, the aim was to identify the underlying structure of the specific complex system, that is, the connections between its input and output variables. Input variables had to be manipulated using a digital lever in order to observe the subsequent changes in the displayed output variables and to infer the system's underlying structure from the observed changes.

MicroDYN was administered at T2 only. At this point in time, the MicroDYN learning tasks represented near-transfer tasks for Group 1 (ME), since students of this group had learned the (cognitive) control-of-variables strategy (CVS) as well as its regulation by metacognitive skills. Thus, although training tasks and learning tasks differed with regard to context and other superficial features (surface structure), they required the application of the same cognitive strategy. Since students of Group 2 (MT) had learned the application and regulation of the (cognitive) text-highlighting strategy, the MicroDYN learning tasks represented far-transfer tasks for them. Since students of Group 3 (0 M) had never been trained in metacognitive skills and their application, the MicroDYN learning tasks had no potential for transfer of metacognitive skills in this group.

Scoring. In line with Greiff et al. (2012), we derived two measures from MicroDYN: a process measure that captured students' exploration behavior and a measure that captured students' content knowledge acquisition as a result of their exploration behavior.

The process measure captured the quality of students' exploration behavior: the application of the control-of-variables strategy (CVS; Tschirgi 1980) was scored with the help of log files. Students could reach a total of eight points if CVS was consistently applied in each of the three experiments (two points each for experiments one and two, and four points for experiment three, which was more complex than experiments one and two). “Consistently” means that students varied only one variable at a time in order to find out what effect the input variables had on the output variables, and that this exploration strategy was employed for each input variable in each experiment. As an example, coding for the complex experiment (maximum of four points) was as follows: students received zero points if they did not use CVS at all; one point if they ran one round without changing any independent variable (to see whether the dependent variable changed on its own over time); two points if they used CVS inconsistently, namely for only one of the three independent variables; three points if they used CVS inconsistently, namely for only two of the three independent variables; and four points if they used CVS consistently for all three variables in each round of the experiment. Consistency of CVS application was thus a measure of the quality of actual strategy application. At the same time, it was considered an indicator of metacognitive skill transfer, since metacognitive skills were expected to increase the quality of (cognitive) strategy application.
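A minimal Python sketch of how the coding rule for the complex experiment could be applied to log data follows. It rests on several assumptions not stated in the text: each logged round is represented as the settings of the three input variables, with 0 meaning "left at baseline"; an input counts as varied in isolation when it is the only nonzero input in a round; and the point levels follow our reading of the rule above. The sketch does not penalize additional rounds in which several inputs were changed at once, and whether the original coding did so is not stated. The log format and function names are hypothetical.

```python
def isolated_inputs(rounds):
    """Indices of input variables that were varied alone in at least one round,
    i.e. rounds in which exactly one input differs from its baseline of 0."""
    isolated = set()
    for settings in rounds:
        changed = [i for i, value in enumerate(settings) if value != 0]
        if len(changed) == 1:
            isolated.add(changed[0])
    return isolated

def cvs_points_complex(rounds):
    """Points (0-4) for the three-input complex experiment, following our reading:
    4 = all three inputs varied in isolation, 3 = two, 2 = one,
    1 = no isolated variation but at least one round with no input changed,
    0 = otherwise."""
    n_isolated = len(isolated_inputs(rounds))
    if n_isolated:
        return n_isolated + 1
    if any(all(value == 0 for value in settings) for settings in rounds):
        return 1
    return 0

# Hypothetical log: a baseline round, then each input varied on its own.
log = [(0, 0, 0), (1, 0, 0), (0, 2, 0), (0, 0, -1)]
print(cvs_points_complex(log))  # 4
```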

To measure students' knowledge acquisition resulting from their exploration behavior, students had to draw conclusions by using arrows in a knowledge map to visualize the relations between input and output variables they had identified and learned. Students could reach a total of nine points (up to three points per experiment). For each of the three experiments, zero points were awarded if the conclusion contained at least three mistakes, one point if it contained two mistakes, two points if it contained one mistake, and three points if it was completely correct.
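The mapping from mistakes to points can be written down directly. In the Python sketch below, the point mapping follows the text, whereas counting a "mistake" as a missing or spurious arrow (the symmetric difference between the drawn and the correct arrows) is our assumption; arrow direction is encoded as an (input, output) pair, and arrow strength or sign is not considered. The function names and variable labels are hypothetical.

```python
def map_mistakes(drawn, correct):
    """Mistakes = missing plus spurious arrows (an assumed definition)."""
    return len(set(drawn) ^ set(correct))

def experiment_points(mistakes):
    """3 points if correct, 2 for one mistake, 1 for two, 0 for three or more."""
    if mistakes < 0:
        raise ValueError("mistakes must be non-negative")
    return max(0, 3 - mistakes)

def knowledge_score(maps):
    """Sum over the three experiments; maps = [(drawn, correct), ...]. Maximum 9."""
    return sum(experiment_points(map_mistakes(drawn, correct)) for drawn, correct in maps)

correct = {("A", "X"), ("B", "Y")}
drawn = {("A", "X"), ("C", "Y")}           # one arrow missing, one spurious
print(experiment_points(map_mistakes(drawn, correct)))  # 1
```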

Student characteristics. In addition to demographic data (age and sex), students' cognitive abilities were recorded at T1 as an indicator of intelligence, using the verbal scale (20 items, alpha = 0.73, omega = 0.72) and the non-verbal figural scale (25 items, alpha = 0.92, omega = 0.94) of a cognitive abilities test (Heller & Perleth, 2000). The two scale scores were averaged to represent an intelligence indicator. Following Kröner et al. (2005), this indicator was used as a covariate to test Hypothesis 2.
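For reference, Cronbach's alpha for a scale with k items is α = k/(k − 1) · (1 − Σ var(item) / var(total score)). The Python sketch below implements this formula with sample variances, together with the simple averaging of the two scale scores described above; whether the scale scores were standardized before averaging is not reported, so raw scores are used here as an assumption. McDonald's omega requires a factor model and is not reproduced. The function names are hypothetical.

```python
from statistics import fmean, variance

def cronbach_alpha(items):
    """Cronbach's alpha; items = one list of scores per item, students in the same order."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # total score per student
    item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / variance(totals))

def intelligence_indicator(verbal, figural):
    """Mean of the two scale scores per student (raw scores; standardization is
    not reported in the text and is therefore not applied here)."""
    return [fmean(pair) for pair in zip(verbal, figural)]

print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))  # approximately 0.75
```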

Procedure

Each week of the school year, a 90-min timeslot was blocked in the students' regular timetable without canceling regular lessons. Lessons took place in the students' regular classrooms. At T1, before the first training session, all students completed a pretest comprising the three versions of the MST (MST_E, MST_T, and MST_P) and the measures of student characteristics. At T1, the MST versions were used to measure students' prior knowledge about their intended application of metacognitive skills in three different learning scenarios. After receiving either hybrid or non-hybrid training for half of the school year, students completed a posttest at T2 comprising the three versions of the MST and MicroDYN. Following the posttest, all students received the second, non-hybrid training. After the second training, at the end of the school year, students completed a follow-up test at T3 comprising the three versions of the MST.

To keep intervention fidelity high, researchers carried out all training versions while being observed by additional researchers (Gearing et al., 2011). Furthermore, researchers rotated between training groups every 2 weeks (first week observing, second week teaching) to prevent teacher effects.

Data analysis

Regarding RQ 1 on spontaneous transfer of metacognitive skills to learning scenarios of different transfer distances, a multivariate analysis of variance (MANOVA) was computed with Group (ME, MT, 0 M) as the factor and six MST scores (the three MST scales MST_E, MST_T, and MST_P, each administered at the two measurement points T2 and T3) as dependent variables. Depending on the training group to which students were allocated, the different MST scales at the different measurement points represented different transfer distances (Fig. 1). For example, for students of the ME group, who received hybrid metacognitive skill training on conducting experiments, the MST_E scale on experimenting represented a measure of near transfer at both T2 and T3, whereas the MST_T scale on text highlighting and the MST_P scale on preparing for a class exam represented measures of far transfer at both T2 and T3. For students of the MT group, who received hybrid metacognitive skill training on text highlighting, the MST_T scale represented a measure of near transfer, whereas the MST_E and MST_P scales represented measures of far transfer, independent of the measurement point. Because transfer distance depended on the combination of group and scale rather than on time, we were interested neither in the main effect of time nor in the interaction of time and group. Instead, to test our hypothesis that students receiving hybrid metacognitive skill training (ME and MT groups) show better metacognitive skill application in learning scenarios of different transfer distances than students receiving non-hybrid training (0 M group), we compared the ME and MT groups with the 0 M group by computing multivariate contrast estimates (planned comparisons). In a second step, we computed univariate contrast estimates for each of the six dependent variables based on follow-up ANOVAs.
All univariate analyses were corroborated by nonparametric tests, and all univariate contrast estimates were corroborated by bootstrap-based confidence intervals. Using G*Power 3.1.9.4 (Faul et al., 2009), we calculated a statistical power of 1-β = 0.85 for the MANOVA (N = 159, 3 groups, 6 dependent variables, α = 0.05, f²(V) = 0.0625) and a statistical power of 1-β = 0.88 for the univariate contrast estimates (N = 159, 2 groups, α = 0.05, f = 0.25).
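A univariate planned comparison of this kind is commonly computed as ψ = Σ c_j · mean_j with standard error SE = sqrt(MSE · Σ c_j² / n_j), where MSE is the pooled within-group error variance and the weights c = (1, 0, −1) contrast the first group (e.g., ME) with the third (0 M). The Python sketch below implements this textbook formula under the assumption of a one-way design; it is not the SPSS output underlying the reported results, the bootstrap check is not reproduced, and the scores in the usage line are hypothetical.

```python
from math import sqrt
from statistics import fmean, variance

def contrast_estimate(groups, weights):
    """Planned contrast psi = sum_j c_j * mean_j in a one-way ANOVA.

    groups: one list of scores per group; weights: contrast weights summing to 0.
    Returns (psi, mse, se, t, df), using the pooled within-group error (MSE).
    For a single-df contrast, F(1, df) = t ** 2.
    """
    if abs(sum(weights)) > 1e-12:
        raise ValueError("contrast weights must sum to zero")
    df = sum(len(g) for g in groups) - len(groups)
    mse = sum((len(g) - 1) * variance(g) for g in groups) / df
    psi = sum(c * fmean(g) for c, g in zip(weights, groups))
    se = sqrt(mse * sum(c ** 2 / len(g) for c, g in zip(weights, groups)))
    return psi, mse, se, psi / se, df

# Hypothetical scores for ME, MT, and 0 M; weights contrast ME with 0 M.
psi, mse, se, t, df = contrast_estimate([[2, 4, 6], [1, 2, 3], [0, 1, 2]], (1, 0, -1))
print(round(psi, 3), round(t, 3))  # 3.0 2.598
```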

RQ 2 asked whether students are better prepared for effective strategy-based content knowledge acquisition in learning scenarios of different transfer distances when they have received hybrid metacognitive skill training rather than non-hybrid training. We used the two measures derived from the MicroDYN scenario to test this hypothesis: the control-of-variables strategy (CVS) score and the content knowledge acquisition score. We first tested for CVS mean differences between the three training groups and computed the Pearson correlation between CVS and content knowledge acquisition within each training group. We then tested whether the regression of content knowledge acquisition on CVS was moderated by group membership. To this end, we specified a General Linear Model (GLM; e.g., Horton 1978) with content knowledge acquisition as the dependent variable, CVS as a first (metric) predictor, group (ME, MT, 0 M) as a second (factorial) predictor, and the interaction of CVS and group as a third predictor. The variance of the dependent variable was decomposed sequentially in this order by specifying Type I sums of squares (SSTYPE 1) in the SPSS GLM analysis. To test the hypothesis that students who received hybrid metacognitive skill training (ME and MT groups) are better prepared for effective strategy-based content knowledge acquisition than students who received non-hybrid training (0 M group), we compared each of the two hybrid training groups (ME, MT) with the 0 M group by computing contrast estimates for group and for the interaction of CVS and group. All contrast estimates were corroborated by bootstrap-based confidence intervals.
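The interaction contrast asks whether the slope of knowledge acquisition on CVS differs between two groups. As a simplified illustration, the Python sketch below estimates the difference between two within-group least-squares slopes and a percentile bootstrap interval, resampling each group separately. It deliberately differs from the reported analysis: it omits the intelligence covariate and uses percentile rather than bias-corrected and accelerated (BCa) intervals. The function names and the perfectly linear usage data are hypothetical.

```python
import random
from statistics import fmean

def ols_slope(x, y):
    """Least-squares slope of y on x, or None if x has no variance."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        return None
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx

def pair_slope(pairs):
    xs, ys = zip(*pairs)
    return ols_slope(xs, ys)

def bootstrap_slope_difference(group_a, group_b, n_boot=3000, level=0.95, seed=1):
    """Slope difference (group_a minus group_b) with a percentile bootstrap interval.

    group_a, group_b: lists of (cvs, knowledge) pairs, one list per training group.
    """
    slope_a, slope_b = pair_slope(group_a), pair_slope(group_b)
    if slope_a is None or slope_b is None:
        raise ValueError("CVS must vary within each group")
    rng = random.Random(seed)
    diffs = []
    while len(diffs) < n_boot:
        a = pair_slope(rng.choices(group_a, k=len(group_a)))
        b = pair_slope(rng.choices(group_b, k=len(group_b)))
        if a is not None and b is not None:  # skip resamples without CVS variance
            diffs.append(a - b)
    diffs.sort()
    lower = diffs[int((1 - level) / 2 * n_boot)]
    upper = diffs[int((1 + level) / 2 * n_boot) - 1]
    return slope_a - slope_b, (lower, upper)

# Hypothetical data: slope 2 in one group, slope 1 in the other.
estimate, ci = bootstrap_slope_difference([(x, 2 * x) for x in range(10)],
                                          [(x, x) for x in range(10)], n_boot=500)
print(estimate, ci)
```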

Results

Do students spontaneously transfer metacognitive skills to learning scenarios of different transfer distances (RQ 1)?

For this research question, the three versions of the Multi-Strategy Test (MST) served as learning scenarios. We calculated a MANOVA with Group (ME, MT, 0 M) as the factor and students' planning performance in three fictitious learning scenarios (experimenting, text highlighting, and preparing for a class exam) at two measurement points (T2, T3) as dependent variables. We expected Group 1 (ME) and Group 2 (MT), which received hybrid metacognitive skill training, to report more and better intended metacognitive skill application than Group 3 (0 M), which received non-hybrid training. Accordingly, this hypothesis was tested using planned comparisons contrasting each of the two hybrid training groups with the non-hybrid group.

Near transfer was defined to occur when metacognitive skills learned with a specific cognitive strategy transferred to a learning scenario involving the same cognitive strategy. Far transfer was defined to occur when metacognitive skills learned with a specific cognitive strategy transferred to a learning scenario involving another cognitive strategy. Accordingly, experimenting (MST_E) constituted near transfer for Group 1 (ME) and far transfer for Group 2 (MT). Likewise, text highlighting (MST_T) constituted near transfer for Group 2 (MT) and far transfer for Group 1 (ME). Preparing for a class exam (MST_P) constituted far transfer for both hybrid training groups.

Means and standard deviations of students' planning performance are given in Table 2; the Pearson correlations of the scales are given in Table A1 in the Appendix. MANOVA results indicated a multivariate effect of Group, λ = 0.16, F(12, 302) = 35.57, p < .001, ηp² = 0.59. The multivariate contrast estimates comparing Group 1 (ME) with Group 3 (0 M), λ = 0.38, F(6, 151) = 49.41, p < .001, ηp² = 0.66, and comparing Group 2 (MT) with Group 3 (0 M), λ = 0.38, F(6, 151) = 41.93, p < .001, ηp² = 0.63, were both significant. In a second step, we calculated follow-up univariate ANOVAs on each of the six dependent variables of the MANOVA. As can be seen in Table 3, all but one univariate contrast estimate were statistically significant and in the expected direction. Concerning transfer distances, the effect size measures in Table 3 indicated that the metacognitive skills learned in Group 1 (ME) showed both near and far transfer in all learning scenarios. Metacognitive skills learned in Group 2 (MT), however, showed near transfer on MST_T and far transfer on MST_P, but no far transfer on MST_E at T2 and only small far transfer on MST_E at T3. These results are largely in line with our hypothesis that, compared to Group 3 (0 M; non-hybrid training), Group 1 (ME) and Group 2 (MT) (hybrid metacognitive skill training) show near as well as far transfer, whereas, on a descriptive level, there were no consistent effect size differences between near and far transfer.
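The pairing of λ with approximate F values follows the usual conversion of Wilks' lambda to an F statistic (Rao's approximation), assuming λ denotes Wilks' lambda. The Python sketch below implements that textbook conversion; with six dependent variables, an error df of 156 (N = 159, three groups), and a hypothesis df of 2 (omnibus test) or 1 (single contrast), it yields the degrees of freedom reported above, F(12, 302) and F(6, 151). It is a sketch, not the authors' SPSS output, and the function name is hypothetical.

```python
import math

def wilks_rao_f(wilks, p, df_hyp, df_err):
    """Rao's F approximation for Wilks' lambda (exact when p <= 2 or df_hyp <= 2).

    p: number of dependent variables; df_hyp: hypothesis df (groups - 1, or 1 for
    a single contrast); df_err: error df (N - number of groups).
    Returns (F, df1, df2).
    """
    denom = p ** 2 + df_hyp ** 2 - 5
    t = math.sqrt((p ** 2 * df_hyp ** 2 - 4) / denom) if denom > 0 else 1.0
    w = df_err + df_hyp - (p + df_hyp + 1) / 2
    df1 = p * df_hyp
    df2 = w * t - (df1 - 2) / 2
    root = wilks ** (1 / t)
    return (1 - root) / root * df2 / df1, df1, df2

_, df1, df2 = wilks_rao_f(0.16, p=6, df_hyp=2, df_err=156)
print(df1, df2)  # 12 302.0
_, df1, df2 = wilks_rao_f(0.38, p=6, df_hyp=1, df_err=156)
print(df1, df2)  # 6 151.0
```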

Do students profit from metacognitive skill training with respect to content knowledge acquisition in learning scenarios of different transfer distances (RQ 2)?

For this research question, MicroDYN was the learning scenario. If students made consistent use of the control-of-variables strategy (CVS) in this scenario, we expected them to have good chances of identifying the underlying structure of the complex systems presented to them. Thus, in this learning scenario, metacognitive skill training had to transfer to the cognitive strategy of CVS. This represented near transfer for Group 1 (ME), which had received hybrid training of metacognitive skills and conducting experiments, and far transfer for Group 2 (MT), which had received hybrid training of metacognitive skills and text highlighting.

In a first step, we calculated the Pearson correlation of CVS and content knowledge acquisition, which was r = .40 across students of all training groups, r = .65 for Group 1 (ME), which received hybrid training on metacognitive skills and conducting experiments, r = .42 for Group 2 (MT), which received hybrid training on metacognitive skills and text highlighting, and r = .31 for Group 3 (0 M), which received non-hybrid training. The three training groups, however, did not differ on CVS, F(2, 156) < 1. These results indicated that, although there were no mean differences between groups in the consistent use of the control-of-variables strategy, the effectiveness of CVS use for content knowledge acquisition seemed to differ between groups. Descriptive statistics are reported in Table 4.

In a second step, we calculated a General Linear Model (GLM) with content knowledge acquisition as the dependent variable, CVS as a first (metric) predictor, group (ME, MT, 0 M) as a second (factorial) predictor, and the interaction of CVS and group as a third predictor. Intelligence was included as a further (metric) predictor, as previous research on MicroDYN and related scenarios showed that intelligence was predictive of students' achievement (e.g., Kröner et al., 2005). We expected Group 1 (ME) and Group 2 (MT) to outperform Group 3 (0 M) at least when CVS was high. Accordingly, we included the interaction of group and CVS in the linear model and calculated the respective contrast estimates. Results indicated significant effects of intelligence, F(1, 176) = 5.24, p = .023, ηp² = 0.03, and CVS, F(1, 176) = 31.10, p < .001, ηp² = 0.15. Contrast estimates indicated that neither Group 1 (ME), F(1, 176) < 1, nor Group 2 (MT), F(1, 176) < 1, outperformed Group 3 (0 M) on knowledge acquisition. However, the contrast estimates for the interaction of Group and CVS indicated that the slope of the regression of knowledge acquisition on CVS was steeper for Group 1 (ME) than for Group 3 (0 M), F(1, 176) = 3.67, p(one-tailed) = 0.029, ηp² = 0.02, whereas the regression slopes of Group 2 (MT) and Group 3 (0 M) did not differ, F(1, 176) < 1. Bootstrap results (BCa: bias-corrected and accelerated, 3000 samples) confirmed that the regression slopes of Group 1 (ME) and Group 3 (0 M) differed significantly, B = 0.324, Bias < 0.001, SE = 0.136, p = .015, 95% CI: [0.051, 0.595], whereas the regression slopes of Group 2 (MT) and Group 3 (0 M) did not differ, B = 0.142, Bias < 0.001, SE = 0.161, p = .359, 95% CI: [-0.170, 0.456]. The confidence intervals of all model parameters are given in Table A2 (Appendix).

Figure 2 shows the scatterplot and the regression lines when predicting MicroDYN content knowledge acquisition from CVS. CVS is highly predictive of content knowledge acquisition. The regression line of Group 1 (ME), however, is steeper than that of Group 3 (0 M), whereas the regression line of Group 2 (MT) is, descriptively, only slightly steeper than that of Group 3 (0 M). These results are partly in line with our hypothesis: although Group 1 (ME) did not outperform Group 3 (0 M) on knowledge acquisition in general, Group 1 (ME) outperformed Group 3 (0 M) when CVS was high, indicating more effective use of the control-of-variables strategy. This effect, however, was not found for Group 2 (MT). In other words, concerning content knowledge acquisition, the hybrid metacognitive skill training showed substantial near transfer (that is, from conducting experiments as a cognitive strategy to CVS as a related cognitive strategy) but no far transfer (that is, from text highlighting as a cognitive strategy to CVS as an unrelated cognitive strategy).

Discussion

In this experimental study, we examined whether students receiving hybrid metacognitive skill training (metacognitive skills and cognitive strategies) spontaneously transferred metacognitive skills to learning scenarios of different transfer distances (near and far transfer). In doing so, we aimed to clarify whether metacognitive skills need to be trained in only one domain (because students are able to transfer them spontaneously) or within each domain that requires a (new) cognitive strategy in order to increase the quality of cognitive learning strategy application (because students are not able to transfer metacognitive skills automatically). Our experimental study was conducted in everyday school life, which provides high external validity.

From a theoretical point of view, our study provides new insights into important questions about transfer processes in learning strategy training. It extends the previous research perspective by drawing attention to effects on content knowledge acquisition and provides new arguments for the discussion about the domain-general nature of metacognitive skills in self-regulated learning. The experimental design made it possible to meet fundamental criteria of high-quality research (Spörer & Glaser, 2010): students were randomly assigned to learning groups, alternative treatments served as adequate control groups, treatment integrity was ensured through classroom observations and trainer rotation, various criteria were used to measure training effects, and near and far transfer were explicitly considered as part of the research questions. In the following, we describe theoretical considerations and methodological implications, structured according to our two research questions.

Theoretical considerations

(Spontaneous) metacognitive skill transfer (RQ 1)

Our first research question aimed to clarify whether students spontaneously transfer metacognitive skills to learning scenarios of different transfer distances (Schunk & Zimmerman, 1998). Therefore, we analyzed our results with regard to three learning scenarios (MST_E, MST_T, and MST_P). Regarding near transfer, we were able to consistently show metacognitive skill transfer for both hybrid training groups. Regarding far transfer, both hybrid training groups showed far transfer to MST_P, in which students were asked to plan their preparation for a class exam. Group 1 (ME) also showed far transfer to MST_T. However, Group 2 (MT) showed no far transfer to MST_E at T2 and only small far transfer at T3. That is, students were able to transfer metacognitive skills when learning required the application of a text-highlighting strategy or any strategy for preparing for an exam, but they were largely unable to transfer metacognitive skills when learning required conducting experiments and metacognitive skills had not been trained in combination with this strategy. This raises questions about the boundary conditions under which far transfer of metacognitive skills occurs. One answer could be the familiarity of the cognitive strategy. Since metacognitive skills draw on metacognitive knowledge, that is, declarative, procedural, and conditional strategy knowledge, their successful application depends on a minimum of available strategy knowledge. We assume that, without specific training, students are more familiar with text highlighting and with preparing for an exam than with conducting experiments and applying CVS. Students' metacognitive knowledge of the text-highlighting strategy and of systematic exam preparation was probably sufficient, even without training, to allow far transfer of metacognitive skills. In contrast, their metacognitive knowledge of the strategy of conducting experiments might have been too weak, inhibiting far transfer of metacognitive skills.
Thus, spontaneous far transfer of metacognitive skills across different domains is possible but requires sufficient domain-specific metacognitive strategy knowledge.

Metacognitive skill transfer resulting in content knowledge acquisition (RQ 2)

With the second research question we aimed to clarify whether students profit from metacognitive skill training with respect to content knowledge acquisition in near and far transfer tasks. We used a computer-based learning scenario that required students to engage in all three phases of the self-regulated learning process, namely planning, monitoring, and evaluating and regulating (Schunk & Zimmerman, 1998). In line with the results of RQ 1, we found near transfer for hybrid training Group 1 (ME). However, near transfer was not reflected in a higher quality of strategy execution, as we found no group differences on this measure. We did, however, find effects on content knowledge acquisition. Students who showed high quality in performing CVS acquired more content knowledge when metacognitive skill transfer was near than when it was far. This finding can be discussed, with caution because we did not measure cognitive load, in light of students' cognitive load from metacognitive regulation and the resulting working memory capacity available for content knowledge acquisition (Wirth et al., 2020). We assume that for the hybrid training group that trained conducting experiments (ME), regulating cognitive strategy application (CVS) via metacognitive skills placed sufficiently low cognitive demands. Students of Group 1 (ME) thus had sufficient free working memory capacity for interpreting experimental results and constructing content knowledge. Students of the hybrid training group that trained text highlighting (MT) were able to transfer their metacognitive skills to regulating CVS application. However, this might have produced too much cognitive load, leaving insufficient free working memory capacity for content knowledge construction. This pattern is reflected in the regression analysis: when metacognitive skills were applied to regulate cognitive strategy application, content knowledge was acquired, presumably because sufficient working memory capacity was available.
Far transfer thus seems to occur with regard to regulating cognitive strategies via metacognitive skills. Under far transfer conditions, however, this regulation process appears to be so cognitively demanding that insufficient working memory capacity remains to acquire content knowledge. Again, far transfer may be possible and may result in better content knowledge acquisition, but presumably only if metacognitive knowledge about a specific cognitive strategy is available, that is, if the criteria for executing the cognitive strategy are familiar. If a student does not have these criteria available, transfer of metacognitive skills is so cognitively demanding that far transfer fails.

The question regarding near and far transfer primarily serves to address concerns about the general trainability of metacognitive skills and a lack of positive effects on content knowledge acquisition (Sweller & Paas, 2017). Overall, our results for the first research question suggest that transfer occurs. We must add the restriction that sufficient metacognitive knowledge (in the form of criteria for cognitive strategy application) appears to be a prerequisite for successful transfer.

This result strengthens the assumption that metacognitive skill training is only effective in combination with cognitive strategies in the sense of a hybrid-training approach (Sweller & Paas, 2017). From an instructional point of view, hybrid metacognitive skill training should be designed so that, in a first step, metacognitive skills are trained in combination with a specific cognitive strategy (Schuster et al., 2020). In a second step, once metacognitive skills and metacognitive knowledge about a specific cognitive strategy have been conveyed through hybrid training, non-hybrid training on other cognitive strategies could follow.

Further considerations

Overall, on a purely descriptive level, we mainly found decreasing scores from T2 to T3 in all MST versions in all groups. That is, metacognitive skill transfer decreased over time. This finding is consistent with general findings from transfer research (Klauer, 2010; Salomon & Perkins, 1989; Schuster et al., 2020). It could indicate that metacognitive skill transfer, even when metacognitive skills have been trained for half a year, is not permanent and therefore has to be refreshed regularly over a long period of time. Indirect strategy training might be an efficient way of continuously refreshing metacognitive skills and fostering metacognitive skill transfer (Schuster et al., 2018). In contrast to direct strategy training, in which learning strategies are conveyed explicitly, indirect training only stimulates strategies via a strategy-conducive learning environment. Research on combined direct and indirect strategy training provides initial indications that the transfer of metacognitive skills can be successfully strengthened (Dignath & Veenman, 2020; Schuster et al., 2018). Such an indirect training approach might be especially appropriate for schools, since indirect strategy training is easier to implement in regular school classes over a long period of time than direct strategy training (Stebner et al., 2019).

Limitations and methodological implications for future research

The present study was conducted in schools, which provides high external validity. However, many confounding variables could have influenced transfer in either direction. Although scientific quality standards were met, confounding variables (such as students' social interactions or noise) or individual differences (such as socio-economic status; Stebner et al., 2020) could have hampered transfer, but they could also have helped students to transfer certain metacognitive skills. Therefore, future research should use a laboratory setting in order to gain higher internal validity by minimizing the possible effect of confounding variables (Hulleman & Cordray, 2009). Furthermore, future studies should measure cognitive load to test the cognitive load interpretation we offered for the results of RQ 2.

Another threat to the internal validity of our results concerns missing data: not all students participated in all measurements (because of illness, school organizational incidents, or a fire alarm during data collection). In answering our two research questions, we included only those students who provided non-missing data on all variables. Because our analyses involved multiple variables, this listwise deletion resulted in considerable proportions of missing data (RQ 1: 35%, RQ 2: 25%). Although an analysis of the structure of missingness (cf. Graham, 2009) allowed us to conclude that missing data occurred largely at random, so that our results should not be substantially biased, future research should make every effort to reduce the amount of missing data. Again, laboratory settings would offer appropriate opportunities to reduce missingness.
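The following is a minimal sketch, using hypothetical toy data rather than the data of this study, of why listwise deletion across multiple variables inflates missingness: a student is dropped if any single variable is missing, so losses compound across variables. The function names and the independence assumption in `expected_complete_share` are illustrative simplifications; they do not describe how missingness was analyzed in the present study.

```python
from typing import Optional

# Hypothetical toy data (NOT the study's data): one dict per student,
# None marks a value that is missing at that measurement point.
Row = dict[str, Optional[int]]


def listwise_delete(rows: list[Row], variables: list[str]) -> list[Row]:
    """Complete-case analysis: keep a student only if every variable is observed."""
    return [r for r in rows if all(r.get(v) is not None for v in variables)]


def missing_rate(rows: list[Row], variable: str) -> float:
    """Share of students with a missing value on a single variable."""
    return sum(r.get(variable) is None for r in rows) / len(rows)


def expected_complete_share(p_missing: float, k: int) -> float:
    """Expected share of complete cases if each of k variables is missing
    independently with probability p_missing (a simplifying assumption)."""
    return (1.0 - p_missing) ** k


if __name__ == "__main__":
    students: list[Row] = [
        {"t1": 3, "t2": 4, "t3": 2},
        {"t1": 2, "t2": None, "t3": 3},
        {"t1": None, "t2": 5, "t3": 4},
        {"t1": 4, "t2": 3, "t3": None},
        {"t1": 1, "t2": 2, "t3": 2},
    ]
    variables = ["t1", "t2", "t3"]
    for v in variables:
        print(v, missing_rate(students, v))  # 0.2 for each variable
    complete = listwise_delete(students, variables)
    print(len(complete), "of", len(students))  # 2 of 5 students remain
    print(round(expected_complete_share(0.10, 6), 3))  # 0.531
```

In this toy example, each variable is missing for only 20% of students, yet listwise deletion keeps just 2 of 5 students. Under the independence assumption, six variables that are each missing 10% of the time would leave only about 53% complete cases.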

MST was used to capture the spontaneous recall and intended application of metacognitive skills in the form of scenario-based vignettes. Compared to questionnaires, vignettes are known to provide higher validity due to their situation-specific characteristics (Wirth & Leutner, 2008). Generally, vignettes require students to activate learning strategy knowledge independently in order to use it to solve the task. However, the vignettes capture only the learning strategies that students plan to use. The MST vignettes cannot measure whether students actually apply the planned learning strategies in the performance and reflection phases of the learning process at all, or whether they apply them in a high-quality manner.

In the study by Schuster et al. (2020), students mentioned only a few metacognitive skills, resulting in a floor effect. In the present study, a new coding system made it possible to capture more metacognitive skills than in the previous study by also considering metacognitive skills that were not explicitly provided in training. Although this can be seen as a success, future studies should use multimodal measurements of learning strategy application to further increase validity and obtain a realistic picture of students' actual application of metacognitive skills (Azevedo et al., 2022; Dörrenbächer-Ulrich et al., 2021; Panadero et al., 2016; Rovers et al., 2019).

While the MST, measuring metacognitive skill application, was administered three times during the study (at T1, T2, and T3), MicroDYN, measuring content knowledge acquisition, was administered only at T2 for logistical reasons. If MicroDYN had also been administered after the second 15 weeks of training (T3), or if there had been an authentic learning scenario requiring the text-highlighting strategy, we might also have been able to observe far transfer with an effect on content knowledge acquisition. Furthermore, we lost statistical power in the analyses because of missing data due to a fire alarm during T2 and, because T2 took place in winter, a large number of students being ill. These aspects emphasize the need for further studies in laboratory settings with a larger number of students, with MicroDYN administered more than once to enable pre-post-follow-up comparisons.

Conclusion

To the best of our knowledge, this study on transfer of metacognitive skills in self-regulated learning is one of the first intervention studies conducted in the field that (1) considered all criteria of high-quality research (Spörer & Glaser, 2010) and (2) examined transfer effects on metacognitive skill application as well as on actually regulating cognitive strategy application resulting in content knowledge acquisition. Results show that hybrid training (that is, training of metacognitive skills in combination with training of a cognitive strategy) was crucial for successful near and far transfer of metacognitive skills. We consistently showed near metacognitive skill transfer, both for metacognitive skill application and for content knowledge acquisition. Far transfer was restricted to learning scenarios in which students possessed sufficient metacognitive strategy knowledge. Because we administered this kind of far transfer task only in the form of MST vignettes, we were able to show far transfer for metacognitive skill application, but not for actually regulating cognitive strategy application resulting in content knowledge acquisition. However, our results suggest that it is sufficient to provide students with metacognitive knowledge of the quality criteria of a certain cognitive strategy if, and only if, students have received hybrid metacognitive skill training in advance.

Appendix

Data Availability

All authors declare that all data and materials as well as software application or custom code support the published claims and comply with field standards.

References

Aghaie, R., & Zhang, L. J. (2012). Effects of explicit instruction in cognitive and metacognitive reading strategies on Iranian EFL students’ reading performance and strategy transfer. Instructional Science, 40, 1063–1081.

Anderson, J. R. (1982). Acquisition of cognitive skill. Psychological Review, 89, 369–406.

Azevedo, R., et al. (2022). Lessons learned and future directions of MetaTutor: Leveraging multichannel data to scaffold self-regulated learning with intelligent tutoring systems. Frontiers in Psychology, 13. https://doi.org/10.3389/fpsyg.2022.813632

Biwer, F., oude Egbrink, M. G. A., Aalten, P., & de Bruin, A. (2020). Fostering effective learning strategies in higher education - a mixed methods study. Journal of Applied Research in Memory and Cognition, 9. https://doi.org/10.1016/j.jarmac.2020.03.004

Boekaerts, M. (1999). Self-regulated learning: Where we are today. International Journal of Educational Research, 31, 445–457.

Carretti, B., Caldarola, N., Tencati, C., & Cornoldi, C. (2014). Improving reading comprehension in reading and listening settings: The effect of two training programmes focusing on metacognition and working memory. The British Journal of Educational Psychology, 84, 194–210.

Desoete, A., Roeyers, H., & De Clercq, A. (2003). Can offline metacognition enhance mathematical problem solving? Journal of Educational Psychology, 95, 188–200.

Dignath, C., & Veenman, M. V. J. (2020). The role of direct strategy instruction and indirect activation of self-regulated learning—Evidence from classroom observation studies. Educational Psychology Review. https://doi.org/10.1007/s10648-020-09534-0

Donker, A. S., de Boer, H., Kostons, D., Dignath-van Ewijk, C., & van der Werf, M. (2014). Effectiveness of learning strategy instruction on academic performance: A meta-analysis. Educational Research Review, 11, 1–26.

Dörrenbächer-Ulrich, L., Weißenfels, M., Russer, L., & Perels, F. (2021). Multimethod assessment of self-regulated learning in college students: Different methods for different components? Instructional Science, 49, 137–163. https://doi.org/10.1007/s11251-020-09533-2

Dunlosky, J., & Lipko, A. R. (2007). Metacomprehension: A brief history and how to improve its accuracy. Current Directions in Psychological Science, 16, 228–232.