Abstract

This study proposes a non-parametric ICA method, called ECOPICA, which describes the joint distribution of data by empirical copulas and measures the dependence between recovered signals by an independence test statistic. We employ the grasshopper optimization algorithm to optimize the proposed objective function. Several acceleration tricks are further designed to enhance the computational efficiency of the proposed algorithm under a parallel computing framework. Our simulation and empirical analyses show that ECOPICA produces better and more robust recovery performance than other well-known ICA approaches for various source distribution shapes, especially when the source distribution is skewed or near-Gaussian.

Notes

The IRMAS dataset is available at https://www.upf.edu/web/mtg/irmas.

References

Abayomi, K., Lall, U., de la Pena, V.: Copula based independent component analysis. http://ssrn.com/abstract=1028822 (2007)

Bach, F.R., Jordan, M.I.: Kernel independent component analysis. J. Mach. Learn. Res. 3(Jul), 1–48 (2002)

Bansal, P.: Intel Image Classification (Image Scene Classification of Multiclass), Version 2 (2019). https://www.kaggle.com/puneet6060/intel-image-classification

Bartlett, M.S., Movellan, J.R., Sejnowski, T.J.: Face recognition by independent component analysis. IEEE Trans. Neural Netw. 13(6), 1450–1464 (2002). https://doi.org/10.1109/TNN.2002.804287

Beckmann, C.F., Smith, S.M.: Probabilistic independent component analysis for functional magnetic resonance imaging. IEEE Trans. Med. Imaging 23(2), 137–152 (2004). https://doi.org/10.1109/TMI.2003.822821

Beckmann, C.F., Smith, S.M.: Tensorial extensions of independent component analysis for multisubject FMRI analysis. Neuroimage 25(1), 294–311 (2005). https://doi.org/10.1016/j.neuroimage.2004.10.043

Bell, A.J., Sejnowski, T.J.: An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 7(6), 1129–1159 (1995). https://doi.org/10.1162/neco.1995.7.6.1129

Bell, A.J., Sejnowski, T.J.: The “independent components” of natural scenes are edge filters. Vis. Res. 37(23), 3327–3338 (1997). https://doi.org/10.1016/S0042-6989(97)00121-1

Bosch, J.J., Janer, J., Fuhrmann, F., Herrera, P.: A comparison of sound segregation techniques for predominant instrument recognition in musical audio signals. In: ISMIR, pp. 559–564 (2012). Citeseer

Brunner, C., Naeem, M., Leeb, R., Graimann, B., Pfurtscheller, G.: Spatial filtering and selection of optimized components in four class motor imagery EEG data using independent components analysis. Pattern Recognit. Lett. 28(8), 957–964 (2007). https://doi.org/10.1016/j.patrec.2007.01.002

Calhoun, V.D., Adali, T., Pearlson, G.D., Pekar, J.J.: A method for making group inferences from functional MRI data using independent component analysis. Hum. Brain Mapp. 14(3), 140–151 (2001). https://doi.org/10.1002/hbm.1048

Cardoso, J.-F., Souloumiac, A.: Blind beamforming for non-gaussian signals. In: IEE Proceedings F (Radar and Signal Processing), vol. 140, pp. 362–370 (1993). https://doi.org/10.1049/ip-f-2.1993.0054. IET. https://digital-library.theiet.org/content/journals/10.1049/ip-f-2.1993.0054

Chen, R.-B., Guo, M., Härdle, W.K., Huang, S.-F.: Copica-independent component analysis via copula techniques. Stat. Comput. 25, 273–288 (2015). https://doi.org/10.1007/s11222-013-9431-3

Comon, P.: Independent component analysis, a new concept? Signal Process. 36(3), 287–314 (1994). https://doi.org/10.1016/0165-1684(94)90029-9

Dagher, I., Nachar, R.: Face recognition using IPCA-ICA algorithm. IEEE Trans. Pattern Anal. Mach. Intell. 28(6), 996–1000 (2006). https://doi.org/10.1109/TPAMI.2006.118

Delorme, A., Sejnowski, T., Makeig, S.: Enhanced detection of artifacts in EEG data using higher-order statistics and independent component analysis. Neuroimage 34(4), 1443–1449 (2007). https://doi.org/10.1016/j.neuroimage.2006.11.004

Fukumizu, K., Gretton, A., Sun, X., Schölkopf, B.: Kernel measures of conditional dependence. In: Platt, J., Koller, D., Singer, Y., Roweis, S. (eds.) Advances in Neural Information Processing Systems, vol. 20, pp. 489–496. Curran Associates Inc, New York (2008)

Helwig, N.E.: ICA: Independent Component Analysis (2018). R package version 1.0-2. https://CRAN.R-project.org/package=ica

Hyvärinen, A.: Sparse code shrinkage: denoising of Nongaussian data by maximum likelihood estimation. Neural Comput. 11(7), 1739–1768 (1999). https://doi.org/10.1162/089976699300016214

Hyvärinen, A.: Fast and robust fixed-point algorithms for independent component analysis. IEEE Trans. Neural Netw. 10(3), 626–634 (1999). https://doi.org/10.1109/72.761722

Hyvärinen, A., Oja, E.: Independent component analysis by general nonlinear Hebbian-like learning rules. Signal Process. 64(3), 301–313 (1998). https://doi.org/10.1016/S0165-1684(97)00197-7

Hyvärinen, A., Oja, E.: Independent component analysis: algorithms and applications. Neural Netw. 13(4–5), 411–430 (2000). https://doi.org/10.1016/S0893-6080(00)00026-5

Hyvärinen, A., Karhunen, J., Oja, E.: 7. What is Independent Component Analysis?, pp. 145–164. Wiley, New York (2001). https://doi.org/10.1002/0471221317.ch7

Jutten, C., Herault, J.: Blind separation of sources, part I: an adaptive algorithm based on neuromimetic architecture. Signal Process. 24(1), 1–10 (1991). https://doi.org/10.1016/0165-1684(91)90079-X

Karvanen, J., Eriksson, J., Koivunen, V.: Maximum likelihood estimation of ICA model for wide class of source distributions. In: Neural Networks for Signal Processing X. Proceedings of the 2000 IEEE Signal Processing Society Workshop (Cat. No.00TH8501), vol. 1, pp. 445–454 (2000). https://doi.org/10.1109/NNSP.2000.889437. IEEE

Kirshner, S., Póczos, B.: ICA and ISA using Schweizer–Wolff measure of dependence. In: Proceedings of the 25th International Conference on Machine Learning, pp. 464–471 (2008)

Kwak, K.-C., Pedrycz, W.: Face recognition using an enhanced independent component analysis approach. IEEE Trans. Neural Netw. 18(2), 530–541 (2007). https://doi.org/10.1109/TNN.2006.885436

Lai, Z., Xu, Y., Chen, Q., Yang, J., Zhang, D.: Multilinear sparse principal component analysis. IEEE Trans. Neural Netw. Learn. Syst. 25(10), 1942–1950 (2014). https://doi.org/10.1109/TNNLS.2013.2297381

Learned-Miller, E.G., Fisher III, J.W.: ICA using spacings estimates of entropy. J. Mach. Learn. Res. 4, 1271–1295 (2003)

Lopez-Paz, D., Hennig, P., Schölkopf, B.: The randomized dependence coefficient. Adv. Neural Inf. Process. Syst. 26 (2013)

Lu, C.-J., Lee, T.-S., Chiu, C.-C.: Financial time series forecasting using independent component analysis and support vector regression. Decis. Support Syst. 47(2), 115–125 (2009). https://doi.org/10.1016/j.dss.2009.02.001

Marchini, J.L., Heaton, C., Ripley, B.D.: fastICA: FastICA Algorithms to Perform ICA and Projection Pursuit. (2019). R package version 1.2-2. https://CRAN.R-project.org/package=fastICA

Nocedal, J., Wright, S.: Numerical Optimization (Springer Series in Operations Research and Financial Engineering), 2nd edn. Springer, New York (2009)

Oja, E., Kiviluoto, K., Malaroiu, S.: Independent component analysis for financial time series. In: Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No.00EX373), pp. 111–116 (2000). https://doi.org/10.1109/ASSPCC.2000.882456. https://ieeexplore.ieee.org/document/882456

Pham, T.D., Möcks, J.: Beyond principal component analysis: a trilinear decomposition model and least squares estimation. Psychometrika 57(2), 203–215 (1992)

Póczos, B., Ghahramani, Z., Schneider, J.: Copula-based kernel dependency measures. arXiv preprint arXiv:1206.4682 (2012)

Póczos, B., Ghahramani, Z., Schneider, J.: Copula-based kernel dependency measures. In: Proceedings of the 29th International Conference on Machine Learning. ICML’12, pp. 1635–1642. Omnipress, Madison, WI, USA (2012)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria (2019). https://www.R-project.org

Rahmanishamsi, J., Alikhani-Vafa, A.: On a measure of dependence and its application to ICA. J. Stat. Model. Theory Appl. 1(1), 155–167 (2020)

Roy, A., Ghosh, A., Goswami, A., Murthy, C.: Some new copula based distribution-free tests of independence among several random variables. Sankhya A 84, 556–596 (2020). https://doi.org/10.1007/s13171-020-00207-2

Saremi, S., Mirjalili, S., Lewis, A.: Grasshopper optimisation algorithm: theory and application. Adv. Eng. Softw. 105, 30–47 (2017). https://doi.org/10.1016/j.advengsoft.2017.01.004

Sklar, A.: Fonctions de répartition à n dimensions et leurs marges. Publ. Inst. Statist. Univ. Paris 8, 229–231 (1959)

Song, L., Lu, H.: EcoICA: skewness-based ICA via eigenvectors of cumulant operator. In: Durrant, R.J., Kim, K.-E. (eds.) Proceedings of The 8th Asian Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 63, pp. 445–460. PMLR, The University of Waikato, Hamilton, New Zealand (2016). https://proceedings.mlr.press/v63/Song94.html

Spurek, P., Tabor, J., Rola, P., Ociepka, M.: ICA based on asymmetry. Pattern Recognit. 67, 230–244 (2017). https://doi.org/10.1016/j.patcog.2017.02.019

Suganthan, P.N., Hansen, N., Liang, J.J., Deb, K., Chen, Y.-P., Auger, A., Tiwari, S.: Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization. KanGAL report 2005005 (2005)

Tsai, A.C., Liou, M., Jung, T.-P., Onton, J.A., Cheng, P.E., Huang, C.-C., Duann, J.-R., Makeig, S.: Mapping single-trial EEG records on the cortical surface through a spatiotemporal modality. Neuroimage 32(1), 195–207 (2006). https://doi.org/10.1016/j.neuroimage.2006.02.044

Wen, Z., Yin, W.: A feasible method for optimization with orthogonality constraints. Math. Program. 142(1), 397–434 (2013). https://doi.org/10.1007/s10107-012-0584-1

Yang, J., Gao, X., Zhang, D., Yang, J.-Y.: Kernel ICA: an alternative formulation and its application to face recognition. Pattern Recognit. 38(10), 1784–1787 (2005). https://doi.org/10.1016/j.patcog.2005.01.023

Zhang, H., Yang, H., Guan, C.: Bayesian learning for spatial filtering in an EEG-based brain-computer interface. IEEE Trans. Neural Netw. Learn. Syst. 24(7), 1049–1060 (2013). https://doi.org/10.1109/TNNLS.2013.2249087

Acknowledgements

We would like to express our sincere gratitude to the Editor and two anonymous reviewers for their insightful comments, which improved our work. We thank the National Science and Technology Council, Taiwan (Grants NSTC 112-2118-M-110-001-MY2, MOST 111-2118-M-006-002-MY2, and NSTC 112-2118-M-008-004-MY3) for partial support of this research. This article is dedicated to Professor Wen-Jang Huang in recognition of his significant role in fostering mathematical and statistical talents in Taiwan until his passing on November 21, 2023.

Author information

Contributions

Pi, Guo, Chen, and Huang discussed the concept of the proposed method and wrote the main manuscript text. Pi conducted the numerical experiments.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Appendices

Appendix A: Two tricks of acceleration

In this section, we introduce the details of the uniform trick and mini-batch trick for acceleration mentioned in Sect. 3.2.

(i) Uniform Trick. Since we need to calculate \(s_2\) in (7) multiple times, which measures the kernel distance between the empirical copula of the data \({\widehat{C}}_{\varvec{Y}, T}\) and the empirical independence copula \(\varPi _T\), the required computing resources increase dramatically when the signal length T is large. Since the estimation of \(s_2\) is not sensitive to the accuracy of the estimation of \(\varPi _T\), we propose to estimate \(s_2\) in the following way to reduce the computational complexity:

$$\begin{aligned} s_2 = \frac{1}{T \cdot T_0^n} \sum _{i=1}^T \prod _{j=1}^n \sum _{k=1}^{T_0} k_{\sigma }\left( u_j^{(i)}, \frac{k}{T_0}\right) , \end{aligned}$$

where \(T_0 = \min {\{1000, T\}}\). That is, we use \(\varPi _{T_0}\), a “thicker” (but still unbiased) estimator of the independence copula, in the calculation of \(s_2\). By doing this, the computational complexity of \(s_2\) is reduced from \(O(nT^2)\) to O(nT) when \(T > 1000\). We call it the uniform trick since this concise form is derived from the property of the independence copula. Based on Lemma 6 in Póczos et al. (2012), Lemma L2 in Roy et al. (2020) shows that the error term of this approximation is bounded by \(4n/T_0^2\). In addition, Fig. 18 presents the contour plot of the error bounds of the uniform trick approximation under various T and n, which reveals that the error bounds decrease as T increases for any fixed n and that the error bounds are less than \(10^{-4}\) when \(T>2000\) for \(n\le 50\).
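As a rough illustration of the uniform trick, the following NumPy sketch evaluates the formula for \(s_2\) above on a fixed grid of \(T_0\) points. It is a minimal sketch under stated assumptions, not the authors' implementation: the Gaussian form of \(k_{\sigma }\), the (T, n) data layout, and the names `gaussian_kernel`, `s2_uniform_trick`, and `t0_cap` are all hypothetical choices made for this example.

```python
import numpy as np

def gaussian_kernel(u, v, sigma):
    # ASSUMPTION: k_sigma is taken to be a Gaussian kernel; the appendix
    # does not restate the kernel, so this form is illustrative only.
    return np.exp(-(u - v) ** 2 / (2.0 * sigma ** 2))

def s2_uniform_trick(U, sigma, t0_cap=1000):
    """Evaluate s_2 with the grid k/T0, k = 1..T0, where T0 = min(t0_cap, T).

    U is assumed to be a (T, n) array of empirical-copula values in (0, 1].
    """
    T, n = U.shape
    T0 = min(t0_cap, T)
    grid = np.arange(1, T0 + 1) / T0  # the points k / T0
    # inner[i, j] = sum_k k_sigma(u_j^(i), k / T0); cost O(n * T * T0)
    inner = gaussian_kernel(U[:, :, None], grid[None, None, :], sigma).sum(axis=2)
    # Dividing each factor by T0 before the product equals dividing the
    # product by T0**n, but avoids very large intermediate values.
    return float((inner / T0).prod(axis=1).mean())
```

Because the grid size is capped at \(T_0\), the work per observation is constant once \(T > 1000\), which is where the O(nT) cost comes from. The intermediate array in this sketch has \(T \times n \times T_0\) entries, so a memory-conscious version would loop over blocks of rows instead.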

(ii) Mini-Batch Trick. In addition to the uniform trick, we further propose a mini-batch trick to reduce the computational complexity of \(I_{\sigma }\). According to the findings in Roy et al. (2020), the power of the dependency test based on \(I_{\sigma }\) is acceptable if the sample size \(T\ge 250\). Hence, the proposed mini-batch trick is to split a signal into multiple batches with a predetermined batch size, say 250. Accordingly, \(I_{\sigma }\) is estimated by aggregating the dependency measurements on each batch, and the computational complexity is reduced to O(nT). Specifically, let T denote the length of the signal and \(T_0\) be the selected batch size. Hence, the number of batches is \(B = \lceil T/T_0 \rceil \). First, split the recovered data matrix \({\textbf{Y}}\) into multiple batches \({\textbf{Y}}_1,\dots , {\textbf{Y}}_B\). Next, perform the empirical copula transformation on each batch to get \({\textbf{U}}_1,\dots , {\textbf{U}}_B\) and compute the dependency measurement, denoted by \({\widehat{I}}_{\sigma }({\textbf{Y}}_i)\), \(i=1,\ldots , B\), for each batch. Finally, \(I_{\sigma }\) is estimated by \(B^{-1}\sum _{i=1}^B{\widehat{I}}_{\sigma }({\textbf{Y}}_i)\). The concept of this procedure is to estimate the overall dependency with the dependencies of nearby regions and ignore the dependencies of distant regions. From the viewpoint of the estimation of \(s_1\), we estimate the kernel matrix by a sparse matrix with zeros in all its off-diagonal blocks.
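The batching steps described above can be sketched as follows. This is only an illustration: the dependency measure is passed in as a generic callable `measure` (a hypothetical stand-in for \({\widehat{I}}_{\sigma }\), which is not implemented here), the data are assumed to be stored as a (T, n) array, and the function names are invented for this example.

```python
import math
import numpy as np

def empirical_copula_transform(Y):
    """Column-wise ranks scaled to (0, 1]; Y is assumed to have shape (T, n)."""
    T = Y.shape[0]
    # argsort of argsort gives 0-based ranks (ties broken by position)
    ranks = Y.argsort(axis=0).argsort(axis=0) + 1
    return ranks / T

def minibatch_dependence(Y, measure, batch_size=250):
    """Average a dependency measure over consecutive batches of rows.

    `measure` is a hypothetical callable that maps a (T_b, n) array of
    copula values to a scalar; it stands in for the statistic in the text.
    """
    T = Y.shape[0]
    B = math.ceil(T / batch_size)  # number of batches, B = ceil(T / T0)
    values = []
    for b in range(B):
        batch = Y[b * batch_size:(b + 1) * batch_size]  # last batch may be shorter
        U = empirical_copula_transform(batch)           # ranks computed within the batch
        values.append(measure(U))
    return sum(values) / B
```

Each batch is rank-transformed on its own, so no kernel evaluation ever pairs observations from different batches; this corresponds to the block-diagonal view of the kernel matrix mentioned in the text.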

Appendix B: Parallel computation framework

Computing \({\widehat{I}}_{\sigma }(\varvec{\beta })\) in Eq. (7) in parallel is equivalent to computing \(s_1\) and \(s_2\) in Eq. (7) in parallel. In the calculation of \(s_2\), we assign tasks to different threads according to \(i = 1, 2, \dots ,T\). In the calculation of \(s_1\), we assign tasks to different threads according to \(k = 1, 2, \dots , \left( {\begin{array}{c}T\\ 2\end{array}}\right) \), where the relationship between k and (i, j), \(1 \le i < j \le T\), is defined as

$$\begin{aligned} k = i + \frac{(j-1)(j-2)}{2}. \qquad \text {(B1)} \end{aligned}$$

The key to this idea is that when k is determined, the closed-form of i and j can be found, making it possible to calculate \(k_\sigma ({\textbf{u}}^{(i)}, {\textbf{u}}^{(j)})\) used in Eq. (7). The following proposition shows how we fulfilled this idea, and the rigorous proof of it is also given below.

Proposition 1

If \(k = i + (j-1)(j-2)/2\), \(1 \le i < j \le T\), \(1 \le k \le \left( {\begin{array}{c}T\\ 2\end{array}}\right) \), and \(i, j, k \in {\mathbb {N}}\), then \(j = \lceil 1/2 + \sqrt{2k + 1/4} \rceil \) and \(i = k - (j-1)(j-2)/2\).

Proof

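Before the proof, the matrix shown below helps to gain better intuition of (B1), where i and j indicate the row number and the column number of the matrix, and k indicates the value in the (i, j)-th entry of it. For example, for \(T = 5\), the entries above the diagonal implied by (B1) are

$$\begin{aligned} \left( {\begin{array}{ccccc} \cdot & 1 & 2 & 4 & 7\\ \cdot & \cdot & 3 & 5 & 8\\ \cdot & \cdot & \cdot & 6 & 9\\ \cdot & \cdot & \cdot & \cdot & 10\\ \cdot & \cdot & \cdot & \cdot & \cdot \end{array}}\right) . \end{aligned}$$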

This proof can be divided into two parts. The first part proves that j can be uniquely determined when k is fixed, and the second part proves that i can be uniquely determined when k and j are fixed. The second part follows directly from (B1). Now assume k is fixed. For a given j, the minimum value of i is 1 and the maximum value is \(j-1\). When \(i = 1\), we have \(2(k-1) = (j-1)(j-2)\), which implies \(j = 3/2 + \sqrt{2k-7/4}\); when \(i = j-1\), we have \(2k = j(j-1)\), which implies \(j = 1/2 + \sqrt{2k+1/4}.\) Hence \(j_l(k) \le j \le j_u(k)\), where \(j_l(k) = 1/2 + \sqrt{2k+1/4}\) and \(j_u(k) = 3/2 + \sqrt{2k-7/4}\). Since \(j \in {\mathbb {N}}\), it remains to show \(\lceil j_l(k) \rceil = \lfloor j_u(k) \rfloor \).

Suppose \(N< j_l(k) < N + 1\) for some \(k, N \in {\mathbb {N}}\). Then \(k^*=N(N-1)/2 < k\) satisfies \(j_l(k^*) = N\) and \(j_u(k^*+1) = N+1 \le j_u(k)\), because \(j_u\) is increasing and \(k \ge k^* + 1\). Hence, there does not exist \(N \in {\mathbb {N}}\) such that \(j_l(k), j_u(k) \in (N, N+1)\), which implies \(\lceil j_l(k) \rceil \le \lfloor j_u(k) \rfloor \); the case in which \(j_l(k)\) is an integer is immediate because \(j_u(k) \ge j_l(k)\). Moreover, \(\lfloor j_u(k) \rfloor - \lceil j_l(k) \rceil \le j_u(k) - j_l(k) = 1 + \sqrt{2k-7/4} - \sqrt{2k+1/4} < 1\). Since \(\lceil j_l(k) \rceil \) and \(\lfloor j_u(k) \rfloor \) are both positive integers, it follows that \(\lceil j_l(k) \rceil = \lfloor j_u(k) \rfloor \) and the proof is completed. \(\square \)
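The index mapping in Proposition 1 can be checked numerically with a short sketch. The function name `pair_from_index` is hypothetical, and the code simply evaluates the closed forms stated in the proposition using double-precision arithmetic, which is adequate for the moderate values of k considered here.

```python
import math

def pair_from_index(k):
    """Recover (i, j), 1 <= i < j, from the linear index k = i + (j-1)(j-2)/2."""
    j = math.ceil(0.5 + math.sqrt(2 * k + 0.25))
    i = k - (j - 1) * (j - 2) // 2  # (j-1)(j-2) is always even, so // is exact
    return i, j

# Example: enumerate the pairs handled by one worker that owns k = 4..6.
pairs_for_worker = [pair_from_index(k) for k in range(4, 7)]
```

With this mapping, a thread that is handed a contiguous range of k values can compute its own (i, j) pairs without any shared counter, which is the point of the parallel scheme for \(s_1\). For very large k, an integer square root (for example `math.isqrt`) would avoid any floating-point rounding concerns.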

Appendix C: Accelerated method experiments

To investigate the effects of batch sizes on the parallel computation framework, we used 10 cores for parallel computing and 30 particles in the ECOPICA algorithm. Three mixed signals with a length of 5000 were generated randomly. For each possible combination of \((n_1, n_2)\), we randomly generated 30 particles 50 times and recorded the time cost for dependency measuring in each run. The boxplots for the time costs of batch sizes 250, 1000, and 5000 are shown in Fig. 19, which reveals that calculating \(I_{\sigma }\) becomes more time-demanding with large batch sizes and it is appropriate to use more threads for acceleration in this case. On the contrary, it is more important to process different particles simultaneously in the case of small batch sizes. Through the experiment, two conclusions can be made:

1. Using a smaller batch size can greatly reduce the time spent.

2. How to allocate cores only matters when using a smaller batch size.

In addition, Table 3 shows the median of the time cost for different batch sizes and combinations of \((n_1, n_2)\). When the batch size is 250, we can save up to about 30% of the time spent. On the other hand, when the batch size is 1000 or 5000, the time saved is less than 8%. In our experiments, we set the batch size to 250, \(n_1 = 5\), and \(n_2 = 2\), resulting in a speed-up of over 1000 times.

Appendix D: MICA algorithm

Following the notation in Sect. 2.3 and based on Theorem 3.1 in Rahmanishamsi and Alikhani-Vafa (2020), the dependency of a two-dimensional random vector \((Y_1, Y_2)\) can be measured by

where \(h(T)=T^2(T+1)/(T^3+T^2+5T)\). To minimize \(\delta _T\), we can simply omit the constant multipliers to make computation more efficient. For the multidimensional case, we use the sum of pairwise dependencies to measure the magnitude of multidimensional dependency. To fairly compare the performances of the two non-parametric dependency measurements, \(I_\sigma \) and \(\delta _T\), we adopt the same island-based GOA algorithm described in Algorithm 1 (replacing \(I_\sigma \) with \(\delta _T\)) to solve the ICA problem, and we adopt the same acceleration methods mentioned in Sect. 3.2 when conducting MICA.
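The extension to more than two signals by summing pairwise dependencies can be sketched as below. The bivariate measure is supplied as a hypothetical callable `pair_measure` standing in for \(\delta _T\), which is not implemented here; the (T, n) data layout and the function name are also assumptions of this example.

```python
from itertools import combinations
import numpy as np

def pairwise_dependence_sum(Y, pair_measure):
    """Sum a bivariate dependency measure over all n(n-1)/2 column pairs.

    Y is assumed to have shape (T, n); `pair_measure(x, y)` is a
    hypothetical callable returning a scalar for two length-T signals.
    """
    n = Y.shape[1]
    return sum(pair_measure(Y[:, a], Y[:, b]) for a, b in combinations(range(n), 2))
```

The number of pairwise evaluations grows as \(n(n-1)/2\), so the cost of this aggregate measure is quadratic in the number of signals.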

Appendix E: Other simulation results

E.1: Gamma distribution

In this scenario, we consider generating independent source signals from a gamma distribution \(\text {Gamma}(x; a, 1)\) with density

$$\begin{aligned} f(x; a, 1) = \frac{x^{a-1} e^{-x}}{\Gamma (a)}, \quad x > 0, \end{aligned}$$

where \(\Gamma (\cdot )\) is the gamma function. In particular, we consider the cases of \(a\in \{1, 2, 4, 8, 16, 32\}\). The corresponding densities of the 6 cases are shown in Fig. 20 and the associated excess kurtosis and skewness are reported in Table 4.
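For reference, the skewness and excess kurtosis of a gamma distribution with shape a have the standard closed forms \(2/\sqrt{a}\) and \(6/a\), and neither depends on the scale. The short sketch below evaluates them for the six shapes considered; the function name is hypothetical and the output is computed from these formulas rather than copied from Table 4.

```python
import math

def gamma_shape_stats(a):
    """Return (skewness, excess kurtosis) of a gamma distribution with shape a."""
    return 2.0 / math.sqrt(a), 6.0 / a

# Shapes considered in this scenario
stats_by_shape = {a: gamma_shape_stats(a) for a in (1, 2, 4, 8, 16, 32)}
for a, (skew, kurt) in stats_by_shape.items():
    print(f"a={a:2d}  skewness={skew:.3f}  excess kurtosis={kurt:.3f}")
```

Both quantities shrink toward zero as a grows, which is the sense in which the source distribution approaches the Gaussian distribution for large a.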

By performing the same procedure as in the Beta distribution scenario, Fig. 21 presents the boxplots of the mjSNRs of various ICA methods. Fig. 21 shows that all models worked well when the excess kurtosis \(\gamma _2 \ge 1\), that is, \(a \in \{ 1,2,4 \}\), with ECOPICA performing better than the other ICA methods. As a increases further, ECOPICA and COPICA perform better than the other ICA methods. This indicates that ECOPICA achieves the best performance in most of our cases as the source distribution gradually approaches the Gaussian distribution.

E.2: Rayleigh distribution

In this example, we evaluated the capability of each model when the source signals follow the Rayleigh distribution. Compared with \(\text {Gamma}(16, 1)\), the Rayleigh distribution has smaller excess kurtosis but larger skewness. The density function of the \(\text {Rayleigh}(x;\sigma )\) distribution is

$$\begin{aligned} f(x; \sigma ) = \frac{x}{\sigma ^2} \exp \left( -\frac{x^2}{2\sigma ^2}\right) , \quad x \ge 0. \end{aligned}$$

The excess kurtosis and the skewness of the Rayleigh distribution are constants that do not depend on \(\sigma \); they equal \(-(6 \pi ^2 -24\pi + 16)/(4-\pi )^2 \approx 0.245\) and \(2\sqrt{\pi }(\pi -3)/(4-\pi )^{3/2} \approx 0.631\), respectively. We only consider the case \(\sigma = 1\) in this example. Simulation results are presented in Fig. 22, which reveals that only ECOPICA, COPICA, and JADE worked well, and ECOPICA dominated the other models in this example.
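The two Rayleigh constants quoted above, and the comparison with \(\text {Gamma}(16, 1)\), can be verified with a few lines of arithmetic. This is only a numerical check of the closed forms; the function names are hypothetical, and the gamma values use the standard formulas \(2/\sqrt{a}\) and \(6/a\).

```python
import math

def rayleigh_shape_constants():
    """Return (skewness, excess kurtosis) of the Rayleigh distribution."""
    skewness = 2.0 * math.sqrt(math.pi) * (math.pi - 3.0) / (4.0 - math.pi) ** 1.5
    excess_kurtosis = -(6.0 * math.pi ** 2 - 24.0 * math.pi + 16.0) / (4.0 - math.pi) ** 2
    return skewness, excess_kurtosis

def gamma_shape_constants(a):
    """Return (skewness, excess kurtosis) of a gamma distribution with shape a."""
    return 2.0 / math.sqrt(a), 6.0 / a

ray_skew, ray_kurt = rayleigh_shape_constants()
gam_skew, gam_kurt = gamma_shape_constants(16)
print(f"Rayleigh:     skewness={ray_skew:.3f}, excess kurtosis={ray_kurt:.3f}")
print(f"Gamma(16, 1): skewness={gam_skew:.3f}, excess kurtosis={gam_kurt:.3f}")
```

The check confirms the ordering stated in the text: the Rayleigh distribution is more skewed than \(\text {Gamma}(16, 1)\) while having a smaller excess kurtosis.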

E.3: Laplace distribution

Next, we generated source signals from a \(\text {Laplace}(x;\mu ,b)\) distribution with the following density function

$$\begin{aligned} f(x; \mu , b) = \frac{1}{2b} \exp \left( -\frac{|x-\mu |}{b}\right) , \quad x \in {\mathbb {R}}. \end{aligned}$$

Since the Laplace distribution is symmetric and its excess kurtosis is 3, we expect all the models to recover the source signals successfully. The numerical results are presented in Fig. 23 and are consistent with our expectations.

E.4: Mixed scenario

Unlike the previous scenarios, in which the source signals are generated independently from a single distribution, we further consider generating 3 source signals from \(\text {Rayleigh}(1)\), \(\text {Beta}(1, 3)\), and \(\text {Gamma}(8, 1)\) separately. The approximate values of the excess kurtosis and skewness of the three distributions are reported in Table 5. The boxplots of the mjSNR values for each ICA model in this scenario are shown in Fig. 24, which reveals the superiority of ECOPICA over the other ICA methods.

Appendix F: Setup of (K, N, m) in Algorithm 1

Intuitively, utilizing more resources for the optimization search is more likely to yield better performance. In our experience, increasing the total number of particles (\(K \times N\)) in the GOA algorithm mostly leads to improved performance. In practice, however, we need to consider constraints on time and resources. Here, fixing the total number of particles \(K \times N\) at 30, we divided the particles into 2, 3, 5, and 6 groups (\(K = 2, 3, 5, 6\)) with different communication frequencies (\(m = 3, 5, 7\)). To see the effect of the different (K, N, m) combinations, we revisited the experiments for our first beta distribution scenario in Sect. 4.2, and for each parameter combination, we independently repeated the experiment 50 times under the same distribution assumption, resulting in a total of \(4 \times 3 \times 15 \times 50 = 9000\) experiments. The mjSNR is again used as the performance measurement.

Figure 25 shows the boxplots of mjSNR for different (K, N, m) settings. Based on this figure, we observed that the ECOPICA performance is not sensitive to the parameter setup. Based on our experience, we recommend a minimum of 10 particles per group, i.e., \(N = 10\), and \(K = 3\) groups to ensure optimization effectiveness. Regarding communication frequency, we advise a frequency of at least every 3 iterations (\(m = 3)\) for computational efficiency.

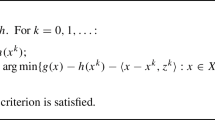

Appendix G: Gradient-based algorithm

Although GOA works well in our experiments, choosing the right number of particles may be a potential problem when n, the number of signals, is large because the dimension of the solution space grows quadratically as n increases. Here, we propose a gradient-based algorithm, so that no parameterization of the orthogonal transformation \({\textbf{R}}\) is needed.

First, we compute the derivative \(\displaystyle \frac{\partial {\widehat{I}}_{\sigma }({\textbf{Y}})}{\partial {\textbf{R}}^{(j)}}\), where \({\textbf{R}}^{(j)}\) is the j-th row of \({\textbf{R}}\). Consider \({\widehat{I}}_{\sigma }({\textbf{Y}}) = \sqrt{\frac{a}{b}}\), where \(a = s_1 - 2s_2 + v_3\) and \(b=v_1 - 2v_2 + v_3\) as defined in Eq. (7).

Recall that

For simplicity, we use the following notations:

Thus,

where

To approximate the density function of \(y_j\), one can use a histogram of \({\textbf{y}}_j\). Another way is to fit spline functions by points used in the histogram, which may be helpful if the second-order condition is considered.

For clarity, we rewrite the optimization problem in Eq. (9) as

To solve the problem, one can use the Cayley transformation technique as mentioned in Wen and Yin (2013). Given the current orthogonal transformation \({\textbf{R}}\) and the gradient \(G = \displaystyle \frac{\partial {\widehat{I}}_{\sigma }({\textbf{R}})}{\partial {\textbf{R}}_{i,j}}\), one can first compute the skew-symmetric matrix

Then, \({\textbf{R}}\) can be updated by the following rule:

where \(\tau \in {\mathbb {R}}\) is the step size. To find the appropriate step size, a line search can be adopted to find \(\tau \) satisfying the Armijo-Wolfe conditions (Nocedal and Wright 2009). To reduce the complexity of calculation and update faster, a stochastic gradient descent-like method can be adopted.
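To make the update concrete, the sketch below implements one Cayley-transformation step in the standard form given by Wen and Yin (2013), with skew-symmetric matrix \(A = G{\textbf{R}}^\top - {\textbf{R}}G^\top \) and update \({\textbf{R}} \leftarrow (I + \tfrac{\tau }{2}A)^{-1}(I - \tfrac{\tau }{2}A){\textbf{R}}\). It is assumed, not confirmed, that this matches the convention used in this appendix; the gradient G is taken as a given array, since computing it for \({\widehat{I}}_{\sigma }\) is the hard part discussed in the text, and the function name `cayley_step` is hypothetical.

```python
import numpy as np

def cayley_step(R, G, tau):
    """One Cayley update that keeps R orthogonal (standard Wen-Yin form).

    R   : (n, n) orthogonal matrix (current iterate)
    G   : (n, n) gradient of the objective with respect to R (assumed given)
    tau : step size
    """
    n = R.shape[0]
    A = G @ R.T - R @ G.T  # skew-symmetric: A.T == -A
    I = np.eye(n)
    # Solve (I + tau/2 A) R_new = (I - tau/2 A) R instead of forming an inverse.
    return np.linalg.solve(I + 0.5 * tau * A, (I - 0.5 * tau * A) @ R)
```

Because A is skew-symmetric, the matrix \(I + \tfrac{\tau }{2}A\) is always invertible and the Cayley factor is orthogonal, so the iterate stays on the orthogonal group for any step size. A line search over \(\tau \) would wrap repeated calls to this function.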

Although we propose this theoretical method to solve the ICA problem, the algorithm may be difficult to implement practically because solving the gradient of \({\widehat{I}}_{\sigma }({\textbf{R}})\) is much more complicated than computing \({\widehat{I}}_{\sigma }({\textbf{R}})\). In addition, the second-order condition should be considered to evaluate whether the final point attains the local minimum. Therefore, determining a differentiable approximation of \({\widehat{I}}_{\sigma }({\textbf{R}})\) is important for future research.

About this article

Cite this article

Pi, HK., Guo, MH., Chen, RB. et al. ECOPICA: empirical copula-based independent component analysis. Stat Comput 34, 52 (2024). https://doi.org/10.1007/s11222-023-10368-3

DOI: https://doi.org/10.1007/s11222-023-10368-3