Abstract

The PC-algorithm estimator of a causal directed acyclic graph (DAG) is known to be consistent when it is based on independent and identically distributed samples. In this paper, we consider the scenario in which the multivariate samples are identically distributed but not independent; a common example is a stationary multivariate time series. We show that, under a standard set of assumptions on the underlying time series involving \(\rho \)-mixing, the PC algorithm is consistent under temporal dependence. Further, we show that consistency of the PC algorithm holds for popular time series models such as vector auto-regressive moving average (VARMA) processes and linear processes. We also prove consistency of the Time-Aware PC algorithm, a recent adaptation of the PC algorithm to the time series setting. Our findings are supported by simulations and analyses of benchmark real data presented towards the end of the paper.

Notes

See Robins et al. (2003) for the definition of uniform consistency in this setting.

References

Assaad, C.K., Devijver, E., Gaussier, E.: Entropy-based discovery of summary causal graphs in time series. Entropy 24(8), 1156 (2022)

Biswas, R., Shlizerman, E.: Statistical perspective on functional and causal neural connectomics: a comparative study. Front. Syst. Neurosci. 16, 817962 (2022)

Biswas, R., Shlizerman, E.: Statistical perspective on functional and causal neural connectomics: the Time-Aware PC algorithm. PLoS Comput. Biol. 18(11), e1010653 (2022)

Bradley, R.C.: Basic properties of strong mixing conditions. A survey and some open questions. Probab. Surv. 2, 107–144 (2005)

Bussmann, B., Nys, J., Latré, S.: Neural additive vector autoregression models for causal discovery in time series. In: International Conference on Discovery Science, pp. 446–460. Springer (2021)

Chickering, D.M.: Optimal structure identification with greedy search. J. Mach. Learn. Res. 3, 507–554 (2002)

Chickering, D.M.: Learning equivalence classes of Bayesian-network structures. J. Mach. Learn. Res. 2, 445–498 (2002)

Chu, T., Glymour, C., Ridgeway, G.: Search for additive nonlinear time series causal models. J. Mach. Learn. Res. 9(5) (2008)

Granger, C.W.J.: Essays in Econometrics: Collected Papers of Clive W.J. Granger, vol. 32. Cambridge University Press, Cambridge (2001)

Dahlhaus, R., Eichler, M.: Causality and Graphical Models in Time Series Analysis. Oxford Statistical Science Series, pp. 115–137 (2003)

Dhamala, M., Rangarajan, G., Ding, M.: Analyzing information flow in brain networks with nonparametric Granger causality. Neuroimage 41(2), 354–362 (2008)

Drton, M., Maathuis, M.H.: Structure learning in graphical modeling. arXiv preprint arXiv:1606.02359 (2016)

Ebert-Uphoff, I., Deng, Y.: Causal discovery for climate research using graphical models. J. Clim. 25(17), 5648–5665 (2012)

Eichler, M.: Graphical modelling of multivariate time series. Probab. Theory Relat. Fields 153, 233–268 (2012)

Eichler, M.: Causal inference with multiple time series: principles and problems. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 371(1997), 20110613 (2013)

Eichler, M., Didelez, V.: On Granger causality and the effect of interventions in time series. Lifetime Data Anal. 16, 3–32 (2010)

Finn, E.S., Shen, X., Scheinost, D., Rosenberg, M.D., Huang, J., Chun, M.M., Papademetris, X., Constable, R.T.: Functional connectome fingerprinting: identifying individuals using patterns of brain connectivity. Nature Neurosci. 18(11), 1664–1671 (2015)

Fukumizu, K., Gretton, A., Sun, X., Schölkopf, B.: Kernel measures of conditional dependence. Adv. Neural Inf. Process. Syst. 20 (2007)

Geweke, J.: Inference and causality in economic time series models. Handb. Econ. 2, 1101–1144 (1984)

Glymour, C., Zhang, K., Spirtes, P.: Review of causal discovery methods based on graphical models. Front. Genet. 10, 524 (2019)

Hassabis, D., Kumaran, D., Summerfield, C., Botvinick, M.: Neuroscience-inspired artificial intelligence. Neuron 95(2), 245–258 (2017)

Hauser, A., Bühlmann, P.: Characterization and greedy learning of interventional Markov equivalence classes of directed acyclic graphs. J. Mach. Learn. Res. 13(1), 2409–2464 (2012)

Hilden, J., Glasziou, P.: Regret graphs, diagnostic uncertainty and Youden’s index. Stat. Med. 15(10), 969–986 (1996)

Hotelling, H.: New light on the correlation coefficient and its transforms. J. R. Stat. Soc. Ser. B (Methodol.) 15(2), 193–232 (1953)

Jangyodsuk, P., Seo, D.-J., Gao, J.: Causal graph discovery for hydrological time series knowledge discovery (2014)

Kalisch, M., Bühlmann, P.: Estimating high-dimensional directed acyclic graphs with the PC-algorithm. J. Mach. Learn. Res. 8(3) (2007)

Kolmogorov, A.N., Rozanov, Y.A.: On strong mixing conditions for stationary Gaussian processes. Theory Probab. Appl. 5(2), 204–208 (1960)

Lauritzen, S.L.: Graphical models, vol. 17. Clarendon Press, Oxford (1996)

Masry, E.: The estimation of the correlation coefficient of bivariate data under dependence: convergence analysis. Stat. Probab. Lett. 81(8), 1039–1045 (2011)

Meek, C.: Causal inference and causal explanation with background knowledge. In: Uncertainty in Artificial Intelligence 11 (1995)

Meek, C.: Strong-completeness and faithfulness in belief networks. Technical report, Carnegie Mellon University (1995)

Miersch, P., Jiang, S., Rakovec, O., Zscheischler, J.: Identifying drivers of river floods using causal inference. Technical report, Copernicus Meetings (2023)

Molina, J.-L., Zazo, S.: Causal reasoning for the analysis of rivers runoff temporal behavior. Water Resour. Manage 31, 4669–4681 (2017)

Moraffah, R., Sheth, P., Karami, M., Bhattacharya, A., Wang, Q., Tahir, A., Raglin, A., Liu, H.: Causal inference for time series analysis: Problems, methods and evaluation. Knowl. Inf. Syst. 1–45 (2021)

Muirhead, R.J.: Aspects of Multivariate Statistical Theory. John Wiley & Sons, New Jersey (2009)

Pearl, J.: Causality. Cambridge University Press, Cambridge (2009)

Peters, J., Janzing, D., Schölkopf, B.: Causal inference on time series using restricted structural equation models. Adv. Neural Inf. Process. Syst. 26 (2013)

Pham, T.D., Tran, L.T.: Some mixing properties of time series models. Stoch. Process. Appl. 19(2), 297–303 (1985)

Politis, D.N., Romano, J.P.: The stationary bootstrap. J. Am. Stat. Assoc. 89(428), 1303–1313 (1994)

Razak, F.A., Jensen, H.J.: Quantifying ‘causality’ in complex systems: understanding transfer entropy. PLoS One 9(6), e99462 (2014)

Reid, A.T., Headley, D.B., Mill, R.D., Sanchez-Romero, R., Uddin, L.Q., Marinazzo, D., Lurie, D.J., Valdés-Sosa, P.A., Hanson, S.J., Biswal, B.B., et al.: Advancing functional connectivity research from association to causation. Nature Neurosci. 22(11), 1751–1760 (2019)

Robins, J.M., Scheines, R., Spirtes, P., Wasserman, L.: Uniform consistency in causal inference. Biometrika 90(3), 491–515 (2003)

Rokem, A., Trumpis, M., Perez, F.: Nitime: time-series analysis for neuroimaging data. In: Proceedings of the 8th Python in Science Conference, pp. 68–75 (2009)

Runge, J., Nowack, P., Kretschmer, M., Flaxman, S., Sejdinovic, D.: Detecting and quantifying causal associations in large nonlinear time series datasets. Sci. Adv. 5(11), eaau4996 (2019)

Runge, J., Bathiany, S., Bollt, E., Camps-Valls, G., Coumou, D., Deyle, E., Glymour, C., Kretschmer, M., Mahecha, M.D., Muñoz-Marí, J., et al.: Inferring causation from time series in earth system sciences. Nature Commun. 10(1), 1–13 (2019)

Schmidt, C., Pester, B., Schmid-Hertel, N., Witte, H., Wismüller, A., Leistritz, L.: A multivariate Granger causality concept towards full brain functional connectivity. PLoS ONE 11(4), e0153105 (2016)

Shojaie, A., Fox, E.B.: Granger causality: a review and recent advances. Annu. Rev. Stat. Appl. 9, 289–319 (2022)

Šimundić, A.-M.: Measures of diagnostic accuracy: basic definitions. EJIFCC 19(4), 203 (2009)

Smith, S.M., Miller, K.L., Salimi-Khorshidi, G., Webster, M., Beckmann, C.F., Nichols, T.E., Ramsey, J.D., Woolrich, M.W.: Network modelling methods for FMRI. Neuroimage 54(2), 875–891 (2011)

Spirtes, P., Meek, C., Richardson, T.: An algorithm for causal inference in the presence of latent variables and selection bias. Comput. Causation Discov. 21, 1–252 (1999)

Spirtes, P., Glymour, C.N., Scheines, R., Heckerman, D.: Causation, Prediction, and Search. MIT Press, Cambridge (2000)

Tsamardinos, I., Brown, L.E., Aliferis, C.F.: The max-min hill-climbing Bayesian network structure learning algorithm. Mach. Learn. 65(1), 31–78 (2006)

Valdes-Sosa, P.A., Roebroeck, A., Daunizeau, J., Friston, K.: Effective connectivity: influence, causality and biophysical modeling. Neuroimage 58(2), 339–361 (2011)

Verma, T.S., Pearl, J.: Equivalence and synthesis of causal models. In: Probabilistic and Causal Inference: The Works of Judea Pearl, pp. 221–236. Morgan and Claypool Publishers (2022)

Weichwald, S., Jakobsen, M.E., Mogensen, P.B., Petersen, L., Thams, N., Varando, G.: Causal structure learning from time series: Large regression coefficients may predict causal links better in practice than small p-values. In: Escalante, H.J., Hadsell, R. (eds), Proceedings of the NeurIPS 2019 Competition and Demonstration Track, Proceedings of Machine Learning Research, vol. 123, pp. 27–36. PMLR (2020)

Acknowledgements

SM was supported by the National University of Singapore Start-Up Grant R-155-000-233-133, 2021. The authors thank Dr. Eli Shlizerman for his helpful advice. RB would like to thank Dr. Surya Narayana Sripada for valuable insights and guidance in this work.

Author information

Contributions

RB conceived of the presented idea. RB and SM developed the theory. RB performed the computations and programming. RB and SM verified the analytical methods. All authors discussed the results and contributed to the final manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Facts about the VARMA process (8)

Lemma A.1

Suppose that the eigenvalues of F in (8) are of modulus strictly less than 1. Then \(\{X_{ti},X_{tj},t\ge 1\}\) is strongly mixing at an exponential rate for all i, j, that is, \(\xi _{ij}(t)=O(\exp (-a_{ij}t))\) for some \(a_{ij}>0\). Furthermore, the conditions \(\int \Vert x\Vert g(x) dx < \infty \) and \(\int \vert g(x+\theta ) - g(x) \vert dx = O(\Vert \theta \Vert )\) hold for the Gaussian density g.

Proof

It follows from Theorem 3.1 of Pham and Tran (1985) that \(\Vert \Delta _t\Vert _1\rightarrow 0\) at an exponential rate, where \(\Vert \Delta _t \Vert _1\) is the Gastwirth and Rubin mixing coefficient of \(\varvec{X}_t,t\ge 1\). Furthermore, \(\alpha _{ij}(t)\le 4\Vert \Delta _t \Vert _1\) (see Pham and Tran 1985), where \(\alpha _{ij}(t)\) are the strong mixing coefficients of \(\varvec{X}_t,t\ge 1\). Hence, \(\alpha _{ij}(t)\rightarrow 0\) at an exponential rate as \(t\rightarrow \infty \). Since the process is Gaussian, the maximal correlation coefficients satisfy \(\xi _{ij}(t)\le 2\pi \alpha _{ij}(t)\) (Kolmogorov and Rozanov 1960), so \(\xi _{ij}(t)\) also decays at an exponential rate.

Now, \(\int \Vert x\Vert g(x) dx < \infty \) holds trivially for the Gaussian density g. Therefore, it only remains to show that \(\int \vert g(x) - g(x-\theta ) \vert dx = O(\Vert \theta \Vert )\) for the Gaussian density. Towards this, note that:

where \(a \lesssim b\) denotes that \(a \le C b\) for some universal constant \(C>0\). \(\square \)
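For completeness, one standard way to obtain the translation bound is sketched below. It assumes (this is not spelled out above) that g is the density of \(N(\mu ,\Sigma )\) on \(\mathbb {R}^d\) with \(\Sigma \) nonsingular, so that \(\nabla g(y)=-\Sigma ^{-1}(y-\mu )\,g(y)\). The steps are the fundamental theorem of calculus along the segment from \(x-\theta \) to x, Tonelli's theorem, and the substitution \(y=x-s\theta \):

$$\begin{aligned} \int \vert g(x)-g(x-\theta )\vert \, dx&= \int \Big \vert \int _0^1 \nabla g(x-s\theta )^{\top }\theta \, ds\Big \vert \, dx \le \int _0^1 \int \vert \nabla g(x-s\theta )^{\top }\theta \vert \, dx\, ds\\ &= \int \vert \nabla g(y)^{\top }\theta \vert \, dy \le \Vert \theta \Vert \, \Vert \Sigma ^{-1}\Vert \int \Vert y-\mu \Vert \, g(y)\, dy = C_\Sigma \Vert \theta \Vert , \end{aligned}$$

where \(C_\Sigma <\infty \) depends only on \(\Sigma \) because a Gaussian distribution has a finite first moment. This gives \(\int \vert g(x)-g(x-\theta )\vert dx = O(\Vert \theta \Vert )\).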

Lemma A.2

If \(\varvec{X}_t\) satisfies (A.1)-(A.3) (or (A.1)* - (A.3)*), then so does \(\varvec{\chi }_t\).

Proof

We only address the case when \(\varvec{X}_t\) satisfies (A.1)-(A.3), as the case when \(\varvec{X}_t\) satisfies (A.1)*-(A.3)* is proved in exactly the same way. For \(u\in \{i,j\}\), let \(q_u\) and \(r_u\) denote, respectively, the quotient and remainder on dividing u by p, with the convention that \(r_u\) is redefined to be p if \(r_u=0\). Note that \(\chi _{tu} = X_{(t-1)r+q_u +{\varvec{1}}(r_u\ne p)~,~r_u}.\) Hence, if we define \({\mathcal {G}}_a^b\) as the \(\sigma \)-field generated by the random variables \(\{\chi _{si},\chi _{sj}:a\le s\le b\}\), then, assuming \(i\le j\) without loss of generality, we have:

where \({\mathcal {F}}_{a}^b(u,v)\) denotes the \(\sigma \)-field generated by the random variables \(\{X_{tu},X_{tv}: a\le t\le b\}.\) Hence, denoting \(\tilde{\xi }_{ij}(k)\) to be the maximal correlation coefficients for the process \(\{\chi _{ti},\chi _{tj}: t=1,2,\ldots \}\), we have:

for all \(k > (q_j-q_i+1)/r\). Assumptions (A.1) and (A.2) for the process \(\{\chi _t\}\) now follow immediately. For (A.3), note that if \(n^{1/2}\xi _{r_i,r_j}(s_n) \rightarrow 0\) for some \(s_n=o(n^{1/2})\), then \(t_n:= r^{-1}(s_n+(q_j-q_i)+1)\) satisfies \(t_n = o(n^{1/2})\), and \(n^{1/2}\tilde{\xi }_{ij}(t_n) \rightarrow 0\), thereby verifying assumption (A.3) for the process \(\{\chi _t\}\). \(\square \)
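The index bookkeeping in this proof can be checked numerically. The sketch below uses hypothetical helper names (`stack_blocks`, `source_index`, `lag_gap`) and assumes, as the formula for \(\chi _{tu}\) indicates, that \(\varvec{\chi }_t\) concatenates the r consecutive observations \(\varvec{X}_{(t-1)r+1},\ldots ,\varvec{X}_{tr}\) (1-based indices). It confirms the formula for \(\chi _{tu}\), and that the time gap in \(\varvec{X}\) between \(\{\chi _{si},\chi _{sj}: s\le a\}\) and \(\{\chi _{si},\chi _{sj}: s\ge a+k\}\) is at least \(kr-(q_j-q_i)-1\), which is positive exactly when \(k>(q_j-q_i+1)/r\). Since maximal correlation is non-increasing in the lag, this yields \(\tilde{\xi }_{ij}(k)\le \xi _{r_i,r_j}(kr-(q_j-q_i)-1)\), and with \(k=t_n\) one gets \(\tilde{\xi }_{ij}(t_n)\le \xi _{r_i,r_j}(s_n)\).

```python
import numpy as np


def stack_blocks(X, r):
    """Row t (1-based) of the result concatenates X_{(t-1)r+1}, ..., X_{tr}.

    X has shape (T, p); an incomplete trailing block is dropped.
    """
    T, p = X.shape
    n = T // r
    return X[: n * r].reshape(n, r * p)


def source_index(t, u, r, p):
    """1-based (time, variable) of X that chi_{tu} copies.

    q_u, r_u are the quotient and remainder of u / p, with r_u redefined
    to be p when the remainder is 0, as in the proof of Lemma A.2.
    """
    q_u, r_u = divmod(u, p)
    if r_u == 0:
        r_u = p
    return (t - 1) * r + q_u + (1 if r_u != p else 0), r_u


def lag_gap(i, j, k, r, p, a=1):
    """Gap in X-time between {chi_si, chi_sj : s <= a} and {chi_si, chi_sj : s >= a + k}."""
    last_past = max(source_index(a, u, r, p)[0] for u in (i, j))
    first_future = min(source_index(a + k, u, r, p)[0] for u in (i, j))
    return first_future - last_past


# Check the index formula and the lag bound for p = 3 variables, blocks of r = 2.
p, r = 3, 2
X = np.random.default_rng(0).normal(size=(20, p))
chi = stack_blocks(X, r)
for t in range(1, chi.shape[0] + 1):
    for u in range(1, r * p + 1):
        time, var = source_index(t, u, r, p)
        assert chi[t - 1, u - 1] == X[time - 1, var - 1]
for i in range(1, r * p + 1):
    for j in range(i, r * p + 1):
        q_i, q_j = i // p, j // p
        for k in range(1, 6):
            if k * r > q_j - q_i + 1:
                assert lag_gap(i, j, k, r, p) >= k * r - (q_j - q_i) - 1 >= 1
```

The gap can exceed the bound because the proof replaces the block positions of \(\chi _{ti}\) and \(\chi _{tj}\) by the cruder quantities \(q_i\) and \(q_j+1\).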

Appendix B: Simulation study details

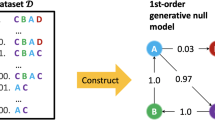

We study the following simulation paradigms.

1. Linear Gaussian Vector Auto-Regressive (VAR) Model (Fig. 2a, left column). Let \(N(0,\eta ^2)\) denote a normal random variable with mean 0 and standard deviation \(\eta \). We define \(X_{tv}\) as a linear Gaussian VAR for \(v=1,\ldots ,4\) and \(t=1,2,\ldots ,1000\), whose true CFC has the edges \(1\rightarrow 3,2\rightarrow 3, 3\rightarrow 4\). Let \(X_{0v}\sim N(0,\eta ^2)\) for \(v=1,\ldots ,4\) and, for \(t\ge 1\),

$$\begin{aligned} \begin{array}{ll} X_{t1}=1+\epsilon _{t1},& X_{t2}=-1+\epsilon _{t2},\\ X_{t3}=2X_{(t-1)1}+X_{(t-1)2}+\epsilon _{t3},\qquad \qquad & X_{t4}=2X_{(t-1)3}+\epsilon _{t4}, \end{array} \end{aligned}$$

where \(\epsilon _{tv}\sim N(0,\eta ^2)\). It follows that the Rolled Markov Graph with respect to \(\varvec{\chi }_t\) has the edges \(1\rightarrow 3, 2\rightarrow 3, 3\rightarrow 4\). We simulate 25 realizations of the entire time series for each noise level \(\eta \in \{0.1,0.5,1,1.5,2,2.5,3,3.5\}\).

2. Non-linear Non-Gaussian VAR Model (Fig. 2a, second column from the left). Let \(U(0,\eta )\) denote a uniformly distributed random variable on the interval \((0,\eta )\). We define \(X_{tv}\) as a non-linear non-Gaussian VAR for \(v=1,\ldots ,4\) and \(t=1,2,\ldots ,1000\), whose true CFC has the edges \(1\rightarrow 3, 2\rightarrow 3, 3\rightarrow 4\). Let \(X_{0v}\sim U(0,\eta )\) for \(v=1,\ldots ,4\) and, for \(t\ge 1\),

$$\begin{aligned} \begin{array}{ll} X_{t1}\sim U(0,\eta ),& \quad X_{t2}\sim U(0,\eta ),\\ X_{t3}=4\sin (X_{(t-1)1})+3\cos (X_{(t-1)2})+U(0,\eta ),& \quad X_{t4}=2\sin (X_{(t-1)3})+U(0,\eta ). \end{array} \end{aligned}$$

The Rolled Markov Graph with respect to \(\varvec{\chi }_t\) has the edges \(1\rightarrow 3, 2\rightarrow 3, 3\rightarrow 4\). We simulate 25 realizations of the entire time series for each noise level \(\eta \in \{0.1,0.5,1,1.5,2,2.5,3,3.5\}\).

3. Contemporaneous Vector Auto-Regressive Moving Average (VARMA) Model (Fig. 2a, third column from the left). Let \(N(0,\eta ^2)\) denote a normal random variable with mean 0 and standard deviation \(\eta \). We define \(X_{tv}\) as a linear Gaussian VARMA process for \(v=1,\ldots ,4\) whose true CFC has the edges \(1\rightarrow 3,2\rightarrow 3, 3\rightarrow 4\). Let \(X_{0v}\sim N(0,\eta ^2)\) for \(v=1,\ldots ,4\) and, for \(t=1,2,\ldots ,1000\),

$$\begin{aligned}\varvec{X}_t&=\begin{pmatrix} 1\\ -1\\ 1\\ 2 \end{pmatrix}+ \begin{pmatrix} 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0\\ 2 & 1 & 0 & 0\\ 0 & 0 & 2 & 0 \end{pmatrix} \varvec{X}_{t-1} + \begin{pmatrix} 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0\\ 2 & 1 & 0 & 0 \end{pmatrix} \varvec{\epsilon }_{t-1}\\ &\quad + \begin{pmatrix} 1 & 0 & 0 & 0\\ 0 & 1 & 0 & 0\\ 2 & 1 & 1 & 0\\ 2 & 1 & 1 & 1 \end{pmatrix} \varvec{\epsilon }_{t}. \end{aligned}$$

It follows that the Rolled Markov Graph with respect to \(\varvec{\chi }_t\) has the edges \(1\rightarrow 3, 2\rightarrow 3, 3\rightarrow 4\). Furthermore, \(\varvec{X}_t\) is faithful with respect to the same graph. We simulate 25 realizations of the entire time series for each noise level \(\eta \in \{0.1,0.5,1,1.5,2,2.5,3,3.5\}\).

4. Continuous Time Recurrent Neural Network (CTRNN) (Fig. 2a, right column). We simulate neural dynamics with the Continuous Time Recurrent Neural Network model (10), which is typically used to simulate neurons as firing-rate units. Here i, j index the neurons and m is the total number of neurons; \(u_j(t)\) is the instantaneous firing rate of the post-synaptic neuron j at time t, \(w_{ij}\) is the linear coefficient (weight) of the pre-synaptic neuron i's input to the post-synaptic neuron j, \(\sigma \) is the activation function, \(I_j(t)\) is the input current to neuron j at time t, and \(\tau _j\) is the time constant of neuron j:

$$\tau _j \frac{du_j(t)}{dt}=-u_j (t) + \sum _{i=1}^m w_{ij} \sigma (u_i (t)) + I_j (t), \quad j=1,\ldots , m. \tag{10}$$

We consider a motif of 4 neurons with \(w_{13}=w_{23}=w_{34}=10\) and \(w_{ij}=0\) otherwise. Note that in Eq. (10) the activity \(u_j(t)\) of each neuron depends on its own past. Therefore, the Rolled Markov Graph with respect to \(\varvec{\chi }_t\) has the edges \(1\rightarrow 3,2\rightarrow 3,3\rightarrow 4, 1\rightarrow 1, 2\rightarrow 2, 3\rightarrow 3, 4\rightarrow 4\). The time constant \(\tau _i\) is set to 10 ms for each neuron i. We take the inputs \(I_i(t)\) to be independent Gaussian processes with mean 1 and standard deviation \(\eta \). The signals are sampled at intervals of \(e \approx 2.72\) ms over a total duration of 1000 ms. We simulate 25 realizations of the entire time series for each noise level \(\eta \in \{0.1,0.5,1,1.5,2,2.5,3,3.5\}\).
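The four generating models can be sketched in Python as follows. This is a minimal illustration, not the authors' simulation code, and the function names are hypothetical. It assumes NumPy; reads the non-linear model as \(X_{t3}=4\sin (X_{(t-1)1})+3\cos (X_{(t-1)2})+U(0,\eta )\); draws \(\varvec{\epsilon }_0\) like the other VARMA innovations; and, for the CTRNN, assumes a logistic activation \(\sigma \), a zero initial state, forward-Euler integration with a hypothetical step `dt = 0.1` ms, and a fresh Gaussian input draw at every step.

```python
import numpy as np


def simulate_linear_var(eta, T=1000, seed=0):
    """Model 1: linear Gaussian VAR with edges 1->3, 2->3, 3->4."""
    rng = np.random.default_rng(seed)
    X = np.empty((T + 1, 4))
    X[0] = rng.normal(0.0, eta, size=4)             # X_0v ~ N(0, eta^2)
    for t in range(1, T + 1):
        e = rng.normal(0.0, eta, size=4)
        X[t, 0] = 1.0 + e[0]
        X[t, 1] = -1.0 + e[1]
        X[t, 2] = 2.0 * X[t - 1, 0] + X[t - 1, 1] + e[2]
        X[t, 3] = 2.0 * X[t - 1, 2] + e[3]
    return X[1:]                                    # rows t = 1, ..., T


def simulate_nonlinear_var(eta, T=1000, seed=0):
    """Model 2: non-linear VAR with uniform U(0, eta) noise."""
    rng = np.random.default_rng(seed)
    X = np.empty((T + 1, 4))
    X[0] = rng.uniform(0.0, eta, size=4)
    for t in range(1, T + 1):
        U = rng.uniform(0.0, eta, size=4)
        X[t, 0], X[t, 1] = U[0], U[1]
        X[t, 2] = 4.0 * np.sin(X[t - 1, 0]) + 3.0 * np.cos(X[t - 1, 1]) + U[2]
        X[t, 3] = 2.0 * np.sin(X[t - 1, 2]) + U[3]
    return X[1:]


# Model 3: X_t = intercept + phi X_{t-1} + theta1 eps_{t-1} + theta0 eps_t
intercept = np.array([1.0, -1.0, 1.0, 2.0])
phi = np.array([[0, 0, 0, 0], [0, 0, 0, 0], [2, 1, 0, 0], [0, 0, 2, 0]], float)
theta1 = np.array([[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [2, 1, 0, 0]], float)
theta0 = np.array([[1, 0, 0, 0], [0, 1, 0, 0], [2, 1, 1, 0], [2, 1, 1, 1]], float)


def simulate_varma(eta, T=1000, seed=0):
    """Model 3: VARMA with contemporaneous effects entering through theta0."""
    rng = np.random.default_rng(seed)
    X = np.empty((T + 1, 4))
    X[0] = rng.normal(0.0, eta, size=4)
    eps_prev = rng.normal(0.0, eta, size=4)         # assumption: eps_0 ~ N(0, eta^2)
    for t in range(1, T + 1):
        eps = rng.normal(0.0, eta, size=4)
        X[t] = intercept + phi @ X[t - 1] + theta1 @ eps_prev + theta0 @ eps
        eps_prev = eps
    return X[1:]


def simulate_ctrnn(eta, duration=1000.0, dt=0.1, tau=10.0, seed=0):
    """Model 4: forward-Euler integration of Eq. (10), sampled every e ms."""
    rng = np.random.default_rng(seed)
    W = np.zeros((4, 4))
    W[0, 2] = W[1, 2] = W[2, 3] = 10.0              # w_13 = w_23 = w_34 = 10 (0-based)
    n_steps = int(round(duration / dt))
    u = np.zeros(4)                                 # assumption: u_j(0) = 0
    path = np.empty((n_steps + 1, 4))
    path[0] = u
    for s in range(1, n_steps + 1):
        I = rng.normal(1.0, eta, size=4)            # input current: mean 1, sd eta
        sigma = 1.0 / (1.0 + np.exp(-u))            # assumption: logistic activation
        u = u + dt * (-u + W.T @ sigma + I) / tau   # (W.T @ sigma)_j = sum_i w_ij sigma(u_i)
        path[s] = u
    sample_times = np.arange(0.0, duration, np.e)   # sampling gap of e ~ 2.72 ms
    return path[np.round(sample_times / dt).astype(int)]
```

The autoregressive matrix of the VARMA model is strictly lower triangular, so all its eigenvalues are 0 and the recursion is stable.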

The GC graph is computed using the Nitime Python library, which fits a multivariate autoregressive (MVAR) model and then uses its GrangerAnalyzer to compute Granger causality (Rokem et al. 2009). For PC, TPCS and TPCNS, the computations are done using the TimeAwarePC Python library (Biswas and Shlizerman 2022b). The TPCS and TPCNS algorithms estimate the Rolled Markov Graph from the signals with \(\tau =1\) and \(r=2\tau \).
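To make concrete what the significance level \(\alpha \) controls, here is a minimal sketch of the adjacency (skeleton) phase of the original PC algorithm with Gaussian Fisher-z partial-correlation tests, the kind of test studied by Kalisch and Bühlmann (2007). It is an illustration only, not the TimeAwarePC implementation: edge orientation, the time-aware construction of \(\varvec{\chi }_t\), and kernel-based tests are omitted, and `fisher_z_pvalue` and `pc_skeleton` are hypothetical names. The rows of `data` may be temporally dependent samples; the code itself does nothing special to account for the dependence.

```python
import itertools
import math

import numpy as np


def fisher_z_pvalue(corr, n, i, j, S):
    """Two-sided p-value for H0: partial correlation of (i, j) given S is zero."""
    idx = [i, j, *S]
    P = np.linalg.inv(corr[np.ix_(idx, idx)])      # precision of the sub-block
    rho = -P[0, 1] / math.sqrt(P[0, 0] * P[1, 1])  # sample partial correlation
    rho = min(max(rho, -0.999999), 0.999999)       # keep atanh finite
    stat = math.sqrt(n - len(S) - 3) * abs(math.atanh(rho))
    return math.erfc(stat / math.sqrt(2.0))        # equals 2 * (1 - Phi(stat))


def pc_skeleton(data, alpha):
    """Adjacency phase of the (order-dependent) PC algorithm.

    data: (n, d) array of samples. Returns undirected edges as frozensets
    and the separating sets of removed pairs.
    """
    n, d = data.shape
    corr = np.corrcoef(data, rowvar=False)
    adj = {v: set(range(d)) - {v} for v in range(d)}
    sepset = {}
    level = 0
    # Continue while some adjacent pair still has enough other neighbours.
    while any(len(adj[v]) - 1 >= level for v in range(d)):
        for i in range(d):
            for j in sorted(adj[i]):
                others = adj[i] - {j}
                if len(others) < level:
                    continue
                for S in itertools.combinations(sorted(others), level):
                    if fisher_z_pvalue(corr, n, i, j, S) > alpha:
                        adj[i].discard(j)               # independence accepted:
                        adj[j].discard(i)               # drop the edge
                        sepset[frozenset((i, j))] = set(S)
                        break
        level += 1
    edges = {frozenset((i, j)) for i in range(d) for j in adj[i]}
    return edges, sepset
```

A larger `alpha` rejects independence more often, so fewer edges are removed; this is the trade-off between detected edges and false positives described for \(\alpha = 0.01, 0.05, 0.1\).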

The choice of threshold determines whether a connection is included in the estimate. For PC, TPCS and TPCNS, increasing the significance level \(\alpha \) of the conditional dependence tests increases the rate at which true edges are detected, but also increases the rate of false positives. We consider \(\alpha = 0.01, 0.05, 0.1\) for PC, TPCS and TPCNS. For GC, a likelihood ratio statistic \(L_{uv}\) is computed for testing \(A_{uv}(k) = 0\) for \(k=1,\ldots ,K\), and an edge \(u\rightarrow v\) is output if \(L_{uv}\) exceeds a threshold. We use a percentile-based threshold: an edge \(u\rightarrow v\) is output if \(L_{uv}\) is greater than the \(100(1-\alpha )^{\text {th}}\) percentile of the \(L_{ij}\)’s over all pairs of neurons (i, j) in the graph (Schmidt et al. 2016). We consider \(\alpha = 0.01, 0.05, 0.1\), which correspond to percentile thresholds of \(99\%, 95\%, 90\%\). TPCNS is conducted with 50 subsamples, a window length of 50 ms, and a frequency cutoff for edges of \(40\%\).
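The two thresholding rules in this paragraph can be sketched as follows. This is an illustration under stated assumptions, not the Nitime or TimeAwarePC code, and both function names are hypothetical. `L` is any square array of Granger-causality statistics with `L[u, v]` scoring the edge u → v; the percentile is taken over off-diagonal entries only (our reading of "all pairs (i, j)"), using NumPy's default linear interpolation; and an edge is assumed to survive the TPCNS frequency cutoff if it appears in at least that fraction of the subsample estimates.

```python
import numpy as np


def percentile_threshold_edges(L, alpha):
    """Edge u -> v iff L[u, v] exceeds the 100(1 - alpha)th percentile of
    the off-diagonal statistics L[i, j], i != j."""
    L = np.asarray(L, dtype=float)
    off_diag = ~np.eye(L.shape[0], dtype=bool)
    cutoff = np.percentile(L[off_diag], 100.0 * (1.0 - alpha))
    return (L > cutoff) & off_diag


def frequency_cutoff_edges(estimates, cutoff=0.4):
    """Keep edges present in at least a fraction `cutoff` of the boolean
    adjacency matrices estimated on subsamples."""
    freq = np.mean(np.stack([np.asarray(E, dtype=float) for E in estimates]), axis=0)
    return freq >= cutoff


# Example: alpha = 0.01, 0.05, 0.1 give the 99%, 95%, 90% percentile thresholds.
L_example = np.random.default_rng(0).chisquare(df=2, size=(6, 6))
for alpha in (0.01, 0.05, 0.1):
    print(alpha, int(percentile_threshold_edges(L_example, alpha).sum()))
```

Because the threshold is a percentile of the observed statistics, it fixes roughly the fraction of pairs reported as edges rather than a nominal test level.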

Appendix C: Benchmark datasets

We use the River Runoff benchmark real dataset from CauseMe (Runge et al. 2019; Bussmann et al. 2021). It consists of time series of river runoff at different stations, recorded at a daily resolution and restricted to the summer months (June–August). The physical time delay of the interactions (as inferred from the river velocity) is roughly below one day, so at a daily resolution the dataset contains contemporaneous interactions. The dataset has 12 variables and 4600 recordings of each variable.

The PC algorithm was implemented with a p-value threshold of 0.1 for kernel-based non-linear conditional dependence tests. For the river-runoff data, the TPCS algorithm was implemented with \(\alpha = 0.05\), and the TPCNS algorithm was implemented with \(\tau =2\) and 4 recordings, respectively, as specified for the datasets, and \(\alpha = 0.05\) for the conditional dependence tests. TPCNS was conducted with 50 subsamples, a window length of 50 recordings, and a frequency cutoff for edges of 0.1.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Biswas, R., Mukherjee, S. Consistent causal inference from time series with PC algorithm and its time-aware extension. Stat Comput 34, 14 (2024). https://doi.org/10.1007/s11222-023-10330-3
