Abstract

Several joint models for longitudinal and survival data have been proposed in recent years. In particular, many authors have preferred to employ the Bayesian approach to model more complex structures, make dynamic predictions, or use model averaging. However, Markov chain Monte Carlo methods are computationally very demanding and may suffer convergence problems, especially for complex models with random effects, which is the case for most joint models. These issues can be overcome by estimating the parameters of each submodel separately, leading to a natural reduction in the complexity of the joint modelling, but often producing biased estimates. Hence, we propose a novel two-stage approach that uses the estimations from the longitudinal submodel to specify an informative prior distribution for the random effects when estimating them within the survival submodel. In addition, as a bias correction mechanism, we incorporate the longitudinal likelihood function in the second stage, where its fixed effects are set according to the estimation using only the longitudinal submodel. Based on simulation studies and real applications, we empirically compare our proposal with joint specification and standard two-stage approaches considering different types of longitudinal responses (continuous, count and binary) that share information with a Weibull proportional hazard model. The results show that our estimator is more accurate than its two-stage competitor and as good as jointly estimating all parameters. Moreover, the novel two-stage approach significantly reduces the computational time compared to the joint specification.

Similar content being viewed by others

1 Introduction

Joint modelling for longitudinal and survival data has become very popular medical applications (Ibrahim and Molenberghs 2009; Neuhaus et al. 2009; Wu et al. 2011). This class of models is a useful tool when it is necessary to study the association between repeated measurements and time until an event of interest (Papageorgiou et al. 2019). When considering both information together (simultaneously) into a single model, the estimates are less biased and there is an increase in statistical efficiency, since clinical hypotheses consider in advance that longitudinal and survival data are connected in some way (Muthén et al. 2009; Ibrahim et al. 2010).

The complexity of the joint modelling often entails two critical issues. The first one is related to identification problems due to the large number of parameters (Wu 2009; Gould et al. 2015; Papageorgiou et al. 2019). On the other hand, the calculation of marginal likelihood and survival functions include integrals without closed-form, so numerical integration methods are required, making the inferential process more time-consuming (Lesaffre and Spiessens 2001; Pinheiro and Chao 2006; Rizopoulos et al. 2009; Wu et al. 2010; Barrett et al. 2015).

Recently, Alvares and Rubio (Alvares and Rubio 2021) proposed a new specification for joint models of longitudinal and survival data that is flexible and does not require numerical integrations. The proposal divides the longitudinal linear predictor into variables that depend or not on time to share them with the survival submodel. This strategy leads to some limitations presented by the authors and which will not be discussed here. Another incipient and very promising proposal is the use of integrated nested Laplace approximation (INLA) for joint models (van Niekerk et al. 2020). However, this approach is not yet accessible to non-expert INLA users.

A more classic alternative is the two-stage approach (Self and Pawitan 1992; Tsiatis et al. 1995). In this case, the longitudinal submodel is fitted separately and then the shared parameters and random effects estimates are used into the survival submodel. Several authors have applied this strategy in order to circumvent the identifiability issues and reduce processing time (Murawska et al. 2012; Guler et al. 2017; Donnelly et al. 2018; Mauff et al. 2020). Nevertheless, the main disadvantage of this approach is that the survival model parameter estimates are often biased (Tsiatis and Davidian 2004; Rizopoulos 2012).

From the Bayesian perspective, some bias correction strategies have been proposed for the two-stage approach (Tsiatis et al. 1995; Wulfsohn and Tsiatis 1997; Viviani et al. 2014; Leiva-Yamaguchi and Alvares 2021). Still, Markov chain Monte Carlo (MCMC) methods are quite demanding and their use may be impractical (Ye et al. 2008; Andrinopoulou et al. 2018).

In this paper, we propose a novel two-stage approach that uses the estimations from the longitudinal submodel to specify an informative prior distribution for the random effects when estimating them within the survival submodel. In addition, as a bias correction mechanism, we incorporate longitudinal likelihood function in the second stage, where its fixed effects are set according to the estimation using only the longitudinal submodel.

The rest of the paper is organised as follows. Section 2 introduces a generic formulation for Bayesian joint models of longitudinal and survival data. Section 3 presents a standard two-stage approach to making the inference in joint modelling framework. Section 4 describes our two-stage proposal. Sections 5 and 6 compare the bias and computational time for the three inferential strategies presented using simulated and real data, respectively. Finally, Sect. 7 discusses the results obtained and potential extensions of our methodology.

2 Bayesian joint model formulation

Bayesian joint models for longitudinal and survival data usually assume a full joint probability distribution given by (Armero et al. 2018; Alvares et al. 2021):

where \({\varvec{y}}\) and \({\varvec{s}}\) represent the longitudinal and the survival process, respectively. Random effects are denoted by \({\varvec{b}}\) and have \({\varvec{\theta }}_{b}\) as their hyperparameters; \({\varvec{\theta }}_{y}\) and \({\varvec{\theta }}_{s}\) are the parameters and hyperparameters of each process. The factors on the right hand side of (1) are the conditional joint distribution of the processes \({\varvec{y}}\) and \({\varvec{s}}\) given \({\varvec{b}}\), \({\varvec{\theta }}_{y}\), and \({\varvec{\theta }}_{s}\), \(f({\varvec{y}}, {\varvec{s}} \mid {\varvec{b}}, {\varvec{\theta }}_{y}, {\varvec{\theta }}_{s})\); the conditional distribution of \({\varvec{b}}\) given \({\varvec{\theta }}_{b}\), \(f({\varvec{b}} \mid {\varvec{\theta }}_{b})\); and the (independent) prior distributions of \({\varvec{\theta }}_{y}\), \({\varvec{\theta }}_{s}\) and \({\varvec{\theta }}_{b}\), \(\pi ({\varvec{\theta }}_{y})\), \(\pi ({\varvec{\theta }}_{s})\) and \(\pi ({\varvec{\theta }}_{b})\), respectively.

Typically, the conditional joint distribution of \({\varvec{y}}\) and \({\varvec{s}}\) is factorised as (Wu and Carroll 1988):

where both processes are considered as conditionally independent given \({\varvec{b}}\), \({\varvec{\theta }}_{y}\), and \({\varvec{\theta }}_{s}\). Note that if the longitudinal parameters and hyperparameters, \({\varvec{\theta }}_{y}\), are not shared with the survival process, then \(f({\varvec{s}} \mid {\varvec{b}}, {\varvec{\theta }}_{y}, {\varvec{\theta }}_{s}) = f({\varvec{s}} \mid {\varvec{b}}, {\varvec{\theta }}_{s})\). In the next subsections, we will give more details about components from (1) and (2).

2.1 Longitudinal submodel

Typically, the terms \(f({\varvec{y}} \mid {\varvec{b}}, {\varvec{\theta }}_{y})\) in (2) and \(f({\varvec{b}} \mid {\varvec{\theta }}_{b})\) in (1) for an individual i at time t are expressed through a generalised linear mixed specification:

where EF represents an exponential family distribution with mean \(\mu _{i}(t \mid \cdot )\); \({\varvec{\theta }}_{y}\) contains the parameters and hyperparameters of \(\mu _{i}(t \mid \cdot )\); and \({\varvec{\theta }}_{b}={\varvec{\Sigma }}\). The mean trajectory function can be formulated in different ways, but the most common corresponds to the linear mixed model specification with \(\mu _{i}(t \mid \cdot ) = {\varvec{x}}_{i}^{\top }(t){\varvec{\beta }} + {\varvec{z}}_{i}^{\top }(t){\varvec{b}}_{i}\), where \({\varvec{x}}_{i}(t)\) and \({\varvec{z}}_{i}(t)\) are covariates of the individual i at time t, \({\varvec{\beta }}\) is the vector of fixed effects, and \({\varvec{b}}_{i}\) represents a K-dimensional vector of individual random effects (Pinheiro and Bates 2000).

2.2 Survival submodel

We will introduce some key elements of survival analysis in order to characterise the term \(f({\varvec{s}} \mid {\varvec{b}}, {\varvec{\theta }}_{y}, {\varvec{\theta }}_{s})\) in (2) using a hazard function. Let \(T_{i}^{*}\) denote the event time for individual i, \(C_{i}\) the censoring time, \(T_{i}=\min \{T_{i}^{*}, C_{i}\}\) the observed time, and \(\delta _{i}=\text {I}(T_{i}^{*}\le C_{i})\) the event indicator. The proportional hazards specification is commonly used to model these problems (Kumar and Klefsjö 1994) and at the same time incorporate the longitudinal information from submodel (3). So, the hazard function of the survival time \(T_{i}\) of individual i is expressed by:

where \(h_{0}(t \mid {\varvec{\theta }}_{s})\) represents an arbitrary baseline hazard function at time t, \({\varvec{x}}_{i}\) is a covariate vector with coefficients \({\varvec{\gamma }}\), and \(g_{i}(t \mid {\varvec{b}}_{i}, {\varvec{\theta }}_{y})\) is a temporal function of terms that comes from the longitudinal submodel and can be specified in different ways, such as \(\mu _{i}(t \mid \cdot )\), \(d\mu _{i}(t \mid \cdot )/dt\), and \(\int _{0}^{t}\mu _{i}(v \mid \cdot )dt\) (Rizopoulos 2012). The component \(g_{i}(t \mid \cdot )\) has the role of connecting both processes, while \(\alpha \) measures the strength of this association. Finally, \({\varvec{\theta }}_{s}\) includes the parameters of \(h_{0}(t \mid \cdot )\), \({\varvec{\gamma }}\) and \(\alpha \).

2.3 Prior distributions

We assume independent and diffuse marginal prior distributions (Gelman et al. 2013). More specifically, all longitudinal and survival regression coefficients (including the association parameter) follow Normal distributions with mean at zero and large variance; \(\sigma \) follows a weakly-informative half-Cauchy(0, 5) (Gelman 2006); and \({\varvec{\Sigma }}\) follows an inverse-Wishart(V, r), where V is a \(K\times K\) identity matrix, \(r=K\) is the degrees-of-freedom parameter (Schuurman et al. 2016). Once the baseline hazard function \(h_{0}(t \mid {\varvec{\theta }}_{s})\) is defined, diffuse priors are also specified for its parameters.

3 Standard two-stage (STS) approach

As a potential competitor, we use Tsiatis, De Gruttola and Wulfsohn (Tsiatis et al. 1995) approach adapted to the Bayesian framework (Leiva-Yamaguchi and Alvares 2021). Specifically, in the first stage, we calculate the maximum a posteriori (MAP) of the random effects \({\varvec{b}}\) and parameters \({\varvec{\theta }}_{y}\) estimated from longitudinal submodel fit separately. Posterior mean or median can also be used instead. In the second stage, we incorporate the temporal function into the survival submodel considering \(g_{i}(t \mid \hat{\varvec{b}}_{i}, \hat{\varvec{\theta }}_{y})\), where \(\hat{\varvec{b}}_{i}\) and \(\hat{\varvec{\theta }}_{y}\) are the MAP of \({\varvec{b}}_{i}\) and \({\varvec{\theta }}_{y}\), and then the posterior distribution of \({\varvec{\theta }}_{s}\) is calculated.

4 Novel two-stage (NTS) approach

Like all two-stage methods for joint models, the first stage is to fit the longitudinal submodel separately. So, we calculate the MAP of longitudinal and random effects parameters, which will be denoted by \(\hat{\varvec{\theta }}_{y}\) and \(\hat{\varvec{\theta }}_{b}\), respectively. Note that it is not necessary to estimate the random effects \({\varvec{b}}\) and therefore, we can run a Bayesian estimation algorithm using the marginalised likelihood function (integrating out the random effects) of the longitudinal submodel (3).

In the second stage, we estimate the random effects \({\varvec{b}}\) and parameters \({\varvec{\theta }}_{s}\) replacing the full joint probability distribution (1) with:

where the \(f(\cdot )\)’s and \(\pi (\cdot )\) have the same functional form as in (1) and (2), except that the parameters \({\varvec{\theta }}_{y}\) and \({\varvec{\theta }}_{b}\) are now assumed to be known and so it is not necessary to include their prior distributions in (5). Note that when using \({\varvec{\theta }}_{b}\) estimated from the longitudinal submodel (i.e., \(\hat{\varvec{\theta }}_{b}\)), random effects \({\varvec{b}}\) become fixed effects, further reducing the complexity of (5) with respect to the full joint probability distribution (1).

Essentially, our proposal simplifies/approximates the likelihood function from the joint approach by assuming that the shared parameters can be satisfactorily estimated from their MAP using the longitudinal submodel separately. In particular, as \(n_{i}\) grows, the longitudinal submodel \(f({\varvec{y}} \mid {\varvec{b}}, {\varvec{\theta }}_{y})\) becomes the dominating part in the conditional joint likelihood function (see Eq. (2)), implying that the survival submodel is of minimal importance to obtain the MAP of \({\varvec{\theta }}_{y}\). Then, the MAP of \({\varvec{\theta }}_{s}\) is estimated considering \({\varvec{\theta }}_{y}\) as a nuisance parameter (Murphy and van der Vaart 2000) and assuming that the contribution from random effects and priors in the posterior distribution is similar using either JS or NTS. See Appendix 1 for technical details.

5 Simulation studies

Simulation studies were performed to empirically corroborate that our methodological proposal (see Sect. 4) presents improvements to the dual problem “biased estimation versus high computational time” when compared to the joint specification (JS, see Sect. 2) and standard two-stage (STS, see Sect. 3) approaches. Algorithm 1 summarises a simulation scheme for joint models of longitudinal and survival data (Rizopoulos 2022; Austin 2012).

In this algorithm, \(x_{i}\) denotes a binary covariate, \({\varvec{b}}_{i}\) the vector of random effects, \(T_{i}^{*}\) the event time (not always observed), \(C_{i}\) the censoring time, \(T_{i}\) the observed time, and \(\delta _{i}\) the event indicator. Additionally, n, \(\Delta \), and \(t_{\max }\) represent number of individuals, interval between longitudinal observations, and maximum observational time, respectively.

We analysed the joint formulation based on submodels (3) and (4) considering longitudinal continuous, count and binary responses, where the mean of the longitudinal submodel, \(\mu _{i}(t \mid \cdot )\), was shared with the survival submodel.

5.1 Longitudinal continuous response

The longitudinal continuous process for an individual i at time t is written as a linear mixed-effects model:

where \({\varvec{\theta }}_{y} = (\beta _{0},\beta _{1},\beta _{2}, \sigma ^{2})^{\top }\) and \({\varvec{\theta }}_{b} = {\varvec{\Sigma }}\). The covariate \(x_{i}\) is a binary group indicator. The hazard function of the survival time \(T_{i}\) of individual i is specified as follows:

where \(h_{0}(t \mid {\varvec{\theta }}_{s})\) is a Weibull baseline hazard with \(\phi \) and \(\exp (\gamma _{0})\) being shape and scale parameters, respectively, and \({\varvec{\theta }}_{s} = (\phi ,\gamma _{0},\gamma _{1},\alpha )^{\top }\).

5.2 Longitudinal count response

The longitudinal count process for an individual i at time t is written as a Poisson mixed-effects model:

where \({\varvec{\theta }}_{y} = (\beta _{0},\beta _{1},\beta _{2})^{\top }\), random effects are defined as in (6), and the survival submodel is specified as in (7).

5.3 Longitudinal binary response

Similar to the previous case, the longitudinal binary process for an individual i at time t is also written as a Bernoulli mixed-effects model:

where \({\varvec{\theta }}_{y} = (\beta _{0},\beta _{1},\beta _{2})^{\top }\), random effects are defined as in (6), and the survival submodel is specified as in (7).

5.4 Simulation settings

For each scenario (Normal, Poisson, and Bernoulli), we simulated 300 datasets with \(n=500\), \(\Delta =1\), and \(t_{\max }=20\). Table 1 presents the specified parameter values for each scenario.

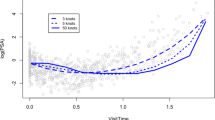

Figure 1 shows the proportion of the number of longitudinal observations per individual from the simulated data using the parameter values from Table 1. The resulting censoring rates varied between 26% and 40% (Normal), 22% and 35% (Poisson), and 16% and 27% (Bernoulli).

5.5 Results

The longitudinal submodels for continuous, count and binary responses were set as in (6), (8) and (9), respectively, and the survival submodels as in (7). Prior distributions were specified as in Sect. 2.3. The MCMC configuration was defined as follows: 2000 iterations with warm-up of 1000 for the joint model using the joint specification (JS) and for the longitudinal submodel from the novel two-stage (NTS) and standard two-stage (STS) approaches. Additionally, 1000 iterations with warm-up of 500 were set to run the survival submodel from both two-stage approaches. All models were implemented using the rstan R-package version 2.21.7 (Stan Development Team 2022). Simulations were performed on a Dell laptop with 2.2 GHz Intel Core i7, 32 GB RAM, OS Windows.

Table 2 displays the running times of each estimation approach.

Unsurprisingly, STS had the lowest computational time, while the NTS required less processing time than the JS approach.

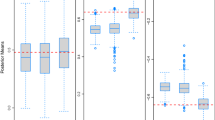

From an inferential perspective, we analysed the posterior mean for the group (\(\gamma _{1}\)) and association (\(\alpha \)) parameters in order to study the (potential) bias produced by two-stage approaches by ignoring the joint nature between the longitudinal and survival processes. Figure 2 shows the bias (posterior mean - true parameter value) and standard deviation for the group parameter using the JS, NTS and STS estimation approaches in each simulated scenario.

The group parameter was robustly estimated by the three approaches in all scenarios. However, as expected, the standard deviation from the STS estimators is smaller than the competitors. This happens because sharing the MAP of random effects without correction causes losses in the variability of such components, leading to an artificial and wrong reduction in the uncertainty of survival parameter estimators. Furthermore, it is important to note that NTS and JS produced nearly equivalent standard deviations. Figure 3 shows the bias and standard deviation for the association parameter using the JS, NTS and STS estimation approaches in each simulated scenario.

The association parameter was unbiased using JS and NTS, while the STS approach underestimated the positive associations (\(\alpha =2\) for Normal and \(\alpha =3\) for Bernoulli) and overestimated the negative one (\(\alpha =-2\) for Poisson). Also, our proposal presented standard deviations for the association parameter with insignificant differences in relation to those from the JS estimator, while STS again reduced such standard deviations.

The reduction of complexity of the inferential process by using two-stage strategies was quite clear when analysing computational times. However, only our proposal corrected the estimation bias without affecting the estimator variance, producing results equivalent to the JS approach.

Appendix 1 shows complementary results using the same scenarios as in Table 1, but increasing the number of repeated measurements per individual (\(30 \le n_{i} \le 50\)). Once again, our two-stage strategy corrected the estimation bias as well as kept the standard deviation of the estimator equivalent to that from the JS approach.

6 Applications

We used three real datasets, which are available in the JM R-package version 1.5-2 (Rizopoulos 2022), in order to illustrate the performance of the novel two-stage (NTS, see Sect. 4) against the joint specification (JS, see Sect. 2) and the standard two-stage (STS, see Sect. 3) approach. A brief description of each dataset is presented below:

-

Acquired immunodeficiency syndrome (aids): This dataset includes information about 467 patients with advanced human immunodeficiency virus infection during antiretroviral treatment who had failed or were intolerant to zidovudine therapy (Goldman et al. 1996). The longitudinal variable “CD4” was transformed by applying the square root; and “drug” was set as a group variable (zalcitabine \(=0\) and didanosine \(=1\)).

-

Liver cirrhosis (prothro): This dataset includes 488 patients with histologically verified liver cirrhosis, with 237 patients randomised to treatment with prednisone and the remaining received placebo (Andersen et al. 1993). The longitudinal variable “pro” was divided by 10 and truncated to the nearest integer; and “treat” was set as a group variable (placebo \(=0\) and prednisone \(=1\)).

-

Primary biliary cirrhosis (pbc): This dataset includes 312 patients with primary biliary cirrhosis, a rare autoimmune liver disease, at Mayo Clinic, where 158 patients were randomised to D-penicillamine and 154 to placebo (Murtaugh et al. 1994). The longitudinal variable “serBilir” was dichotomised into \(\le 2\) (0, control group) or \(>2\) (1, late stage of PBC); and “drug” was set as a group variable (placebo \(=0\) and D-penicil \(=1\)).

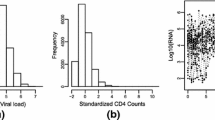

Figure 4 shows the frequency of the number of longitudinal observations per individual from the three datasets. Censoring rates are 59.74% (aids), 40.16% (prothro), and 55.13% (pbc).

Based on the nature of the longitudinal response type of each dataset, the joint models are specified as follows: (6) and (7) for aids dataset; (8) and (7) for prothro dataset; and (9) and (7) for pbc dataset. Prior distributions and MCMC configuration were defined as in Sect. 5.5. We provide the code for reproducibility at www.github.com/daniloalvares/BJM-NTS2.

Table 3 shows the posterior summary of the group (\(\gamma _{1}\)) and association (\(\alpha \)) parameters estimated from JS, NTS and STS approaches for each dataset as well as the average computational time.

In the three applications, the group parameters (\(\gamma _{1}\)) are quite equivalent, except for small differences in the posterior mean using the STS approach, but their 95% credible intervals are similar to those of the other approaches. On the other hand, the biased estimation using the STS approach is more notorious for the association parameter (\(\alpha \)). When using the Normal submodel (aids dataset), JS and NTS are almost identical, while STS has a slightly higher point estimate and a narrower 95% credible interval. Equivalence between JS and NTS is maintained for the joint models for count (prothro) and binary (pbc) data, and in both cases the estimation bias using STS is more prominent, in which the credible intervals do not include the posterior mean of \(\alpha \) calculated by the JS approach (reference estimation). Again, STS remains the fastest option, while NTS has reasonable average times and significantly lower than those of the JS approach.

7 Discussion

We have proposed a novel two-stage (NTS) approach that can be used in a Bayesian joint analysis of longitudinal and survival data. We have simplified the complex nature of the joint specification (JS) by using a two-stage strategy that at the same time corrects the well-known bias from a standard two-stage (STS) approach.

NTS was shown to be successful, producing satisfactory results both in simulated scenarios and in real applications considering different types of longitudinal responses (continuous, count and binary) that share information with a Weibull proportional hazard model.

In practical terms, the group parameter (\(\gamma _{1}\)) has not been significantly affected by the use of two-stage approaches. However, we have once again observed that the association parameter (\(\alpha \)) is biased when the STS is used. This issue is mitigated by replacing STS with NTS. Furthermore, we highlight that the strength of the association does not affect the NTS estimation, even with few longitudinal observations per individual. In particular, the strong association presented in the simulation studies and also observed in the pbc dataset modelling did not produce bias, as typically happens with regression calibration approaches (Nevo et al. 2020). Moreover, our proposal had a much lower computational time than the JS approach in all scenarios.

Theoretically, the correction of shared information should be enough so that all other characteristics of the survival model (baseline hazard and coefficients of baseline variables) do not suffer estimation bias compared to the JS. However, in this paper, we have not investigated different baseline hazard specifications to corroborate such behaviour, but we hope to do so in future works.

Another advantage of the proposed two-stage approach is that it can be easily generalised to more complex longitudinal (e.g., skewed or multiple longitudinal data) and survival (e.g., competing-risks or multistate data) submodels.

References

Alvares, D., Rubio, F.J.: A tractable Bayesian joint model for longitudinal and survival data. Stat. Med. 40(19), 4213–4229 (2021)

Alvares, D., Armero, C., Forte, A., Chopin, N.: Sequential Monte Carlo methods in Bayesian joint models for longitudinal and time-to-event data. Stat. Model. 21(1–2), 161–181 (2021)

Andersen, P.K., Borgan, O., Gill, R.D., Keiding, N.: Statistical models based on counting processes, 1st edn. Springer, New York (1993)

Andrinopoulou, E.R., Eilers, P.H.C., Takkenberg, J., Rizopoulos, D.: Improved dynamic predictions from joint models of longitudinal and survival data with time-varying effects using P-splines. Biometrics 74(2), 685–693 (2018)

Armero, C., Forte, A., Perpiñán, H., Sanahuja, M.J., Agustí, S.: Bayesian joint modeling for assessing the progression of chronic kidney disease in children. Stat. Methods Med. Res. 27(1), 298–311 (2018)

Austin, P.C.: Generating survival times to simulate Cox proportional hazards models with time-varying covariates. Stat. Med. 31(29), 3946–3958 (2012)

Barrett, J., Diggle, P., Henderson, R., Taylor-Robinson, D.: Joint modelling of repeated measurements and time-to-event outcomes: flexible model specification and exact likelihood inference. J. Roy. Stat. Soc.: Ser. B (Methodol.) 77(1), 131–148 (2015)

Donnelly, C., McFetridge, L.M., Marshall, A.H., Mitchell, H.J.: A two-stage approach to the joint analysis of longitudinal and survival data utilising the Coxian phase-type distribution. Stat. Methods Med. Res. 27(12), 3577–3594 (2018)

Gelman, A.: Prior distributions for variance parameters in hierarchical models. Bayesian Anal. 1(3), 515–534 (2006)

Gelman, A., Carlin, J.B., Stern, H.S., Dunson, D.B., Vehtari, A., Rubin, D.B.: Bayesian data analysis, 3rd edn. Chapman & Hall, New York (2013)

Goldman, A.I., Carlin, B., Crane, L.R., Launer, C., Korvick, J.A., Deyton, L., Abrams, D.I.: Response of CD4 lymphocytes and clinical consequences of treatment using ddI or ddC in patients with advanced HIV infection. J. Acquir. Immune Defic. Syndr. Hum. Retrovirol. 11(2), 161–169 (1996)

Gould, A.L., Boye, M.E., Crowther, M.J., Ibrahim, J.G., Quartey, G., Micallef, S., Bois, F.Y.: Joint modeling of survival and longitudinal non-survival data: current methods and issues. Report of the DIA Bayesian joint modeling working group. Stat. Med. 34(14), 2181–2195 (2015)

Guler, I., Faes, C., Cadarso-Suárez, C., Teixeira, L., Rodrigues, A., Mendonça, D.: Two-stage model for multivariate longitudinal and survival data with application to nephrology research. Biom. J. 59(6), 1204–1220 (2017)

Ibrahim, J.G., Molenberghs, G.: Missing data methods in longitudinal studies: a review. TEST 18(1), 1–43 (2009)

Ibrahim, J.G., Chu, H., Chen, L.M.: Basic concepts and methods for joint models of longitudinal and survival data. J. Clin. Oncol. 28(16), 2796–2801 (2010)

Kumar, D., Klefsjö, B.: Proportional hazards model: a review. Reliab. Eng. Syst. Safety 44, 177–188 (1994)

Leiva-Yamaguchi, V., Alvares, D.: A two-stage approach for Bayesian joint models of longitudinal and survival data: correcting bias with informative prior. Entropy 23, 1–10 (2021)

Lesaffre, E., Spiessens, B.: On the effect of the number of quadrature points in a logistic random effects model: an example. J. Roy. Stat. Soc.: Ser. C (Appl. Stat.) 50(3), 325–335 (2001)

Mauff, K., Steyerberg, E., Kardys, I., Boersma, E., Rizopoulos, D.: Joint models with multiple longitudinal outcomes and a time-to-event outcome: a corrected two-stage approach. Stat. Comput. 30, 999–1014 (2020)

Murawska, M., Rizopoulos, D., Lesaffre, E.: A two-stage joint model for nonlinear longitudinal response and a time-to-event with application in transplantation studies. J. Prob. Stat. 2012, 1–18 (2012)

Murphy, S.A., van der Vaart, A.W.: On profile likelihood. J. Am. Stat. Assoc. 95(450), 449–465 (2000)

Murtaugh, P.A., Dickson, E.R., van Dam, G.M., Malinchoc, M., Grambsch, P.M., Langworthy, A.L., Gips, C.H.: Primary biliary cirrhosis: prediction of short-term survival based on repeated patient visits. Hepatology 20(1), 126–134 (1994)

Muthén, B., Asparouhov, T., Boye, M.E., Hackshaw, M., Naegeli, A.: Applications of continuous-time survival in latent variable models for the analysis of oncology randomized clinical trial data using Mplus. Muthén & Muthén, Technical report (2009)

Neuhaus, A., Augustin, T., Heumann, C., Daumer, M.: A review on joint models in biometrical research. J. Stat. Theory Practice 3(4), 855–868 (2009)

Nevo, D., Hamada, T., Ogino, S., Wang, M.: A novel calibration framework for survival analysis when a binary covariate is measured at sparse time points. Biostatistics 21(2), 148–163 (2020)

Papageorgiou, G., Mauff, K., Tomer, A., Rizopoulos, D.: An overview of joint modeling of time-to-event and longitudinal outcomes. Annual Rev. Stat. Appl. 6(1), 223–240 (2019)

Pinheiro, J.C., Bates, D.M.: Linear mixed-effects models: basic concepts and examples. In: Mixed-effects models in S and S-Plus, pp. 3–56. Springer, New York (2000)

Pinheiro, J.C., Chao, E.C.: Efficient Laplacian and adaptive Gaussian quadrature algorithms for multilevel generalized linear mixed models. J. Comput. Graph. Stat. 15(1), 58–81 (2006)

Rizopoulos, D.: JM: joint modeling of longitudinal and survival data. R Package Version 1.5-2. https://cran.r-project.org/package=JM (2022)

Rizopoulos, D.: Joint models for longitudinal and time-to-event data: with applications in R, 1st edn. Chapman & Hall/CRC, Boca Raton (2012)

Rizopoulos, D., Verbeke, G., Molenberghs, G.: Shared parameter models under random effects misspecification. Biometrika 95(1), 63–74 (2008)

Rizopoulos, D., Verbeke, G., Lesaffre, E.: Fully exponential Laplace approximations for the joint modelling of survival and longitudinal data. J. Royal Stat. Soci.: Ser. B (Stat. Methodol.) 71(3), 637–654 (2009)

Schuurman, N.K., Grasman, R.P.P.P., Hamaker, E.L.: A comparison of inverse-Wishart prior specifications for covariance matrices in multilevel autoregressive models. Multivar. Behav. Res. 51(2–3), 185–206 (2016)

Self, S., Pawitan, Y.: Modeling a marker of disease progression and onset of disease. In: AIDS Epidemiology: methodological Issues, pp. 231–255. Springer, New York (1992)

Stan Development Team: rstan: R Interface to Stan. R Package Version 2.21.7. http://mc-stan.org (2022)

Tsiatis, A.A., Davidian, M.: Joint modeling of longitudinal and time-to-event data: an overview. Stat. Sin. 14(3), 809–834 (2004)

Tsiatis, A.A., De Gruttola, V., Wulfsohn, M.S.: Modeling the relationship of survival to longitudinal data measured with error. Applications to survival and CD4 counts in patients with AIDS. J. Am. Stat. Associat. 90(429), 27–37 (1995)

van Niekerk, J., Bakka, H., Rue, H.: Competing risks joint models using R-INLA. Stat. Model. 21(1–2), 56–71 (2020)

Viviani, S., Alfó, M., Rizopoulos, D.: Generalized linear mixed joint model for longitudinal and survival outcomes. Stat. Comput. 24(3), 417–427 (2014)

Wu, L.: Mixed effects models for complex data, 1st edn. Chapman & Hall/CRC, New York (2009)

Wu, M.C., Carroll, R.J.: Estimation and comparison of changes in the presence of informative right censoring by modeling the censoring process. Biometrics 44(1), 175–188 (1988)

Wu, L., Liu, W., Hu, X.J.: Joint inference on HIV viral dynamics and immune suppression in presence of measurement errors. Biometrics 66(2), 327–335 (2010)

Wu, L., Wei, L., Yi, G.Y., Huang, Y.: Analysis of longitudinal and survival data: joint modeling, inference methods, and issues. J. Probab. Stat. 2012, 1–17 (2011)

Wulfsohn, M.S., Tsiatis, A.A.: A joint model for survival and longitudinal data measured with error. Biometrics 53(1), 330–339 (1997)

Ye, W., Lin, X., Taylor, J.M.G.: Semiparametric modeling of longitudinal measurements and time-to-event data - a two-stage regression calibration approach. Biometrics 64(4), 1238–1246 (2008)

Acknowledgements

Danilo Alvares was supported by the Chilean National Fund for Scientific and Technological Development (FONDECYT), Grant No. 11190018, and by the UKRI Medical Research Council, Grant No. MC_UU_00002/5.

Author information

Authors and Affiliations

Contributions

DA and VLY contributed equally to the writing of the manuscript and implementation of the methodology. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Let \(f({\varvec{y}}_{i} \mid {\varvec{b}}_{i}, {\varvec{\theta }}_{y})\) and \(f(s_{i} \mid {\varvec{b}}_{i}, {\varvec{\theta }}_{y}, {\varvec{\theta }}_{s})\) be the longitudinal and survival likelihood functions for individual i, respectively, where \({\varvec{y}}_{i} = \{y_{it_{ij}}: t_{ij}=1,\ldots ,n_{i}\}\). We will analyse the behaviour of the maximum a posteriori (MAP) of \({\varvec{\theta }}_{y}\) from the conditional joint log-likelihood function of individual i, as \(n_{i}\) grows:

Calculating \(\lim _{n_{i} \rightarrow \infty }\big [l_{n_{i}}({\varvec{\theta }}_{y})/n_{i}\big ]\), we note that the longitudinal term is Op(1), while the survival one is op(1). Extending this result to the joint likelihood for all individuals is straightforward. In summary, as \(n_{i}\) grows, the MAP of \({\varvec{\theta }}_{y}\) (let’s say \(\hat{\varvec{\theta }}_{y}\)) depends only on the longitudinal submodel. In this sense, we can calculate \(\hat{\varvec{\theta }}_{y}\) both from the JS and from the NTS first stage equivalently. It is noteworthy that this result only applies to \(\hat{\varvec{\theta }}_{y}\), i.e., there are no guarantees of equivalence for the MAP of \({\varvec{b}}_{i}\) (let’s say \(\hat{\varvec{b}}_{i}\)) from the first stage. This is a key difference between our proposal and STS. While NTS needs the MAP of \({\varvec{\theta }}_{y}\) and \({\varvec{\theta }}_{b}\) from the first stage to then correct the random effects in the second one, STS uses \(\hat{\varvec{\theta }}_{y}\) and \(\hat{\varvec{b}}_{i}\) (both calculated from the longitudinal submodel) in the second stage. Therefore, the bias produced by STS when estimating \({\varvec{\theta }}_{s}\) is mainly due to uncorrected random effects.

Taking the MAP of \({\varvec{\theta }}_{y}\) from the NTS approach, our next goal is to calculate the MAP of \({\varvec{\theta }}_{s}\). Hence, similar to a profiled likelihood strategy (Murphy and van der Vaart 2000), we can consider \(\hat{\varvec{\theta }}_{y}\) as a vector of nuisance parameters and then estimate the MAP of \({\varvec{\theta }}_{s}\) from the the conditional joint log-likelihood function:

The MAP of \(({\varvec{\theta }}_{y},{\varvec{\theta }}_{s})\) jointly estimated from the conditional joint log-likelihood function is equivalent to calculating the MAP of \({\varvec{\theta }}_{y}\) from \(l_{n_{i}}({\varvec{\theta }}_{y})\) (assuming that \(n_{i} \rightarrow \infty \)) and then obtaining the MAP of \({\varvec{\theta }}_{s}\) from \(l({\varvec{\theta }}_{s})\). Note that Eq. (A2) is specified as the log-likelihood function in NTS second stage.

So far we have omitted the specification of random effects and priors for the estimation of the MAP of \({\varvec{\theta }}_{y}\) and \({\varvec{\theta }}_{s}\). We assume that the distribution of \({\varvec{b}}\), \(f({\varvec{b}} \mid {\varvec{\theta }}_{b})\), from the JS approach is the same as the prior distribution of fixed effects \({\varvec{b}}\), \(\pi ({\varvec{b}} \mid \hat{\varvec{\theta }}_{b})\), from the NTS approach. So, the contribution of random effects to calculate the MAP of \({\varvec{\theta }}_{y}\) and \({\varvec{\theta }}_{s}\) from both approaches is the same. Additionally, even if the random effects distributions are misspecified, Rizopoulos, Verbeke and Molenberghs’ Theorem 1 (Rizopoulos et al. 2008) demonstrates that, as \(n_{i}\) grows, the estimates of \({\varvec{\theta }}_{y}\) and \({\varvec{\theta }}_{s}\) will be minimally affected. On the other hand, we assume that the prior distributions are proper and weakly-informative, so they must have an irrelevant role in calculating the MAP of \({\varvec{\theta }}_{y}\) and \({\varvec{\theta }}_{s}\). Furthermore, as the sample size increases, the choice of prior distributions have a minimal impact.

Appendix B

Simulation results from 300 datasets comparing the joint specification (JS), the novel two-stage (NTS), and the standard two-stage (STS) for longitudinal continuous, count, and binary responses. The panels show a bias and b standard deviation for the group parameter. Number \(n_{i}\) of repeated measurements per individual varying between 30 and 50

Simulation results from 300 datasets comparing the joint specification (JS), the novel two-stage (NTS), and the standard two-stage (STS) for longitudinal continuous, count, and binary responses. The panels show a bias and b standard deviation for the association parameter. Number \(n_{i}\) of repeated measurements per individual varying between 30 and 50

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alvares, D., Leiva-Yamaguchi, V. A two-stage approach for Bayesian joint models: reducing complexity while maintaining accuracy. Stat Comput 33, 115 (2023). https://doi.org/10.1007/s11222-023-10281-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11222-023-10281-9