Abstract

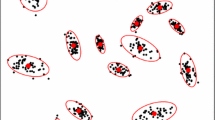

Maximum likelihood through the EM algorithm is widely used to estimate the parameters in hidden structure models such as Gaussian mixture models. But the EM algorithm has well-documented drawbacks: its solution can be highly dependent on its initial position, and it may fail as a result of degeneracies. We stress the practical dangers of these limitations and how carefully they should be dealt with. Our main conclusion is that no method addresses them satisfactorily in all situations. But improvements are introduced, first, by using a penalized log-likelihood of Gaussian mixture models in a Bayesian regularization perspective and, second, by choosing the best among several relevant initialization strategies. In this perspective, we also propose new recursive initialization strategies which prove helpful. They are compared with standard initialization procedures through numerical experiments, and their effects on model selection criteria are analyzed.
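The abstract combines three ingredients: EM for a Gaussian mixture, a penalized log-likelihood motivated by Bayesian regularization, and keeping the best of several initializations. The following is a minimal sketch of how these fit together, assuming a univariate mixture, NumPy, and an inverse-gamma(a, b) prior on each component variance. That prior, the default values a = 1 and b = 0.01, and the plain random-start strategy are illustrative assumptions; this is not the article's prior and does not reproduce its recursive initialization strategies. All function names (`penalized_em`, `best_of_random_starts`, `bic`) are hypothetical. Under this prior the M-step variance update becomes (S_k + 2b) / (n_k + 2(a + 1)), where S_k = Σ_i t_ik (x_i − μ_k)² and n_k = Σ_i t_ik. The update therefore stays strictly positive.

```python
import numpy as np


def _e_step(x, w, mu, var, a, b):
    """E-step plus penalized criterion for a 1-D Gaussian mixture.

    x has shape (n, 1); w, mu, var have shape (k,). Returns the penalized
    log-likelihood, the plain log-likelihood and the posterior
    membership probabilities t (shape (n, k)).
    """
    # log(w_k * N(x_i; mu_k, var_k)), computed in log space to avoid underflow
    log_dens = np.log(w) - 0.5 * np.log(2.0 * np.pi * var) - 0.5 * (x - mu) ** 2 / var
    m = log_dens.max(axis=1, keepdims=True)
    log_total = m + np.log(np.exp(log_dens - m).sum(axis=1, keepdims=True))
    t = np.exp(log_dens - log_total)
    loglik = float(log_total.sum())
    # Log of the (unnormalized) inverse-gamma(a, b) prior on each variance (assumed prior)
    penalty = float(np.sum(-(a + 1.0) * np.log(var) - b / var))
    return loglik + penalty, loglik, t


def penalized_em(x, k, rng, a=1.0, b=0.01, n_iter=500, tol=1e-8):
    """One EM run maximizing the penalized log-likelihood from a random start."""
    x = np.asarray(x, dtype=float).reshape(-1, 1)
    # Random start (assumed strategy): k distinct observations as means,
    # equal weights, pooled sample variance for every component.
    mu = rng.choice(x.ravel(), size=k, replace=False)
    var = np.full(k, x.var())
    w = np.full(k, 1.0 / k)
    crit, loglik, t = _e_step(x, w, mu, var, a, b)
    for _ in range(n_iter):
        # M-step; the floor on nk only guards against division by zero
        nk = np.maximum(t.sum(axis=0), np.finfo(float).tiny)
        w = nk / nk.sum()
        mu = (t * x).sum(axis=0) / nk
        s = (t * (x - mu) ** 2).sum(axis=0)
        # Regularized variance: the 2b term keeps var strictly positive,
        # so no component can collapse onto a single point (degeneracy)
        var = (s + 2.0 * b) / (nk + 2.0 * (a + 1.0))
        new_crit, loglik, t = _e_step(x, w, mu, var, a, b)
        converged = new_crit - crit < tol
        crit = new_crit
        if converged:
            break
    return crit, loglik, w, mu, var


def best_of_random_starts(x, k, n_starts=10, seed=0, **kw):
    """Run EM from several random starts; keep the highest penalized criterion."""
    rng = np.random.default_rng(seed)
    fits = [penalized_em(x, k, rng, **kw) for _ in range(n_starts)]
    return max(fits, key=lambda fit: fit[0])


def bic(loglik, k, n):
    """BIC, lower is better: (k - 1) weights + k means + k variances."""
    return -2.0 * loglik + (3 * k - 1) * np.log(n)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    x = np.concatenate([rng.normal(-3.0, 1.0, 300), rng.normal(3.0, 0.5, 200)])
    for k in (1, 2, 3):
        crit, loglik, w, mu, var = best_of_random_starts(x, k)
        print(k, round(bic(loglik, k, x.size), 1), np.round(np.sort(mu), 2))
```

Without the 2b term, the update reduces to the ordinary maximum likelihood variance. That variance can shrink to zero when a component captures a single repeated value, and the likelihood then diverges. This is the kind of degeneracy the abstract refers to. Here the BIC is evaluated with the unpenalized log-likelihood at the regularized estimate, which is an illustrative choice rather than a prescription.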

About this article

Cite this article

Baudry, JP., Celeux, G. EM for mixtures. Stat Comput 25, 713–726 (2015). https://doi.org/10.1007/s11222-015-9561-x

DOI: https://doi.org/10.1007/s11222-015-9561-x