Abstract

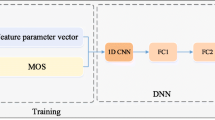

For mobile streaming media service providers, accurately predicting the quality of experience (QoE) is necessary for formulating appropriate resource allocation and service quality optimization strategies. In this paper, a QoE evaluation model is proposed that considers various influencing factors (IFs), including perceptual video quality, video content characteristics, stalling, quality switching and the video genre attribute. First, a no-reference video multimethod assessment fusion (VMAF) model is constructed with a deep bilinear convolutional neural network to measure the perceptual quality of the video. Then, deep spatio-temporal features of the video are extracted with a TSM-ResNet50 network, which integrates the temporal shift module (TSM) into ResNet50, to represent the video content characteristics while balancing computational efficiency and expressive ability. Next, the video genre attribute, which reflects the user's preference for different types of videos, is treated as an IF when constructing the QoE model. The statistical parameters of the other IFs, namely the video genre attribute, stalling and quality switching, are combined with the VMAF scores and the deep spatio-temporal features to form an overall parameter vector describing the IFs. Finally, a deep neural network is designed to learn the mapping between this parameter vector and the mean opinion score (MOS). The proposed QoE evaluation model is validated on two public video datasets, WaterlooSQoE-III and LIVE-NFLX-II, and the experimental results show that it achieves state-of-the-art QoE prediction performance.
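
The temporal shift module used in TSM-ResNet50 lets a 2D ResNet50 exchange information between neighboring frames by moving a fraction of the feature channels one step forward or backward in time, which adds temporal modeling at essentially no extra computation (Lin et al., 2019). Below is a minimal pure-Python sketch of that shift, assuming each frame's feature map has already been reduced to a list of C channel values; inside the network the same shift is applied to full C×H×W maps within the residual blocks. The function name `temporal_shift` and the default `fold_div=8` (shifting 1/8 of the channels in each direction) are illustrative choices, not settings reported in this paper.

```python
from typing import List

Frame = List[float]  # one frame: C channel activations (spatial dims omitted for brevity)


def temporal_shift(frames: List[Frame], fold_div: int = 8) -> List[Frame]:
    """Shift a fraction of channels along time, in the style of TSM.

    The first C // fold_div channels of frame t are taken from frame t + 1,
    the next C // fold_div channels from frame t - 1, and the remaining
    channels are left unchanged. Values shifted in from outside the clip
    are zero-filled. The input list is not modified.
    """
    num_frames = len(frames)
    num_channels = len(frames[0])
    fold = num_channels // fold_div
    out: List[Frame] = []
    for t in range(num_frames):
        shifted = list(frames[t])  # untouched channels are copied as-is
        for c in range(fold):
            # Pull information from the next (future) frame into frame t
            shifted[c] = frames[t + 1][c] if t + 1 < num_frames else 0.0
        for c in range(fold, 2 * fold):
            # Pull information from the previous (past) frame into frame t
            shifted[c] = frames[t - 1][c] if t > 0 else 0.0
        out.append(shifted)
    return out
```

For example, with 8 channels and `fold_div=4`, channels 0-1 of each frame come from the next frame, channels 2-3 come from the previous frame, and channels 4-7 stay in place; the first and last frames receive zeros where no neighbor exists.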
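
The no-reference VMAF model is built on a deep bilinear convolutional neural network, which fuses two CNN feature streams through bilinear pooling: the outer product of the two channel vectors at each spatial location, averaged over all locations. A pure-Python sketch of that pooling step follows, assuming both feature maps are supplied as lists of L spatial locations with C1 and C2 channels respectively. The function name `bilinear_pool` is hypothetical, the signed square root and L2 normalization follow the common bilinear-CNN recipe and may differ in detail from the network used in the paper, and the regression head that would map the descriptor to a VMAF score is omitted.

```python
import math
from typing import List, Sequence


def bilinear_pool(feats_a: Sequence[Sequence[float]],
                  feats_b: Sequence[Sequence[float]]) -> List[float]:
    """Average outer product of two feature streams over spatial locations.

    feats_a: L locations x C1 channels (first CNN stream)
    feats_b: L locations x C2 channels (second CNN stream)
    Returns a flattened C1*C2 descriptor after signed square root and
    L2 normalization.
    """
    if len(feats_a) != len(feats_b):
        raise ValueError("both streams must cover the same spatial locations")
    num_loc = len(feats_a)
    c1, c2 = len(feats_a[0]), len(feats_b[0])
    pooled = [0.0] * (c1 * c2)
    for fa, fb in zip(feats_a, feats_b):
        for i in range(c1):
            for j in range(c2):
                # Accumulate the outer product, averaged over locations
                pooled[i * c2 + j] += fa[i] * fb[j] / num_loc
    # Signed square root dampens large bilinear responses
    pooled = [math.copysign(math.sqrt(abs(v)), v) for v in pooled]
    # L2 normalization (guard against an all-zero descriptor)
    norm = math.sqrt(sum(v * v for v in pooled)) or 1.0
    return [v / norm for v in pooled]
```

Because the descriptor has C1×C2 entries, it captures pairwise interactions between the two streams' channels, which is what lets the fused representation respond to combinations of distortion-related and content-related features.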
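
The last two steps, assembling an IF parameter vector and regressing it onto MOS with a deep neural network, can be illustrated with a small sketch. Everything specific in it is an assumption: the statistics chosen (mean and minimum of per-frame VMAF, number of stalls, total stall duration, number of quality switches), the hypothetical genre labels in `GENRES`, the one-hidden-layer ReLU network `MOSRegressor`, and the plain SGD update on squared error. The abstract does not specify the paper's actual statistics, architecture or training settings.

```python
import math
import random
from typing import List, Sequence

# Hypothetical genre labels for illustration; not the categories used in the paper.
GENRES = ("sports", "movie", "news", "animation")


def build_if_vector(vmaf_scores: Sequence[float],
                    st_features: Sequence[float],
                    stall_durations_s: Sequence[float],
                    num_switches: int,
                    genre: str) -> List[float]:
    """Concatenate illustrative IF statistics into one input vector."""
    # VMAF lies in [0, 100]; rescale to [0, 1] to keep inputs small.
    vmaf = [v / 100.0 for v in vmaf_scores]
    quality = [sum(vmaf) / len(vmaf), min(vmaf)]
    stalling = [float(len(stall_durations_s)), float(sum(stall_durations_s))]
    genre_one_hot = [1.0 if g == genre else 0.0 for g in GENRES]
    return quality + list(st_features) + stalling + [float(num_switches)] + genre_one_hot


class MOSRegressor:
    """One-hidden-layer ReLU network trained with plain SGD on squared error."""

    def __init__(self, in_dim: int, hidden: int = 16, seed: int = 0) -> None:
        rng = random.Random(seed)
        bound = 1.0 / math.sqrt(in_dim)
        self.w1 = [[rng.uniform(-bound, bound) for _ in range(in_dim)]
                   for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-0.1, 0.1) for _ in range(hidden)]
        self.b2 = 0.0

    def _pre_activations(self, x: Sequence[float]) -> List[float]:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x: Sequence[float]) -> float:
        h = [max(0.0, z) for z in self._pre_activations(x)]
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def train_step(self, x: Sequence[float], mos: float, lr: float = 0.01) -> float:
        """One SGD step on 0.5 * (prediction - mos)^2; returns the pre-update error."""
        z = self._pre_activations(x)
        h = [max(0.0, zj) for zj in z]
        err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - mos
        for j, zj in enumerate(z):
            # Gradient w.r.t. hidden unit j, gated by the ReLU (uses w2 before its update)
            g = err * self.w2[j] if zj > 0 else 0.0
            self.w2[j] -= lr * err * h[j]
            self.b1[j] -= lr * g
            self.w1[j] = [w - lr * g * xi for w, xi in zip(self.w1[j], x)]
        self.b2 -= lr * err
        return err
```

Fitting a single sample only confirms that the update rule reduces the error; a real model would be trained across many videos with subjective scores and evaluated on held-out content.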

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61531006, and in part by the Beijing Municipal Education Commission Cooperation Beijing Natural Science Foundation under Grant KZ201910005007.

Ethics declarations

Conflict of interests

The authors declare that they have no conflicts of interest.

Ethical approval

This article does not contain any studies with human or animal participants.

About this article

Cite this article

Du, L., Yang, S., Zhuo, L. et al. Quality of Experience Evaluation Model with No-Reference VMAF Metric and Deep Spatio-temporal Features of Video. Sens Imaging 23, 15 (2022). https://doi.org/10.1007/s11220-022-00386-2

DOI: https://doi.org/10.1007/s11220-022-00386-2