Abstract

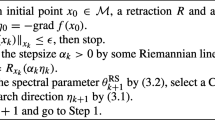

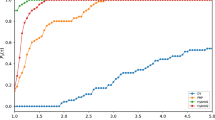

This paper presents a new conjugate gradient method on Riemannian manifolds and establishes its global convergence under the standard Wolfe line search. The proposed algorithm is a generalization of a Wei-Yao-Liu-type Hestenes-Stiefel method from Euclidean space to the Riemannian setting. We prove that the new algorithm is well-defined, generates a descent direction at each iteration, and globally converges when the step lengths satisfy the standard Wolfe conditions. Numerical experiments on the matrix completion problem demonstrate the efficiency of the proposed method.
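For orientation, the standard Wolfe conditions named in the abstract are usually written as below in the Riemannian setting. This is a sketch in commonly used notation, not a formula taken from this paper: the retraction $R$, the vector transport $\mathcal{T}$, the search direction $\eta_k$, the step length $\alpha_k$, and the constants $c_1, c_2$ are all assumed symbols. The last line is the Euclidean Wei-Yao-Liu-type Hestenes-Stiefel parameter in the form it commonly takes in the literature, with $g_k = \nabla f(x_k)$ and $d_k$ the Euclidean search direction. The Riemannian parameter proposed in the paper is not reproduced in this excerpt and may differ.

```latex
% Assumed notation (not from the source):
%   R = retraction, \mathcal{T} = vector transport, \eta_k = search direction,
%   \alpha_k = step length, constants 0 < c_1 < c_2 < 1.
\begin{align*}
  % Generic Riemannian conjugate gradient iteration
  x_{k+1} &= R_{x_k}(\alpha_k \eta_k), \\
  \eta_{k+1} &= -\operatorname{grad} f(x_{k+1})
               + \beta_{k+1}\, \mathcal{T}_{\alpha_k \eta_k}(\eta_k), \\[4pt]
  % Standard Wolfe conditions: sufficient decrease, then curvature
  f\bigl(R_{x_k}(\alpha_k \eta_k)\bigr)
    &\le f(x_k) + c_1 \alpha_k
       \bigl\langle \operatorname{grad} f(x_k), \eta_k \bigr\rangle_{x_k}, \\
  \bigl\langle \operatorname{grad} f\bigl(R_{x_k}(\alpha_k \eta_k)\bigr),
       \mathrm{D}R_{x_k}(\alpha_k \eta_k)[\eta_k] \bigr\rangle_{x_{k+1}}
    &\ge c_2 \bigl\langle \operatorname{grad} f(x_k), \eta_k \bigr\rangle_{x_k}, \\[4pt]
  % Euclidean Wei-Yao-Liu-type Hestenes-Stiefel parameter (literature form)
  \beta_k^{\mathrm{Euclidean}}
    &= \frac{g_k^{\top}\Bigl(g_k - \frac{\lVert g_k \rVert}{\lVert g_{k-1} \rVert}\, g_{k-1}\Bigr)}
            {d_{k-1}^{\top}\,(g_k - g_{k-1})}.
\end{align*}
```

The curvature condition here is the standard (weak) one: it bounds the directional derivative from below only, whereas the strong Wolfe conditions would bound its absolute value.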

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

We would like to express our appreciation to the editor and an anonymous referee for providing valuable feedback that improved the quality of this paper.

Author information

Contributions

SN: software, validation, formal analysis, writing—original draft, investigation, conceptualization, methodology. MH: writing—review and editing, supervision.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Najafi, S., Hajarian, M. An improved Riemannian conjugate gradient method and its application to robust matrix completion. Numer Algor (2023). https://doi.org/10.1007/s11075-023-01688-6

DOI: https://doi.org/10.1007/s11075-023-01688-6