Abstract

The nonlinear inverse problem of exponential data fitting is separable since the fitting function is a linear combination of parameterized exponential functions, which allows the linear coefficients to be solved for separately from the nonlinear parameters. The matrix pencil method, which reformulates the problem statement into a generalized eigenvalue problem for the nonlinear parameters and a structured linear system for the linear parameters, is generally considered the more stable method for solving the problem computationally. In Section 2 the matrix pencil associated with the classical complex exponential fitting or sparse interpolation problem is summarized and the concepts of dilation and translation are introduced to obtain matrix pencils at different scales. Exponential analysis was earlier generalized to the use of several polynomial basis functions and some operator eigenfunctions. However, in most generalizations a computational scheme in terms of an eigenvalue problem is lacking. In the subsequent Sections 3–6 the matrix pencil formulation, including the dilation and translation paradigm, is generalized to more functions. Each of these periodic, polynomial or special function classes needs a tailored approach, where optimal use is made of the properties of the parameterized elementary or special function used in the sparse interpolation problem under consideration. With each generalization a structured linear matrix pencil is associated, immediately leading to a computational scheme for the nonlinear and linear parameters, from a generalized eigenvalue problem and from one or more structured linear systems respectively. Finally, in Section 7 we illustrate the new methods.


1 Introduction

The nonlinear inverse problems of complex exponential analysis [1, 2] and sparse polynomial interpolation [3, 4] from uniformly sampled values can both be traced back to the exponential fitting method of de Prony from the eighteenth century [5, 6]:

\[\sum _{i=1}^n \alpha _i \exp (\phi _{i} t_j) = f_j, \qquad \alpha _i, \phi _{i} \in \mathbb {C}. \tag{1}\]

The French nobleman de Prony solved the problem by obtaining the n nonlinear parameters \(\phi _{i}\) from the roots of a polynomial and the n coefficients \(\alpha _i\) as the solution of a Vandermonde structured linear system. Almost 200 years later this basic fitting problem, which plays an important role [7, 8] in many computational science disciplines, engineering applications and digital signal processing, was reformulated in terms of a generalized eigenvalue problem [9]. This reformulation, which is referred to as the matrix pencil method, is generally the most reliable one when solving the exponential analysis problem computationally.

It is the property

of the building blocks \(\exp (\phi _{i} t)\) in (1) that allows us to split the nonlinear interpolation problem (1) into two numerical linear algebra problems, namely the separate computation of the nonlinear parameters \(\phi _{i}\) from a generalized eigenvalue problem on the one hand and of the linear coefficients \(\alpha _i\) from a structured linear system on the other.

Problem statement (1) was partially generalized to the use of non-standard polynomial bases such as the Pochhammer basis and Chebyshev and Legendre polynomials [10,11,12,13,14] and to the use of some eigenfunctions of linear operators [15,16,17]. Many of these generalizations are unified in the algebraic framework described in [18].

What is lacking in most of the generalizations above is a reformulation in terms of numerical linear algebra problems. In this paper we carry the generalized eigenvalue formulation of (1), so essentially the matrix pencil method, over to linear combinations of the trigonometric functions cosine and sine, the hyperbolic cosine and sine functions, the Chebyshev (1st, 2nd, 3rd, 4th kind) and spread polynomials, the Gaussian function, and the sinc and gamma functions. In addition, we introduce the paradigm of a selectable dilation \(\sigma \) and translation \(\tau \) of the interpolation points, as used in refinable function theory. Indeed, all of the above functions satisfy a property similar to

which allows us to separate the effect of the scale \(\sigma \) and the shift \(\tau \) on the estimation of the parameters \(\phi _{i}\) and \(\alpha _i\). This multiscale option will prove to be useful in several situations, as further detailed in Section 2.2.

In each of the subsequent sections on the trigonometric and hyperbolic functions, polynomial functions, the Gaussian distribution, and some special functions, a different approach is required to express the nonlinear inverse problem

under consideration, as a generalized eigenvalue problem, tailored to the particular properties of the building block \(g(\phi _{i}; t)\) in use. The interpolant is always computed directly from the evaluations \(f_j\) where the \(t_j\) follow some regular interpolation point pattern associated with the specific function \(g(\phi _{i}; t)\).

2 Exponential fitting

We first lay out how the whole theory works for the exponential problem, where \(g(\phi _{i}; t) = \exp (\phi _{i} t)\).

2.1 Scale and shift scheme

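By a combination of [9] and [19] we obtain the following. Let f(t) be given by

\[f(t) = \sum _{i=1}^n \alpha _i \exp (\phi _{i} t), \qquad \alpha _i, \phi _{i} \in \mathbb {C}, \tag{3}\]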

and let us sample f(t) at the equidistant points \(t_j=j\Delta \) for \(j=0, 1, 2, \ldots \) with \(\Delta \in \mathbb {R}^+\), or more generally at \(t_{\tau +j\sigma }=(\tau +j\sigma )\Delta \) with \(\sigma \in \mathbb {N}\) and \(\tau \in \mathbb {Z}\), where the frequency content in (3) is limited by [20, 21]

\[|\Im (\phi _{i})| < \pi /\Delta , \qquad i=1, \ldots , n, \tag{4}\]

with \(\Im (\cdot )\) denoting the imaginary part. More generally, \(\sigma \) and \(\tau \) can belong to \(\mathbb {Q}^+\) and \(\mathbb {Q}\) respectively, as discussed in Section 2.5. The values \(\sigma \) and \(\tau \) are called the scaling factor and shift term respectively. We denote the collected samples by

From \(\exp (\phi _{i} t_{j+1}) = \exp (\phi _{i}\Delta ) \exp (\phi _{i} t_j)\) we find that

or more generally for \(\sigma \in \mathbb {N}\) and \(\tau \in \mathbb {Z}\) that

Hence we see that the scaling \(\sigma \) and the shift \(\tau \) are separated in a natural way when evaluating (3) at \(t_{\tau +j\sigma }\), a property that plays an important role in the sequel. The freedom to choose \(\sigma \) and \(\tau \) when setting up the sampling scheme allows us to stretch, shrink and translate the otherwise uniform progression of sampling points dictated by the sampling step \(\Delta \).

The aim is now to estimate the model order n, and the parameters \(\phi _1, \ldots , \phi _n\) and \(\alpha _1, \ldots , \alpha _n\) in (3) from samples \(f_j\) at a selection of points \(t_j\).

2.2 Generalized eigenvalue formulation

In this and the next subsection we assume for a moment that n was determined. With \(n, \sigma \in \mathbb {N}, \tau \in \mathbb {Z}\) we define

It is well-known that the Hankel matrix \({_\sigma ^\tau }H_n\) can be decomposed as

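This decomposition on the one hand translates (5) and on the other hand connects it to a generalized eigenvalue problem: the values \(\exp (\phi _{i}\sigma \Delta )\) can be retrieved [9] as the generalized eigenvalues of the problem

\[{_\sigma ^\sigma }H_n v_i = \exp (\phi _{i}\sigma \Delta )\, {_\sigma ^0}H_n v_i, \qquad i=1, \ldots , n, \tag{8}\]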

where \(v_i\) are the generalized right eigenvectors. Setting up this generalized eigenvalue problem requires the 2n samples \(f_{j\sigma }, j=0, \ldots , 2n-1\). A similar statement holds for the values \(\exp (\phi _{i} \tau \Delta )\) from the linear pencil \(({_\sigma ^\tau }H_n, {_\sigma ^0}H_n)\). In [5, 9] the choices \(\sigma =1\) and \(\tau =1\) are made and then, from the generalized eigenvalues \(\exp (\phi _{i}\Delta )\), the complex numbers \(\phi _{i}\) can be retrieved uniquely because of the restriction \(|\Im (\phi _{i})|\Delta <\pi \).
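The pencil construction described above can be sketched numerically as follows. This is a minimal illustration rather than the authors' implementation: the helper names `hankel_from_samples` and `pencil_eigenvalues`, the test signal and the choice to reduce the pencil to a standard eigenvalue problem via a linear solve are all assumptions made for this example.

```python
import numpy as np

def hankel_from_samples(f, n, sigma=1, tau=0):
    """n x n Hankel matrix whose entry (k, l) is the sample f[tau + (k + l) * sigma]."""
    idx = tau + sigma * (np.arange(n)[:, None] + np.arange(n)[None, :])
    return f[idx]

def pencil_eigenvalues(f, n, sigma=1):
    """Generalized eigenvalues of the pencil (shifted Hankel, unshifted Hankel)."""
    H0 = hankel_from_samples(f, n, sigma, 0)
    H1 = hankel_from_samples(f, n, sigma, sigma)
    # For a genuine n-term model H0 is regular, so the pencil can be reduced
    # to the standard eigenvalue problem of H0^{-1} H1.
    return np.linalg.eigvals(np.linalg.solve(H0, H1))

# Hypothetical test signal with n = 2 terms, sigma = 1 and Delta = 0.1.
Delta = 0.1
phi = np.array([-0.5 + 3.0j, -0.2 - 7.0j])
alpha = np.array([1.0, 2.0])
j = np.arange(4)                                   # the 2n samples f_0, ..., f_{2n-1}
f = (alpha * np.exp(np.outer(j * Delta, phi))).sum(axis=1)

lam = pencil_eigenvalues(f, n=2, sigma=1)          # approximates exp(phi_i * Delta)
# The principal branch of the logarithm is valid here because |Im(phi_i)| * Delta < pi.
phi_est = np.log(lam) / Delta
```

With noisy data one would rather use rectangular Hankel matrices and a least squares or QZ-based solver; the square solve above is only meant to show the structure of the pencil.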

Choosing \(\sigma > 1\) offers a number of advantages though, among which:

- reconditioning [19, 22, 23] of a possibly ill-conditioned problem statement,
- superresolution [19, 24] in the case of clustered frequencies,
- validation [25] of the exponential analysis output for n and \(\phi _{i}, i=1, \ldots , n\),
- the possibility to parallelize the estimation of the parameters \(\phi _{i}\) [25].

With \(\sigma >1\) the \(\phi _{i}\) cannot necessarily be retrieved uniquely from the generalized eigenvalues \(\exp (\phi _{i} \sigma \Delta )\) since \(\left| \Im (\phi _{i}) \right| \sigma \Delta \) may well be larger than \(\pi \). This ambiguity is called aliasing, and we now indicate how to resolve it.

2.3 Vandermonde structured linear systems

For chosen \(\sigma \) and with \(\tau =0\), the \(\alpha _i\) are computed from the interpolation conditions

either by solving the system in the least squares sense, in the presence of noise, or by solving a subset of n interpolation conditions in the case of noiseless samples. The samples of f(t) occurring in (9) are the same samples as the ones used to fill the Hankel matrices in (8) with. Note that

and that for fixed \(\sigma \) the coefficient matrix of (9) is therefore a transposed Vandermonde matrix with nodes \(\exp (\phi _{i} \sigma \Delta )\). In a noisy context the Hankel matrices in (8) can also be extended to rectangular \(N \times \nu \) matrices with \(N>\nu \ge n\) and the generalized eigenvalue problem can be considered in a least squares sense [26]. In that case the index j in (9) runs from 0 to \(N+\nu -1\).
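As a small illustration of the linear step, the coefficients can be computed from the interpolation conditions by ordinary least squares once the nodes \(\exp (\phi _{i}\sigma \Delta )\) are known. The function name `solve_coefficients` and the test data are hypothetical, and a general dense solver is used here instead of a dedicated structured Vandermonde solver.

```python
import numpy as np

def solve_coefficients(f_sigma, nodes):
    """Least squares solution of sum_i alpha_i * nodes[i]**j = f_sigma[j], j = 0, 1, ..."""
    j = np.arange(len(f_sigma))
    V = nodes[None, :] ** j[:, None]          # row j, column i holds nodes[i]**j
    alpha, *_ = np.linalg.lstsq(V, f_sigma, rcond=None)
    return alpha

# Hypothetical data: n = 2 terms sampled at j * sigma * Delta for j = 0, ..., 5.
Delta, sigma = 0.1, 3
phi = np.array([-0.5 + 3.0j, -0.2 - 7.0j])
alpha_true = np.array([1.0 - 1.0j, 2.0 + 0.5j])
nodes = np.exp(phi * sigma * Delta)
j = np.arange(6)
f_sigma = (alpha_true * nodes[None, :] ** j[:, None]).sum(axis=1)

alpha = solve_coefficients(f_sigma, nodes)
```

Using more than n rows, as done here, mimics the noisy setting in which the system is solved in the least squares sense.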

Next, for chosen nonzero \(\tau \), a shifted set of at least n samples \(f_{\tau +j\sigma }\) is interpreted as

where \(k \in \{0, 1, \ldots , n\}\) is fixed. Note that (10) is merely a shifted version of the original problem (3), where the effect of the shift is pushed into the coefficients of (3). The latter is possible because of (5). From (10), having the same (but possibly fewer) coefficient matrix entries as (9), we compute the unknown coefficients \(\alpha _i\exp (\phi _{i}\tau \Delta )\). From \(\alpha _i\) and \(\alpha _i\exp (\phi _{i}\tau \Delta )\) we then obtain

from which again the \(\phi _{i}\) cannot necessarily be extracted unambiguously if \(\tau >1\). But the following can be proved [19].

Denote \(s_{i,\sigma }:= {{\,\text{sign}\,}}\left( \Im \left( {{\,\text{Ln}\,}}\left( \exp (\phi _{i}\sigma \Delta ) \right) \right) \right) \) and \(s_{i,\tau }:= {{\,\text{sign}\,}}\left( \Im \left( {{\,\text{Ln}\,}}\left( \exp (\phi _{i}\tau \Delta ) \right) \right) \right) \), where \({{\,\text{Ln}\,}}(\cdot )\) indicates the principal branch of the complex natural logarithm and \(\left| \Im \left( {{\,\text{Ln}\,}}\left( \exp (\phi _{i}\sigma \Delta ) \right) \right) \right| \le \pi \). If \(\gcd (\sigma , \tau )=1\), then the sets

which contain all the possible arguments for \(\phi _{i}\) in \(\exp (\phi _{i}\sigma \Delta )\) from (8) and in \(\exp (\phi _{i}\tau \Delta )\) from (10) respectively, have a unique intersection [19]. How to obtain this unique element in the intersection and identify the \(\phi _{i}\) is detailed in [19, 25]. Convenient choices for \(\sigma \) and \(\tau \) depend somewhat on the noise level and their selection is also discussed in [25].
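The intersection argument can be illustrated by a brute-force scan: generate every candidate for \(\phi _{i}\) compatible with \(\exp (\phi _{i}\sigma \Delta )\) and keep those that also reproduce \(\exp (\phi _{i}\tau \Delta )\). This is only a sketch under the assumption \(|\Im (\phi _{i})|\Delta <\pi \); the function name `resolve_phi` and the numerical values are hypothetical, and the procedures of [19, 25] are more refined than this scan.

```python
import numpy as np

def resolve_phi(lam, mu, sigma, tau, Delta, tol=1e-8):
    """Candidates phi with |Im(phi)| * Delta < pi that satisfy both
    exp(phi * sigma * Delta) = lam and exp(phi * tau * Delta) = mu."""
    base = np.log(lam)                         # principal branch, Im in (-pi, pi]
    matches = []
    for ell in range(-sigma, sigma + 1):       # branches of the logarithm
        cand = (base + 2j * np.pi * ell) / (sigma * Delta)
        inside = abs(cand.imag) * Delta < np.pi
        if inside and abs(np.exp(cand * tau * Delta) - mu) < tol:
            matches.append(cand)
    return matches

# Hypothetical example with gcd(sigma, tau) = 1.
Delta, sigma, tau = 0.1, 5, 3
phi_true = -0.3 + 25.0j                        # |Im(phi)| * Delta = 2.5 < pi
lam = np.exp(phi_true * sigma * Delta)         # from the pencil with scale sigma
mu = np.exp(phi_true * tau * Delta)            # from the shifted linear system
matches = resolve_phi(lam, mu, sigma, tau, Delta)
```

In the presence of noise the exact comparison with a tight tolerance would have to be replaced by a nearest-candidate criterion.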

So at this point the nonlinear parameters \(\phi _{i}, i=1, \ldots , n\) and the linear \(\alpha _i, i=1, \ldots , n\) in (3) are computed through the solution of (8) and (9), and if \(\sigma > 1\) also (10). It remains to discuss how to determine n.

2.4 Determining the sparsity

What can be said about the number of terms n in (3), which is also called the sparsity? From [27, p. 603] and [28] we know for general \(\sigma \) that

The regularity of \({^0_\sigma }H_n\) persists for any value of \(\Delta \) when collecting the samples to fill the matrix with, while an accidental singularity of \({^0_\sigma }H_\nu \) with \(\nu <n\) only occurs for an unfortunate choice of \(\Delta \) that makes the determinant zero. A standard approach to make use of this statement is to compute a singular value decomposition of the Hankel matrix \({^0_\sigma }H_\nu \) and this for increasing values of \(\nu \). In the presence of noise and/or clustered eigenvalues, this technique is not always reliable and we need to consider rather large values of \(\nu \) for a correct estimate of n [24] or turn our attention to some validation add-on [25].
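The singular value approach to estimating n can be sketched as follows, for noiseless data. The function name `estimate_sparsity`, the relative threshold `rel_tol` and the test signal are assumptions chosen for this illustration; with noise the threshold has to be adapted to the noise level.

```python
import numpy as np

def estimate_sparsity(f, nu, sigma=1, rel_tol=1e-8):
    """Numerical rank of the nu x nu Hankel matrix with entries f[(k + l) * sigma]."""
    idx = sigma * (np.arange(nu)[:, None] + np.arange(nu)[None, :])
    s = np.linalg.svd(f[idx], compute_uv=False)
    # Count the singular values that are not negligible relative to the largest one.
    return int(np.sum(s > rel_tol * s[0])), s

# Hypothetical signal with n = 3 terms, analysed with a 5 x 5 Hankel matrix.
Delta = 0.2
phi = np.array([2.0j, -5.0j, -0.1 + 9.0j])
alpha = np.array([1.0, 0.5, 2.0])
j = np.arange(2 * 5 - 1)                       # samples f_0, ..., f_8
f = (alpha * np.exp(np.outer(j * Delta, phi))).sum(axis=1)

n_est, singular_values = estimate_sparsity(f, nu=5)
```

Inspecting `singular_values` for a visible gap is usually more informative than relying on a single fixed threshold.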

With \(\sigma =1\) and in the absence of noise, the exponential fitting problem can be solved from 2n samples for \(\alpha _1, \ldots , \alpha _n\) and \(\phi _1, \ldots , \phi _n\) and at least one additional sample to confirm n. As pointed out already, it may be worthwhile to take \(\sigma >1\) and throw in at least an additional n values \(f_{\tau +j\sigma }\) to remedy the aliasing. Moreover, if \(\max _{i=1, \ldots , n} |\Im (\phi _{i})|\) is quite large, then \(\Delta \) may become so small that collecting the samples \(f_j\) becomes expensive and so it may be more feasible to work with a larger sampling interval \(\sigma \Delta \).

2.5 Computational variants

Besides having \(\sigma \in \mathbb {N}\) and \(\tau \in \mathbb {Z}\), more general choices are possible. An easy practical generalization is when the scale factor and shift term are rational numbers \(\sigma /\rho _1 \in \mathbb {Q}^+\) and \(\tau /\rho _2 \in \mathbb {Q}\) respectively, with \(\sigma , \rho _1, \rho _2 \in \mathbb {N}\) and \(\tau \in \mathbb {Z}\). In that case the condition \(\gcd (\sigma , \tau )=1\) for \(S_i\) and \(T_i\) to have a unique intersection, is replaced by \(\gcd (\overline{\sigma }, \overline{\tau })=1\) where \(\sigma /\rho _1=\overline{\sigma }/\rho , \tau /\rho _2=\overline{\tau }/\rho \) with \(\rho =\text {lcm}(\rho _1, \rho _2)\).
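The coprimality condition for rational scale and shift can be checked mechanically. The following sketch brings both fractions to the common denominator \(\rho \) and tests \(\gcd (\overline{\sigma }, \overline{\tau })=1\); the function name `common_denominator_form` and the sample values are hypothetical. Note that `Fraction` stores its arguments in lowest terms, which is an assumption on top of the text.

```python
from fractions import Fraction
from math import gcd

def common_denominator_form(scale, shift):
    """Write scale = sigma_bar / rho and shift = tau_bar / rho,
    where rho is the least common multiple of the two denominators."""
    d1, d2 = scale.denominator, shift.denominator
    rho = d1 * d2 // gcd(d1, d2)               # lcm of the denominators
    sigma_bar = scale.numerator * (rho // d1)
    tau_bar = shift.numerator * (rho // d2)
    return sigma_bar, tau_bar, rho

# Hypothetical choice: scale 3/2 and shift 5/3, i.e. 9/6 and 10/6.
sigma_bar, tau_bar, rho = common_denominator_form(Fraction(3, 2), Fraction(5, 3))
coprime = gcd(sigma_bar, tau_bar) == 1
```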

We remark that, although the sparse interpolation problem can be solved from the 2n samples \(f_j, j=0, \ldots , 2n-1\) when \(\sigma = 1\), we need at least an additional n samples at the shifted locations \(t_{\tau +j\sigma }, j=k, \ldots , k+n-1\) when \(\sigma >1\). The former is Prony’s original problem statement in [5] and the latter is the generalization presented in [19]. The factorization (7) allows some alternative computational schemes, which may deliver a better numerical accuracy but demand somewhat more samples.

First we remark that the use of a shift \(\tau \) can of course be replaced by the choice of a second scale factor \(\tilde{\sigma }\) relatively prime with \(\sigma \). But this option requires the solution of two generalized eigenvalue problems of which the generalized eigenvalues need to be matched in a combinatorial step. Also, the sampling scheme looks different and requires the \(4n-1\) sampling points

A better option is to set up the generalized eigenvalue problem

which in a natural way connects each eigenvalue \(\exp (\phi _{i}\tau \Delta )\), bringing forth the set \(T_i\), to its associated eigenvector \(v_i\) bringing forth the set \(S_i\). The latter is derived from the quotient of any two consecutive entries in the vector \({^0_\sigma }H_n v_i\) which is a scalar multiple of

Such a scheme requires the \(4n-2\) samples

Note that the generalized eigenvectors \(v_i\) are actually insensitive to the shift \(\tau \): the eigenvectors of (8) and (12) are identical. This is a remarkable fact that reappears in each of the subsequent (sub)sections dealing with other choices for \(g(\phi _{i}; t)\).

We now turn our attention to the identification of other families of parameterized functions and patterns of sampling points. We distinguish between trigonometric and hyperbolic, polynomial and other important functions. Our focus here is on the derivation of the mathematical theory and not on the practical aspects of the numerical computation.

3 Trigonometric functions

The generalized eigenvalue formulation (8) incorporating the scaling parameter \(\sigma \), was generalized to \(g(\phi _{i}; t)=\cos (\phi _{i} t)\) in [11] for integer \(\phi _{i}\) only. Here we present a more elegant full generalization for \(\cos (\phi _{i} t)\) including the use of a shift \(\tau \) as in (10) to restore uniqueness of the solution if necessary. In addition we generalize the scale and shift approach to the sine, hyperbolic cosine and hyperbolic sine functions.

3.1 Cosine function

Let \(g(\phi _{i}; t) = \cos (\phi _{i} t)\) with \(\phi _{i} \in \mathbb {R}\) where

Since \(\cos (\phi _{i} t) = \cos (-\phi _{i} t)\), we are only interested in the \(|\phi _{i}|, i=1, \ldots , n\), disregarding the sign of each \(\phi _{i}\). With \(t_j = j\Delta \) we still denote

and because of

we now also introduce for fixed chosen \(\sigma \) and \(\tau \),

Relation (15) deals with the case \(\sigma =1\) and \(\tau =1\), while the expression \(F_{\tau +j\sigma }\) is a generalization of (15) for general \(\sigma \) and \(\tau \). Observe the achieved separation in (16) of the scaling \(\sigma \) and the shift \(\tau \). We emphasize that \(\sigma \) and \(\tau \) are fixed before defining the \(F(\sigma , \tau ;t_j)\). Otherwise the index j cannot be associated uniquely with the value \( {1}/{2}(f_{\tau +j\sigma }+f_{\tau -j\sigma })\).

Besides the Hankel structured \({^\tau _\sigma }H_n\), we introduce the Toeplitz structured

which is symmetric when \(\tau =0\). Now consider the structured matrix

where \({^{\tau }_{-\sigma }}T_n = {^\tau _\sigma }T_n^T\). When \(\tau =0\), the first two matrices in the sum coincide and the latter two do as well. Note that working directly with the cosine function instead of expressing it in terms of the exponential as \(\cos x = (\exp (\mathtt ix) + \exp (-\mathtt ix))/2\), reduces the size of the matrices involved in the pencil from 2n to n.

Theorem 1

The matrix \(^\tau _\sigma C_n\) factorizes as

Proof

The proof is a verification of the matrix product entry at position \((k+1, \ell +1)\) for \(k, \ell =0, \ldots , n-1\):

\(\square \)

This matrix factorization translates (16) and opens the door to the use of a generalized eigenvalue problem: the cosine equivalent of (8) becomes

where \(v_i\) are the generalized right eigenvectors. Setting up (18) takes 2n evaluations \(f_{j\sigma }\), as in the exponential case. Before turning our attention to the extraction of the \(\phi _{i}\) from the generalized eigenvalues \(\cos (\phi _{i}\sigma \Delta )\), we solve two structured linear systems of interpolation conditions.
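To make the cosine pencil concrete, the sketch below builds an n x n matrix from averages of samples at indices \(\pm \tau +(k\pm \ell )\sigma \), chosen so that each entry equals \(\sum _i \alpha _i \cos (\phi _{i}\tau \Delta )\cos (\phi _{i}k\sigma \Delta )\cos (\phi _{i}\ell \sigma \Delta )\) by the product-to-sum identities. The exact normalization of this matrix, the function names and the test data are assumptions of this example and need not coincide with definition (17).

```python
import numpy as np

Delta, sigma, n = 0.1, 1, 2
phi = np.array([4.0, 11.0])                    # hypothetical parameters
alpha = np.array([1.5, -0.7])

def f(j):
    """Samples f(j * Delta) of the cosine model; f is even, so negative j cost no extra samples."""
    j = np.asarray(j, dtype=float)
    return (alpha * np.cos(np.multiply.outer(j * Delta, phi))).sum(axis=-1)

def cosine_matrix(tau, sigma, n):
    """Entry (k, l) is the average of f at tau + (k +- l) * sigma and -tau + (k +- l) * sigma."""
    k = np.arange(n)[:, None]
    l = np.arange(n)[None, :]
    return 0.25 * (f(tau + (k + l) * sigma) + f(-tau + (k + l) * sigma)
                   + f(tau + (k - l) * sigma) + f(-tau + (k - l) * sigma))

C0 = cosine_matrix(0, sigma, n)
C1 = cosine_matrix(sigma, sigma, n)
# Generalized eigenvalues of the pencil (C1, C0), approximating cos(phi_i * sigma * Delta).
eigs = np.linalg.eigvals(np.linalg.solve(C0, C1))
```

Only the sample indices 0, ..., 2n-1 (times \(\sigma \)) are touched once the evenness of f is used, which agrees with the count of 2n evaluations stated above.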

The coefficients \(\alpha _i\) in (14) are computed from

Making use of (16), the coefficients \(\alpha _i\cos (\phi _{i}\tau \Delta )\) are obtained from the shifted interpolation conditions

where \(k \in \{0, 1, \ldots , n\}\) is fixed. While for \(\sigma =1\) the sparse interpolation problem can be solved from 2n samples taken at the points \(t_j=j\Delta , j=0, \ldots , 2n-1\), for \(\sigma > 1\) additional samples are required at the shifted locations \(t_{\tau \pm j\sigma }= (\tau \pm j\sigma )\Delta \) in order to resolve the ambiguity that arises when extracting the nonlinear parameters \(\phi _{i}\) from the values \(\cos (\phi _{i} \sigma \Delta )\). The quotient

delivers the values \(\cos (\phi _{i}\tau \Delta ), i=1, \ldots , n.\) When \(\sigma >1\) and \(\tau >1\), the parameters \(\phi _{i}\) cannot necessarily be extracted uniquely from either \(\cos (\phi _{i}\sigma \Delta )\) or \(\cos (\phi _{i}\tau \Delta )\). But the following result is proved in the Appendix.

If \(\gcd (\sigma ,\tau )=1\), the sets

containing all the candidate arguments for \(\phi _{i}\) in \(\cos (\phi _{i}\sigma \Delta )\) and \(\cos (\phi _{i}\tau \Delta )\) respectively, have at most two elements in their intersection. Here \({{\,\text{Arccos}\,}}(\cdot ) \in [0,\pi ]\) denotes the principal branch of the arccosine function. In case two elements are found, then it suffices to extend (19) to

which only requires the additional sample \(f_{\tau +(k+n)\sigma }\) as \(f_{\tau -(k+n-2)\sigma }\) is already available. From this extension, \(\cos (\phi _{i}(\sigma +\tau )\Delta )\) can be obtained in the same way as \(\cos (\phi _{i}\tau \Delta )\). As explained in the Appendix, only one of the two elements in the intersection of \(S_i\) and \(T_i\) fits the computed \(\cos (\phi _{i}(\sigma +\tau )\Delta )\) since \(\gcd (\sigma , \tau )=1\) implies that also \(\gcd (\sigma ,\sigma +\tau ) = 1 = \gcd (\tau ,\sigma +\tau )\).

So the unique identification of the \(\phi _{i}\) can require \(2n-1\) additional samples at the shifted locations \((\tau \pm j\sigma )\Delta , j=0, \ldots , n-1\) if the intersections \(S_i \cap T_i\) are all singletons, or 2n additional samples, namely at \((\tau \pm j\sigma )\Delta , j=0, \ldots , n-1\) and \((\tau +n\sigma )\Delta \) if at least one of the intersections \(S_i \cap T_i\) is not a singleton.

The factorization in Theorem 1 immediately allows us to formulate the following cosine analogue of (11).

Corollary 1

For the matrix \({^0_\sigma }C_n\) defined in (17) holds that

To round up our discussion, we mention that from the factorization in Theorem 1, it is clear that for the generalized eigenvector \(v_i\) from the different generalized eigenvalue problem

holds that \({^0_\sigma }C_n v_i\) is a scalar multiple of

This immediately leads to a computational variant of the proposed scheme, similar to the one given in Section 2.5 for the exponential function, requiring somewhat more samples though. Let us now turn our attention to other trigonometric functions.

3.2 Sine function

Let \(g(\phi _{i}; t)=\sin (\phi _{i} t)\) and let (13) hold. With \(t_j=j \Delta \) we denote

and because of

we introduce for fixed chosen \(\sigma \) and \(\tau \),

We fill the matrices \({_\sigma ^\tau }H_n\) and the Toeplitz matrices \({_\sigma ^\tau }T_n\) and define

Theorem 2

The structured matrix \({_\sigma ^\tau }B_n\) factorizes as

Proof

The proof is again a verification of the matrix product entry, at the position \((k, \ell +1)\) with \(k=1, \ldots , n\) and \(\ell =0, \ldots , n-1\):

\(\square \)

Note that the factorization involves precisely the building blocks in the shifted evaluation (21) of the help function \(F(\sigma ,\tau ; t)\). From this decomposition we find that the \(\cos (\phi _{i}\sigma \Delta ), i=1, \ldots , n\) are obtained as the generalized eigenvalues of the problem

We point out that setting up this generalized eigenvalue problem requires samples of f(t) at the points \(t_{(-n+1)\sigma }, \ldots , t_{2n\sigma }\). Since \(f(t_{j\sigma })=-f(t_{-j\sigma })\) and \(f(0)=0\) it costs 2n samples. Unfortunately, at this point we cannot compute the \(\alpha _i, i=1, \ldots , n\) from the linear system of interpolation conditions

as we usually do, because we do not have the matrix entries \(\sin (\phi _{i} j\sigma \Delta )\) at our disposal. It is however easy to obtain the values \(\cos (\phi _{i} j\sigma \Delta )\) because \(\cos (\phi _{i} j\sigma \Delta ) = \cos \left( \pm j {{\,\text{Arccos}\,}}(\cos (\phi _{i}\sigma \Delta )) \right) \) where \({{\,\text{Arccos}\,}}(\cos (\phi _{i}\sigma \Delta ))\) returns the principal branch value. The proper way to proceed is the following.

From Theorem 2 we get \({^0_\sigma }B_n^T = W_n A_n U_n^T\). So we can obtain the \(\alpha _i\sin (\phi _{i}\sigma \Delta )\) in the first column of \(A_n U_n^T\) from the structured linear system

where \({^0_\sigma }B_n= (b_{ij})_{i,j=1}^n\). From the generalized eigenvalues \(\cos (\phi _{i}\sigma \Delta ), i=1, \ldots , n\) and the \(\alpha _i\sin (\phi _{i}\sigma \Delta )\) we can now recursively compute for \(j=1, \ldots , n,\)

The system of shifted linear interpolation conditions

can then be looked at as

having a coefficient matrix with entries \(\alpha _i\sin (\phi _{i} j \sigma \Delta )\) and unknowns \(\cos (\phi _{i}\tau \Delta )\). In order to retrieve the \(\phi _{i}\) uniquely from the values \(\cos (\phi _{i}\sigma \Delta )\) and \(\cos (\phi _{i}\tau \Delta )\) with \(\gcd (\sigma ,\tau )=1\), one proceeds as in the cosine case. Finally, the \(\alpha _i\) are obtained from the expressions \(\alpha _i\sin (\phi _{i}\sigma \Delta )\) after plugging in the correct arguments \(\phi _{i}\) in \(\sin (\phi _{i}\sigma \Delta )\) and dividing by it. So compared to the previous sections, the intermediate computation of the \(\alpha _i\) before knowing the \(\phi _{i}\), is replaced by the intermediate computation of the \(\alpha _i\sin (\phi _{i} \sigma \Delta )\). In the end, the \(\alpha _i\) are revealed in a division, without the need to solve an additional linear system.
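The recursive computation of the matrix entries \(\alpha _i\sin (\phi _{i} j\sigma \Delta )\) can be sketched with the standard three-term recurrence \(\sin ((j+1)x) = 2\cos (x)\sin (jx) - \sin ((j-1)x)\). Whether this is exactly the recursion intended in the text is an assumption; the function name `scaled_sines` and the numerical values are hypothetical.

```python
import numpy as np

def scaled_sines(c, s1, n):
    """Given c = cos(x) and s1 = alpha * sin(x), return alpha * sin(j * x) for j = 1, ..., n
    using sin((j+1)x) = 2 cos(x) sin(jx) - sin((j-1)x), started from sin(0) = 0."""
    out = np.empty(n)
    prev, cur = 0.0, s1
    for j in range(n):
        out[j] = cur
        prev, cur = cur, 2.0 * c * cur - prev
    return out

# Hypothetical values standing in for one generalized eigenvalue and one scaled coefficient.
x, alpha = 0.7, 2.5
values = scaled_sines(np.cos(x), alpha * np.sin(x), n=5)
```

The recurrence needs neither \(\phi _{i}\) nor \(\alpha _i\) separately, which is why it can be applied before the aliasing is resolved.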

From the factorization in Theorem 2, the following sine analogue of (11) follows immediately.

Corollary 2

For the matrix \({^0_\sigma }B_n\) defined in (22) holds that

For completeness we mention that one also finds from this factorization that for \(v_i\) in the generalized eigenvalue problem

holds that \({^0_\sigma }B_n v_i\) is a scalar multiple of

3.3 Phase shifts in cosine and sine

It is possible to include phase shift parameters in the cosine and sine interpolation schemes. We explain how, by working out the sparse interpolation of

Since \(\sin t = \left( \exp (\mathtt it) - \exp (-\mathtt it) \right) /2\mathtt i,\) we can write each term in (25) as

So the sparse interpolation of (25) can be solved by considering the exponential sparse interpolation problem

where \(\beta _{2i-1} = \alpha _i\exp (-\mathtt i\psi _i)/(2\mathtt i), \beta _{2i} = -\alpha _i \exp (\mathtt i\psi _i)/(2\mathtt i)\) and \(\zeta _{2i-1} = \phi _{i}=-\zeta _{2i}\). The computation of the \(\phi _{i}\) through the \(\zeta _i\) remains separated from that of the \(\alpha _i\) and \(\psi _i\). The latter are obtained as

3.4 Hyperbolic functions

For \(g(\phi _{i}; t)=\cosh (\phi _{i} t)\) the computational scheme parallels that of the cosine and for \(g(\phi _{i}; t)=\sinh (\phi _{i} t)\) that of the sine. We merely write down the main issues.

When \(g(\phi _{i}; t)=\cosh (\phi _{i} t)\), let

and for fixed chosen \(\sigma \) and \(\tau \), let

Subsequently the definition of the structured matrix \({_\sigma ^\tau }C_n\) is used and in the factorization of Theorem 1, the cosine function is everywhere replaced by the hyperbolic cosine function.

Similarly, when \(g(\phi _{i}; t)=\sinh (\phi _{i} t)\), let

and for fixed chosen \(\sigma \) and \(\tau \), let

Now the definition of the structured matrix \({_\sigma ^\tau }B_n\) is used and in the factorization of Theorem 2 the occurrences of \(\cos \) are replaced by \(\cosh \) and those of \(\sin \) by \(\sinh \).

4 Polynomial functions

The orthogonal Chebyshev polynomials were among the first polynomial basis functions to be explored for use in combination with a scaling factor \(\sigma \), in the context of sparse interpolation in symbolic-numeric computing [11]. We elaborate the topic further for numerical purposes and for lacunary or supersparse interpolation, making use of the scale factor \(\sigma \) and the shift term \(\tau \). We also extend the approach to other polynomial bases and connect to generalized eigenvalue formulations.

4.1 Chebyshev 1st kind

Let \(g(m_i; t)=T_{m_i}(t)\) of degree \(m_i\), which is defined by

and consider the interpolation problem

The Chebyshev polynomials \(T_m(t)\) satisfy the recurrence relation

and the property

With \(0 \le m_1< m_2< \ldots< m_n <M\) we choose \(t_j=\cos (j\Delta )\) where \(0<\Delta \le \pi /M\). Note that the points \(t_j\) are allowed to occupy much more general positions than in [11]. If M is extremely large and n is small, in other words if the polynomial is very sparse, then it is a good idea to recover the actual \(m_i, i=1, \ldots , n\) in two tiers as we explain now. Let \(\gcd (\sigma ,\tau )=1\). We denote

and introduce for fixed \(\sigma \) and \(\tau \),

in order to separate the effect of \(\sigma \) and \(\tau \) in the evaluation. With the same matrices \({_\sigma ^\tau }H_n, {_\sigma ^\tau }T_n\) and \({_\sigma ^\tau }C_n\) as in the cosine subsection, now filled with the \(f_{\tau +j\sigma }\) from (27), the values \(T_{m_i}(\cos (\sigma \Delta ))\) are the generalized eigenvalues of the problem

From the values \(T_{m_i}(\cos (\sigma \Delta )) = \cos (m_i\sigma \Delta )\) the integer \(m_i\) cannot necessarily be retrieved unambiguously. We need to find out which of the elements in the set

is the one satisfying (26), where \({{\,\text{Arccos}\,}}(\cos (m_i\sigma \Delta ))/(\sigma \Delta ) \le M/\sigma \). Depending on the relationship between \(\sigma \) and M (relatively prime, generator, divisor, \(\ldots \)) the set \(S_i\) may contain one or more candidate integers for \(m_i\) evaluating to the same value \(\cos (m_i\sigma \Delta )\). To resolve the ambiguity we consider the Vandermonde-like system for the \(\alpha _i, i=1, \ldots , n\),

and the shifted problem

from which we compute the \(\alpha _i T_{m_i}(\cos (\tau \Delta )) = \alpha _i\cos (m_i\tau \Delta )\). Then

If the intersection of the set \(S_i\) with the set

is processed as in Section 3.1, then one can eventually identify the correct \(m_i\). An illustration thereof is given in Section 7.3.
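For small M the identification of a degree \(m_i\) from the two cosine values can be illustrated by brute force: list all integers in \([0, M)\) that reproduce \(\cos (m_i\sigma \Delta )\), do the same for \(\cos (m_i\tau \Delta )\), and intersect. This scan is an illustration only, not the procedure of Section 3.1; the function name `degree_candidates` and the values of M, \(\sigma \), \(\tau \) and the degree are hypothetical.

```python
import numpy as np

def degree_candidates(value, step, M, Delta, tol=1e-9):
    """All integers 0 <= m < M with cos(m * step * Delta) equal to value up to tol."""
    m = np.arange(M)
    hits = np.abs(np.cos(m * step * Delta) - value) < tol
    return set(m[hits].tolist())

M, Delta = 100, np.pi / 100
sigma, tau, m_true = 8, 3, 37                  # gcd(sigma, tau) = 1

S = degree_candidates(np.cos(m_true * sigma * Delta), sigma, M, Delta)
T = degree_candidates(np.cos(m_true * tau * Delta), tau, M, Delta)
common = S & T                                 # candidates consistent with both values
```

In this example the scale \(\sigma =8\) shares a factor with 2M, so S contains several aliases, while the shifted information removes all but the true degree.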

When replacing (28) by

we find that for \(v_i\) holds that \({^0_\sigma }C_n v_i\) is a scalar multiple of

This offers an alternative algorithm similar to the alternative in Section 3.1 on the cosine function.

4.2 Chebyshev 2nd, 3rd and 4th kind

While the Chebyshev polynomials \(T_{m_i}(t)\) of the first kind are intrinsically related to the cosine function, the Chebyshev polynomials \(U_{m_i}(t)\) of the second kind can be expressed using the sine function:

Therefore the sparse interpolation problem

can be solved along the same lines as in Section 4.1 but now using the samples

instead of the \(f_{\tau +j\sigma }\), for the sparse interpolation of

In a very similar way, the sparse interpolation problems

can be solved, using the Chebyshev polynomials \(V_{m_i}(t)\) and \(W_{m_i}(t)\) of the third and fourth kind respectively, given by

4.3 Spread polynomials

Let \(g(m_i;t)\) equal the degree \(m_i\) spread polynomial \(S_{m_i}(t)\) on [0, 1], which is defined by

The spread polynomials \(S_m(t)\) are related to the Chebyshev polynomials of the first kind by \(1-2S_m(t) = T_m(1-2t)\) and satisfy the recurrence relation

and the property

We consider the interpolation problem

where \(t_j=\sin ^2(j\Delta ), j=0, 1, 2, \ldots \) with \(0 < \Delta \le \pi /(2M)\) and \(0< m_1< \ldots< m_n < M.\) The \(S_{m_i}(t) = \sin ^2(m_i \arcsin \sqrt{t})\) satisfy

As in Section 4.1 we present a two-tier approach, which for \(\sigma \le 1\) reduces to one step and avoids the additional evaluations required for the second step. However, as indicated above, the two-tier scheme offers some additional possibilities. We denote
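The relations between spread polynomials, Chebyshev polynomials and the sine function quoted above can be checked numerically. The sketch below evaluates \(S_m(t)=\sin ^2(m\arcsin \sqrt{t})\) and compares \(1-2S_m(t)\) with \(T_m(1-2t)\); it also checks the three-term recurrence \(S_{m+1}(t) = 2(1-2t)S_m(t) - S_{m-1}(t) + 2t\), which is the standard recurrence for spread polynomials and is assumed here to be the one meant in the text. The function name `spread` is hypothetical.

```python
import numpy as np
from numpy.polynomial.chebyshev import Chebyshev

def spread(m, t):
    """Spread polynomial S_m(t) = sin^2(m * arcsin(sqrt(t))) for t in [0, 1]."""
    return np.sin(m * np.arcsin(np.sqrt(t))) ** 2

t = np.linspace(0.0, 1.0, 11)
m = 5

# Relation with the Chebyshev polynomial of the first kind.
lhs = 1.0 - 2.0 * spread(m, t)
rhs = Chebyshev.basis(m)(1.0 - 2.0 * t)

# Three-term recurrence evaluated pointwise.
rec = 2.0 * (1.0 - 2.0 * t) * spread(m, t) - spread(m - 1, t) + 2.0 * t
```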

With

we obtain

So the effect of the scale factor \(\sigma \) on the one hand and the shift term \(\tau \) on the other can again be separated in the evaluation \(F_{\tau +j\sigma }\).

We introduce the matrices

Theorem 3

The matrices \({^\tau _\sigma }K_n\) and \({_\sigma }J_n\) factorize as

Proof

The factorization is again verified at the level of the matrix entries, now making use of property (29), which is slightly more particular.\(\square \)

This factorization paves the way to obtaining the values \(S_{m_i}(\sin ^2 \sigma \Delta ) = \sin ^2(m_i\sigma \Delta )\) as the generalized eigenvalues of

Filling the matrices in this matrix pencil requires \(2n+1\) evaluations \(f(j\sigma \Delta )\) for \(j=1, \ldots , 2n+1\). From these generalized eigenvalues we cannot necessarily uniquely deduce the values for the indices \(m_i\). Instead, we can obtain for each \(i=1, \ldots , n\) the set of elements

characterizing all the possible values for \(m_i\) consistent with the sparse spread polynomial interpolation problem. Fortunately, with \(\gcd (\sigma , \tau )=1\), we can proceed as follows.

First, the coefficients \(\alpha _i\) are obtained from the linear system of interpolation conditions

The additional values \(F_{\tau +j\sigma }\) lead to a second system of interpolation conditions,

which delivers the coefficients \(\alpha _i S_{m_i}(\sin ^2 \tau \Delta )\). Dividing the two solution vectors of these linear systems componentwise delivers the values \(S_{m_i}(\sin ^2 \tau \Delta ), i=1, \ldots , n\) from which we obtain sets

that have the correct \(m_i\) in their intersection with the respective \(S_i\). The proof of this statement follows a completely similar course as that for the cosine building block \(g(\phi _{i};t)\), given in the Appendix.
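The intersection of the two candidate sets can be illustrated with a small Python sketch. It is not the authors' implementation: it assumes that exact values of \(\sin ^2(m\sigma \Delta )\) and \(\sin ^2(m\tau \Delta )\) are already available, it finds the candidates by brute force over \(0<m<M\), and the names `candidates`, `S`, `T` and the toy parameters (\(M=100\), \(\sigma =8\), \(\tau =5\), \(\Delta =\pi /(2M)\)) are chosen here for illustration only.

```python
import math

def candidates(value, step, M, delta, tol=1e-9):
    """All integers 0 < m < M with sin^2(m * step * delta) equal to value (up to tol)."""
    return [m for m in range(1, M)
            if abs(math.sin(m * step * delta) ** 2 - value) < tol]

# Toy setting (assumed for illustration): a single unknown index m_true.
M = 100
delta = math.pi / (2 * M)      # satisfies 0 < delta <= pi / (2M)
sigma, tau = 8, 5              # gcd(sigma, tau) = 1
m_true = 37

# Values that the eigenvalue step and the two linear systems would deliver.
s_sigma = math.sin(m_true * sigma * delta) ** 2
s_tau = math.sin(m_true * tau * delta) ** 2

S = candidates(s_sigma, sigma, M, delta)   # ambiguous on its own
T = candidates(s_tau, tau, M, delta)       # ambiguous on its own
common = sorted(set(S) & set(T))
print(S, T, common)
```

In this toy case each set on its own contains several indices, while their intersection isolates the index that generated the data. With noisy values the tolerance `tol` would have to be adapted.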

The factorization in Theorem 3 allows us to write down a spread polynomial analogue of (11).

Corollary 3

For the matrices \({_\sigma }J_n\) and \({^0_\sigma }K_n\) defined in (30), it holds that

To round off the discussion we mention that from Theorem 3 and the generalized eigenvalue problem

we also find that \({_\sigma }J_n v_i\) is a scalar multiple of

At the expense of some additional samples this eigenvalue and eigenvector combination offers again an alternative computational scheme.

5 Distribution functions

In [29, pp. 85–91] Prony’s method is generalized from \(g(\phi _{i}; t) = \exp (\phi _{i} t)\) with \(\phi _{i} \in \mathbb {C}\) to \(g(\phi _{i}; t) = \exp (-(t-\phi _{i})^2)\), to solve the interpolation problem

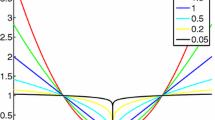

with given fixed Gaussian peak width w. Here we further generalize the algorithm to include the new scale and shift paradigm. The scheme is useful when modelling phenomena using Gaussian functions, as illustrated in Section 7.1. Without loss of generality we put \(2w^2=1\). The easy adaptation to include a fixed constant width factor in the formulas is left to the reader.

We again assume that (4) holds, but now for \(2\Delta \). With \(t_j= j\Delta \), the Gaussian \(g(\phi _{i}; t)=\exp (-(t-\phi _{i})^2)\) satisfies

Let us take a closer look at the evaluation of f(t) at \(t_{\tau +j\sigma } = (\tau +j\sigma )\Delta , j=0, 1, \ldots \) with \(\sigma \in \mathbb {N}\) and \(\tau \in \mathbb {Z}\):

With the auxiliary function

we obtain a perfect separation of \(\sigma \) and \(\tau \) and the problem can be solved using Prony’s method. With fixed chosen \(\sigma \) and \(\tau \), the value \(F(\sigma ,\tau ; t_j)\) is denoted by \(F_{\tau +j\sigma }\).

Theorem 4

The Hankel structured matrix

factorizes as

Proof

The proof is again by verification of the entry \(F_{\tau +(k+\ell )\sigma }\) in \({_\sigma ^\tau G_n}\) at position \((k+1, \ell +1)\) for \(k=0, \ldots , n-1\) and \(\ell =0, \ldots , n-1\). \(\square \)

Taking \(\tau =0\) and \(\tau =\sigma \), the values \(\exp (2\phi _{i}\sigma \Delta )\) are retrieved as a factor of the generalized eigenvalues of the problem

As we know from the exponential case, the \(\phi _{i}\) cannot necessarily be identified unambiguously from \(\exp (2\phi _{i}\sigma \Delta )\) when \(\sigma > 1\). In order to remedy that, we turn our attention to two structured linear systems. The first one, where \(\tau =0\),

delivers the \(\alpha _i \exp (-\phi _{i}^2)\) after rewriting it as

The coefficient matrix of this linear system is Vandermonde structured with entry \(\left( \exp (2 \phi _{i} \sigma \Delta ) \right) ^j\) at position \((j+1, i)\). The second linear system, where \(\tau >0\), delivers the \(\alpha _i \exp (-(\tau \Delta -\phi _{i})^2)\) through (31),

Here the coefficient matrix is structured identically as in the first linear system. From both solutions we obtain

From the values \(\exp (2\phi _{i}\sigma \Delta ), i =1, \ldots , n\) and \(\exp (2\phi _{i}\tau \Delta ), i=1, \ldots , n\) the parameters \(2\phi _{i}\) can be extracted as explained in Section 2, under the condition that \(\gcd (\sigma ,\tau )=1\).
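For the simplest setting \(\sigma =1\), \(\tau =0\) and \(n=2\), the procedure can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: the rescaling \(F_j = \exp (j^2\Delta ^2) f_j\) is the one used in Section 7.1, the \(2\times 2\) generalized eigenvalue problem is solved through its characteristic polynomial, two distinct real peak locations are assumed, and the function names and the synthetic test values are hypothetical.

```python
import math

def pencil_roots_2x2(F):
    """Generalized eigenvalues of ([F1 F2; F2 F3], [F0 F1; F1 F2]) for n = 2.

    They are the roots of det(H1 - lam * H0) = a*lam^2 + b*lam + c.
    Assumes two distinct real eigenvalues.
    """
    a = F[0] * F[2] - F[1] ** 2
    b = F[1] * F[2] - F[0] * F[3]
    c = F[1] * F[3] - F[2] ** 2
    root = math.sqrt(b * b - 4 * a * c)
    return sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])

def fit_two_gaussians(f, delta):
    """f[j] = f(j*delta), j = 0..3, for f(t) = sum_i alpha_i exp(-(t - phi_i)^2)."""
    # Rescaling turns the Gaussian sum into an exponential sum in j:
    # F_j = sum_i alpha_i exp(-phi_i^2) * exp(2 phi_i delta)^j.
    F = [math.exp((j * delta) ** 2) * f[j] for j in range(4)]
    lam1, lam2 = pencil_roots_2x2(F)
    phis = [math.log(lam1) / (2 * delta), math.log(lam2) / (2 * delta)]
    # 2x2 Vandermonde system for c_i = alpha_i exp(-phi_i^2).
    c2 = (F[1] - lam1 * F[0]) / (lam2 - lam1)
    c1 = F[0] - c2
    alphas = [c1 * math.exp(phis[0] ** 2), c2 * math.exp(phis[1] ** 2)]
    return phis, alphas

# Synthetic test signal (values chosen for illustration only).
true_phi, true_alpha, delta = [0.4, 1.2], [1.0, 0.5], 0.25
samples = [sum(a * math.exp(-(j * delta - p) ** 2)
               for a, p in zip(true_alpha, true_phi)) for j in range(4)]
phis, alphas = fit_two_gaussians(samples, delta)
print(phis, alphas)
```

For larger \(n\), or for \(\sigma >1\) with a shift \(\tau \), the closed-form quadratic would be replaced by a numerical generalized eigenvalue solver and the second linear system described above.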

The values \(\exp (2\phi _{i}\tau \Delta )\) and \(\exp (2\phi _{i}\sigma \Delta )\) can also be retrieved respectively from the generalized eigenvalues and the generalized eigenvectors of the alternative problem

with \({^0_\sigma }G_n v_i\) being a scalar multiple of

thereby requiring at least \(4n-2\) samples instead of \(3n\) samples. To conclude, the following analogue of (11) can be given.

Corollary 4

For the matrix \({^0_\sigma }G_n\) given in Theorem 4, it holds that

6 Some special functions

The sinc function is widely used in digital signal processing, especially in seismic data processing where it is a natural interpolant. There are several similarities between the narrowing sinc function and the Dirac delta function, among which the shape of the pulse. A large number of papers, among which [30], already discuss the determination of a so-called train of Dirac spikes and their amplitudes, which is essentially an exponential fitting problem. This is generalized here to the use of the sinc function, including a matrix pencil formulation.

The gamma function first arose in connection with the interpolation problem of finding a function that equals n! when the argument is a positive integer. Nowadays the function plays an important role in mathematics, physics and engineering. In [10] sparse interpolation or exponential analysis was already generalized to the Pochhammer basis \((t)_m = t (t+1) \cdots (t+m-1)\), also called rising factorial, which is related to the gamma function by \((t)_m = \Gamma (t+m)/\Gamma (t)\) for \(t \not \in \mathbb {Z}^- \cup \{0\}\). Here we generalize the method to the direct use of the gamma function and we present a matrix pencil formulation as well.

6.1 The sampling function \(\sin (x)/x\)

Let \(g(\phi _{i}; t)=\text {sinc}(\phi _{i} t)\) where \(\text {sinc}(t)\) is historically defined by \(\text {sinc}(t)=\sin t / t\). So our sparse interpolation problem is

with the same assumptions for \(\phi _{i}\) and \(\Delta \) as in Section 3. In order to solve this inverse problem of identifying the \(\phi _{i}\) and \(\alpha _i\) for \(i=1, \ldots , n\), we introduce

and apply the technique from Section 3.2 for the separate identification of the nonlinear parameters \(\phi _{i}\) and linear parameters \(\alpha _i/\phi _{i}\) in the sparse sine interpolation.
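The reduction to a sine problem can be illustrated for a single term. The following Python sketch covers only the case \(n=1\), where the identity \(\sin (2x) = 2\sin (x)\cos (x)\) replaces the matrix pencil of Section 3.2; it is an illustration under that assumption and not the general scheme. The function names are hypothetical, and the test values \(\phi =145.5\), \(\alpha =-10\), \(\Delta =\pi /300\) are borrowed from one term of the example in Section 7.2.

```python
import math

def sinc(t):
    """Unnormalized sinc, sin(t)/t, with the limit value 1 at t = 0."""
    return 1.0 if t == 0 else math.sin(t) / t

def one_term_sinc(f1, f2, delta):
    """Recover (phi, alpha) of f(t) = alpha * sinc(phi * t) from f(delta), f(2*delta).

    Assumes 0 < phi * delta < pi, so that arccos returns phi * delta itself.
    """
    # F_j = t_j f_j = (alpha / phi) * sin(phi * j * delta): a sparse sine problem.
    F1, F2 = delta * f1, 2 * delta * f2
    c = F2 / (2 * F1)                       # equals cos(phi * delta)
    phi = math.acos(c) / delta
    alpha = phi * F1 / math.sin(phi * delta)
    return phi, alpha

delta = math.pi / 300
phi_true, alpha_true = 145.5, -10.0
f1 = alpha_true * sinc(phi_true * delta)
f2 = alpha_true * sinc(phi_true * 2 * delta)
phi, alpha = one_term_sinc(f1, f2, delta)
print(phi, alpha)
```

The sketch makes visible that the linear coefficient of the transformed problem is \(\alpha /\phi \), so that \(\alpha \) is only available after \(\phi \) has been identified.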

6.2 The gamma function \(\Gamma (z)\)

With the new tools obtained so far, it is also possible to extend the theory to other functions such as the gamma function \(\Gamma (z)\). The function \(g(\phi _{i}; z) = \Gamma (z+\phi _{i})\) with \(z, \phi _{i} \in \mathbb {C}\), satisfies the relation

Our interest is in the sparse interpolation of

where the \(\alpha _i, \phi _{i}, i=1, \ldots , n\) are unknown. In the sample point \(z=\Delta \) we define

If by the choice of \(\Delta \), one or more of the \(\Delta +\phi _{i}, i=1, \ldots , n\) accidentally belong to the set of nonpositive integers, then one cannot sample f(z) at \(z=\Delta \). In that case a complex shift \(\tau \) can help out. It suffices to shift the arguments \(\Delta +\phi _{i}\) away from the negative real axis. We then redefine

or in other words

Using (32) we find

If \(\tau =0\) then \(F_{0,j} = F_j(\Delta )\). As soon as the samples at \(\tau +\Delta +j\) are all well-defined, we can start the algorithm for the computation of the unknown linear parameters \(\alpha _i\) and the nonlinear parameters \(\phi _{i}\). We further introduce

Theorem 5

The matrix \({^{\tau ,k}_{1}}\mathcal{H}_n\) is factored as

Proof

With the matrix factorization given, the proof consists of an easy verification of the matrix product with the matrix \({^{\tau ,k}_{1}}\mathcal{H}_n\).\(\square \)

Filling the matrices \({^{\tau ,0}_1}\mathcal{H}_n\) and \({^{\tau ,1}_1}\mathcal{H}_n\) requires the evaluation of f(z) at \(z=\tau +\Delta +j, j=0, \ldots , 2n-1\), which are points on a straight line parallel to the real axis in the complex plane.

The nonlinear parameters \(\phi _{i}\) are now obtained as the generalized eigenvalues of

where the \(v_i, i=1, \ldots , n\) are the right generalized eigenvectors. Afterwards the linear parameters \(\alpha _i\) are obtained from the linear system of interpolation conditions

by computing the coefficients \(\alpha _i\Gamma (\tau +\Delta +\phi _{i})\) and dividing those by the function values \(\Gamma (\tau +\Delta +\phi _{i})\) which are known because \(\Delta ,\tau \) and the \(\phi _{i}, i=1, \ldots , n\) are known.

From Theorem 5 we find that for the generalized eigenvectors of (33) it holds that \({^{\tau ,0}_{1}}\mathcal{H}_n v_i\) is a scalar multiple of

This allows one to validate the computation of the \(\phi _{i}, i=1, \ldots , n\) obtained as generalized eigenvalues, if desired.
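A small Python sketch for \(n=2\), \(\tau =0\) and real arguments may help to see why the \(\phi _{i}\) appear as eigenvalues. It rests on an assumption made here for illustration: the quantities \(F_j\) are built by repeatedly applying \(g(z) \mapsto g(z+1) - z\,g(z)\), which by \(\Gamma (z+1)=z\Gamma (z)\) multiplies each term \(\alpha _i\Gamma (z+\phi _{i})\) by \(\phi _{i}\). The exact definitions used in the text are not reproduced, and all names and test values are hypothetical.

```python
import math

def gamma_moments(f_vals, delta):
    """F_j = sum_i alpha_i phi_i^j Gamma(delta + phi_i) from f_vals[k] = f(delta + k).

    Each pass applies g(z) -> g(z+1) - z*g(z) on the grid z = delta + k.
    """
    row = list(f_vals)
    F = [row[0]]
    while len(row) > 1:
        row = [row[k + 1] - (delta + k) * row[k] for k in range(len(row) - 1)]
        F.append(row[0])
    return F

def pencil_roots_2x2(F):
    """Roots of det([F1 F2; F2 F3] - lam*[F0 F1; F1 F2]); assumes distinct real roots."""
    a = F[0] * F[2] - F[1] ** 2
    b = F[1] * F[2] - F[0] * F[3]
    c = F[1] * F[3] - F[2] ** 2
    root = math.sqrt(b * b - 4 * a * c)
    return sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])

# Synthetic data (illustrative values): f(z) = Gamma(z + 0.5) - 0.3 * Gamma(z + 2).
true_phi, true_alpha, delta = [0.5, 2.0], [1.0, -0.3], 1.0
f_vals = [sum(a * math.gamma(delta + k + p) for a, p in zip(true_alpha, true_phi))
          for k in range(4)]                      # 2n = 4 samples at delta + k

F = gamma_moments(f_vals, delta)
phi1, phi2 = pencil_roots_2x2(F)                  # eigenvalues are the phi_i
# Linear system F0 = c1 + c2, F1 = c1*phi1 + c2*phi2 with c_i = alpha_i*Gamma(delta+phi_i).
c2 = (F[1] - phi1 * F[0]) / (phi2 - phi1)
c1 = F[0] - c2
alphas = [c1 / math.gamma(delta + phi1), c2 / math.gamma(delta + phi2)]
phis = [phi1, phi2]
print(phis, alphas)
```

The final division by \(\Gamma (\Delta +\phi _{i})\) mirrors the last step described above. A complex shift \(\tau \) would require a gamma function for complex arguments, which the Python standard library does not provide.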

6.3 Pochhammer basis connection

Results on sparse polynomial interpolation using the Pochhammer basis \((t)_m\) where usually the interpolation points are positive integers and \(t \in \mathbb {R}^+\), were published in [10, 28], but no matrix pencil method for its solution was presented. This can now easily be obtained using a similar approach as for the gamma function. We consider more generally the interpolation of

For complex values z, the Pochhammer basis or rising factorial \((z)_m\) satisfies the recurrence relation

For real \(\Delta \), a complex shift \(\tau \) could shift the problem statement away from the negative real axis, as with the gamma function, but it is much simpler here to immediately consider \(\Delta \in \mathbb {C}\setminus \{0, -1, -2, \ldots \}\). Let

With the evaluations \(F_j\) we fill the Hankel matrix

This Hankel matrix decomposes as in Theorem 5, but now with

So the nonlinear parameters \(m_i, i=1, \ldots , n\) are obtained as the generalized eigenvalues of

where the \(v_i\) are the right generalized eigenvectors, for which holds that \({^0_1}H_n v_i\) is a multiple of the vector

From the latter the estimates of the \(m_i\) can be validated by computing the quotient of successive entries in the vector. The linear parameters \(\alpha _i, i=1, \ldots , n\) are obtained from the linear system
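The same idea can be sketched for the Pochhammer basis with \(n=2\). The construction below is an assumption made for illustration: since \((z+1)_m = (z)_m (z+m)/z\), the map \(g(z) \mapsto z\,(g(z+1)-g(z))\) multiplies \((z)_m\) by \(m\), so repeated application yields quantities \(F_j=\sum _i \alpha _i m_i^j (\Delta )_{m_i}\) that fill a Hankel pencil with eigenvalues \(m_i\). The definitions of the text are not reproduced, and the names and test values are hypothetical.

```python
import math

def pochhammer(z, m):
    """Rising factorial (z)_m = z (z+1) ... (z+m-1)."""
    out = 1.0
    for k in range(m):
        out *= z + k
    return out

def pochhammer_moments(f_vals, delta):
    """F_j = sum_i alpha_i m_i^j (delta)_{m_i} from f_vals[k] = f(delta + k)."""
    row = list(f_vals)
    F = [row[0]]
    while len(row) > 1:
        # g(z) -> z * (g(z+1) - g(z)) on the grid z = delta + k
        row = [(delta + k) * (row[k + 1] - row[k]) for k in range(len(row) - 1)]
        F.append(row[0])
    return F

def pencil_roots_2x2(F):
    """Roots of det([F1 F2; F2 F3] - lam*[F0 F1; F1 F2]); assumes distinct real roots."""
    a = F[0] * F[2] - F[1] ** 2
    b = F[1] * F[2] - F[0] * F[3]
    c = F[1] * F[3] - F[2] ** 2
    root = math.sqrt(b * b - 4 * a * c)
    return sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])

# Synthetic data (illustrative values): f(z) = 3 (z)_2 - 2 (z)_5.
true_m, true_alpha, delta = [2, 5], [3.0, -2.0], 0.5
f_vals = [sum(a * pochhammer(delta + k, m) for a, m in zip(true_alpha, true_m))
          for k in range(4)]

F = pochhammer_moments(f_vals, delta)
lam1, lam2 = pencil_roots_2x2(F)
m_est = [round(lam1), round(lam2)]                # degrees are integers
c2 = (F[1] - lam1 * F[0]) / (lam2 - lam1)         # c_i = alpha_i (delta)_{m_i}
c1 = F[0] - c2
alphas = [c1 / pochhammer(delta, m_est[0]), c2 / pochhammer(delta, m_est[1])]
print(m_est, alphas)
```

Rounding the computed eigenvalues to the nearest integer is only safe when the pencil is reasonably well conditioned, which holds for this small example.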

7 Numerical illustrations

We present some examples to illustrate the main novelties of the paper, including the multiscale facilities:

- an illustration of sparse interpolation by Gaussian distributions with fixed width but unknown peak locations;
- an illustration of the new generalized eigenvalue formulation for use with several trigonometric functions and the sinc;
- an illustration of the use of the scale and shift strategy for the supersparse interpolation of polynomials.

As stated earlier, our focus is on the mathematical generalizations and not on the numerical issues.

7.1 Fixed width sparse Gaussian fitting

Consider the expression

illustrated in Fig. 1, with the parameters \(\alpha _i, \phi _{i} \in \mathbb {R}\). From the plot it is not obvious that the signal has two peaks.

We first show the output of peakfit.m, a widely used [31] state-of-the-art Matlab peak fitting program, which calls an unconstrained nonlinear optimization algorithm to decompose an overlapping peak signal into its components [32, 33].

In peakfit.m the user needs to supply a guess for the number of peaks as input. If one has no idea of the number of peaks, the usual practice is to try different possibilities and compare the corresponding results. Of course, a good estimate of the number of peaks may lead to a good fit of the data. In addition to the peak position \(\phi _{i}\), its height \(\alpha _i\) and width w, the program also returns a goodness-of-fit (GOF).

The peakfit.m algorithm can work without assuming a fixed width, or the width can be passed as an argument. We do the latter as our algorithm also assumes a known fixed peak width w.

Let \(\Delta =0.1\) and let us collect 20 samples \(f_0, f_1, \ldots , f_{19}\). When the width is passed to peakfit.m together with a guess for the number of peaks, it returns for 1 peak the estimates

For 2 peaks it returns

Since the result is still not matching our benchmark input parameters, let us push further and supply 100 samples. Then for 1 peak peakfit.m returns

and for 2 peaks we get

From the latter experiment it is easy to formulate some desired features for a new algorithm:

- built-in guess of the number of peaks in the signal,
- and reliable output from a smaller number of samples.

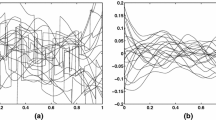

So let us investigate the technique developed in Section 5. Take \(\sigma =1\) and \(\tau =0\) since there is no periodic component in the Gaussian signal, which has only real parameters. With the 20 samples \(f_0, f_1, \ldots , f_{19}\) we define the samples \(F_j = \exp (j^2\Delta ^2) f_j\) and compose the Hankel matrix \({_1^0}G_{10}\). Its singular value decomposition, illustrated in Fig. 2, clearly reveals that the rank of the matrix is 2 and so we deduce that there are \(n=2\) peaks.

From the 4 samples \(F_0, F_1, F_2, F_3\) we obtain through Theorem 4

The new method clearly provides both an automatic guess of the number of peaks and a reliable estimate of the signal parameters, all from only 20 samples. What remains to be done is to investigate the numerical behaviour of the method on a large collection of different input signals, which falls outside the scope of this paper, where we provide the mathematical details.

7.2 Sparse sinc interpolation

Consider the function

plotted in Fig. 3, which we sample at \(t_j=j\pi /300\) for \(j=0, \ldots , 19\). The singular value decomposition of \({_1^0}B_{10}\) filled with the values \(t_j f_j\), of which the log-plot is shown in Fig. 4 (left), reveals that f(t) consists of 3 terms. Remember that the sparse sinc interpolation problem with linear coefficients \(\alpha _i\) and nonlinear parameters \(\phi _{i}\) transforms into a sparse sine interpolation problem with linear coefficients \(\alpha _i/\phi _{i}\) and samples \(j\Delta f_j\).

The condition numbers of the matrices \({^0_1}B_3\) and \({^1_1}B_3\) appearing in the generalized eigenvalue problem

equal respectively \(1.6 \times 10^7\) and \(7.5 \times 10^6\). To improve the conditioning of the structured matrix we choose \(\sigma =30, \tau =1\) and resample f(t) at \(t_j=30 j \pi /300 = j\pi /10\) for \(j=0, \ldots , 5\). The singular values of \({^0_\sigma }B_{10}\) are graphed in Fig. 4 (right) and the condition numbers of \({^0_\sigma }B_3\) and \({^1_\sigma }B_3\) improve to \(1.1\times 10^3\) and \(9.7\times 10^2\) respectively.

The generalized eigenvalues of the matrix pencil \({^1_\sigma }B_3 -\lambda {^0_\sigma }B_3\) are given by

and with these we fill the matrix \(W_3\) from Theorem 2. We solve (23) for the values \(\alpha _i\sin (\phi _{i}\sigma \Delta )/\phi _{i}, i=1, 2, 3\) and further compute for \(j=1, \ldots , n,\)

At this point the matrix \(U_3\) from Theorem 2 can be filled and the \(\cos (\phi _{i}\tau \Delta )\) can be computed from (24), with the right-hand side filled with the additional samples \(F(31\Delta ), F(61\Delta ), F(91\Delta )\), where

Since \(\tau =1\) we obtain the \(\phi _{i}\) directly from the values \(\cos (\phi _{i}\tau \Delta )\): \(\phi _1 = 145.5000000000\), \(\phi _2 = 149.0000000000\), \(\phi _3 = 147.3000000000\). The linear coefficients \(\alpha _i\) are given by

resulting in \(\alpha _1 = -9.999999999991, \alpha _2 = 19.99999999978, \alpha _3 = 4.000000000089.\)

7.3 Supersparse Chebyshev interpolation

We consider the polynomial

which is clearly supersparse when expressed in the Chebyshev basis. We sample f(t) at \(t_j=\cos (j\Delta )\) where \(\Delta =\pi /100000\) with \(M=50000\). A first challenge is to retrieve an indication of the sparsity n.

Take \(\sigma =1\) and collect 15 samples \(f_j, j=0, \ldots , 14\) to form the matrix \({^0_1}C_8\). From its singular value decomposition, computed in double precision arithmetic and illustrated on the log-plot in Fig. 5, one may erroneously conclude that f(t) has only 2 terms, a consequence of the fact that, relatively speaking, the degrees \(m_1=6\) and \(m_2=7\) are close to one another and appear as one cluster.

Imposing a 3-term model on f(t), instead of the erroneously suggested 2-term one, does not improve the computation, as the matrix \({^0_1}C_8\) is ill-conditioned with a condition number of the order of \(10^{10}\). So 3 generalized eigenvalues cannot be extracted reliably from the samples. For completeness we mention the unreliable double precision results, rounded to integer values: \(m_1 = 6, m_2 = 39999, m_3 = 25119\).

Now choose \(\sigma =3125\) and \(\tau =16\). The singular value decomposition of \({^0_{3125}}C_8\), shown on the log-plot in Fig. 6, reveals that f(t) indeed consists of 3 terms. Also, the conditioning of the involved matrix \({^0_{3125}}C_8\) has improved to the order of \(10^3\).

The distinct generalized eigenvalues extracted from the matrix pencil \({^1_{3125}}C_3 -\lambda {^0_{3125}}C_3\) are given by

From 3 shifted samples at the arguments \(t_{\tau +j\sigma }, j=0, 1, 2\) we obtain

Building the sets \(S_i\) and \(T_i\) for \(i=1, 2, 3\) as indicated in Section 4.1, and rounding the result to the nearest integer, unfortunately does not provide singletons for \(S_1\cap T_1, S_2\cap T_2, S_3\cap T_3\). We consequently need to consider a second shift, for which we choose \(\sigma +\tau =3141\). With this choice we only need to add the evaluation of \(f(\tau +n\sigma )\) to proceed and compute

Finally, intersecting each \(S_i\cap T_i, i=1, 2,3\) with the solutions provided by the second shift, delivers the correct \(m_1 =6, m_2 = 7, m_3 = 39999.\)

8 Conclusion

Let us summarize the sparse interpolation formulas obtained in the preceding sections in a table. For each parameterized univariate function \(g(\phi _{i}; t)\) we list in columns 1 to 5:

1. the minimal number of samples required to solve the sparse interpolation without running into ambiguity problems, meaning for the choice \(\sigma =1\),
2. the minimal number of samples required for the choice \(\sigma >1\) (if applicable), thereby involving a shift \(\tau \not = 0\) to restore uniqueness of the solution,
3. the linear matrix pencil (A, B) in the generalized eigenvalue formulation \(Av_i = \lambda _i Bv_i\) of the sparse interpolation problem involving the \(g(\phi _{i};t)\),
4. the generalized eigenvalues in terms of \(\tau \), as they can be read directly from the structured matrix factorizations presented in Theorems 1–5,
5. the information that can be computed from the associated generalized eigenvectors, as indicated at the end of each (sub)section.

| \(g(\phi _{i}; t)\) | # samples (\(\sigma =1\)) | # samples (\(\sigma >1\)) | pencil\(^*\) (A, B) | \(\lambda _i\) | \(Bv_i\) |
|---|---|---|---|---|---|
| \(\exp (\phi _{i}t)\) | 2n | 3n | \(\left( {_\sigma ^\tau }H_n, {_\sigma ^0}H_n \right) \) | \(\exp (\phi _{i}\tau \Delta )\) | \(\alpha _i, \exp (\phi _{i}\sigma \Delta )\) |
| \(\cos (\phi _{i} t)\) | 2n | 4n | \(\left( {_\sigma ^\tau }C_n, {_\sigma ^0}C_n \right) \) | \(\cos (\phi _{i}\tau \Delta )\) | \(\alpha _i, \cos (\phi _{i}\sigma \Delta )\) |
| \(\sin (\phi _{i} t)\) | 2n | \(4n+2\) | \(\left( {_\sigma ^\tau }B_n, {_\sigma ^0}B_n \right) \) | \(\cos (\phi _{i}\tau \Delta )\) | \(\alpha _i, \sin (\phi _{i}\sigma \Delta )\) |
| \(\cosh (\phi _{i} t)\) | 2n | 4n | \(\left( {_\sigma ^\tau }C_n^*, {_\sigma ^0}C_n^* \right) \) | \(\cosh (\phi _{i}\tau \Delta )\) | \(\alpha _i, \cosh (\phi _{i}\sigma \Delta )\) |
| \(\sinh (\phi _{i} t)\) | 2n | \(4n+2\) | \(\left( {_\sigma ^\tau }B_n^*, {_\sigma ^0}B_n^* \right) \) | \(\cosh (\phi _{i}\tau \Delta )\) | \(\alpha _i, \sinh (\phi _{i}\sigma \Delta )\) |
| \(T_{m_i}(t)\) | 2n | 4n | \(\left( {_\sigma ^\tau }C_n, {_\sigma ^0}C_n \right) \) | \(T_{m_i}(\cos \tau \Delta )\) | \(\alpha _i, T_{m_i}(\cos \sigma \Delta )\) |
| \(S_{m_i}(t)\) | \(2n+1\) | \(4n+2\) | \(\left( {_\sigma ^\tau }K_n, {_\sigma }J_n \right) \) | \(S_{m_i}(\sin ^2 \tau \Delta )\) | \(\alpha _i, S_{m_i}(\sin ^2\sigma \Delta )\) |
| \(\text {sinc}(\phi _{i} t)\) | 2n | \(4n+2\) | \(\left( {_\sigma ^\tau }B_n, {_\sigma ^0}B_n \right) \) | \(\cos (\phi _{i}\tau \Delta )\) | \(\alpha _i, \sin (\phi _{i}\sigma \Delta )\) |
| \(\Gamma (z+\phi _{i})\) | 2n | X | \(\left( {_\sigma ^{\tau ,1}}\mathcal{H}_n, {_\sigma ^{\tau ,0}}\mathcal{H}_n \right) \) | \(\phi _{i}\) | \(\alpha _i, \phi _{i}\) |
| \(\exp (-(t-\phi _{i})^2)\) | 2n | 3n | \(\left( {_\sigma ^\tau }G_n, {_\sigma ^0}G_n \right) \) | \(\exp (2\phi _{i}\tau \Delta )\) | \(\alpha _i, \exp (2\phi _{i}\sigma \Delta )\) |

Availability of data and materials

Code generating the data and running all examples will be available from the website http://cemath.org.

References

Kay, S.M., Marple, S.L.: Spectrum analysis - A modern perspective. Proceedings of the IEEE 69(11), 1380–1419 (1981). https://doi.org/10.1109/PROC.1981.12184

Plonka, G., Potts, D., Steidl, G., Tasche, M.: Numerical Fourier Analysis (Chapter 10: Prony Method for Reconstruction of Structured Functions). Birkhäuser, Cham (2018)

Blahut, R.E.: Transform techniques for error control codes. IBM Journal of Research and Development 23(4), 299–315 (1979)

Ben-Or, M., Tiwari, P.: A deterministic algorithm for sparse multivariate polynomial interpolation. In: STOC ’88: Proceedings of the Twentieth Annual ACM Symposium on Theory of Computing, pp. 301–309. ACM, New York, NY, USA (1988). https://doi.org/10.1145/62212.62241

Prony, R.: Essai expérimental et analytique sur les lois de la dilatabilité des fluides élastiques et sur celles de la force expansive de la vapeur de l’eau et de la vapeur de l’alkool, à différentes températures. J. Ec. Poly. 1(22), 24–76 (1795)

Hildebrand, F.B.: Introduction to Numerical Analysis, 2nd edn. Dover Publications, Inc., New York (1987)

Istratov, A.A., Vyvenko, O.F.: Exponential analysis in physical phenomena. Rev. Sci. Instrum. 70(2), 1233–1257 (1999)

Pereyra, V., Scherer, G.: Exponential data fitting. In: Pereyra, V., Scherer, G. (eds.) Exponential Data Fitting and Its Applications, pp. 15–41 (2010). Chap. 1

Hua, Y., Sarkar, T.K.: Matrix pencil method for estimating parameters of exponentially damped/undamped sinusoids in noise. IEEE Trans. Acoust. Speech Signal Process. 38, 814–824 (1990). https://doi.org/10.1109/29.56027

Lakshman, Y.N., Saunders, B.D.: Sparse polynomial interpolation in nonstandard bases. SIAM J. Comput. 24(2), 387–397 (1995). https://doi.org/10.1137/S0097539792237784

Giesbrecht, M., Labahn, G., Lee, W.: Symbolic-numeric sparse polynomial interpolation in Chebyshev basis and trigonometric interpolation. In: Proc. Workshop on Computer Algebra in Scientific Computation (CASC), pp. 195–204 (2004)

Imamoglu, E., Kaltofen, E.L., Yang, Z.: Sparse polynomial interpolation with arbitrary orthogonal polynomial bases. In: ISSAC’18—Proceedings of the 2018 ACM International Symposium on Symbolic and Algebraic Computation, pp. 223–230. ACM, New York, NY, USA (2018). https://doi.org/10.1145/3208976.3208999

Potts, D., Tasche, M.: Sparse polynomial interpolation in Chebyshev bases. Linear Algebra Appl. 441, 61–87 (2014). https://doi.org/10.1016/j.laa.2013.02.006

Peter, T., Plonka, G., Roşca, D.: Representation of sparse Legendre expansions. J. Symbolic Comput. 50, 159–169 (2013). https://doi.org/10.1016/j.jsc.2012.06.002

Peter, T., Plonka, G.: A generalized Prony method for reconstruction of sparse sums of eigenfunctions of linear operators. Inverse Problems 29(2), 025001–21 (2013). https://doi.org/10.1088/0266-5611/29/2/025001

Plonka, G., Stampfer, K., Keller, I.: Reconstruction of stationary and non-stationary signals by the generalized Prony method. Anal. Appl. (Singap.) 17(2), 179–210 (2019). https://doi.org/10.1142/S0219530518500240

Stampfer, K., Plonka, G.: The generalized operator based Prony method. Constr. Approx. (2020). https://doi.org/10.1007/s00365-020-09501-6

Kunis, S., Römer, T., Ohe, U.: Learning algebraic decompositions using Prony structures. Adv. in Appl. Math. 118, 102044–43 (2020). https://doi.org/10.1016/j.aam.2020.102044

Cuyt, A., Lee, W.-s.: How to get high resolution results from sparse and coarsely sampled data. Appl. Comput. Harmon. Anal. 48, 1066–1087 (2020). https://doi.org/10.1016/j.acha.2018.10.001. (Published online October 11, 2018. Toolbox and experiments downloadable.)

Nyquist, H.: Certain topics in telegraph transmission theory. Trans. Am. Inst. Electr. Eng. 47(2), 617–644 (1928). https://doi.org/10.1109/T-AIEE.1928.5055024

Shannon, C.E.: Communication in the presence of noise. Proc. IRE 37, 10–21 (1949)

Plonka, G., Wannenwetsch, K., Cuyt, A., Lee, W.-s.: Deterministic sparse FFT for \(m\)-sparse vectors. Numer. Algorithms 78(1), 133–159 (2018). https://doi.org/10.1007/s11075-017-0370-5

Katz, R., Diab, N., Batenkov, D.: Decimated Prony’s method for stable super-resolution. CoRR abs/2210.13329 (2022). https://doi.org/10.48550/arXiv.2210.13329

Cuyt, A., Tsai, M., Verhoye, M., Lee, W.-s.: Faint and clustered components in exponential analysis. Appl. Math. Comput. 327, 93–103 (2018). (Toolbox and experiments downloadable.)

Briani, M., Cuyt, A., Knaepkens, F., Lee, W.: VEXPA: Validated EXPonential Analysis through regular subsampling. Signal Processing 177, 107722 (2020). https://doi.org/10.1016/j.sigpro.2020.107722. (Published online July 17, 2020. Toolbox and experiments downloadable.)

Chu, D., Golub, G.H.: On a generalized eigenvalue problem for nonsquare pencils. SIAM J. Matrix Anal. Appl. 28(3), 770–787 (2006). https://doi.org/10.1137/050628258

Henrici, P.: Applied and Computational Complex Analysis I. John Wiley & Sons, New York (1974)

Kaltofen, E., Lee, W.-s.: Early termination in sparse interpolation algorithms. J. Symbolic Comput. 36(3-4), 365–400 (2003). https://doi.org/10.1016/S0747-7171(03)00088-9. International Symposium on Symbolic and Algebraic Computation (ISSAC 2002) (Lille)

Peter, T.: Generalized Prony method. PhD thesis, Georg-August-Universität Göttingen (2013)

Batenkov, D.: Stability and super-resolution of generalized spike recovery. Appl. Comput. Harmon. Anal. 45(2) (2018)

O’Haver, T.: Publications that cite the use of my book, programs and documentation (2019). https://terpconnect.umd.edu/toh/spectrum/papers.pdf

O’Haver, T.: peakfit.m. MATLAB Central File Exchange. https://uk.mathworks.com/matlabcentral/fileexchange/23611-peakfit-m (Version 9.0, January 2018)

O’Haver, T.: A Pragmatic Introduction to Signal Processing with Applications in Scientific Measurement. Independently published (2020). ISBN-13: 979-8611266687

Funding

Annie Cuyt and Wen-shin Lee received funding from the European Union’s Horizon 2020 Research and Innovation Staff Exchange program under the MSCA grant agreement No. 101008231 (EXPOWER).

Wen-shin Lee received funding from the Carnegie Trust (Project “Advancing exponential analysis: high resolution information from sparse and regularly sample data”), grant reference RIG009853.

Author information

Contributions

The publication is the result of joint work, to which both authors contributed equal efforts.

Ethics declarations

Ethics approval

Not applicable

Conflict of interest

The authors declare no competing interests.

Appendix

To reconstruct a function of the form

from equidistantly collected samples \(f_j\) at \(t=j\Delta \), in other words to recover the unknown parameters \(\phi _{i}\) and coefficients \(\alpha _i\), the sampling step \(\Delta \) needs to satisfy the Shannon-Nyquist constraint

Since we do not distinguish \(\phi _{i}\) from \(-\phi _{i}\) in this case, we can simply drop the sign information in \(\phi _{i}\) from here on and write \(\Delta = \pi /R\) with

The challenge we consider now is to retrieve the parameters \(\phi _{i}\) and coefficients \(\alpha _i\) from sub-Nyquist rate collected samples \(f_{j\sigma }\) at \(t=j\sigma \Delta \) with \(\sigma >1\) and the shifted evaluations \(f_{j\sigma +\tau }\) at \(t=(j\sigma +\tau )\Delta \) with \(\gcd (\sigma ,\tau )=1\). In Section 3.1 we describe how for \(i=1, \ldots , n\) the values

are obtained. The aim is to extract the correct value for \(\phi _{i}\) from the knowledge of the evaluations \(C_{i,\sigma }\) and \(C_{i,\tau }\), particularly when \((\sigma \Delta ) \max _{i=1, \ldots , n}\phi _{i} \ge \pi \) and the parameter \(\phi _{i}\) cannot be obtained uniquely from \(C_{i,\sigma }\) alone. We now discuss the unique identification of this parameter \(\phi _{i}\) and in doing so we further drop the index i. Let us denote

where \({{\,\text{Arccos}\,}}(\cdot ) \in [0,\pi ]\) indicates the principal value of the inverse cosine function. Knowing that \(0 \le A_\sigma , A_\tau \le \pi \) and that \(0 \le \phi \sigma \Delta < \sigma \pi \), we find that all possible positive arguments \(\phi \sigma \Delta \) of \(C_\sigma \) are in \(\mathcal{A}_{\sigma ,1} \cup \mathcal{A}_{\sigma ,2}\) with

where \({{\,\text{sgn}\,}}(A_\sigma )=+1\) for \(0<A_\sigma \le \pi \) and \({{\,\text{sgn}\,}}(0)=0\). The set \(\mathcal{A}_{\sigma ,1} \cup \mathcal{A}_{\sigma ,2}\) may even contain some candidate arguments of \(C_\sigma \) that do not satisfy the bounds, but this does not create a problem in the identification of the correct \(\phi <R\). Along the same lines, sets \(\mathcal{A}_{\tau ,1}\) and \(\mathcal{A}_{\tau ,2}\) can be constructed.

We further denote

Then the possible solutions for \(\phi \) to \(C_\sigma =\cos (\phi \sigma \Delta )\) are in \(\Phi _{\sigma ,1} \cup \Phi _{\sigma ,2}\) where

Analogously, the possible solutions to \(C_\tau =\cos (\phi \tau \Delta )\) are in \(\Phi _{\tau ,1} \cup \Phi _{\tau ,2}\) where

One statement is obvious: whatever the choice for \(\sigma \) and \(\tau \), both \(\Phi _{\sigma ,1} \cup \Phi _{\sigma ,2}\) and \(\Phi _{\tau ,1} \cup \Phi _{\tau ,2}\) contain the unknown value for \(\phi \) which produced \(C_\sigma \) and \(C_\tau \). What remains open is the question whether \(\left( \Phi _{\sigma ,1}\cup \Phi _{\sigma ,2} \right) \cap \left( \Phi _{\tau ,1}\cup \Phi _{\tau ,2} \right) \) is a singleton. And in case it is not, we want to find an algorithm that can identify the correct \(\phi \).
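The candidate sets and their intersection can be explored numerically with a short Python sketch. It is an illustration only: it assumes \(\Delta =\pi /R\) and \(0\le \phi <R\) as above, enumerates all \(\phi \) with \(\phi \sigma \Delta = \pm A_\sigma + 2\pi \ell \) directly rather than through the sets \(\Phi _{\sigma ,1}\) and \(\Phi _{\sigma ,2}\) separately, and uses hypothetical names and toy values (\(R=100\), \(\sigma =7\), \(\tau =3\), \(\phi =37.3\)).

```python
import math

def cos_candidates(C, step, R):
    """All phi in [0, R) with cos(phi * step * pi / R) = C, in exact arithmetic."""
    A = math.acos(max(-1.0, min(1.0, C)))    # principal value in [0, pi]
    cands = []
    for ell in range(step + 1):
        for arg in (2 * math.pi * ell + A, 2 * math.pi * ell - A):
            phi = arg * R / (step * math.pi)
            if 0 <= phi < R:
                cands.append(phi)
    return cands

def common(a, b, tol=1e-9):
    """Values of a that also occur in b, up to tol."""
    return sorted({round(x, 9) for x in a for y in b if abs(x - y) < tol})

# Toy setting (assumed for illustration).
R, sigma, tau = 100, 7, 3                    # gcd(sigma, tau) = 1
delta = math.pi / R
phi_true = 37.3

C_sigma = math.cos(phi_true * sigma * delta)
C_tau = math.cos(phi_true * tau * delta)

from_sigma = cos_candidates(C_sigma, sigma, R)   # 7 candidates here
from_tau = cos_candidates(C_tau, tau, R)         # 3 candidates here
shared = common(from_sigma, from_tau)
print(shared)
```

In this instance the intersection is a singleton. Whether that is always the case is exactly the question addressed in the remainder of this appendix.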

When either \(\phi _\sigma =0\) or \(\phi _\sigma = R/\sigma \) the sets \(\Phi _{\sigma ,1}\) and \(\Phi _{\sigma ,2}\) coincide. And similarly for \(\phi _\tau \). On the other hand, if these sets do not coincide, they are disjoint. So the true value for the unknown parameter \(\phi \) can belong to any of the intersections \(\Phi _{\sigma ,1} \cap \Phi _{\tau ,1}, \Phi _{\sigma ,1} \cap \Phi _{\tau ,2}, \Phi _{\sigma ,2} \cap \Phi _{\tau ,1}, \Phi _{\sigma ,2} \cap \Phi _{\tau ,2}\). A sequence of lemmas will lead to the conclusion that the four intersections do not deliver more than two distinct elements. Thereafter we indicate how to identify the only true value for the unknown \(\phi \).

Lemma 1

\(i,j \in \{1, 2\}: \Phi _{\sigma ,i} \cap \Phi _{\tau ,j} \not = \emptyset \Longrightarrow \#\left( \Phi _{\sigma ,i} \cap \Phi _{\tau ,j} \right) = 1.\)

Proof

Without loss of generality we prove the statement for \(i=1=j\), by contraposition. The proof of the other cases is entirely similar. Suppose that \(\Phi _{\sigma ,1} \cap \Phi _{\tau ,1}\) is nonempty and contains at least two elements. We then find that

This leads to

which is a contradiction because \(|\ell _1-\ell _2|< \sigma , |k_1-k_2|< \tau \) and \(\gcd (\sigma ,\tau )=1\). \(\square \)

When the sets \(\Phi _{\sigma ,1}\) and \(\Phi _{\sigma ,2}\) coincide and the sets \(\Phi _{\tau ,1}\) and \(\Phi _{\tau ,2}\) do as well, then that unique intersection is \(\phi =\phi _\sigma =\phi _\tau =0\). Because \(\gcd (\sigma ,\tau )=1\), other common elements coming from either \(\phi _\sigma =2R/\sigma \) or \(\phi _\tau =2R/\tau \) cannot exist.

We now continue with the situation where either the sets in (36) and (37) or the sets in (38) and (39) do not coincide, so that there are always at least 3 distinct sets in the running. Without loss of generality, we assume that a common element belongs to \(\Phi _{\sigma ,1} \cap \Phi _{\tau ,1}\) and we build our reasoning from there.

Lemma 2

\(\Phi _{\sigma ,1} \cap \Phi _{\tau ,1} \not = \emptyset \Longrightarrow \Phi _{\sigma ,2} \cap \Phi _{\tau ,2} = \emptyset \).

Proof

We know that either \(\Phi _{\sigma ,1} \cap \Phi _{\sigma ,2} = \emptyset \) or \(\Phi _{\tau ,1} \cap \Phi _{\tau ,2} = \emptyset \) and possibly both, so that \(\Phi _{\sigma ,1} \cap \Phi _{\tau ,1} \not = \Phi _{\sigma ,2} \cap \Phi _{\tau ,2}\). Again by contraposition, we suppose that \(\Phi _{\sigma ,2} \cap \Phi _{\tau ,2} \not = \emptyset \) and so

From this we obtain

which can only be true when \(1+\ell _1+\ell _2 = \sigma \) and \(1+k_1+k_2=\tau \). Then

which contradicts \(0 \le \phi _1, \phi _2 <R\). \(\square \)
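A sketch of this argument, assuming (our assumption about the form of (36)–(39)) that \(\Phi _{\sigma ,1}\) consists of the values \(\phi _\sigma + 2R\ell /\sigma \) and \(\Phi _{\sigma ,2}\) of the values \(2R/\sigma - \phi _\sigma + 2R\ell /\sigma \) with \(0 \le \ell < \sigma \), and likewise for \(\tau \) with index \(k\): take \(\phi _1 \in \Phi _{\sigma ,1} \cap \Phi _{\tau ,1}\) with indices \((\ell _1,k_1)\) and \(\phi _2 \in \Phi _{\sigma ,2} \cap \Phi _{\tau ,2}\) with indices \((\ell _2,k_2)\). Adding the two representations cancels \(\phi _\sigma \) and \(\phi _\tau \):

\[ \phi _1+\phi _2 = \frac{2R\,(1+\ell _1+\ell _2)}{\sigma } = \frac{2R\,(1+k_1+k_2)}{\tau } \quad \Longrightarrow \quad \tau \,(1+\ell _1+\ell _2) = \sigma \,(1+k_1+k_2). \]

Because \(\gcd (\sigma ,\tau )=1\) and \(1 \le 1+\ell _1+\ell _2 \le 2\sigma -1\), the only possibility is \(1+\ell _1+\ell _2=\sigma \), and then \(\phi _1+\phi _2=2R\), which is impossible when both values are smaller than \(R\).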

Assuming \(\Phi _{\sigma ,1}\cap \Phi _{\tau ,1} \not = \emptyset \), Lemma 1 shows that this intersection is a singleton and Lemma 2 shows that \(\Phi _{\sigma ,2}\cap \Phi _{\tau ,2} = \emptyset \). So far, however, we know nothing about the other two intersections \(\Phi _{\sigma ,1}\cap \Phi _{\tau ,2}\) and \(\Phi _{\sigma ,2}\cap \Phi _{\tau ,1}\).

Lemma 3

\(\Phi _{\sigma ,1}\cap \Phi _{\tau ,1} \not = \emptyset \Longrightarrow \lnot \left( \Phi _{\sigma ,2} \cap \Phi _{\tau ,1} \not = \emptyset \wedge \Phi _{\sigma ,1} \cap \Phi _{\tau ,2} \not = \emptyset \right) \).

Proof

Arguing by contradiction, we assume that

This leads to

which again implies \(1+\ell _1+\ell _2=\sigma \) and \(1+k_1+k_2=\tau \). Since

this contradicts \(0 \le \phi _1, \phi _2 <R\). \(\square \)

From Lemma 2 on, we have built our sequence of proofs, without loss of generality, on the fact that \(\Phi _{\sigma , 1} \cap \Phi _{\tau ,1} \not = \emptyset \) and the fact that \(\Phi _{\sigma ,1}\) and \(\Phi _{\sigma ,2}\) on the one hand and \(\Phi _{\tau ,1}\) and \(\Phi _{\tau ,2}\) on the other do not coincide at the same time. Finally, from Lemma 3 we know that (\(\Phi _{\sigma ,1}\cap \Phi _{\tau ,1} \not = \emptyset \) and \(\Phi _{\sigma ,2}\cap \Phi _{\tau ,1} \not = \emptyset \)) and (\(\Phi _{\sigma ,1}\cap \Phi _{\tau ,1} \not = \emptyset \) and \(\Phi _{\sigma ,1}\cap \Phi _{\tau ,2} \not = \emptyset \)) cannot occur concurrently, but either one of these cases remains possible.

In general, when at least 3 of the 4 sets \(\Phi _{\sigma , 1}, \Phi _{\sigma , 2}, \Phi _{\tau ,1}, \Phi _{\tau ,2}\) are distinct, then at most 2 of the 4 intersections

are nonempty, with each of the nonempty intersections being a singleton. Further down we illustrate the actual existence of a case, where two intersections are nonempty and consequently the true value of the unknown \(\phi \) cannot be identified from the evaluations \(\cos (\phi \sigma \Delta )\) and \(\cos (\phi \tau \Delta )\) with \(\gcd (\sigma ,\tau )=1\).

In this case we need to collect a third value \(C_\rho :=\cos (\phi \rho \Delta )\) with \(\gcd (\sigma ,\rho )=1\) and \(\gcd (\tau ,\rho )=1\). With \(A_\rho \) and \(\phi _\rho \) defined as in (34) and (35), and \(\Phi _{\rho ,1}\) and \(\Phi _{\rho ,2}\) defined as in (36) and (37), we know, as before, that \(\Phi _{\rho ,1}\cup \Phi _{\rho ,2}\) contains the correct value for \(\phi \). We also know, because of the remark formulated after the proof of Lemma 1, that at least 5 of the 6 involved sets \({\Phi }_{\sigma ,1}, {\Phi }_{\sigma ,2}, {\Phi }_{\tau ,1}, {\Phi }_{\tau ,2}, {\Phi }_{\rho ,1}, {\Phi }_{\rho ,2}\) are distinct unless \(\phi =0\).

We now inspect

where \(i_1, j_1, i_2, j_2\) index the subsets that produce the nonempty intersections of the relatively prime pair \(\sigma \) and \(\tau \), with either \(i_1 \not = i_2\) or \(j_1 \not = j_2\) but not both. We have built our sequence of proofs, without loss of generality, on the fact that \(i_1=1, j_1=1\) and have found that it is then possible that \(i_2=2, j_2=1\). We now continue the proofs from that case and inspect the 4 new intersections in (40).

Lemma 4

\(\Phi _{\rho ,1} \cap \Phi _{\rho ,2} =\emptyset \wedge \Phi _{\sigma ,1}\cap \Phi _{\tau ,1}\cap \Phi _{\rho ,1} \not = \emptyset \Longrightarrow \Phi _{\sigma ,1}\cap \Phi _{\tau ,1}\cap \Phi _{\rho ,2} = \emptyset .\)

Proof

From Lemma 1, we know that \(\Phi _{\sigma ,1} \cap \Phi _{\tau ,1}\) is a singleton. If that unique element also belongs to \(\Phi _{\rho ,1}\) then it cannot belong to \(\Phi _{\sigma ,1}\cap \Phi _{\tau ,1}\cap \Phi _{\rho ,2}\) when \(\Phi _{\rho ,1}\) and \(\Phi _{\rho ,2}\) are disjoint. \(\square \)

Lemma 5

\(\Phi _{\sigma ,1} \cap \Phi _{\sigma ,2} =\emptyset \wedge \Phi _{\sigma ,1}\cap \Phi _{\tau ,1}\cap \Phi _{\rho ,1} \not = \emptyset \Longrightarrow \Phi _{\sigma ,2}\cap \Phi _{\tau ,1}\cap \Phi _{\rho ,1} = \emptyset \).

Proof

From Lemma 1, we know that \(\Phi _{\tau ,1} \cap \Phi _{\rho ,1}\) is a singleton. If that unique element also belongs to \(\Phi _{\sigma ,1}\) then it cannot belong to \(\Phi _{\sigma ,2}\cap \Phi _{\tau ,1}\cap \Phi _{\rho ,1}\) when \(\Phi _{\sigma ,1}\) and \(\Phi _{\sigma ,2}\) are disjoint. \(\square \)

As a consequence of the Lemmas 4 and 5, the unique true \(\phi \) is identified in

Lemma 6

\(\#\left[ \left( \Phi _{\sigma ,1}\cap \Phi _{\tau ,1}\cap \Phi _{\rho ,1} \right) \cup \left( \Phi _{\sigma ,2}\cap \Phi _{\tau ,1}\cap \Phi _{\rho ,2} \right) \right] = 1\).

Proof

We know that either \(\phi =0\) is the unique element in the intersections or at least 2 of the intersections \(\Phi _{\sigma ,1} \cap \Phi _{\sigma ,2}, \Phi _{\tau ,1} \cap \Phi _{\tau ,2}\), \(\Phi _{\rho ,1} \cap \Phi _{\rho ,2}\) are empty. So either \(\Phi _{\sigma ,1} \cap \Phi _{\sigma ,2} =\emptyset \) or \(\Phi _{\rho ,1} \cap \Phi _{\rho ,2} = \emptyset \). When applying Lemma 2 to the pair \((\sigma ,\rho )\) instead of \((\sigma ,\tau )\) the set \(\Phi _{\sigma ,2}\cap \Phi _{\rho ,2}=\emptyset \) if the set \(\Phi _{\sigma ,1}\cap \Phi _{\rho ,1} \not = \emptyset \). Therefore two distinct elements in respectively \(\Phi _{\sigma ,1}\cap \Phi _{\rho ,1}\) and \(\Phi _{\sigma ,2}\cap \Phi _{\rho ,2}\) cannot coexist and solve \(C_\tau =\cos (\phi \tau \Delta )\). \(\square \)

So the unknown parameter \(\phi \) is identified uniquely from at most 3 values \(C_\sigma , C_\tau , C_\rho \) with \(\sigma , \tau , \rho \) pairwise coprime. An easy choice for \(\rho \) is \(\rho =\sigma +\tau \), as this minimizes the number of additional samples as explained in Section 3, and \(\gcd (\sigma ,\sigma +\tau )=1=\gcd (\tau ,\sigma +\tau )\) whenever \(\gcd (\sigma ,\tau )=1\).

As promised, we show an example where \(\Phi _{\sigma ,1}\cap \Phi _{\tau ,1} \not = \emptyset \) and \(\Phi _{\sigma ,2}\cap \Phi _{\tau ,1} \not = \emptyset \). Consider \(\phi =70800/1547 < 1000=R\) with \(\Delta =\pi /R\). Choose \(\sigma =299\) and \(\tau =357\) with \(\gcd (\sigma ,\tau )=1\). With

we have \(\phi \in \Phi _{\sigma ,1}\). With

we find \(\phi \in \Phi _{\tau ,1}\). Unfortunately, since \(\phi _\tau = 2R/\sigma -\phi _\sigma \) we also have \(\phi _\tau \in \Phi _{\sigma ,2}\cap \Phi _{\tau ,1} \not = \emptyset \).

As a last remark, we add that even replacing (13) by the stricter constraint

does not guarantee that each \(\phi \) can be identified from only \(C_\sigma \) and \(C_\tau \). We illustrate this with a counterexample. Let \(\phi =3300/133 < 50=R\) with \(\Delta = \pi /(2R)\). With \(\sigma =21\) and \(\tau =19\) we find

This leads to \(\Phi _{\sigma ,1}\cap \Phi _{\tau ,1} = \{500/133\}\) and \(\Phi _{\sigma ,2}\cap \Phi _{\tau ,1} = \{3300/133\}\).
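The counterexample can be checked in exact rational arithmetic. The following is a minimal sketch, not the authors' code: the helper `candidates` is a hypothetical name, it assumes \(\Delta =\pi /(2R)\) as in this example, and it uses the elementary fact that \(\cos (\pi a)=\cos (\pi b)\) exactly when \(a \equiv \pm b \pmod 2\). It lists every \(\phi ' \in [0,R)\) that reproduces the same cosine value as \(\phi \) at a given scale, and then intersects the lists for \(\sigma \), \(\tau \) and the assumed third scale \(\rho =\sigma +\tau \).

```python
from fractions import Fraction


def candidates(phi, s, R):
    """All phi' in [0, R) with cos(phi' * s * Delta) == cos(phi * s * Delta),
    assuming Delta = pi / (2 * R). Arguments are handled in units of pi."""
    a = (phi * s / (2 * R)) % 2              # phi * s * Delta / pi, reduced mod 2
    found = set()
    for sign in (1, -1):                     # cos(pi*a) == cos(pi*b) iff b = +-a mod 2
        for m in range(0, s + 1):
            cand = Fraction(2 * R, s) * (sign * a + 2 * m)
            if 0 <= cand < R:
                found.add(cand)
    return found


# Values taken from the counterexample in the text.
R = 50
phi = Fraction(3300, 133)
sigma, tau = 21, 19
rho = sigma + tau                            # third scale, coprime to both sigma and tau

# Candidates consistent with both C_sigma and C_tau.
common = candidates(phi, sigma, R) & candidates(phi, tau, R)
# Candidates that also match C_rho.
resolved = common & candidates(phi, rho, R)

print(sorted(common))
print(sorted(resolved))
```

Under these assumptions the two scales \(\sigma =21\) and \(\tau =19\) leave both \(500/133\) and \(3300/133\) as admissible values, in agreement with the two intersections above, while the additional value \(C_\rho \) with \(\rho =40\) removes \(500/133\) and leaves only the true \(\phi \).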

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cuyt, A., Lee, Ws. Multiscale matrix pencils for separable reconstruction problems. Numer Algor 95, 31–72 (2024). https://doi.org/10.1007/s11075-023-01564-3


Keywords

- Prony problems

- Separable problems

- Parametric methods

- Sparse interpolation

- Dilation

- Translation

- Structured matrix

- Generalized eigenvalue problem