Abstract

In a recent paper, a conformable fractional Newton-type method was proposed for solving nonlinear equations. This method has a lower computational cost than other fractional iterative methods. Moreover, its theoretical order of convergence holds in practice, and it shows better numerical behaviour than previously proposed fractional Newton-type methods, even compared with the classical Newton-Raphson method. In this work, we design a generalization of this method for solving nonlinear systems by using a new conformable fractional Jacobian matrix and a suitable conformable Taylor power series, and we compare it with the classical Newton’s scheme. The concepts and results needed to design this method are stated. A convergence analysis is carried out and a quadratic order of convergence is obtained, as in the classical Newton’s method. Numerical tests are performed, and the Approximated Computational Order of Convergence (ACOC) supports the theory. The proposed scheme also shows good stability properties, observed by means of convergence planes.


1 Introduction

Fractional calculus is a generalization of classical calculus, and many of its properties are preserved. Many real-life problems can be described by using mathematical tools from fractional calculus, because of the higher degree of freedom they offer compared with classical calculus tools [1, 2].

In order to find the solution \(\bar {x}\in \mathbb {R}\) of a nonlinear equation f(x) = 0, where \(f:I\subseteq \mathbb {R}\longrightarrow \mathbb {R}\) is a continuous function on \(I\subseteq \mathbb {R}\), several fractional Newton-type methods have been proposed in recent years, based on the Riemann-Liouville, Caputo and conformable fractional derivatives (see [3,4,5]). Our goal is to design a conformable vectorial Newton-type method and to compare it with the classical vectorial Newton method in terms of convergence analysis and numerical stability.

Let us first introduce some preliminary concepts related to the scalar conformable derivative. The left conformable fractional derivative of a function \(f:[a,\infty )\longrightarrow \mathbb {R}\), starting from a, of order α ∈ (0,1], with \(\alpha ,a,x\in \mathbb {R}\) and a < x, is defined as (see [11])
\[(T_{\alpha }^{a}f)(x)=\lim_{\epsilon \rightarrow 0}\frac{f\left(x+\epsilon (x-a)^{1-\alpha }\right)-f(x)}{\epsilon }.\tag{1}\]

If this limit exists, f is said to be α-differentiable. If f is differentiable, then \((T_{\alpha }^{a}f)(x)=(x-a)^{1-\alpha }f^{\prime }(x)\). If f is α-differentiable in (a,b) for some \(b\in \mathbb {R}\), then \((T_{\alpha }^{a}f)(a)= \lim_{x\rightarrow a^+}(T_{\alpha }^{a}f)(x)\). It is also easy to see that \(T_{\alpha }^{a}C=0\) for any constant C.
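A quick numerical check of the relation \((T_{\alpha }^{a}f)(x)=(x-a)^{1-\alpha }f^{\prime }(x)\) may help fix ideas. This is an illustrative sketch only: the function names are hypothetical, the test function sin, the order α = 0.6, the start a = −10 and the step ε = 1e-7 are arbitrary choices, and the limit in (1) is approximated by a forward difference.

```python
import math


def conformable_derivative_limit(f, x, alpha, a, eps=1e-7):
    """Forward-difference approximation of the limit defining (T_alpha^a f)(x)."""
    return (f(x + eps * (x - a) ** (1 - alpha)) - f(x)) / eps


def conformable_derivative_closed(df, x, alpha, a):
    """Closed form for a differentiable f: (x - a)^(1 - alpha) * f'(x)."""
    return (x - a) ** (1 - alpha) * df(x)


alpha, a, x = 0.6, -10.0, 1.3   # hypothetical order, starting point and evaluation point
approx = conformable_derivative_limit(math.sin, x, alpha, a)
exact = conformable_derivative_closed(math.cos, x, alpha, a)
print(approx, exact)            # the two values agree to several digits
```

With α = 1 the factor \((x-a)^{0}\) equals 1 and the classical derivative is recovered.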

The conformable fractional derivative is arguably the most natural definition of a fractional derivative, since it preserves the classical product, quotient and chain rules, and it involves a low computational cost, because it does not require the evaluation of special functions such as the Gamma or Mittag-Leffler functions.

Recently, a fractional Newton-type method based on the conformable derivative was designed in [5] for solving nonlinear equations, with the following iterative expression:
\[x_{k+1}=a+\sqrt[\alpha ]{(x_{k}-a)^{\alpha }-\alpha \frac{f(x_{k})}{(T_{\alpha }^{a}f)(x_{k})}},\quad k=0,1,2,\dots ,\tag{2}\]

where \((T_{\alpha }^{a}f)(x_{k})\) is the left conformable fractional derivative of order α ∈ (0,1], starting at a, with \(a<x_{k}\) for all k. When α = 1, the classical Newton-Raphson method is obtained.
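The scalar iteration can be sketched in a few lines of Python. This is a minimal sketch, not the authors' MATLAB code: it assumes that (2) has the form \(x_{k+1}=a+\left[(x_{k}-a)^{\alpha }-\alpha f(x_{k})/(T_{\alpha }^{a}f)(x_{k})\right]^{1/\alpha }\) (the scalar counterpart of the vectorial scheme derived in Section 3), evaluates \((T_{\alpha }^{a}f)(x_{k})\) through the closed form \((x_{k}-a)^{1-\alpha }f^{\prime }(x_{k})\), and borrows the stopping rule of Section 4 (tolerance \(10^{-8}\), at most 500 iterations). The name `conformable_newton` and the test equation x² − 2 = 0 are hypothetical.

```python
def conformable_newton(f, df, x0, alpha, a, tol=1e-8, max_iter=500):
    """Scalar conformable Newton-type iteration (sketch; requires a < x_k)."""
    x = x0
    for k in range(1, max_iter + 1):
        # Conformable derivative of a differentiable f, started at a
        t = (x - a) ** (1 - alpha) * df(x)
        # Assumed update rule: a + [(x - a)^alpha - alpha * f / T f]^(1/alpha).
        # If the bracket is negative and 1/alpha is not an integer,
        # Python's ** returns a complex number.
        x_new = a + ((x - a) ** alpha - alpha * f(x) / t) ** (1 / alpha)
        if abs(x_new - x) < tol or abs(f(x_new)) < tol:
            return x_new, k
        x = x_new
    return x, max_iter


root, iters = conformable_newton(lambda x: x * x - 2, lambda x: 2 * x,
                                 x0=1.0, alpha=0.8, a=-10.0)
print(root, iters)   # root is close to sqrt(2)
```

With `alpha=1.0` the update reduces to x − f(x)/f′(x), that is, the Newton-Raphson step.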

In [5], the quadratic convergence of this method was established by using a suitable conformable Taylor series (see [6]), as stated in the next result.

Theorem 1

([5]) Let \(f:I\subseteq \mathbb {R}\longrightarrow \mathbb {R}\) be a continuous function on the interval \(I\subseteq \mathbb {R}\) containing the zero \(\bar {x}\) of f(x). Let \((T_{\alpha }^{a}f)(x)\) be the conformable fractional derivative of f(x) starting from a, with order α, for any α ∈ (0,1]. Let us suppose that \((T_{\alpha }^{a}f)(x)\) is continuous and nonzero at \(\bar {x}\). If an initial approximation x0 is sufficiently close to \(\bar {x}\), then the local order of convergence of the conformable fractional Newton-type method
\[x_{k+1}=a+\sqrt[\alpha ]{(x_{k}-a)^{\alpha }-\alpha \frac{f(x_{k})}{(T_{\alpha }^{a}f)(x_{k})}}\]

is at least 2, where 0 < α ≤ 1, and the error equation is

where \(C_{j}=\frac {1}{j!\alpha ^{j-1}}\frac {(T_{\alpha }^{a}f)^{(j)}(\bar {x})}{(T_{\alpha }^{a}f)(\bar {x})}\) for \(j=2,3,4,\dots \)

Remark 1

It can be shown that, by using the conformable product and chain rules stated in [11], the asymptotic constant of the error equation (3) can be expressed as

where \(c_{j}=\frac {1}{j!}\frac {f^{(j)}(\bar {x})}{f^{\prime }(\bar {x})}\), \(j=2,3,4,\dots ,\) are the classical asymptotic error constants (only \(c_{2}\) is involved here). It can also be proven that the error equation of the iterative scheme (2), obtained by using the classical Taylor series, is:

So, (4) and (5) show that the error equation obtained with both Taylor series (the classical one and the one provided in [6]) is the same.

Remark 2

As predicted by Traub, since the conformable Newton-type method proposed in [5] and the classical one have the same order of convergence, the asymptotic error constant of the conformable method equals that of the classical method plus an additional term, as described in [7] (Theorem 2-8).

Setting α = 1 in either error equation, (3) or (5), yields the error equation of the classical Newton’s method. In this work, we use both Taylor series to carry out the convergence analysis, now for a vector valued function.

As seen in Theorem 1, the method proposed in [5] can only be used to solve scalar nonlinear problems. In order to design a conformable vectorial Newton’s method to find the solution \(\bar {x}\in \mathbb {R}^{n}\) of a nonlinear system \(F(x)=\hat {0}\), with coordinate functions \(f_{1},\dots ,f_{n}\), where \(F:D\subseteq \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) is a sufficiently Fréchet-differentiable function in an open convex set D, we first state the existing concepts and results that will be needed.

First, for the convergence analysis of iterative methods for nonlinear systems using the classical Taylor series, we use the following notation from [8, 9]:

Definition 1

Let \(F:D\subseteq \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) be sufficiently Fréchet-differentiable in D. The qth derivative of F at \(u\in \mathbb {R}^{n}\), \(q\in \mathbb {N}\), q ≥ 1, is the q-linear function \(F^{(q)}(u):\mathbb {R}^{n}\times \cdots \times \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) such that \(F^{(q)}(u)(v_{1},\dots ,v_{q})\in \mathbb {R}^{n}\). It can be observed that:

1. \(F^{(q)}(u)(v_{1},\dots ,v_{q-1},\cdot )\in {\mathscr{L}}(\mathbb {R}^{n})\), where \({\mathscr{L}}(\mathbb {R}^{n})\) is the space of linear mappings from \(\mathbb {R}^{n}\) to \(\mathbb {R}^{n}\).
2. \(F^{(q)}(u)(v_{\sigma _{1}},\dots ,v_{\sigma _{q}})=F^{(q)}(u)(v_{1},\dots ,v_{q})\), for any permutation \(\sigma \) of \(\{1,\dots ,q\}\).

From the properties above, we can use the following notation:

1. \(F^{(q)}(u)(v_{1},\dots ,v_{q})=F^{(q)}(u)v_{1}{\cdots } v_{q}\).
2. \(F^{(q)}(u)v^{q-1}F^{(p)}(u)v^{p}=F^{(q)}(u)F^{(p)}(u)v^{q+p-1}\).

In [10], we can find a definition of conformable partial derivative as shown next:

Definition 2

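Let f be a function of n variables \(x_{1},\dots ,x_{n}\). The conformable partial derivative of f of order α ∈ (0,1] in xi > a = 0 is defined as:
\[\frac {\partial ^{\alpha }}{\partial x_{i}^{\alpha }}f(x_{1},\dots ,x_{n})=\lim_{\epsilon \rightarrow 0}\frac {f(x_{1},\dots ,x_{i-1},x_{i}+\epsilon x_{i}^{1-\alpha },x_{i+1},\dots ,x_{n})-f(x_{1},\dots ,x_{n})}{\epsilon }.\tag{6}\]

The conformable Jacobian matrix is also defined in [10] as follows:

Definition 3

Let f, g be functions of 2 variables x and y whose partial derivatives exist and are continuous, where x > a1 and y > a2, with \(a=(a_{1},a_{2})=(0,0)=\hat {0}\). Then, the conformable Jacobian matrix is given by:
\[\begin{pmatrix}\frac {\partial ^{\alpha }f}{\partial x^{\alpha }} & \frac {\partial ^{\alpha }f}{\partial y^{\alpha }}\\[4pt] \frac {\partial ^{\alpha }g}{\partial x^{\alpha }} & \frac {\partial ^{\alpha }g}{\partial y^{\alpha }}\end{pmatrix}.\]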

This can be directly extended to higher dimensions and, as it will be seen in the next section, a can be considered not null.

Another necessary concept, the Hadamard product, can be found in [12]:

Definition 4

Let A = (aij)m×n and B = (bij)m×n be m × n matrices. The Hadamard product is defined by A ⊙ B := (aijbij)m×n.

Remark 3

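An analogous concept to the Hadamard product is the Hadamard power: for a positive integer r, \(A^{\odot r}=\underbrace {A\odot A\odot \cdots \odot A}_{r\text { times}}\), and, more generally, for \(r\in \mathbb {R}\), \(A^{\odot r}:=(a_{ij}^{r})_{m\times n}\) is taken element-wise.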

In the next section, the new concepts and results needed to design a vectorial conformable Newton-type method are stated.

The design and convergence analysis of the proposed method are presented in Section 3, the numerical tests and the numerical stability are discussed in Section 4, and the conclusions are given in Section 5.

2 New concepts and results

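Since \(x_{i}\in (0,\infty )\) in (6), we can define the conformable partial derivative for \(x_{i}\in (a,\infty )\) as follows:

Definition 5

Let f be a function of n variables \(x_{1},\dots ,x_{n}\). The conformable partial derivative of f of order 0 < α ≤ 1 in \(x_{i}\in (a,\infty )\) is defined as
\[\frac {\partial _{a}^{\alpha }}{\partial x_{i}^{\alpha }}f(x_{1},\dots ,x_{n})=\lim_{\epsilon \rightarrow 0}\frac {f(x_{1},\dots ,x_{i-1},x_{i}+\epsilon (x_{i}-a)^{1-\alpha },x_{i+1},\dots ,x_{n})-f(x_{1},\dots ,x_{n})}{\epsilon }.\]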

In the case xi = a, \(\frac {\partial _{a}^{\alpha }}{\partial x_{i}^{\alpha }}f(x_{1},\dots ,a,\dots ,x_{n})=\underset {x_{i}\rightarrow a^{+}}{\lim }\frac {\partial _{a}^{\alpha }}{\partial x_{i}^{\alpha }}f(x_{1},\dots ,x_{i},\dots ,x_{n})\).

This derivative is linear, and it satisfies the product, quotient and chain rules, as does the conformable derivative given in [11]. In the next result, a relation between the classical partial derivative and the conformable partial derivative is stated:

Theorem 2

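Let f be a differentiable function of n variables \(x_{1},\dots ,x_{n}\), with xi > a. Then,
\[\frac {\partial _{a}^{\alpha }}{\partial x_{i}^{\alpha }}f(x_{1},\dots ,x_{n})=(x_{i}-a)^{1-\alpha }\frac {\partial }{\partial x_{i}}f(x_{1},\dots ,x_{n}).\]

Proof

Let \(h=\epsilon (x_{i}-a)^{1-\alpha }\), so that \(\epsilon =h(x_{i}-a)^{\alpha -1}\) and h → 0 as 𝜖 → 0. Then
\[\frac {\partial _{a}^{\alpha }}{\partial x_{i}^{\alpha }}f(x_{1},\dots ,x_{n})=\lim_{h\rightarrow 0}\frac {f(x_{1},\dots ,x_{i}+h,\dots ,x_{n})-f(x_{1},\dots ,x_{n})}{h(x_{i}-a)^{\alpha -1}}=(x_{i}-a)^{1-\alpha }\frac {\partial }{\partial x_{i}}f(x_{1},\dots ,x_{n}).\]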

□

We can also define the conformable Jacobian matrix for \(x_{1}\in (a_{1},\infty )\) and \(x_{2}\in (a_{2},\infty )\), where x = (x1,x2) and a = (a1,a2):

Definition 6

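Let f and g be the coordinate functions of a vector valued function \(F:\mathbb {R}^{2}\longrightarrow \mathbb {R}^{2}\) in variables x1 > a1 and x2 > a2, where x = (x1,x2) and a = (a1,a2), such that their respective partial derivatives exist and are continuous. Then, the conformable Jacobian matrix is given by
\[F_{a}^{\alpha (1)}(x)=\begin{pmatrix}\frac {\partial _{a_{1}}^{\alpha }}{\partial x_{1}^{\alpha }}f(x) & \frac {\partial _{a_{2}}^{\alpha }}{\partial x_{2}^{\alpha }}f(x)\\[4pt] \frac {\partial _{a_{1}}^{\alpha }}{\partial x_{1}^{\alpha }}g(x) & \frac {\partial _{a_{2}}^{\alpha }}{\partial x_{2}^{\alpha }}g(x)\end{pmatrix}.\]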

This can be directly extended to higher dimensions.
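Theorem 2 makes the conformable Jacobian cheap to form: column j of the classical Jacobian is multiplied by \((x_{j}-a_{j})^{1-\alpha }\). The following sketch, with hypothetical helper names, builds it that way for F1 from Section 4 (with a = (−10, −10) as in the numerical tests, and an arbitrary α = 0.75 and evaluation point) and compares it with a forward-difference approximation of the limit in Definition 5, taken coordinate by coordinate with start a_j.

```python
def conformable_jacobian(jac, x, a, alpha):
    """F_a^{alpha(1)}(x) via Theorem 2: scale column j by (x_j - a_j)^(1 - alpha)."""
    J = jac(x)
    n = len(x)
    return [[J[i][j] * (x[j] - a[j]) ** (1 - alpha) for j in range(n)] for i in range(n)]


def conformable_jacobian_fd(F, x, a, alpha, eps=1e-7):
    """Forward-difference approximation of the limits in Definition 5, column by column."""
    n = len(x)
    Fx = F(x)
    cols = []
    for j in range(n):
        shifted = list(x)
        shifted[j] += eps * (x[j] - a[j]) ** (1 - alpha)
        Fs = F(shifted)
        cols.append([(Fs[i] - Fx[i]) / eps for i in range(n)])
    # cols[j][i] is entry (i, j); return the matrix row by row
    return [[cols[j][i] for j in range(n)] for i in range(n)]


def F1(v):
    x, y = v
    return [x**2 - 2*x - y + 0.5, x**2 + 4*y**2 - 4]


def J1(v):
    x, y = v
    return [[2*x - 2, -1.0], [2*x, 8*y]]


a, alpha, point = [-10.0, -10.0], 0.75, [0.5, 1.2]   # alpha and point chosen arbitrarily
exact = conformable_jacobian(J1, point, a, alpha)
approx = conformable_jacobian_fd(F1, point, a, alpha)
print(exact)
print(approx)
```

Forming the matrix by column scaling avoids any limit computation; the finite-difference version is only there to check Theorem 2.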

To analyze the convergence of iterative methods for nonlinear systems by using a conformable Taylor series, we use the following notation, analogous to Definition 1:

Definition 7

Let \(F:D\subseteq \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) be sufficiently α-differentiable in D. The qth conformable derivative of F at \(u\in \mathbb {R}^{n}\) is the q-linear function \(F_{a}^{\alpha (q)}(u):\mathbb {R}^{n}\times \cdots \times \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) such that \(F_{a}^{\alpha (q)}(u)(v_{1},\dots ,v_{q})\in \mathbb {R}^{n}\). It can be observed that:

1. \(F_{a}^{\alpha (q)}(u)(v_{1},\dots ,v_{q-1},\cdot )\in {\mathscr{L}}(\mathbb {R}^{n})\), where \({\mathscr{L}}(\mathbb {R}^{n})\) is the space of linear mappings from \(\mathbb {R}^{n}\) to \(\mathbb {R}^{n}\).
2. \(F_{a}^{\alpha (q)}(u)(v_{\sigma _{1}},\dots ,v_{\sigma _{q}})=F_{a}^{\alpha (q)}(u)(v_{1},\dots ,v_{q})\), for any permutation \(\sigma \) of \(\{1,\dots ,q\}\).

From the properties above, we can use the following notation:

1. \(F_{a}^{\alpha (q)}(u)(v_{1},\dots ,v_{q})=F_{a}^{\alpha (q)}(u)v_{1}{\cdots } v_{q}\).
2. \(F_{a}^{\alpha (q)}(u)v^{q-1}F_{a}^{\alpha (p)}(u)v^{p}=F_{a}^{\alpha (q)}(u)F_{a}^{\alpha (p)}(u)v^{q+p-1}\).

To define a conformable Taylor series for a vector valued function, we proceed as in Theorem 4.1 of [11].

Theorem 3

Let us suppose that \(F:\mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) is an infinitely α-differentiable vector valued function, for some α ∈ (0,1], around a point \(t_{0}\in \mathbb {R}^{n}\). Then, F has the conformable Taylor power series
\[F(t)=\sum_{k=0}^{\infty }\frac {F_{t_{0}}^{\alpha (k)}(t_{0})}{k!\,\alpha ^{k}}(t-t_{0})^{k\alpha },\tag{11}\]

where \(F_{t_{0}}^{\alpha (k)}(t_{0})\) denotes the conformable derivative, starting at t0, applied k times and evaluated at t0.

Proof

Let \(F(t)=K_{0}+K_{1}(t-t_{0})^{\alpha }+K_{2}(t-t_{0})^{2\alpha }+K_{3}(t-t_{0})^{3\alpha }+\cdots \). Then, \(F(t_{0})=K_{0}\).

Applying the conformable derivative once to F and evaluating at t0, we obtain \(F_{t_{0}}^{\alpha (1)}(t_{0})=K_{1}\alpha \), so \(K_{1}=\frac {F_{t_{0}}^{\alpha (1)}(t_{0})}{\alpha }\).

Applying the conformable derivative twice to F and evaluating at t0, we obtain \(F_{t_{0}}^{\alpha (2)}(t_{0})=2K_{2}\alpha ^{2}\), so \(K_{2}=\frac {F_{t_{0}}^{\alpha (2)}(t_{0})}{2\alpha ^{2}}\). Proceeding by induction, we have
\[K_{k}=\frac {F_{t_{0}}^{\alpha (k)}(t_{0})}{k!\,\alpha ^{k}},\quad k=0,1,2,\dots \]

So, (11) is obtained. □

Thus, F(t) in (11) may be written as

As can be seen, the conformable derivatives start at t0, which is also the point where they are evaluated. This must be avoided when defining a conformable Newton-type iterative method (note, for instance, that for a continuously differentiable F and α < 1, a conformable derivative evaluated at its own starting point vanishes, since the factor \((x-t_{0})^{1-\alpha }\) tends to zero).

Proceeding as in [6] (Theorem 4.1), we can obtain a new Taylor series by using Theorem 3, where the conformable derivatives start at some point \(a=(a_{1},\dots ,a_{n})\in \mathbb {R}^{n}\) different from the point \(b=(b_{1},\dots ,b_{n})\in \mathbb {R}^{n}\) where they are evaluated:

Theorem 4

Let \(F:\mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) be an infinitely α-differentiable vector valued function, for some α ∈ (0,1], around a point \(b=(b_{1},\dots ,b_{n})\in \mathbb {R}^{n}\) with \(b_{i}\in (a_{i},\infty )\), \(\forall i=1,\dots ,n\), where \(a=(a_{1},\dots ,a_{n})\in \mathbb {R}^{n}\). Then, F has the conformable Taylor power series
\[F(t)=\sum_{k=0}^{\infty }\frac {F_{a}^{\alpha (k)}(b)}{k!\,\alpha ^{k}}\Delta ^{k},\tag{13}\]

where \(\Delta =H^{\odot \alpha }-L^{\odot \alpha }\), H = t − a, L = b − a, and ⊙ denotes the Hadamard power.

Proof

Setting t0 = a in (11),

evaluating (14) at b,

and solving for F(a), we get

Applying the conformable derivative, starting at a, once and twice to F, we obtain, respectively,

and

Replacing the derivatives evaluated at a in (14) by their expressions in terms of derivatives evaluated at b, given in (16), (17) and (18), we obtain (13), which can be written as

and the proof is finished. □
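For intuition on Theorem 4, the following sketch gives an alternative argument (not the proof above). It assumes that F is smooth, that every b_i and t_i lies in (a_i, ∞), and that \(F_{a}^{\alpha (k)}\) denotes the k-fold application of the conformable partial derivatives of Definition 5. The componentwise change of variables \(s_{i}=(t_{i}-a_{i})^{\alpha }\) turns each conformable partial derivative into α times an ordinary partial derivative (Theorem 2 plus the chain rule), so (13) becomes the classical Taylor series in s:

```latex
% Substitution s_i = (t_i - a_i)^{\alpha}, i.e. t = a + s^{\odot 1/\alpha}; G(s) := F(a + s^{\odot 1/\alpha})
\[
\frac{\partial_{a_i}^{\alpha}}{\partial t_i^{\alpha}}F
=(t_i-a_i)^{1-\alpha}\frac{\partial F}{\partial t_i}
=(t_i-a_i)^{1-\alpha}\,\alpha\,(t_i-a_i)^{\alpha-1}\frac{\partial G}{\partial s_i}
=\alpha\,\frac{\partial G}{\partial s_i}
\quad\Longrightarrow\quad
F_a^{\alpha(k)}(b)=\alpha^{k}\,G^{(k)}(s_b),\qquad s_b=(b-a)^{\odot\alpha}.
\]
% Classical Taylor expansion of G around s_b, with s_t = (t-a)^{\odot\alpha}:
\[
F(t)=G(s_t)=\sum_{k=0}^{\infty}\frac{G^{(k)}(s_b)}{k!}\,(s_t-s_b)^{k}
=\sum_{k=0}^{\infty}\frac{F_a^{\alpha(k)}(b)}{k!\,\alpha^{k}}\,\Delta^{k},
\qquad \Delta=(t-a)^{\odot\alpha}-(b-a)^{\odot\alpha}.
\]
```

Under the same substitution, and assuming (2) reads \(x_{k+1}=a+\left[(x_{k}-a)^{\alpha }-\alpha f(x_{k})/(T_{\alpha }^{a}f)(x_{k})\right]^{1/\alpha }\), the scalar scheme becomes \(s_{k+1}=s_{k}-g(s_{k})/g^{\prime }(s_{k})\) with \(g(s)=f(a+s^{1/\alpha })\), that is, Newton's method in the variable s. This is one way to see why quadratic convergence is retained.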

Remark 4

With these expressions, and assuming that the conformable Jacobian matrix \(F_{a}^{\alpha (1)}(\bar {x})\) is nonsingular, we can write the Taylor power series expansion of F around the solution \(\bar {x}\) as follows:
\[F\left (x^{(k)}\right )=\frac {1}{\alpha }F_{a}^{\alpha (1)}(\bar {x})\left [\Delta +C_{2}\Delta ^{2}+C_{3}\Delta ^{3}+\cdots \right ],\]

where \(\Delta =H^{\odot \alpha }-L^{\odot \alpha }\), \(H=x^{(k)}-a\), \(L=\bar {x}-a\), \(e^{(k)}=x^{(k)}-\bar {x}\), ⊙ denotes the Hadamard power, C1 = I and \(C_{q}=\frac {1}{q!\alpha ^{q-1}}\left [F_{a}^{\alpha (1)}(\bar {x})\right ]^{-1}F_{a}^{\alpha (q)}(\bar {x})\), q ≥ 2.

Remark 5

By using Definition 7, Theorem 2 and the Hadamard power, we obtain

and

respectively, for a vector valued function F, where \(F^{\prime }(x)\) is the classical Jacobian matrix. Note that, in (21), \(x\rightarrow a^{+}\) means that \(x_{i}\rightarrow a_{i}^{+}\), \(\forall i=1,\dots ,n\), where \(x=(x_{1},\dots ,x_{n})\in \mathbb {R}^{n}\) and \(a=(a_{1},\dots ,a_{n})\in \mathbb {R}^{n}\).

Moreover, in order to carry out the convergence analysis of our main proposal, another result is needed.

Theorem 5

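Let \(x,y\in \mathbb {R}^{n}\), \(r\in \mathbb {R}\), and let ⊙ denote the Hadamard power/product. Newton’s binomial theorem for vectors and fractional powers reads
\[(x+y)^{\odot r}=\sum_{k=0}^{\infty }\binom {r}{k}x^{\odot (r-k)}\odot y^{\odot k},\tag{22}\]
which holds componentwise whenever \(|y_{i}|<|x_{i}|\), \(i=1,\dots ,n\), where the generalized binomial coefficient is (see [13])
\[\binom {r}{k}=\frac {r(r-1)\cdots (r-k+1)}{k!}.\tag{23}\]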

Proof

Since Hadamard power/product is an element-wise power/product, the proof is analogous to classical one. □
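Since everything in Theorem 5 is element-wise, it can be checked numerically one component at a time. The sketch below assumes the series form (22) with the generalized binomial coefficient (23), truncates the series after 60 terms, and picks vectors with \(|y_{i}|<|x_{i}|\) so that it converges; all names and values are illustrative.

```python
def gen_binom(r, k):
    """Generalized binomial coefficient r(r-1)...(r-k+1)/k! for real r."""
    c = 1.0
    for j in range(k):
        c *= (r - j) / (j + 1)
    return c


def hadamard_pow(v, r):
    """Element-wise (Hadamard) power of a vector."""
    return [vi ** r for vi in v]


def binomial_series(x, y, r, terms=60):
    """Truncated sum over k of binom(r,k) x^(r-k) * y^k, computed element-wise."""
    total = [0.0] * len(x)
    for k in range(terms):
        c = gen_binom(r, k)
        xk = hadamard_pow(x, r - k)
        yk = hadamard_pow(y, k)
        total = [t + c * u * w for t, u, w in zip(total, xk, yk)]
    return total


x, y, r = [3.0, 2.5], [0.4, -0.7], 0.6           # |y_i| < |x_i| in every component
lhs = hadamard_pow([xi + yi for xi, yi in zip(x, y)], r)
rhs = binomial_series(x, y, r)
print(lhs, rhs)
```

In the convergence analysis the expansion is applied with a small error vector playing the role of y, which is where the condition \(|y_{i}|<|x_{i}|\) is naturally satisfied.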

In the next section, we deduce the conformable Newton-type method for solving nonlinear systems.

3 Design and convergence analysis

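As in [5], let us consider the approximation of F given by the Taylor power series (13) truncated after the first-order term, with the derivatives evaluated at the solution \(\bar {x}\):
\[F(x)\approx F(\bar {x})+\frac {1}{\alpha }F_{a}^{\alpha (1)}(\bar {x})\Delta .\tag{24}\]

As \(F(\bar {x})=\hat {0}\) and \(\Delta =H^{\odot \alpha }-L^{\odot \alpha }\), with H = x − a and \(L=\bar {x}-a\),
\[F(x)\approx \frac {1}{\alpha }F_{a}^{\alpha (1)}(\bar {x})\left [(x-a)^{\odot \alpha }-(\bar {x}-a)^{\odot \alpha }\right ].\tag{25}\]

Multiplying both sides of (25) by \(\alpha \left [F_{a}^{\alpha (1)}(\bar {x})\right ]^{-1}\) from the left,
\[\alpha \left [F_{a}^{\alpha (1)}(\bar {x})\right ]^{-1}F(x)\approx (x-a)^{\odot \alpha }-(\bar {x}-a)^{\odot \alpha }.\tag{26}\]

Solving (26) for \((\bar {x}-a)^{\odot \alpha }\) and then for \(\bar {x}\), we get
\[\bar {x}\approx a+\left [(x-a)^{\odot \alpha }-\alpha \left [F_{a}^{\alpha (1)}(\bar {x})\right ]^{-1}F(x)\right ]^{\odot 1/\alpha }.\tag{27}\]

Replacing x by x(k), the left-hand side \(\bar {x}\) by x(k+1), and evaluating the conformable Jacobian matrix at x(k) instead of \(\bar {x}\), we obtain the conformable Newton-type method for nonlinear systems:
\[x^{(k+1)}=a+\left [\left (x^{(k)}-a\right )^{\odot \alpha }-\alpha \left [F_{a}^{\alpha (1)}\left (x^{(k)}\right )\right ]^{-1}F\left (x^{(k)}\right )\right ]^{\odot 1/\alpha },\quad k=0,1,2,\dots \tag{28}\]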
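For experimentation, scheme (28) can be sketched in plain Python for small systems. This is an illustrative sketch under stated assumptions, not the authors' MATLAB implementation: the conformable Jacobian is formed through Theorem 2 (column scaling of the classical Jacobian), the linear system is solved by a small Gaussian elimination routine instead of forming the inverse, the stopping rule mirrors Section 4, and all function names are hypothetical. The example uses F2 from Section 4 with a = (−10, −10); the initial estimate (1, 1) and α = 0.8 are arbitrary choices.

```python
import math


def solve_linear(A, b):
    """Solve A d = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[piv][col] == 0:
            raise ZeroDivisionError("singular conformable Jacobian")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    d = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = sum(M[r][c] * d[c] for c in range(r + 1, n))
        d[r] = (M[r][n] - acc) / M[r][r]
    return d


def conformable_jacobian(jac, x, a, alpha):
    """Theorem 2: column j of the classical Jacobian times (x_j - a_j)^(1 - alpha)."""
    J = jac(x)
    n = len(x)
    return [[J[i][j] * (x[j] - a[j]) ** (1 - alpha) for j in range(n)] for i in range(n)]


def conformable_newton_system(F, jac, x0, alpha, a, tol=1e-8, max_iter=500):
    """Sketch of scheme (28); requires a < x^(k) componentwise."""
    x = list(x0)
    for k in range(1, max_iter + 1):
        d = solve_linear(conformable_jacobian(jac, x, a, alpha), F(x))
        # Hadamard powers are element-wise, so the update is applied per component
        x_new = [ai + ((xi - ai) ** alpha - alpha * di) ** (1 / alpha)
                 for xi, ai, di in zip(x, a, d)]
        step = math.sqrt(sum(abs(u - v) ** 2 for u, v in zip(x_new, x)))
        res = math.sqrt(sum(abs(fi) ** 2 for fi in F(x_new)))
        if res < tol or step < tol:
            return x_new, k
        x = x_new
    return x, max_iter


def F2(v):
    x, y = v
    return [x**2 + y**2 - 1, x**2 - y**2 - 0.5]


def J2(v):
    x, y = v
    return [[2*x, 2*y], [2*x, -2*y]]


root, iters = conformable_newton_system(F2, J2, [1.0, 1.0], alpha=0.8, a=[-10.0, -10.0])
print(root, iters)   # close to (sqrt(3)/2, 1/2)
```

Setting `alpha=1.0` recovers the classical Newton iteration, since the column scaling and the outer Hadamard powers become identities. As in the scalar case, a negative component inside the bracket yields a complex iterate when 1/α is not an integer, which is one way complex roots can be reached from real initial estimates.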

Next, the convergence analysis of the conformable Newton-type method (28) is carried out by using both the conformable Taylor series (13) and the classical one.

In the next result, the quadratic convergence of the vectorial Newton-type method (28) is proven by using the conformable Taylor series (13).

Theorem 6

Let \(F:D\subseteq \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) be a continuous function in an open convex set \(D\subseteq \mathbb {R}^{n}\) containing a zero \(\bar {x}\in \mathbb {R}^{n}\) of F(x). Let \(F_{a}^{\alpha (1)}(x)\) be the conformable Jacobian matrix of F starting at \(a\in \mathbb {R}^{n}\), of order α, for any α ∈ (0,1]. Let us suppose that \(F_{a}^{\alpha (1)}(x)\) is continuous and nonsingular at \(\bar {x}\). If an initial approximation \(x^{(0)}\in \mathbb {R}^{n}\) is sufficiently close to \(\bar {x}\), then the local order of convergence of the conformable vectorial Newton’s method
\[x^{(k+1)}=a+\left [\left (x^{(k)}-a\right )^{\odot \alpha }-\alpha \left [F_{a}^{\alpha (1)}\left (x^{(k)}\right )\right ]^{-1}F\left (x^{(k)}\right )\right ]^{\odot 1/\alpha }\]

is at least 2, and the error equation is

where \(C_{j}=\frac {1}{j!\alpha ^{j-1}}\left [F_{a}^{\alpha (1)}(\bar {x})\right ]^{-1}F_{a}^{\alpha (j)}(\bar {x})\), \(j=2,3,4,\dots \), provided that \(a<x^{(k)}\) componentwise for all k.

Proof

By using Definition 7, Theorem 4, and regarding \(x^{(k)}=e^{(k)}+\bar {x}\), the conformable Taylor power series expansion of F(x) around \(\bar {x}\) is

where \(C_{j}=\frac {1}{j!\alpha ^{j-1}}\left [F_{a}^{\alpha (1)}(\bar {x})\right ]^{-1}F_{a}^{\alpha (j)}(\bar {x})\), \(j=2,3,4,\dots \) Using Theorem 5 (equations (22) and (23)) and the Hadamard powers (Definition 4 and Remark 3),

Regarding (20), and using again Definition 7 and Theorem 5, the conformable Jacobian matrix of \(F\left (x^{(k)}\right )\) can be expressed as

We can write the expansion of \(\left [F_{a}^{\alpha (1)}\left (x^{(k)}\right )\right ]^{-1}\) as

where X2 is an unknown matrix determined by imposing \(\left [F_{a}^{\alpha (1)}\left (x^{(k)}\right )\right ]^{-1}F_{a}^{\alpha (1)}\left (x^{(k)}\right )=I\), so,

Solving for X2,

So,

Thus,

Then,

Using once again Theorem 5,

Let \(x^{(k+1)}=e^{(k+1)}+\bar {x}\),

Finally,

And this completes the proof. □

As in (4), it can be shown, by using the product and chain rules together with (20), that the asymptotic constant in the error equation (29) can be expressed as

where \(c_{j}=\frac {1}{j!}\left [F^{\prime }(\bar {x})\right ]^{-1}F^{(j)}(\bar {x})\), \(j=2,3,4,\dots ,\) are the classical asymptotic error constants for a vector valued function F, and \(F^{\prime }\) is the classical Jacobian matrix. Here, only \(c_{2}\) is involved.

In the next result, the quadratic convergence of the conformable Newton-type method (28) is proven by using the classical Taylor series:

Corollary 1

Let \(F:D\subseteq \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) be a continuous function in an open convex set \(D\subseteq \mathbb {R}^{n}\) containing a zero \(\bar {x}\in \mathbb {R}^{n}\) of F(x). Let \(F_{a}^{\alpha (1)}(x)\) be the conformable Jacobian matrix of F starting at \(a\in \mathbb {R}^{n}\), of order α, for any α ∈ (0,1]. Let us suppose that \(F_{a}^{\alpha (1)}(x)\) is continuous and nonsingular at \(\bar {x}\). If an initial approximation \(x^{(0)}\in \mathbb {R}^{n}\) is sufficiently close to \(\bar {x}\), then the local order of convergence of the conformable vectorial Newton’s method
\[x^{(k+1)}=a+\left [\left (x^{(k)}-a\right )^{\odot \alpha }-\alpha \left [F_{a}^{\alpha (1)}\left (x^{(k)}\right )\right ]^{-1}F\left (x^{(k)}\right )\right ]^{\odot 1/\alpha }\]

is at least 2, and the error equation is

where \(c_{j}=\frac {1}{j!}\left [F^{\prime }(\bar {x})\right ]^{-1}F^{(j)}(\bar {x})\), \(j=2,3,4,\dots \), provided that \(a<x^{(k)}\) componentwise for all k.

Remark 6

The error equations given in (29) and (30) are therefore the same.

In the next section, some numerical tests with nonlinear systems of equations are presented. We remark that, in all tests, a comparison with the classical Newton-Raphson method (α = 1) is made. The dependence of both methods on the initial estimates is also analyzed by using convergence planes.

4 Numerical results

The following tests have been carried out using MATLAB R2020a with double precision arithmetic, with \(\|F(x^{(k+1)})\|<10^{-8}\) or \(\|x^{(k+1)}-x^{(k)}\|<10^{-8}\) as the stopping criterion, and at most 500 iterations. For each test, we use \(a=(a_{1},\dots ,a_{n})=(-10,\dots ,-10)\) to ensure that \(a_{i}<x_{i}\), \(\forall i=1,\dots ,n\), according to Definitions 5 and 6, and \(a<x^{(k)}\), ∀k, according to Theorem 6 and Corollary 1. We also use the Approximated Computational Order of Convergence (ACOC)
\[p\approx ACOC=\frac {\ln \left (\|x^{(k+1)}-x^{(k)}\|/\|x^{(k)}-x^{(k-1)}\|\right )}{\ln \left (\|x^{(k)}-x^{(k-1)}\|/\|x^{(k-1)}-x^{(k-2)}\|\right )},\]

defined in [14], to check whether the theoretical order of convergence is attained in practice. For a fair comparison, the same initial estimate is used for all values of α ∈ (0,1] within each table.
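The ACOC values reported in the tables can be computed from the stored iterates. A minimal sketch, assuming the Euclidean norm and nonzero consecutive differences (exact zeros, such as those mentioned later for Tables 11 and 12, would make the logarithms undefined); the function name `acoc` is hypothetical, and the example uses classical Newton on x² − 2 = 0 only to produce a quadratically convergent sequence.

```python
import math


def acoc(iterates):
    """ACOC values from a sequence of iterates (each a list of floats).

    Uses p = ln(d_{k+1}/d_k) / ln(d_k/d_{k-1}) with d_k = ||x^(k) - x^(k-1)||
    (Euclidean norm) and assumes every d_k is nonzero.
    """
    def dist(u, v):
        return math.sqrt(sum((ui - vi) ** 2 for ui, vi in zip(u, v)))

    d = [dist(iterates[i + 1], iterates[i]) for i in range(len(iterates) - 1)]
    return [math.log(d[i + 2] / d[i + 1]) / math.log(d[i + 1] / d[i])
            for i in range(len(d) - 2)]


# Quadratically convergent sequence: classical Newton on x^2 - 2 = 0 from x0 = 1
xs = [[1.0]]
for _ in range(4):
    x = xs[-1][0]
    xs.append([x - (x * x - 2) / (2 * x)])
print(acoc(xs))   # the last value is close to 2
```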

For each table, two figures with error curves are provided to visualize the error \(\|x^{(k+1)}-x^{(k)}\|\) versus the iteration number for different values of α: the first shows all the values of α in the table, and the second shows only some of them, so that individual curves can be distinguished. In the latter, we choose the values of α requiring the fewest iterations, or an arbitrary subset when the number of iterations is the same. Whenever possible, the curve for α = 1 is included, so that the classical method and the one proposed in this paper appear in the same figure.

Our first test vector valued function is \(F_{1}(x,y)=(x^{2}-2x-y+0.5,\;x^{2}+4y^{2}-4)^{T}\), with real and complex roots \(\bar {x}_{1}\approx (-0.2222,0.9938)^{T}\), \(\bar {x}_{2}\approx (1.9007,0.3112)^{T}\) and \(\bar {x}_{3}\approx (1.1608-0.6545i,-0.9025-0.2104i)^{T}\). The conformable Jacobian matrix of F1(x,y) is
\[\begin{pmatrix}(2x-2)(x+10)^{1-\alpha } & -(y+10)^{1-\alpha }\\ 2x(x+10)^{1-\alpha } & 8y(y+10)^{1-\alpha }\end{pmatrix},\]

where \(a=(a_{1},a_{2})=(-10,-10)\).

In Table 1, we observe for F1(x,y) that the classical Newton’s method (α = 1) does not find any solution within 500 iterations, whereas the conformable vectorial Newton’s procedure converges. We can also observe that the ACOC may even be slightly greater than 2 when α ≠ 1. Note that, with the conformable vectorial Newton’s method, a complex root is found from a real initial estimate for different values of α; this is possible because a component of the bracket in (28) can become negative, and its Hadamard power 1/α is then complex. In Figs. 1 and 2, the error curve for the classical Newton’s method (α = 1) is not shown because no solution was found in this case; the remaining error curves behave erratically at first and settle down in later iterations.

Error curves of F1(x,y) for all values of α from Table 1

Error curves of F1(x,y) for some values of α from Table 1

In Table 2, with a different initial estimate for F1(x,y), the classical vectorial Newton’s scheme and the conformable vectorial Newton’s method behave similarly in terms of number of iterations and ACOC. Again, the quadratic convergence of the conformable Newton’s method holds for all α ∈ (0,1]. In Figs. 3 and 4, no erratic behaviour is observed, since the errors decrease at each iteration.

Error curves of F1(x,y) for all values of α from Table 2

Error curves of F1(x,y) for some values of α from Table 2

The second test vector valued function is \(F_{2}(x,y)=(x^{2}+y^{2}-1,\;x^{2}-y^{2}-1/2)^{T}\), with real roots \(\bar {x}_{1}=\left (\sqrt {3}/2,1/2\right )^{T}\), \(\bar {x}_{2}=\left (-\sqrt {3}/2,1/2\right )^{T}\), \(\bar {x}_{3}=\left (\sqrt {3}/2,-1/2\right )^{T}\) and \(\bar {x}_{4}=\left (-\sqrt {3}/2,-1/2\right )^{T}\). The conformable Jacobian matrix of F2(x,y) is
\[\begin{pmatrix}2x(x+10)^{1-\alpha } & 2y(y+10)^{1-\alpha }\\ 2x(x+10)^{1-\alpha } & -2y(y+10)^{1-\alpha }\end{pmatrix},\]

where \(a=(a_{1},a_{2})=(-10,-10)\).

It can be seen in Tables 3 and 4 for F2(x,y) that the classical Newton’s method and the conformable vectorial Newton’s scheme behave similarly both in number of iterations and in ACOC. Figures 5, 6, 7 and 8 show no erratic behaviour, since the errors decrease with the iterations.

Error curves of F2(x,y) for all values of α from Table 3

Error curves of F2(x,y) for some values of α from Table 3

Error curves of F2(x,y) for all values of α from Table 4

Error curves of F2(x,y) for some values of α from Table 4

Our third test vector valued function is \(F_{3}(x,y)=(x^{2}-x-y^{2}-1,\;-\sin x+y)^{T}\), with real roots \(\bar {x}_{1}\approx (-0.8453,-0.7481)^{T}\) and \(\bar {x}_{2}\approx (1.9529,0.9279)^{T}\). The conformable Jacobian matrix of F3(x,y) is
\[\begin{pmatrix}(2x-1)(x+10)^{1-\alpha } & -2y(y+10)^{1-\alpha }\\ -\cos x\,(x+10)^{1-\alpha } & (y+10)^{1-\alpha }\end{pmatrix},\]

where \(a=(a_{1},a_{2})=(-10,-10)\).

We can see in Table 5 for F3(x,y) that the conformable vectorial Newton’s procedure requires fewer iterations than the classical Newton’s method for lower values of α. It can also be observed that the ACOC may be slightly greater than 2 for lower values of α. In Figs. 9 and 10, the errors decrease at each iteration.

Error curves of F3(x,y) for all values of α from Table 5

Error curves of F3(x,y) for some values of α from Table 5

In Table 6, we can see for F3(x,y) that the conformable vectorial and the classical Newton’s methods require the same number of iterations, and the ACOC is around 2 in all cases. Again, the errors decrease at each iteration in Figs. 11 and 12.

Error curves of F3(x,y) for all values of α from Table 6

Error curves of F3(x,y) for some values of α from Table 6

The fourth test vector valued function is \(F_{4}(x,y)=(x^{2}+y^{2}-4,\;e^{x}+y-1)^{T}\), with real roots \(\bar {x}_{1}\approx (-1.8163,0.8374)^{T}\) and \(\bar {x}_{2}\approx (1.0042,-1.7296)^{T}\). The conformable Jacobian matrix of F4(x,y) is
\[\begin{pmatrix}2x(x+10)^{1-\alpha } & 2y(y+10)^{1-\alpha }\\ e^{x}(x+10)^{1-\alpha } & (y+10)^{1-\alpha }\end{pmatrix},\]

where \(a=(a_{1},a_{2})=(-10,-10)\).

We observe in Table 7 for F4(x,y) that, again, the conformable vectorial Newton’s scheme requires fewer iterations than the classical Newton’s method for all values α ≠ 1. It can also be seen that the ACOC is around 2. Figs. 13 and 14 show that the errors decrease with the iterations.

Error curves of F4(x,y) for all values of α from Table 7

Error curves of F4(x,y) for some values of α from Table 7

In Table 8, we observe for F4(x,y) that the conformable vectorial and the classical Newton’s methods require the same number of iterations, and the ACOC is around 2. Again, the errors decrease with the iterations in Figs. 15 and 16.

Error curves of F4(x,y) for all values of α from Table 8

Error curves of F4(x,y) for some values of α from Table 8

Our fifth test vector valued function is \(F_{5}(x)=\left (f_{1}(x),\dots ,f_{15}(x)\right )^{T}\), where \(x=(x_{1},\dots ,x_{15})^{T}\) and \(f_{i}:\mathbb {R}^{15}\longrightarrow \mathbb {R}\), \(i=1,2,\dots ,15\), such that

with real roots \(\bar {x}_{1}=(-1,\dots ,-1)^{T}\) and \(\bar {x}_{2}=(1,\dots ,1)^{T}\). The conformable Jacobian matrix of F5(x) is

where

with \(a=(a_{1},\dots ,a_{15})=(-10,\dots ,-10)\).

It can be observed in Tables 9 and 10 for F5(x) that the classical Newton’s method and the conformable vectorial Newton’s scheme behave similarly both in number of iterations and in ACOC. Figs. 17, 18, 19 and 20 show that the errors decrease with the iterations, so no erratic behaviour is observed.

Error curves of F5(x) for all values of α from Table 9

Error curves of F5(x) for some values of α from Table 9

Error curves of F5(x) for all values of α from Table 10

Error curves of F5(x) for some values of α from Table 10

The sixth test vector valued function is \(F_{6}(x)=\left (f_{1}(x),\dots ,f_{10}(x)\right )^{T}\), where \(x=(x_{1},\dots ,x_{10})^{T}\) and \(f_{i}:\mathbb {R}^{10}\longrightarrow \mathbb {R}\), \(i=1,2,\dots ,10\), such that

with real roots \(\bar {x}_{1}\approx (-0.9691,\dots ,-0.9691)^{T}\), \(\bar {x}_{2}\approx (-0.7569,\dots ,-0.7569)^{T}\), \(\bar {x}_{3}\approx (-0.3248,\dots ,-0.3248)^{T}\), \(\bar {x}_{4}=(0,\dots ,0)^{T}\), \(\bar {x}_{5}\approx (0.3248,\dots ,0.3248)^{T}\), \(\bar {x}_{6}\approx (0.7569,\dots ,0.7569)^{T}\) and \(\bar {x}_{7}\approx (0.9691,\dots ,0.9691)^{T}\). The conformable Jacobian matrix of F6(x) is

where

with \(a=(a_{1},\dots ,a_{10})=(-10,\dots ,-10)\).

We can see in Table 11 for F6(x) that the conformable vectorial Newton’s procedure requires, in general, far fewer iterations than the classical Newton’s method. It can also be observed that the ACOC may be slightly greater than 2. In Figs. 21 and 22, the error curves behave erratically at first and settle down in later iterations.

Error curves of F6(x) for all values of α from Table 11

Error curves of F6(x) for some values of α from Table 11

In Table 12, we observe for F6(x) that the classical Newton’s method does not find any solution within 500 iterations, whereas the conformable one converges for most values of α. The ACOC is around 2, but much greater than 2 when α = 0.1. No results are shown for α = 0.7 because the conformable Jacobian matrix becomes singular. Again, in Figs. 23 and 24, the error curves behave erratically at first and settle down in later iterations.

Error curves of F6(x) for all values of α from Table 12

Error curves of F6(x) for some values of α from Table 12

In Tables 11 and 12, some errors are exactly zero because double precision arithmetic is used; with variable precision arithmetic, a value very close to zero could be observed instead.

4.1 Numerical stability

In this section, we study the stability of the conformable vectorial Newton’s method tested above. To this end, we analyze the dependence on initial estimates by means of convergence planes, which are defined in [15] and were used in [3,4,5]. Since only two dimensions can be visualized in a convergence plane, we provide them for the vector valued functions F1, F2, F3 and F4.

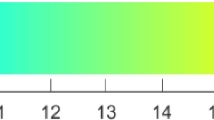

To construct the convergence planes, we take initial estimates (x0,y0) with x0 on the horizontal axis and α ∈ (0,1] on the vertical axis. Each of the 8 planes in each figure corresponds to a different value of y0. Each color represents a different solution found, and a point is colored black when no solution is found after 500 iterations. Each plane is built on a 400 × 400 grid, with a maximum of 500 iterations and a tolerance of 0.001.
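The construction just described can be sketched for a 2 × 2 system as follows. This is an illustrative sketch, not the authors' code: the helper names `conformable_step`, `classify` and `convergence_plane` are hypothetical, the grid is a coarse 21 × 10 one instead of 400 × 400, the step of (28) is solved by Cramer's rule, and "tolerance 0.001" is interpreted both as the stopping threshold on the step size and as the maximum distance to a known root. The example uses F2 with a = (−10, −10) and y0 = 1.

```python
def conformable_step(F, jac, v, a, alpha):
    """One step of scheme (28) for a 2x2 system, solving the linear system by Cramer's rule."""
    J = jac(v)
    # Theorem 2: scale column j of the classical Jacobian by (v_j - a_j)^(1 - alpha)
    s = [(v[j] - a[j]) ** (1 - alpha) for j in range(2)]
    A = [[J[i][j] * s[j] for j in range(2)] for i in range(2)]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    if det == 0:
        raise ZeroDivisionError("singular conformable Jacobian")
    f = F(v)
    d = [(f[0] * A[1][1] - A[0][1] * f[1]) / det,
         (A[0][0] * f[1] - A[1][0] * f[0]) / det]
    return [a[j] + ((v[j] - a[j]) ** alpha - alpha * d[j]) ** (1 / alpha) for j in range(2)]


def classify(F, jac, roots, x0, y0, alpha, a=(-10.0, -10.0), tol=1e-3, max_iter=500):
    """Index of the root reached from (x0, y0), or -1 (plotted black) if none is reached."""
    v = [x0, y0]
    try:
        for _ in range(max_iter):
            w = conformable_step(F, jac, v, a, alpha)
            if max(abs(w[0] - v[0]), abs(w[1] - v[1])) < tol:
                for idx, r in enumerate(roots):
                    if abs(w[0] - r[0]) < tol and abs(w[1] - r[1]) < tol:
                        return idx
                return -1
            v = w
    except (ZeroDivisionError, OverflowError):
        pass
    return -1


def convergence_plane(F, jac, roots, xs, alphas, y0):
    """Rows follow alpha (vertical axis), columns follow x0 (horizontal axis)."""
    return [[classify(F, jac, roots, x0, y0, al) for x0 in xs] for al in alphas]


def F2(v):
    x, y = v
    return [x**2 + y**2 - 1, x**2 - y**2 - 0.5]


def J2(v):
    x, y = v
    return [[2*x, 2*y], [2*x, -2*y]]


ROOTS = [(3**0.5/2, 0.5), (-3**0.5/2, 0.5), (3**0.5/2, -0.5), (-3**0.5/2, -0.5)]
xs = [-3 + 6 * i / 20 for i in range(21)]      # coarse, hypothetical grid for x0
alphas = [0.1 * k for k in range(1, 11)]
plane = convergence_plane(F2, J2, ROOTS, xs, alphas, y0=1.0)
share = sum(lbl >= 0 for row in plane for lbl in row) / (len(xs) * len(alphas))
print(f"converged: {share:.0%}")
```

Each label in `plane` would then be mapped to a color (and −1 to black) to draw one panel of a figure such as Fig. 26.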

In Fig. 25, we can see for F1(x,y) that almost 100% of the initial points converge in (e), (f), (g) and (h), whereas around 86% converge in (a), (b), (c) and (d). In each case, the method converges to all the roots, including the complex root from real initial estimates.

In Fig. 26, for F2(x,y), almost 100% of the initial points converge in each plane. In (a), (b), (c) and (d) the method converges to 2 of the 4 roots, and in (e), (f), (g) and (h) it converges to the other 2 roots.

In Fig. 27, for F3(x,y), between 77% and 98% of the initial points converge. In each plane, the method converges to both real roots.

In Fig. 28, for F4(x,y), 100% of the initial points converge in some planes and almost 100% in the others. In (a), (b), (c), (d) and (e) the method converges to both real roots, and in (f), (g) and (h) it converges to only one root.

In general, several solutions can be found from the same initial estimate by choosing different values of α.

5 Conclusion

In this work, the first conformable fractional Newton-type iterative scheme for solving nonlinear systems was designed, and all the analytical tools required to construct it were introduced. The convergence analysis showed that quadratic convergence holds, as for the classical Newton’s method for nonlinear systems. For both the scalar conformable method in [5] and the vectorial method proposed in this work, the conformable Taylor series and the classical one lead to the same error equation. Numerical tests were carried out, error curves were provided, and the dependence on initial estimates was analyzed, supporting the theory. The conformable vectorial Newton-type method presents, in some cases, better numerical behaviour than the classical one in terms of number of iterations, ACOC, and wideness of the basins of attraction of the roots. We also observed that complex roots may be obtained from real initial estimates, and that several roots may be obtained from the same initial estimate by choosing different values of α.

Data Availability

Not applicable.

References

Miller, K.S.: An introduction to fractional calculus and fractional differential equations. Wiley, New York (1993)

Podlubny, I.: Fractional differential equations. Academic Press, New York (1999)

Akgül, A., Cordero, A., Torregrosa, J.R.: A fractional Newton method with 2αth-order of convergence and its stability. Appl. Math. Lett. 98, 344–351 (2019)

Candelario, G., Cordero, A., Torregrosa, J.R.: Multipoint fractional iterative methods with (2α + 1)th-order of convergence for solving nonlinear problems. Mathematics 8(3), 452 (2020). https://doi.org/10.3390/math8030452

Candelario, G., Cordero, A., Torregrosa, J.R., Vassileva, M.P.: An optimal and low computational cost fractional Newton-type method for solving nonlinear equations. Appl. Math. Lett. 124, 107650 (2022). https://doi.org/10.1016/j.aml.2021.107650

Toprakseven, S.: Numerical solutions of conformable fractional differential equations by Taylor and finite difference methods. Nat. Appl. Sci. 23(3), 850–863 (2019)

Traub, J.F.: Iterative methods for the solution of equations. Prentice-Hall, New Jersey (1964)

Amiri, A.R., Cordero, A., Darvishi, M.T., Torregrosa, J.R.: Preserving the order of convergence: low-complexity Jacobian-free iterative schemes for solving nonlinear systems. J. Comput. Appl. Math. 337, 87–97 (2018)

Cordero, A., Hueso, J.L., Martínez, E., Torregrosa, J.R.: A modified Newton-Jarratt’s composition. Numer. Algor. 55, 87–99 (2009)

Atangana, A., Baleanu, D., Alsaedi, A.: New properties of conformable derivative. Open Math. 13, 889–898 (2015)

Abdeljawad, T.: On conformable fractional calculus. J. Comput. Appl. Math. 279, 57–66 (2015)

Horn, R.A., Johnson, C.R.: Topics in matrix analysis. Cambridge University Press, New York (1991)

Abramowitz, M., Stegun, I.A.: Handbook of mathematical functions. Dover (1970)

Cordero, A., Torregrosa, J.R.: Variants of Newton’s method using fifth order quadrature formulas. Appl. Math. Comput. 190(1), 686–698 (2007)

Magreñán, Á.A.: A new tool to study real dynamics: the convergence plane. Appl. Math. Comput. 248(1), 215–224 (2014)

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. Partial financial support was received from the Ministerio de Ciencia, Innovación y Universidades PGC2018-095896-B-C22 and from Dominican Republic FONDOCYT 2018-2019-1D2-140.


Ethics declarations

Ethics approval

Not applicable.

Consent for publication

Not applicable.

Conflict of interest

The authors declare no competing interests.

Additional information

Author contributions

Conceptualization: G.C., J.R.T. Formal analysis: A.C., J.R.T. Software: G.C., M.P.V. Validation: A.C., J.R.T. Writing—original draft preparation: G.C., M.P.V. Writing—review and editing: A.C., J.R.T.

Code availability

Not applicable.

Consent to participate

Not applicable.

Human and animal ethics

Not applicable.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Candelario, G., Cordero, A., Torregrosa, J.R. et al. Generalized conformable fractional Newton-type method for solving nonlinear systems. Numer Algor 93, 1171–1208 (2023). https://doi.org/10.1007/s11075-022-01463-z


DOI: https://doi.org/10.1007/s11075-022-01463-z