Abstract

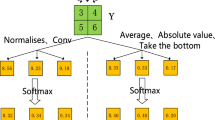

Achieving visual consistency and realistic results is especially challenging when inpainting images with large missing blocks. This paper proposes an Adaptive Visual field Multi-scale Generative Adversarial Networks (GANs) Image Inpainting method based on Coordinate-attention (AVMGC). First, the generator of the multi-scale GAN uses an encoder built with deformable convolutional networks, which adaptively expands the local visual field over which the network samples and thereby improves the local visual consistency of the inpainted image. Second, to enlarge the receptive field of the deep network and its global visual field, AVMGC combines the coordinate-attention mechanism with the convolutional layers. Coordinate attention captures direction-aware and position-sensitive information across channels, which helps the model locate and recognize objects of interest more accurately and generate globally consistent geometric contours. In addition, instance normalization is introduced into the multi-scale discriminator to transfer the statistical information of the feature maps and preserve the style of the original images. Extensive experiments on public datasets show that the proposed algorithm achieves good qualitative performance and outperforms the baselines.
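The abstract credits deformable convolution with adaptively widening the encoder's local sampling field. As a minimal sketch of that operation, following the formulation of Dai et al. (2017), each output pixel is computed as y(p0) = Σ_n w(p_n) · x(p0 + p_n + Δp_n), where p_n runs over the regular 3×3 grid and Δp_n is a learned, usually fractional, offset that is read out by bilinear interpolation. The sketch assumes a single channel, stride 1, and offsets passed in directly; in the original deformable ConvNets they are predicted from the input by an extra convolution branch. The names `bilinear` and `deform_conv3x3` are hypothetical and are not taken from the AVMGC implementation.

```python
import math


def bilinear(img, y, x):
    """Bilinearly sample a 2-D list at fractional (y, x); out-of-range pixels count as 0."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                value += weight * img[yy][xx]
    return value


def deform_conv3x3(img, kernel, offsets):
    """Single-channel 3x3 deformable convolution (stride 1, output same size as input).

    offsets[i][j] holds nine (dy, dx) pairs, one per kernel tap, for output pixel (i, j).
    With all offsets zero this reduces to an ordinary zero-padded 3x3 convolution
    (cross-correlation, as deep-learning frameworks define it).
    """
    h, w = len(img), len(img[0])
    taps = [(ky, kx) for ky in (-1, 0, 1) for kx in (-1, 0, 1)]  # regular grid p_n
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for k, (ky, kx) in enumerate(taps):
                dy, dx = offsets[i][j][k]  # learned offset delta p_n for this tap
                # y(p0) += w(p_n) * x(p0 + p_n + delta p_n), sampled bilinearly
                acc += kernel[ky + 1][kx + 1] * bilinear(img, i + ky + dy, j + kx + dx)
            out[i][j] = acc
    return out


img = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
centre_only = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
zero = [[[(0.0, 0.0)] * 9 for _ in range(3)] for _ in range(3)]
# Shift only the centre tap (index 4) half a pixel to the right.
half_right = [[[(0.0, 0.0)] * 4 + [(0.0, 0.5)] + [(0.0, 0.0)] * 4
               for _ in range(3)] for _ in range(3)]
print(deform_conv3x3(img, centre_only, half_right)[0])
```

With all offsets set to zero the function reproduces an ordinary zero-padded convolution, so any change in the output comes solely from the offsets; that is the sense in which the sampling field becomes adaptive rather than fixed to the 3×3 grid.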
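The coordinate-attention mechanism referred to in the abstract (Hou et al., 2021) pools a feature map separately along its height and its width, so the resulting attention encodes where along each row and each column a feature is active. A minimal sketch on a single C × H × W map stored as nested lists is given below. It assumes hypothetical weight matrices `w_shared`, `w_h`, and `w_w`, and it replaces the batch normalization and h-swish used in the original block with a plain ReLU; the abstract does not describe how AVMGC wires the block into its convolutional layers.

```python
import math


def conv1x1(weight, feat):
    """1x1 convolution on a (C_in x L) map; weight is (C_out x C_in)."""
    return [[sum(weight[o][c] * feat[c][l] for c in range(len(feat)))
             for l in range(len(feat[0]))]
            for o in range(len(weight))]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def coordinate_attention(x, w_shared, w_h, w_w):
    """Coordinate attention on one C x H x W feature map (nested lists).

    w_shared: (C/r x C) shared reduction; w_h, w_w: (C x C/r) per-direction weights.
    Simplification: ReLU stands in for the original batch norm + h-swish.
    """
    c_n, h, w = len(x), len(x[0]), len(x[0][0])
    # 1) Directional pooling: average over width -> C x H, over height -> C x W
    z_h = [[sum(x[c][i]) / w for i in range(h)] for c in range(c_n)]
    z_w = [[sum(x[c][i][j] for i in range(h)) / h for j in range(w)] for c in range(c_n)]
    # 2) Concatenate along the spatial axis (C x (H+W)), shared 1x1 conv, ReLU
    f = conv1x1(w_shared, [z_h[c] + z_w[c] for c in range(c_n)])
    f = [[max(0.0, v) for v in row] for row in f]
    # 3) Split back into the height part and the width part
    f_h = [row[:h] for row in f]
    f_w = [row[h:] for row in f]
    # 4) Per-direction 1x1 conv back to C channels, sigmoid -> attention gates
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(w_h, f_h)]  # C x H
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(w_w, f_w)]  # C x W
    # 5) Position (i, j) in channel c is scaled by its row gate and column gate
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(w)]
             for i in range(h)]
            for c in range(c_n)]


# Two channels, 2 x 3 spatial grid; channel 0 is bright only in its top row.
x = [[[4.0, 4.0, 4.0], [0.0, 0.0, 0.0]],
     [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]]
y = coordinate_attention(x, [[1.0, 0.0]], [[1.0], [1.0]], [[0.0], [0.0]])
print(y[1])
```

The last step is what makes the block position-sensitive: a strong response in one row raises the gate for every pixel in that row, even in other channels, while weak rows are damped. In the example, the bright top row of channel 0 lifts the top-row gate of channel 1 above its bottom-row gate.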
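The abstract does not say where the instance normalization layers sit in the multi-scale discriminator or whether they use learned affine parameters. The sketch below therefore shows only the normalization itself, applied to one sample stored as C channels of H × W nested lists; `instance_norm` and its arguments are illustrative names, not the authors' code. Unlike batch normalization, each channel of each sample is normalized with its own spatial mean and variance.

```python
import math


def instance_norm(x, gamma=None, beta=None, eps=1e-5):
    """Instance normalization of one sample given as C channels of H x W lists.

    Each channel is normalized with its own spatial mean and variance, so no
    statistics are shared across channels or across samples in a batch.
    Optional per-channel gamma/beta apply the usual learned affine transform.
    """
    out = []
    for c, channel in enumerate(x):
        values = [v for row in channel for v in row]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance
        inv_std = 1.0 / math.sqrt(var + eps)
        g = 1.0 if gamma is None else gamma[c]
        b = 0.0 if beta is None else beta[c]
        out.append([[g * (v - mean) * inv_std + b for v in row] for row in channel])
    return out


feat = [[[1.0, 2.0], [3.0, 4.0]],        # varying channel
        [[10.0, 10.0], [10.0, 10.0]]]    # constant channel
print(instance_norm(feat)[1])
```

A constant channel normalizes to all zeros, so whatever mean and contrast a feature map carried is removed before gamma and beta are applied. Stripping these per-channel statistics is the property that made instance normalization popular in style transfer.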

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, G., Kang, P., Wu, X. et al. Adaptive Visual Field Multi-scale Generative Adversarial Networks Image Inpainting Base on Coordinate-Attention. Neural Process Lett 55, 9949–9967 (2023). https://doi.org/10.1007/s11063-023-11233-0

DOI: https://doi.org/10.1007/s11063-023-11233-0