Abstract

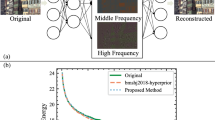

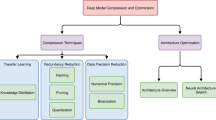

The rapid growth of computer vision-based applications, including smart cities and autonomous driving, has created a pressing demand for efficient 360\(^{\circ }\) image compression and computer vision analytics. In most circumstances, both 360\(^{\circ }\) image compression and computer vision analytics face challenges arising from the oversampling inherent in the Equirectangular Projection (ERP). However, the two fields often employ divergent technological approaches. While image compression aims to reduce redundancy, computer vision analytics attempts to compensate for the semantic distortion caused by the projection process, resulting in a potential conflict between the two objectives. This paper explores a potential route, i.e., 360\(^{\circ }\) Image Coding for Machine (360-ICM), an image processing framework that addresses both object deformation and oversampling redundancy in a unified manner. The key innovation lies in inferring a pixel-wise significance map by jointly considering the requirements of redundancy removal and object deformation offsetting. The significance map is subsequently fed to a deformation-aware image compression network, guiding the bit allocation process as an external condition. More specifically, the deformation-aware image compression network is characterized by the Spatial Feature Transform (SFT) layer, which can perform complex affine transformations of high-level semantic features and is therefore essential in dealing with the deformation. The image compression network and the significance inference network are jointly trained under the supervision of a 360\(^{\circ }\) image-specific object detection network, yielding a compact representation that is both analytics-oriented and deformation-aware. Extensive experimental results demonstrate the superiority of the proposed method over existing state-of-the-art image codecs in terms of rate-analytics performance.
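To make two ideas from the abstract concrete, the following is a minimal sketch, not the authors' implementation. It first computes how much sphere area each ERP row actually covers (proportional to the cosine of the row's latitude, which is why rows near the poles are oversampled), and then applies an SFT-style element-wise affine modulation, `out = gamma * features + beta`. The function names, the toy sizes, and the choice to use the latitude weights as a stand-in significance map with `gamma = significance` and `beta = 0` are all assumptions for illustration. In a real SFT layer, `gamma` and `beta` would be produced by small learned networks from the condition.

```python
import math


def erp_row_weights(height):
    """Relative sphere area per pixel for each ERP row: cos(latitude of row center)."""
    return [math.cos((i + 0.5 - height / 2) * math.pi / height) for i in range(height)]


def sft_modulate(features, gamma, beta):
    """SFT-style element-wise affine modulation: out = gamma * features + beta."""
    return [
        [g * f + b for f, g, b in zip(f_row, g_row, b_row)]
        for f_row, g_row, b_row in zip(features, gamma, beta)
    ]


# Toy 4x2 example (hypothetical sizes).
height, width = 4, 2
weights = erp_row_weights(height)

# Assumption: the latitude weights stand in for the inferred significance map.
significance = [[w] * width for w in weights]

features = [[1.0] * width for _ in range(height)]   # dummy feature map
gamma = significance                                 # assumption: scale = significance
beta = [[0.0] * width for _ in range(height)]        # assumption: no shift

modulated = sft_modulate(features, gamma, beta)
```

In this toy setting, rows near the poles (first and last) receive a smaller scale than rows near the equator, which mirrors the intuition that oversampled regions deserve fewer bits. The framework described above additionally accounts for object deformation when inferring the map, which this sketch does not model.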

Data Available

Our code will be released as open source once this manuscript is published.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62371310, in part by the Guangdong Basic and Applied Basic Research Foundation under Grant 2023A1515011236, and in part by the Shenzhen Natural Science Foundation under Grant JCYJ20200109110410133.

Ethics declarations

Conflicts of interest

The authors declare no conflicts of interest for this work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zheng, S., Shen, X., Zhang, Q. et al. Towards 360\(^{\circ }\) image compression for machines via modulating pixel significance. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-19139-2

DOI: https://doi.org/10.1007/s11042-024-19139-2