Abstract

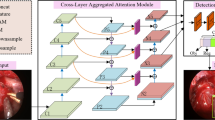

Surgeon action detection plays a crucial role in computer-assisted surgery. However, non-rigid instrument deformation, occlusion, and limited contextual information keep the average accuracy of current surgical motion detection very low, a problem that urgently needs to be solved. Inspired by the ability of convolutional neural networks (CNNs) to learn feature representations and by their success in medical image detection tasks, we developed a path aggregation adaptive spatial feature pyramid network (PAAS-FPN), which combines bottom-up path enhancement with an adaptive spatial fusion mechanism. Path enhancement propagates the shallow feature information of images upward through the network. The adaptive spatial feature fusion network adds spatial granularity between deep and shallow features. The improved method was experimentally verified on the ESAD dataset and a surgeon instrument detection dataset. The proposed detection method achieved the highest detection accuracy in several experiments, thereby confirming its effectiveness in surgeon action detection.
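
The two mechanisms named in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract gives no architectural details, so the function names (`bottom_up_path`, `adaptive_spatial_fusion`), the use of average pooling in place of a learned strided convolution, and the hand-supplied weight logits are all assumptions made purely for illustration.

```python
import math


def softmax(values):
    """Numerically stable softmax over a short list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def bottom_up_path(pyramid):
    """Pass shallow feature information upward to deeper levels.

    pyramid: list of 2D maps (nested lists) ordered shallow -> deep,
    each level half the height and width of the previous one.
    Assumption: 2x2 average pooling stands in for the strided
    convolution a trained network would use.
    """
    outputs = [pyramid[0]]
    for level in pyramid[1:]:
        prev = outputs[-1]
        merged = []
        for i in range(len(level)):
            row = []
            for j in range(len(level[0])):
                pooled = (prev[2 * i][2 * j] + prev[2 * i][2 * j + 1]
                          + prev[2 * i + 1][2 * j]
                          + prev[2 * i + 1][2 * j + 1]) / 4.0
                # Add the downsampled shallow signal to the deeper level.
                row.append(pooled + level[i][j])
            merged.append(row)
        outputs.append(merged)
    return outputs


def adaptive_spatial_fusion(levels, logits):
    """Fuse same-sized 2D maps with per-location softmax weights.

    levels: K maps already resized to a common H x W (resizing omitted).
    logits: K maps of weight logits, H x W each. Assumption: in a real
    network these would be predicted by a small learned layer.
    """
    height, width = len(levels[0]), len(levels[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            weights = softmax([lg[i][j] for lg in logits])
            fused[i][j] = sum(w * lv[i][j] for w, lv in zip(weights, levels))
    return fused
```

Because the fusion weights are computed independently at every spatial location, each position can favor a different pyramid level, which is one plausible reading of the "spatial granularity" the abstract refers to.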

Acknowledgements

This work was supported by grants from the Key-Area Research and Development Program of Guangdong Province (No. 2020B010165004), the National Natural Science Foundation of China (Nos. 82001905, 62172401, and 12026602), the Guangdong Natural Science Foundation (Nos. 2022A1515010439 and 2022A0505020019), the Shenzhen Key Basic Science Program (No. JCYJ2022081801802005), the National Key R&D Program (No. 2019YFC0118100), the Zhuhai Science and Technology Program (No. ZH22017002210017PWC), the Shenzhen Key Laboratory Program (No. ZDSYS201707271637577), and the Academic Promotion Project of Shandong First Medical University.

Author information

Contributions

Zhen Chao and Wenting Xu: Conceptualization, Methodology, Software, Writing-Original draft preparation.

Ruiguo Liu: Validation.

Hyosung Cho: Supervision and Writing-Reviewing.

Fucang Jia: Supervision, Writing-Reviewing and Editing.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Zhen Chao and Wenting Xu are regarded as co-first authors.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chao, Z., Xu, W., Liu, R. et al. Surgical action detection based on path aggregation adaptive spatial network. Multimed Tools Appl 82, 26971–26986 (2023). https://doi.org/10.1007/s11042-023-14990-1

DOI: https://doi.org/10.1007/s11042-023-14990-1