Abstract

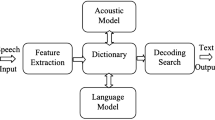

Speech recognition is an important research topic within pattern recognition. This study gives a detailed assessment of speech recognition strategies for several majority languages. Over the last several decades, many researchers have contributed to the field of speech processing and recognition. Although several frameworks for speech processing and recognition exist, only a few automatic speech recognition (ASR) systems are available for the languages spoken throughout the world. The data gathered for this research reveal that most of the effort has gone into building ASR systems for majority languages, whereas minority languages suffer from a lack of standard speech corpora. We also examine some of the key issues for speech recognition in various languages, and we explore the kinds of hybrid acoustic modeling methods required for efficient results. Because the success of a classifier depends on the information extracted during the feature extraction phase, it is critical to choose the feature extraction techniques and classifiers carefully.


Zhang Y, Pezeshki M, Brakel P, Zhang S, Bengio CLY, Courville A (2017) Towards end-to-end speech recognition with deep convolutional neural networks. arXiv preprint arXiv:1701.02720

Zhang X, Zhang F, Liu C, Schubert K, Chan J, Prakash P, Zweig G (2021) Benchmarking LF-MMI, CTC and RNN-T criteria for streaming ASR. In 2021 IEEE spoken language technology workshop (SLT) (pp. 46-51). IEEE

Zhao F, Raghavan P, Gupta SK, Lu Z, Gu W (2000) Automatic speech recognition in mandarin for embedded platforms. In: Sixth International Conference on Spoken Language Processing

Ziehe S, Pannach F, Krishnan A (2021) GCDH@ LT-EDI-EACL2021: XLM-RoBERTa for hope speech detection in English, Malayalam, and Tamil. In proceedings of the first workshop on language Technology for Equality, diversity and inclusion (pp. 132-135)

Zou W, Jiang D, Zhao S, Yang G, Li X (2018) Comparable study of modeling units for end-to-end mandarin speech recognition. In: 2018 11th International Symposium on Chinese Spoken Language Processing (ISCSLP). IEEE, pp 369-373

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest and no known financial or personal relationships that could have influenced the findings of this study.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kaur, A.P., Singh, A., Sachdeva, R. et al. Automatic speech recognition systems: A survey of discriminative techniques. Multimed Tools Appl 82, 13307–13339 (2023). https://doi.org/10.1007/s11042-022-13645-x

DOI: https://doi.org/10.1007/s11042-022-13645-x