Abstract

We introduce a new methodology for inference of fluid composition from measurements of mineralogical or chemical compositions, expanding upon the use of reactive transport models to understand hydrothermal alteration processes. The reactive transport models are used to impute a latent variable explanatory mechanism in the formation of hydrothermal alteration zones and mineral deposits. An expectation maximisation algorithm is then employed to solve the joint problem of identifying alteration zones in the measured data and estimating the fluid composition, based on the fit between the mineral abundances in the measured and predicted alteration zones. Using the hydrothermal alteration of granite as a test case (greisenisation), a range of synthetic tests is presented to illustrate how the methodology enables objective inference of the mineralising fluid. For field data from the East Kemptville tin deposit in Nova Scotia, the technique generates inferences for the fluid composition which compare favourably with previous independent estimates, demonstrating the feasibility of the proposed calibration methodology.

Similar content being viewed by others

1 Introduction

Big data and small data approaches combined with geological expert knowledge have been employed to obtain insight from measured chemical or mineralogical compositions. Small data approaches are characterised by the screening of large data sets to obtain a subset of high-quality data for a subsequent interpretation (e.g. Herzberg et al. 2010). In contrast, big data approaches rely on statistical methods to analyse large data sets to discover groups and trends (e.g. De Souza et al. 2019). Both approaches rely on expert knowledge of the geological context to make sense of the compositional groups discovered, to screen the measurements prior to analysis, and to adjust parameters related to the statistical methods, for example, the minimum separation between the clusters to be identified. This is often an iterative, subjective and qualitative process.

Reactive transport models enable numerical simulation of the hydrothermal alteration processes that have led to the observed groups and trends, and thus can contribute to an understanding of the underlying processes (e.g. Halter et al. 1998; Geiger et al. 2002). Assuming that a reactive transport model can be formulated that is a good approximation of the geological processes, some of its parameters, such as the composition of the fluid entering the system, will still need to be defined. In the absence of any other sources of information, a natural choice for the fluid composition is the one that leads to the smallest difference between the measured data and the modelled data.

Provided that chemical reactions go to their completion, hydrothermal alteration due to fluid infiltration at constant temperature and pressure leads to the formation of zones with distinctively different mineralogical and chemical compositions. Calibration of the underlying model relies on quantifying how well the modelled alteration zones match measured data, and this requires the assignment of each measurement to an alteration zone. For a well-characterised borehole or geological outcrop, the assignment might be trivial, but this is no longer the case when faced with random samples of an alteration system.

A similar problem occurs when fitting a Gaussian mixture model to a set of points, where the unknowns are the means of the Gaussians, their covariance matrices and mixture weights, and the assignment of each point to a cluster. The categorical variable assigning points to clusters is commonly referred to as a latent or hidden variable. Fitting the Gaussians to the points requires the estimation of the component means, covariance matrices and weights, as well as inference of the hidden variables. Thus, the mineralogical assignment problem can be solved with methods that are strongly related to the fitting of Gaussian mixture models. Specifically, we use an expectation maximisation algorithm (Dempster et al. 1977) to update the model parameters as part of an iterative non-linear approach.

Alteration zones can be observed at different scales, from within centimetres of a fault (e.g. Nishimoto and Yoshida 2010) to remote sensing data sets covering large spatial geological features, for example across a fold belt (e.g. Laukamp et al. 2011). If the aim is to determine the composition of the fluid causing an alteration, the scale on which a sequence of alteration zones is observed is less important, due to the fractal nature of alteration zones. That is, the interest is in calibrating a model that can predict the alteration zones in the correct sequence, and the scale of the alteration zones in time and space is of less concern. Thus, the model to be derived from those data needs to be only as complex as necessary to fit the compositional data within the noise level and should not contain features that are not essential to match the data. Such an Occamist perspective has been argued for in the geophysical literature for more than 35 years (e.g. Constable et al. 1987), and from this point of view, a one-dimensional reactive transport model is sufficient to explain the observations, given that it can be used to predict the observed alteration zones.

Information about fluids responsible for the formation of an alteration pattern, and ultimately an ore deposit, is typically obtained through the analysis of fluid inclusions or mineral stability diagrams. While those fluid inclusions are potential direct observations of the fluid responsible for the formation of an alteration pattern, the analytical data are often incomplete (e.g. there are no data on such critical parameters as fluid acidity or redox state). Fluid inclusions may also be affected by a variable degree of post-entrapment modifications/alterations, and the analysis itself can be time-consuming. Similarly, the applicability of two-dimensional mineral stability diagrams is limited due to the multi-component nature of many alteration systems. The approach introduced here allows a rapid and approximate inference to be made with respect to the fluid composition, given observations of mineral abundances or chemical concentrations.

We introduce the methodology initially for the simple example of the alteration of granite by an acid Na–K–Cl-rich fluid. This is accompanied by a discussion of how well we can expect to be able to resolve the model parameters we seek to infer from the data, particularly as the hydrothermal alteration system becomes more complex. Finally, to study the performance of the method, it is applied to a setting where there is a wealth of information about the fluid composition. In this application we seek to predict the fluid composition through inference from normative mineralogical compositions, capturing the greisenisation at the East Kemptville tin deposit in Nova Scotia, Canada.

2 Method

A synthetic example centred around the hydrothermal alteration of granite with the parameters given in Table 1 is used to introduce the inference methodology. While the behaviour of this simple hydrothermal system could be captured with two-dimensional mineral stability diagrams, it is used here to introduce the inference methodology, with the understanding that once the system becomes more complex, its behaviour can no longer be captured by two-dimensional mineral stability diagrams.

In the following, we introduce the forward problem, use it to generate a synthetic data set, and finally try to independently infer the fluid composition that was used to generate the synthetic data set in the first instance. The forward model predicts the concentrations (mol/m\(^{3}\)) of quartz (Qz), pyrophyllite (Prl), K-feldspar (Kfs), albite (Ab) and muscovite (Ms) reported in the alteration zones. A datum or observation is then given by the concentrations of the aforementioned minerals, and the dimensionality of the data space is given by the number of minerals being tracked/measured. For a fixed number of sample observations, the data set becomes sparser as the dimensionality of the (multivariate) data space increases, and thus it becomes more difficult to extract features, as for example in cluster analysis (e.g. Aggarwal et al. 2001). Hence, we seek to use a data space that is as low-dimensional as possible but still contains the features present in the higher-dimensional data space, that is, the alteration zones. The desired dimensionality reduction is achieved here by normalising the subset of thermodynamically relevant concentrations, that is, the ones the model parameters are sensitive to. The parameters to be inferred from these data are a subset of the parameters that define the reactive transport model; they will in the following be referred to as the “model parameters”, here comprising the concentrations of NaCl and KCl in mol/kg of H\(_{2}\)O in the fluid being injected.

The data being fitted are concentrations; model-based clustering via finite mixture models has previously been applied to such compositional data to identify groupings and infer parameters for the underlying distributions (e.g. Mooijaart et al. 1999; Comas-Cufí et al. 2016; Pal and Heumann 2022). Similarly, inference of the model parameters relies on the assumption that the data can be described by a finite mixture of multivariate distributions, here log-normal, with the complication that the means of the multivariate distribution are based on predictions made by the reactive transport model; our explanatory variables are the parameters of the reactive transport model. Concentrations and normalised concentrations cannot be negative, and it is thus convenient to model them log-normally distributed.

Fitting a model using a normal distribution to quantify the misfit residuals between the predicted and measured logarithm of normalised concentrations is related to applying an additive log-ratio transform to the measurements (Aitchison 1982), and then assuming that they are normally distributed. Different log-ratio transforms have been proposed for the analysis of compositional data (e.g. Aitchison 1986; Pawlowsky-Glahn and Egozcue 2006), and depending on the statistical method to be used to identify structures in the data, one log-ratio transform might be preferred to others. For example, it can be shown that the groups resulting from a distance-based clustering applied to additive log-ratio-transformed data depend on the choice of denominator (e.g. Hron et al. 2021). Conversely, Greenacre et al. (2021) argue for the additive log-ratio transformation with optional amalgamation and carefully selected components/ratios, for a setting where there is a geochemical model to be tested against the data. Similarly, successful inference will depend on selecting a data space that reflects knowledge about the thermodynamic processes embedded in the reactive transport model, and this needs to be expressed by the choice of log-ratios. The information the data carry about our model parameters is subject to the choice of data space and the thermodynamic processes captured in the reactive transport model.

2.1 Forward Problem

Solving the inverse problem relies on first defining a forward model that is a differentiable mapping from the fluid composition to the alteration zone centroids, that is, their predicted average mineralogical or chemical composition. Throughout this work the prediction of the alteration zones is based on the solution of a one-dimensional reactive transport problem with Reaktoro (Leal 2015; Leal et al. 2017). The reactive transport model is run forward in time until an expected number of alteration zones have formed and can be identified. The number of expected alteration zones is directly dependent on the model setup. Thus, prior to attempting to calibrate the model, one needs to understand how many alteration zones the model generates.

a Mineral concentration as a function of the distance from the fluid injection boundary after 625 days. b Extracted alteration zones, with zone 3 representing the most altered zone and zone 1 the unaltered rock. c Extracted alteration zones as a stack-bar plot emphasising the difference in total concentrations between the zones which is a consequence of the different amounts (mol) of reactants and products in the reactions that produce the mineral assemblages. d Alteration zones in the data space given by the normalised concentrations of Ms/Kfs and Ab/Kfs

In the one-dimensional reactive transport model, the existence, position and spatial extent of the alteration zones depends, among other factors, on the amount of fluid being injected, which is a function of time. Figure 1a shows an example spatial distribution of the concentration of the selected minerals after 625 days simulation, where three clear alteration zones with a distinct mineralogical composition have formed. If one sought to match the actual length and time scales of this predictive model, the resulting inverse problem would become very challenging, introducing additional parameters that would need to be constrained by the data, such as the fluid flux (Dolejš 2015). Thus, the non-localised concept of alteration zones is critical in that it is used to link the model prediction and the observed data.

A closer inspection of the transition from zone 2 to 3 indicates that the model could form a fourth alteration zone consisting of Qz and Ms, but the limited spatial extent of the zone means that for the given setting, this zone is unlikely to be usable in an inference context. Thus, the synthetic example is based on the three distinct alteration zones, with one being the unaltered rock, and so one can expect to be able to recover two model parameters.

Given the results of the reactive transport model for a given time step, the alteration zones can be extracted using clustering in the space spanned by the five minerals (Qz, Prl, Kfs, Ab and Ms) and the distance from the boundary across which the fluid is being injected. For a variety of models and data space definitions, density-based spatial clustering of applications with noise (DBSCAN) (Ester et al. 1996) has consistently been able to extract the alteration zones, particularly after scaling the input data. Figure 1d shows the extracted alteration zones which represent the model prediction that will be compared with the observed measurements. The difference in total concentrations (mol/m\(^{3}\)) between the three zones in Fig. 1b and c is due to the different amounts (mol) of reactants and products in the reactions that produce the mineral assemblages of different zones. Further, the underlying reactive transport is generally not a closed system, and minerals can be formed by consuming species in the fluid being injected and minerals present in the rock. Therefore, the concentrations of sets of minerals or elements no longer sum up to the same total concentration for different alteration zones (e.g. Pingitore and Engle 2022).

Given that data are typically sparse in high dimensions, it is desirable to find a low-dimensional data space that still captures the three alteration zones. Care must be taken when employing such dimensionality reductions to ensure that the reduced data space still captures the alteration zones that are predicted by the model and expected to be present in the field data. Figure 1d shows that for this example, a data space spanned by the concentrations of muscovite and albite normalised by the concentration of K-feldspar captures the three alteration zones.

2.2 Synthetic Data Set

The predictions made by the forward model can be used to generate synthetic data sets that in turn will be used to illustrate how well a model can be recovered from data; that is, we simulate a known model and seek to recover it. In the following, normalised measurements for a given alteration zone are assumed to follow a multivariate log-normal distribution, with the probability density function given as

where the vector \({\textbf{x}}\) represents a measurement with k components, \({\text {e}}^{(x)}=\exp (x)\) is the exponential function, and \({\varvec{\mu }}\) and \(C_{\textrm{d}}\) are the parameters defining the log-normal distribution. Under the assumption of no correlation between the normalised mineral concentrations that form a measurement, \(C_{\textrm{d}}\) is a diagonal matrix with its diagonal elements given by \(\sigma ^2\), that is, the square of the standard deviation which is equal to the variance. Then we may model a distribution with mean \({\varvec{\mu }}_x\) and variance \({\varvec{\sigma }}_{x}^{2}\) by choosing

with the variance (i.e. the diagonal elements of the covariance matrix \(C_{\textrm{d}}\)) given as

For each of the alteration zones in Fig. 1d, 75 samples are now generated with the diagonal elements of the data covariance matrix given by \(\sigma _{x\textrm{Ms}/{\textrm{Kfs}}}=0.15\) and \(\sigma _{x \textrm{Ab}/{\textrm{Kfs}}}=0.15\), and \({\varvec{\mu }}_x\) given by prediction made using the true model. The variances were chosen so that there are distinct alteration zones (clusters) in the synthetic data. The synthetic data set is shown in Fig. 2, and in the following, a fluid composition will be inferred from these data and compared with the fluid composition (true model) that was used to generate the data set. There is no guarantee that each data point can be produced by the underlying reactive transport model, in other words that it is thermodynamically feasible. Nonetheless, the limitations of such synthetic data sets are likely to be minor compared with the complexities the algorithm will encounter when applied to field data.

2.3 Model Parameters

The ability to constrain and accurately determine the parameters of the reactive transport model is influenced by several factors, including the number of alteration zones present in the data, the dimensionality and choice of the data space, and the number of observations. These elements play a role in determining the accuracy of parameter estimates. It is important to consider that the composition of the fluid in equilibrium with the rock may differ from the fluid being injected. However, in the selected data space, the unaltered rock does not provide information about the injected fluid, as the data only encompass a subset of minerals. As a result, in order to maintain a favourable data-to-parameter ratio, the inference is limited to two model parameters.

The concentrations of NaCl and KCl in mol/kg of H\(_{2}\)O for the fluid being injected now become the elements of the model vector \({\textbf{m}}\). The data are given by the vector \({\textbf{y}} = y_{(i-1)r+ k}\), where i represents the measurement index and k the mineral ratio index; more generally, k is the data space index and r the dimensionality of the data space. The ith measurement \([y_{(i-1)r+1}, y_{(i-1)r + 2},\ldots , y_{ir}]\) formed by r components is then given as \(y_{(i-1)r+1..ir}\), with .. denoting an integer interval that includes both endpoints and all indices being unit-offset.

The model prediction is given as \(f({\textbf{m}}) = f({\textbf{m}})_{(j-1)r +k}\), where j represents the alteration zone index, k the mineral ratio (i.e. data space index) and r the dimensionality of the data space. Hence, \(f({\textbf{m}})_{(j-1)r+1..jr}\) is the prediction of the r mineral ratios, that is, components for the jth alteration zone.

For practical implementation, it is convenient to organise the model predictions and observations as matrices, and this is discussed in Appendix A. Information about the local shape of the likelihood function in addition to its value for a given model allows for efficient optimisation. A finite difference approach is used to compute the partial derivatives for each prediction with respect to the model parameters to form a numerical Jacobian, with the computation being parallel over the model parameters.

2.4 Inverse Problem

The inverse problem belongs to the family of latent variable problems. In addition to observable variables and model parameters, there are latent variables that are inferred through a mathematical model from the observed variables. When fitting the reactive transport model, the aim is to infer the composition of the fluid being injected (i.e. the model parameters), and thus one needs to determine for each measurement the alteration zone to which it belongs. The alteration zone assigned for each measurement is the hidden or latent variable. It is impossible to infer the latent variables and the model parameters simultaneously, as simultaneous optimisation in the joint discrete and continuous space is intractable. The expectation maximisation algorithm (Dempster et al. 1977) solves this by choosing a set of arbitrary values for one of the two classes of parameters and then using it to estimate the second class. The new values for the second class are then used to update the estimate for the first class. It iterates between these two steps until the model estimate converges. The step to update the information about the latent variables is referred to as the expectation step, whereas the one updating the model parameters is referred to as the maximisation step. Here, the alteration zone to which each measurement belongs is inferred with the expectation step, and the concentrations of the fluid components are determined with the maximisation step.

2.4.1 Expectation Maximisation

The expectation maximisation algorithm may start with the expectation or the maximisation step. For this problem, if the first step is the maximisation step, each measurement has to be randomly assigned to an alteration zone according to some distribution, for example, one that assumes that the alteration zones are equiprobable. Further to this, the fluid composition would still require an initial guess, as in the maximisation step only an update to the fluid composition can be computed. It is thus simpler to start the expectation maximisation algorithm with the expectation step and provide an initial guess for the fluid composition.

The expectation step computes the membership matrix Z comprising the membership weights \(w_{i,j}\) for the ith measurement with respect to the jth alteration zone given as

with

Here, the data covariance matrix \(C_{\textrm{d}} = {{\,\textrm{diag}\,}}({\varvec{\sigma }}^2) = {{\,\textrm{diag}\,}}(h(f({\textbf{m}})_{(j-1)r+1..jr},{\varvec{\sigma }}_{\varvec{x}}))\) is a diagonal matrix with its diagonal elements representing the uncertainty or variability of the concentration of a given mineral within an alteration zone, with \({\varvec{\sigma }}_{\varvec{x}}\) the desired standard deviation of the log-normal distribution. In principle, it is possible to treat the data covariance matrix as unknown, that is, to infer its values simultaneously with the model (i.e. the fluid composition). This could be combined with an ability to classify possibly spurious observations as not belonging to an alteration zone. Doing so would increase the number of unknowns, namely the pieces of information to be constrained with the information provided by the measurements, and this would require a larger number of measurements than what is available in the field data example. To avoid this, we fix \({\varvec{\sigma }}_{\varvec{x}}\) a priori to balance identifiability with multimodality: if \({\varvec{\sigma }}_{\varvec{x}}\) is too large with respect to separation of the means of the alteration zones, the likelihood function will be flat, and there will be little difference between different models with respect to the fit to the data. If \({\varvec{\sigma }}_{\varvec{x}}\) is too small, the likelihood function will contain several local maxima, and an iterative non-linear approach is more likely to get trapped in a local maxima. Choosing \({\varvec{\sigma }}_{\varvec{x}}\) as 25% of the range spanned by the observations leads to a smooth log-likelihood function with a large basin of attraction amenable to the deployment of an iterative non-linear approach to optimisation.

In the subsequent maximisation step, the membership matrix Z is now used to re-weight the Jacobian X when computing the model update \(\Delta {\textbf{m}}\). The full Newton step for the model update is given by

where \(\Delta {\textbf{y}}\) is the difference between each observation and the prediction for the most probable alteration zone it represents, with elements \( \Delta y_{(i-1)r+k} = y_{(i-1)r+k} - f({\textbf{m}})_{(j-1)r+k}\), with \(j = {{\,\mathrm{arg\,max}\,}}_i Z_{i,:}\). Here, i represents the measurement index, j the alteration zone index, k the data component index and r the dimensionality of the data space. A backtracking line search is used to iteratively shrink the full Newton step until the negative log likelihood

decreases.

Based on the synthetic data shown in Fig. 2, the expectation maximisation algorithm is now employed to infer the composition of the fluid entering the model. The initial guess for the fluid composition is significantly different from the true fluid composition used to generate the synthetic data set. It is only in the third alteration zone, representing the most altered rock, where the two fluid compositions lead to distinctly different predictions. After four expectation and maximisation steps, the changes to the inferred fluid composition become negligible (i.e. \(\Vert \Delta {\textbf{m}} \Vert _{2} < 10^{-6}\)). This corresponds to four Jacobian evaluations and 22 evaluations of the log-likelihood function, or 30 calls to the underlying reactive transport model. The time to infer the fluid composition depends on the time it takes to solve the reactive transport problem, which for this example is in the order of minutes. The maximum a posteriori (MAP) solution is the best-fitting model that has been found by the expectation maximisation algorithm. The success of the inference is evident in that it matches the synthetic data better than the initial model, and its fluid composition is close to the fluid composition of the true model, that is, the model that was used to generate the synthetic data (Fig. 1).

Normalised mineral concentrations for the initial guess, the true model, the MAP model and the observations generated based on the predictions for the true model. The alteration zones are numbered from 1 to 3, with 1 representing the unaltered rock, and 3 the most altered rock. Fluid compositions inferred from the synthetic data are plotted together with the initial model and the true model (i.e. the model used to generate the synthetic data). For all alteration zones, the MAP model prediction, the true model prediction, and the mean of the alteration zone samples plot on top of each other

In Fig. 2, bootstrapping with replacement is employed to explore the range of models that fit the data (i.e. the robustness of the inferred fluid composition). Successful inference of a model from data relies typically on the inverse problem being overdetermined, that is, there are more observations than model parameters that need to be constrained. Increasing the number of measurements for each alteration zone and/or decreasing the amount of log-normally distributed noise used to generate the synthetic data set typically leads to the recovered models being closer to the true model. This example seeks to constrain two model parameters from three alteration zones, of which only one turns out to be sensitive to the declared model parameters, and thus, not surprisingly, the solution exhibits typical features of non-uniqueness. There are a wide range of models that fit the data.

One way to address this is to impose additional constraints on the model update calculated in the maximisation step, such as hard lower and upper bounds for individual model parameters or functions thereof. This can be easily achieved by replacing the Newton step and the backtracking line search with a method for constraint optimisation such as the method of moving asymptotes (Svanberg 2002). Another option to address the non-uniqueness is to improve the sensitivity of the model to the data by using a different data space.

In the next example, the inverse problem has been recast as a chemical inference problem, where the data consist of normalised measurements of K and Na relative to H. This is motivated by the fact that the chemical reaction is controlled by the ratios of NaCl to HCl and KCl to HCl as described in previous studies such as Launay et al. (2019). The new data space and alteration zones are now more reflective of the composition of the injected fluid, as demonstrated by the improved recovery of the true model compared to the initial model, as shown in Fig. 3. Despite the synthetic data set having only 10 measurements per alteration zone, with means slightly different from the true model predictions due to the low number of samples, the results show that the choice of data space can significantly impact the ability to recover the model from the data.

Normalised element concentrations for the initial model, the true model, the MAP model and the observations generated based on the predictions for the true model. The alteration zones are numbered from 1 to 3, with 1 representing the unaltered rock, and 3 the most altered rock. Fluid compositions inferred from the synthetic data are plotted together with the initial model and the true model (i.e. the model used to generate the synthetic data)

The recovery of fluid composition for a more complex model, where \(\hbox {CaCl}_2\) and \(\hbox {MgCl}_2\) have been added to the fluid entering the system, is illustrated in Fig. 4. The model generates five alteration zones, and the synthetic data set again consists of 75 measurements per alteration zone that overlap. It is still possible to robustly recover the concentrations of NaCl, KCl, \(\hbox {MgCl}_2\) and \(\hbox {CaCl}_2\) with an initial model that is significantly different from the true model. This is because the number of alteration zones influenced by the fluid composition is larger than the number of model parameters.

Normalised element concentrations for the initial guess, the true model, the MAP model and the observations generated based on the predictions for the true model. The fluid compositions inferred from the synthetic data are plotted together with the initial model and the true model (i.e. the model used to generate the synthetic data). The alteration zones are numbered from 1 to 5, with 1 representing the unaltered rock and 5 the most altered rock. For all alteration zones, the MAP model prediction plots on top of the true model prediction

3 Application to Field Data

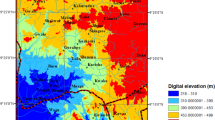

The East Kemptville tin deposit is a greisen-hosted tin deposit in Nova Scotia, where detailed information on fluid and mineralogical composition was published by Halter et al. (1995). Halter et al. (1998) used these data to set up titration and multi-pass fluid flow models to obtain insight into the role alteration-induced changes and fluid chemistry play in cassiterite deposition. They identify the fluid responsible for the greisenisation of the leucogranite as a Na-rich fluid containing variable concentrations of Fe, Mn, K, Si and F. Here, these studies, and particularly the information on rock composition (different alteration zones) given in Halter et al. (1995), are used to illustrate the applicability of the model calibration methodology to field data.

The data from which the fluid composition is inferred are the normative mineralogical composition data given in Halter et al. (1995). These data were calculated from measurements of a drill core that intersects all the alteration zones present in the deposit. From the normative weight composition provided in wt%, normalised mineral concentrations in mol/kg are calculated and used as the data to be matched. The four-dimensional data space is given by the concentrations of K-feldspar (Kfs), albite (Ab), muscovite (Ms) and topaz (Tpz) normalised by the concentration of quartz (Qz). The concentration of Qz was chosen for the normalisation because it is present in each alteration zone. Such a normalisation reduces the dimensionality of the data space, which is desirable, as illustrated by the synthetic tests.

The lower panel shows the nornalised mineral concentrations calculated from the normative weight compositions for the diamond drill hole 90-1 ordered as a function of distance along the borehole (Halter et al. 1995). The upper panel shows for each measurement the probability that it belongs to one of the four alteration zones. Alteration zones are numbered from 1 to 4, with 1 representing the unaltered rock and 4 the most altered rock

With the measurements sorted as a function of distance along the borehole, the observations (Fig. 5) contain four distinct alteration zones, with the first zone representing the unaltered leucogranite. The alteration zones are only identifiable from the combination of the four normalised mineral concentrations, and no single normalised concentration alone can be used to identify them. Thus, it is necessary to use a four-dimensional data space combined with a low number of data points on mineralogical composition, which will limit the robustness of any model parameter estimates. Given that one of the four zones represents unaltered rock, at most three independent model parameters can be constrained. However, the fluid described in Halter et al. (1998) contains more than three components. It is described as a sodium-rich fluid containing Cl, Na, Fe and minor amounts of K, Si, F and Sn. It is not possible to constrain such a large number of model parameters given that the measured data only contain three alteration zones, in addition to the unaltered rock. Hence, inferences are restricted to the concentrations of HCl, KCl and HF, with concentrations for the other components given by Halter et al. (1998) based on estimates for a zoned greisen using mass balance considerations. The motivation for the subset chosen here is the critical role of fluorine in the formation of typomorphic greisen assemblages (e.g. the presence of topaz).

Observed normalised mineral concentrations and the inferred model-predicted normalised mineral concentrations. The inset figure compares the inferred concentration for HCl, KCl and HF with the result from Halter et al. (1998). The alteration zones are numbered from 1 to 4, with 1 representing the unaltered rock and 4 the most altered rock

The expectation maximisation methodology requires an initial guess for the concentrations of the components to be inferred, and it is here chosen to be intentionally different from the values given in Halter et al. (1998). The inferred best-fitting model is thus different from the initial model, and the bootstrap samples are focused around the best-fitting model (Fig. 6). The recovered fluid composition compares favourably with the one estimated for a zoned greisen from mass balance considerations by Halter et al. (1998), falling mostly within the range of models recovered by bootstrapping with replacement for KCl and HF. While this is not the case for HCl, the MAP solution for it is nevertheless closer to the concentration given in Halter et al. (1998) than that of the starting model. The bootstrap sample predictions show limited variability in the data space, and this is a consequence of the information captured in the reactive transport model. The field data example confirms what has been observed in the synthetic tests. If a reactive transport model that predicts the alteration zones exists, the methodology introduced here allows for components of the reactive transport model, such as the partial composition of the fluid, to be inferred.

An important by-product of the expectation maximisation algorithm is that for each measurement in the set of observations, it furnishes the probability for it to belong to one of the predicted alteration zones (Fig. 5). We note that the implementation of the expectation maximisation algorithm does not use any information about the spatial location of the measurements or the ordering of the alteration zones to assign measurements to alteration zones. Nevertheless, the most probable alteration zone for each measurement is in agreement with the alteration zones one would identify from a visual inspection of the data, as reported in Halter et al. (1995). The recovered probabilities do capture the inherent ambiguities in the data around where one alteration zone begins and another ends, which is particularly evident in the transition from sericitized leucogranite to quartz sericite greisen, and in the subsequent transition to quartz topaz greisen (Fig. 5). The prediction of such a gradual transition between alteration zones is more in line with what one encounters in nature.

4 Conclusion

We have introduced a new latent variable-based methodology that enables inferences to be drawn about model fluid composition, given measurements of mineralogical or chemical compositions. A smooth mapping from Reaktoro (Leal 2015; Leal et al. 2017) simulations to alteration zone centroids is used to solve the forward problem of predicting alteration zones given the composition of the unaltered rock and a fluid composition. The expectation maximisation algorithm then treats the identification of alteration zones and the inference of the fluid composition from measurements as a joint problem. The alteration zones (i.e. clusters) identified in this manner are guaranteed to be thermodynamically feasible because they are predicted by the reactive transport model. This is different from a setting where the clustering is performed without accounting for the strong and necessary conservation laws embedded in a reactive transport model.

Given measurements of the mineralogical or chemical compositions, this methodology provides an efficient way to make objective inferences about aspects of a hydrothermal alteration system, such as the fluid composition. For the East Kemptville tin deposit, the inferred information is consistent with what has been reported in the literature based on detailed analyses of samples. The true value of this model calibration methodology is expected to be evident in scenarios where, for example, there are no measurements of fluid inclusions, but one aims to understand the likely composition of a mineralising fluid given field observations of mineralogical or chemical compositions.

The underlying assumption is that the reactive transport is capable of predicting the alteration zones in the data, and all alteration zones are represented in the data. Naturally, a model system that is a poor approximation of the real system may have low explanatory power for a given data set. Additionally, not all alteration zones might be captured in the data, and thus even a correct model might have a poor fit to data. It is also possible to calibrate a model with fewer predicted alteration zones than observed, or more zones than observed. When presented with several models that fit the data, an Occamist approach would be to select the simplest model that still explains the data. For practical applications, assessing a wide range of candidate models together with the within-model variance (through bootstrapping) and overall model selection uncertainty would be a prudent approach to increase confidence in the results.

As expected, fluid models with a large number of components (i.e. higher-dimensional model parameter spaces) have less robust estimates. It is not possible to robustly constrain more model parameters than there are alteration zones that are sensitive to the model parameters. The dimensionality of the data space (i.e. the number of normalised mineral or chemical concentrations) also influences the capacity for robust inference of fluid composition: the higher the dimensionality of the data space, the greater the amount of data required to constrain the model. Thus, while it is necessary to ensure that the data space contains all the minerals or chemical elements needed to identify the alteration zones, in general the data space dimensionality should be kept low.

The machinery described in this paper quantifies the robustness and uncertainty of the inferred fluid compositions, which is valuable in planning data collection efforts. As an example, the apparatus provides the means to determine in principle the number of field measurements required to infer a fluid composition with a given certainty or to choose between two different reactive transport models describing two different scenarios for the processes that could explain the observations.

References

Aggarwal CC, Hinneburg A, Keim DA (2001) On the surprising behavior of distance metrics in high dimensional space. In: Van den Bussche J, Vianu V (eds) International conference on database theory, vol 1973. Springer, pp 420–434. https://doi.org/10.1007/3-540-44503-X_27

Aitchison J (1982) The statistical analysis of compositional data. J R Stat Soc B 44(2):139–177

Aitchison J (1986) The statistical analysis of compositional data. Chapman and Hall, Boca Raton

Comas-Cufí M, Martín-Fernández JA, Mateu-Figueras G (2016) Log-ratio methods in mixture models for compositional data sets. Stat Oper Res Trans SORT 40(2):349

Constable SC, Parker RL, Constable CG (1987) Occam’s inversion: a practical algorithm for generating smooth models from electromagnetic sounding data. Geophysics 52(3):289–300. https://doi.org/10.1190/1.1442303

De Souza S, Dubé B, Mercier-Langevin P, McNicoll V, Dupuis C, Kjarsgaard I (2019) Hydrothermal alteration mineralogy and geochemistry of the Archean world-class Canadian Malartic disseminated-stockwork gold deposit, southern Abitibi greenstone belt, Quebec. Canada. Econ Geol 114(6):1057–1094

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B (Methodol) 39(1):1–22. https://doi.org/10.1111/j.2517-6161.1977.tb01600.x

Dolejš D (2015) Quantitative characterization of hydrothermal systems and reconstruction of fluid fluxes: the significance of advection, disequilibrium, and dispersion. In: Proceedings of the 13th Biennial SGA meeting, 24–27 August 2015, Nancy, France

Ester M, Kriegel H-P, Sander J, Xu X (1996) A density based algorithm for discovering clusters in large spatial databases with noise. In: Proceedings of the 2nd ACM international conference on knowledge discovery and data mining (KDD), Portland, OR

Geiger S, Haggerty R, Dilles JH, Reed MH, Matthai SK (2002) New insights from reactive transport modelling: The formation of the sericitic vein envelopes during early hydrothermal alteration at Butte. Montana. Geofluids 2(3):185–201. https://doi.org/10.1046/J.1468-8123.2002.00037.X

Greenacre M, Grunsky E, Bacon-Shone J (2021) A comparison of isometric and amalgamation logratio balances in compositional data analysis. Comput Geosci 148:104621. https://doi.org/10.1016/j.cageo.2020.104621

Halter WE, Williams-Jones AE, Kontak DJ (1995) Origin and evolution of the greisenizing fluid at the East Kemptville tin deposit, Nova Scotia. Canada. Econ Geol 93(7):1026–1051

Halter WE, Williams-Jones AE, Kontak DJ (1998) Modeling fluid-rock interaction during greisenization at the East Kemptville tin deposit: implications for mineralization. Chem Geol 150(1–2):1–17

Herzberg C, Condie K, Korenaga J (2010) Thermal history of the Earth and its petrological expression. Earth Planet Sci Lett 292(1–2):79–88. https://doi.org/10.1016/j.epsl.2010.01.022

Hron K, Coenders G, Filzmoser P, Palarea-Albaladejo J, Famera M, Matys Grygar T (2021) Analysing pairwise logratios revisited. Math Geosci 53(7):1643–1666. https://doi.org/10.1007/s11004-021-09938-w

Laukamp C, Cudahy T, Cleverley JS, Oliver NH, Hewson R (2011) Airborne hyperspectral imaging of hydrothermal alteration zones in granitoids of the Eastern Fold Belt, Mount Isa Inlier. Australia. Geochem Explor Environ Anal 11(1):3–24. https://doi.org/10.1144/1467-7873/09-231

Launay G, Sizaret S, Guillou-Frottier L, Fauguerolles C, Champallier R, Gloaguen E (2019) Dynamic permeability related to greisenization reactions in Sn-W ore deposits: quantitative petrophysical and experimental evidence. Geofluids 2019:1–23. https://doi.org/10.1155/2019/5976545

Leal AM (2015) www.reaktoro.org

Leal AM, Kulik DA, Smith WR, Saar MO (2017) An overview of computational methods for chemical equilibrium and kinetic calculations for geochemical and reactive transport modeling. Pure Appl Chem 89(5):597–643. https://doi.org/10.1515/pac-2016-1107

Mooijaart A, Van Der Heijden PGM, Van Der Ark LA (1999) A least squares algorithm for a mixture model for compositional data. Comput Stat Data Anal 30:359–379

Nishimoto S, Yoshida H (2010) Hydrothermal alteration of deep fractured granite: effects of dissolution and precipitation. Lithos 115(1–4):153–162. https://doi.org/10.1016/j.lithos.2009.11.015

Pal S, Heumann C (2022) Clustering compositional data using Dirichlet mixture model. PLoS ONE 17(5):e0268438. https://doi.org/10.1371/journal.pone.0268438

Pawlowsky-Glahn V, Egozcue JJ (2006) Compositional data and their analysis: an introduction. Geol Soc Spec Publ 264:1–10

Pingitore NE, Engle MA (2022) Compositional closure-its origin lies not in mathematics but rather in nature itself. Minerals 12(1):74. https://doi.org/10.3390/min12010074

Svanberg K (2002) A class of globally convergent optimization methods based on conservative convex separable approximations. SIAM J Optim 12(2):555–573

Acknowledgements

J.R.H would like to acknowledge the CSIRO’s Deep Earth Imaging Future Science Platform for supporting this research. A.M.M.L. thanks the Swiss National Science Foundation (SNSF) for funding through the Ambizione PZ00P2-179967 grant.

Funding

Open access funding provided by CSIRO Library Services.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors do not have any conflict of interest or financial disclosures to report.

Appendix A: Membership Matrix Calculation

Appendix A: Membership Matrix Calculation

For practical implementation, the data (i.e. normalised measurements) given by the vector \({\textbf{y}} = y_{(i-1) r + k}\), where i represents the measurement index, k the mineral ratio index (or more generally the data space index), and r the dimensionality of the data space, can be organised as a \(q \times r\) observation matrix Y, with the number of observations being q. Given a model vector \({\textbf{m}}\) of length p, the model prediction \(f({\textbf{m}}) = f({\textbf{m}})_{(j-1)r+k}\) (i.e. \(g(f({\textbf{m}})_{(j-1) r + 1...ir},{\varvec{\sigma }}_{\varvec{x}})\)) with g() as per Eq. 2), where j represents the alteration zone index and k the mineral ratio (data space) index, is then expressed as an \(s \times r\) matrix \(\hat{Y}\), with s the number of predicted alteration zones and r the dimensionality of the data space, here typically the number of normalised concentrations being considered. For each predicted alteration zone j, an \(r \times r\) covariance matrix \(C_{\textrm{d}} = {{\,\textrm{diag}\,}}({\varvec{\sigma }}^{\varvec{2}})\) (i.e. \({{\,\textrm{diag}\,}}(h(f({\textbf{m}})_{(j-1)r +1...jr},{\varvec{\sigma }}_{\varvec{x}})))\), with h() as per Eq. (3), expresses the spread of the data samples representing the alteration zone.

In the expectation step, the computed membership weights \(w_{i,j}\) for the ith measurement with respect to the jth alteration zone are then given by

with

The maximisation step uses a membership-matrix re-weighted Jacobian to compute a model update (Eq. 6). The Jacobian comprises partial derivatives of each prediction matrix entry with respect to the model parameters. It is thus an \((s r) \times p\) matrix, where \(X_{h,l}\) is the derivative of the prediction \(\hat{Y}_{ \lfloor h/r \rfloor +1, h-\lfloor h/r \rfloor +1}\) with respect to the lth model parameter, and \( \lfloor \) \(\rfloor \) denotes the floor of integer division. To re-weight the Jacobian, the membership weights \(w_{i,j}\) are used to form the \((q r)\times (sr)\) membership matrix Z, with \(Z_{i,j}\) = \(w_{\left\lfloor {i/r}\right\rfloor +1,\left\lfloor {j/r}\right\rfloor +1}\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hauser, J., Miron, G.D., Kyas, S. et al. Towards a Model-Based Interpretation of Measurements of Mineralogical and Chemical Compositions. Math Geosci (2024). https://doi.org/10.1007/s11004-023-10121-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11004-023-10121-6