Abstract

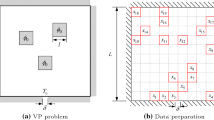

Highly integrated electrical components generate intense heat during operation, which can seriously degrade their performance if the heat dissipation structure is not properly designed. In this study, an end-to-end framework for predicting the topology-optimized heat dissipation structure, which accounts for the underlying physical mechanisms, was established by combining a convolutional neural network (CNN) with the moving morphable components (MMC) method. To address the sparsity of the physical field matrix caused by the initial component distribution in the MMC method, a CNN model was established that takes as input the temperature gradient information of both the homogeneous material and the initial component layout. Compared with seven other input forms, the proposed CNN model accounts for both the initial component layout and the physical field information of the structure, and therefore predicts the topology of the heat dissipation structure more accurately. In addition, an improved penalty mean square error (PMSE) loss function was proposed by introducing a penalty factor; it improves the prediction accuracy of the CNN model at the structural boundary and yields more accurate and efficient heat dissipation performance. Several 2D and 3D numerical examples verify the effectiveness of the proposed framework and of the dual temperature gradient input model. The framework provides a new and efficient approach to the topology optimization of heat dissipation structures in the packaging of electronic equipment.
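To make the idea of a boundary penalty concrete, the following Python sketch implements one possible boundary-weighted mean square error. It is not the PMSE definition used in this work, which is given in the main text; the boundary rule (a pixel whose 4-neighbour lies on the other side of a 0.5 density threshold), the names `boundary_mask` and `penalty_mse`, and the default penalty factor of 5.0 are all illustrative assumptions.

```python
"""Hypothetical boundary-weighted MSE in the spirit of a penalty MSE (PMSE).

Assumption: squared errors on the solid/void boundary of the target density
field are multiplied by a penalty factor. Illustration only, not the paper's
exact definition.
"""
from typing import List

Grid = List[List[float]]


def boundary_mask(target: Grid, threshold: float = 0.5) -> List[List[bool]]:
    """Mark a pixel as boundary if any 4-neighbour lies on the other side of threshold."""
    rows, cols = len(target), len(target[0])
    solid = [[v >= threshold for v in row] for row in target]
    mask = [[False] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < rows and 0 <= nj < cols and solid[ni][nj] != solid[i][j]:
                    mask[i][j] = True
                    break
    return mask


def penalty_mse(pred: Grid, target: Grid, penalty: float = 5.0) -> float:
    """Mean squared error with boundary pixels weighted by `penalty` (hypothetical value)."""
    mask = boundary_mask(target)
    total = 0.0
    count = 0
    for i, row in enumerate(target):
        for j, t in enumerate(row):
            weight = penalty if mask[i][j] else 1.0
            total += weight * (pred[i][j] - t) ** 2
            count += 1
    return total / count
```

With `penalty=1.0` the function reduces to the ordinary MSE, so the penalty factor directly controls how much more an error at the structural boundary costs than an error in the interior.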

Data availability statement

In this work, the sample sets were generated using the classic 188-line MMC program. The CNN framework and hyperparameters are given in this work. All source code, data, and models that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

This research was financially supported by the National Natural Science Foundation of China (No. U1906233), the National Key R&D Program of China (2021YFA1003501), the Fundamental Research Funds for the Central Universities (DUT22ZD209, DUT22QN251), and the Programs Supported by Ningbo Natural Science Foundation (2021J002). These supports are gratefully acknowledged.

Funding

National Natural Science Foundation of China (U1906233), Jun Yan; National Key R&D Program of China (2021YFA1003501), Jun Yan; Fundamental Research Funds for the Central Universities (DUT22ZD209, DUT22QN251), Jun Yan; Programs Supported by Ningbo Natural Science Foundation (2021J002), Jinlong Chen.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

The hyperparameters related to network training include the number of training epochs, the batch size, and the learning rate, among others. This appendix discusses how these hyperparameters were selected for the CNN structure and loss function established in the main text.

First, with the initial learning rate fixed, different batch sizes are compared. If the batch size is too large, the generalization capability of the model suffers; if it is too small, the gradient oscillates between adjacent iterations, which hinders convergence. Batch sizes of 4, 8, 16, 32, and 64 were tested, and the resulting loss function curves are shown in Fig. 19. For batch sizes of 4 (blue curve) and 8 (red curve), the curves oscillate violently over epochs 0–200. Because a model with strong generalization capability requires the loss to decline steadily, batch sizes of 4 and 8 are unsuitable for the current model. Among the remaining three values, a batch size of 16 gives a small loss that converges more smoothly, so 16 is selected as the batch size for training the current model.
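The screening logic above can be sketched in Python as follows. The oscillation measure (the fraction of steps within the early window in which the loss rises), the threshold `max_ratio=0.3`, and the function names are hypothetical choices for illustration; in this work the choice was made by inspecting the curves in Fig. 19.

```python
from typing import Dict, List


def oscillation_ratio(losses: List[float], window: int) -> float:
    """Fraction of consecutive steps within the first `window` epochs where the loss rises."""
    early = losses[: window + 1]
    if len(early) < 2:
        return 0.0
    rises = sum(1 for a, b in zip(early, early[1:]) if b > a)
    return rises / (len(early) - 1)


def select_batch_size(curves: Dict[int, List[float]], window: int = 200,
                      max_ratio: float = 0.3) -> int:
    """Discard oscillating curves, then pick the batch size with the lowest final loss.

    `curves` maps batch size -> per-epoch training loss (hypothetical input format).
    """
    stable = {bs: c for bs, c in curves.items()
              if oscillation_ratio(c, window) <= max_ratio}
    if not stable:
        raise ValueError("every candidate oscillates; relax max_ratio")
    return min(stable, key=lambda bs: stable[bs][-1])
```

The two-stage rule mirrors the text: stability in the early epochs is a hard filter, and the final loss only ranks the candidates that pass it.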

Second, the learning rate directly determines the convergence rate and prediction accuracy of the CNN model. With the batch size fixed at 16, initial learning rates of 1e-5, 5e-5, 1e-4, 5e-4, and 8e-4 were tested, and the resulting loss function curves are shown in Fig. 20.

With a learning rate of 1e-5 (blue curve), the loss oscillates violently over epochs 0–200 and decreases slowly. With a learning rate of 5e-5 (red curve), the loss decreases rapidly without severe oscillation. Further increasing the learning rate still makes the loss fall quickly, but the curve trends upward during the convergence stage, indicating that overfitting has occurred. A learning rate of 5e-5 is therefore chosen for training the current model.
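A similar sketch for the learning-rate choice, assuming the loss curves are available as per-epoch lists: it flags an upward trend in the convergence stage with a least-squares slope over the last `tail` epochs and, among the remaining rates, prefers the one whose loss has fallen furthest by epoch `probe`. Both window sizes and the function names are hypothetical and are not taken from this work.

```python
from typing import Dict, List


def tail_slope(losses: List[float], tail: int) -> float:
    """Least-squares slope (loss change per epoch) of the last `tail` values."""
    ys = losses[-tail:]
    n = len(ys)
    if n < 2:
        return 0.0
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def select_learning_rate(curves: Dict[float, List[float]], tail: int = 100,
                         probe: int = 200) -> float:
    """Pick a learning rate from per-epoch loss curves (hypothetical criterion)."""
    # Drop rates whose loss trends upward during the convergence stage.
    candidates = {lr: c for lr, c in curves.items() if tail_slope(c, tail) <= 0.0}
    if not candidates:
        raise ValueError("all learning rates show an upward trend")
    # Prefer the rate whose loss has fallen furthest by epoch `probe`,
    # which penalizes rates that decrease too slowly.
    return min(candidates,
               key=lambda lr: candidates[lr][min(probe, len(candidates[lr]) - 1)])
```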

Regarding the number of epochs, Figs. 19 and 20 show that the loss does not change significantly after 500 epochs, so changing the number of epochs beyond this point does not affect the prediction performance of the model. The number of epochs is therefore not discussed further in this appendix. The resulting hyperparameter settings of the current model are listed in Table 10.

Appendix 2

Figure 21 shows the 3D CNN architecture, and Table 11 lists the hyperparameters of the proposed 3D CNN architecture.

Figures 22 and 23 show the convergence of the HC-TG model during training in the 2D and 3D cases, respectively. In both figures the training and validation losses agree closely, indicating neither overfitting nor underfitting.
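The agreement between training and validation losses can be checked with a simple rule, sketched below. The averaging window `last`, the relative-gap threshold `max_gap`, the absolute loss threshold `max_loss`, and the function name are hypothetical and would need to be tuned to the loss scale of the HC-TG model.

```python
from typing import List


def diagnose_fit(train: List[float], val: List[float], last: int = 50,
                 max_gap: float = 0.1, max_loss: float = 0.05) -> str:
    """Classify the end of training from averaged train/validation losses.

    All thresholds are hypothetical and problem-dependent.
    """
    t = sum(train[-last:]) / len(train[-last:])
    v = sum(val[-last:]) / len(val[-last:])
    if t > max_loss:
        # Training loss itself is still high: the model has not fit the data.
        return "underfitting"
    if v - t > max_gap * t:
        # Validation loss sits well above training loss: poor generalization.
        return "overfitting"
    return "good agreement"
```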

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xu, Q., Duan, Z., Yan, H. et al. Deep learning-driven topology optimization for heat dissipation of integrated electrical components using dual temperature gradient learning and MMC method. Int J Mech Mater Des 20, 291–316 (2024). https://doi.org/10.1007/s10999-023-09676-3

DOI: https://doi.org/10.1007/s10999-023-09676-3