Abstract

In traditional semantic segmentation, knowing about all existing classes is essential to yield effective results with the majority of existing approaches. However, these methods trained in a Closed Set of classes fail when new classes are found in the test phase, not being able to recognize that an unseen class has been fed. This means that they are not suitable for Open Set scenarios, which are very common in real-world computer vision and remote sensing applications. In this paper, we discuss the limitations of Closed Set segmentation and propose two fully convolutional approaches to effectively address Open Set semantic segmentation: OpenFCN and OpenPCS. OpenFCN is based on the well-known OpenMax algorithm, configuring a new application of this approach in segmentation settings. OpenPCS is a fully novel approach based on feature-space from DNN activations that serve as features for computing PCA and multi-variate gaussian likelihood in a lower dimensional space. In addition to OpenPCS and aiming to reduce the RAM memory requirements of the methodology, we also propose a slight variation of the method (OpenIPCS) that uses an iteractive version of PCA able to be trained in small batches. Experiments were conducted on the well-known ISPRS Vaihingen/Potsdam and the 2018 IEEE GRSS Data Fusion Challenge datasets. OpenFCN showed little-to-no improvement when compared to the simpler and much more time efficient SoftMax thresholding, while being some orders of magnitude slower. OpenPCS achieved promising results in almost all experiments by overcoming both OpenFCN and SoftMax thresholding. OpenPCS is also a reasonable compromise between the runtime performances of the extremely fast SoftMax thresholding and the extremely slow OpenFCN, being able to run close to real-time. Experiments also indicate that OpenPCS is effective, robust and suitable for Open Set segmentation, being able to improve the recognition of unknown class pixels without reducing the accuracy on the known class pixels. We also tested the scenario of hiding multiple known classes to simulate multimodal unknowns, resulting in an even larger gap between OpenPCS/OpenIPCS and both SoftMax thresholding and OpenFCN, implying that gaussian modeling is more robust to settings with greater openness.

Graphic Abstract

Similar content being viewed by others

1 Introduction

The development of new technologies for the acquisition of aerial images onboard satellites or aerial vehicles has made it possible to observe and study various phenomena on the Earth’s surface, both on a small and large scale. A highly requested task, in this sense, is automated geographic mapping, which gives an easier and faster approach of monitoring cities, regions, countries, or entire continents. Automatic mapping of remote sensing images is typically modeled as a supervised classification task, commonly known as semantic segmentation, in which a model is first trained using labeled pixels and then used to classify other pixels in a new region. Commonly, this process is based on the Closed Set (or Closed World) assumption: it assumes that all training and testing pixels come from the same label space, e.g., train and test sets have the same set of classes. It is easy to notice that this assumption does not hold in real-world scenarios, mainly for Earth Observation applications, such as geographic mapping, given the huge size of the images and the (possible) elevated number of distinct objects (classes). In these scenarios, the model is likely to observe, during the prediction phase, samples of classes not seen during the training. In these cases, Closed Set semantic segmentation methods are error-prone to unknown classes given that they will wrongly recognize it as one of the known classes during the inference. This limits the use of such approaches to real-world Earth Observation applications, such as automated geographic mapping.

Towards solving this, Open Set Recognition (OSR) can be described as the set of algorithms that address the problem of identifying, during the inference phase, samples of unknown classes, e.g., instances of classes not seen during the training. Using the same definitions of Geng et al. (2020) and Scheirer et al. (2014), during the inference phase, an OSR system, such as an Open Set semantic segmentation approach, should be able to correctly classify the instances/pixels of classes employed during the training (Known Known Classes – KKCs) whereas recognizing the samples/pixels of classes not seen during training (Unknown Unknown Classes – UUCs).

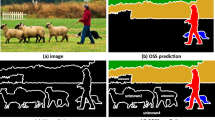

By this definition, it is possible to state that the main difference between the closed and Open Set scenarios is related to the knowledge of the world, e.g., the knowledge of all possible classes. Specifically, while in the Closed Set scenario the methods should have full knowledge of the world, Open Set approaches must assume that they do not know all the possible classes during the training. Obviously, different approaches may have a distinct knowledge of the world depending on the problem. A visual example of this difference, in terms of knowledge of the world, is depicted in Fig. 1.

Graphical depiction of the problem settings of Closed Set, Anomaly Detection and Open Set Recognition in dense labeling scenarios. The x-axis ranges from fully Closed Set (that is, assuming full knowledge of the world) on the left side to Open Set on the rightmost example. In the middle, there is the binary task of Pixel Anomaly Detection, wherein pixels are segmented either into KKCs or UUCs without discerning between distinct KKCs. We also depict the Label Space (Semantic Segmentation map) and a sample of some pixels’ representation in a 2D manifold of the Feature Space separated by labels and decision boundaries for each class

Technically, OSR is usually achieved via transductive learning (Li and Wechsler 2005) by trying to infer samples from UUCs using only the test data distribution. Another approach is to adapt Anomaly Detection techniques for OSR by using auxiliary data to try to learn a generative model via supervision, such as the Outlier Exposure (OE) (Hendrycks et al. 2018). However, neither of these approaches is ideal for Open Set semantic segmentation. This is because transduction requires continuous updates of a model in order to cope with new data, presenting an overhead that might be too expensive in most real-world applications, and the OE (Hendrycks et al. 2018) depends on the existence and availability of auxiliary Out-of-Distribution (OOD) data for training, which may not be possible or even useful in semantic segmentation. Therefore, an Open Set semantic segmentation algorithm must rely solely on inductive learning and use only the available training data and KKCs, while also addressing performance concerns, given the higher output dimensions of segmentation.

In this paper, we proposed two novel approaches for Open Set semantic segmentation of remote sensing image. We evaluate our methods and compare it with baselines by simulating Open Set situations in well known urban scenes such as Vaihingen and Potsdam datasets. It is important to mention that the concept of Open Set semantic segmentation is still very little explored in the literature. The first and unique work that introduces this problem is da Silva et al. (2020), which proposes a method based on OpenMax (Bendale and Boult 2016) for pixelwise classification, which have many limitations concerning both effectiveness and efficiency.

The main contributions of this work are:

-

1.

The first fully convolutional methodology for semantic segmentation in RS imagery or otherwise adapted from OpenMax (Bendale and Boult 2016), explained in Sect. 3.1;

-

2.

Proposal of a completely novel fully convolutional methodology for identifying UUCs in dense labeling scenarios using Principal Components from the internal feature space of the DNNs, further described in Sect. 3.2;

-

3.

Definition of a benchmark evaluation protocol with standard threshold-dependent and threshold-independent metrics for testing OSR Semantic Segmentation tasks;

-

4.

Extensive evaluations of architectures and thresholding for the proposed approaches in both Open and Closed Set baselines.

This paper is organized in sections, as follow: Sect. 2 presents the related work, other papers that try to solve the Open Set semantic segmentation problem; Sect. 3 describes the proposed methods, explaining in details how it works; Sect. 4 shows the setup used for this paper, the datasets evaluated and the metrics; Sect. 5 presents the obtained results from the experimental setup; Finally, Sect. 6 contains the conclusion over the obtained results and the proposed method.

2 Related work and background

Convolutional Neural Networks (CNNs) have become the backbone of visual recognition for the last decade. AlexNet (Krizhevsky et al. 2012) reintroduced image feature learning, allowing for better scalability than the first CNNs (e.g. LeNet LeCun et al. 1998) in order to perform inference over harder tasks (e.g. ImageNet Deng et al. 2009 and CIFAR Krizhevsky et al. 2009). AlexNet took advantage of larger convolutional kernels in the earlier layers and contained a total of eight layers, between convolutional and fully-connected ones. VGG (Simonyan and Zisserman 2014) simplified CNN architectures by using the same \(3\times 3\) kernels in all convolutions and max-poolings for downscaling. In contrast to VGG, the GoogleNet architecture (Szegedy et al. 2015)—also known as Inception—studied a diverse set of kernel sizes to enforce disentanglement in activations. Inception modules mix combinations of multiple kernel sizes and poolings. Both VGG and Inception allow for deeper networks with smaller convolutional kernels in each module, which proved to be more efficient than shallower networks with larger convolutions, at least up to around 20 layers.

It was observed that adding layers beyond a total of 20 was detrimental to the training of CNNs, as the gradients did not reach the earlier layers, effectively preventing their training. Residual Networks (ResNets) (He et al. 2016) based on residual identity functions that allow for shortcuts in the backpropagation were then introduced. ResNets with between 18 and 151 convolutional blocks were investigated by He et al. (2016), with little benefit being observed beyond that. With time, some tweaks were proposed to the standard ResNet architecture, with the more noteworthy ones being Wide ResNets (WRNs) (Zagoruyko and Komodakis 2016) and ResNeXt (Xie et al. 2017), which yielded considerable improvements to the traditional ResNets. However, ResNets were observed to be highly inefficient, as the activations of most convolutions throughout the network could be dropped with little-to-no effect on classification performance (Srivastava et al. 2015). Densely Connected Convolutional Networks (DenseNets) (Huang et al. 2017) improved on the parameter efficiency of ResNets by replacing the identity function by concatenation and adding bottleneck and transition layers, which lowered the parameter requirements of the architecture. Huang et al. (2017) tested in DenseNet a variation between 121 and 264 layers, observing them to be more efficient than ResNets in both parameter and flops, when similar error values were compared in the validation set.

The remainder of this section presents the main concepts to the understand of this work and the recent literature about semantic segmentation (Sect. 2.1) and Open Set recognition (Sect. 2.2).

2.1 Deep semantic segmentation

CNNs (Krizhevsky et al. 2012) are considered the state-of-the-art for sparse labeling tasks as object/scene classification due to their feature learning capabilities. The literature quickly learned to adapt CNNs for dense labeling tasks by patchwise training, using the label for the central pixel (Farabet et al. 2012; Pinheiro and Collobert 2014). However, Fully Convolutional Networks (FCNs) (Long et al. 2015) were shown to be considerably more efficient than patchwise training, providing the first end-to-end framework for semantic segmentation. Besides the accuracy and efficiency benefits of fully convolutional training, any traditional CNN architecture could be converted into an FCN by adding a bilinear interpolation to the activations and replacing the dense layers by convolutional ones, as shown in Fig. 2. This simple scheme also allowed for transfer learning from large labeled datasets as ImageNet (Deng et al. 2009) to relatively smaller semantic segmentation datasets as Pascal VOC (Everingham et al. 2015) and MS COCO (Lin et al. 2014). FCNs are also shown to benefit from skip connections that merge the high semantic level activations at the end of the network with the high spatial resolution information from earlier layers (Long et al. 2015).

Architecture example of a CNN for image classification and its equivalent FCN architecture with the same backbone for semantic segmentation. Activations from layer l are depicted as \(a^{(l)}\) for all layers in the network (\(L_{1}\) through \(L_{7}\) for the CNN and \(L_{1}\) through \(L_{5}\) for the FCN). One should notice that in both architectures the input layer \(a^{(L_{1})}\) has the number of channels \(n^{ch}\) depending on the input data’s number of channels (in the case of RGB images, \(n^{ch} = 3\)). In the CNN, the number of neurons in the output layer must match the number of KKCs (\(n^{KKCs}\)) in the data. Equivalently, in the FCN, the number of channels in the output layer \(n^{KKCs}\), as suggested by the notation, depends on the number of KKCs of the dataset. In this example, \(n^{KKCs} = 4\) as there are 4 known classes in this example

More recently, several semantic segmentation methods have been proposed specifically to deal with different aspects of remote sensing images such as spatial constraints (Nogueira et al. 2016; Maggiori et al. 2017; Marmanis et al. 2018; Wang et al. 2017; Audebert et al. 2016; Nogueira et al. 2019) or non-RGB data (Kemker et al. 2018; Guiotte et al. 2020). Nogueira et al. (2016) use patchwise semantic segmentation in RS imaging for both urban and agricultural scenarios. Maggiori et al. (2017) proposed a multi-context method based on upsampled and concatenated features extracted from distinct layers of a fully convolutional network. In Marmanis et al. (2018), the authors proposed multi-context methods that combine boundary detection with deconvolution networks. In Audebert et al. (2016), the authors fine-tuned a deconvolutional network using \(256\times 256\) fixed size patches. To incorporate multi-context knowledge into the learning process, they proposed a multi-kernel technique at the last convolutional layer. Wang et al. (2017) proposed to extract features from distinct layers of the network to capture low- and high-level spatial information. In Kemker et al. (2018), the authors adapt state-of-the-art semantic segmentation approaches to work with multi-spectral images. Guiotte et al. (2020) proposed an aprooach for semantic segmentation from LiDAR point clouds.

2.2 Open set recognition

The Open Set recognition problem was first introduced by Scheirer et al. (2012). They discussed about the notion of “openness”, that occurs when we do not have knowledge of the entire set of possible classes during supervised training, and must account for unknowns during predicting phase. The first studies and applications involving Open Set recognition were adaptations of “shallow” methods that acted in the feature space of visual samples and consisted mainly of threshold-based or support-vector-based methods (Scheirer et al. 2012). More recent work has extended the concept to deep neural networks (Bendale and Boult 2016; Cardoso et al. 2017).

A recent survey by Geng et al. (2020) splits Open Set methods mainly between discriminative and generative approaches. Discriminative approaches usually use 1-vs-All Support Vector Machines (SVMs) (Scheirer et al. 2012) in order to delineate the space between valid samples from the training classes and outliers—which ideally would identify UUCs. Meta-recognition can also be used to predict failures in visual learning tasks (Scheirer et al. 2012, 2014). Extreme Value Theory (EVT) is one of the most common modelings for meta-recognition using DNNs (Bendale and Boult 2016; Ge et al. 2017; Oza and Patel 2019) for classification.

Earlier deep OSR methods (Bendale and Boult 2016; Ge et al. 2017; Liang et al. 2017) aimed to incorporate the prediction of UUCs directly onto the prediction of the DNN output layer. Bendale and Boult (2016) and Ge et al. (2017) perform this by reweighting the output probabilities of the SoftMax activation to accomodate a UUC into the prediction during test time. This approach is known as OpenMax (Bendale and Boult 2016), and further developments to it have been proposed; for instance, aiding the computation of OpenMax with synthetic images from a Generative Adversarial Network (GAN) in G-OpenMax (Ge et al. 2017). OpenMax (Bendale and Boult 2016) will be further detailed in the methodology (Sect. 3.1), as it is the basis for one of the proposed methods in this paper.

Inspired by adversarial attacks (Goodfellow et al. 2014), Out-of-Distribution Detector for Neural Networks (ODIN) (Liang et al. 2017) insert small perturbations in the input image x in order to increase the separability in the SoftMax predictions between in- and out-of distribution data (\({\mathcal {D}}^{in}\) and \({\mathcal {D}}^{out}\), respectively). This separability allows ODIN to work similarly to OpenMax (Bendale and Boult 2016) and operate close to the label space, using a threshold over class probabilities to discern between KKCs and UUCs. The manuscript reports Area Under ROC curve metrics between 0.90 and 0.99 for CIFAR-10 (Krizhevsky et al. 2009) as \({\mathcal {D}}^{in}\) and between 0.70 and 0.85 for CIFAR-100 (Krizhevsky et al. 2009) as \({\mathcal {D}}^{in}\), depending on the \({\mathcal {D}}^{out}\) (e.g. TinyImageNet,Footnote 1 LSUN (Yu et al. 2015), random noise, etc). Extensive hyperparameter tuning experiments are reported in the paper as well. As will be further discussed in Sect. 3.2, restricting the information used for OSR to the activations in the last layers has severe limitations. Thus, modern methods have employed different strategies than simply thresholding the output probabilities to split samples into KKCs and UUCs.

A recent trend in both Anomaly Detection and OSR for deep image classification has been to incorporate input reconstruction error in supervised DNN training as a way to identify OOD samples (Yoshihashi et al. 2019; Oza and Patel 2019; Sun et al. 2020). These approaches fall under the branch of generative OSR, according to the taxonomy by Geng et al. (2020). Classification-Reconstruction learning for Open-Set Recognition (CROSR) (Yoshihashi et al. 2019) trains conjointly a supervised DNN for classification model (\(x \rightarrow y\)) and an AutoEncoder (AE) to encode the input (x) into an bottleneck embedding (z) and then decode it to reconstruct \({\tilde{x}}\). Conjoint training allows the DNN to optimize a compound loss function that minimizes both the classification and reconstruction errors. During the test phase, the reconstruction error magnitude between \(err(x, {\tilde{x}}) = ||x - {\tilde{x}}||\) dictates if the input x is indeed from the predicted class \({\hat{y}}\) or an OOD sample.

Class Conditional AutoEncoder (C2AE) (Oza and Patel 2019), similarly to CROSR, uses the reconstruction error of the input (\(||x - {\tilde{x}}||\)) from an AE and EVT modeling to determine a threshold in order to discern between KKC and UUC samples. Following the same trend of thresholding a certain point in the density function of the reconstruction error from the inputs, Conditional Gaussian Distribution Learning (CGDL) (Sun et al. 2020) uses a Variational AutoEncoder (VAE) to model the bottleneck representation of the input images according to a vector of gaussian means \(\mu\) and standard deviations \(\sigma\) in a lower-dimensional high semantic-level space. This modeling allows CGDL to unsupervisedly discriminate between KKCs and UUCs by thresholding the likelihood of the embedding \(z_{i}\) generated from a novel sample \(x_{i}\) pertaining to the multivariate gaussians \({\mathcal {N}}(z_{i}, \mu _{k}, \sigma ^{2}_{k})\), where k represents the predicted class for sample \(x_{i}\).

2.2.1 Open set semantic segmentation

OpenPixel (da Silva et al. 2020) is based on patchwise training of classification DNNs for image classification (Nogueira et al. 2016) of RS images. The method builds on top of OpenMax (Bendale and Boult 2016) in order to recognize out-of-distribution pixels in urban scenarios. However, OpenPixel is highly inefficient during both training and test times due to the patchwise training using a customly built CNN.

As we have discussed in the introduction, to the best of our knowledge, the unique work in the literature that address the Open Set segmentation problem was proposed by da Silva et al. (2020). The authors introduce the concept and proposes two methods based on OpenMax (Bendale and Boult 2016) for pixelwise classification. Although promising, the approaches proposed in da Silva et al. (2020) have several limitations both in effectiveness and efficiency aspects. In this work we have extended the OpenPixel method proposed in da Silva et al. (2020) to be feasible in practical situations. We better explain the improvements and adaptations in Sect. 3.1.

As far as the authors are aware, there are no fully convolutional architectures for deep Open Set semantic segmentation in neither the remote sensing nor computer vision communities. Section 3 bridges this gap with the proposal of two approaches based on OpenMax (Bendale and Boult 2016) (Sect. 3.1) and Principal Component likelihood scoring (Tipping and Bishop 1999) (Sect. 3.2) in the domain of urban scene segmentation.

3 Proposed methods

This section details the two proposed methods presented in this work: (1) Open Fully Convolutional Network (OpenFCN), a fully convolutional extension of OpenMax (Bendale and Boult 2016; da Silva et al. 2020) for dense labeling tasks (Sect. 3.1); and (2) Open Principal Component Scoring (OpenPCS), a novel approach that uses feature-level information to fit multivariate gaussian distributions to a low-dimensional manifold of the data in order to obtain a score based on the data likelihood for identifying failures in recognition (Sect. 3.2).

3.1 OpenFCN

OpenFCN relies on traditional FCN-based architectures, which are normally composed of traditional CNN backbones with the inference layers replaced by bilinear interpolation and more convolutions. As the dense prediction is treated at training time as a classification task, the distinction between OpenFCN and FCN can be seen more clearly during validation and predicting. A meta-recognition module based on OpenMax (Bendale and Boult 2016) is added to the prediction procedure of traditional FCNs, as can be seen in Fig. 3.

OpenFCN scheme for Open Set Semantic Segmentation. During training, OpenFCN behaves like the traditional Closed Set FCN, with only Known Known Classes (KKCs) being fed to a supervised loss, such as Cross Entropy. OpenFCN differs from FCN only during validation and testing, when OpenMax is computed and the probabilities are thresholded in order to predict Unknown Unknown Classes (UUCs)

Let \(\{X,\ Y\}\) be a paired set of image pixels and semantic labels from a dataset containing C KKCs. A deep model \({\mathcal {M}}\) can be trained in a stochastic manner by feeding samples \(X_{i}\) to a gradient descent optimizer as Kingma et al. (2014) with a loss function as Cross Entropy, given by:

This strategy ultimately yields an activation \(a_{i} \in {\mathbb {R}}^{C}\) for each pixel i after the last layer. Thus, \({\mathcal {M}}\) can be seen as a function \({\mathbb {R}}^{3} \rightarrow {\mathbb {R}}^{C}\) that converts the input space \(X_{i}\) into the prior SoftMax prediction \(\sigma _{i}\) for sample i. Obtaining the class prediction \({\hat{Y}}^{pri}_{i}\) can be easily done by finding the class with the larger probability in \(\sigma _{i}\) across all KKCs. Thus, OpenFCNs are trained using the exact same procedure as traditional FCNs for Closed Set semantic segmentation. Posteriori predictions \({\hat{Y}}^{pos}\) are only computed on validation and testing, as described in the following paragraphs.

OpenMax (\({\mathcal {O}}\)) relaxes the traditional SoftMax requirement that prediction probabilities for KKCs must add to 1, introducing an extra class to the posterior prediction \({\hat{Y}}^{pos}\) to the prediction set for X. The function \({\mathcal {O}}(X, Y, {\mathcal {M}})\) is, therefore, able to reweight the SoftMax predictions, aggregating the probability of misclassifications due to UUCs. Following the protocol of OpenMax (Bendale and Boult 2016), during OpenFCN’s validation procedure each KKC \(c_{k}, k \in \{0, 1, \ldots , C-1\}\) yields one Weibull distribution \({\mathcal {W}}_{k}\). \({\mathcal {W}}_{k}\) is fit to the deviations from the mean \(\mu _{k}\) of \(a^{(L_{5})}\) according to some distance (e.g. euclidean, cosine, hybrid distances). Averages \(\mu _{k}\) are computed in the validation set according only to the correctly classified pixels of class \(c_{k}\).

Finally, in order to identify Out-of-Distribution (OOD) samples, quantiles from the Cumulative Distribution Function (CDF) for \(\mathcal{W}_{k}\) are computed, with all pixels in the set \({\hat{Y}}^{pri}\) predicted to be from \(c_{k}\) and with less confidence than \({\mathcal {T}}_{k}\) being attributed to be from a UUC. Thus, the posterior OpenMax prediction \({\hat{Y}}^{pos}_{i}\) for a specific pixel \(X_{i}\) is given by:

where l is the output layer in a DNN and \(c_{unk}\) is the identifier for the UUC in the Open Set scenario. This scheme is shown in Fig. 4.

OpenFCN’s generative modeling for detecting UUC samples. A Weibull model \({\mathcal {W}}_{c}\) for KKC c is fit using the last layer’s activations (\(a^{(L_{5})}\)) from correctly classified samples of this class in the training set. According to the CDF for each class’ Weibull distribution, a threshold \({\mathcal {T}}_{c}\) is set and samples that do not reach this threshold are classified as pertaining from a UUC

Dense labeling tasks (e.g. instance/semantic segmentation or detection) inherently have higher dimensional outputs than sparse labeling tasks (e.g. classification or single-target regression). Dense predictions also present their particular set of difficulties, including how to handle boundaries between adjacent objects. Early in our experiments, we have seen a large number of border artifacts in OpenFCN predictions. As depicted in Fig. 5, adjacent areas between objects with distinct classes naturally yield class predictions with smaller certainties than the central pixels of these objects, resulting in warped Weibull distributions. This happens because the last layer in the network tries to model directly the label space distribution, retaining little-to-no information about the original input. Hence, activations from later layers in the network are more affected by object boundary uncertainties.

In order to mitigate this limitation of OpenFCNs in dense labeling tasks, we propose a completely novel method that is able to fuse information from low and high semantic level information into one single model. This method will be presented in Sect. 3.2.

3.2 OpenPCS

OpenPCS works similarly to CGDL (Sun et al. 2020), but with three important differences: (1) we fit gaussian priors using not only the input images X, but also the intermediary activations (e.g. \(a^{(L_{3})}\), \(a^{(L_{4})}\), \(a^{(L_{5})}\), etc); (2) we use a PCA instead of a VAE; and (3) the training is purely supervised and the low dimensional gaussians are fitted only during the validation phase, and not conjointly with the training, as CGDL. This was all done for simplicity and aiming to ease computational complexity, as open set semantic segmentation is a very recent field of research.

It is well known that the deeper a certain layer l is placed in a DNN, the closer to the label space the activation features \(a^{(l)}\) are (Shwartz-Ziv and Tishby 2017). In fact, Shwartz-Ziv and Tishby (2017) argue that any supervised DNN can be seen as a Markov chain of sequential tensorial representations that gradually morph the information processed by the network from the input space (in the input layer) to the label space (in the output layer). Thus, by using only the last layer’s activations to fit Weibull distributions to each KKC, OpenFCNs—and, by extension, OpenMax (Bendale and Boult 2016)—limit themselves to work with information close to the label space.

Unlike OpenFCN, OpenPCS takes into account feature maps from earlier layers, which encode information closer to the input space, and combine them with activations from the last layers, fusing low and high semantic level information. This can be seen in Figs. 6 and 7 in the form of the yellow columns shown in the lower part of the image. Each output pixel in \({\hat{Y}}^{pri}\) gets a correspondent activation vector (\(a^{*}\)) made by the concatenation of earlier layer activations for the corresponding prediction map region in the channel axis. As earlier layers (\(a^{(L_{4})}\) and \(a^{(L_{3})}\)) have lower spatial resolution due to the network’s bottleneck, the activations from these layers are upsampled where the \(\uparrow\) function is shown in order to match the dimensions of the input image and output prediction. In the example shown in Fig. 6, \(a^{*} = (a^{(L_{5})}, \uparrow ^{2} a^{(L_{4})}, \uparrow ^{4} a^{(L_{3})})\), so, for instance, if \(a^{(L_{5})} \in {\mathbb {R}}^{4 \times MN}\), \(\uparrow ^{2} a^{(L_{4})} \in {\mathbb {R}}^{8 \times MN}\) and \(\uparrow ^{4} a^{(L_{3})} \in {\mathbb {R}}^{16 \times MN}\), then \(a^{*}\) has dimensionality \(28 \times MN\). The concatenated feature vector for each input/output pixel of index i in this example would be, therefore, \(a^{*}_{i} \in {\mathbb {R}}^{(28)}\).

OpenPCS general schematics. Subsequent activations (\(a^{(L_{1})}\), \(a^{(L_{2})}\), ..., \(a^{(L_{4})}\)) from an FCN with a certain CNN backbone (as in Fig. 2) is shown. Activations from the last layers (e.g. \(a^{(L_{5})}\), \(a^{(L_{4})}\) and \(a^{(L_{3})}\), in this case) are concatenated to form column vectors for each predicted output pixel in \({\hat{Y}}^{pri}\). The prior prediction \({\hat{Y}}^{pri}\) is then processed according to the scheme shown in Fig. 7 using a generative model \({\mathcal {G}}\) in order to detect OOD pixels and, thus, classify them as unknowns

Graphical scheme for the generative modeling in OpenPCS. In contrast to OpenFCN’s Weibull fitting, OpenPCS uses a gaussian modeling in a low-dimensional representation \(a^{low}\) of the activations \(a^{*}\). The Principal Components for each pixel are computed according to the concatenation of activations \(a^{*}\) from multiple layers (e.g. \(a^{(L_{3})}\), \(a^{(L_{4})}\), \(a^{(L_{5})}\), etc) for the corresponding region of each specific pixel. One multivariate gaussian is fit for each KKC and thresholds defined according to likelihoods from these models are used in order to identify OOD pixels

Concatenating activations from multiple layers into \(a^{*}\) yields high dimensionality feature vectors for each pixel, as modern CNNs/FCNs easily output hundreds or thousands of activation channels from each layer. The large redundancy found in activation maps from convolutional layers (Srivastava et al. 2015; Huang et al. 2017) should also render \(a^{*}\) to be highly redundant. OpenPCS mitigates both problems by computing a lower dimension manifold \(a^{low}\) of each pixel’s activation with Principal Component Analysis (PCA) previously to fitting a generative model (\({\mathcal {G}}\)), as shown in Fig. 7. This approach grants two desirable properties to OpenPCS: (1) faster inference time during testing, as PCA implementations can be highly parallelized via vectorial operations and low-dimensional gaussian likelihood scoring can be computed in a fast manner; and (2) PCA feature selection guarantees that only the most important activation channels are used to compute a scoring function to detect OOD samples and, consequently, UUCs.

3.2.1 Open set scoring with principal components

Besides being purely a tensorial operation used for dimensionality reduction, PCA can be seen as a probability density estimator with gaussian priors. As described by Tipping and Bishop (1999), this allows PCA to be used as a generative model for novelty detection. In other words, the low dimensional Principal Components generated by PCA using a multivariate gaussian prior can yield likelihoods that allow for OOD recognition in new data.

PCA reduces dimensionality by finding latent variables composed of combinations of features in the original input space such that the reconstruction error when returning to this original space is minimized. This operation works by computing eigenvalues (\(\lambda\)) and eigenvectors (v) of a covariance matrix A (computed, in this work, using the Singular Value Decomposition on the input data). Those values can be calculated using the equation \(Av = \lambda v\). In the PCA procedure, the eigenvalues represent how impacting their correspondent eigenvector direction is to the data variability. Since reconstructions provided by PCA emphasize variation, in order to perform dimensionality reduction, the standard procedure is to project your data into an orthogonal basis composed by the eigenvectors that have the biggest correspondent eigenvalues, i.o.w., a subspace with the highest variance possible.

Exemplifying the application of PCA in OpenPCS, first we compute a covariance matrix using feature vector \(a^{*}\) – that is, a concatenation of activations from different layers in a DNN for a specific set of pixels. After that, we calculate the eigenvalues and eigenvectors of this matrix and select the set of low eigenvectors (\(v^{low}\)) that have the correspondent low largest eigenvalues (\(\lambda ^{low}\)). Finally, we use those eigenvectors as a basis and project our input vectors (\(a^{*}\)) on it, resulting in \(a^{low} = a^{*} \cdot v^{low}\).

3.2.2 OpenIPCS

OpenPCS is highly memory intensive, as fitting \(n^{KKC}\) PCA models using millions of pixels with feature vectors in the scale of hundreds or thousands of bins requires all this data to be stored in RAM. In practice, for training the traditional OpenPCS we used a subsample of randomly selected patches to fit the models using a reasonable amount of memory. Empirically, we found that 150 patches with \(224 \times 224\) pixels each resulted in an acceptable trade-off between memory and enough training data for the computation of Principal Components for each class. Even with this approach, OpenPCS required between 20 and 30 GB of memory, depending on the dimensionality of the feature vectors \(a^{*}\). In addition, PCA models trained using subrepresented classes in the imbalanced datasets (e.g. Cars) did not fit correctly due to a low number of correctly classified samples used for training the generative model. Ultimately, OpenPCS for large and highly imbalanced datasets can result in underperformance on the task of UUC identification due to the subsampling required by the large memory usage of the method.

In an effort to minimize this problem, in addition to the traditional OpenPCS we also evaluate an Incremental PCA (IPCA) for generative modeling and likelihood scoring. The full OSR segmentation methodology will be henceforth referred to as OpenIPCA. In contrast to the traditional offline training of the standard PCA, IPCA allows for mini-batch online training, which is highly useful for correctly computing the Principal Components in large datasets. OpenIPCA also allows for the model to be further updated in an efficient manner whenever new data might arise, not requiring a full retraining of the standard PCAs from scratch, but instead updating the IPCA model by feeding newly acquired patches. We emphasize that, apart from the incremental/online training that allows all the training dataset to be used in the computation of the Principal Components, all other aspects of the implementation of OpenIPCS were identical to the standard OpenPCS.

4 Experimental setup

This section describes the experimental setup for the evaluation of OpenFCN against open and Closed Set baselines. In order to encourage reproducibility, we provide details regarding the datasets and evaluation protocol (Sect. 4.1), fully convolutional architectures and baselines (Sect. 4.2). In addition, we are publicizing the code for OpenFCN, OpenPCS and OpenIPCS in this project’s webpageFootnote 2 in a conscious effort to encourage reproducibility of our results and follow-up research on OSR segmentation. OpenFCN’s Weibull fitting and distance computation was based on libMRFootnote 3 and OpenPCS’ Principal Components and likelihood scoring were computed using the scikit-learnFootnote 4 library.

4.1 Datasets and evaluation protocol

In order to validate the effectiveness of OpenFCN and OpenPCS on RS image segmentation, we used two urban scene 2D semantic labeling datasets from the International Society for Photogrammetry and Remote Sensing (ISPRS) with pixel-level labeling: VaihingenFootnote 5 and Potsdam.Footnote 6 Vaihingen presents a spatial resolution of 5 cm/pixel with patches ranging from 2000–2500 pixels in each axis and Potsdam has 9 cm/pixel samples with \(6000\times 6000\) patches. Both datasets contain IR-R-G-B spectral channels paired with semantic maps divided into 6 KKCs: impervious surfaces, buildings, low vegetation, high vegetation, cars and miscellaneous; and 1 KUC: segmentation boundaries between objects.

The data also allows for 3D information to be incorporated into the models via Digital Surface Model (DSM) images, which is also made available in its normalized form (nDSM). In order to follow standard procedures in the RS literature (Sherrah 2016; Audebert et al. 2016; da Silva et al. 2020), we ignored the blue channel in our evaluation, limiting the experiments to the IR-R-G channels, in addition to the nDSM data, which was simply added as another channel to the inputs. Aiming to ease the computation complexity of our experiments, we also ignored the miscellaneous class, as it contains a rather small number of samples mainly on Vaihingen. For both datasets we used the standard procedure in the literature of training with most patches, while separating some specific patches for testing: 11, 15, 28, 30 and 34 for Vaihingen; and 2_11, 2_12, 4_10, 5_11, 6_7, 7_8 and 7_10 in the case of Potsdam.

Additionally to Vaihingen and Potsdam, we conducted experiments on the dataset of the 2018 IEEE GRSS Data Fusion ChallengeFootnote 7, from now on referred to as Houston dataset. Like Vaihingen and Potsdam, Houston dataset contains RGB and DSM images with pixel resolutions of 5 cm and 50 cm, respectively. These previously discussed bands are paired with 48 hyperspectral bands in a 1 m resolution in the Houston dataset. For consistency with the experimental setups in Vaihingen and Potsdam datasets, we employed only the RGB and DSM bands in Houston. In order to match the differing RGB and DSM bands in Houston, we simply resize the RGB band to the lower resolution of the DSM using bilinear interpolation. While this is not the best use of the high resolution RGB information, the goal of this work is not to achieve state-of-the-art segmentation through some clever multi-scale data fusion scheme, but instead to use the available data for testing OSR in segmentation scenarios.

Compared to Vaihingen and Potsdam, Houston allows for a stress test of the open set segmentation methods in a scenario with a considerably larger amount of known classes: unclassified, healthy grass, stressed grass, artificial turf, evergreen trees, deciduous trees, bare earth, water, residential buildings, non-residential buildings, roads, sidewalks, crosswalks, major thoroughfares, highways, railways, paved parking lots, unpaved parking lots, cars, trains, and stadium seats. Among these classes, we explicitly ignore three ones in all experiments during both training and evaluation: unclassified, stadium seats, and water. The unclassified class is closer to a Known Unknown Class (KUC) than to a KKC, even containing unlabeled samples from other KKCs (e.g. cars and buildings) that were not labeled; while the latter two classes were removed due to extreme imbalance in the training set, making their samples unrepresentative for both the closed and open set models without the hyperspectral bands. Similarly to Vaihingen/Potsdam, experiments on Houston are conducted using the standard split between train and test of this dataset.

In order to simulate the Open Set environments on the Closed Set RS datasets, we employed a Leave-One-Class-Out (LOCO) protocol. LOCO works by selecting one class as UUC and ignoring its samples during training as shown in Fig. 8. This protocol allows for verifying both the overall performances of the segmentation architectures and class-by-class metrics, as there is a large class imbalance mainly when comparing classes as impervious surfaces and cars in the datasets. In order to not take into account the samples from the UUCs in each experiment, we only compute and backpropagate the loss according to the labels of the KKCs, with UUC pixels in the ground truths being skipped. This scheme guarantees that no information about the UUCs is fed to the model during the training procedure. Additionally, we test groupings semantically similar classes (e.g. vegetation, buildings, vehicles) as UUCs in experiments on Vaihingen, Potsdam and Houston.

One should notice that all samples in the data used in our experiments pertains to Closed Set Semantic Segmentation datasets, which allows for an objective evaluation of the effect of OSR segmentation on well-known classification metrics. Aiming to capture all nuances of training and testing Open Set scenarios, we propose a new standard set of test metrics that accounts for KKCs and UUCs, while also capturing the overall performance over all known and unknown classes. In order to evaluate the impact of adding the meta-recognition step to the inference procedure of KKCs, we computed the accuracy for known classes (\(Acc^{K}\)). The main metric adopted by da Silva et al. (2020) for evaluating the performance of OSR segmentation on UUCs is the binary accuracy between KKCs and UUCs (\(Acc^{U}\)), as in the case of Anomaly Detection. However, \(Acc^{U}\) is artificially inflated due to the large imbalance between the number of pixels from KKCs and UUC in most cases. We solved this by adding the precision of unknown detection (\(Pre^{U}\)) to the evaluation in order to observe the trade-off in performance in the binary meta-recognition task. The Kappa (\(\kappa\)) metric was used to evaluate the overall performance in both KKCs and UUC of the algorithms, as it is a common metric for the evaluation of semantic segmentation in remote sensing. All of the metrics above are, however, threshold dependent, making it hard to compare methods with distinct threshold ranges. As the thresholds do not represent equal statistical entities in SoftMax Thresholding, OpenFCN and OpenPCS, we define the thresholds for the methods based on preset values of True Positive Rate (TPR). This evaluation protocol also has the benefit of yielding information about the recall of the methods, as TPR and recall are equivalent in this case.

In order to evaluate the methods in a threshold-independent fashion, we complement our analysis with the Receiver Operating Characteristic (ROC) curve—which evaluates the whole range of thresholds for the different methods on a TPR vs. False Positive Rate (FPR) 2D plot—and its corresponding Area Under Curve (AUC). The ROC/AUC is a suitable measure since we want to evaluate the performance of the proposed methods and baselines across the whole threshold spectrum. Note that different choices of threshold values may result in an imbalance in the performance over closed and open classes, possibly skewing the results to one side or another. Thus, we believe that by evaluating the ROC/AUC one can assess if one algorithm is better than another for all possible thresholds.

Due to the large spatial resolution of Vaihingen and Potsdam patches, we sliced them into \(224\times 224\) mini-patches for training, in order to fit ImageNet’s (Deng et al. 2009) traditional patch size, which is the most commonly expected input size for the CNNs used as backbones in our experiments. During training, we used data augmentation based on random cropping for patch selection, random flips in the horizontal and vertical dimensions, and random rotations by multiples of 90 degrees. In order to solve inconsistencies due to lack of context and to compensate for patch border uncertainties, we employed overlapping patches of \(224\times 224\) pixels during testing. We explicit that this procedure considerably increased the computational budget of our experiments mainly in the Potsdam dataset for OpenFCN due to inefficiencies and non-vectorized operations in libMR. A more thorough evaluation of time complexity can be found in Sect. 5.2.4.

4.2 Fully convolutional architectures and baselines

We compared several distinct fully convolutional architectures for OSR semantic segmentation, including five distinct CNN backbones: VGG-19 (Simonyan and Zisserman 2014), ResNet-50 (He et al. 2016), Wide ResNet-50-2 (Zagoruyko and Komodakis 2016) (WRN-50), ResNeXt-50-32x4d (Xie et al. 2017) (ResNeXt-50) and DenseNet-121 (Huang et al. 2017). All FCN implementations were based on the CNN source codes from torchvisionFootnote 8.

In order to spare memory and computational resources, only a subset of layers in each FCN architecture is fed to the generative model. As the last convolutional layers after the FCN bilinear interpolation already possess high semantic level information about the prediction (classes), we added earlier layers to \(a^{*}\) aiming to insert more information about the raw data (pixels). Table 1 compiles all layers concatenated in \(a^{*}\) for pixel open set recognition, as well as information about which layers were fed via skip connections to the classification layers in order to improve the spatial resolution of FCN predictions.

Based on the methodology of OpenMax (Bendale and Boult 2016), we also compare OpenFCN and OpenPCS with traditional SoftMax followed by thresholding. SoftMax Thresholding (\({\hbox {SoftMax}}^{{\mathcal {T}}}\)) follows the premise that least certain network predictions may be motivated by outlier classes seen in test time. In addition to these Open Set approaches, we evaluate the proposed methods in comparison with traditional Closed Set fully convolutional architectures for dense labeling using the LOCO protocol. Closed Set FCNs wrongly classify UUCs by design, forcing the pixel to be segmented according to the higher probability prediction among KKCs. One should notice that OpenPCS, OpenFCN, \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and Closed Set FCNs were evaluated according to the same pretrained DNNs, differing only on test time. This protocol allows for direct comparisons in objective metrics disregarding performance variability due to the random nature of gradient descent optimization.

4.3 Cutoff values for OOD detection

All methods investigated in our experimental setup require a cutoff value \({\mathcal {T}}_{k}\) to delineate the boundary between KKC pixels from a class k and UUC pixels. Aiming to mimic a true OSR task, these thresholds were defined empirically according to the KKCs available during training. That is, no information about the UUCs should be fed to the process of choosing cutoff values from the network’s confidence—in the case of \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN—and to the multivariate gaussian likelihoods, for OpenPCS and OpenIPCS.

OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\) follow the methodology of OpenMax (Bendale and Boult 2016), with the cutoffs being cross-validated experimentally. OpenPixel (da Silva et al. 2020) also performed this analysis across all UUCs in Vaihingen and Potsdam, finding 0.7 to be a suitable value for thresholding between KKCs and UUCs. In other words, all pixels predicted to be from a certain class with confidence smaller than 0.7 should be considered as OOD, while predictions with confidence of 0.7 or above keep their predicted KKC.

The same cutoff idea is employed in OpenPCS and OpenIPCS, albeit with a crucial distinction: while all \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenMax predictions are bounded to the interval \(\left[ 0, 1\right]\), gaussian likelihood ranges vary depending on the number of Principal Components of PCA. Thus, in order to consistently compute cutoff values that work on any architecture and with a distinct number of Principal Components, we define threshold values based on TPR quantiles. One last remark about OpenIPCS is that, even if iterative/online training allows it to be fitted on the whole training set, we still used random patch subsampling in order to shorten the training time for the generative part of OpenIPCS.

Log-likelihoods for two scenarios in open set segmentation with distinct UUCs: Impervious Surfaces (a) and Low Vegetation (b). A much greater separation between the likelihoods of KKCs (\(\ell ^{KKC}\)) and the likelihoods of the UUCs (\(\ell ^{UUC}\)) can be seen in (a) than in (b), as the class Impervious Surfaces contains considerably less intra-class variability and similarity with other classes than Low Vegetation in the datasets described in Sect. 4.1. One should notice that worse separations between these distributions of likelihoods results in worse overall OSR performance

Additionally to using the log-likelihoods for cutoff in the case of OpenPCS and OpenIPCS, we also observed in our exploratory experiments that the reconstruction error of the encoded \(a^{*}\) tensors produces similar results. Thus, an alternative with considerably lower computational cost to the score-based thresholding can be to perform the thresholding on the reconstruction error, whilst keeping the rest of the pipeline as is. This early finding is, in fact, aligned to other recent works in the OSR literature (Oza and Patel 2019; Yoshihashi et al. 2019). We highlight that the experiments that support this claim had a limited and exploratory nature and that a much more thorough assessment should be conducted to validate this hypothesis. Figure 9 contains the Log-likelihood distributions overall classes for the models in which the UUC were Impervious Surfaces and Low Vegetation, respectively. Those models used a fcndensenet121 as a backbone on the Vaihingen dataset. In (a), we can see a clear separation between the distributions of KKC and UUC, unlike (b), in which most of them overlap each other. It is important to mention that for acceptable discrimination between KKC and UUC, it is desirable that their Log-likelihood distributions are as separate as possible.

5 Results and discussion

In this section we present and discuss the obtained results. We design our experimental evaluation in order to cover all possible aspects desirable to know in an OSR task. First, an analysis is performed in Sect. 5.1 in order to define the most suitable architectures for the proposed approaches. This section contains both overall and per-class threshold-independent analysis (AUC-ROC). Section 5.2 compares the proposed methods with the baseline in terms of quantitative metrics, qualitative segmentation maps (Sect. 5.2.3), and runtime performance (Sect. 5.2.4). At last, Sect. 5.3 presents our analysis of scenarios with a larger proportion of UUCs to KKCs, evaluating the performance of the proposed methods and baselines in settings with greater openness (Scheirer et al. 2012).

Note that, for the sake of simplicity and clarity, only the most relevant results were reported in this Section. For a full report of the results, please, check the supplementary material on this project’s webpage linked in Sect. 4.

5.1 Architecture analysis

In this Section, we analyze the networks in order to define the most suitable architectures for the proposed techniques. To perform this evaluation, we employed the AUC, a threshold-independent performance measurement that allows comparisons between methods without resorting to (potentially) arbitrary thresholds. Furthermore, it is important to emphasize that we performed this analysis by using only the Vaihingen dataset. This is due to the fact that the Potsdam dataset is very similar to Vaihingen and, therefore, analysis and decisions made over the latter dataset are also applicable to the former one.

Table 2 presents the average AUC results over all runs of the LOCO protocol. For \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN, the ResNet-50 architecture (He et al. 2016) produced the best outcomes, followed closely by WRN-50 (Zagoruyko and Komodakis 2016) and DenseNet-121 (Huang et al. 2017). OpenPCS showed better results with WRN-50 (Zagoruyko and Komodakis 2016), followed closely by DenseNet-121 (Huang et al. 2017) and VGG (Simonyan and Zisserman 2014). Since WRN (Zagoruyko and Komodakis 2016) and DenseNet (Huang et al. 2017) were the most stable networks, producing good results in all approaches, they were selected and used in further experiments shown in the following sections.

Based on the best results for Vaihingen, we also report the threshold independent average AUC analysis over all UUCs on Potsdam. Table 3 shows the results for DenseNet-121 and WRN-50 on the considerably larger Potsdam dataset, wherein extensive architectural comparisons were not feasible.

One can easily see that in both tables OpenPCS and OpenIPCS present better AUC results than OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\), while the distinction between OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\) is often rather small or nonexistent. These evaluations are averages and standard deviations computed across all classes in the LOCO protocol, therefore, apart from a raw evaluation of the overall performance of each method, the previously mentioned.

5.2 Baseline comparison

Based on the analysis performed in previous sections, we have conducted several experiments to investigate the effectiveness of the proposed methods using the most promising architectures: WRN-50 and DenseNet-121. We further investigate the performance on both KKCs and UUCs of the proposed methods (OpenFCN and OpenPCS), and baseline (\({\hbox {SoftMax}}^{{\mathcal {T}}}\)) using the threshold-dependent metrics described in Sect. 4.1. This section also contains qualitative segmentation predictions taken from the experiments.

5.2.1 Per-class analysis

Table 4 presents the results, in terms of AUC per UUC, for the Vaihingen dataset. As can be seen, the OpenPCS obtained most of the best results when evaluating the UUCs and networks independently (bold values). When considering all the networks together and only the UUCs independently (values with \(\dagger\)), OpenPCS produced the best outcomes for four UUCs, while OpenFCN yielded the best result for one UUC.

Analysis of Tables 4 and 5 reveal that OpenPCS excels in almost all architectures and UUCs in the LOCO protocol. The overall best results for four out of five UUCs in the LOCO protocol (Impervious Surfaces, Building, High Vegetation and Car) pertain to OpenPCS. Most AUCs for OpenPCS in these UUCs achieve values larger than 0.80, peaking at 0.94 in the class Building. It is also notable that OpenPCS achieved a significant increase in AUC performance for most of the cases. Not coincidentally, these classes are also the most semantically and visually distinct from the other ones. More detailed insights regarding the nature of the superiority of OpenPCS over the other methods will be discussed further in the next sections.

The outlier in this pattern is the class Low Vegetation, as its samples present a much larger intraclass variability than the others, encompassing sidewalks, bushes, grass fields in the interior and exterior of buildings, gardens, backyards, some sections of parking lots and even train tracks partially covered with vegetation. Due to this higher variation in samples, the lower-dimensional multivariate gaussian fitting of OpenPCS was not able to properly map the whole variation in the data, as was the simple Weibull fitting of OpenFCN. Thus, \({\hbox {SoftMax}}^{{\mathcal {T}}}\) overcame both proposed methods and achieved a peak AUC of 0.81 on ResNet-50. Even though the best result in the UUC High Vegetation was achieved by OpenPCS, the peak AUC for this class was also 0.81, this time using a DenseNet-121 backbone. The main visual distinction between High Vegetation and Low Vegetation is not in the visible spectrum, though. While textures for most patches of both classes are rather similar when taking into account grass fields and bushes for Low Vegetation, the DSM data is more visually distinct than the IRRG data, as the peak altitude for tree tops are above the mostly plain areas of Low Vegetation. This implies that using the inputs channels (IRRG and DSM) coupled with the middle and later activations of the DNNs on the computation of PCAs for OpenPCS could have improved the performance of both classes. However, more research on this must be done to either confirm or deny this hypothesis.

To better demonstrate the differences in performance between the techniques, Figs. 10 and 11 present the ROC curves for samples from the Vaihingen and Potsdam datasets, respectively. It is important to emphasize that each figure was created using a binary mask that separates KKCs from the evaluated UUC. Through Fig. 10, it is possible to observe that, in general, OpenPCS produces better results with lower FPRs for the same TPRs. It is also possible to visually assess that the detection capabilities of the methods for UUC Impervious Surfaces were considerably superior to other classes. The ROCs for Buildings, High Vegetation and Car in Vaihingen also highlight the superiority of OpenPCS when compared to the other approaches.

Figure 11 provides distinct insights, as Potsdam is a much harder dataset than Vaihingen. Figure 11b, e show that OpenPCS and its online/incremental implementation (OpenIPCS) behaves dramatically better than both OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\). OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\) stay mostly close to randomly selecting pixels in the images, which is delineated in the figures by the 45 degree dashed line in the plot. For Building and Car, the ROCs and AUCs show that \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN achieved worse than random results, while OpenPCS and OpenIPCS reached close to or above 0.90 in AUC.

Analysis of the per-class ROCs and Tables reveal that OpenPCS (and its variant OpenIPCS) behave considerably better than both \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN in the binary task of Pixel Anomaly Detection for most UUCs. One can clearly see the distinction of the distinct methods in Pixel Anomaly Detection performance between UUCs in Figs. 10 and 11. OpenPCS excels by a large margin on some UUCS, especially Building and High Vegetation on Vaihingen, presenting lower FPR values than OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\) by almost any TPR threshold in the ROC. The evaluation on the other classes is more nuanced, as the plots cross at some points. OpenPCS tends to perform better on the lower end of FPR detections, providing the first evidence that OSR using Principal Components lessens the effect of UUC segmentation on the KKC classification performance, as smaller FPRs indicate that a smaller number of KKC pixels were misclassified as UUCs. This relation between UUCs and their distinction in performance on both KKCs and UUCs will further explored in the next Section.

5.2.2 Performance for KKCs and UUCs

OSR tasks are inherently multi-objective, as Open Set algorithms must be able to successfully discern UUCs from KKCs, while still being able to correctly classify samples from KKCs. Table 6 presents the results, in terms of accuracy for KKCs (\(Acc^{K}\)), Precision for UUC (\(Pre^{U}\)), and \(\kappa\), for the Vaihingen dataset using a FCN with DenseNet-121 backbone. For simplicity, this table only reports the results for UCCs Impervious Surfaces and Building. However, detailed results, for the Vaihingen and Potsdam datasets, can be found in Appendices A and B.

Through the table, it possible to observe that the comparison of TPRs larger than one—that is, thresholds that allow for OSR—with their Closed Set counterparts (TPR = 0) using \(\kappa\) reveals that, in many cases, assuming openness improves object recognition in scenarios where full knowledge of the world is not possible. Specifically, for Impervious Surfaces, we obtained gains of 0.13 in terms of \(\kappa\) (0.52 when considering the Closed Set versus 0.65 when using OpenPCS with 0.50 TPR). For the UCC Building, the OpenPCS improved \(\kappa\) from 0.50 (in the Closed set) to 0.70 using 0.70 TPR. These gains mean that, for both UUCs, OpenPCS was able to maintain the accuracy of KKCs (\(Acc^k\)) while considerably increasing the precision of the UUCs (\(Pre^U\)).

On the other hand, despite also improving (in a smaller magnitude) the results in terms of \(\kappa\), \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN were not as effective at preserving the \(Acc^k\) while increasing \(Prec^U\). This means that pixels that were correctly classified by the original DNNs were cast as UUCs by the OSR post-processing resulting in a high FPR.

Aside from these UUCs, similar conclusions can be drawn from other ones, such as High Vegetation and Car. Furthermore, similar outcomes were obtained for the Potsdam dataset. As aforementioned, a detailed discussion of all obtained results, for the Vaihingen and Potsdam datasets, can be found in Appendices A and B. Overall, the results allow us to conclude that OpenPCS is more effective to perform open set semantic segmentation when compared to the other approaches. This difference can be better observed in the qualitative results presented in Sect. 5.2.3.

5.2.3 Qualitative analysis

Figures 12 and 13 present some visual examples of the results generated by the FCN with DenseNet-121 backbone in the Vaihingen and Potsdam datasets respectively. Qualitatively, the effectiveness of OpenPCS to distinguish KKCs from UUCs is even clearer. As can be observed, OpenPCS is capable of producing more accurate UUC identification for both KKCs and UUCs, when compared to the ground-truth label. On the other hand, outcomes generated by the \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN are very similar to each other, but not very alike to the ground-truth label, mainly for the UUCs. This corroborates with previous analysis and conclusions about the effectiveness of the OpenPCS mainly for discriminating UUCs.

Performing a deep analysis of the qualitative results, we can see that the proposed OpenPCS identified the UUC Building almost perfectly, while the \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN techniques quite confused this UUC with other KCCs using the same threshold TPR. This same outcome can be seen for the UUC Car. Although this UCC comprises only a tiny percentage of the total amount of pixels and does not contribute a lot in terms of \(\kappa\), it was much better identified by the OpenPCS than by other approaches. In fact, the above outcomes are repeated for all UUCs, except for the Low Vegetation one, which has enormous intra-class variability (with pixels of grass fields, sidewalk-like areas and other structures) and, consequently, natural erratic behaviour. For a more detailed qualitative analysis, please, check the project’s webpage.

5.2.4 Runtime performance analysis

One of the most important motivations for proposing OpenPCS was the observation that OpenMax did not scale well to dense labeling, being prohibitively expensive when operating in a pixelwise fashion, while the simple \({\hbox {SoftMax}}^{{\mathcal {T}}}\) performed exponentially faster. In order to properly quantify the time differences between \({\hbox {SoftMax}}^{{\mathcal {T}}}\), OpenFCN and OpenPCS, we computed the per-patch runtimes of each method for \(224 \times 224\) patch resolution. These results are shown in Fig. 14 for both Vaihingen and Potsdam (Fig. 14a, b, respectively) and these same results are also presented in \(\log _{10}\) scale (Fig. 14c, d, respectively), as the linear time comparisons severely hampered the visualization of \({\hbox {SoftMax}}^{{\mathcal {T}}}\)’s performance when compared to OpenFCN.

Per-patch time comparison between the proposed approaches. The time presented in the y-axis is shown in seconds for each (\(224 \times 224\)) patch across all test patches. The left part of the figure (a, c) shows times for Vaihingen, while the rightmost figures (b, d) depict times for Potsdam. Results are also shown in linear (a, b) and \(log_{10}\) scale (c, d) in order to show the exponential distinction between execution times across \({\hbox {SoftMax}}^{{\mathcal {T}}}\), OpenFCN and OpenPCS. Confidence intervals for each plot are shown as error bars computed with the t-Student distribution on the average execution runtimes for patches over 5 runs of the LOCO protocol (one for each class set as UUC) for each backbone

Visual analysis of Fig. 14 reveals the discrepancies between OSR methods, with \({\hbox {SoftMax}}^{{\mathcal {T}}}\) inference being the fastest, usually taking between 0.03 and 0.1 s per \(224 \times 224\) patch. On the opposite side, OpenFCN was observed to be by far the slowest method, with runtimes for one single patch in the range between 10 and 20 s. This may be justified by the fact that, at each inference, the method needs to sort the predictions (softmax activations) for each sample (e.g., for each pixel) in order to multiply them by the correct alpha value previously calculated by the OpenMax, then recalibrating the prediction scores. This sample-wise sorting at each inference, originally proposed in the OpenMax and consequently incorporated into the OpenFCN, significantly affects the running time of the algorithm, making it highly unsuitable for real-time computer vision applications.

On the faster end of the spectrum between \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN, there were OpenPCS and OpenIPCS, with execution times between 0.25 and 0.7 seconds per patch. Both the online and offline PCAs used for the inference of UUCs from the proposed methods are highly vectorized operations, which allows them to be parallelized into several processing cores and be faster even in single-core architectures. While we did not tune the algorithms for this purpose, OpenPCS’ and OpenIPCS’ runtimes allow for near real-time inference on applications as self-driving cars or autonomous drone control. In contrast, the original implementation of OpenMax from libMR is not naturally vectorized, requiring inferences to be performed linearly on pixels and severely hampering OpenFCN’s performance.

5.3 Experiments with multiple UUCs

In addition to the results shown in Sects. 5.1 and 5.2, we also conducted experiments on multiple UUCs aiming to test scenarios with a larger proportion of UUCs to KKCs. The current section will be focused on presenting and discussing these experiments quantitatively and qualitatively for Vaihingen and Potsdam (Sect. 5.3.1) and for GRSS (Sect. 5.3.2).

5.3.1 Multiple UUCs on vaihingein and potsdam

In order to simplify and speed up the experiments, we split the classes in groups of KKCs with considerable semantic similarities, forming the divisions presented in Table 7. This table also presents our nomenclature for the multiple UUC experiments, in order to more easily refer to them in the text.

While some divisions are clear in purpose (e.g. \(E^{(0,1,4)}\) and \(E^{(2,3)}\) separate man-made constructions from vegetation; and \(E^{(0,4)}\) split elements present in streets from the other classes), other combinations of UUCs were added to test the proposed methods in more diverse environments (e.g. \(E^{(0,1)}\) or \(E^{(0,2,3)}\)). All AUC results for the 6 experiments with multiple UUCs (\(E^{(0,1)}\), \(E^{(0,4)}\), \(E^{(1,4)}\), \(E^{(2,3)}\), \(E^{(0,1,4)}\) and \(E^{(0,2,3)}\)) in DenseNet-121 and WRN-50 backbones, as well as the additional metrics \(Acc^{K}\), \(Pre^{U}\) and \(\kappa\) for \(E^{(0,1)}\), \(E^{(2,3)}\) and \(E^{(0,1,4)}\) using the DenseNet-121 backbone are shown in Tables 8, 9, 10 and 11. However, for the sake of simplicity and objectivity, \(Acc^{K}\), \(Pre^{U}\) and \(\kappa\) for \(E^{(0,4)}\), \(E^{(1,4)}\) and \(E^{(0,2,3)}\) for DenseNet-121, as well as the experiments for all threshold-dependent metrics using the WRN-50 as a backbone are reported only in Appendix C.

Tables 8 and 9 show a much larger margin between OpenPCS/OpenIPCS and OpenFCN/\({\hbox {SoftMax}}^{{\mathcal {T}}}\), indicating that likelihood scoring from principal components is considerably more reliable in OSR Segmentation than both SoftMax Thresholding and OpenMax. Specifically, AUC results show significant improvements in both Vaihingen and Potsdam when using principal components modeling than the baselines for almost all experiments. OpenPCS and OpenIPCS showed far superior performance to both OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\) in the majority of multiple UUC experiments, even reaching AUCs greater than 0.9 in many cases, allowing for reliable UUC identification in scenarios with larger openness. On Vaihingen five out of six experiments—\(E^{(0,1)}\), \(E^{(0,4)}\), \(E^{(1,4)}\) and \(E^{(0,1)}\)—showed significantly greater performance for OpenPCS, reaching AUCs of 0.91, 0.86, 0.92, 0.92 and 0.86 using the DenseNet-121 backbone, respectively. With ROC curves this close to the left upper corner, one can set the cutoff value for TPRs between 0.6 and 0.9 with relatively small FPRs in the range of 0.1–0.2. In other words, in scenarios with a high proportion of UUCs to KKCs, the recognition of unknown pixels using OpenPCS and OpenIPCS can be done without compromising as much the performance of KKCs, which might also be caused by a better modeling of the fewer classes by the FCNs. This assessment will be further discussed in the results presented in Tables 10 and 11.

The only exception to the superior performance of OpenPCS/OpenIPCS in multiple UUC experiments was \(E^{(2,3)}\) on Potsdam—that is, when High and Low Vegetation were removed from the set of KKCs used during training. This was an expected outcome, as OpenIPCS did show their worse results in High/Low Vegetation on the experiments discussed in Sect. 5.1. However, one should notice that no method was able to achieve in \(E^{(2,3)}\) the same performance of OpenPCS/OpenIPCS in the other multiple UUC experiments. We attribute this failure case to the high intra-class variability of Low Vegetation, while we raise the hypothesis that multimodal modeling for the likelihood distribution and/or \(a^{*}\) multimodal gaussian modeling should improve the results on these classes.

Similarly to Sect. 5.2.1, Tables 10 and 11 show the quantitative measures of \(Acc^{K}\), \(Pre^{U}\) and \(\kappa\), aiming to quantitatively assess the performance of the proposed methods and baselines on the KKCs, on the UUCs and the overall performance encompassing known and unknown classes.

These tables show major improvements, in terms of \(\kappa\), for all experiments, except the ones using High/Low Vegetation in the Potsdam dataset. As previously explained, we hypothesize that this is due to the high intra-class variability of those classes. Disregarding this experiment, for all others, the gains, in terms of \(\kappa\), were even better than the ones obtained with only one UCC (Sect. 5.2.2), implying that the proposed OpenPCS/OpenIPCS are more robust to problems with higher openness. Deeply analyzing such gains, we may observe that they come from the fact that the proposed methods are capable of improving the recognition of UUCs (\(Pre^{U}\)) without significantly sacrificing the identification of KKCs (\(Acc^{K}\)). Precisely, in many cases, OpenPCS/OpenIPCS achieved more than 0.90 of \(Pre^U\) with only 1 2% loss in \(Acc^{K}\). This shows the capacity of the proposed methods to efficiently perform open set semantic segmentation even in datasets with greater openness. As with the experiments with only one UCC, all obtained results using multiple UUCs as well as a discussion about them can be seen in Appendix C.

Images, ground truths and predictions for \({\hbox {SoftMax}}^{{\mathcal {T}}}\), OpenFCN and OpenPCS on the Vaihingen dataset for the six experiments with distinct UUC combinations presented in Table 7. These predictions were obtained using a DenseNet-121

Images, ground truths and predictions for \({\hbox {SoftMax}}^{{\mathcal {T}}}\), OpenFCN and OpenPCS on the Potsdam dataset for the six experiments with distinct UUC combinations presented in Table 7. These predictions were obtained using a DenseNet-121

It is noticeable in Figs. 15 and 16 that OpenPCS produces better predictions when compared to the other methods for almost all pairs or triplets of UUCs (\(E^{(0,1)}\), \(E^{(0,4)}\), \(E^{(1,4)}\), \(E^{(0,1,4)}\) and \(E^{(0,2,3)}\)), but exhibits worse results in experiment \(E^{(2,3)}\). These results reiterate the inability of OpenPCS to deal with the high intra-class variability present in Low Vegetation, as previously shown in Sects. 5.1 and 5.2.

As previously reported in Sect. 5.2.3, OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\) still suffered with the naturally lower prediction confidences in multiple UUC experiments, with most of the UUC pixels predicted by these methods lying on object boundaries, even between two KKCs. Again, this is likely responsible for the considerably poorer quantitative results of these methods shown in Tables 8, 9, 11, and 11, with OpenPCS and OpenIPCS excelling due to their use of middle-level features from the networks, which still contain information about the input space, not being entirely bound by the output class space as OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\).

One last remark about the qualitative results in Figs. 15 and 16 is regarding \(E^{(0,1)}\), \(E^{(2,3)}\) and \(E^{(0,2,3)}\). In all those cases, the class Car is a KKC, being naturally the most unrepresented objects in both Vaihingen and Potsdam due to the large class imbalance. While \({\hbox {SoftMax}}^{{\mathcal {T}}}\) and OpenFCN severely struggled with this class imbalance, being unable to properly identify Cars as KKCs, OpenPCS and OpenIPCS preserved vastly more correctly predicted Car pixels, indicating that they are more robust to high class imbalance during the training of their generative model.

5.3.2 Multiple UUCs on GRSS

Figure 17 shows ROC curves and AUCs for the Houston dataset on two scenarios with multiple UUCs at a time: (i) the first scenario, referenced as Vegetation, is composed of the classes healthy grass, stressed grass, artificial turf, evergreen trees, and deciduous trees; and (ii) the second scenario, referenced as Building, is composed of residential buildings, and non-residential buildings. As presented in Sects. 5.2 and 5.3.1, one can clearly see the superiority of OpenPCS and OpenIPCS in comparison to \({\hbox {SoftMax}}^{{\mathcal {T}}}\) or OpenFCN, with Principal Component Scoring obtaining higher AUCs than the other methods. Additionally, in both experiments, OpenIPCS presented considerably higher performance than OpenPCS, with the former surpassing the latter in AUC by approximately 0.1. Even though further experimentation is required for any definitive assertion, this result serves as initial evidence that OpenIPCS is more adaptable to a scenario with a larger number of KKCs.

Table 12 presents threshold-dependent metrics for experiments in the Houston dataset. Overall, known class accuracy results are not as high as in Vaihingen and Potsdam; an expected outcome given that this is a very fine-grained dataset. Aside from this, we can observe that for Vegetation as UUCs the OpenIPCS outperformed all other methods, as well as the Closed Set scenario, in terms of \(\kappa\) and \(Pre^{U}\). This outcome is similar to those previously reported for Vaihingen/Potsdam. For the Buildings as UUCs, all approaches produced very similar outcomes, with the best result, in terms of \(\kappa\), being the one obtained by the Closed Set. We believe that this is due to the class imbalance of the Houston dataset, i.e., Buildings classes represent a small fraction of the dataset and therefore do not impact the final result as much as the Vegetation classes, which are more prevalent in the dataset.

6 Conclusion

In this manuscript we introduced, formally defined and explored the problem of Open Set Segmentation and proposed two approaches for solving OSR segmentation tasks: (1) OpenFCN, which is based on the well-known OpenMax (Bendale and Boult 2016) approach; and (2) OpenPCS, a completely novel method for Open Set Semantic Segmentation. A comprehensive evaluation protocol based on a set of standard objective metrics to be used on this novel field that takes into account KKCs and UUCs was proposed, executed and discussed for the proposed methods and the main baseline: \({\hbox {SoftMax}}^{{\mathcal {T}}}\).

OpenPCS—and its more scalable variant referred to as OpenIPCS—yielded significantly better results than OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\). OpenPCS and OpenIPCS are able to merge activations from both shallower layers—containing input pixel-level information—and deeper layers that encode class-level information, while OpenFCN can only take into account information about class, as it operates on the outputs of the network. Performance analysis of OpenPCS/OpenIPCS vs. OpenFCN suggests that using middle-level feature information that combines both the input and output spaces of the FCN is highly useful for OSR segmentation. The latter scheme works well for sparse labeling scenarios (Bendale and Boult 2016) (e.g. image classification), however, dense labeling inherently consists of highly correlated samples (pixels) and labels (semantic maps) with border regions naturally presenting lower confidence than the interior pixels of objects. This distinction between sparse and dense labeling severely hampered the capabilities of OpenFCN and \({\hbox {SoftMax}}^{{\mathcal {T}}}\) of detecting OOD samples.