Abstract

Research at the secondary school level provides compelling evidence to suggest that a positive school climate is related to improved health, wellbeing, and cognitive outcomes for students. In response to these findings, school systems around the world are mandating the collection of school climate data in schools. However, reviews of the literature indicate that there are relatively few instruments that provide evidence to support translation and criterion validity and assess the school climate from the perspectives of primary school students; and all of them were considered unsuitable or problematic for our purposes. To overcome this gap in the literature, this article describes the development and validation of a school climate survey designed to assess primary school students’ perceptions of school climate. Evidence to support translation validity is provided through data collected at various stages of the development process. Further, data collected from a sample of 1193 primary school students (years 3 to 6) were analysed to provide evidence to support convergent, discriminant, concurrent, and predictive validity. The survey and evidence of its psychometric properties are significant for researchers, schools, and education systems seeking to assess primary school students’ perceptions of the school climate.

Introduction

Improving student outcomes has been placed firmly on the reform agenda and is a priority across schools and systems in Australia. Despite ambitious goals outlined in the Australian Education Act (2013) (Cth) and increased expenditure, improvements in student achievement remain limited and the achievement gap continues to widen (Hunter, 2022). These findings highlight that a focus on increased school autonomy and national testing, which emphasise competition and standardisation, has had a limited impact on student achievement (Harrington, 2013; Sahlberg, 2007).

A growing body of research suggests that, without addressing problems related to school climate, the context in which learning takes place, reform efforts (however logical or tempting they appear) will be limited (Aldridge & McLure, 2023). Students spend up to 15,000 hours in school, making these settings highly influential. Unlike factors in the wider environment (such as the family or home context), schools offer malleable factors that can be targeted for change or improvement (Garcia-Carrion et al., 2019; Long et al., 2021). In fact, according to Shindler et al. (2016), identifying and addressing problems related to school climate could be the key needed to improve student outcomes and, potentially, close the achievement gap. Furthermore, if, as research evidence suggests, a positive school climate supports the adaptive functioning of young people and promotes academic outcomes (Lenz et al., 2021), then it makes sense to direct school improvement efforts to improve the malleable aspects of the school climate and create contexts in which young people flourish.

In response to the growing recognition that a school’s climate plays a pivotal role in the success of educational change efforts (Aldridge & McLure, 2023), measures have been developed to tap into the views of school members as diagnostic tools. Despite the growing number of instruments available, only a handful have been developed for use with primary school students. A review of existing primary school-level measures (see below) suggests that they were either unsuitable or problematic. Therefore, the goal of this study was to fill this gap by developing an instrument that fulfills the requirements of both translation and criterion validity to assess the school climate of primary schools from the students’ perspective.

Background

School climate

School climate is a multidimensional construct that provides a measure of the character and quality of the experiences of school members (Bear et al., 2017). These experiences are influenced by social and cultural factors, and shaped by the prevailing ethos, norms, values and beliefs that pervade the fabric of school life (Cohen et al., 2009). Ultimately, these factors dictate the quality of the daily interactions between school members (teachers, parents, leaders and students) and shape almost all aspects of school life (Wang & Degol, 2016).

A growing body of research suggests that a school’s climate influences a range of student outcomes (see reviews by Aldridge & McChesney, 2018; Patalay & Fitzsimons, 2016; Thapa et al., 2013). Past findings suggest that school climate factors are associated with a range of health-related outcomes such as students’ mental health (Aldridge & McChesney, 2018), emotional well-being and resilience (Aldridge et al., 2016), and prosocial behaviours (O’Brennan et al., 2014). Studies have also revealed that, when perceptions of school climates are positive, there are fewer reports of bullying (e.g. Aldridge et al., 2018; Stigler et al., 2011), delinquency (Aldridge et al., 2018), risk-taking (Reaves et al., 2018) and violence (Steffgen et al., 2013). Further, mounting evidence suggests that positive school climates are related to reduced absenteeism (Burton et al., 2014) and dropout rates (Jia et al., 2016), and improved student achievement (Adeogun & Olisaemeka, 2011; Bear et al., 2014; Berkowitz et al., 2017; Cohen et al., 2009; Kwong & Davis, 2015; Shindler et al., 2016; Uline & Tschannen-Moran, 2008).

Conceptualising and assessing school climate

Efforts to conceptualise and measure school climate build on the pioneering work of Moos (1974) who examined social environments across different milieus including hospitals, prisons, and schools. Moos (1984) suggested that, like people, social environments have personalities and that the way in which people perceive this environment influences how they behave within it. In his framework, Moos (1974) classified elements of human environments into three domains: relationship (nature and intensity of personal relationships within the environment), personal development (basic directions along which personal growth and self-enhancement tend to occur), and system maintenance and system change (extent to which the environment is orderly, clear in expectations, maintains control and is responsive to change). Although these domains have been widely used to categorise elements of classroom climates (e.g. Fraser, 2012; Trickett & Quinlan, 1979), elements of school climates, which have a degree of overlap with those identified by Moos, have generally been categorised according to the four domains of community/relationships, safety, teaching and learning, and environmental-structure (e.g. Cohen, 2006; Freiberg, 1999; Wang & Degol, 2016). Whilst some researchers have added the fifth domain of school improvement (e.g. Thapa et al., 2013), Wang and Degol’s (2016) four domains were adapted in our study to inform the development of the psychosocial (as opposed to physical) school climate measures used in the survey:

- The community domain, which aligns with Moos’ (1974) relationship dimension, refers to the quality of the relationships within a school. These relationships are reflected in the interactions between members, including those between students and between students and teachers and other staff members. Although Wang and Degol (2016) included elements related to the degree to which school members feel included in this domain, in our study of psychosocial school climates, we moved these institutional-level norms into the institutional environment domain.

- The academic atmosphere domain is related to the support provided at the school and, although this domain includes leadership and the professional development of teachers, for the development of the survey, a focus on teaching and learning was taken. This domain considers factors that impact students’ learning experiences, including instructional practices and expectations of the teacher (Deemer, 2004). Drawing on research in which students, despite being socially disadvantaged, perform well at school (Modin & Östberg, 2009), our focus was on characteristics that promote positive outcomes such as teachers’ expectations (Wang & Degol, 2016).

- The safety domain overlaps with Moos’ system maintenance and system change dimensions, which involve the structures within the school that encourage either system maintenance, such as the rules of a school, or system change, such as the mechanisms to report and seek help. In our study, this domain measures the quality of the physical and emotional safety of a school, such as the clarity of the rules and the disciplinary practices.

- The institutional environment domain in many studies (e.g. Wang & Degol, 2016) refers to the physical structure of the school. However, because our study examined the psychosocial school climate, it was meaningful to include constructs related to Moos’ (1974) personal development dimensions. Therefore, this domain was reconceptualised to include elements influenced by institutional-level norms related to inclusion, such as respect and acceptance.

Increasingly, the school climate has been examined using school members’ perceptions of the strengths and weaknesses of the school. Perception data provide a meaningful measure because, regardless of their accuracy, perceptions are powerful predictors of behaviour (Walberg & Haertel, 1980) and often more useful than observations, which can only offer an opinion about behaviour (Duckenfield & Reynolds, 2013).

Measures of school climate have been developed to assess the perceptions of different stakeholders, including teachers (e.g. Aldridge & Fraser, 2016; Johnson & Stevens, 2006; Van Horn, 2003), parents (e.g. Aldridge & McChesney, 2021), and students (e.g. Aldridge & Ala’i, 2013; Bear et al., 2011). Two recent literature reviews identified school climate surveys available to assess students’ perceptions of the school climate. One review identified surveys with evidence of validity and reliability (Marraccini et al., 2020), and the other identified surveys that provided both a description of their development and evidence of reliability and validity (Lenz et al., 2021). In both cases, most surveys were developed for use in secondary schools, such as the What’s Happening In this School (Aldridge & Ala’i, 2013), School Climate Measure (Zullig et al., 2010), Georgia Brief School Climate Survey (White et al., 2014), and California School Climate and Safety Survey (Furlong et al., 2005). Surveys developed for use across all levels of schooling (primary school, middle school, and secondary school) were also identified, such as the Delaware School Climate Survey (Bear et al., 2011), Meriden School Climate Survey (Gage et al., 2016), and adaptations of the School Climate Survey (Emmons et al., 2002; Frazier et al., 2015; Haynes et al., 1994).

Despite the growing number of school climate surveys, relatively few have been developed for use in primary schools. Of the 26 school climate surveys identified by Marraccini et al. (2020), five were developed explicitly for use with primary school students. Close inspection of these surveys identified that two of them (the My Class Inventory (Fraser & Fisher, 1982; Sink & Spencer, 2005) and Classroom Climate Scale (Lopez et al., 2018)) assessed the classroom-level rather than school-level climate. The third survey (the Elementary School Ethical Climate (Keiser & Schulte, 2007)) assessed only a single domain (community) and the fourth survey, developed by Griffith (1999), included problematic items (identified during factor analysis) and a scale (Social Action-Expressive) that was more relevant to the classroom-level climate. The fifth survey, the Abbreviated School Climate Survey (SCSA; Ding et al., 2011), was the only instrument identified by Lenz et al. (2021), whose review only included surveys with a description of the development. Notably, the factor structure, internal consistency reliability, and confirmatory factor analysis provided evidence of sound psychometric properties and, according to Lenz et al. (2021), the SCSA scales covered three of Wang and Degol’s (2016) four dimensions (community, safety, and academic atmosphere). However, the inclusion of scales that assessed enjoyment of school and student behaviour (positive and negative) was considered problematic as these are more indicative of outcomes than of features of school climate.

To our knowledge, no other surveys have been developed for use at the primary school level. Given this dearth of suitable surveys, our study sought to develop an instrument to assess the views of primary school students, to fill this research gap.

Theoretical framework

The development of the new school climate survey drew on two bodies of work. First, it drew on Lewin’s (1936) field theory, which uses the equation B = f(P,E) to posit that a person’s behaviour (B) is a function of both the person (P), including social and psychological factors, and the environment (E). According to Lewin’s theory, the unique characteristics of individuals (such as their history or personality) are only partly responsible for behaviour, with the environment (including both physical and social factors) also shaping it. Drawing on the work of Lewin allowed us to make sense of how the school climate might influence the actions of individuals in different settings.

Second, we drew on Bronfenbrenner’s (1989) ecological systems theory, in which his ecological model depicts a nested series of systems. The systems range in proximity with the microsystem involving the most immediate interactions and contexts and exerting the greatest influence on an individual’s development. Although Bronfenbrenner’s systems all affect a school’s climate, schools operate as microsystems with school members experiencing interactions and relationships through direct contact (Rudasill et al., 2018). Drawing on the work of Bronfenbrenner (1989) helped to explain the complex role of contextual factors and how they influence student development.

Methods

This section describes the research methods, including the process used to develop the survey, the samples used to confirm face and criterion validity, the data collection methods, and the data analysis techniques.

Development of the survey

The development and validation of the survey involved a refined multistage approach that has been used successfully in previous studies (e.g. Aldridge & Ala’i, 2013; Velayutham et al., 2011).

- Stage 1: Delineation of the scales. A review of the literature was used to identify constructs that are important to student outcomes and that were included in previously developed surveys with evidence of reliability and validity.

- Stage 2: Writing items to assess the scales. The development of items involved writing new items as well as extrapolating and modifying items in existing surveys. To ensure comprehension and legibility, the language and wording in extrapolated items were refined and, to ensure the survey length was manageable, consideration was given to the number of items in each construct.

- Stage 3: Expert panel review. Once the scales and items were developed, an expert panel made up of primary school teachers and school principals evaluated the constructs and items. Specifically, panel members were asked to rate the suitability of the constructs (in terms of malleability and usefulness to schools), whether the items adequately assessed the constructs, and whether the language used in individual items was appropriate and suitable for primary school students.

- Stage 4: Establishing a suitable response format. Decisions related to a suitable response format considered the age and maturity of the respondents. Ensuring a manageable response format was considered key to achieving meaningful responses.

- Stage 5: Multiple trials to establish face validity. Multiple trials with students in different year levels and schools were used to examine the face validity of items. Discussions with students during administration to small groups were used to examine the reasons for responses, and whether students interpreted items in the way intended. Feedback from students, and teachers in some cases, was used to refine the items and the response format.

- Stage 6: Pilot testing the survey. The survey was pilot tested to provide information about students’ ability to use the online version of the survey independently, the length of time it took them to respond to the survey, and any technical issues that might arise during the large-scale administration. The pilot test also allowed us to examine whether the teachers’ guide, provided to assist with the administration process, was usable and adequate to ensure consistency in data collection.

- Stage 7: Large-scale administration (to support criterion validity). A large-scale administration of the survey was used to collect the data needed for analysis to support the criterion validity.

Sample

In this section, a description of the sample selection is provided for different stages of the multistage approach, including the expert panel review (stage 3), multiple trials (stage 5), pilot test (stage 6), and large-scale administration (stage 7).

Stage 3: Expert panel review

The selection of the expert panel involved purposeful sampling to ensure that the participants had the relevant experience. The panel was made up of two primary school principals and three primary school teachers. As described above, the panel members used a questionnaire, developed by the researchers, to rate the suitability of the scales and items prior to the trials.

Stage 5: Multiple trials

Item trials were carried out at three schools, whose selection involved convenience sampling, based on researchers’ contacts. One school was in regional Western Australia (enrolment of 165 students) and two were in the suburbs of Perth (with enrolments of 285 and 315 students). Each trial involved the administration of the survey to groups of six students in years 3 and 4. The students were generally selected by the teacher to provide a range of abilities.

The survey trials, along with a scale used to assess wellbeing (described below), were carried out by the researchers with small groups of students in each school. The researchers sat with the students and, as they responded to items, they were invited to share their responses and why they selected them. These sessions were used to determine the students’ interpretations of the items and to examine ways to adjust the language for clarity and understanding. Based on the results of trials in one school, revisions were made to the survey before the next trial at a different school.

Stage 6: Pilot test

A pilot test was carried out with 23 students in one intact year 3 class. Although the selection of the school was based on convenience (proximity to the researchers), the selection of a year 3 class was purposeful in that it involved the youngest students anticipated to respond to the survey.

Stage 7: Large-scale administration

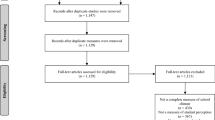

To increase the generalisability of the results, sample selection for the large-scale administration involved non-probability sampling to provide a range of primary schools.

The sites for the large-scale administration included nine schools from three Australian states. Of these schools, six were located in metropolitan areas and three in regional areas. In each school, students enrolled in years 3 to 6 (around 7 to 12 years of age), who had parental permission and were present on the day of administration, were invited to respond to the survey. The newly-developed school climate survey and a wellbeing scale (described below) were administered online in all but two schools. For one of the two schools, the survey was administered as a paper copy because there was limited internet access and, for the other, the teachers requested paper copies for students in year 3.

The administration yielded a total of 1230 cases. Prior to analysis, the data were screened for missing data and disengaged responses. Cases with more than 15% of missing data were removed from the dataset (n = 7) and, for all other missing data, the average for the year level in that school was used. Screening for disengaged responses (cases with a standard deviation of zero across responses) resulted in the removal of 38 cases (approximately 3%).
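The screening rules described above can be sketched in a few lines of Python. This is a minimal illustration only, not the procedure or software used in the study: the record layout (the `group` and `responses` keys), the function name `screen_cases`, and the choice to test for flat responding on observed answers are all assumptions, and the sample data are invented.

```python
from statistics import mean, pstdev


def screen_cases(cases, max_missing=0.15):
    """Screen survey cases for missing data and disengaged responding.

    `cases` is a list of dicts with the hypothetical keys 'group' (a school
    and year-level label) and 'responses' (item scores, None when missing).
    """
    # Step 1: drop cases with more than `max_missing` of their items unanswered.
    kept = [
        c for c in cases
        if sum(r is None for r in c["responses"]) / len(c["responses"]) <= max_missing
    ]

    # Step 2: compute each item's mean within each group from observed answers.
    group_means = {}
    for group in {c["group"] for c in kept}:
        members = [c["responses"] for c in kept if c["group"] == group]
        means = []
        for item_scores in zip(*members):
            observed = [s for s in item_scores if s is not None]
            if not observed:
                raise ValueError(f"no observed answers for an item in group {group!r}")
            means.append(mean(observed))
        group_means[group] = means

    screened = []
    for c in kept:
        observed = [r for r in c["responses"] if r is not None]
        # Step 3: skip disengaged cases, i.e. the same answer to every item (SD = 0).
        if pstdev(observed) == 0:
            continue
        # Fill any remaining gaps with the group mean for that item.
        filled = [
            group_means[c["group"]][i] if r is None else r
            for i, r in enumerate(c["responses"])
        ]
        screened.append({"group": c["group"], "responses": filled})
    return screened


# Invented example: four students in one school and year level, ten items each.
cases = [
    {"group": "School A, year 3", "responses": [1, 2, 3, 4, 5, 4, 3, 2, 1, 2]},
    {"group": "School A, year 3", "responses": [3] * 10},  # flat responder
    {"group": "School A, year 3", "responses": [None, None, 3, 4, 5, 4, 3, 2, 1, 2]},  # 20% missing
    {"group": "School A, year 3", "responses": [None, 4, 3, 4, 5, 4, 3, 2, 1, 2]},  # 10% missing
]
clean = screen_cases(cases)
```

In this sketch the group means are computed before flat responders are removed, mirroring the order in which the two screening steps are described; a different order would be an equally plausible reading.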

Once cases with missing data and disengaged responses were removed, a total of 1193 student responses were considered complete and usable. Of the 1193 students, 570 (47.8%) were male and 623 (52.2%) were female. There was a relatively even spread of students across school levels, with 588 (49.3%) in middle primary (aged 7 to 10 years) and 605 (50.7%) in upper primary (aged 10 to 12 years), which reflected the sample size across year levels, with 286 (24%) students in year 3, 302 (25.3%) in year 4, 298 (25%) in year 5 and 307 (25.7%) in year 6. The differences in the number of students from schools in metropolitan (n = 963) and regional (n = 230) areas reflect the differences in enrolment sizes in schools in these areas.

Once data screening was complete, checks for multivariate normality and sampling adequacy were made. The Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy was 0.906 and Bartlett’s test of sphericity was statistically significant (p < 0.001), suggesting that the data were suitable for further analysis.

Instruments

Two instruments were administered, the newly-developed school climate survey and a scale to assess student wellbeing, as described below.

Assessing the school climate

The newly-developed survey, named the What’s Happening In This School-Primary (WHITS-P), assesses students’ perceptions of school climate across four broad domains (modified from Wang & Degol, 2016): community, academic atmosphere, safety, and institutional environment. The survey is made up of 27 items in seven scales: Teacher Support, Peer Connectedness, Reporting and Seeking Help, Rule Clarity, Support for Learning, High Expectations, and School Connectedness. A description of the origins and development of these scales is provided in the results section. An additional four items in one scale, Affirming Diversity, were responded to by upper primary students. Table 1 provides the domain in which each scale falls, a scale description, and a sample item for each WHITS-P scale.

During the development and trial phases, decisions were made to improve the reliability and utility of the survey. First, to improve comprehensibility, items associated with a scale were grouped together and a child-friendly header was included to provide a contextual cue. Second, the response format utilised a five-point frequency response scale that was refined for use with younger students (see the results section for more details). Finally, to examine person-environment fit, the modified five-point response scale was incorporated into a side-by-side format to allow students to respond twice for each item, once for how often they perceived the statement happened (actual), and once for how often they would prefer it to happen (preferred). This format has been used successfully with students in secondary school (see, for example, Aldridge et al., 2012) and, to increase the application of the survey, we sought to examine whether primary school students could use the format meaningfully.

Assessing student wellbeing

A wellbeing scale was included to support the predictive validity of the survey. Wellbeing was selected because past research reports strong correlations between the school climate and student wellbeing at the secondary school level (e.g. Aldridge & McChesney, 2018; Riekie & Aldridge, 2017). Given these findings, it was predicted that the school climate would also be related to the wellbeing of primary school students.

The wellbeing scale was modified from an existing scale, developed for use in secondary school (Riekie & Aldridge, 2017), by refining the language and reducing the number of items. The scale was designed to provide feeling-good indicators (e.g. “Over the past two weeks, I have felt cheerful”) that were responded to using the same five-point frequency response format as the WHITS-P. The Cronbach alpha reliability coefficient, calculated using data collected during the large-scale administration, was 0.74, suggesting that the scale was suitable for use in our study.

Analyses

This section describes the analysis used to support criterion validity (the extent to which an instrument works in the ways intended) in terms of convergent, discriminant, concurrent, and predictive validity. The analyses used the sample from the large-scale administration (n = 1193 students). Apart from predictive validity, for which only actual responses were used, all analyses were carried out separately for actual and preferred responses.

Convergent validity refers to how closely the items in a scale assess the same construct. To support convergent validity, factor analysis and internal consistency reliability were used. Factor analysis involved principal axis factor analysis with oblique rotation, considered suitable because of the overlapping nature of the school climate factors (Brown, 2006). The criteria for an acceptable factor loading were that an item should load at 0.4 or more on its own scale and less than 0.4 on other scales, as recommended by Field (2009). Cronbach’s alpha coefficient (Cronbach, 1951) was used as a measure of internal consistency reliability and, although the criterion for an acceptable cut-off has been debated, for this study, a coefficient of 0.6 or more was considered acceptable.
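For readers unfamiliar with the coefficient, Cronbach’s alpha for a scale of k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The following is a minimal sketch using only the Python standard library; the function name and the example scores are illustrative and are not taken from the study data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for one scale.

    `rows` holds one list of item scores per respondent (no missing values).
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's total score on the scale.
    total_var = variance([sum(row) for row in rows])
    if total_var == 0:
        raise ValueError("total scores do not vary")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Invented scores for four respondents on a two-item scale.
alpha = cronbach_alpha([[1, 2], [2, 1], [3, 3], [4, 4]])
acceptable = alpha >= 0.6  # the cut-off adopted in this study
```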

Discriminant validity refers to the extent to which the items in one scale assess a common construct that is distinct from the constructs assessed by the other scales in the survey. To provide evidence to support the discriminant validity, the correlation matrix generated during factor analysis was used. In this study, the criterion for acceptable discriminant validity was a correlation of less than 0.8 because, although there should be a moderately strong relationship between factors, correlations greater than this suggest an unacceptable overlap (Brown, 2006).
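Applying this criterion amounts to scanning the off-diagonal entries of the factor correlation matrix. A minimal sketch follows; the function name is hypothetical, the correlation values are invented for illustration and are not the study’s results, and the use of absolute values is an assumption.

```python
def overlapping_pairs(names, corr, threshold=0.8):
    """Return the scale pairs whose correlation reaches the overlap threshold.

    `corr` is a square, symmetric matrix (list of lists) ordered as `names`.
    """
    flagged = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):  # upper triangle only
            if abs(corr[i][j]) >= threshold:
                flagged.append((names[i], names[j], corr[i][j]))
    return flagged


# Invented correlations between three of the scale names used in the survey.
names = ["Teacher Support", "Peer Connectedness", "Rule Clarity"]
corr = [
    [1.00, 0.45, 0.52],
    [0.45, 1.00, 0.38],
    [0.52, 0.38, 1.00],
]
problems = overlapping_pairs(names, corr)  # empty list: no unacceptable overlap
```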

Concurrent validity refers to the extent to which a scale in a survey can distinguish between groups that, theoretically, it should. It was hypothesised that students in one school would perceive the school climate in similar ways to each other, but differently from students in other schools. Therefore, to provide evidence of concurrent validity, a one-way analysis of variance (ANOVA), with school membership as the independent variable, was carried out separately for students’ actual and preferred responses to items in each scale. To provide an indication of magnitude, eta-squared (η²) was calculated by dividing the between-group sum of squares by the total sum of squares in the ANOVA model.
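As a worked illustration of the effect size, the sketch below computes eta-squared in its conventional form, the between-group sum of squares divided by the total sum of squares. The function name and the scores are invented for the example and do not come from the study.

```python
from statistics import mean


def eta_squared(groups):
    """Eta-squared for a one-way design.

    `groups` maps a group label (here, a school) to its list of scale scores.
    """
    all_scores = [s for scores in groups.values() for s in scores]
    grand_mean = mean(all_scores)
    # Total variation of every score around the grand mean.
    ss_total = sum((s - grand_mean) ** 2 for s in all_scores)
    if ss_total == 0:
        raise ValueError("scores do not vary")
    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(
        len(scores) * (mean(scores) - grand_mean) ** 2
        for scores in groups.values()
    )
    return ss_between / ss_total


# Invented scale scores for students in two schools.
effect = eta_squared({"School A": [1, 2, 3], "School B": [4, 5, 6]})
```

The value lies between 0 and 1 and can be read as the proportion of variance in a scale score that is attributable to school membership.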

Predictive validity refers to the extent to which the scales can predict an outcome or behaviour that they theoretically should. In this study, it was predicted that the school climate would be related to student wellbeing. To provide support for predictive validity, simple correlation analysis was used to examine the bivariate relationships between the scales of the WHITS-P and the wellbeing scale.
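A simple (Pearson) correlation between a scale score and the wellbeing score can be computed as sketched below. This assumes one mean score per student on each measure; the function name and the numbers are illustrative only.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mx, my = mean(x), mean(y)
    # Sum of cross-products and sums of squares around each mean.
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("one of the variables does not vary")
    return sxy / sqrt(sxx * syy)


# Invented per-student scores on one climate scale and on the wellbeing scale.
teacher_support = [2.0, 3.5, 4.0, 4.5, 5.0]
wellbeing = [2.5, 3.0, 3.5, 4.5, 4.0]
r = pearson_r(teacher_support, wellbeing)
```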

Results

This section reports the results supporting translation validity, established during the development of the survey, and criterion validity.

Translation validity

Translation validity needs to include both content validity, to ensure the scales are theoretically based, and face validity, to ensure the items provide a clear representation of the construct. Translation validity focuses on the operationalisation of the scales, or how well the theoretical construct is translated into a measure.

Content validity

Content validity of the new survey was established by selecting the scales based on sound theoretical grounds. Scale selection involved a review of past research (to examine factors that affect important outcomes) and past surveys, and considered coverage of the four domains modified from Wang and Degol (2016): academic atmosphere, community, safety, and institutional environment. Six scales were extrapolated from the secondary school version of the What’s Happening In This School? (WHITS; Aldridge & Ala’i, 2013): Teacher Support, Peer Connectedness, School Connectedness, Affirming Diversity, Rule Clarity, and Reporting and Seeking Help. These scales represent three of the four domains and have evidence of strong psychometric properties (Lenz et al., 2021). To provide coverage of the fourth domain, academic atmosphere, two additional scales were developed to assess Support for Learning and High Expectations for success.

At this stage, a total of 41 items were developed to assess the eight scales. Extrapolated items were modified to ensure the concept and language were suitable for primary school students. A decision against using negatively worded items was made because, even though they are used to guard against passive responses, they can create threats to validity and reliability (Chyung et al., 2018). Further, negatively worded items can be confusing for younger students and are not necessarily interpreted as the opposite of their positive equivalents, as reverse scoring assumes.

To verify content validity, the expert panel (Stage 3) reviewed the scales and items. The panel provided feedback regarding whether the constructs were appropriate for primary school students and whether they were malleable (something that could be changed at the school level). In both cases, the panel members were satisfied with the selected constructs. Panel members also provided feedback regarding the items in terms of a) whether they were a good measure of the constructs and b) whether the wording was accessible to primary school students. Based on advice from the panel, four of the 41 items were omitted and other items, identified as too complex or including language that was not accessible to younger students, were reworded. In addition, two of the panel members commented that the survey was too long for younger students. However, decisions about a further reduction to the number of items were made during the trials with students.

Face validity

The item trials (involving the remaining 37 items) with students were carried out sequentially at three schools (Stage 5). In each trial, the researchers sat with students as they responded to hard copies of the surveys, discussing reasons for their responses, determining their understanding of items, and refining items using their language. The trials provided opportunities to use student feedback to simplify or change the language used and to confirm that students attributed similar meanings to the items. Conducting the trials in different schools and at different times allowed the researchers to use feedback from students in one school to adjust, and sometimes omit, items before trialling a refined version to students in the next school.

As a result of the trials, six of the 37 items were omitted because they were found to be problematic. Further, the Affirming Diversity scale was identified as unusable with younger students (as the concepts were not understood); it was not included in the version administered to students in middle primary (years 3 and 4) but was retained for those in years 5 and 6.

The trials also allowed us to examine the utility of the five-point frequency response format we had opted to use. This format has been used in past school climate surveys developed for use in secondary schools (e.g., Aldridge & Ala'i, 2013), as it allows students to consider how often each statement takes place. The results of the initial trial suggested that, although upper primary students (ages 11 and 12 years) were able to use a five-point response format effectively, some students in middle primary could not. In response, the second trial involved a three-point format with middle primary students. However, in the second trial, approximately 15% of students placed a mark between two response options (e.g. between sometimes and almost always), suggesting that they were dissatisfied with a three-point scale. Based on this, a decision was made to utilise what appeared to be a three-point scale of almost never, sometimes, and almost always, and include two additional points, one between almost never and sometimes and one between sometimes and almost always. This format reduced confusion and provided students with a greater range of response options. Further, to provide a visual prompt, emojis were used to describe the three main response options. For example, a sad face emoji was included above the words ‘almost never’. This stage also confirmed that primary-aged students were able to use the side-by-side format to indicate their perceptions of the actual and preferred school climate.

A pilot test involved the administration of the survey to one year three class (Stage 6). This pilot test allowed the researchers to trial the online version of the survey, determine the length of time to administer it, and assess the suitability of the administration instructions designed for teachers. The pilot test indicated that students could effectively access and use the online version and that the survey took between 15 and 25 minutes to complete. The pilot test also revealed that teachers would benefit from further resources to assist with administration. As a result, we developed an instructional PowerPoint, to accompany the administration guide, for teachers to share with students prior to administration.

Criterion validity

To examine the criterion validity of the WHITS-P, the data collected during the large-scale administration were used (n = 1193 students in 9 schools). All students responded to 27 items in seven scales and upper primary students responded to an additional four items for the Affirming Diversity scale. The results reported in this section support convergent, discriminant, concurrent, and predictive validity.

Convergent validity

The factor structure and internal consistency were examined to provide evidence to support convergent validity. Principal axis factor analyses (with oblique rotation), using only the items responded to by all students (27 items in seven scales), were carried out separately for actual and preferred responses. Despite the omission of 10 items (based on findings from the expert panel and multiple trials), the 27-item survey was still considered too long for some of the younger students. In response, and using the results of an initial factor analysis, an additional item was removed from each scale (except the High Expectations scale, which only had three items to start with).

A second factor analysis, using only the remaining 21 items, was used to confirm the suitability of the factor structure. Table 2 shows that 293 of the 294 possible factor loadings (21 items × seven scales × 2 response types, actual and preferred) met the criteria of loading at 0.4 or more on their own scale and less than 0.4 on all other scales. Whilst Item 3 of the School Connectedness scale (for the preferred response) did not meet the criteria, the decision was made to retain it because, first, the factor loading for the actual responses was acceptable, second, the item did not load at 0.4 or more on any other scale and, third, the internal consistency reliability (reported below) was acceptable (α = 0.769). The results, reported at the bottom of Table 2, suggest the total proportion of variance explained by the seven WHITS-P scales for students’ actual experience was 68.531% and for their preferred experience was 69.729%.
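The retention rule described above (a loading of at least 0.4 on an item's own scale and below 0.4 on every other scale) can be expressed as a short check. The following is a minimal sketch rather than the analysis code used in the study; the function name `check_loadings`, the toy loading matrix, and the use of absolute values are illustrative assumptions.

```python
def check_loadings(loadings, own_scale, cutoff=0.4):
    """Return the indices of items that fail the loading criterion.

    loadings  -- one row per item, holding that item's loading on each factor
                 (a hypothetical pattern matrix, not data from the study)
    own_scale -- for each item, the index of the scale it was written for
    """
    failures = []
    for i, (row, own) in enumerate(zip(loadings, own_scale)):
        loads_on_own = abs(row[own]) >= cutoff
        cross_loads = any(abs(v) >= cutoff for j, v in enumerate(row) if j != own)
        if not loads_on_own or cross_loads:
            failures.append(i)
    return failures


# Toy example with three items and two factors (values invented for illustration).
toy_loadings = [
    [0.72, 0.10],  # item 0 loads cleanly on factor 0
    [0.35, 0.12],  # item 1 misses the cutoff on its own factor
    [0.08, 0.66],  # item 2 loads cleanly on factor 1
]
toy_own_scale = [0, 0, 1]
failing_items = check_loadings(toy_loadings, toy_own_scale)

# Number of loadings inspected in the 21-item analysis:
# 21 items x 7 scales x 2 response types (actual and preferred).
n_loadings = 21 * 7 * 2
```

In this toy case only item 1 is flagged, and `n_loadings` reproduces the 294 loadings counted in the text.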

The Cronbach alpha coefficients, reported in Table 3, were considered acceptable for six of seven scales for the actual responses (α > 0.7) and for all scales for the preferred responses (α > 0.6). For the exception, the actual responses for High Expectations, the Cronbach alpha coefficient was 0.53. Because the factor loadings (reported above) for the items in this scale strongly support convergent validity, a decision was made to retain it.
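For readers less familiar with the coefficient, Cronbach's alpha for a scale with k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total scale score. The sketch below computes it with the Python standard library; the function name and the five toy respondents are assumptions made for illustration and are not data from the study.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for one scale.

    item_scores -- list of respondents, each a list of k item scores
    """
    k = len(item_scores[0])
    columns = list(zip(*item_scores))              # one tuple of scores per item
    sum_item_variances = sum(variance(col) for col in columns)
    totals = [sum(row) for row in item_scores]     # each respondent's scale total
    return (k / (k - 1)) * (1 - sum_item_variances / variance(totals))


# Five hypothetical respondents answering a three-item scale (1 to 5).
toy_scale = [
    [4, 5, 4],
    [2, 3, 3],
    [5, 5, 4],
    [1, 2, 2],
    [3, 3, 4],
]
alpha = cronbach_alpha(toy_scale)
```

Because alpha depends on the number of items, short scales such as the three-item High Expectations scale tend to yield lower coefficients than longer scales with similar inter-item correlations.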

Discriminant validity

The component correlation matrix generated during oblique rotation was used to support the discriminant validity of the WHITS-P. The correlations, reported in Table 3, ranged from 0.101 to 0.416 for the actual responses and from 0.267 to 0.459 for the preferred responses. All correlations were relatively low and well below the cut-off of 0.8 which, when combined with the results of the factor structure reported above, provides strong support for the discriminant validity of the WHITS-P.
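The cut-off comparison described above amounts to finding the largest off-diagonal correlation and confirming that it is below 0.8. A minimal sketch follows; the helper name and the 3 × 3 toy matrix are assumptions (the 0.101 and 0.416 entries echo the reported range for the actual responses, while 0.300 is invented).

```python
def max_off_diagonal(corr):
    """Largest absolute correlation between two different scales."""
    n = len(corr)
    return max(abs(corr[i][j]) for i in range(n) for j in range(n) if i != j)


# Hypothetical correlation matrix for three scales.
toy_corr = [
    [1.000, 0.101, 0.300],
    [0.101, 1.000, 0.416],
    [0.300, 0.416, 1.000],
]
largest = max_off_diagonal(toy_corr)
below_cutoff = largest < 0.8
```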

Concurrent validity

A one-way ANOVA with school membership as the independent variable was used to support concurrent validity. The results, reported in Table 4, were statistically significant (p < 0.01) for six of the seven scales for the actual responses and for all seven scales for the preferred responses. These findings suggest that, except for Reporting and Seeking Help, students responded in similar ways to students in the same school but differently from those in other schools.
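To illustrate the logic of the test, a one-way ANOVA compares the variation between school means with the variation among students within schools. The sketch below computes the F ratio from first principles using only the standard library; the function name and the three toy schools are assumptions for illustration. In practice a statistics package would also supply the p-value (for example, `scipy.stats.f_oneway` returns both the statistic and the p-value).

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [x for g in groups for x in g]
    grand_mean = sum(all_scores) / len(all_scores)
    means = [sum(g) / len(g) for g in groups]

    # Variation of school means around the grand mean, weighted by school size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of students around their own school's mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


# Hypothetical scale scores for students in three schools.
school_a = [3, 4, 5]
school_b = [1, 2, 3]
school_c = [4, 5, 6]
f_ratio, df_b, df_w = one_way_anova_f([school_a, school_b, school_c])
```

A large F ratio indicates that scores differ more between schools than within them, which is the pattern taken here as evidence that a scale can differentiate between schools.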

Predictive validity

Finally, to support the predictive validity of the WHITS-P, simple correlation analysis was used to examine the relationships between the wellbeing scale and the actual responses of the WHITS-P. The results, reported in Table 4, suggest that the correlations were statistically significant (p < 0.01) for all seven WHITS-P scales.
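A simple correlation of this kind is the Pearson coefficient between each student's score on a school climate scale and their wellbeing score. The following sketch shows the calculation; the function name and the five toy pairs of scores are assumptions for illustration, not data from the study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for five students.
climate_scale = [1, 2, 3, 4, 5]
wellbeing = [2, 4, 5, 4, 5]
r = pearson_r(climate_scale, wellbeing)
```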

Confirming an upper primary version with Affirming Diversity

Although the aim of the study was to develop and validate a survey for use with primary school students in years 3 to 6, the importance of the Affirming Cultural Diversity scale led to separate factor analyses using only the responses of upper primary students. An initial factor analysis involved 31 items (27 of which were administered to all students and four additional items, for Affirming Diversity, administered only to upper primary students). The results suggested that two items were problematic (item 2 for the School Connectedness scale and item 1 for the Affirming Cultural Diversity scale). These two items were removed and a second factor analysis involving 29 items was run. The results, reported in Table 5, indicate that, of the 464 possible loadings (29 items × 8 scales × 2 response types, actual and preferred), one loading (the preferred response to item one for the School Connectedness scale) did not meet the criteria. The decision to retain this item was made for two reasons. First, the loading for the actual responses for this item met the criteria and, second, the preferred responses for this item did not load at 0.4 or more on any other scale. The total variance accounted for by the eight scales of the upper primary version was 68.05% for the actual responses and 69.12% for the preferred responses.

Limitations

Although the researchers went to great lengths to establish a valid and reliable school climate survey, as in all studies, there are some limitations. First, because the study was carried out in one country, schools and researchers outside Australia should consider the suitability of the survey in terms of the needs and language of their respective countries. Second, because the sample for the trials and main administration did not include students in remote Australia, generalising these findings to schools with large populations of Indigenous students should be done with caution. For these schools, it is important to examine the face validity of the items before use. Third, use of the survey as a diagnostic tool was not examined in the study. Whilst school climate factors are rarely used as measures of effectiveness (Bear et al., 2011), many programs and interventions aimed at preventing bullying, behaviour problems and class management make improving school climate a focus (e.g. Wang et al., 2013). Therefore, future studies might consider examining the efficacy of using the school climate factors delineated in the WHITS-P as process criteria for evaluating the effectiveness of programs.

Discussion and implications

The WHITS-P was designed to provide a subjective portrayal of students’ experiences of the school climate. With 21 items in seven scales, the WHITS-P provides schools with an economical survey that can be administered across years 3 to 6. In addition, the inclusion of a slightly longer version for years 5 and 6 allows schools to examine perceptions of Affirming Cultural Diversity and includes additional items in some constructs.

Operationalising constructs for younger students was challenging: the wording and complexity of the items not only had to accommodate the reading and comprehension levels of younger students, but the length of the survey had to be appropriate for their attention span. Whilst other school climate surveys have been developed for use in primary schools, to the researchers’ knowledge, only one other provides information about development (Griffith, 1999). In this study, the multistage approach, although not new (e.g. Velayutham et al., 2011), allowed translation validity to be established. First, feedback from an expert panel supported the viability of scales in terms of malleability and usefulness, and a review of the literature ensured coverage of the psychosocial school climate across four domains (e.g. Wang & Degol, 2016). Second, the results of multiple trials with students suggest that refining items over several iterations and drawing on student input to enhance the wording support face validity. Our findings add to the literature because, although the use of multiple trials in survey development has not been widely reported, the results of analyses used to support the criterion validity suggest that this approach was effective with younger students.

The results provide strong evidence to support the criterion validity of the WHITS-P in terms of convergent, discriminant, concurrent and predictive validity. These findings were consistent with those reported using the original WHITS with secondary students (Aldridge & Ala’i, 2013), suggesting the WHITS-P can be used with confidence to evaluate students’ perceptions of school climate in primary schools.

Of note, our findings support the concurrent validity of the WHITS-P scales, indicating that students’ responses were sufficiently nuanced to allow differentiation between the responses of students in different schools. This finding supports those of studies that used the original WHITS (e.g. Aldridge & Ala’i, 2013). However, many studies do not report concurrent validity and, where they do, it is reported using within-school groups (e.g. Keiser & Schulte, 2007) or the results of correlation analysis (e.g. with achievement; Bear et al., 2011), which, arguably, is more aligned with predictive validity. The concurrent validity reported in our study is important given the increasing use of school climate surveys as diagnostic tools across systems. The findings suggest that educational systems seeking to use school climate data to inform strategic and planning decisions with respect to individual schools or groups of schools can do so with confidence using the WHITS-P.

Evidence to support the predictive validity of the WHITS-P adds weight to past studies that have found that school climate perceptions are related to student outcomes. From a social-ecological perspective (Moos, 1974), the predictive validity suggests that, for schools seeking to improve behavioural, wellbeing, or developmental factors, using the WHITS-P could provide information about school climate factors that could be leveraged to support these efforts.

Conclusion

From an ecological perspective, improving the school climate focuses on monitoring and improving the environment to enhance student outcomes across the whole school population, as opposed to focusing on individual diagnosis and treatment (Kloos & Shah, 2009; Moos, 1974, 1976). Given that small fluctuations in school climate represent a substantial difference in students’ experiences (Capp et al., 2022), the notion of leveraging school climate factors could be a sine qua non for achieving the goals of system-wide and national policies.

The development of the WHITS-P provides education systems, individual schools, and researchers with a reliable and economical tool for assessing and understanding primary school students’ experiences. Use of the WHITS-P in schools and across education systems has the potential to guide strategic and planning decisions leading to a proactive (rather than reactive) approach to school improvement that makes changes at the level of culture. Further, the WHITS-P provides a diagnostic tool that can be used to measure and improve environments or used as process criteria for assessing the efficacy of interventions and programs.

References

Adeogun, A. A., & Olisaemeka, B. U. (2011). Influence of school climate on students’ achievement and teachers’ productivity for sustainable development. International Journal of Education and Research, 8(4), 552–557.

Aldridge, J., & Ala’i, K. (2013). Assessing students’ views of school climate: Developing and validating the What’s Happening In This School? (WHITS) questionnaire. Improving Schools, 16(1), 47–66. https://doi.org/10.1177/1365480212473680

Aldridge, J. M., & Fraser, B. J. (2016). Teachers’ views of their school climate and its relationship with teacher self-efficacy and job satisfaction. Learning Environments Research, 19, 291–307. https://doi.org/10.1007/s10984-015-9198-x

Aldridge, J. M., Fraser, B. J., Fozdar, F., Ala’i, K., Earnest, J., & Afari, E. (2016). Students’ perceptions of school climate as determinants of wellbeing, resilience and identity. Improving Schools, 19, 5–26. https://doi.org/10.1177/1365480215612616

Aldridge, J. M., & McChesney, K. (2018). The relationships between school climate and adolescent mental health and wellbeing: A systematic literature review. International Journal of Educational Research, 88, 121–145. https://doi.org/10.1016/j.ijer.2018.01.012

Aldridge, J. M., & McChesney, K. (2021). Parents’ and caregivers’ perceptions of the school climate: Development and validation of the Parent and Caregiver Survey (PaCS). Learning Environments Research, 24, 23–41. https://doi.org/10.1007/s10984-020-09308-z

Aldridge, J. M., McChesney, K., & Afari, E. (2018). The relationships between school climate, bullying and delinquent behaviours. Learning Environments Research, 21, 153–172. https://doi.org/10.1007/s10984-017-9249-6

Aldridge, J. M., & McLure, F. I. (2023). Preparing for change in schools: Barriers and supports to educational reform—A systematic literature review. Leadership and Policy in Schools. https://doi.org/10.1080/15700763.2023.2171439

Aldridge, J. M., Bell, L. M., Fraser, B. J., & Dorman, J. (2012). Using a new learning environment questionnaire for reflection in teacher action research. Journal of Science Teacher Education, 23, 259–290.

Australian Education Act 2013 (Cth). Act No. 67 of 2013.

Bear, G. G., Gaskins, C., Blank, J., & Chen, F. F. (2011). Delaware School Climate Survey–Student: Its factor structure, concurrent validity, and reliability. Journal of School Psychology, 49, 157–174. https://doi.org/10.1016/j.jsp.2011.01.001

Bear, G. G., Yang, C., Mantz, L. S., & Harris, A. B. (2017). School-wide practices associated with school climate in elementary, middle, and high schools. Teaching and Teacher Education, 63, 372–383. https://doi.org/10.1016/j.tate.2017.01.012

Bear, G. G., Yang, C., Pell, M., & Gaskins, C. (2014). Validation of a brief measure of teachers’ perceptions of school climate: Relations to student achievement and suspensions. Learning Environments Research, 17(3), 339–354.

Berkowitz, R., Moore, H., Astor, R., & Benbenishty, R. (2017). A research synthesis of the associations between socioeconomic background, inequality, school climate, and academic achievement. Review of Educational Research, 87, 425–469. https://doi.org/10.3102/0034654316669821

Bronfenbrenner, U. (1989). Ecological systems theory. Annals of Child Development, 6, 187–249.

Brown, T. A. (2006). Confirmatory factor analysis for applied research. Guilford Press.

Burton, C. M., Marshal, M. P., & Chisolm, D. J. (2014). School absenteeism and mental health among sexual minority youth and heterosexual youth. Journal of School Psychology, 52, 37–47. https://doi.org/10.1016/j.jsp.2013.12.001

Capp, G. P., Avi Astor, R., & Moore, H. (2022). Positive school climate for school staff? The roles of administrators, staff beliefs, and school organization in high and low resource school districts. Journal of Community Psychology, 50(2), 1060–1082.

Chyung, S. Y., Barkin, J. R., & Shamsy, J. A. (2018). Evidence-based survey design: The use of negatively worded items in surveys. Performance Improvement, 57(3), 16–25.

Cohen, J. (2006). Social, emotional, ethical, and academic education: Creating a climate for learning, participation in democracy, and well-being. Harvard Educational Review, 76, 201–237.

Cohen, J., McCabe, E. M., Michelli, N. M., & Pickeral, T. (2009). School climate: Research, policy, practice, and teacher education. Teachers College Record, 111(1), 180–213.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297–334. https://doi.org/10.1007/bf02310555

Deemer, S. (2004). Classroom goal orientation in high school classrooms: Revealing links between teacher beliefs and classroom environments. Educational Research, 46, 73–90.

Ding, C., Liu, Y., & Berkowitz, M. (2011). The study of factor structure and reliability of an abbreviated school climate survey. Canadian Journal of School Psychology, 26(3), 241–256. https://doi.org/10.1177/0829573511414005

Duckenfield, M., & Reynolds, B. (2013). School climate and dropout prevention (School Climate Practice Brief). National School Climate Center.

Emmons, C. L., Haynes, N. M., & Comer, J. P. (2002). The school climate survey revised: Elementary and middle school version (Revised). Yale University Child Study Center.

Field, A. (2009). Discovering statistics using SPSS. Sage.

Fraser, B. J. (2012). Classroom learning environments. In Fraser, B., Tobin, K. G., & McRobbie, C. J. (Eds.), Second international handbook of science education (pp. 1191–1239). New York: Springer.

Fraser, B. J., & Fisher, D. L. (1982). Predictive validity of My Class Inventory. Studies in Educational Evaluation, 8(2), 129–140.

Frazier, S. L., Mehta, T. G., Atkins, M. S., Glisson, C., Green, P. D., Gibbons, R. D., et al. (2015). The social context of urban classrooms: Measuring student psychological climate. The Journal of Early Adolescence, 35(5–6), 759–789. https://doi.org/10.1177/0272431615570056

Freiberg, H. J. (1999). Three creative ways to measure school climate and next steps. In H. J. Freiberg (Ed.), School climate: Measuring, improving and sustaining healthy learning environments (pp. 208–218). Falmer Press.

Furlong, M. J., Greif, J. L., Bates, M. P., Whipple, A. D., Jimenez, T. C., & Morrison, R. (2005). Development of the California School Climate and Safety Survey-Short Form. Psychology in the Schools, 42(2), 137–149. https://doi.org/10.1002/pits.20053

Gage, N. A., Larson, A., & Chafouleas, S. M. (2016). The Meriden School Climate Survey-Student Version: Preliminary evidence of reliability and validity. Assessment for Effective Intervention, 41(2), 67–78. https://doi.org/10.1177/1534508415596960

García-Carrión, R., Villarejo-Carballido, B., & Villardón-Gallego, L. (2019). Children and adolescents’ mental health: A systematic review of interaction-based interventions in schools and communities. Frontiers in Psychology, 10, 918–928.

Griffith, J. (1999). School climate as “social order” and “social action”: A multi-level analysis of public elementary school student perceptions. Social Psychology of Education, 2(3–4), 339–369. https://doi.org/10.1023/A:1009657422344

Harrington, M. (2013). Funding the national plan for school improvement: An explanation (Background note). Parliamentary Library, Canberra.

Haynes, N. M., Emmons, C., & Comer, J. P. (1994). School Climate Survey: Elementary and middle school version. Yale University Child Study Center.

Hunter, J. (2022). Australian school education is falling short. Grattan Institute. https://grattan.edu.au/news/australian-school-education-is-falling-short/

Jia, Y., Konold, T. R., & Cornell, D. (2016). Authoritative school climate and high school dropout rates. School Psychology Quarterly, 31, 289–303. https://doi.org/10.1037/spq0000139

Johnson, B., & Stevens, J. J. (2006). Student achievement and elementary teachers’ perceptions of school climate. Learning Environments Research, 9(2), 111–122. https://doi.org/10.1007/s10984-006-9007-7

Keiser, K. A., & Schulte, L. E. (2007). The development and validation of the Elementary School Ethical Climate Index. The School Community Journal, 17(2), 73–88.

Kloos, B., & Shah, S. (2009). A social ecological approach to investigating relationships between housing and adaptive functioning for persons with serious mental illness. American Journal of Community Psychology, 44(3–4), 316–326.

Kwong, D., & Davis, J. R. (2015). School climate for academic success: A multilevel analysis of school climate and student outcomes. Journal of Research in Education, 25(2), 68–81.

Lenz, A. S., Rocha, L., & Aras, Y. (2021). Measuring school climate: A systematic review of initial development and validation studies. International Journal for the Advancement of Counselling, 43, 48–62. https://doi.org/10.1007/s10447-020-09415-9

Lewin, K. (1936). Principles of topological psychology. McGraw-Hill.

Long, E., Zucca, C., & Sweeting, H. (2021). School climate, peer relationships, and adolescent mental health: A social-ecological perspective. Youth & Society, 53(8), 1400–1415. https://doi.org/10.1177/0044118X20970232

López, V., Torres-Vallejos, J., Ascorra, P., Villalobos-Parada, B., Bilbao, M., & Valdés, R. (2018). Construction and validation of a classroom climate scale: A mixed methods approach. Learning Environments Research, 21(3), 407–422. https://doi.org/10.1007/s10984-018-9258-0

Marraccini, M. E., Fang, Y., Levine, S. P., et al. (2020). Measuring student perceptions of school climate: A systematic review and ecological content analysis. School Mental Health, 12, 195–221. https://doi.org/10.1007/s12310-019-09348-8

Modin, B., & Östberg, V. (2009). School climate and psychosomatic health: A multilevel analysis. School Effectiveness and School Improvement, 20(4), 433–455. https://doi.org/10.1080/09243450903251507

Moos, R. H. (1984). Context and coping: Toward a unifying conceptual framework. American Journal of Community Psychology, 12, 1–36.

Moos, R. H. (1974). Evaluating treatment environments: A social ecological approach. Wiley.

Moos, R. H. (1976). The human context: Environmental determinants of behavior. Wiley.

O’Brennan, L. M., Bradshaw, C. P., & Furlong, M. J. (2014). Influence of classroom and school climate on teacher perceptions of student problem behavior. School Mental Health, 6, 125–136. https://doi.org/10.1007/s12310-014-9118-8

Patalay, P., & Fitzsimons, E. (2016). Correlates of mental illness and wellbeing in children: Are they the same? Results from the UK Millennium Cohort Study. Journal of the American Academy of Child & Adolescent Psychiatry, 55(9), 771–783.

Reaves, S., McMahon, S. D., Duffy, S. N., & Ruiz, L. (2018). The test of time: A meta-analytic review of the relation between school climate and problem behavior. Aggression and Violent Behavior, 39, 100–108. https://doi.org/10.1016/j.avb.2018.01.006

Riekie, H., & Aldridge, J. M. (2017). The role of the school climate in school students’ mental health and identity formation: A South Australian study. British Educational Research Journal, 43(1), 95–123. https://doi.org/10.1002/berj.3254

Rudasill, K. M., Snyder, K. E., Levinson, H. L., & Adelson, J. (2018). Systems view of school climate: A theoretical framework for research. Educational Psychology Review, 30(1), 35–60.

Sahlberg, P. (2007). Education policies for raising learning: The Finnish approach. Journal of Education Policy, 22(2), 147–171.

Shindler, J., Jones, A., Williams, A. D., Taylor, C., & Cardenas, H. (2016). The school climate-student achievement connection: If we want achievement gains, we need to begin by improving the climate. Journal of School Administration Research and Development, 1(1), 9–16.

Sink, C. A., & Spencer, L. R. (2005). My Class Inventory-Short Form as an accountability tool for elementary school counselors to measure classroom climate. Professional School Counseling, 9, 37–48. https://doi.org/10.1177/2156759X0500900112

Steffgen, G., Recchia, S., & Viechtbauer, W. (2013). The link between school climate and violence in school: A meta-analytic review. Aggression and Violent Behavior, 18(2), 300–309. https://doi.org/10.1016/j.avb.2012.12.001

Stigler, M., Neusel, E., & Perry, C. (2011). School-based programs to prevent and reduce alcohol use among youth. Alcohol Research and Health, 34(2), 157–162.

Thapa, A., Cohen, J., Guffey, S., & Higgins-D’Alessandro, A. (2013). A review of school climate research. Review of Educational Research, 83(3), 357–385.

Trickett, E. J., & Quinlan, D. M. (1979). Three domains of classroom environment: Factor analysis of the Classroom Environment Scale. American Journal of Community Psychology, 7, 279–291. https://doi.org/10.1007/BF00890692

Uline, C., & Tschannen-Moran, M. (2008). The walls speak: The interplay of quality facilities, school climate, and student achievement. Journal of Educational Administration, 46(1), 55–73.

Van Horn, M. L. (2003). Assessing the unit of measurement for school climate through psychometric and outcome analysis of the school climate survey. Educational and Psychological Measurement, 63(6), 1002–1019. https://doi.org/10.1177/0013164403251317

Velayutham, S., Aldridge, J., & Fraser, B. (2011). Development and validation of an instrument to measure students’ motivation and self-regulation in science learning. International Journal of Science Education, 33(15), 2159–2179.

Walberg, H. J., & Haertel, E. (1980). Research integration: Introduction and overview. Evaluation in Education: International Progress, 4(1), 5–12.

Wang, C., Berry, B., & Swearer, S. M. (2013). The critical role of school climate in effective bullying prevention. Theory into Practice, 52(4), 296–302. https://doi.org/10.1080/00405841.2013.829735

Wang, M. T., & Degol, J. (2016). School climate: A review of the construct, measurement, and impact on student outcomes. Educational Psychology Review, 28, 315–352. https://doi.org/10.1007/s10648-015-9319-1

White, N., La Salle, T., Ashby, J. S., & Meyers, J. (2014). A brief measure of adolescent perceptions of school climate. School Psychology Quarterly, 29(3), 349–359. https://doi.org/10.1037/spq0000075

Zullig, K. J., Koopman, T. M., Patton, J. M., & Ubbes, V. A. (2010). School climate: Historical review, instrument development, and school assessment. Journal of Psychoeducational Assessment, 28(2), 139–152. https://doi.org/10.1177/0734282909344205

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Conflict of interest

Neither author has financial or non-financial conflicts of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aldridge, J.M., Blackstock, M.J. Assessing students’ perceptions of school climate in primary schools. Learning Environ Res (2024). https://doi.org/10.1007/s10984-024-09492-2

DOI: https://doi.org/10.1007/s10984-024-09492-2