Abstract

We enrich the theory of variational inequalities in the case of an aggregative structure by implementing recent results obtained by using the Selten–Szidarovszky technique. We derive existence, semi-uniqueness and uniqueness results for solutions and provide a computational method. As an application we derive very powerful practical equilibrium results for Nash equilibria of sum-aggregative games and illustrate with Cournot oligopolies.


1 Introduction

When dealing with optimisation, equilibrium or related problems, a usual program is to study existence, semi-uniqueness (i.e. there is at most one solution), uniqueness and computation of solutions. For such problems, variational inequalities provide a unifying, natural, simple and quite novel setting. The systematic study of this subject began in the early 1960s with the influential work of Hartman and Stampacchia in [9] for the study of (infinite-dimensional) partial differential equations. The present theory of (finite-dimensional) variational inequalities has found applications in mathematical programming, engineering, economics and finance.Footnote 1 In particular, this theory applies to Nash equilibria of games in strategic form. However, various quite sophisticated recent results for sum-aggregative games with pseudo-concave conditional payoff functions do not follow from this theory. The results we have in mind here concern uniqueness results as in [11], which are derived by what was called ‘the Selten–Szidarovszky technique’ (SS-technique) in [26].

The origin of the SS-technique can be found in the book [21] of Selten dealing with aggregative games and in the article [22] of Szidarovszky dealing with Cournot oligopolies.Footnote 2 The aim of the present article is to go a theoretical step further by integrating an advanced version of the SS-technique into the theory of variational inequalities. For more on the SS-technique, see [4, 11].

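We consider two types of variational inequalities that are special cases of the following quite general form

$$\begin{aligned} \textrm{VI}(X,\textbf{F}): \;\; \textbf{F}(\textbf{x}^{\star }) \cdot (\textbf{x} - \textbf{x}^{\star }) \ge 0 \;\; (\textbf{x} \in X), \end{aligned} \tag{1}$$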

where X is a non-empty subset of \({\mathbb {R}}^n\) and \(\textbf{F} = (F_1,\ldots ,F_n): X \rightarrow {\mathbb {R}}^n\) is a function. A solution of \(\textrm{VI}(X,\textbf{F})\) is defined as an \(\textbf{x}^{\star } \in X\) that satisfies all inequalities in (1).Footnote 3 Both cases relate to the aggregative variational inequality \(\textrm{VI}(\textbf{X},\textbf{T})\) with \(\textbf{X} = {\mathbb {R}}^n_+\) or \(\textbf{X} = \textsf {X}_{l=1}^n [0,m_l]\) where, with \(N {{\; \mathrel {\mathop {:}}= \;}}\{1,\ldots ,n\}\), letting \(x_N {{\; \mathrel {\mathop {:}}= \;}}\sum _{l \in N} x_l\),

$$\begin{aligned} T_i(\textbf{x}) = - t_i(x_i,x_N) \;\; (i \in N). \end{aligned} \tag{2}$$

So here \(T_i\) depends on \(x_i\) and the aggregate \(x_N\). (A precise definition concerning \(t_i\) is in order.) One may refer to this problem as an ‘aggregative variational inequality’. In case \(\textbf{X} = {\mathbb {R}}^n_+\) this variational inequality specialises to a nonlinear complementarity problem and in the other case to a mixed nonlinear complementarity problem. We shall study the complete set of solutions and do not exclude boundary or degenerateFootnote 4 ones.

In Sect. 2 the results are obtained by applying standard theory for these aggregative variational inequalities. Although results in this section are not really new, they may contribute to the literature in the sense that the presentation there is efficient, self-contained and in addition critically reviews and repairs a result in [19]. The new and much more powerful results are obtained by the Selten–Szidarovszky technique in Sects. 3 and 4 assuming \(X = {\mathbb {R}}^n_+\). In Sect. 3, contrary to Sect. 4, there are no differentiability assumptions for the \(t_i\), just continuity is assumed. However, a discontinuity at (0, 0) always is allowed.

Many of the ideas behind the proofs of the results in Sects. 3 and 4 are based on [3, 11, 29] dealing with sum-aggregative games and [26] dealing with so-called abstract games. In particular Sect. 4.5 provides necessary and sufficient conditions for the variational inequality (2) to have a unique solution. As we shall see, the mathematics used in the SS-technique is quite elementary (although technical): for example, neither deep results like Brouwer’s fixed point theorem or the Gale–Nikaido theorem nor advanced theories like topological fixed point index theory are needed. The fundamental idea behind the SS-technique is the transformation of the n-dimensional problem for the aggregative variational inequality into a 1-dimensional fixed point problem for the correspondence \( b {{\; \mathrel {\mathop {:}}= \;}}\sum _i b_i\) where \(b_i: {\mathbb {R}}_+ \multimap \mathbb {R}\) is given byFootnote 5

$$\begin{aligned} b_i(y) {{\; \mathrel {\mathop {:}}= \;}}\{ x_i \in {\mathbb {R}}_+ \; | \; x_i \in [0,y] \, \wedge \, x_i t_i(x_i,y) = 0 \, \wedge \, t_i(x_i,y)\le 0 \}; \end{aligned}$$

see Theorem 3.2. Various assumptions made on the \(t_i\) relate to the so-called At Most Single Crossing From Above property; see Definition 3.1. In the differentiable case checking these assumptions may be straightforward. Theorem 3.2 also is at the base for computational methods as shown in [1, 24] for the Cournot oligopoly context.

Section 5 explains how the theory of (aggregative) variational inequalities applies to Nash equilibria of (sum-aggregative) games in strategic form. Economic games in strategic form, in particular, often have an aggregative structure; this concerns, among others, oligopolistic, public good, cost-sharing, common resource, contest and rent-seeking games (e.g. see [3, 27]). The most important results concerning Nash equilibria of sum-aggregative games are in Theorem 5.1, which provides a very practical uniqueness result, and Theorem 4.3, which is, as illustrated in Sect. 5.4, at the base for games with a possible discontinuity at the origin. The latter is especially important for contest and rent-seeking games and in fact provides a (very abstract) generalisation and improvement of the results in [10, 23]. Neither theorem uses explicit pseudo-concavity conditions for conditional payoff functions (which may not be easy to verify in various applications); in fact such conditions hold implicitly. In doing so, the game theoretic results in [11] are improved upon.

When one looks at the articles on Cournot oligopoly theory, it becomes clear that generalised convexity properties of the price function play an important role in more sophisticated results; also Assumption (c) for the \(t_i\) in Theorem 5.1 is closely related to such properties.Footnote 6 In this context it may be interesting to note that minima of various (pre)invex functions (see [17, 18]) can be characterised by so-called variational-like inequalities.

There are three appendices: on variational inequalities, on smoothness issues and on various types of matrices.

2 Standard Technique

2.1 Setting

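With \(\textrm{VI}(X,\textbf{F})\) the general variational inequality as in (1), we consider in this section

$$\begin{aligned} \overline{\textrm{AVI}}: \;\; \textrm{VI}(\textbf{X},\textbf{T}), \end{aligned}$$

where \(\textbf{X} = {\mathbb {R}}_+^n\) (unbounded case) or \(\textbf{X} = \textsf {X}_{l=1}^n [0,m_l]\) with \(m_l > 0\) (bounded case) and

$$\begin{aligned} T_i(\textbf{x}) {{\; \mathrel {\mathop {:}}= \;}}- t_i(x_i,x_N) \;\; (i \in N), \end{aligned}$$

with \(t_i: {\mathbb {R}}_+ \times {\mathbb {R}}_+ \rightarrow {\mathbb {R}}\) (unbounded case) and \(t_i: [0,m_i] \times [0, \sum _{l=1}^n m_l] \rightarrow {\mathbb {R}}\) (bounded case). Further we suppose \(n \ge 2\). Let

$$\begin{aligned} N_> {{\; \mathrel {\mathop {:}}= \;}}\{ i \in N \; | \; t_i(0,0) > 0 \}. \end{aligned} \tag{3}$$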

Results in this section not being really new, we shall not use the designation ‘theorem’ for them.

2.2 Assumptions

In this section the following assumptions will occur.

- \(\overline{\textrm{CONT}}\). \(t_i\) is continuous.

- \(\overline{\textrm{DIFF}}\). \(T_i\) and \(t_i\) are continuously differentiable.

- \(\overline{\textrm{EC}}\). (For the unbounded case) There exists \(\overline{x}_i > 0\) such that \(t_i(x_i,y) < 0\) for every \((x_i,y) \in {\mathbb {R}}^2_+\) with \(\overline{x}_i \le x_i \le y\).Footnote 7 Let \(K_i {{\; \mathrel {\mathop {:}}= \;}}[0,\overline{x}_i]\).

For the unbounded case, with \(K_i\) as in Assumption \(\overline{\textrm{EC}}\), let \(\textbf{K} {{\; \mathrel {\mathop {:}}= \;}}\textsf {X}_{l=1}^n K_l\).

These assumptions are supposed to hold for every \(i \in N\).Footnote 8 Below we often consider situations where such an assumption just holds for a specific i; then we add [i] to the assumption; for example, \(\overline{\textrm{EC}}\)[i].

Some comments concerning \(\overline{\textrm{DIFF}}\) are in order. Of course, in \(\overline{\textrm{DIFF}}\), properties of \(T_i\) and \(t_i\) are related. However, it is comfortable to present them here as stated. As the domain of \(T_i \; (t_i)\) is not open, we interpret continuous differentiability in \(\overline{\textrm{DIFF}}\) as usual: there exists a continuously differentiable extension of \(T_i \; (t_i)\) to an open set.

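If Assumption \(\overline{\textrm{DIFF}}\) holds, then the Jacobi matrix \(\textbf{J}(\textbf{x})\) of \(\textbf{T}: \textbf{X} \rightarrow {\mathbb {R}}^n\) is given by

$$\begin{aligned} J_{ij}(\textbf{x}) = {\left\{ \begin{array}{ll} - (D_1 + D_2) t_i(x_i,x_N) &{} \text{ if } i = j, \\ - D_2 t_i(x_i,x_N) &{} \text{ if } i \ne j. \end{array}\right. } \end{aligned} \tag{4}$$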

2.3 Existence

Proposition 2.1

\(\textbf{0} \) is a solution of \(\mathrm {\overline{AVI}}\) if and only if \(N_> = \emptyset \). \(\diamond \)

Proof

\(\textbf{0}\) is a solution if and only if \(\textbf{T}(\textbf{0}) \cdot \textbf{x} \ge 0 \; (\textbf{x} \in \textbf{X})\), i.e. if and only if \(\sum _{i=1}^n t_i(0,0) x_i \le 0 \; (\textbf{x} \in \textbf{X})\). And this is equivalent with \(t_i(0,0) \le 0 \; (i \in N)\), i.e. with \(N_> = \emptyset \). \(\square \)

Lemma 2.1

Consider the unbounded case. Suppose \(\overline{\textrm{EC}}\) holds. Let B be a subset of \({\mathbb {R}}^n_+\) with \(\textbf{K} \subseteq B\). Each solution of \(\textrm{VI}(B,\textbf{T})\) is a solution of \(\textrm{VI}(\textbf{K},\textbf{T})\).Footnote 9\(\diamond \)

Proof

Suppose \(\textbf{x}^{\star }\) is a solution of \( \textrm{VI}(B,\textbf{T})\). As \(\textbf{K} \subseteq B\), it is sufficient to show that \(\textbf{x}^{\star } \in \textbf{K}\). By contradiction, suppose \(x^{\star }_j > \overline{x}_j\) for some j. \(\overline{\textrm{EC}}\) implies \(t_j(x_j^{\star }, x_N^{\star }) < 0\). We have \(\textbf{x}^{\star } \in B\) and \(\sum _i t_i(x_i^{\star }, x_N^{\star }) (x_i - x^{\star }_i) \le 0\) for all \(\textbf{x} \in B\). By taking \(x_i = x^{\star }_i \; (i \ne j)\) and \(x_j =0\), \( t_j(x_j^{\star }, x_N^{\star }) x^{\star }_j \ge 0\) follows. Thus, \(t_j(x_j^{\star }, x_N^{\star }) \ge 0\), a contradiction. \(\square \)

Proposition 2.2

Suppose Assumption \(\overline{\textrm{CONT}}\) holds.

1. Consider the bounded case. The set of solutions of \(\overline{\textrm{AVI}}\) is a non-empty compact subset of \({\mathbb {R}}^n\).

2. Consider the unbounded case. If Assumption \(\overline{\textrm{EC}}\) holds, then the set of solutions of \(\overline{\textrm{AVI}}\) is a non-empty compact subset of \({\mathbb {R}}^n\) and each solution belongs to \(\textbf{K}\). \(\diamond \)

Proof

1. \(\overline{\textrm{CONT}}\) implies that \(\textbf{T}\) is continuous. Now apply Lemma A.9 in “Appendix A”.

2. By Lemma 2.1 with \(B = {\mathbb {R}}^n_+\) each solution of \(\overline{\textrm{AVI}}\) is a solution of \(\textrm{VI}(\textbf{K},\textbf{T})\) and thus belongs to \(\textbf{K}\). Next we are going to apply Lemmas A.9 and A.10 with \(X= {\mathbb {R}}^n_+\). Fix an \(r > 0\) such that \(\textbf{K} \subseteq X_{r/2} \subset X_r \subseteq 137 \textbf{K}\).Footnote 10 As \(137 \textbf{K}\) is compact, Lemma A.9 guarantees that \(\textrm{VI}(137 \textbf{K}, \textbf{T})\) has a solution, say \(\textbf{x}^{\star }\). Lemma 2.1 guarantees that \(\textbf{x}^{\star } \in \textbf{K}\). So also \(\textbf{x}^{\star } \in X_{r/2} \subset X_r\). This implies that \(\textbf{x}^{\star }\) also is a solution of \(\textrm{VI}(X_r,\textbf{T})\) and that \({{\parallel \textbf{x}^{\star } \parallel }} \le r/2 < r\). By Lemma A.10 in “Appendix A”, \(\textbf{x}^{\star }\) is a solution of \(\overline{\textrm{AVI}}\).

In order to prove that the set of solutions of \(\overline{\textrm{AVI}}\) is compact, it is sufficient, as this set is, by part 1, bounded, that this set is closed. Well, this is guaranteed by Lemma A.7. \(\square \)

2.4 Semi-uniqueness

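Suppose Assumption \(\overline{\textrm{DIFF}}\) holds. Thus, by (4), in short notations,

$$\begin{aligned} \textbf{J} = - \begin{pmatrix} (D_1 + D_2) t_1 &{} D_2 t_1 &{} \cdots &{} D_2 t_1 \\ D_2 t_2 &{} (D_1 + D_2) t_2 &{} \cdots &{} D_2 t_2 \\ \vdots &{} &{} \ddots &{} \vdots \\ D_2 t_n &{} D_2 t_n &{} \cdots &{} (D_1 + D_2) t_n \end{pmatrix}. \end{aligned}$$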

It is important to realise that \(\textbf{J}(\textbf{x})\) may not be symmetric.

Proposition 2.3

Consider the unbounded case. Suppose Assumption \(\overline{\textrm{DIFF}}\) holds. Each of the following two conditions separately is sufficient for \(\mathrm {\overline{AVI}}\) to have at most one solution.

(a). The matrix \(\textbf{J}(\textbf{x})\) is for every \(\textbf{x} \in {\mathbb {R}}^n_+\) positive quasi-definite.Footnote 11

(b). The matrix \(\textbf{J}(\textbf{x})\) is for every \(\textbf{x} \in {\mathbb {R}}^n_+\) a P-matrix. \(\diamond \)

Proof

In order for \(\mathrm {\overline{AVI}}\) to have at most one solution, it is, by Lemma A.4 in “Appendix A”, sufficient to show that \(\textbf{T}: \textbf{X} \rightarrow {\mathbb {R}}^n\) is strictly monotone on \(\textbf{X}\) or a P-function on \(\textbf{X}\).

(a). Suppose \(\textbf{J}(\textbf{x})\) is positive quasi-definite for every \(\textbf{x} \in {\mathbb {R}}^n_+\). By Lemma A.5, \(\textbf{T}\) is strictly monotone.

(b). Suppose \(\textbf{J}(\textbf{x})\) is for every \(\textbf{x} \in {\mathbb {R}}^n_+\) a P-matrix. By Lemma A.6, \(\textbf{T}\) is a P-function.

\(\square \)

Now results for \(\overline{\textrm{AVI}}\) for the unbounded case are implied by conditions that guarantee that each matrix \(\textbf{J}(\textbf{x})\) is positive quasi-definite or a P-matrix. Such conditions can be found in “Appendix C”. The next proposition presents such a result.

Proposition 2.4

Consider the unbounded case. Suppose Assumption \(\overline{\textrm{DIFF}}\) holds. Sufficient for \(\mathrm {\overline{AVI}}\) to have at most one solution is that \((D_1 + D_2) t_i(x_i,x_N) < - (n-1) | D_2 t_i(x_i,x_N) | \) for every \(i \in N\) and \(\textbf{x} \in \textbf{X}\). \(\diamond \)

Proof

The proof is, by Proposition 2.3(b), complete if \(\textbf{J}(\textbf{x})\) is for every \(\textbf{x} \in \textbf{X}\) a P-matrix. Well, if \(\textbf{J}(\textbf{x})\) is strictly row diagonally dominant with positive diagonal entries, then it is a P-matrix. By (4), this specialises to the requirement that for every \(\textbf{x} \in \textbf{X}\) and \(i \in N\): \( (D_1 + D_2) t_i(x_i,x_N) < 0\) and \( (D_1 + D_2) t_i(x_i,x_N) < - (n-1) \mid D_2 t_i(x_i,x_N) \mid \), i.e. to \( (D_1 + D_2) t_i(x_i,x_N) < - (n-1) \mid D_2 t_i(x_i,x_N) \mid \). \(\square \)

Clearly, as n grows, the inequality in this proposition becomes more difficult to satisfy. Note also that, by Proposition 2.1, if we assume in addition that \(N_> \ne \emptyset \), then we obtain the result that if \(\mathrm {\overline{AVI}}\) has a solution, then this solution is unique and nonzero.
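The dependence on n can be made concrete with a small numerical sketch. It assumes a hypothetical linear choice \(t_i(x_i,y) = a - b y - b x_i - c\) with \(b = 1\) (not taken from the text), for which \(D_1 t_i = D_2 t_i = -1\) everywhere; the function names are illustrative only. The sketch builds the Jacobi matrix of \(\textbf{T}\) from these constant partial derivatives and checks both the inequality of Proposition 2.4 and strict row diagonal dominance.

```python
def jacobian(n, d1, d2):
    """Jacobi matrix of T = -t when D_1 t_i = d1 and D_2 t_i = d2 are constant.

    Diagonal entries are -(d1 + d2); off-diagonal entries are -d2.
    """
    return [[-(d1 + d2) if i == j else -d2 for j in range(n)] for i in range(n)]


def strictly_row_diagonally_dominant(J):
    """True if each diagonal entry exceeds the sum of absolute off-diagonal row entries."""
    return all(
        row[i] > sum(abs(v) for j, v in enumerate(row) if j != i)
        for i, row in enumerate(J)
    )


def proposition_2_4_inequality(n, d1, d2):
    """The inequality (D_1 + D_2) t_i < -(n - 1) |D_2 t_i| for constant partials."""
    return (d1 + d2) < -(n - 1) * abs(d2)


# Hypothetical linear example with b = 1, so D_1 t_i = D_2 t_i = -1.
for n in (2, 3, 4):
    J = jacobian(n, -1.0, -1.0)
    print(n, proposition_2_4_inequality(n, -1.0, -1.0),
          strictly_row_diagonally_dominant(J))
```

In this illustrative example the inequality holds for \(n = 2\) and fails for \(n \ge 3\), since it reduces to \(-2 < -(n-1)\).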

2.5 Uniqueness

Combining Proposition 2.4 (or a variant of it) with Proposition 2.2, we obtain a uniqueness result for the aggregative variational inequality \(\mathrm {\overline{AVI}}\). In Sects. 3 and 4 we shall obtain more interesting results by using the SS-technique.

2.6 Application: Cournot Oligopoly

In this subsection, we critically reconsider and repair with Proposition 2.5 below an equilibrium uniqueness result in [19].Footnote 12 This result is, as far as we know, the first one analysing equilibria of Cournot oligopolies by means of nonlinear complementarity problems. The setting for this result is a Cournot oligopoly game \(\varGamma \) with \(n \ge 2\) firms without capacity constraints with a price function \(p: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}\) and with a cost function \(c_i: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}\) for firm \(i \in N\). With these notations the profit function \(f_i: {\mathbb {R}}^n_+ \rightarrow {\mathbb {R}}\) for firm i is given by

$$\begin{aligned} f_i(\textbf{x}) {{\; \mathrel {\mathop {:}}= \;}}p(x_N) x_i - c_i(x_i). \end{aligned}$$

This defines a game in strategic form \(\varGamma \) with N as player set and with \({\mathbb {R}}_+\) as strategy set for each player and with \(f_i\) as payoff function of firm i.

If p and every \(c_i\) are twice continuously differentiable, then the aggregative variational inequality \(\mathrm {\overline{AVI}}\) where \(t_i: {\mathbb {R}}^2_+ \rightarrow {\mathbb {R}}\) is given by

$$\begin{aligned} t_i(x_i,y) {{\; \mathrel {\mathop {:}}= \;}}p'(y) x_i + p(y) - c'_i(x_i) \end{aligned}$$

is referred to here as the ‘oligopolistic variational inequality’ and will be denoted by OVI. In fact this aggregative variational inequality concerns what we call in Definition 5.1 in Sect. 5, for a more general setting, the associated variational inequality VI(\(\varGamma )\). Proposition 2.5 deals with the solution set of the oligopolistic variational inequality and the Nash equilibrium set of \(\varGamma \). Concerning the latter we have to refer in the proof of Proposition 2.5 to results which are developed in Sect. 5.

Proposition 2.5

Consider a Cournot oligopoly \(\varGamma \) where \(p: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}\) and every \(c_i: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}\) are twice continuously differentiable, and consider the following two conditions.

(a). For every \(i \in N\) and \(\textbf{x} \in {\mathbb {R}}^n_+\):

$$\begin{aligned} 2 p'(x_N) + p''(x_N) x_i - c''_i(x_i) < - (n-1)\, | p'(x_N) + p''(x_N) x_i |. \end{aligned}$$

(b). For every \(i \in N\) there exists an \(\overline{x}_i >0 \) such that for every \(\textbf{x} \in {\mathbb {R}}^n_+\) with \(x_i \ge \overline{x}_i\),

$$\begin{aligned} p'(x_N) x_i + p(x_N) - c'_i(x_i) < 0. \end{aligned}$$

The following results hold.

1. Under condition (a), the oligopolistic variational inequality OVI has at most one solution and \(\varGamma \) has at most one Nash equilibrium.

2. Under condition (b), the OVI has a solution.

3. Under conditions (a) and (b), the OVI has a unique solution and \(\varGamma \) has a unique Nash equilibrium. \(\diamond \)

Proof

1. Note that \(\overline{\textrm{DIFF}}\) holds. The inequality in Proposition 2.4 specialises to the inequality of the statement and so guarantees that OVI has at most one solution. By Proposition 5.1(1) the set of Nash equilibria is contained in the set of solutions of OVI. So the game has at most one Nash equilibrium.

2. Note that \(\overline{\textrm{EC}}\) holds. Apply Proposition 2.2(2).

3. By parts 1 and 2, OVI has a unique solution, say \(\textbf{e}\). We prove that all conditional profit functions \(f_i^{(\textbf{z})}\) are pseudo-concave. Then Proposition 5.1(2) implies that \(\textbf{e}\) is a Nash equilibrium and then next with Proposition 5.1(1) it follows that \(\textbf{e}\) is a unique Nash equilibrium. Well, as \({( f_i^{(\textbf{z})} )}''(x_i) = p''(x_i + \sum _j z_j) x_i + 2 p'(x_i + \sum _j z_j) - c''_i(x_i)\), condition (a) implies that \({( f_i^{(\textbf{z})} )}'' < 0\) and thus \(f_i^{(\textbf{z})}\) even is strictly concave. \(\square \)

For more on Cournot oligopolies, see, for example, [20, 25, 27].

3 SS-Technique; Without Differentiability Assumptions

3.1 Setting

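Let us fix again the setting. Let

$$\begin{aligned} \varDelta {{\; \mathrel {\mathop {:}}= \;}}\{ (x_i,y) \in {\mathbb {R}}^2_+ \; | \; x_i \le y \}. \end{aligned}$$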

With \(\textrm{VI}(X,\textbf{F})\) being the general variational inequality (1), the special case that we consider in this section is

$$\begin{aligned} \textrm{AVI}: \;\; \textrm{VI}({\mathbb {R}}^n_+,\textbf{T}), \end{aligned}$$

where \(T_i(\textbf{x}) {{\; \mathrel {\mathop {:}}= \;}}- t_i(x_i,x_N) \) with \(t_i: \varDelta \rightarrow {\mathbb {R}}\). Further we suppose \(n \ge 2\).

Comparing \(\textrm{AVI}\) with \(\overline{\textrm{AVI}}\), note that for \(\textrm{AVI}\) we only consider the unbounded case. The reason for this is that, for the bounded case, an analysis with the SS-technique becomes much more technical. Also note that the setting uses a smaller domain of \(t_i\) than that in Sect. 2.1: \(\varDelta \) instead of \({\mathbb {R}}^2_+\). \(\varDelta \) is of course all that matters as \((x_i,x_N) \in \varDelta \) for every \(\textbf{x} \in {\mathbb {R}}^n_+\).

We always assume in this section that each \(t_i\) is continuous on \(\varDelta \setminus \{ (0,0) \}\) and denote the set of solutions of \(\textrm{AVI}\) by \(\textrm{AVI}^{\bullet }\).

3.2 AMSCFA-Property

The following definition is very important for assumptions on the \(t_i\) in the following subsection.

Definition 3.1

A function \(g: I \rightarrow \mathbb {R}\), where I is a real interval, has the AMSCFA-property (‘At Most Single Crossing From Above’ property) if the following holds: if z is a zero of g, then \(g(x) > 0 \; (x \in I \text{ with } x < z)\) and \(g(x) < 0 \; (x \in I \text{ with } x > z)\). \(\diamond \)
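As a rough illustration of Definition 3.1, the following sketch tests the AMSCFA pattern on a finite grid. It is only a heuristic under stated assumptions: g is taken to be continuous, the helper names and the tolerance `tol` are hypothetical, and a finite sample can refute the property but never prove it. For a continuous g with the AMSCFA-property, the sampled signs must read as positive values, then at most one zero, then negative values.

```python
def sign(v, tol):
    """Sign of v with values inside [-tol, tol] treated as zero."""
    if abs(v) <= tol:
        return 0
    return 1 if v > 0 else -1


def amscfa_on_grid(g, xs, tol=1e-12):
    """Necessary grid test for the AMSCFA-property of a continuous g.

    Returns False if the sampled signs are not of the form
    (+, ..., +, at most one 0, -, ..., -); True means no violation was found.
    """
    signs = [sign(g(x), tol) for x in sorted(xs)]
    non_increasing = all(a >= b for a, b in zip(signs, signs[1:]))
    return non_increasing and signs.count(0) <= 1


grid = [0.0, 0.5, 1.0, 1.5, 2.0]
print(amscfa_on_grid(lambda x: 1.0 - x, grid))         # strictly decreasing
print(amscfa_on_grid(lambda x: x - 1.0, grid))         # crosses zero from below
print(amscfa_on_grid(lambda x: (x - 1.0) ** 2, grid))  # touches zero, positive on both sides
```

The first function passes the test, while the other two are rejected because they are not positive before and negative after their zero.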

Thus, a function with the AMSCFA-property has at most one zero. Sufficient for a function to have the AMSCFA-property is that it is strictly decreasing. Two other simple results, that we freely use throughout the article, are the following: suppose \(g: I \rightarrow {\mathbb {R}}\) is continuous where I is a proper real interval. Then:

– If g is at every \(x \in I\) with \(g(x) =0\) differentiable with \(g'(x) < 0\), then g has the AMSCFA-property.

– If g has the AMSCFA-property, then for all \(x, x' \in I\)

3.3 Assumptions

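For \(i \in N\) and \(\mu \in [0,1]\), defining the function \(\overline{t}_i^{(\mu )}: {\mathbb {R}}_{++} \rightarrow {\mathbb {R}}\) by

$$\begin{aligned} \overline{t}_i^{(\mu )}(\lambda ) {{\; \mathrel {\mathop {:}}= \;}}t_i(\mu \lambda , \lambda ), \end{aligned}$$

the following assumptions appear in the analysis.Footnote 13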

- AMSV. For every \(y >0\), the function \(t_i(\cdot ,y): [0,y] \rightarrow {\mathbb {R}}\) has at most one zero and if it has a positive zero, then \(t_i(0,y) > 0\).

- LFH’. For every \(y >0\), the function \(t_i(\cdot ,y): [0,y] \rightarrow {\mathbb {R}}\) has the AMSCFA-property.

- RA. For every \(\mu \in {] {0},{1} ] }\), the function \(\overline{t}_i^{(\mu )}\) has the AMSCFA-property.

- RA1. The function \(\overline{t}_i^{(1)}\) has the AMSCFA-property.

- RA0. For every \(0< y < y'\): \(t_i(0,y) \le 0 \; \; \Rightarrow \; t_i(0,y') \le 0\).

- EC. There exists \(\overline{x}_i > 0\) such that \(t_i(x_i,y) < 0\) for every \((x_i,y) \in {\mathbb {R}}^2_+\) with \(\overline{x}_i \le x_i \le y\).

These assumptions are supposed to hold for every \(i \in N\). Below we very often consider situations where such an assumption just holds for a specific i; then we add [i] to the assumption; for example, RA[i]. Note that the above assumptions do not depend on the value of \(t_i\) at (0, 0). In fact this value is not important for results on \(\textrm{AVI}^{\bullet } \setminus \{ \textbf{0} \}\); the reader also may see Lemma A.1.

Of course, RA[i] \(\Rightarrow \) RA1[i], and LFH’[i] \( \Rightarrow \) AMSV[i]. In addition to these assumptions, we use the following terminology. We call \(i \in N\) of

type \(I^+\) if \(\overline{t}_i^{(1)}(\lambda ) > 0\) for \(\lambda > 0\) small enough;

type \(I^-\) if \(\overline{t}_i^{(1)}(\lambda ) < 0\) for \(\lambda > 0\) small enough;

type \(II^-\) if \(\overline{t}_i^{(0)}(\lambda ) < 0\) for \(\lambda > 0\) large enough.

Lemma 3.1

[LFH’[i] \(\wedge \) RA[i] ] \( \Rightarrow \) RA0[i]. \(\diamond \)

Proof

By contradiction. So suppose LFH’[i] and RA[i] hold, and \(0< y < y'\), with \(t_i(0,y) \le 0\) and \(t_i(0,y') > 0\). The continuity of \(t_i(0,\cdot ): {\mathbb {R}}_{++} \rightarrow {\mathbb {R}}\) implies that there exists \(y'' \in {[ {y},{y'} \,[}\) with \(t_i(0,y'') =0\). Also \(t_i(x_i,y') > 0\) for \(x_i > 0\) small enough. LFH’[i] implies \(t_i(x_i,y'')< 0 \; (0 < x_i \le y'')\). Now take \(\mu > 0\) so small that \( \overline{t}_i^{(\mu )}(y') = t_i(\mu y', y') > 0\). As \( \overline{t}_i^{(\mu )}(y'') = t_i(\mu y'', y'') < 0\) and \( \overline{t}_i^{(\mu )}\) is continuous, there exists \(y''' \in {] {y''},{y'} \, [ }\) with \( \overline{t}_i^{(\mu )}(y''') =0\). But this is impossible as by virtue of RA[i], \(\overline{t}_i^{(\mu )}\) has the AMSCFA-property. \(\square \)

Lemma 3.2

Suppose Assumption RA1[i] holds.

1. i is of type \(I^+\) or of type \(I^-\).

2. If i is of type \(I^-\), then \(\overline{t}_i^{(1)} < 0\). \(\diamond \)

Proof

1. In the case when \(\overline{t}_i^{(1)}\) has a zero, say m, we have, \(\overline{t}_i^{(1)}(x_i) >0\) for \(x_i \in {] {0},{m} \, [ }\) and thus i is of type \(I^+\). Now suppose that \(\overline{t}_i^{(1)}\) does not have a zero. As \(\overline{t}_i^{(1)}\) is continuous, we have \(\overline{t}_i^{(1)} > 0\) or \(\overline{t}_i^{(1)} <0\). In the first case i is of type \(I^+\) and in the second of type \(I^-\).

2. By contradiction. So suppose i is of type \(I^-\) and \(\overline{t}_i^{(1)}(a_i) \ge 0\) for some \(a_i > 0\). As \(\overline{t}_i^{(1)}(x_i) < 0\) for \(x_i > 0\) small enough, the continuity of \(\overline{t}_i^{(1)}\) implies the existence of an \(l_i \in {] {0},{a_i} ] }\) with \(\overline{t}_i^{(1)}(l_i) = 0\). Assumption RA1[i] implies \(\overline{t}_i^{(1)}(x_i) > 0\) for \(0< x_i < l_i\), a contradiction with i being of type \(I^-\). \(\square \)

3.4 Classical Nonlinear Complementarity Problem

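Lemma A.2 in “Appendix A” implies: \(\textbf{x}^{\star } \in {\mathbb {R}}^n_+\) is a solution of \(\textrm{AVI}\) if and only if \(\textbf{x}^{\star }\) satisfies

$$\begin{aligned} x_i^{\star } \, t_i(x_i^{\star },x_N^{\star }) = 0 \; \wedge \; t_i(x_i^{\star },x_N^{\star }) \le 0 \;\; (i \in N). \end{aligned} \tag{9}$$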

A solution \(\textbf{x}^{\star }\) of \(\textrm{AVI}\) is degenerate if there exists \(i \in N\) such that

$$\begin{aligned} x_i^{\star } = t_i(x_i^{\star },x_N^{\star }) = 0. \end{aligned}$$

3.5 Solution \(\textbf{0}\)

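Besides \(N_>\) in (3), let

$$\begin{aligned} \tilde{N} {{\; \mathrel {\mathop {:}}= \;}}\{ i \in N \; | \; t_i(0,y) > 0 \text{ for some } y > 0 \}. \end{aligned}$$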

Proposition 3.1

1. \(\textbf{0} \in \textrm{AVI}^{\bullet } \; \Leftrightarrow \; N_> = \emptyset \).

2. Suppose Assumption AMSV[i] holds. If \(\textbf{e} \in \textrm{AVI}^{\bullet }\) and \(i \not \in \tilde{N}\), then \(e_i = 0\). Thus, if \(\tilde{N} = \emptyset \), then \( \textrm{AVI}^{\bullet } \subseteq \{ \textbf{0} \}\).

3. Suppose \(\tilde{N} = N_> = \emptyset \) and Assumption AMSV holds. Then \(\textrm{AVI}^{\bullet } = \{ \textbf{0} \}\). \(\diamond \)

Proof

1. Exactly the same proof as in Proposition 2.1 (with \(\textbf{X} = {\mathbb {R}}^n_+)\).

2. By contradiction. So suppose \(\textbf{e}\) is a solution of AVI, \(i \not \in \tilde{N}\) and \(e_i > 0\). Now \(e_N > 0\). By (9), \(t_i(e_i,e_N) = 0\). AMSV implies \(t_i(0,e_N) > 0\). So \(i \in \tilde{N}\), a contradiction.

3. By parts 1 and 2. \(\square \)

Proposition 3.2

Suppose Assumption RA1 holds and every \(i \in N\) is of type \(I^-\). Then \(\textbf{x}^{\star } \in \textrm{AVI}^{\bullet } {\setminus } \{ \textbf{0} \} \; \Rightarrow \# \{ j \in N \; | \; x_j^{\star } > 0 \} \ge 2\). \(\diamond \)

Proof

By contradiction. So suppose \(\textbf{x}^{\star } \in \textrm{AVI}^{\bullet }\) with \(\textbf{x}^{\star } \ne \textbf{0}\) and \(\# \{ j \in N \; | \; x_j^{\star } \ne 0 \} \le 1\). Let \(x_i^{\star } \ne 0\) and \(x_j^{\star } = 0 \; (j \ne i)\). By (9), \(\overline{t}_i^{(1)}(x_i^{\star }) = t_i(x_i^{\star },x_i^{\star }) = 0\). As RA1[i] holds, Lemma 3.2(2) gives a contradiction. \(\square \)

3.6 Computation

Definition 3.2

1. For \(i \in N\), define the correspondence \(b_i: {\mathbb {R}}_+ \multimap \mathbb {R}\) by

$$\begin{aligned} b_i(y) {{\; \mathrel {\mathop {:}}= \;}}\{ x_i \in {\mathbb {R}}_+ \; | \; x_i \in [0,y] \, \wedge \, x_i t_i(x_i,y) = 0 \, \wedge \, t_i(x_i,y)\le 0 \}. \end{aligned}$$

And define the correspondences \(\textbf{b}: {\mathbb {R}}_+ \multimap \mathbb {R}^n\) and \(b: {\mathbb {R}}_+ \multimap \mathbb {R}\) by

$$\begin{aligned} \textbf{b}(y) {{\; \mathrel {\mathop {:}}= \;}}{b}_1(y) \times \cdots \times {b}_n(y), \;\;\; b(y) {{\; \mathrel {\mathop {:}}= \;}}\{ \sum _{i \in N} x_i \; | \; \textbf{x} \in \textbf{b}(y) \}. \end{aligned}$$

2. Define the correspondences \(s_i: {\mathbb {R}}_{++} \multimap \mathbb {R} \; (i \in N)\) and \(s: {\mathbb {R}}_{++} \multimap \mathbb {R}\) by

$$\begin{aligned} s_i(y) {{\; \mathrel {\mathop {:}}= \;}}{b}_i(y) / y, \;\; s(y) {{\; \mathrel {\mathop {:}}= \;}}b(y)/y. \;\; \diamond \end{aligned}$$

Note thatFootnote 14

The correspondence \(b_i\) provides global information on the \(t_i\). Denote by \(\textrm{fix}(b)\) the set of fixed points of the correspondence \(b: {\mathbb {R}}_+ \multimap \mathbb {R}\), i.e. the set of \(y \in {\mathbb {R}}_+\) for which \(y \in b(y)\).

Definition 3.3

The aggregative variational inequality \(\textrm{AVI}\) is

- internal backward solvable if \( \textrm{AVI}^{\bullet } \subseteq \cup _{ y \in \textrm{fix}(b) } \textbf{b}(y)\);

- external backward solvable if \( \textrm{AVI}^{\bullet } \supseteq \cup _{ y \in \textrm{fix}(b) } \textbf{b}(y)\);

- backward solvable if it is internal and external backward solvable. \(\diamond \)

Lemma 3.3

\(\textbf{x} \in \textrm{AVI}^{\bullet } \; \Leftrightarrow \; \textbf{x} \in \textbf{b}(x_N) \; \Leftrightarrow \; [\textbf{x} \in \textbf{b}(x_N) \text{ and } x_N \in \textrm{fix}(b)]\). \(\diamond \)

Proof

Write the statement as \(A \Leftrightarrow B \Leftrightarrow C\).

\(A \Rightarrow B\): suppose \(\textbf{x} \in \textrm{AVI}^{\bullet }\). By (9), we have for every i that \(x_i \in {\mathbb {R}}_+, \; x_i t_i(x_i,x_N) = 0\) and \(t_i(x_i,x_N) \le 0\). As \(x_i \in [0,x_N]\), we have, \(x_i \in {b}_i(x_N)\).

\(B \Rightarrow C\): suppose \( \textbf{x} \in \textbf{b}(x_N)\). This implies \(x_N = \sum _i x_i \in \sum _i {b}_i(x_N) = b(x_N)\). Thus \(x_N \in \textrm{fix}(b)\).

\(C \Rightarrow A\): suppose \(\textbf{x} \in \textbf{b}(x_N) \text{ and } x_N \in \textrm{fix}(b)\). Then for every i we have \(x_i \in {\mathbb {R}}_+, \; x_i t_i(x_i,x_N) = 0\) and \(t_i(x_i,x_N) \le 0\). By (9), \(\textbf{x}\) is a solution of \(\textrm{AVI}\). \(\square \)
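Lemma 3.3 suggests a direct numerical test for a candidate solution: compute the aggregate \(x_N\) and check \(x_i \in b_i(x_N)\) for every i. The sketch below does this up to a tolerance. The functions `linear_t` and `in_b_of_aggregate`, the tolerance and the parameter values are illustrative assumptions; the linear form \(t_i(x_i,y) = a - b y - b x_i - c_i\) is a hypothetical example of the kind a Cournot oligopoly with linear price and cost functions would produce.

```python
def linear_t(a, b, c):
    """Hypothetical example t_i(x_i, y) = a - b*y - b*x_i - c."""
    return lambda x_i, y: a - b * y - b * x_i - c


def in_b_of_aggregate(ts, x, tol=1e-9):
    """Check x in b(x_N): for each i, 0 <= x_i <= x_N, x_i*t_i = 0 and t_i <= 0."""
    y = sum(x)  # the aggregate x_N
    for t_i, x_i in zip(ts, x):
        v = t_i(x_i, y)
        if x_i < -tol or x_i > y + tol:
            return False
        if abs(x_i * v) > tol or v > tol:
            return False
    return True


# Two identical players: (3, 3) passes, (4.5, 0) fails because t_2(0, 4.5) > 0.
symmetric = [linear_t(10.0, 1.0, 1.0), linear_t(10.0, 1.0, 1.0)]
print(in_b_of_aggregate(symmetric, [3.0, 3.0]))
print(in_b_of_aggregate(symmetric, [4.5, 0.0]))

# Asymmetric case with c_2 = 8: the boundary point (4.5, 0) now passes.
asymmetric = [linear_t(10.0, 1.0, 1.0), linear_t(10.0, 1.0, 8.0)]
print(in_b_of_aggregate(asymmetric, [4.5, 0.0]))
```

The last case illustrates a boundary solution: the second component is zero while \(t_2(0,x_N) < 0\).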

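The solution aggregator is defined as the function \(\sigma : \textrm{AVI}^{\bullet } \rightarrow {\mathbb {R}}\) given by

$$\begin{aligned} \sigma (\textbf{x}) {{\; \mathrel {\mathop {:}}= \;}}\sum _{i \in N} x_i. \end{aligned}$$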

Theorem 3.1

1. \(\sigma ( \textrm{AVI}^{\bullet } ) = \textrm{fix}(b)\).

2. \(\textrm{AVI}\) is internal backward solvable.

3. If b is at most single-valued on \(\textrm{fix}(b)\), then \(\textrm{AVI}\) is backward solvable. \(\diamond \)

Proof

1. ‘\(\subseteq \)’: suppose \(\textbf{x}\) is a solution of AVI. By Lemma 3.3, \(x_i \in {b}_i(x_N)\; (i \in N)\). This implies \(x_N = \sigma (\textbf{x}) = \sum _i x_i \in \sum _i {b}_i(x_N) = b(x_N)\), i.e. \(\sigma (\textbf{x}) \in \textrm{fix}(b)\).

‘\(\supseteq \)’: suppose \(y \in \textrm{fix}(b)\). So \(y \in b(y) = \sum _i {b}_i(y)\). Fix \(x_i \in {b}_i(y) \; (i \in N)\) with \(y = \sum _i x_i\). So \(y = x_N\) and \(\textbf{x} \in \textbf{b}(x_N)\). By Lemma 3.3, \(\textbf{x} \in \textrm{AVI}^{\bullet }\).

2. Suppose \(\textbf{x} \in \textrm{AVI}^{\bullet }\). By Lemma 3.3, \(\textbf{x} \in \textbf{b}(x_N)\) and \(x_N \in \textrm{fix}(b)\). It follows that \(\textbf{x} \in \textbf{b}(x_N) \subseteq \cup _{ y \in \textrm{fix}(b) } \textbf{b}(y)\). Thus, \(\textbf{x} \in \cup _{ y \in \textrm{fix}(b) } \textbf{b}(y)\).

3. By part 2, we still have to prove ‘\(\supseteq \)’. So suppose \(\textbf{x} \in \cup _{ y \in \textrm{fix}(b) } \textbf{b}(y)\). Fix \(y \in \textrm{fix}(b)\) with \(\textbf{x} \in \textbf{b}(y)\). As \(y \in b(y)\) and b is at most single-valued on \(\textrm{fix}(b)\), we have \(b(y) = \{ y \}\). Noting that \(x_N = \sum _l x_l \in \sum _l {b}_l(y) = b(y)\), \( x_N =y\) follows. Thus, \( \textbf{x} \in \textbf{b}(x_N)\). Now apply Lemma 3.3. \(\square \)

The standard Szidarovszky variant of the SS-technique deals with at most single-valued \({b}_i\). In such a situation b also is at most single-valued and Theorem 3.1(3) shows that \(\textrm{AVI}\) is backward solvable. So what is a (weak) sufficient condition for the \({b}_i\) to be at most single-valued? Well, the next lemma provides such a condition.

Lemma 3.4

If Assumption AMSV[i] holds, then for every \(y \in {\mathbb {R}}_{+}\) there exists at most one \(x_i \in [0,y]\) with \(x_i t_i(x_i,y) = 0 \wedge t_i(x_i,y) \le 0\). \(\diamond \)

Proof

Suppose AMSV[i] holds. By contradiction, suppose \(x_i, x'_i \in [0,y]\) with \(x_i < x'_i\) are such. As \(x'_i > 0\), \(t_i(x'_i,y) = 0\) follows. Because of AMSV[i], \(t_i(x_i,y) \ne 0\) and \(t_i(0,y) > 0\). This implies \(x_i =0\) and \(t_i(0,y) \le 0\), a contradiction. \(\square \)

Furthermore, for \(i\in N\) let \(W_i\) denote the essential domain of the correspondence \({b}_i\), i.e. the set \(\{ y \in {\mathbb {R}}_+ \; | \; {b}_i(y) \ne \emptyset \}\). Now, the essential domain of \(s_i\) is \(W_i^{\star } {{\; \mathrel {\mathop {:}}= \;}}W_i \setminus \{ 0 \}\), that of b is \( W {{\; \mathrel {\mathop {:}}= \;}}\cap _{i \in N} W_i\) and that of s is

$$\begin{aligned} W^{\star } {{\; \mathrel {\mathop {:}}= \;}}W \setminus \{ 0 \}. \end{aligned}$$

Note that \( 0 \in W_i \; \Leftrightarrow \; i \not \in N_> \text{ and } \text{ that } 0 \in W \; \Leftrightarrow \; N_> = \emptyset . \)

Let \(\hat{b}_i {{\; \mathrel {\mathop {:}}= \;}}{b}_i \upharpoonright W_i\), i.e. the restriction of the correspondence \({b}_i\) to \(W_i\); so \(\hat{b}_i: W_i \multimap \mathbb {R}\). Also define \(\hat{s}_i {{\; \mathrel {\mathop {:}}= \;}}s_i \upharpoonright W_i^{\star }\). Finally, let \(\hat{b} {{\; \mathrel {\mathop {:}}= \;}}b \upharpoonright W\), \(\hat{s} {{\; \mathrel {\mathop {:}}= \;}}s \upharpoonright W^{\star }\) and \(\hat{\textbf{b}} {{\; \mathrel {\mathop {:}}= \;}}\textbf{b} \upharpoonright W\). If Assumption AMSV holds, then by Lemma 3.4 the correspondences \(\hat{b}_i, \; \hat{s}_i, \; \hat{b}\) and \(\hat{s}\) are single-valued and we can and will interpret them as functions. Then in particular \(\hat{\textbf{b}}(y) = ( \hat{b}_1(y), \ldots , \hat{b}_n(y) )\).

Theorem 3.2

Suppose Assumption AMSV holds. Then

-

1.

\( \textrm{AVI}^{\bullet } = \{ \hat{\textbf{b}}(y) \; | \; y \in \textrm{fix}(\hat{b}) \}\).

-

2.

\(\sigma ( \textrm{AVI}^{\bullet } ) = \textrm{fix}(\hat{b})\).

-

3.

\( \textrm{AVI}^{\bullet } {\setminus } \{ \textbf{0} \} = \{ \hat{\textbf{b}}(y) \; | \; y \in W^{\star } \text{ with } y \in \textrm{fix}(\hat{b}) \} = \{ \hat{\textbf{b}}(y) \; | \; y \in W^{\star } \text{ with } \hat{s}(y) =1 \}\).

-

4.

\( \textbf{x}^{\star } \in \textrm{AVI}^{\bullet } \; \Rightarrow \; x^{\star }_i = \hat{b}_i(x_N^{\star }) \; (i \in N)\). \(\diamond \)

Proof

1. Theorem 3.1(3) guarantees that \(\textrm{AVI}\) is backward solvable. As b is at most single-valued, we obtain \(\textrm{AVI}^{\bullet } = \cup _{y \in \textrm{fix}(b)} \textbf{b}(y) = \cup _{y \in \textrm{fix}(\hat{b})} (\hat{b}_1(y), \ldots , \hat{b}_n(y) ) = \{ \hat{\textbf{b}}(y) \; | \; y \in \textrm{fix}(\hat{b}) \}\).

2. By Lemma 3.1(1). 3. By parts 1 and 2.

4. Suppose \( \textbf{x}^{\star } \in \textrm{AVI}^{\bullet }\). By part 1, there exists \(y \in \textrm{fix}(\hat{b})\) such that \( x^{\star }_i = \hat{b}_i(y) \; (i \in N)\). It follows that \(y = \hat{b}(y) = \sum _i \hat{b}_i(y) = \sum _i x^{\star }_i = x^{\star }_N\). Thus, \(x^{\star }_i = \hat{b}_i(x_N^{\star }) \; (i \in N)\). \(\square \)
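Theorem 3.2(3) suggests a simple numerical recipe: compute the backward replies \(\hat{b}_i(y)\), then search for an aggregate \(y\) with \(\hat{s}(y) = 1\). The following Python sketch illustrates this under assumptions that are not part of the text: a linear Cournot-type specification \(t_i(x_i,y) = a - c_i - y - x_i\) with hypothetical parameters, and the function names `backward_reply`, `share`, `solve_aggregate` and `linear_cournot`, which are chosen here only for illustration. It further assumes that \(t_i(\cdot ,y)\) crosses zero at most once and from above, and that \(\hat{s}\) is decreasing where positive, so that bisection is applicable.

```python
from typing import Callable, List, Optional

Marginal = Callable[[float, float], float]  # (x_i, y) -> t_i(x_i, y)


def backward_reply(t: Marginal, y: float, tol: float = 1e-12) -> Optional[float]:
    """Return x in [0, y] with x * t(x, y) = 0 and t(x, y) <= 0, or None.

    Assumption: t(., y) is continuous and crosses zero at most once, from above.
    """
    if t(0.0, y) <= 0.0:
        return 0.0                      # boundary reply x = 0
    if t(y, y) > 0.0:
        return None                     # t > 0 on all of [0, y]: no reply exists
    lo, hi = 0.0, y                     # invariant: t(lo, y) > 0 >= t(hi, y)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if t(mid, y) > 0.0:
            lo = mid
        else:
            hi = mid
    return hi


def share(ts: List[Marginal], y: float) -> float:
    """Aggregate share: sum of the backward replies at y, divided by y."""
    replies = [backward_reply(t, y) for t in ts]
    if any(r is None for r in replies):
        raise ValueError("y lies outside the common essential domain")
    return sum(replies) / y


def solve_aggregate(ts: List[Marginal], y_lo: float, y_hi: float,
                    tol: float = 1e-10) -> float:
    """Bisection for share(y) = 1, assuming share(y_lo) >= 1 >= share(y_hi)."""
    while y_hi - y_lo > tol:
        mid = 0.5 * (y_lo + y_hi)
        if share(ts, mid) > 1.0:
            y_lo = mid
        else:
            y_hi = mid
    return 0.5 * (y_lo + y_hi)


def linear_cournot(a: float, c: float) -> Marginal:
    """Hypothetical example: t_i(x, y) = a - c - y - x."""
    return lambda x, y: a - c - y - x


a, costs = 10.0, [1.0, 2.0, 8.0]
ts = [linear_cournot(a, c) for c in costs]
y_lo = max((a - c) / 2.0 for c in costs)   # largest zero of t_i(y, y)
y_star = solve_aggregate(ts, y_lo, a)      # at y = a every reply is 0
x_star = [backward_reply(t, y_star) for t in ts]
```

In this example the third (high-cost) player ends up with reply 0, and the components of `x_star` sum to `y_star` up to the bisection tolerance, in line with part 4 of the theorem.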

Proposition 3.3

If Assumption AMSV holds, then the solution aggregator \(\sigma \) is injective. \(\diamond \)

Proof

By contradiction. So suppose AMSV holds and \(\textbf{x}, \textbf{x}'\) are distinct solutions with \(\sigma (\textbf{x}) = \sigma (\textbf{x}') \; =: \;y\). As \(\textbf{x} \ne \textbf{x}'\), we can fix \(i \in N\) with \(x'_i > x_i\). Note that \(y \ne 0\). By (9), \(t_i(x_i,y) \le 0 = t_i(x'_i,y)\). AMSV implies \(t_i(0,y) > 0\). So \(x_i > 0\) and therefore, by (9), \(t_i(x_i,y) = 0\), which is a contradiction with AMSV. \(\square \)

Proposition 3.4

Suppose \(t_1=\cdots = t_n\). If Assumption AMSV holds, then each solution \(\textbf{x}^{\star }\) of \(\textrm{AVI}\) is symmetric, i.e. \(x^{\star }_1 = \cdots = x^{\star }_n\). \(\diamond \)

Proof

By contradiction. So suppose \(\textbf{x}^{\star }\) is a non-symmetric solution. Fix \(\pi \in S_n\) such that \(P_{\pi } ( \textbf{x}^{\star } ) \ne \textbf{x}^{\star }\).Footnote 15 The assumption \(t_1=\cdots =t_n\) implies that the aggregative variational inequality \(\textrm{AVI}\) is symmetric.Footnote 16 By Lemma A.11, \(P_{\pi } ( \textbf{x}^{\star } )\) is another solution. As \(\sigma (\textbf{x}^{\star }) = \sigma ( P_{\pi } ( \textbf{x}^{\star } ) )\), we have a contradiction with Proposition 3.3, i.e. with the injectivity of \(\sigma \). \(\square \)

3.7 Structure of the Sets \(W_i, W_i^+\) and \(W_i^{++}\)

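For the further analysis it is important to obtain more insight into the structure of \(W_i\). If Assumption AMSV holds, then let

\[ W_i^{+} {{\; \mathrel {\mathop {:}}= \;}}\{ y \in W_i^{\star } \; | \; \hat{b}_i(y) > 0 \}, \qquad W_i^{++} {{\; \mathrel {\mathop {:}}= \;}}\{ y \in W_i^{\star } \; | \; 0< \hat{b}_i(y) < y \}. \]

Note that

\[ W_i^{++} \subseteq W_i^{+} \subseteq W_i^{\star }. \]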

Lemma 3.5

Suppose Assumptions AMSV[i] and RA0[i] hold, \(y \in W_i^{\star }\) and \(y' > y\). Then \(\hat{b}_i(y) = 0 \; \Rightarrow \; [y' \in W_i^{\star } \wedge \hat{b}_i(y') =0]\). Thus, \(W_i^{\star }\) is a real interval. \(\diamond \)

Proof

Suppose \(\hat{b}_i(y) = 0\). We have \(t_i(0,y) = t_i(\hat{b}_i(y),y) \le 0\). By RA0[i], \(t_i(0,y') \le 0\). So \(\hat{b}_i(y') = 0\) and \(y' \in W_i^{\star }\). \(\square \)

Lemma 3.6

Suppose Assumption LFH’[i] holds. Then

-

1.

\(W_i^{++}\) is open.

-

2.

If Assumptions RA1[i] and RA0[i] hold, then \(W_i^{++}\) and \(W_i^+\) are real intervals. \(\diamond \)

Proof

1. Suppose \(y \in W_i^{++}\). So \(t_i(\hat{b}_i(y), y ) = 0\). By LFH’[i], \(t_i(y,y)< 0 < t_i(0,y)\). As \(t_i(0,\cdot ): {\mathbb {R}}_{++} \rightarrow {\mathbb {R}}\) and \(\overline{t}_i^{(1)}\) are continuous, there exists \(\delta > 0\) such that \( t_i(y',y')< 0< t_i(0,y') \; (0< y-\delta< y' < y + \delta )\). For every \(y' \in {] {y-\delta },{y+\delta } \, [ }\), the function \(t_i(\cdot ,y'): [0,y'] \rightarrow {\mathbb {R}}\) is continuous, and therefore, there exists \(x_i \in {] {0},{y'} \, [ }\) with \(t_i(x_i,y') = 0\). Thus, \(W_i^{++}\) is open.

2. Suppose RA1[i] and RA0[i] hold. First we prove that \(W_i^{++}\) is an interval. To this end suppose \(y, y' \in W_i^{++}\) with \(y < y'\) and \( y'' \in {] {y},{y'} \, [ }\). We have \(0< \hat{b}_i(y) \le y, \; 0 < \hat{b}_i(y') \le y', \; t_i(\hat{b}_i(y),y) = 0\) and \(t_i(\hat{b}_i(y'),y') =0\). By LFH’[i], the continuous functions \(t_i(\cdot ,y)\) and \(t_i(\cdot ,y')\) have the AMSCFA-property. It follows that \(t_i(y,y) \le 0\) and \( t_i(0,y') > 0\). Now RA0[i] implies \(t_i(0,y'') > 0\). By RA1[i], the continuous function \(\overline{t}_i^{(1)}\) has the AMSCFA-property. It follows that \(t_i(y'',y'') < 0\). Next the continuity of \(t_i(\cdot ,y'')\) implies that there exists an \(x_i \in {] {0},{y''} \, [ }\) with \(t_i(x_i,y'') = 0\) and therefore \(y'' \in W_i^{++}\). Thus, \(W_i^{++}\) is an interval.

Statement concerning \(W_i^+\): suppose \(y, y' \in W_i^{+}\) with \(y < y'\) and \( y'' \in {] {y},{y'} \, [ }\). Now the above proof again applies, and shows that \(y'' \in W_i^{++} \subseteq W_i^+\). \(\square \)

Lemma 3.7

Suppose Assumptions AMSV[i] and EC[i] hold. Then \(\hat{b}_i(y) < \overline{x}_i \; (y \in W_i)\). \(\diamond \)

Proof

This is, as \(\overline{x}_i > 0\), trivial if \(\hat{b}_i(y) = 0\). Now suppose \(\hat{b}_i(y) > 0\). We have \(0 =t_i(\hat{b}_i(y),y) \). As \((\hat{b}_i(y), y) \in \varDelta ^+\), EC[i] implies \(\hat{b}_i(y) < \overline{x}_i\). \(\square \)

If \(\overline{t}_i^{(1)}: {\mathbb {R}}_{++} \rightarrow {\mathbb {R}}\) has a unique zero, then we denote it by

\[ \underline{x}_i. \qquad (12) \]

(Thus, \(\underline{x}_i > 0\).) Sufficient for \(\underline{x}_i\) to be well-defined is that \(\overline{t}_i^{(1)}\) has a zero and that Assumption RA1[i] holds. If in addition Assumption AMSV[i] holds, then we have \(\hat{b}_i ( \underline{x}_i ) = \underline{x}_i \in W_i^+\).

Note that if i is of type \(I^-\) and Assumption RA1[i] holds, then, by Lemma 3.2(2), \(\underline{x}_i\) is not well-defined.

Lemma 3.8

If \(\underline{x}_i\) is well-defined and Assumption EC[i] holds, then \(\underline{x}_i \le \overline{x}_i\). \(\diamond \)

Proof

By the definitions of \(\underline{x}_i\) and \(\overline{x}_i\). \(\square \)

Lemma 3.9

Suppose i is of type \(I^+\) and Assumption RA1[i] holds. Any of the following three assumptions is sufficient for \(\underline{x}_i\) to be well-defined.

-

(a).

Assumptions LFH’[i] and RA0[i] hold and \(W_i^{\star } \ne \emptyset \).

-

(b).

Assumption EC[i] holds.

-

(c).

Assumption LFH’[i] holds and i is of type \(II^-\). \(\diamond \)

Proof

Having RA1[i], we prove that \(\overline{t}_i^{(1)}\) has a zero. As \(\overline{t}_i^{(1)}\) is continuous, it is sufficient to show that this function assumes a positive and a negative value.

(a). Fix \(y \in W_i^{\star }\). We have \(t_i(\hat{b}_i(y),y) \le 0\). LFH’[i] implies \(\overline{t}_i^{(1)}(y) = t_i(y,y ) \le t_i(\hat{b}_i(y),y) \le 0\). As i is of type \(I^+\), we can fix \(x_i \in {] {0},{y} ] }\) with \(\overline{t}_i^{(1)}(x_i) = t_i(x_i,x_i) > 0\).

(b). As i is of type \(I^+\), \(\overline{t}_i^{(1)}(x_i) > 0\) for \(x_i\) small enough. EC[i] implies \(\overline{t}_i^{(1)}(x_i) < 0 \; (x_i > \overline{x}_i)\).

(c). As i is of type \(I^+\), \(\overline{t}_i^{(1)}(\lambda ) = t_i(\lambda ,\lambda ) > 0\) for \(\lambda > 0\) small enough. As i is of type \(II^-\), \(t_i(0,\lambda ) < 0\) for \(\lambda >0 \) large enough. As, by LFH’[i], \(t_i(\cdot ,\lambda )\) has the AMSCFA-property, it follows that \(\overline{t}_i^{(1)}(\lambda ) < 0\) for \(\lambda > 0\) large enough. \(\square \)

Lemma 3.10

Suppose i is of type \(I^+\), Assumptions LFH’[i] and RA1[i] hold and \(\underline{x}_i\) is well-defined. Then

-

1.

\(W_i^{\star } = {[ { \underline{x}_i },{+\infty } \,[}\).

-

2.

If \(t_i(0,y)> 0 \; ( y > 0)\), then \(W_i^{++} = {] { \underline{x}_i },{+\infty } \, [ }\) and \(W_i^{+} = {[ { \underline{x}_i },{+\infty } \,[}\). \(\diamond \)

Proof

1. ‘\(\subseteq \)’: by contradiction. So suppose \( y \in W_i^{\star }\) and \(y < \underline{x}_i\). As \( y >0\), the AMSCFA-property of \(\overline{t}_i^{(1)}\) (by virtue of RA1[i]) gives \(t_i(y,y) = \overline{t}_i^{(1)}(y) > \overline{t}_i^{(1)}(\underline{x}_i) = 0\). As (by virtue of LFH’[i]) \(t_i(\cdot ,y)\) has the AMSCFA-property, we have \(t_i(x_i,y) > 0\) for all \(0 \le x_i \le y\). Thus, \(y \not \in W_i\), a contradiction.

‘\(\supseteq \)’: suppose \(y \ge \underline{x}_i\). If \(t_i(0,y) \le 0\), then \(y \in W_i^{\star }\). Now suppose \(t_i(0,y) > 0\). RA1[i] implies \(t_i(y,y) \le 0\). As \({t}_i(\cdot ,y)\) is continuous, there exists an \(x_i \in {] {0},{y} ] }\) with \(t_i(x_i,y) = 0\). Thus, \(y \in W_i^{\star }\).

2. First statement ‘\(\subseteq \)’: suppose \(y \in W_i^{++}\). Then \(t_i(\hat{b}_i(y),y) = 0\) and \(0< \hat{b}_i(y) < y\). By LFH’[i], \(t_i(y,y) < 0\). RA1[i] implies \(y > \underline{x}_i\).

First statement ‘\(\supseteq \)’: suppose \(y > \underline{x}_i\). We have \(t_i(0,y) > 0\) and, by RA1[i], \(t_i(y,y) < 0\). The continuity of \(t_i(\cdot ,y)\) implies that there exists \(x_i \in {] {0},{y} \, [ }\) with \(t_i(x_i,y) = 0\). As LFH’[i] holds, \(y \in W_i^{++}\) follows.

Second statement ‘\(\subseteq \)’: suppose \(y \in W_i^{+}\). Then \(t_i(\hat{b}_i(y),y) = 0\) and \(0 < \hat{b}_i(y) \le y\). LFH’[i] implies \(t_i(y,y) \le 0\). Now, RA1[i] implies \(y \ge \underline{x}_i\).

Second statement ‘\(\supseteq \)’: suppose \(y \ge \underline{x}_i\). We have \(t_i(0,y) > 0\) and, by RA1[i], \(t_i(y,y) \le 0\). The continuity of \(t_i(\cdot ,y)\) implies that there exists \(x_i \in {] {0},{y} ] }\) with \(t_i(x_i,y) = 0\). So \(0 < x_i = \hat{b}_i(y) \le y\). Thus, \(y \in W_i^{+}\). \(\square \)
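As a hedged illustration of part 1 (the linear specification below is an assumption made here for concreteness and is not taken from the text), consider \(t_i(x_i,y) = a - c_i - y - x_i\) with hypothetical parameters \(a> c_i \ge 0\). Both \(t_i(\cdot ,y)\) and \(\overline{t}_i^{(1)}\) are then strictly decreasing, so each crosses zero at most once and from above, and \(\overline{t}_i^{(1)}\) is positive for small arguments. One finds

\[ \overline{t}_i^{(1)}(\lambda ) = t_i(\lambda ,\lambda ) = a - c_i - 2 \lambda , \qquad \underline{x}_i = \frac{a - c_i}{2}, \]

\[ \hat{b}_i(y) = \max \, ( 0, \; a - c_i - y ) \quad (y \ge \underline{x}_i ), \qquad W_i^{\star } = {[ { \underline{x}_i },{+\infty } \,[}. \]

Indeed, for \(0< y < \underline{x}_i\) we have \(t_i(x_i,y) \ge a - c_i - 2y > 0\) for all \(x_i \in [0,y]\), so no backward reply exists, while for \(y \ge \underline{x}_i\) the displayed value lies in \([0,y]\). Note that the extra hypothesis of part 2 fails in this example, since \(t_i(0,y) \le 0\) for \(y \ge a - c_i\).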

Lemma 3.11

Suppose Assumptions AMSV[i] and RA1[i] hold and i is of type \(I^-\). Then

-

1.

\(\{ y> 0 \; | \; t_i(0,y) > 0 \} \subseteq W_i^{++}\).

-

2.

\(W_i^{\star } = {\mathbb {R}}_{++}\).

-

3.

\(W_i^{++} = W_i^+\).

-

4.

If Assumption EC[i] holds, then \(\hat{b}_i(y) < \overline{x}_i \; (y > 0)\). \(\diamond \)

Proof

1. Suppose \(y > 0\) with \(t_i(0,y) > 0\). By Lemma 3.2(2), \(t_i(y,y) < 0\). As \(t_i(\cdot ,y)\) is continuous, there exists an \(x_i \in {] {0},{y} \, [ }\) with \(t_i(x_i,y) = 0\). So \(y \in W_i^{++}\).

2. ‘\(\subseteq \)’: trivial. ‘\(\supseteq \)’: suppose \(y > 0\). If \(t_i(0,y) \le 0\), then \(y \in W_i^{\star }\). Now suppose \(t_i(0,y) > 0\). By part 1, \(y \in W_i^{++} \subseteq W_i^{\star }\).

3. ‘\(\subseteq \)’ is trivial. Now suppose \(y \in W_i^+\). The proof is complete if we show that \(\hat{b}_i(y) < y\). Well, if \(\hat{b}_i(y) = y\), then \(\overline{t}_i^{(1)}(y) = t_i(\hat{b}_i(y),y) = 0\) contradicting Lemma 3.2(2).

4. Suppose EC[i] holds. Fix \(y > 0\). The statement is clear if \(\hat{b}_i(y) = 0\). Now suppose \(\hat{b}_i(y) > 0\). We have \(0 =t_i(\hat{b}_i(y),y) \). EC[i] implies that \(\hat{b}_i(y) < \overline{x}_i\). \(\square \)

Lemma 3.12

Suppose Assumptions LFH’, RA1 and RA0 hold. Let \(N' = \{ k \in N \; | \; k \text{ is } \text{ of } \text{ type } I^+ \}\).

-

1.

If \(N' = \emptyset \), then \(W^{\star } = {\mathbb {R}}_{++}\).

-

2.

Suppose \(N' \ne \emptyset \) and that for every \(i \in N'\): Assumption EC[i] holds or i is of type \(II^-\). Then, with \(\underline{x} = \max _{k \in N'} \underline{x}_k\), \(W^{\star } = {[ {\underline{x}},{+ \infty } \,[}\). \(\diamond \)

Proof

By Lemma 3.2(1) every i is of type \(I^+\) or \(I^-\). Remember that \(W^{\star } = \cap _i W_i^{\star }\).

1. Suppose \(N' = \emptyset \). So every i is of type \(I^-\). Now apply Lemma 3.11(2).

2. Lemma 3.9(b,c) guarantees that \(\underline{x}_k \; (k \in N')\) are well-defined. By Lemma 3.10(1), \(W_k^{\star } = {[ {\underline{x}_k},{+\infty } \,[} \; (k \in N')\) and, by Lemma 3.11(2), \(W_l^{\star } = {\mathbb {R}}_{++} \; (l \in N {\setminus } N')\). Thus, \(W^{\star } = {[ {\underline{x}},{+ \infty } \,[}\). \(\square \)

3.8 Properties of the Functions \(\hat{b}_i\) and \(\hat{s}_i\)

Proposition 3.5

Suppose Assumptions LFH’[i], RA1[i] and RA0[i] hold. Then the function \(\hat{b}_i: W_i^{\star } \rightarrow {\mathbb {R}}\) is continuous. \(\diamond \)

Proof

We may suppose that \(W_i^{\star } \ne \emptyset \). By Lemma 3.5, \(W_i^{\star }\) is a non-empty interval. It is sufficient to prove that \(\hat{b}_i\) is continuous on each non-empty compact interval I with \(I \subseteq W_i^{\star }\). Fix such an interval and consider the function \(\hat{b}_i: I \rightarrow \mathbb {R}\). As \(0 \le \hat{b}_i(y) \le y \; (y \in I)\), \(\hat{b}_i\) is bounded. As \(\hat{b}_i\) is bounded, its continuity is equivalent to the closedness of its graph, i.e. to the closedness of the subset \(\{ (y, \hat{b}_i(y) ) \; | \; y \in I \}\) in \(I \times {\mathbb {R}}\). As \(I \times {\mathbb {R}}\) is closed in \({\mathbb {R}}^2\), it remains to be proved that this graph is closed in \({\mathbb {R}}^2\). To this end, take a sequence \(( ( y_m, \hat{b}_i(y_m) ) )\) in \(I \times {\mathbb {R}}\) that converges in \({\mathbb {R}}^2\), with, say, limit \((y_{\star }, {b}_{\star } )\); we prove that \((y_{\star }, {b}_{\star } ) \in \{ (y, \hat{b}_i(y) ) \; | \; y \in I \}\), i.e. that \(y_{\star } \in I\) and \(\hat{b}_i(y_{\star }) = b_{\star }\). We have \(\lim y_m = y_{\star }\) and \(\lim \hat{b}_i(y_m) = {b}_{\star }\). As I is closed, \(y_{\star } \in I\) follows; so \(y_{\star } > 0\). We have \( 0 \le \hat{b}_i(y_m) \le y_m\), \(\hat{b}_i(y_m) t_i(\hat{b}_i(y_m), y_m ) = 0\) and \(t_i(\hat{b}_i(y_m), y_m ) \le 0\). Taking limits and noting that \(t_i: \varDelta ^+ \rightarrow \mathbb {R}\) is continuous, we obtain \(0 \le {b}_{\star } \le y_{\star }\), \({b}_{\star } t_i({b}_{\star }, y_{\star }) =0 \) and \(t_i({b}_{\star }, y_{\star } ) \le 0\). Thus, as desired, \(\hat{b}_i(y_{\star }) = b_{\star }\). \(\square \)

Proposition 3.6

-

1.

If Assumptions AMSV and RA[i] hold, then \(\hat{s}_i: W_i^+ \rightarrow {\mathbb {R}}\) is injective.

-

2.

If Assumptions LFH’[i], RA1[i] and RA0[i] hold, then \(\hat{s}_i\) is strictly increasing or strictly decreasing on the interval \(W_i^+\). \(\diamond \)

Proof

1. Suppose AMSV and RA[i] hold. We prove by contradiction that \(\hat{s}_i: W_i^+ \rightarrow {\mathbb {R}}\) is injective. So suppose \(\hat{s}_i(y) = \hat{s}_i(y') = w\) where \(y, y' \in W_i^{+}\) with \(y \ne y'\). So \(\hat{b}_i(y) = w y > 0\) and \(\hat{b}_i(y') = w y' > 0\). It follows that \(t_i(w y, y) = t_i(w y', y') = 0\), i.e. \(\overline{t}_i^{(w)}(y) = \overline{t}_i^{(w)}(y') =0\). But, by RA[i], the function \(\overline{t}_i^{ (w) }\) has the AMSCFA-property, so it has at most one zero, which is a contradiction.

2. Suppose LFH’[i], RA1[i] and RA0[i] hold. Lemma 3.6(2) guarantees that \(W_i^+\) is an interval. Remember that \(W_i^+\) is a subset of \(W_i^{\star }\). Proposition 3.5 implies that \(\hat{s}_i\) is continuous on \(W_i^+\). It now follows with part 1 that \(\hat{s}_i: W_i^+ \rightarrow {\mathbb {R}}\) is strictly decreasing or strictly increasing. \(\square \)

Lemma 3.13

Suppose Assumptions LFH’[i] and RA[i] hold and: i is of type \(I^+\) or of type \(II^-\) or Assumption EC[i] holds. Then \(\hat{s}_i\) is strictly decreasing on the interval \(W_i^+\). \(\diamond \)

Proof

By Lemma 3.1, RA0[i] holds and by Lemma 3.6(2), \(W_i^+\) is a real interval. We may assume that \(W_i^+\) is not empty. Now Lemma 3.5 implies that \(W_i^{\star }\) is an interval without upper bound. By Proposition 3.6(2), \(\hat{s}_i: W_i^+ \rightarrow {\mathbb {R}}\) is strictly decreasing or strictly increasing. By contradiction we prove that \(\hat{s}_i\) is strictly decreasing on \(W_i^+\); so suppose \(\hat{s}_i\) is strictly increasing on \(W_i^+\). By Proposition 3.5, \(\hat{s}_i: W_i^{\star } \rightarrow {\mathbb {R}}\) is continuous.

Case where i is of type \(I^+\): by Lemma 3.9(a), \(\underline{x}_i\) in (12) is well-defined. We have \(\underline{x}_i \in W_i^+\) and \(\hat{s}_i(\underline{x}_i) = 1\). Since \(\hat{s}_i\) is strictly increasing on \(W_i^+\), \(y \not \in W_i^+\) for every \(y > \underline{x}_i\). Fix such a y. Then \(\hat{s}_i(y) = 0\). The continuity of \(\hat{s}_i\) implies that there exists \(y' \in {] {\underline{x}_i},{y} \, [ }\) with \(\hat{s}_i(y') = 1/137\). But then \(y' \in W_i^+\), a contradiction.

Case where i is of type \(II^-\): fix \(y' \in W_i^+\). So \(\hat{s}_i(y') > 0\). As i is of type \(II^-\), \(\hat{s}_i(y) = 0\) for y large enough. Let \(y''\) with \(y'' > y'\) be such a y. The continuity of \(\hat{s}_i\) implies that there exists \(\tilde{y} \in {] {y'},{y''} \, [ }\) with \(\hat{s}_i(\tilde{y}) = \hat{s}_i(y')/137\). But then \(\tilde{y} \in W_i^+\) and \(\hat{s}_i(\tilde{y}) < \hat{s}_i(y')\), a contradiction.

Case where EC[i] holds: fix \(y' \in W_i^+\). So \(\hat{s}_i(y') > 0\). By Lemma 3.7, \(\hat{s}_i(y) \le \overline{x}_i /y \; (y \in W_i^{\star })\). This implies \(\lim _{y \rightarrow + \infty } \hat{s}_i(y) = 0\). The continuity of \(\hat{s}_i\) implies that there exists \(\tilde{y} > y'\) with \(\hat{s}_i(\tilde{y}) = \hat{s}_i(y')/137\). But then \(\tilde{y} \in W_i^+\) and \(\hat{s}_i(\tilde{y}) < \hat{s}_i(y')\), a contradiction. \(\square \)

Lemma 3.14

Suppose Assumptions LFH’ and RA0 hold and every \(\hat{s}_i: W_i^+ \rightarrow {\mathbb {R}}\) is strictly decreasing. Then \(\hat{s}\) is strictly decreasing on the subset of its domain \(W^{\star }\) where it is positive. \(\diamond \)

Proof

We may suppose that the subset of \(W^{\star }\) where \(\hat{s}\) is positive contains at least two elements. Let \(y_a, y_b\) with \(y_a < y_b\) be such. So \(\hat{s}(y_a) > 0\) and \(\hat{s}(y_b) > 0\). Note that \(y_a, y_b \in W_i^{\star } \; (i \in N)\) and that \(y \in W_i^{\star } {\setminus } W_i^+ \; \Rightarrow \; \hat{s}_i(y) = 0\).

First we prove \(\hat{s}_i(y_a) - \hat{s}_i(y_b) \ge 0 \; (i \in N)\). We consider four cases.

Case where \(y_a, y_b \in W_i^+\): \(\hat{s}_i(y_a) - \hat{s}_i(y_b) > 0\), by assumption.

Case where \(y_a \in W_i^+, y_b \not \in W_i^+\): \(\hat{s}_i(y_a) - \hat{s}_i(y_b) = \hat{s}_i(y_a) - 0 = \hat{s}_i(y_a) > 0\).

Case where \(y_a \not \in W_i^+, y_b \not \in W_i^+\): \(\hat{s}_i(y_a) - \hat{s}_i(y_b) = 0 - 0 = 0\).

Case where \(y_a \not \in W_i^+, y_b \in W_i^+\): this case is impossible by Lemma 3.5.

Next fix j with \(\hat{s}_j(y_a) > 0\). If also \(\hat{s}_j(y_b) > 0\), then \(y_a, y_b \in W_j^+\) and by the above, \(\hat{s}_j(y_a) - \hat{s}_j(y_b) > 0\). If \(\hat{s}_j(y_b) = 0\), then also \(\hat{s}_j(y_a) - \hat{s}_j(y_b) = \hat{s}_j(y_a)> 0\). As desired, we obtain that \(\hat{s}(y_a) - \hat{s}(y_b) = \sum _{i \in N } (\hat{s}_i(y_a) - \hat{s}_i(y_b) ) > 0\). \(\square \)

Lemma 3.15

Suppose Assumptions AMSV, RA1 and RA0 hold. If every \(i \in N\) is of type \(I^-\), then \(W^{\star } = {\mathbb {R}}_{++}\) and for every \(y' > 0\) with \(\hat{s}(y') >0\) it holds that \(\hat{s}(y) > 0 \; (0 < y \le y')\). \(\diamond \)

Proof

By Lemma 3.11(2), \(W^{\star } = {\mathbb {R}}_{++}\). Fix \(0< y \le y'\) with \(\hat{s}(y') > 0\). Then \( \hat{b}_i(y') > 0\) for at least one i. For such an i, Lemma 3.5 implies \(\hat{b}_i(y) > 0\). So \(\hat{b}(y) = \sum _{l \in N} \hat{b}_l(y) > 0\). Thus, \(\hat{s}(y) > 0\). \(\square \)

Lemma 3.16

Suppose Assumptions LFH’, RA1, EC hold and for every \(i \in N\): i is of type \(I^+\) and \(t_i(0,y)> 0 \; ( y > 0)\). Let \(\underline{x} = \max _{i \in N} \underline{x}_i\). Then \(W^{\star } = {[ {\underline{x}},{+\infty } \,[}\), \( \hat{s} (\underline{x}) > 1\) and for every \(\textbf{x}^{\star } \in \textrm{AVI}^{\bullet } {\setminus } \{ \textbf{0} \}\), it holds that \(x_N^{\star } >\underline{x}\). \(\diamond \)

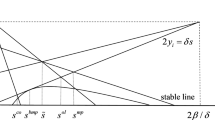

Proof

By Lemma 3.9(b), the \(\underline{x}_i\) are well-defined. Fix \(k_{\star }\) such that \(\underline{x} = \underline{x}_{k_{\star }}\). By Lemma 3.10(1,2), \(W^{\star } = {[ {\underline{x}},{+\infty } \,[}\) and \(W_i^+ = {[ {\underline{x}_i},{+\infty } \,[} \; (i \in N)\). Noting that \(\underline{x} \in W_i^+ \; (i \in N)\) and \(n \ge 2\), we obtain \( \hat{s} (\underline{x}) = \hat{s}_{k_{\star }}(\underline{x}_{k_{\star }}) + \sum _{k \ne k_{\star } } \hat{s}_k (\underline{x}) = 1 + \sum _{k \ne k_{\star } } \hat{s}_k (\underline{x}) > 1\). If \(\textbf{x}^{\star } \in \textrm{AVI}^{\bullet } {\setminus } \{ \textbf{0} \}\), then, by Theorem 3.2(2), \(\hat{b}( x_N^{\star } ) = x_N^{\star } \in W^{\star }\). So \(\hat{s}(x_N^{\star }) = 1\) and thus \(x_N^{\star } > \underline{x}\). \(\square \)

3.9 Semi-uniqueness, Existence and Uniqueness

The proof of the following proposition follows a reasoning similar to a result in [2] for sum-aggregative games.

Proposition 3.7

Suppose Assumption LFH’ holds and every \(t_i\) is decreasing in its second variable. Then AVI has at most one solution. \(\diamond \)

Proof

By contradiction. So suppose \(\textbf{x}, \textbf{x}' \in \textrm{AVI}^{\bullet }\) with \(\textbf{x} \ne \textbf{x}'\). We may suppose \(x_N \le x'_N\). Note that \(x'_N > 0\). As \(\textbf{x} \ne \textbf{x}'\), we can fix i with \(x_i < x'_i\). (9) implies \(t_i(x'_i,x'_N ) = 0 \ge t_i(x_i,x_N)\). By LFH’[i], the function \( t_i(\cdot ,x'_N )\) has the AMSCFA-property; so \( t_i(x_i,x'_N ) > 0\) follows. As \(t_i\) is decreasing in its second variable, \(0 < t_i(x_i,x'_N ) \le t_i(x_i,x_N )\) holds, which is a contradiction. \(\square \)

Theorem 3.3

Suppose Assumptions LFH’ and RA hold and for every \(i \in N\): i is of type \(I^+\) or of type \(II^-\) or EC[i] holds. Then AVI has at most one nonzero solution. \(\diamond \)

Proof

By Lemma 3.1, RA0 holds. Lemma 3.13 guarantees that every \(\hat{s}_i: W_i^+ \rightarrow {\mathbb {R}}\) is strictly decreasing. By Lemma 3.14, \(\hat{s}\) is strictly decreasing on the subset of its domain where it is positive. Theorem 3.2(3) now implies the desired result. \(\square \)

Of course, if we add \(N_> \ne \emptyset \) as an assumption to this theorem, then (by Proposition 3.1(1)) AVI has at most one solution and such a solution is nonzero.

Theorem 3.4

Suppose Assumptions LFH’, RA1, RA0 hold and at least one \(i \in N\) is of type \(I^+\). Either of the following two assumptions is sufficient for AVI to have a nonzero solution and for the solution set of AVI to be a non-empty compact subset of \({\mathbb {R}}^n\).

-

(a.)

Assumption EC holds.

-

(b.)

Every \(i \in N\) is of type \(II^-\).

If in addition to (a) or (b) Assumption RA holds, then AVI has a unique nonzero solution. \(\diamond \)

Proof

We prove the first statement about existence; then the second about uniqueness follows from Theorem 3.3.

Let \(N'= \{ k \in N \; | \; k \text{ is } \text{ of } \text{ type } I^+ \}\). For both cases (a) and (b), Lemma 3.12(2) guarantees that \(W^{\star } = {[ {\underline{x}},{+ \infty } \,[}\) with \(\underline{x} = \underline{x}_p\) for some \(p \in N'\). It follows that \( \hat{s}(\underline{x}) = \sum _{i \in N} \hat{s}_i(\underline{x}) \ge \hat{s}_p (\underline{x}_p) = 1\). The solution set of AVI is a non-empty compact subset of \({\mathbb {R}}^n\) if \( \textrm{AVI}^{\bullet } \setminus \{ \textbf{0} \}\) is a non-empty compact subset of \({\mathbb {R}}^n_+\); we shall prove the latter. By Theorem 3.2(3), \(\textrm{AVI}^{\bullet } {\setminus } \{ \textbf{0} \}\) equals \(\hat{\textbf{b}} (Z)\) where Z is the set of fixed points of the function \(\hat{b}: {[ {\underline{x}},{+ \infty } \,[} \rightarrow {\mathbb {R}}\). As this function is continuous (by Proposition 3.5), it follows that Z is a closed subset of \({[ {\underline{x}},{+ \infty } \,[}\), so also a closed subset of \({\mathbb {R}}\). Below we show that Z also is a bounded subset of \({\mathbb {R}}\) and therefore a compact subset of \({\mathbb {R}}\). As Proposition 3.5 also implies that \(\hat{\textbf{b}}: {[ {\underline{x}},{+ \infty } \,[} \rightarrow {\mathbb {R}}^n\) is continuous, it then follows that \( \textrm{AVI}^{\bullet } {\setminus } \{ \textbf{0} \} = \hat{\textbf{b}} ( Z )\) is a compact subset of \({\mathbb {R}}^n\). Finally note that, by Lemma 3.2, each i is of type \(I^+\) or of type \(I^-\).

(a). Having EC, fix \(\overline{y}\) with \(\overline{y} \ge \sum _{i \in N} \overline{x}_i\). By Lemma 3.8, \(\overline{y} \ge \overline{x}_p \ge \underline{x}_p = \underline{x}\). Thus, \( \overline{y} \in W^{\star }\). With Lemmas 3.7 and 3.11(4), we obtain \( \hat{b}( \overline{y} )= \sum _{i} \hat{b}_i(\overline{y}) \le \sum _{i} \overline{x}_i \le \overline{y}\); thus \(\hat{s}(\overline{y}) \le 1\). By the intermediate value theorem, there exists \(y_{\star } \in [\underline{x}, \overline{y}]\) with \(\hat{s}(y_{\star }) = 1\); so \(y_{\star } \in \textrm{fix}(\hat{b})\). By Theorem 3.2(3), \( \hat{\textbf{b} }( y_{\star } )\) is a nonzero solution of AVI. With Lemmas 3.7 and 3.11(4), we obtain \(\hat{b}(y) \le \sum _i \overline{x}_i \; (y \ge \underline{x})\). So Z is a bounded subset of \({\mathbb {R}}\).

(b). As every i is of type \(II^-\), we have \(\hat{b}_i(y) = 0 \; (i \in N)\) for y large enough. So \(\hat{s}(y) = 0\) for y large enough. Fix \(\overline{y}\) with \(\overline{y} \ge \underline{x}\) and \(\hat{s}(\overline{y}) = 0\). Consider \(\hat{s}: {[ {\underline{x} },{+\infty } \,[} \rightarrow \mathbb {R}\). Proposition 3.5 implies that \(\hat{s}\) is continuous. By the intermediate value theorem, there exists \(y_{\star } \in [\underline{x}, \overline{y}]\) with \(\hat{s}(y_{\star }) = 1\). Thus, \(y_{\star } \in \textrm{fix}(\hat{b})\). By Theorem 3.2(3), \( \hat{\textbf{b} }( y_{\star } )\) is a nonzero solution of AVI. By the above, \(\hat{b}(y) = 0 \) for y large enough. So Z is a bounded subset of \({\mathbb {R}}\). \(\square \)

4 SS-Technique; with Differentiability Assumptions

4.1 Setting

The setting here is the same as in Sect. 3.1. However, we always assume here not only  .Footnote 17 Partial differentiability is necessary for defining Assumptions LFH, DIR and DIR’ given below.


4.2 Assumptions

Besides Assumptions AMSV, LFH’, RA, RA1, RA0 and EC from Sect. 3.3, we here also consider four new ones:

-

DIFF.

\(t_i: \varDelta ^+ \rightarrow {\mathbb {R}}\) is continuously partially differentiable.

-

LFH.

For every \((x_i,y)\in \varDelta ^+\): \( t_i(x_i,y)= 0 \; \Rightarrow \; D_1 t_i(x_i,y)<0\).

-

DIR.

For every \((x_i,y) \in \varDelta ^+\) with \(x_i > 0\): \(t_i(x_i,y)=0 \; \Rightarrow \; (x_i D_1 + y D_2)t_i(x_i,y)<0\).

-

DIR’.

For every \(x_i > 0\): \( t_i(x_i,x_i)=0 \; \Rightarrow \; (D_1+ D_2)t_i(x_i,x_i)<0\).

Note that Assumptions LFH, DIR and DIR’ concern local conditions.Footnote 18 Note that

DIR[i] \(\Rightarrow \) DIR’[i] and that LFH[i] \(\Rightarrow \) LFH’[i] \( \Rightarrow \) AMSV[i].

Lemma 4.1

-

1.

[ DIFF[i] \(\wedge \) DIR’[i] ] \( \Rightarrow \) RA1[i].

-

2.

[ DIFF[i] \(\wedge \) DIR[i] ] \(\Rightarrow \) RA[i]. \(\diamond \)

Proof

1. Suppose Assumptions DIFF[i] and DIR’[i] hold. We prove that \(\overline{t}_i^{(1)}: {\mathbb {R}}_{++} \rightarrow {\mathbb {R}}\) has the AMSCFA-property, by showing that \(\overline{t}_i^{(1)}(\lambda ) = 0 \; \Rightarrow \; {( \overline{t}_i^{(1)} )}'(\lambda ) < 0\). Well, by Lemma B.1 in “Appendix B”, we have \( {( \overline{t}_i^{(1)} )}'(\lambda ) = (D_1 + D_2) t_i(\lambda ,\lambda )\). DIR’[i] implies the desired result.

2. Suppose DIFF[i] and DIR[i] hold. Fix \(\mu \in {] {0},{1} ] }\). We have to prove that \(\overline{t}_i^{(\mu )}: {\mathbb {R}}_{++} \rightarrow {\mathbb {R}}\) has the AMSCFA-property. This we do by showing \(\overline{t}_i^{(\mu )}(\lambda ) = 0 \; \Rightarrow \; {( \overline{t}_i^{(\mu )} )}' (\lambda ) < 0\). Well, by Lemma B.1, \({( \overline{t}_i^{(\mu )} )}'(\lambda ) = (\mu D_1 + D_2) t_i (\mu \lambda , \lambda )\). If \(\overline{t}_i^{(\mu )}(\lambda ) = 0\), then \(t_i(\mu \lambda , \lambda ) = 0\) and DIR[i] implies \(\mu \lambda D_1 t_i(\mu \lambda , \lambda ) + \lambda D_2 t_i(\mu \lambda , \lambda ) < 0\). So \({( \overline{t}_i^{(\mu )} )}' (\lambda ) < 0\). \(\square \)
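The last step of part 2 rests on a rescaling that may be worth spelling out. The display below is only a sketch of the chain rule computation behind the cited Lemma B.1, written with the substitution \((x_i,y) = (\mu \lambda , \lambda )\) and using \(\overline{t}_i^{(\mu )}(\lambda ) = t_i(\mu \lambda , \lambda )\):

\[ \lambda \, {( \overline{t}_i^{(\mu )} )}'(\lambda ) = \lambda \, \frac{d}{d \lambda } \, t_i(\mu \lambda , \lambda ) = \mu \lambda \, D_1 t_i(\mu \lambda , \lambda ) + \lambda \, D_2 t_i(\mu \lambda , \lambda ) = (x_i D_1 + y D_2) \, t_i(x_i,y). \]

As \(\lambda > 0\), the derivative \({( \overline{t}_i^{(\mu )} )}'(\lambda )\) has the same sign as the expression appearing in DIR[i]; note that \(x_i = \mu \lambda > 0\), so DIR[i] is indeed applicable at this point.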

Lemma 4.2

Suppose Assumptions DIFF[i], LFH[i] and DIR[i] hold. Then for every \((x_i,y) \in \varDelta ^+\)

Proof

Suppose \((x_i,y) \in \varDelta ^+\) with \( t_i(x_i,y) =0\). We have the following identity:

If \(x_i > 0\), then LFH[i] and DIR[i] imply the desired result. Now suppose \(x_i = 0\). We have to prove \( t_i(0,y) = 0 \; \Rightarrow \; (D_1 + D_2) t_i(0,y) \le 0\). Well, by LFH[i], \(D_1 t_i(0,y) < 0\). By Lemmas 4.1(2) and 3.1, RA0[i] holds and as \(t_i(0,y) = 0\) it follows that \(t_i(0,y +h) \le 0 \; (h > 0)\). Therefore, \(D_2 t_i(0,y) = \lim _{h \downarrow 0} \frac{ t_i(0,y+h) - t_i(0,y) }{h} = \lim _{h \downarrow 0} \frac{ t_i(0,y+h) }{h} \le 0\). \(\square \)

4.3 Properties of the Functions \(\hat{b}_i\) and \(\hat{s}_i\)

In the next proposition we consider the differentiability of \(\hat{b}_i\); note that by Lemma 3.6(1), \(W_i^{++}\) is open.

Proposition 4.1

Suppose Assumptions DIFF[i] and LFH[i] hold and \(W_i^{++} \ne \emptyset \). Then

-

1.

\(\hat{b}_i\) is continuously differentiable on \(W_i^{++}\) with \( { \hat{b}_i }' = - \frac{D_2 t_i}{D_1 t_i}\) on \(W_i^{++}\).

-

2.

\(\hat{s}_i\) is continuously differentiable on \(W_i^{++}\) with \( { \hat{s}_i }'(y) = - \frac{ \hat{b}_i(y) D_1 t_i( \hat{b}_i(y), y) + y D_2 t_i (\hat{b}_i(y),y) }{ y^2 \cdot D_1 t_i( \hat{b}_i(y), y) }\).

-

3.

If Assumption DIR[i] holds, then \({ \hat{s}_i }'(y) < 0 \; (y \in W_i^{++})\). \(\diamond \)

Proof

1. For every \(y \in W_i^{++}\) we have \(\hat{b}_i(y) > 0\) and therefore, by (9), \( t_i(\hat{b}_i(y),y) = 0\). As DIFF[i] holds, \(t_i\) is continuously differentiable on \( {\textrm{Int}(\varDelta ^+)}\). As by LFH[i], \(D_1 t_i (\hat{b}_i(y), y) < 0 \; ( y \in W_i^{++})\), the classical implicit function theorem implies that \(W_i^{++}\) is open and \(\hat{b}_i\) is continuously differentiable on \(W_i^{++}\). Differentiating the identity \(t_i(\hat{b}_i(y),y) = 0 \; (y \in W_i^{++})\), the formula for \({ \hat{b}_i }'\) follows.

2. By part 1.

3. Suppose DIR[i] holds. As for \(y \in W_i^{++}\) we have \(t_i(\hat{b}_i(y),y) = 0\), the formula in part 2 together with LFH[i] and DIR[i] imply \(\hat{s}'_i(y) < 0 \; (y \in W_i^{++})\). \(\square \)
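For the reader's convenience, the computation behind part 2 can be sketched as follows. It uses \(\hat{s}_i(y) = \hat{b}_i(y)/y\) (as in the proof of Proposition 3.6), the quotient rule and the formula from part 1; the abbreviation \(D_k t_i\) for \(D_k t_i(\hat{b}_i(y),y)\) is introduced here only to shorten the display:

\[ { \hat{s}_i }'(y) = \frac{ y \, { \hat{b}_i }'(y) - \hat{b}_i(y) }{ y^2 } = \frac{ - y \, \frac{D_2 t_i}{D_1 t_i} - \hat{b}_i(y) }{ y^2 } = - \frac{ \hat{b}_i(y) \, D_1 t_i + y \, D_2 t_i }{ y^2 \cdot D_1 t_i }. \]

For \(y \in W_i^{++}\), LFH[i] makes the denominator negative, and DIR[i], applied at \((\hat{b}_i(y),y)\) with \(\hat{b}_i(y) > 0\), makes the numerator \(\hat{b}_i(y) D_1 t_i + y D_2 t_i\) negative as well, so the quotient is positive and \({ \hat{s}_i }'(y) < 0\), as stated in part 3.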

Lemma 4.3

Suppose Assumptions DIFF, LFH and DIR hold. Then \(\hat{s}: W^{\star } \rightarrow {\mathbb {R}}\) and every \(\hat{s}_i: W_i^+ \rightarrow {\mathbb {R}}\) are strictly decreasing where positive. \(\diamond \)

Proof

By Lemma 4.1(2), RA holds; so with Lemma 3.1 RA0 also holds. By Lemma 3.14, the proof is complete if we show that every \(\hat{s}_i: W_i^+ \rightarrow {\mathbb {R}}\) is strictly decreasing. Well, by Lemma 3.13, this holds if i is of type \(I^+\). As each i is, by Lemma 3.2(1), of type \(I^+\) or \(I^-\), the proof is complete if strict decreasingness holds for i of type \(I^-\). So suppose i is of type \(I^-\). If \(W_i^+ = \emptyset \), we are done. Suppose \(W_i^+ \ne \emptyset \). Proposition 4.1(3) together with DIR implies \(\hat{s}'_i(y) < 0 \; (y \in W_i^{++})\). By Lemma 3.11(3), \(W_i^+ = W_i^{++}\). Thus, \(\hat{s}_i: W_i^{+} \rightarrow {\mathbb {R}}\) is strictly decreasing. \(\square \)

4.4 Semi-uniqueness, Existence and Uniqueness

The following theorems provide variants of Theorems 3.3 and 3.4. In this connection, note that, by Lemma 4.1(2), DIFF together with DIR implies RA. As a consequence, the other assumptions in Theorem 3.3 concerning type \(I^+\), type \(II^-\) and EC are no longer needed.

Theorem 4.1

Suppose Assumptions DIFF, LFH and DIR hold. Then AVI has at most one nonzero solution. \(\diamond \)

Proof

By Lemma 4.3, \(\hat{s}\) is strictly decreasing on the subset of its domain where it is positive. Theorem 3.2(3) now implies the desired result. \(\square \)

Theorem 4.2

Suppose Assumptions DIFF, LFH, DIR’ and RA0 hold and at least one \(i \in N\) is of type \(I^+\). Then any of the following two assumptions is sufficient for AVI to have a nonzero solution and for the solution set of AVI to be a non-empty compact subset of \({\mathbb {R}}^n\).

(a) Assumption EC holds.

(b) Every \(i \in N\) is of type \(II^-\).

If, in addition to (a) or (b), Assumption DIR holds, then AVI has a unique nonzero solution. \(\diamond \)

Proof

First statement (about existence): by Lemma 4.1(1), RA1 holds and so by Lemma 3.1 also RA0 holds. So the first statement in Theorem 4.1 applies and implies the desired result.

Second statement (about uniqueness): by Lemma 4.1(2), RA holds. So the second statement in Theorem 4.1 applies and implies the desired result. \(\square \)
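
To make the role of \(\hat{s}\) concrete, the following minimal Python sketch locates the value of \(y\) with \(\hat{s}(y) = 1\) by bisection. Everything specific in it is an assumption made for illustration and is not taken from the text: the function \(t_i(x_i,y) = a - y - x_i - c_i\) (the marginal payoff of a Cournot firm with hypothetical inverse demand \(p(y) = a - y\) and constant marginal cost \(c_i\)), the parameter values, the helper names, and the reading \(\hat{s}_i(y) = \hat{b}_i(y)/y\). We do not verify the assumptions of Theorem 4.2 for this example; the sketch only shows how a continuous, strictly decreasing \(\hat{s}\) pins down a single aggregate.

```python
from typing import Callable, Sequence


def backward_reply(a: float, c_i: float, y: float) -> float:
    """Hypothetical b_i(y): the x_i >= 0 with t_i(x_i, y) <= 0 and x_i * t_i(x_i, y) = 0,
    for the assumed t_i(x_i, y) = a - y - x_i - c_i. Valid for y >= (a - c_i) / 2,
    which guarantees b_i(y) <= y."""
    return max(0.0, a - c_i - y)


def share_sum(a: float, costs: Sequence[float], y: float) -> float:
    """Assumed form of s(y): the sum over i of b_i(y) / y."""
    return sum(backward_reply(a, c, y) for c in costs) / y


def bisect_decreasing(g: Callable[[float], float], lo: float, hi: float,
                      tol: float = 1e-12) -> float:
    """Zero of a continuous, strictly decreasing g on [lo, hi] with g(lo) >= 0 >= g(hi)."""
    if not (g(lo) >= 0.0 >= g(hi)):
        raise ValueError("g must change sign on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Illustrative parameters (assumptions, not from the text).
a = 10.0
costs = [1.0, 2.0]

y_lo = max((a - c) / 2.0 for c in costs)  # left end of the common domain of the b_i
y_hi = a                                  # here every b_i vanishes, so s(y_hi) = 0 < 1

y_star = bisect_decreasing(lambda y: share_sum(a, costs, y) - 1.0, y_lo, y_hi)
x_star = [backward_reply(a, c, y_star) for c in costs]
print(y_star, x_star)
```

For this linear example with both firms active, the computed aggregate can be compared with the closed form \((n a - \sum _i c_i)/(n+1)\), which here equals \(17/3\).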

In addition to the previous theorem that presupposes that at least one \(i \in N\) is of type \(I^+\), we provide with the next theorem a result that can handle situations where every \(i \in N\) is of type \(I^-\). Remember the definition of \(\tilde{N} \) in (11).

Theorem 4.3

Suppose Assumptions DIFF, LFH, DIR and EC hold and every \(i \in N\) is of type \(I^-\). Then

1. For every \(i \in \tilde{N}\) and sufficiently small \(y > 0\), there exists a unique \(\xi _i(y) \in {] {0},{y} \, [ }\) with \(t_i(\xi _i(y),y) = 0\).

2. For every \(i \in \tilde{N}\) the limit \(\overline{s}_i {{\; \mathrel {\mathop {:}}= \;}}\lim _{y \downarrow 0} \frac{\xi _i(y)}{y}\) exists and \(\overline{s}_i \in {] {0},{1} ] }\).

3. \(\sum _{i \in \tilde{N} } \overline{s}_i > 1 \; \Leftrightarrow \; \textrm{AVI}\) has a unique nonzero solution. \(\diamond \)

Proof

Note that by Lemma 4.1(2), RA holds. Now by Lemma 3.1, RA0 also holds.

1. Suppose \(i \in \tilde{N}\), so \(t_i(0,\tilde{y}_i) > 0\) for some \(\tilde{y}_i > 0\). By RA0, \(t_i(0,y) > 0 \; (0 < y \le \tilde{y}_i)\). So, by Lemma 3.11(1), \({] {0},{\tilde{y}_i} ] } \subseteq W_i^{++}\). Thus, for every \(y \in {] {0},{\tilde{y}_i} ] }\), \(\xi _i(y) = \hat{b}_i(y)\) is as required.

2. By the proof of part 1, we have \(\hat{b}_i(y) > 0 \; (0 < y \le \tilde{y}_i)\). Lemma 4.3 guarantees that \(\hat{s}_i\) is strictly decreasing on \({] {0},{\tilde{y}_i} \, [ }\). As \(\hat{s}_i \le 1\), the limit \(\overline{s}_i\) exists and \(0 < \overline{s}_i \le 1\).

3. For \(i \in N \setminus \tilde{N}\), we have \(t_i(0,y) \le 0 \; (y > 0)\) and thus \(\hat{b}_i(y) =0 \; (y > 0)\). Therefore \(\hat{s}_i(y) = 0 \; (y > 0)\). Also we already know (by the proof of part 1) that, in parts 1 and 2, \(\xi _i(y) = \hat{b}_i(y)\).

‘\(\Rightarrow \)’: suppose \(\sum _{i \in \tilde{N} }\overline{s}_i > 1\); so \(\tilde{N} \ne \emptyset \). By Theorem 4.1 we still have to prove that \(\textrm{AVI}\) has a nonzero solution. As RA1 holds, Lemma 3.12(2) guarantees that \(W^{\star } = {\mathbb {R}}_{++}\). Consider \(\hat{s}: {\mathbb {R}}_{++} \rightarrow \mathbb {R}\). By part 2, we obtain \(\lim _{y \downarrow 0} \hat{s}(y) = ( \sum _{ i \in \tilde{N}} + \sum _{i \in N {\setminus } \tilde{N}} ) \lim _{y \downarrow 0} \hat{s}_i(y) = \sum _{i \in \tilde{N} } \overline{s}_i > 1\). By virtue of EC, we can fix \(\overline{y}\) with \(\overline{y} > \sum _{k \in N} \overline{x}_k\). So \(\overline{y} \in W^{\star }\). By Lemma 3.7, \(\hat{b}_i(\overline{y}) \le \overline{x}_i \; (i \in N)\). It follows that \(\hat{b}(\overline{y}) = \sum _{k \in N} \hat{b}_k(\overline{y}) \le \overline{y}\) and therefore \(\hat{s}(\overline{y}) \le 1\). Proposition 3.5 implies that \(\hat{s}\) is continuous. By the intermediate value theorem, there exists \(y_{\star }\in W^{\star }\) with \(\hat{s}(y_{\star }) = 1\). Theorem 3.2(3) implies \(\hat{\textbf{b}}(y_{\star }) \in \textrm{AVI}^{\bullet } {\setminus } \{ \textbf{0} \}\).

‘\(\Leftarrow \)’: suppose \(\textrm{AVI}\) has a unique nonzero solution \(\textbf{e}\). By Theorem 3.2(2), \(\hat{s}(e_N) = 1\). By Lemma 3.15, \(\hat{s} > 0 \) on \({] {0},{e_N} ] }\). By Lemma 4.3, \(\hat{s}\) is strictly decreasing on \({] {0},{e_N} ] }\). So \(\sum _{i\in \tilde{N} } \overline{s}_i = \sum _{i \in \tilde{N}} \lim _{y \downarrow 0} \hat{s}_i(y) = \lim _{y \downarrow 0} \sum _{i \in \tilde{N} } \hat{s}_i(y) = \lim _{y \downarrow 0} \sum _{i \in N} \hat{s}_i(y) = \lim _{y \downarrow 0} \hat{s}(y) > \hat{s}(e_N) = 1\). \(\square \)

The existence of the limit in Theorem 4.3(2) is a fundamental result: in various cases this limit can be computed, as we shall illustrate in Sect. 5.4. Part 3 of the theorem then gives a sufficient and necessary condition for \(\textrm{AVI}\) to have a unique nonzero solution.
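
As a rough numerical illustration of parts 1 and 2 of Theorem 4.3, the sketch below computes the root \(\xi _i(y)\) by bisection and tabulates the ratio \(\xi _i(y)/y\) for decreasing \(y\). The function \(t_i(x_i,y) = V (y - x_i)/y^2 - c\), the constants \(V\) and \(c\) and the helper names are hypothetical choices made only for this example; we do not check that this \(t_i\) satisfies the assumptions of the theorem.

```python
def t(x: float, y: float, V: float = 1.0, c: float = 0.5) -> float:
    """Hypothetical t_i(x, y) = V * (y - x) / y**2 - c (an assumption, not from the text)."""
    return V * (y - x) / y**2 - c


def xi(y: float, V: float = 1.0, c: float = 0.5, iters: int = 200) -> float:
    """Root of x -> t(x, y) in ]0, y[, found by bisection.

    Requires 0 < y < V / c, so that t(0, y) > 0 > t(y, y); as t is strictly
    decreasing in x, the root is unique.
    """
    if not 0.0 < y < V / c:
        raise ValueError("y must lie in ]0, V/c[")
    lo, hi = 0.0, y
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if t(mid, y, V, c) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


ys = [1e-1, 1e-2, 1e-3, 1e-4]
ratios = [xi(y) / y for y in ys]
for y, r in zip(ys, ratios):
    print(f"y = {y:g}: xi(y)/y = {r:.6f}")
```

For this particular choice the root is available in closed form, \(\xi _i(y) = y (1 - c y / V)\), so the ratio increases towards 1 as \(y \downarrow 0\); the numerical values can be checked against this expression.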

4.5 Sufficient and Necessary Conditions

For Cournot oligopolies there are powerful results dealing with sufficient and necessary conditions for equilibrium uniqueness. In this respect, [7] is a milestone. It concerns a variant of a result in [14]. In contrast to the latter result, it considers the whole equilibrium set and in particular does not exclude degenerate equilibria.Footnote 19 The proof in [7] is also much more elementary than the proof in [14], which deals with Cournot equilibria as solutions of a complementarity problem to which differential topological fixed point index theory is applied. The simpler nature of this proof was achieved by using ideas from the Selten–Szidarovszky technique. A shortcoming of the result in [7] is that a strong variant of a Fisher–Hahn condition (see footnote 13) has to hold.Footnote 20 Another is that the price function is not allowed to be everywhere positive (an assumption that is often used). In [29] a generalisation of the result in [7] was provided that resolves these shortcomings; in addition, it can deal with sum-aggregative games. Below we go a step further by generalising these results so that they apply to aggregative variational inequalities. In addition we improve them intrinsically (by using the \(\hat{s}_i\) besides the \(\hat{b}_i\)). However, we only do this for the case where every i is of type \(I^+\) and \(t_i(0,y)> 0 \, (y > 0)\).Footnote 21

Theorem 4.4

Suppose Assumptions DIFF, LFH, DIR’, RA0 and EC hold, \(N_> \ne \emptyset \) and for every \(i \in N\): i is of type \(I^+\) and \(t_i(0,y)> 0 \; (y > 0)\). Then

1. For every \(\textbf{x}^{\star } \in \textrm{AVI}^{\bullet }\), it holds that \(x_i^{\star } > 0 \; (i \in N)\) and \( D_1 t_i(x^{\star }_i, x^{\star }_N) < 0\).

2. \(\textrm{AVI}^{\bullet }\) is a non-empty compact subset of \({\mathbb {R}}^n_+\) that contains a nonzero element.

3. \(- \sum _{i \in N} \frac{ x_i^{\star } D_1 t_i(x^{\star }_i, x^{\star }_N) + x^{\star }_N D_2 t_i(x^{\star }_i, x^{\star }_N)}{ D_1 t_i( x^{\star }_i, x^{\star }_N ) } < 0 \; (\textbf{x}^{\star } \in \textrm{AVI}^{\bullet }) \;\; \Rightarrow \;\; \# \textrm{AVI}^{\bullet } = 1\).

4. \( \textrm{AVI}^{\bullet } = \{ \textbf{x}^{\star } \} \; \Rightarrow \; - \sum _{i \in N} \frac{ x_i^{\star } D_1 t_i(x^{\star }_i, x^{\star }_N) + x^{\star }_N D_2 t_i(x^{\star }_i, x^{\star }_N)}{ D_1 t_i( x^{\star }_i, x^{\star }_N ) } \le 0\). \(\diamond \)

Proof

The assumptions imply (by Lemma 4.1(1)) that RA1 holds; so Lemma 3.10 applies. By the latter lemma, it holds for every \(i \in N\) that \(W_i^{\star } = {[ {\underline{x}_i},{+ \infty } \,[}\) and \(W_i^{++} = {] {\underline{x}_i},{+ \infty } \, [ }\). So, with \(\underline{x} = \max _i \underline{x}_i\), the domain of \(\hat{s}\) is \( W^{\star } = {[ {\underline{x}},{+\infty } \,[}\). Note that \( {] {\underline{x}},{+\infty } \, [ } \subseteq W_i^{++} \; (i \in N)\). Proposition 4.1(2) implies that \(\hat{s}\) is differentiable at every \(y \in {] {\underline{x}},{+\infty } \, [ }\) with

\[ \hat{s}'(y) = - \sum _{i \in N} \frac{ \hat{b}_i(y) D_1 t_i( \hat{b}_i(y), y) + y D_2 t_i (\hat{b}_i(y),y) }{ y^2 D_1 t_i( \hat{b}_i(y), y) }. \]

1. Suppose \(\textbf{x}^{\star } \in \textrm{AVI}^{\bullet }\) and let \(i \in N\). As \(N_> \ne \emptyset \), we have by Proposition 3.1(1) that \( x_N^{\star } \ne 0\). By Theorem 3.2(4), \(x^{\star }_i = \hat{b}_i(x_N^{\star })\). If \(x^{\star }_i = 0\), then \(t_i(0,x_N^{\star }) = t_i( \hat{b}_i(x_N^{\star }), x_N^{\star }) \le 0\), which is impossible as \(t_i(0,y) > 0 \; (y > 0)\). So we have \(x^{\star }_i > 0\) and therefore \(t_i(x^{\star }_i, x_N^{\star }) = t_i ( \hat{b}_i(x_N^{\star }), x_N^{\star } ) = 0 \). Now LFH[i] implies \( D_1 t_i(x^{\star }_i, x^{\star }_N) < 0\).

2. By Theorem 4.2.

3. Suppose \(- \sum _{i \in N} \frac{ x_i^{\star } D_1 t_i(x^{\star }_i, x^{\star }_N) + x^{\star }_N D_2 t_i(x^{\star }_i, x^{\star }_N)}{ D_1 t_i( x^{\star }_i, x^{\star }_N ) } < 0 \; (\textbf{x}^{\star } \in \textrm{AVI}^{\bullet })\). By part 2, it is sufficient to prove that \(\# \textrm{AVI}^{\bullet } \le 1\). By Theorem 3.2(3) and part 1, \( \textrm{AVI}^{\bullet } = \{\hat{\textbf{b}}(y) \; | \; \underline{x} \le y < +\infty \text{ with } \hat{s}(y) = 1 \}\). We prove that there exists at most one \(y \in {[ {\underline{x}},{+\infty } \,[}\) with \(\hat{s}(y) = 1\). As \(\hat{s}\) is (by Proposition 3.5) continuous, this in turn can be done by showing that \(\hat{s} - 1\) has the AMSCFA-property. For this in turn it is sufficient that \( \hat{s}'( y) < 0\) for every \(y \in {[ {\underline{x}},{+\infty } \,[}\) with \(\hat{s}(y) = 1\). So suppose \(y \in {[ {\underline{x}},{+\infty } \,[}\) with \(\hat{s}(y) = 1\). Let \(\textbf{x}^{\star } = \hat{\textbf{b}}(y)\). By Theorem 3.2(1) and part 1, \(\textbf{x}^{\star }\) is a nonzero solution of AVI. This implies \(x^{\star }_N = \sum _i \hat{b}_i(y) = \hat{b}(y) = y\). As \(x^{\star }_i = \hat{b}_i(y) = \hat{b}_i(x^{\star }_N)\), we obtain \(- \sum _{i \in N} \frac{ \hat{b}_i(y) D_1 t_i( \hat{b}_i(y), y) + x^{\star }_N D_2 t_i( \hat{b}_i(y), y)}{ y^2 D_1 t_i( \hat{b}_i(y), y) } < 0\). By Lemma 3.16 we have \(y > \underline{x}\). Now Proposition 4.1(2) implies \({\hat{s}}'( y ) < 0\).

4. Suppose \( \textrm{AVI}^{\bullet } = \{ \textbf{x}^{\star } \}\). By part 1, \(\textbf{x}^{\star } \ne \textbf{0}\). By Theorem 3.2(2), \(\textrm{fix}(\hat{b}) = \{ x^{\star }_N \}\). This implies that \(\hat{s}- 1\) has \( x^{\star }_N\) as unique zero. By Lemma 3.16, \(x_N^{\star } > \underline{x}\). So \(\hat{s}\) is differentiable at \(x_N^{\star }\). We now prove by contradiction that \(\hat{s}'(x_N^{\star }) \le 0\). Suppose \(\hat{s}'(x_N^{\star }) > 0\). Let \(g {{\; \mathrel {\mathop {:}}= \;}}\hat{s} - 1\). So \(g(x_N^{\star }) = 0\) and \(g'(x_N^{\star }) = \hat{s}'(x_N^{\star }) > 0\); this implies that there exists \(x' \in {] { \underline{x} },{ x_N^{\star } } \, [ }\) with \(g(x') < 0\). Also, by Lemma 3.16, \(g(\underline{x}) = \hat{s}(\underline{x}) - 1 > 0\). As g is continuous, g has a zero in \({] {\underline{x}},{x_N^{\star }} \, [ }\), which is a contradiction. As by Theorem 3.2(4), \(x^{\star }_i= \hat{b}_i(x_N^{\star }) \; (i \in N)\), we obtain by Proposition 4.1(2), \( - \sum _{i \in N} \frac{ x_i^{\star } D_1 t_i(x^{\star }_i, x^{\star }_N) + x^{\star }_N D_2 t_i(x^{\star }_i, x^{\star }_N)}{ D_1 t_i( x^{\star }_i, x^{\star }_N ) } = {( x^{\star }_N )}^2 \hat{s}'(x_N^{\star }) \le 0\). \(\square \)
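
The quantity appearing in parts 3 and 4 of Theorem 4.4 can be evaluated numerically at a candidate solution. The sketch below does this with central finite differences for \(D_1 t_i\) and \(D_2 t_i\). The function name `uniqueness_criterion`, the step size and the test functions \(t_i(x_i,y) = a - y - x_i - c_i\) are hypothetical choices for illustration only; in particular this linear \(t_i\) does not satisfy \(t_i(0,y) > 0 \; (y > 0)\), so we do not claim that Theorem 4.4 applies to it. The sketch merely shows how the expression is assembled.

```python
from typing import Callable, Sequence


def uniqueness_criterion(ts: Sequence[Callable[[float, float], float]],
                         x: Sequence[float], h: float = 1e-6) -> float:
    """Evaluate  - sum_i (x_i * D1 t_i + x_N * D2 t_i) / D1 t_i  at the point x,
    where x_N = sum(x) and D1, D2 are approximated by central differences."""
    y = sum(x)
    total = 0.0
    for t_i, x_i in zip(ts, x):
        d1 = (t_i(x_i + h, y) - t_i(x_i - h, y)) / (2.0 * h)  # partial w.r.t. first argument
        d2 = (t_i(x_i, y + h) - t_i(x_i, y - h)) / (2.0 * h)  # partial w.r.t. second argument
        total += (x_i * d1 + y * d2) / d1
    return -total


# Illustrative data (assumptions, not from the text).
a = 10.0
costs = [1.0, 2.0]
ts = [lambda x_i, y, c=c: a - y - x_i - c for c in costs]
x_star = [10.0 / 3.0, 7.0 / 3.0]  # satisfies t_i(x_i, x_N) = 0 for both i

value = uniqueness_criterion(ts, x_star)
print(value)
```

For these linear functions \(D_1 t_i = D_2 t_i = -1\), so the expression reduces to \(-(n+1) x_N\), which is negative; the printed value can be compared with that.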

5 Variational Inequalities and Nash Equilibria

5.1 Setting

Consider a game in strategic form with player set \(N {{\; \mathrel {\mathop {:}}= \;}}\{ 1, \ldots , n \}\), for player \(i \in N\) a strategy set \(X_i\) and payoff function \(f_i\). So every \(X_i\) is a non-empty set and every \(f_i\) a function \(X_1 \times \cdots \times X_n \rightarrow \mathbb {R}\). We denote the set of strategy profiles \( X_1 \times \cdots \times X_n\) also by \(\textbf{X}\). For \(i \in N\), define \( \textbf{X}_{\hat{\imath }} {{\; \mathrel {\mathop {:}}= \;}}X_1 \times \cdots \times X_{i-1} \times X_{i+1} \times \cdots \times X_n\). Further assume \(n \ge 2\). We denote such a game by \(\varGamma \).

Given \(i \in N\), we sometimes identify \(\textbf{X}\) with \(X_i \times \textbf{X}_{\hat{\imath }}\) and then write \(\textbf{x} \in \textbf{X}\) as \(\textbf{x} = ( x_i; \textbf{x}_{\hat{\imath }} )\). For \(i \in N\) and \(\textbf{z} \in \textbf{X}_{\hat{\imath }}\), the conditional payoff function \(f_i^{(\textbf{z})}: X_i \rightarrow \mathbb {R}\) is defined by \( f_i^{(\textbf{z})}(x_i) {{\; \mathrel {\mathop {:}}= \;}}f_i(x_i;\textbf{z})\) and the best-reply correspondence \(R_i: \textbf{X}_{\hat{\imath }} \multimap X_i\) is defined by \(R_i(\textbf{z}) {{\; \mathrel {\mathop {:}}= \;}}\textrm{argmax}_{x_i \in X_i}\; f_i^{(\textbf{z})}(x_i)\).
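
For finite strategy sets, the conditional payoff function and the best-reply correspondence can be computed by direct enumeration. The following sketch illustrates this; the helper names `best_replies` and `is_nash`, the 0-based player indexing and the discretised two-player payoff are assumptions introduced for this example and do not come from the text.

```python
from typing import Callable, Sequence, Set, Tuple

Profile = Tuple[int, ...]


def best_replies(f_i: Callable[[Profile], float], X_i: Sequence[int],
                 i: int, z: Profile) -> Set[int]:
    """R_i(z): the maximisers over X_i of the conditional payoff x_i -> f_i(x_i; z).

    Players are indexed from 0 here; z lists the strategies of all players except i.
    """
    values = {x_i: f_i(z[:i] + (x_i,) + z[i:]) for x_i in X_i}
    best = max(values.values())
    return {x_i for x_i, v in values.items() if v == best}


def is_nash(payoffs: Sequence[Callable[[Profile], float]],
            strategy_sets: Sequence[Sequence[int]], x: Profile) -> bool:
    """x is a Nash equilibrium iff every x_i is a best reply to the others' strategies."""
    return all(
        x[i] in best_replies(payoffs[i], strategy_sets[i], i, x[:i] + x[i + 1:])
        for i in range(len(x))
    )


# Hypothetical two-player example with integer strategies 0..6 and
# payoff f_i(x) = (6 - x_1 - x_2) * x_i, which depends on x only via x_i and the sum.
X = [range(7), range(7)]
payoffs = [lambda x, i=i: (6 - sum(x)) * x[i] for i in range(2)]

r_vs_2 = best_replies(payoffs[0], X[0], 0, (2,))
r_vs_1 = best_replies(payoffs[0], X[0], 0, (1,))
print(r_vs_2, r_vs_1, is_nash(payoffs, X, (2, 2)))
```

The second best-reply set contains two elements, which shows why \(R_i\) is treated as a correspondence rather than as a function.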