Abstract

I consider the problem of deriving the couplings of a statistical model from measured correlations, a task that generalizes the well-known inverse Ising problem. After recalling that this problem can be mapped onto that of expressing the entropy of a system as a function of its observables, I show the conditions under which this can be done without resorting to iterative algorithms. I find that inverse problems are local (the inverse Fisher information is sparse) whenever the corresponding models have a factorized form, and the entropy can be split into a sum of small cluster contributions. I illustrate these ideas through two examples (the Ising model on a tree and the one-dimensional periodic chain with interactions of arbitrary order) and support the results with numerical simulations. Finally, I discuss the extension of these methods to more general scenarios.
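As a minimal illustration of a non-iterative inversion in the tree case, the sketch below builds a small Ising model on a tree, computes its exact pair marginals by brute-force enumeration, and reads each coupling directly off the corresponding pair marginal. It relies on the standard fact that a tree distribution factorizes over its edge and site marginals, so the coupling on an edge is the coefficient of \(s_i s_j\) in the logarithm of that edge's marginal. The tree topology, the system size, the random parameters and all function names are assumptions chosen for illustration; they are not taken from the paper.

```python
import itertools
import numpy as np

def exact_distribution(h, J_edges, n):
    """Enumerate all 2^n spin configurations and return them with their probabilities."""
    states = np.array(list(itertools.product([-1, 1], repeat=n)))
    log_w = states @ h
    for (i, j), J in J_edges.items():
        log_w = log_w + J * states[:, i] * states[:, j]
    p = np.exp(log_w - log_w.max())
    return states, p / p.sum()

def pair_marginal(states, p, i, j):
    """Exact marginal p_ij(a, b) for a, b in {-1, +1}."""
    return {
        (a, b): p[(states[:, i] == a) & (states[:, j] == b)].sum()
        for a in (-1, 1)
        for b in (-1, 1)
    }

def coupling_from_pair_marginal(m):
    """Coefficient of s_i * s_j in log p_ij; on a tree it equals the coupling J_ij."""
    return 0.25 * sum(a * b * np.log(m[(a, b)]) for a in (-1, 1) for b in (-1, 1))

rng = np.random.default_rng(0)
n = 6
edges = [(0, 1), (1, 2), (1, 3), (3, 4), (3, 5)]  # hypothetical tree, no loops
h = rng.normal(0.0, 0.5, n)                       # hypothetical external fields
J_true = {e: rng.normal(0.0, 1.0) for e in edges}  # hypothetical couplings

states, p = exact_distribution(h, J_true, n)
J_rec = {e: coupling_from_pair_marginal(pair_marginal(states, p, *e)) for e in edges}

for e in edges:
    print(e, round(J_true[e], 6), round(J_rec[e], 6))
```

Each coupling is recovered from a single four-entry marginal, with no reference to the rest of the system, which is the sense in which the inverse problem is local. With marginals estimated from finite samples instead of exact enumeration, the same formula would give noisy estimates.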


Notes

Periodic boundary conditions enforce the presence of a single loop of length N, so that the model is not exactly a tree. Nevertheless, for N large enough and for g sufficiently far from the critical points of the model, if any, the presence of such a loop can be neglected.


Acknowledgements

I acknowledge M. Marsili, G. Gori and S. Cocco for very useful discussions.


Appendix: Factorization Property for the One-Dimensional Chain


I show in the following that, for a one-dimensional periodic chain defined as in (22), the factorization property

\[ p(s) = \prod_{n=0}^{N/\rho-1} \frac{p^{\Gamma_n}(s^{\Gamma_n})}{p^{\gamma_n}(s^{\gamma_n})} \]

holds, where \(\Gamma_n = \{n\rho+1,\dots,n\rho+R\}\) and \(\gamma_n = \{(n+1)\rho+1,\dots,n\rho+R\}\). To obtain this result, one can consider the auxiliary two-dimensional model defined by the log-probability

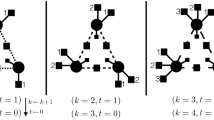

in which the configuration space contains the original degrees of freedom \(s_{i}^{n} \in\{ -1,1\}\) (with \(n=0,\dots,N/\rho-1\) and \(i=1+n\rho,\dots,R+n\rho\)) and the auxiliary ones \(t_{i}^{n} \in\{ -1,1\}\) (with \(n=0,\dots,N/\rho-1\) and \(i=1+(n+1)\rho,\dots,R+n\rho\)). The relation between the original model and the auxiliary one is sketched in Fig. 6. In particular, the coupling \(\lambda\) controls the strength of the bonds in the auxiliary dimension (labeled by \(n\)), so that the limit \(\lambda\to\infty\) describes the original chain with the obvious identification \(s^{n}_{i} \to s_{i}\) and \(t^{n}_{i} \to s_{i}\). By defining the row variables \(\underline{s}^{n} = \{ s_{i}^{n}\}_{i=1+n\rho }^{i=R+n\rho}\) and \(\underline{t}^{n} = \{ t_{i}^{n}\}_{i=1+(n+1)\rho }^{i=R+n\rho}\), the log-probability for the two-dimensional model can be written as

Hence the distribution over the degrees of freedom \(\underline{s}^{n}\) and \(\underline{t}^{n}\) defines a tree, because only successive rows of variables interact (see Notes). The marginals associated with the clusters \(\Gamma_n\) and \(\gamma_n\) can be used to express the probability \(p^{\lambda}(\underline{s},\underline{t})\) as

where, for the two-dimensional model, \(\Gamma_n\) and \(\gamma_n\) are defined analogously. By taking the \(\lambda\to\infty\) limit, the identification

allows one to recover the factorization property (23).
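The kind of factorization discussed in this appendix can be checked numerically on a small example. The sketch below is not the construction used above: it assumes an open chain (no periodic boundary), for which the ratio of cluster marginals to overlap marginals reproduces the full distribution exactly by the standard junction-tree argument, whereas for the periodic chain the single loop makes the property hold only in the regime described in the Notes. Sites are indexed from zero, interactions of arbitrary order are modeled as random tables over each cluster, and the values of `N`, `rho` and `R` as well as all helper names are hypothetical choices.

```python
import itertools
import numpy as np

N, rho, R = 8, 2, 4  # hypothetical sizes: chain length, cluster shift, cluster width

# Clusters Gamma_n = {n*rho, ..., n*rho + R - 1} and their overlaps gamma_n (open chain).
clusters = [list(range(n * rho, n * rho + R)) for n in range((N - R) // rho + 1)]
separators = [sorted(set(a) & set(b)) for a, b in zip(clusters, clusters[1:])]

rng = np.random.default_rng(1)
# All 2^N configurations; 0/1 encode the two spin values.
states = np.array(list(itertools.product([0, 1], repeat=N)))

def index(sub):
    """Integer label of each full configuration restricted to the sites in `sub`."""
    return states[:, sub] @ (2 ** np.arange(len(sub)))

# Arbitrary-order interactions: one random log-weight per configuration of each cluster.
log_w = np.zeros(len(states))
for c in clusters:
    table = rng.normal(size=2 ** R)
    log_w += table[index(c)]
p = np.exp(log_w - log_w.max())
p /= p.sum()

def marginal(sub):
    """Marginal over the sites in `sub`, evaluated at every full configuration."""
    idx = index(sub)
    m = np.bincount(idx, weights=p, minlength=2 ** len(sub))
    return m[idx]

numerator = np.prod([marginal(c) for c in clusters], axis=0)
denominator = np.prod([marginal(s) for s in separators], axis=0)
p_fact = numerator / denominator

max_err = np.abs(p_fact - p).max()
print("clusters:", clusters)
print("separators:", separators)
print("max |p_fact - p| =", max_err)
```

Closing the chain into a ring would add one more cluster and overlap across the boundary, and the product would then be only approximate, with the error expected to shrink as N grows away from critical points.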

Cite this article

Mastromatteo, I. Beyond Inverse Ising Model: Structure of the Analytical Solution. J Stat Phys 150, 658–670 (2013). https://doi.org/10.1007/s10955-013-0707-y
