Abstract

In this paper we consider the numerical solution of fractional differential equations. In particular, we study a step-by-step procedure, defined over a graded mesh, which is based on a truncated expansion of the vector field along the orthonormal Jacobi polynomial basis. Under mild hypotheses, the proposed procedure is capable of getting spectral accuracy. A few numerical examples are reported to confirm the theoretical findings.

Similar content being viewed by others

1 Introduction

In recent years fractional differential equation modelling has become more and more frequent—see, for example, the two monographs [20, 37]. In fact the use of fractional models has quite a long history and early applications considered their behaviour in describing anomalous diffusion in a randomly hetergeneous porous medium [31] and in water resources modelling [6]. In mathematical biology, a number of researchers have considered fractional reaction diffusion equations—see, for example, Bueno-Orovio et al. [16] who have studied via a spectral approach the interfacial properties of the Allen-Cahn equation with a quartic double well potential, a model often used for alloy kinetics and the dynamics of phase boundaries. Henry, Langlands and Wearne [27] analysed Turing pattern formation in fractional activator-inhibitor systems. In a computational medicine setting, Henry and Langlands [26] developed fractional cable models for spiny neuronal dendrites. Orsingher and Behgin [36] have considered the dynamics of fractional chemical kinetics for unimolecular systems using time change. The authors in [17] have made a series of arguments based on Riesz potential theory and the wide range of heterogeneous cardiac tissues for the use of fractional models in cardiac electrophysiology, while Cusimano et al. [18] have explored the effects of fractional diffusion on the cardiac action potential shape and electrical propagation. In addition, Magin et al. [34], Hori et al. [28], Bueno-Orovio and Burrage [15] have considered the calibration of fractional cardiac models through a fractional Bloch–Torrey equation.

Due to these application advances there is clearly a need to develop advanced numerical methods for fractional differential equations. In a purely time fractional setting the standard approach has been to use a first order approximation to the Caputo derivative. Lubich [32] has extended this and developed higher order approximations based on an underlying linear multistep method that are convergent of the order of this multistep method.

There are two important issues when designing effective numerical methods for Fractional Differential equations: memory and non-locality. In the case of memory, in principle one needs to evaluate the numerical approximations all the way back to the initial time point at each time step and this becomes very computationally intensive over long time intervals. Techniques such as fixed time windowing have been proposed [37] and more recently a number of approaches have been considered to compute the discrete convolutions to approximate the fractional operator [39, 44]. Zeng et al. [43] developed fast memory-saving time stepping methods in which the fractional operator is decoupled into a local part with fixed memory length and the history part is computed by Laguerre–Gauss quadrature, whose error is independent of the stepsize.

In the second situation the solutions to FDEs are often non-smooth and may have a strong singularity at \(t = 0\). In these cases the vector field may inherit this singularity behavior and so a constant stepsize would be very inefficient. Thus graded meshes have been proposed by a number of authors [30, 40, 42]. Lubich [33] on the other hand deals with singularities by introducing certain correction terms. Other approaches have been considered such as Adams methods [21], trapezoidal methods [23] and Bernstein splines [38] while Garrappa [24] has given a survey and software tutorial on numerical methods for FDEs.

In this paper, we consider a major improvement of the recent solution approach described in [2, 9], based on previous work on Hamiltonian Boundary value Methods (HBVMs) [7, 8, 11, 14] (also used as spectral methods in time [3, 5, 12, 13]), for solving fractional initial value problems in the form:

where, for sake of brevity, we omit t as a formal argument, at the r.h.s. For the same reason, hereafter the dimension m of the state vector will be also omitted, when unnecessary.

Here, for \(\alpha \in (0,1]\), \(y^{(\alpha )}(t) \equiv D^\alpha y(t)\) is the Caputo fractional derivative:

The Riemann–Liouville integral associated to (2) is given by:

It is known that [37]:

Consequently, the solution of (1) is formally given by:

We shall here generalize the approach given in [2], by defining a step-by-step procedure, whereas the procedure given in [2] was of a global nature. This strategy, when combined with a graded mesh selection, enables better handling of singularities in the derivative of the solution to (1) at the origin, which occur, for example, when f is a suitably regular function. Additionally, our approach partially mitigates issues stemming from the non-local nature of the problem. Indeed, at a given time-step of the integration procedure, it requires the solution of a differential problem within the current time interval along with a finite sequence of pure quadrature problems that account for the contribution brought by the history term.

With this premise, the structure of the paper is as follows: in Sect. 2 we recall some basic facts about Jacobi polynomials, for later use; in Sect. 3 we describe a piecewise quasi-polynomial approximation to the solution of (1); in Sect. 4 the analysis of the new method is carried out; in Sect 5 we report a few numerical tests for assessing the theoretical achievements; at last, in Sect. 6 a few concluding remarks are given.

2 Orthonormal Jacobi Polynomials

Jacobi polynomials form an orthogonal set of polynomials w.r.t. a given weighting function. In more detail, for \(r,\nu >-1\):

where as is usual, \(\varPi _i\) is the vector space of polynomials of degree at most i and \(\delta _{ij}\) is the Kronecker symbol. Consequently, the shifted and scaled Jacobi polynomials

are orthonormal w.r.t.

In particular, hereafter we shall consider the polynomials Footnote 1

with \(\alpha \in (0,1]\) the same parameter in (1), such that:

where we have slightly changed the weighting function, in order that it has a unit integral:

We refer to Gautschi [25] and the accompanying software, for their computation, and for computing the nodes and weights of the Gauss–Jacobi quadrature of order 2k.

Remark 1

As is clear, when in (6)–(8) \(\alpha =1\), we obtain the scaled and shifted Legendre polynomials, orthonormal in [0, 1].

As is well known, the polynomials (6) form an orthonormal basis for the Hilbert space \(L_2[0,1]\), equipped with the scalar product

and the associated norm

Consequently, from the Parseval identity, it follows that any square summable function can be expanded along such a basis, and the corresponding expansion is convergent to the function itself.

That said, with reference to the vector field in (1), let us consider the interval [0, h], and the expansion

with the Fourier coefficients given by:

Consequently, on the interval [0, h], (1) can be rewritten as

and, by virtue of (3)–(4), one obtains:

In particular, due to (7) and (8), one has:

Remark 2

By considering that \(P_0(c)\equiv 1\), from (12) one derives that

Consequently, because of (3), (15) becomes

i.e., (5) with \(t=h\). This clearly explains the use of the Jacobi basis for the expansion (11).

Further, we observe that, when \(\alpha =1\), one retrieves the approach described in [11] for ODE-IVPs.

We also need the following preliminary results.

Lemma 1

Let \(G:[0,h]\rightarrow V\), with V a vector space, admit a Taylor expansion at \(t=0\). Then,

Proof

By the hypothesis and (7), one has:

\(\square \)

Remark 3

Concerning the above result, we observe that, by considering (9)–(10), from the Cauchy–Schwarz theorem one derives that

From Lemma 1, one has the following:

Corollary 1

Assume that the r.h.s. of problem (1) admits a Taylor expansion at \(t=0\), then the coefficients defined at (12) satisfy:

The result of the previous lemma can be weakened as follows (the proof is similar and, therefore, omitted).

Lemma 2

Let \(G:[0,h]\rightarrow V\), with V a vector space, admit a Taylor polynomial expansion of degree k with remainder at \(t=0\). Then,

In such a case, the result of Corollary 1 is weakened accordingly.

Corollary 2

Assume that the r.h.s. of problem (1) admits a Taylor polynomial expansion of degree k with remainder at \(t=0\). Then, the coefficients defined at (12) satisfy:

However, in general, at \(t=0\) the solution may be not regular, since the derivative may be singular (this is, indeed, the case, when f is a regular function). In such a case, we shall resort to the following result.

Lemma 3

Assume that the r.h.s. in (1) is continuous for all \(t\in [0,h]\). Then, for a convenient \(\xi _t\in (0,t)^m\), one hasFootnote 2:

Proof

In fact, from (4), and by using the weighted mean-value theorem for integrals, one has:

\(\square \)

As a consequence, we derive the following weaker results concerning the Fourier coefficients (12).

Corollary 3

In the hypotheses of Lemma 3, and assuming f is continuously differentiable in a neighborhood of \(y_0\), one has:

Proof

In fact, from Lemma 3, one has:

From this relation, and from (12), the statement then follows. \(\square \)

For later use, we also need to discuss the quadrature error in approximating the first s Fourier coefficients (12) by means of the Gauss–Jacobi formula of order 2k, for a convenient \(k\ge s\), whose abscissae are the zeros of \(P_k\), as defined in (7), and with weights:

That is,

Concerning the quadrature errors

the following result holds true.

Theorem 1

Assume the function \(G_{j,h}(c):=P_j(c)f(y(ch))\) be of class \(C^{(2k)}[0,h]\). Then, with reference to (18), one has, for a suitable \(\xi =(0,1)^m:\)

with the constants \(K_j\) independent of both \(G_{j,h}\) and h.

Proof

The statement follows by considering that the quadrature is exact for polynomials in \(\varPi _{2k-1}\). \(\square \)

However, as recalled above, when f is a smooth function, the derivative of the solution of problem (1) may have a singularity at \(t=0\). In such a case, estimates can be also derived (see, e.g., [19, 41] and [35, Theorem 5.1.8]). However, for our purposes, because of (7), for \(j=0,\dots ,s-1\), from Corollary 3 we can easily derive the following one, assuming that \(k\ge s\) and (13) hold true:

Consequently,

In order to derive a polynomial approximation to (13), it is enough to truncate the infinite series in (11) to a finite sum, thus obtaining:

with \(\gamma _j(\sigma )\) formally given by (12) upon replacing y by \(\sigma \). In so doing, (14) and (15) respectively become:

and

It can be shown that Corollary 1, Corollary 2, Lemma 3, and Corollary 3 continue formally to hold for \(\sigma \). Further, by considering the approximation of the Fourier coefficients obtained by using the Gauss–Jacobi quadrature of order 2k,

the result of Theorem 1 continues to hold. Moreover, we shall assume that the expansion

holds true, similarly as (11), from which also (20) follows for the quadrature error \(\varSigma _j(\sigma ,h)\), when \(\sigma \) has a singular derivative at 0. In the next sections, we shall better detail, generalize, and analyze this approach.

3 Piecewise Quasi-Polynomial Approximation

To begin with, in order to obtain a piecewise quasi-polynomial approximation to the solution of (1), we consider a partition of the integration interval in the form:

where

Then, according to (21)–(23), on the first subinterval \([0,h_1]\) we can derive a polynomial approximation of degree \(s-1\) to (11), thus getting the fractional initial value problem

where \(\gamma _j(\sigma _1)\) is formally given by (12), upon replacing y by \(\sigma _1\) at the right-hand side:

The solution of (28) is a quasi-polynomial of degree \(s-1+\alpha \), formally given by:

However, in order to actually compute the Fourier coefficients \(\{\gamma _j(\sigma _1)\}\), one needs to approximate them by using the Gauss–Jacobi quadrature (16)Footnote 3:

In so doing, (30) now formally becomes

Moreover, one solves the system of equations, having (block) dimension s independently of k:

This kind of procedure is typical of HBVM(k, s) methods, in the case of ODE-IVPs [7]: the main difference, here, derives from the non-locality of the operator. As is clear [compare with (23)], the approximation at \(t=h_1\) will be now given by: Footnote 4

Remark 4

For an efficient and stable evaluation of the integrals

we refer to the procedure described in [4].

3.1 The General Procedure

We now generalize the previous procedure for the subsequent integration steps. For later use, we shall denote:

the restriction of the solution on the interval \([t_{n-1},t_n]\). Similarly, we shall denote by \(\sigma (t)\approx y(t)\) the piecewise quasi-polynomial approximation such that:

Then, by using an induction argument, let us suppose one knows the quasi-polynomial approximations

where \(\phi ^\alpha _{i-1}(c,\sigma )\) denotes a history term, to be defined later, such that:

-

in the first subinterval, according to (31), \(\phi _0^\alpha (c,\sigma )\equiv y_0\), \(c\in [0,1]\);

-

for \(i>1\), \(\phi _{i-1}^\alpha (c,\sigma )\) only depends on \(\sigma _1,\dots ,\sigma _{i-1}\).

The corresponding approximations at the grid-points are given by

and assume we want to compute

Hereafter, we shall assume that the time-steps in (26) define a graded mesh. In more detail, for a suitable \(r>1\):

Remark 5

As is clear, in the limit case where \(r=1\), (40) reduces to a uniform mesh with a constant time-step \(h=T/N\).

In order to derive the approximation (39), we start computing the solution of the problem in the subinterval \([t_n,t_{n+1}]\). Then, for \(t\equiv t_n+ch_{n+1}\), \(c\in [0,1]\), one has:

having set the history term

which is a known quantity, since it only depends on \(y_1,\dots ,y_n\) [see (35)]. Consequently, we have obtained

which reduces to (5), when \(n=0\) and \(t\in [0,h]\). Further, by considering the expansion

with the Fourier coefficients given by

one obtains that (42) can be rewritten as

with the value at \(t=h\) given by:

The corresponding approximation is obtained by truncating the series in (42) after s terms, and approximating the corresponding Fourier coefficients via the Gauss–Jacobi quadrature of order 2k,

and

with \(\phi _n^\alpha (c,\sigma )\) an approximation of \(G_n^\alpha (c,y)\) in (41), defined as followsFootnote 5:

having set

which, as we shall see in Sect. 3.3, can be accurately and efficiently computed.

As is clear, in (49) we have used the approximation

with the error term given by [see (17)–(18) and (25)]:

Because of the results of Sect. 2, we shall hereafter assume that

Consequently, considering that \(k\ge s\), \(\alpha \in (0,1)\), and \(j=0,\dots ,s-1\), one deduces:

In so doing, see (47), the Fourier coefficients satisfy the system of equations:

having (block)-size s, independently of the considered value of k.

Clearly,

with \(\gamma _j(\sigma _{n+1})\) formally defined as in (44), upon replacing \(y_{n+1}\) with \(\sigma _{n+1}\), and

the corresponding quadrature errors.

3.2 Solving the Discrete Problems

The discrete problem (55), to be solved at each integration step, can be better cast in vector form by introducing the matrices

and the (block) vectors:

In fact, in so doing (55) can be rewritten asFootnote 6:

This formulation is very similar to that used for HBVMs in the case \(\alpha =1\) [10]. The formulation (56) has a twofold use:

-

it shows that, assuming, for example, f Lipschitz continuous, then the solution exists and is unique, for all sufficiently small timesteps \(h_{n+1}\);

-

it induces a straightforward fixed-point iteration:

$$\begin{aligned} {{\varvec{\gamma }}}^{n+1,0}= & {} {{\varvec{0}}},\nonumber \\ {{\varvec{\gamma }}}^{n+1,\ell }= & {} \mathcal{P}_s^\top \varOmega \otimes I_m f\left( {{\varvec{\phi }}}_n^\alpha + h_{n+1}^\alpha \mathcal{I}_s^\alpha \otimes I_m{{\varvec{\gamma }}}^{n+1,\ell -1}\right) ,\quad \ell =1,2,\dots , \end{aligned}$$(57)which we shall use for the numerical tests.

Concerning the first point, the following result holds true.

Theorem 2

Assume f be Lipschitz with constant L in in the interval \([t_n,t_{n+1}]\). Then, the iteration (57) is convergent for all timesteps \(h_{n+1}\) such that

Proof

In fact, one has:

hence the iteration function defined at (57) is a contraction, when (58) holds true. \(\square \)

A simplified Newton-type iteration, akin to that defined in [10] for HBVMs, will be the subject of future investigations.

Remark 6

We observe that the discrete problem (56) can be cast in a Runge-Kutta type form. In fact, the vector

in argument to the function f at the r.h.s. in (56), can be regarded as the stage vector of a Runge–Kutta method, tailored for the problem at hand. Substituting (56) into (59), and using \(Y^{n+1}\) as argument of f, then gives

It is worth noticing that (60) has (block) dimension k, instead of s. However, by considering that usually \(k> s\) (see Sect. 5), it follows that solving (60) is less efficient than solving (56).

This fact is akin to what happens for a HBVM(k, s) method [7, 8, 11], which is the k-stage Runge–Kutta method obtained when \(\alpha =1\). In fact, for such a method, the discrete problem can also be cast in the form (56), then having (block) dimension s, independently of k (which is usually much larger than s).

From (59), one easily derives that the Butcher tableau of the Runge–Kutta type method is given by:

where \({{\varvec{b}}},{{\varvec{c}}}\in \mathbb {R}^k\) are the vectors with the Gauss–Jacobi weights and abscissae, respectively. As anticipated above, when \(\alpha =1\), (61) becomes the Butcher tableau of a HBVM(k, s) method [7, 8, 11].

Because of what exposed in the previous remark, we give the following definition.

Definition 1

The Runge–Kutta type method defined by (61), with \(\alpha \in (0,1)\), will be referred to as a Fractional HBVM(k, s) method or, in short, FHBVM(k, s).

3.3 Computing the Integrals \(J_j^\alpha (x)\)

From (55) and (49), it follows that one needs to compute the integrals

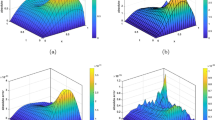

with \(\{c_1,\dots ,c_k\}\) the abscissae of the Gauss–Jacobi quadrature (16). As an example, in Fig. 1 we plot the Gauss–Jacobi abscissae for \(\alpha =0.5\) and \(k=5,10,15,20,25,30\).

Further, there is numerical evidence that a sufficiently high-order Gauss–Legendre formula can compute the integrals (50) up to round-off, when \(x\ge 1.5\). Namely,

and

However, this is no more the case, when computing:

Consequently, there is the problem of accurately and efficiently computing these latter integrals.

Remark 7

We observe that the evaluation of the integrals (63)–(64), \(j=0,\dots ,s-1\), via a 2p-order Gauss–Legendre formula is inexpensive. As matter of fact, only the values \(P_j(\xi _i)\) need to be computed, \(j=0,\dots ,s-1\), with

the Gauss–Legendre abscissae of the considered formula. Further, we observe that, with reference to (62), only the integrals

need to be actually computed: as matter of fact, the remaining integrals,

are inherited from the previous steps.

The starting point to derive an efficient algorithm for computing the integrals (65), is that the Jacobi polynomials (6) satisfy, as does any other family of orthogonal polynomials, a three-term recurrence:

with prescribed \(a_j,b_j,d_j\), \(j\ge 0\). In fact, by setting \(\phi (c)=c\), and using the scalar product (9), to enforce (7), for \(P_0,\dots ,P_{s-1}\), one obtains:

Consequently, by recalling the definition (50), from (67) one has:

and

From the last two formulae, one derives that Jjalfa(a,b,d,alfa,1+ci*r) computes all the integrals in (65) corresponding to the abscissa ci, with Jjalfa the Matlab\(^{\copyright }\) function listed in Table 1, and the vectors a,b,d containing the scalars (68). An implementation of the previous function, using the standard variable precision arithmetic (i.e., using vpa in Matlab\(^{\copyright }\)), allows handling values of s up to 20, at least.

4 Analysis of the Method

From (45) and (47), and with reference to (51)–(54), one derives:

Assuming f Lipschitz with constant L in a suitable neighborhood of the solution, and setting

one then obtains that, for \(h_{n+1}^\alpha \) sufficiently small, and a suitable constant \(K_1>0\):

with

Considering that

and [see (54)]

so that [see (40)], for a suitable constant \(K_2>0\),

one eventually obtains:

At last, by considering that, for \(n=1,\dots ,N-1\),

setting

and considering that \(e_1\le O(h_1^{2\alpha })\), from the Gronwall lemma (see, e.g., [29]) one eventually obtains, for a suitable \(K>0\):

Considering that [see (27) and (40)] \(N = \log _r\left( 1 + T(r-1)/h_1\right) \), the error is optimal when \(h_1^{2\alpha }\sim h_N^{s+\alpha }\).

Remark 8

In the case where the vector field of problem (1) is suitably smooth at \(t=0\), so that a constant timestep \(h=T/N\) can be used, the estimate

can be derived for the error, by using similar arguments as above.

Remark 9

From (72) [or (73)], one deduces that, for a given prescribed accuracy, it is possible to decrease the value of the number N of the time steps, by increasing the degree s of the polynomial approximating the vector field. By considering that, as anticipated in the introduction, one main difficulty in solving the problem (1) stems from the evaluation of the memory terms, reducing N has a welcome impact on the overall complexity of the method.

5 Numerical Tests

We here report a few numerical tests to illustrate the theoretical findings. For all tests, when not otherwise specified, we use \(k=30\) for the Gauss–Jacobi quadrature (16), and \(p=30\) for the Gauss–Legendre quadrature formula (66). Also, the following values of s will be considered:

All the numerical tests have been performed in Matlab\(^{\copyright }\) (Rel. 2023b) on a Silicon M2 laptop with 16GB of shared memory. As anticipated, we use the fixed-point iteration (57) to solve the generated discrete problems (56). The iteration is carried out until full machine accuracy is gained.

5.1 Example 1

The first problem, taken from Garrappa [24], is given by:

whose solution is given by the following Mittag–Leffler functionFootnote 7:

In Table 2, we list the obtained results, in terms of maximum error, when using different values of s and \(h_1\), with \(h_N\) fixed, in order to ascertain the contribution of the first term of the error in the bound (72). In order to satisfy the requirements:

for all combinations of \(h_1\) and N, we use the value \(r=1.01\) in (40), and the number of timesteps N is chosen in order that \(t_N\) is the closest point to the end of the integration interval. The ***, in the line corresponding to \(s=1\), means that the solution is not properly evaluated: this is due to the failure of the fixed-point iteration (57) in the last integration steps (the same notation will be used in the subsequent tables). As is expected from (72), the error decreases as s increases and the initial timestep \(h_1\) decreases. Further, having a large value of s is not effective, if \(h_1\) is not suitably small, and vice versa (again from the bound (72)). Remarkably, by choosing s large enough and \(h_1\) suitably small, full machine accuracy is gained (cf. the last 4 entries in the last column of the table, having the same error).

In Table 3 we list the results obtained by using \(k=s\), which is the minimum value allowed for k. In such a case, the accuracy is generally slightly worse, due to the fact that the quadrature error is of the same order as the truncation error. It is, however, enough choosing k only slightly larger than s, to achieve a comparable accuracy, as is shown in Table 4 for \(k=s+5\). Nevertheless, it must be stressed that choosing larger values of k is not an issue, since the discrete problem (56), to be solved at each integration step, has (block) size s, independently of k.

5.2 Example 2

The next problem that we consider is from Diethelm et al. [21] (see also [24]):

whose solution is

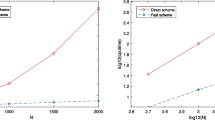

According to Garrappa [24], “this problem is surely of interest because, unlike several other problems often proposed in the literature, it does not present an artificial smooth solution, which is indeed not realistic in most of the fractional-order applications.” Despite this, a constant timestep \(h=1/N\) turns out to be appropriate since, unlike the solution (76), the vector field (75) is very smooth at the origin, as one may see in Fig. 2. In Table 5 we list the maximum error by using different values of N: as is clear, by using \(s\ge 8\), only 32 steps are needed to gain full machine accuracy for the computed solution. Further, in Fig. 3 is the work-precision diagram (i.e., execution time Footnote 8 vs. computed accuracy) for the following methods, used on a uniform grid with \(N+1\) points, with \(N=2^\nu \), as below specified:

-

FHBVM(30,1), \(\nu = 5,6,\dots ,12\);

-

FHBVM(30,2), \(\nu = 4,5,\dots ,12\);

-

FHBVM(30,5), \(\nu = 2,3,\dots ,8\);

-

HFBVM(30,10), \(\nu = 2,3,4,5\);

-

FHBVM(30,20), \(\nu = 2,3\);

-

the BDF-2 method implemented in the Matlab code flmm2 [23],Footnote 9\(\nu = 6,\dots ,20\).

The rationale for this choice is to use FHBVMs both as spectral methods and not, with a further comparison with a state-of-art numerical code. In the plots, the computed accuracy (ca) is measured as follows: if \(e_i\) is the absolute error at the i-th grid point, then

From the obtained results, one concludes that:

-

low-order FHBVMs are less competitive than high-order ones;

-

high-order FHBVMs require less time steps and are, therefore, able to obtain a fully accurate solution;

-

when used as spectrally accurate methods in time, FHBVMs are very effective, and competitive with existing methods.

solution (continuous line) and vector field (dashed line) for problem (75)

Work-precision diagram for Problem (75)

5.3 Example 3

We now consider the following problem taken from Satmari [38],

whose solution is \(y(t) = t^{2/3}+1\). We solve it by using \(h_1=10^{-11}\) and \(r=1.2\). In such a case, \(N=130\) timesteps are needed to cover approximately the integration interval, with \(h_N\le 0.2\). The obtained results, by considering increasing values of s, are listed in Table 6. Also in this case, we obtain full accuracy starting from \(s=8\).

Remark 10

Concerning the choice of the parameters \(h_1\), r, and N for the graded mesh, one has to consider that, in principle [see (72)]:

It is then important to properly choose \(h_1\), and arrange the other parameters accordingly. In such a case, one may reduce the number N of time steps by increasing s, as observed in Remark 9. The optimal choice of such parameters, however, deserves to be further investigated.

5.4 Example 4

Next, we consider the following problem, again taken from [38],

whose solution is \(y(t) = t^{4/3}\). We solve this problem by using a constant timestep \(h=1/N\): in fact, the vector field can be seen to be a polynomial of degree 1. The obtained results are listed in Table 7: an order 1 convergence can be observed for \(s=1\) [which is consistent with (73)], whereas full machine accuracy is obtained for \(s\ge 2\), due to the fact that, as anticipated above, the vector field of problem (78) is a polynomial of degree 1 in t and, consequently, (13) and (21) coincide, for all \(s\ge 2\).

5.5 Example 5

Finally, we reformulate the two problems (77) and (78) as a system of two equations, having the same solutions as above, as follows:

We solve Problem (79) by using the same parameters used for Problem (77): \(h_1=10^{-11}\), \(r=1.2\), and 130 timesteps. The obtained results are again listed in Table 6: it turns out that they are similar to those obtained for Problem (77) and, also in this case, we obtain full accuracy starting from \(s=8\).

6 Conclusions

In this paper we have devised a novel step-by-step procedure for solving fractional differential equations. The procedure, which generalizes that given in [2], relies on the expansion of the vector field along a suitable orthonormal basis, here chosen as the shifted and orthonormal Jacobi polynomial basis. The analysis of the method has been given, along with its implementation details. These latter details show that the method can be very efficiently implemented. A few numerical tests confirm the theoretical findings.

It is worth mentioning that systems with FDEs of different orders can be also solved by slightly adapting the previous framework: as matter of fact, it suffices using different Jacobi polynomials, corresponding to the different orders of the FDEs. This, in turn, allows easily managing fractional differential equations of order \(\alpha >1\), by casting them as a system of \(\lfloor \alpha \rfloor \) ODEs, coupled with a fractional equation of order \(\beta :=\,\alpha -\lfloor \alpha \rfloor \in (0,1)\).

As anticipated in Sect. 3.2, a future direction of investigation will concern the efficient numerical solution of the generated discrete problems, which is crucial when using larger stepsizes. Also the optimal choice of the parameters for the methods will be further investigated, in particular those for generating the graded mesh, as well as the possibility of adaptively defining it. Further, the parallel implementation of the methods could be also considered, following an approach similar to [1].

Data Availability

All data reported in the manuscript have been obtained by a Matlab\(^{\copyright }\) implementation of the methods presented. They can be made available on request.

Notes

Hereafter, for sake of brevity, we shall omit the upper indices for such polynomials.

Hereafter, \(y^{(\alpha )}(\xi _t)\) means that each component of the function is evaluated at the corresponding entry of the argument.

Hereafter, as a notational convention, \(\gamma _j^i:=\hat{\gamma }_j(\sigma _i)\), \(i=1,\dots ,N\).

Hereafter, we shall in general denote by \(\bar{y}_n\) the approximation to \(y(t_n)\), since \(y_n(t)\) will be later used to denote the restriction of y(t) to the subinterval \([t_{n-1},t_n]\), \(n=1,\dots ,N\).

As is usual, the function f, here evaluated in a (block) vector of dimension k, denotes the (block) vector made up by f evaluated in each (block) entry of the input argument.

We refer to [22] and the accompanying software, for its efficient Matlab\(^{\copyright }\) implementation.

The execution time is measured in seconds.

The code is called with parameters tol=1e−15 and itmax=1000.

References

Amodio, P., Brugnano, L.: Parallel implementation of block boundary value methods for ODEs. J. Comput. Appl. Math. 78, 197–211 (1997). https://doi.org/10.1016/S0377-0427(96)00112-4

Amodio, P., Brugnano, L., Iavernaro, F.: Spectrally accurate solutions of nonlinear fractional initial value problems. AIP Confer. Proc. 2116, 140005 (2019). https://doi.org/10.1063/1.5114132

Amodio, P., Brugnano, L., Iavernaro, F.: Analysis of Spectral Hamiltonian Boundary Value Methods (SHBVMs) for the numerical solution of ODE problems. Numer. Algorithms 83, 1489–1508 (2020). https://doi.org/10.1007/s11075-019-00733-7

Amodio, P., Brugnano, L., Iavernaro, F.: A note on a stable algorithm for computing the fractional integrals of orthogonal polynomials. Appl. Math. Lett. 134, 108338 (2022). https://doi.org/10.1016/j.aml.2022.108338

Amodio, P., Brugnano, L., Iavernaro, F.: (Spectral) Chebyshev collocation methods for solving differential equations. Numer. Algoritms 93, 1613–1638 (2023). https://doi.org/10.1007/s11075-022-01482-w

Benson, D.A., Wheatcraft, S.W., Meerschaert, M.M.: Applications of a fractional advection-dispersion equation. Water Resour. Res. 36(6), 1403–1412 (2000)

Brugnano, L., Iavernaro, F.: Line Integral Methods for Conservative Problems. Chapman et Hall/CRC, Boca Raton (2016)

Brugnano, L., Iavernaro, F.: Line integral solution of differential problems. Axioms 7(2), 36 (2018). https://doi.org/10.3390/axioms7020036

Brugnano, L., Iavernaro, F.: A general framework for solving differential equations. Ann. Univer. Ferrara Sez. VII Sci. Mat. 68, 243–258 (2022). https://doi.org/10.1007/s11565-022-00409-6

Brugnano, L., Iavernaro, F., Trigiante, D.: A note on the efficient implementation of Hamiltonian BVMs. J. Comput. Appl. Math. 236, 375–383 (2011). https://doi.org/10.1016/j.cam.2011.07.022

Brugnano, L., Iavernaro, F., Trigiante, D.: A simple framework for the derivation and analysis of effective one-step methods for ODEs. Appl. Math. Comput. 218, 8475–8485 (2012). https://doi.org/10.1016/j.amc.2012.01.074

Brugnano, L., Montijano, J.I., Iavernaro, F., Randéz, L.: Spectrally accurate space-time solution of Hamiltonian PDEs. Numer. Algorithms 81, 1183–1202 (2019). https://doi.org/10.1007/s11075-018-0586-z

Brugnano, L., Montijano, J.I., Iavernaro, F., Randéz, L.: On the effectiveness of spectral methods for the numerical solution of multi-frequency highly-oscillatory Hamiltonian problems. Numer. Algorithms 81, 345–376 (2019). https://doi.org/10.1007/s11075-018-0552-9

Brugnano, L., Frasca-Caccia, G., Iavernaro, F., Vespri, V.: A new framework for polynomial approximation to differential equations. Adv. Comput. Math. 48, 76 (2022). https://doi.org/10.1007/s10444-022-09992-w

Bueno-Orovio, A., Burrage, K.: Exact solutions to the fractional time-space Bloch–Torrey equation for magnetic resonance imaging. Commun. Nonlinear Sci. Numer. Simul. 52, 91–109 (2017)

Bueno-Orovio, A., Kay, D., Burrage, K.: Fourier-spectral methods for fractional in space reaction diffusion equations. BIT 54, 937–954 (2014)

Bueno-Orovio, A., Kay, D., Grau, V., Rodriguez, B., Burrage, K.: Fractional diffusion models of cardiac electrical propagation: role of structural heterogeneity in dispersion of repolarization. J. R. Soc. Interface 11(97), 20140352 (2014)

Cusimano, N., Bueno-Orovio, A., Turner, I., Burrage, K.: On the order of the fractional Laplacian in determining the spatio-temporal evolution of a space fractional model of cardiac electrophysiology. PLoS One 10(12), e0143938 (2015)

De Vore, R., Scott, L.R.: Error bounds for Gaussian quadrature and weighted-\(L^1\) polynomial approximation. SIAM J. Numer. Anal. 21(2), 400–412 (1984). https://doi.org/10.1137/0721030

Diethelm, K.: The Analysis of Fractional Differential Equations. An Application-oriented Exposition using Differential Operators of Caputo Type. Lecture Notes in Math. Springer, Berlin (2010)

Diethelm, K., Ford, N.J., Freed, A.D.: Detailed error analysis for a fractional Adams method. Numer. Algorithms 36, 31–52 (2004). https://doi.org/10.1023/B:NUMA.0000027736.85078.be

Garrappa, R.: Numerical evaluation of two and three parameter Mittag–Leffler functions. SIAM J. Numer. Anal. 53(3), 1350–1369 (2015). https://doi.org/10.1137/140971191

Garrappa, R.: Trapezoidal methods for fractional differential equations: theoretical and computational aspects. Math. Comput. Simul. 110, 96–112 (2015). https://doi.org/10.1016/j.matcom.2013.09.012

Garrappa, R.: Numerical solution of fractional differential equations: a survey and a software tutorial. Mathematics 6(2), 16 (2018). https://doi.org/10.3390/math6020016

Gautschi, W.: Orthogonal Polynomials Computation and Approximation. Oxford University Press (2004)

Henry, B.I., Langlands, T.A.M.: Fractional cable models for spiny neuronal dendrites. Phys. Rev. Letts. 100(12), 128103 (2008)

Henry, B.I., Langlands, T., Wearne, S.: Turing pattern formation in fractional activator-inhibitor systems. Phys. Rev. E 72(2), 026101 (2005)

Hori, M., Fukunaga, I., Masutani, V., Taoka, T., Kamagata, K., Suzuki, Y., et al.: Visualising non Gaussian diffusion—clinical application of q-space imaging and diffusional kurtosis imaging of the brain, and spine. Magn. Reson. Med. Sc. 11, 221–233 (2012)

Lakshmikantham, V., Trigiante, D.: Theory of Difference Equations. Academic Press Inc, Boston (1988)

Li, C., Yi, Q., Chen, A.: Finite difference methods with non-uniform meshes for nonlinear fractional differential equations. J. Comput. Phys. 316, 614–631 (2016)

Lindenberg, K., Yuste, S.B.: Properties of the reaction front in a reaction-subdiffusion process. Noise Complex Syst. Stoch. Dyn. II(5471), 20–28 (2004)

Lubich, Ch.: Fractional linear multistep methods for Abel–Volterra integral equations of the second kind. Math. Comput. 45(172), 463–469 (1985). https://doi.org/10.1090/S0025-5718-1985-0804935-7

Lubich, Ch.: Discretized fractional calculus. SIAM J. Math. Anal. 17, 704–719 (1986)

Magin, R., Feng, X., Baleanu, D.: Solving the fractional order Bloch equation. Concepts Magn. Res., Part A 34A, 16–23 (2009)

Mastroianni, G., Milovanovic, G.: Interpolation processes. In: Basic Theory and Applications. Springer Monogr. Math. Springer, Berlin (2008)

Orsingher, E., Beghin, L.: Fractional diffusion equations and processes with randomly varying time. Ann. Probab. 37(1), 206–249 (2009)

Podlubny, I.: Fractional Differential Equations. An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of their Solution and Some of their Applications. Academic Press, Inc., San Diego (1999)

Satmari, Z.: Iterative Bernstein splines technique applied to fractional order differential equations. Math. Found. Comput. 6, 41–53 (2023). https://doi.org/10.3934/mfc.2021039

Schädle, A., Lopez-Fernandez, M., Lubich, Ch.: Fast and oblivious convolution quadrature. SIAM J. Sci. Comput. 28, 421–438 (2006)

Stynes, M., O’Riordan, E., Gracia, J.L.: Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM J. Numer. Anal. 55, 1057–1079 (2017)

Themistoclakis, W.: Some error bounds for Gauss–Jacobi quadrature rules. Appl. Numer. Math. 116, 286–293 (2017). https://doi.org/10.1016/j.apnum.2017.02.009

Zeng, F., Zhang, Z., Karniadakis, G.E.: Second order numerical methods for multi-term fractional differential equations. Comput. Methods Appl. Mech. Eng. 327, 478–502 (2017)

Zeng, F., Turner, I., Burrage, K.: A stable fast time-stepping method for fractional integral and derivative operators. J. Sci. Comput. 77, 283–307 (2018)

Zeng, F., Turner, I., Burrage, K., Karniadakis, G.: A new class of semi-implicit methods with linear complexity for nonlinear fractional differential equations. SIAM J. Sci. Comput. 40(5), A2986–A3011 (2018). https://doi.org/10.1137/18M1168169

Acknowledgements

The authors wish to thank two anonymous referees for their useful comments. L. Brugnano and F. Iavernaro are members of GNCS-INdAM.

Funding

Open access funding provided by Università degli Studi di Firenze within the CRUI-CARE Agreement. No funding was received for conducting this study.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no Conflict of interest, nor Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Brugnano, L., Burrage, K., Burrage, P. et al. A Spectrally Accurate Step-by-Step Method for the Numerical Solution of Fractional Differential Equations. J Sci Comput 99, 48 (2024). https://doi.org/10.1007/s10915-024-02517-1

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-024-02517-1

Keywords

- Fractional differential equations

- Fractional integrals

- Jacobi polynomials

- Hamiltonian Boundary Value Methods

- HBVMs

- FHBVMs