Abstract

An inverse problem framework for constructing reaction systems with prescribed properties is presented. Kinetic transformations are defined and analysed as a part of the framework, allowing an arbitrary polynomial ordinary differential equation to be mapped to the one that can be represented as a reaction network. The framework is used for construction of specific two- and three-dimensional bistable reaction systems undergoing a supercritical homoclinic bifurcation, and the topology of their phase spaces is discussed.

Similar content being viewed by others

1 Introduction

Chemical reaction networks under the mass action kinetics are relevant for both pure and applied mathematics. The time evolution of the concentrations of chemical species is described by kinetic equations which are a subset of first-order, autonomous, ordinary differential equations (ODEs) with polynomial right-hand sides (RHSs). On the one hand, the kinetic equations define a canonical form for a subset of ODEs, thus being important for pure mathematics [1, 2]. They can display not only the chemically regular phenomenon of having a globally stable fixed point, but also the chemically exotic phenomena (multistability, oscillations and chaos). It is then no surprise that chemical reaction networks can perform the same computations as other types of physical networks, such as electronic and neural networks [3]. On the other hand, reaction networks are a versatile modelling tool, decomposing processes from applications into a set of simpler elementary steps (reactions). The exotic phenomena in systems biology often execute specific biological functions, example being the correspondence between limit cycles and biological clocks [4, 5].

The construction of reaction networks displaying prescribed properties may be seen as an inverse problem in formal reaction kinetics [6], where, given a set of properties, a set of compatible reaction networks is searched for. Such constructions are useful in many application areas, including systems biology (as caricature models), synthetic biology (as blueprints), and numerical analysis (as test problems) [7–9]. In systems biology, kinetic ODEs often have higher nonlinearity degree and higher dimension, thus not being easily amenable to mathematical analysis. Having ODEs with lower nonlinearity degree and lower dimension allows for a more detailed mathematical analysis, and also adds to the set of test problems for numerical methods designed for more challenging real-world problems. In synthetic biology, such constructed systems may be used as a blueprint for engineering artificial networks [9, 10].

A crucial property of the kinetic equations is a lack of so-called cross-negative terms [11], corresponding to processes that involve consumption of a species when its concentration is zero. Such terms are not directly describable by reactions, and may lead to negative values of concentrations. The above mentioned issues with cross-negative terms, together with the requirement that the dependent variables are uniformly bounded in time, imply that not every nonnegative polynomial ODE system is kinetic, and, thus, further constrain the possible dynamics. A trivial example of an ODE with a cross-negative term is given by \({\mathrm {d}} x/{\mathrm {d}} t = - k\), for constant \(k > 0\), where the term \(-k\), although a polynomial of degree zero, nevertheless cannot be directly represented by a reaction, and results in \(x < 0\).

In two dimensions, where the phase plane diagram allows for an intuitive reasoning, the exotic dynamics of ODE systems reduces to cycles and multistability. While two-dimensional nonkinetic polynomial ODE systems exhibiting a variety of such dynamics can be easily found in the literature, the same is not true for the more constrained two-dimensional kinetic ODE systems [12] (see also Appendix 1). Motivated by this, this paper consists of two main results: firstly, building upon the framework from [6, 11], an inverse problem framework suitable for constructing the reaction systems is presented in Sect. 3, with the focus on the so-called kinetic transformations, allowing one to map a nonkinetic into a kinetic system. Secondly, in Sect. 4, the framework is used for construction of specific two- and three-dimensional bistable kinetic systems undergoing a global bifurcation known as a supercritical homoclinic bifurcation. The corresponding phase planes contain a stable limit cycle and a stable fixed point, with a parameter controlling the distance between them, and their topology is discussed. Definitions and basic results regarding reaction systems are presented in Sect. 2. A summary of the paper is presented in Sect. 5.

2 Notation and definitions

The notation and definitions in this paper are inspired by [11, 13, 14].

Definition 2.1

Let \({\mathbb {R}}\) be the space of real numbers, \({\mathbb {R}}_{\ge }\) the space of nonnegative real numbers, \({\mathbb {R}}_{>}\) the space of positive real numbers and \({\mathbb {N}} = \{0, 1, 2, 3, \ldots \}\) the set of natural numbers. Given a finite set \({\mathcal {S}}\), with cardinality \(|{\mathcal {S}}| = S\), the real space of formal sums \(c = \sum _{s \in {\mathcal {S}}} c_s s\) is denoted by \({\mathbb {R}}^{{\mathcal {S}}}\) if \(c_s \in {\mathbb {R}}\) for all \(s \in {\mathcal {S}}\). It is denoted by \({\mathbb {R}}_{\ge }^{{\mathcal {S}}}\) if \(c_s \in {\mathbb {R}}_{\ge }\) for all \(s \in {\mathcal {S}}\); by \({\mathbb {R}}_{>}^{{\mathcal {S}}}\) if \(c_s \in {\mathbb {R}}_{>}\) for all \(s \in {\mathcal {S}}\); and by \({\mathbb {N}}^{{\mathcal {S}}}\) if \(c_s \in {\mathbb {N}}\) for all \(s \in {\mathcal {S}}\); where the number \(c_s\) is called the s-component of c for \(s \in {\mathcal {S}}\). Support of \(c \in {\mathbb {R}}^{{\mathcal {S}}}\) is defined as \(\text {supp}(c) = \{s \in {\mathcal {S}}: c_s \ne 0\}\). Complement of a set \({\mathcal {M}} \subset {\mathcal {S}}\) is denoted by \({\mathcal {M}}^{\mathsf {c}}\), and given by \({\mathcal {M}}^{\mathsf {c}} = {\mathcal {S}} \setminus {\mathcal {M}}\).

The formal sum notation is introduced so that unnecessary ordering of elements of a set can be avoided, such as when general frameworks involving sets are described, and when objects under consideration are vector components with irrelevant ordering. The usual vector notation is used when objects under consideration are equations in matrix form, and is put into using starting with Eq. (9).

2.1 Reaction networks and reaction systems

Definition 2.2

A reaction network is a triple \(\{{\mathcal {S}}, {\mathcal {C}}, {\mathcal {R}}\}\), where

-

(i)

\({\mathcal {S}}\) is a finite set, with elements \(s \in {\mathcal {S}}\) called the species of the network.

-

(ii)

\({\mathcal {C}} \subset {\mathbb {N}}^{{\mathcal {S}}}\) is a finite set, with elements \(c \in {\mathcal {C}}\), called the complexes of the network, such that \(\bigcup _{c \in {\mathcal {C}}} \text {supp}(c) = {\mathcal {S}}\). Components of c are called the stoichiometric coefficients.

-

(iii)

\({\mathcal {R}} \subset {\mathcal {C}} \times {\mathcal {C}}\) is a binary relation with elements \(r = (c,c^{\prime })\), denoted \(r = c \rightarrow c^{\prime }\), with the following properties:

-

(a)

\(\forall c \in {\mathcal {C}}\) \((c \rightarrow c) \notin {\mathcal {R}}\);

-

(b)

\(\forall c \in {\mathcal {C}}\) \(\exists c^{\prime } \in {\mathcal {C}}\) such that \((c \rightarrow c^{\prime }) \in {\mathcal {R}}\) or \((c^{\prime } \rightarrow c) \in {\mathcal {R}}\).

Elements \(r = c \rightarrow c^{\prime }\) are called reactions of the network, and it is said that c reacts to \(c^{\prime }\), with c being called the reactant complex, and \(c^{\prime }\) the product complex. The order of reaction r is given by \(o_r = \sum _{s \in {\mathcal {S}}} c_s < \infty \) for \(r = c \rightarrow c^{\prime } \in {\mathcal {R}}\).

-

(a)

Note that as set \({\mathcal {R}}\) implies sets \({\mathcal {S}}\) and \({\mathcal {C}}\), reaction networks are denoted with \({\mathcal {R}}\), for brevity. Also, as it is unlikely that a reaction between more than three reactants occurs [11], in this paper we consider reactions with \(o_r \le 3\). To represent some of the non-chemical processes as quasireactions, the zero complex is introduced, denoted with \(\varnothing \), with the property that \(\text {supp}(\varnothing ) = \emptyset \), where \(\emptyset \) is the empty set.

Definition 2.3

Let \({\mathcal {R}}\) be a reaction network and let \(\kappa : {\mathbb {R}}_{\ge }^{{\mathcal {S}}} \rightarrow {\mathbb {R}}_{\ge }^{{\mathcal {R}}}\) be a continuous function which maps \(x \in {\mathbb {R}}_{\ge }^{{\mathcal {S}}}\) (called “species concentrations”) into \(\kappa (x) \in {\mathbb {R}}_{\ge }^{{\mathcal {R}}}\) (called “reaction rates”). Then \(\kappa \) is said to be a kinetics for \({\mathcal {R}}\) provided that, for all \(x \in {\mathbb {R}}_{\ge }^{{\mathcal {S}}}\) and for all \(r = (c \rightarrow c^{\prime }) \in {\mathcal {R}}\), positivity \(\kappa _{r}(x) > 0\) is satisfied if and only if \({\mathrm {supp}}(c) \subset {\mathrm {supp}}(x)\).

An interpretation of Definition 2.3 is that a reaction, to which a kinetics can be associated, can occur if and only if all the reactant species concentrations are nonzero.

Definition 2.4

A reaction network \({\mathcal {R}}\) augmented with a kinetics \(\kappa \) is called a reaction system, and is denoted \(\{{\mathcal {R}}, \kappa \}\).

Definition 2.5

Given a reaction system \(\{{\mathcal {R}}, \kappa \}\), the induced kinetic function, \({\mathcal {K}}(\cdot ; \, {\mathcal {R}}) : {\mathbb {R}}_{\ge }^{{\mathcal {S}}} \rightarrow {\mathbb {R}}^{{\mathcal {S}}}\), is given by \({\mathcal {K}}(x ; \, {\mathcal {R}}) = \sum _{r \in {\mathcal {R}}} \kappa _{r}(x) (c^{\prime } - c)\) where \(r = c \rightarrow c^{\prime }\). The induced system of kinetic equations, describing the time evolution of species concentrations \(x \in {\mathbb {R}}_{\ge }^{{\mathcal {S}}}\), takes the form of a system of autonomous first-order ordinary differential equations (ODEs), and is given by

Note that the kinetic function uniquely defines the system of kinetic equations, and vice-versa. In this paper, the species concentrations satisfying equation (1) are required to be finite, i.e. \(x_s < \infty \), for \(s \in {\mathcal {S}}\), and for \(t \ge 0\). Infinite-time blow-ups are allowed for initial conditions located on a finite number of \((S-z)\)-dimensional subspaces of \({\mathbb {R}}_{\ge }^{{\mathcal {S}}}\), \(z \ge 1\). Finite-time blow-ups [15], being chemically more unrealistic, are not allowed in this paper.

Definition 2.6

Kinetics \(\kappa \) is called the mass action kinetics if \(\kappa _{r}(x) = k_{r} x^{c}\), for \(r = (c \rightarrow c^{\prime }) \in {\mathcal {R}}\), where \(k_{r} > 0\) is the rate constant of reaction r, and \(x^{c} = \prod _{s \in S} x_s^{c_{s}}\), with \(0^0 = 1\). A reaction system with the mass action kinetics is denoted \(\{{\mathcal {R}}, k\}\), and the corresponding kinetic function is denoted \({\mathcal {K}}(x; \, k) \equiv {\mathcal {K}}(x; \, {\mathcal {R}}) = \sum _{r \in {\mathcal {R}}} k_{r} (c^{\prime } - c) x^{c}\), where \(k \in {\mathbb {R}}_{>}^{{\mathcal {R}}}\).

A review of the mass action kinetics can be found in [16]. In this paper, most of the results are stated with kinetics fixed to the mass action kinetics. Let us note that the results are also applicable to nonpolynomial ODEs that can be reduced to the polynomial ones [1].

Example 1

Consider the following reaction network (consisting of one reaction) under the mass action kinetics:

so that \({\mathcal {S}} = \{s_1,s_2\}\), \({\mathcal {C}} = \{ s_1 + s_2, 2 s_2\}\), \({\mathcal {R}} = \{ s_1 + s_2 \rightarrow 2 s_2 \}\) and \(k = \{k_{1}\}\). Concentration \(x \in {\mathbb {R}}_{\ge }^{{\mathcal {S}}}\) has two components. To simplify our notation, we write \(x_1 = x_{s_1}\), \(x_2 = x_{s_2}\), and \({\mathcal {K}}_{1}(x ; \, k) = {\mathcal {K}}_{s_1}(x ; \, {\mathcal {R}})\), \({\mathcal {K}}_{2}(x ; \, k) = {\mathcal {K}}_{s_2}(x ; \, {\mathcal {R}})\). Then the induced system of kinetic equations is given by

2.2 Kinetic and nonkinetic functions

In this subsection, nonkinetic functions are defined, and further notation for kinetic and nonkinetic functions taking the mass action form is presented.

Definition 2.7

Let \(f: {\mathbb {R}}^{{\mathcal {S}}}_{\ge } \rightarrow {\mathbb {R}}^{{\mathcal {S}}}\) be given by \(f_{s}(x) = \sum _{r \in {\mathcal {R}}} f_{s r}(x)\), where \(f_{s r}(x) \in {\mathbb {R}}\), for \(x \in {\mathbb {R}}^{{\mathcal {S}}}_{\ge }\), \(s \in {\mathcal {S}}\) and \(r \in {\mathcal {R}}\). If \(\exists s \in {\mathcal {S}}\), \(\exists r \in {\mathcal {R}}\) and \(\exists x \in {\mathbb {R}}^{{\mathcal {S}}}_{\ge }\) such that \(s \in {\mathrm {supp}}^{\mathsf {c}}(x)\) and \(f_{s r}(x) < 0\), then \(f_{s r}(x)\) is called a cross-negative term, and function f(x) and ODE system \({\mathrm {d}} x/{\mathrm {d}} t = f(x)\) are said to be nonkinetic.

An interpretation of a cross-negative term is that the process corresponding to such a term would consume at least one reactant even when its concentration is zero, so that it cannot be represented as kinetic reactions.

Kinetic and nonkinetic functions taking the mass action kinetics form are central to this paper. The related notation is introduced in the following definition.

Definition 2.8

Let \({\mathcal {P}}(\cdot ; \, k):{\mathbb {R}}^{{\mathcal {S}}} \rightarrow {\mathbb {R}}^{{\mathcal {S}}}\), \(k \in {\mathbb {R}}^{{\mathcal {R}}}\), be a polynomial function with polynomial degree \({\mathrm {deg}}({\mathcal {P}}(x; \, k)) \le m\), \(m \in {\mathbb {N}}\), i.e. \({\mathcal {P}}_s(x ; \, k)\) is a polynomial in \(x \in {\mathbb {R}}^{{\mathcal {S}}}\) of degree at most m with coefficients \(k \in {\mathbb {R}}^{{\mathcal {R}}}\), for all \(s \in {\mathcal {S}}\). Then, the set of functions \({\mathcal {P}}(x ; \, k)\) is denoted by \({\mathbb {P}}_{m}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\). If \({\mathcal {P}}(x ; \, k)\) is a kinetic function, it is denoted by \({\mathcal {K}}(x; \, k)\), \(k \in {\mathbb {R}}_{>}^{{\mathcal {R}}}\), and the set of such functions is denoted by \({\mathbb {P}}_{m}^{{\mathcal {K}}}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\). If \({\mathcal {P}}(x ; \, k)\) is a nonkinetic function, it is denoted by \({\mathcal {N}}(x ; \, k)\), \(k \in {\mathbb {R}}^{{\mathcal {R}}}\), and the set of such functions with domain \({\mathbb {R}}^{{\mathcal {S}}}\) is denoted by \({\mathbb {P}}_{m}^{{\mathcal {N}}}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\), while with domain \({\mathbb {R}}_{\ge }^{{\mathcal {S}}}\) by \({\mathbb {P}}_{m}^{{\mathcal {N}}}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\).

Note that a system \(\{R, k\}\), corresponding to \({\mathcal {N}}(x ; \, k)\) in Definition 2.8, has a well-defined reaction network R (for \(r = c \rightarrow c^{\prime }\), \(r \in {\mathcal {R}}\), we restrict \(c, c^{\prime }\) to positive integers), but an ill-defined kinetics taking the mass action form (we allow set k to have elements that are negative). Thus, set k corresponding to \({\mathcal {N}}(x ; \, k)\) cannot be interpreted as a set of reaction rate constants, as opposed to set k corresponding to \({\mathcal {K}}(x ; \, k)\) (see also Example 2). Note also that \({\mathbb {P}}_{m}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}}) = {\mathbb {P}}_{m}^{{\mathcal {K}}}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}}) \cup {\mathbb {P}}_{m}^{{\mathcal {N}}}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\), with \({\mathbb {P}}_{m}^{{\mathcal {K}}}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}}) \cap {\mathbb {P}}_{m}^{{\mathcal {N}}}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}}) = \emptyset \).

2.3 Properties of kinetic functions

From Definition 2.3 it follows that a kinetic function \({\mathcal {K}}(x ; \, {\mathcal {R}})\) has a structural property: cross-negative terms are absent. In this subsection, further properties of \({\mathcal {K}}(x ; \, {\mathcal {R}})\) are defined: nonnegativity (absence of cross-negative effect), and a structural property called x-factorability.

Definition 2.9

Let \(f: {\mathbb {R}}^{{\mathcal {S}}}_{\ge } \rightarrow {\mathbb {R}}^{{\mathcal {S}}}\) be given by \(f_{s}(x) = \sum _{r \in {\mathcal {R}}} f_{s r}(x)\), where \(f_{s r}(x) \in {\mathbb {R}}\), for \(x \in {\mathbb {R}}^{{\mathcal {S}}}_{\ge }\), \(s \in {\mathcal {S}}\) and \(r \in {\mathcal {R}}\). If \(\forall s \in {\mathcal {S}}\), \(\forall x \in {\mathbb {R}}^{{\mathcal {S}}}_{\ge }\), \(s \in {\mathrm {supp}}^{\mathsf {c}}(x) \Rightarrow f_s(x) \ge 0\), then f(x) and \({\mathrm {d}} x/{\mathrm {d}} t = f(x)\) are said to be nonnegative. Otherwise, f(x) and \({\mathrm {d}} x/{\mathrm {d}} t = f(x)\) are said to be negative, and a cross-negative effect is said to exists \(\forall x \in {\mathbb {R}}^{{\mathcal {S}}}_{\ge }\) for which \(\exists s \in {{\mathcal {S}}}\) such that \(s \in {\mathrm {supp}}^{\mathsf {c}}(x)\) and \(f_s(x) < 0\).

Note that the absence of cross-negative terms implies nonnegativity, but the converse is not necessarily true [11, 17], i.e. an ODE system may have cross-negative terms, without having a cross-negative effect, as we will show in Example 2.

Cross-negative terms play an important role in mathematical constructions of reaction systems, in the context of chaos in kinetic equations, and pattern formation via Turing instabilities [18, 19]. It has been proved that quadratic two-dimensional ODEs without cross-negative terms, and of the form such that the induced reactions involve at most two reactants and two products, cannot display limit cycle oscillations [12, 20]. In Appendix 1, by generalizing [12], we prove that the nonexistence of a cross-negative effect in the ODEs of such form is a sufficient condition for nonexistence of limit cycles.

Example 2

Consider the following ODE system with polynomial RHS:

where \({\mathcal {P}}(x; \, k) \in {\mathbb {P}}_{2}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\), \(S = 2\), \(k \in {\mathbb {R}}\) and \(x = \{x_1, x_2\}\). Considering \(x_1 = 0\) and \(x_2 > 0\), it follows that \({\mathcal {P}}_1(\{0, x_2\}; \, k) = 1 + 2 k x_2 + x_2^2\). Then:

-

(i)

If \(k \ge 0\), then (5)–(6) contains no cross-negative terms, and so it is kinetic: \({\mathcal {P}}(x; \, k) \in {\mathbb {P}}_{2}^{{\mathcal {K}}}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\).

-

(ii)

If \(k < 0\), then (5)–(6) contains one cross-negative term, \(2 k x_2\), and so it is nonkinetic: \({\mathcal {P}}(x; \, k) \in {\mathbb {P}}_{2}^{{\mathcal {N}}}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\).

System (5)–(6) induces a reaction system only in case (i). In particular, nonnegative ODE system (5)–(6) with \({\mathcal {P}}(x; \, k) \in {\mathbb {P}}_{2}^{{\mathcal {N}}}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\) in part (ii)(a) does not induce a reaction system (although, given a nonnegative initial condition, the solution of (5)–(6) is nonnegative for all forward times).

Definition 2.10

Let \(f: {\mathbb {R}}^{{\mathcal {S}}}_{\ge } \rightarrow {\mathbb {R}}^{{\mathcal {S}}}\) be given by \(f_{s}(x) = \sum _{r \in {\mathcal {R}}} f_{s r}(x)\), where \(f_{s r}(x) \in {\mathbb {R}}\), for \(x \in {\mathbb {R}}^{{\mathcal {S}}}_{\ge }\), \(s \in {\mathcal {S}}\) and \(r \in {\mathcal {R}}\). Then term \(f_{s r}(x)\) is said to be \(x_s\)-factorable if \(f_{s r}(x) = x_s p_{s r}(x)\), where \(p_{s r}(x)\) is a polynomial function of x. If \(\exists s \in {\mathcal {S}}\), such that \(f_s(x) = x_s \sum _{r \in {\mathcal {R}}} p_{s r}(x)\), then f(x) and ODE system \({\mathrm {d}} x/{\mathrm {d}} t = f(x)\) are said to be \(x_s\)-factorable. If \(\forall s \in {\mathcal {S}}\) it is true that \(f_s(x) = x_s \sum _{r \in {\mathcal {R}}} p_{s r}(x)\), then f(x) and ODE system \({\mathrm {d}} x/{\mathrm {d}} t = f(x)\) are said to be x-factorable.

Example 3

System (3)–(4) is x-factorable, since \({\mathcal {K}}_{1}(x; \, k) = x_1 (-k_1 x_2)\) and \({\mathcal {K}}_{2}(x; \, k)= x_2 (k_1 x_1)\).

X-factorable ODE systems are a subset of kinetic equations under the mass action kinetics [21] (see also Sect. 3.2.2).

3 Inverse problem for reaction systems

In some applications, we are interested in the direct problem: we are given a reaction network with kinetics, i.e. a reaction system \(\{{\mathcal {R}}, \kappa \}\), and we then analyse the induced system of kinetic equations (1) in order to determine properties of the reaction system. For example, an output of a direct problem might consist of verifying that the kinetic equations undergo a bifurcation. In this paper, we are interested in the inverse problem: we are given a property of an unknown reaction system, and we would then like to construct a reaction system displaying the property. The inverse problem framework described in this section is inspired by [6, 11].

The first step in the inverse problem is, given a quantity that depends on a kinetic function, to find a compatible kinetic function \({\mathcal {K}}(x ; \, {\mathcal {R}})\), while the second step is then to find a reaction system \(\{{\mathcal {R}}, \kappa \}\) induced by the kinetic function. The second step is discussed in more detail in Sect. 3.1, while the first step in Sect. 3.2. The constructions of a reaction system \(\{{\mathcal {R}}, \kappa \}\) often involve constraints defining simplicity of the system (e.g. see [22]), and the simplicity can be related to the kinetic equations (structure and dimension of the equations, and/or the phase space), and/or to reaction networks (cardinality, conservability, reversibility, deficiency). How the simplicity constraints are prioritized depends on the application area, with simplicity of the kinetic equations being more important for mathematical analysis, while simplicity of the reaction networks for synthetic biology.

3.1 The canonical reaction network

Let us assume that we are able to construct an ODE system of the form (1) where its RHS is a kinetic function, \({\mathcal {K}}(x ; \, {\mathcal {R}})\), and the system has the property required by the inverse problem. Then, one can always find a reaction system induced by the kinetic function [6, 18]. While, for a fixed kinetics, a reaction network induces a kinetic function uniquely by definition (see Definition 2.5), the converse is not true—the inverse mapping of the kinetic function to the reaction networks is not unique—a fact known as the fundamental dogma of chemical kinetics [14, 18, 23, 24]. For example, in [24], for a fixed kinetic function and a fixed set of complexes (\({\mathcal {C}}\) fixed), mixed integer programming is used for numerical computation of different induced reaction networks with varying properties. On the other hand, a constructive proof that every kinetic function induces a reaction system is given in [6, 18], where \({\mathcal {C}}\) is generally not fixed (product complexes may be created), but the construction can be performed analytically, and it uniquely defines an induced reaction system for a given kinetic function under the mass action kinetics. The same procedure is used in this paper, so it is now defined.

Definition 3.1

Let \(\kappa : {\mathbb {R}}_{\ge }^{{\mathcal {S}}} \rightarrow {\mathbb {R}}_{\ge }^{{\mathcal {R}}}\) be a kinetics. Consider the kinetic function given by \({\mathcal {K}}(x ; \, {\mathcal {R}}) = \sum _{r \in {\mathcal {R}}} d_r \kappa _{r}(x)\), where \(x \in {\mathbb {R}}^{{\mathcal {S}}}_{\ge }\) and \(d_r \in {\mathbb {R}}^{{\mathcal {S}}}\). Let us map \({\mathcal {K}}(x ; \, {\mathcal {R}})\) to a reaction system \(\{{\mathcal {R}}_{{\mathcal {K}}^{-1}},\kappa _{{\mathcal {K}}^{-1}}\}\) with complexes and kinetics given by:

-

(i)

Reactant complexes, \(c_r\), are obtained from \(\kappa _{r}(x)\) for \(r \in {\mathcal {R}}\). The zero term in \({\mathcal {K}}(x ; \, {\mathcal {R}})\) is not allowed to induce reactant complexes. Then, the complexes are uniquely obtainable in the case of the mass action kinetics.

-

(ii)

Reaction \(c_r \rightarrow c_{rs}^{\prime }\) is then constructed for each \(r \in {\mathcal {R}}\) and \(s \in {\mathcal {S}}\), where new product complexes are given by \(c_{rs}^{\prime } = c_r + {\mathrm {sign}}(d_{rs}) s\), with \({\mathrm {sign}}(\cdot )\) being the sign function.

-

(iii)

The new kinetics is then defined as \(\kappa _{{\mathcal {K}}^{-1} r^{\prime }}(x) \equiv |d_{rs}| \kappa _{r}(x)\), for \(r \in {\mathcal {R}}\), \(s \in {\mathcal {S}}\), where \(r^{\prime } \in {\mathcal {R}}_{{\mathcal {K}}^{-1}}\).

The induced reaction system \(\{{\mathcal {R}}_{{\mathcal {K}}^{-1}},\kappa _{{\mathcal {K}}^{-1}}\}\) is called the canonical reaction system, with \({\mathcal {R}}_{{\mathcal {K}}^{-1}}\) being the canonical reaction network.

Note that the procedure in Definition 3.1 creates a reaction for each term in each kinetic equation. Note also that each reaction leads to a change in copy number of precisely one chemical species, and the change in the copy number is equal to one. Thus, the canonical reaction networks are simple in the sense that they can be constructed from a kinetic function in a straightforward way, while they generally do not contain minimal number of reactions, nor complexes.

Example 4

The canonical reaction network for system (3)–(4) is given by

so that \({\mathcal {S}} = \{s_1,s_2\}\), \({\mathcal {C}} = \{ s_1 + s_2, s_2, s_1 + 2 s_2\}\), \({\mathcal {R}}_{{\mathcal {K}}^{-1}} = \{ s_1 + s_2 \rightarrow s_2, s_1 + s_2 \rightarrow s_1 + 2 s_2 \}\) and \(k_{{\mathcal {K}}^{-1}} = \{k_{1},k_{2}\}\), \(k_2 = k_1\). Note that the canonical reaction network (7) contains more reactions than the original network (2).

3.2 Kinetic transformations

Firstly, mapping a solution-dependent quantity to the RHS of an ODE system is much more likely to result in nonkinetic functions, \({\mathcal {N}}(x ; \, {\mathcal {R}})\), on the RHS (see Definition 2.7) [11]. However, only kinetic functions induce reaction networks, as exemplified in Example 2. Secondly, even if mapping a solution-dependent quantity results in a kinetic function, it may be necessary to modify the function in order to satisfy given constraints, and this may change the kinetic function into a nonkinetic function. For these two reasons, it is beneficial to study mappings that can transform arbitrary functions into kinetic functions. This motivates the following definition, for the case of mass action kinetics, that relies on the notation introduced in Definition 2.8.

Definition 3.2

Let \({\mathcal {P}}(x; \, k) \in {\mathbb {P}}_{m}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\), \(k \in {\mathbb {R}}^{{\mathcal {R}}}\), i.e. \({\mathcal {P}}(x; \,k)\) is a polynomial function. Consider the corresponding ODE system in the formal sum notation

where \(x \equiv x(t) \in {\mathbb {R}}^{{\mathcal {S}}}\). Then, a transformation \(\varPsi \) is called a kinetic transformation if the following conditions are satisfied:

-

(i)

\(\varPsi {:}\, {\mathbb {P}}_{m}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}}) \rightarrow {\mathbb {P}}_{\bar{m}}^{{\mathcal {K}}}({\mathbb {R}}_{\ge }^{{\mathcal {\bar{S}}}}; \, {\mathbb {R}}^{{\mathcal {\bar{S}}}})\), \(\bar{m} \ge m\), \(\bar{S} \ge S\), maps the polynomial function \({\mathcal {P}}(x; \, k)\) into a kinetic function \({\mathcal {K}}(\bar{x}; \, \bar{k}) \equiv (\varPsi ({\mathcal {P}}) )(\bar{x}; \, \bar{k})\) for \(\bar{x} \in {\mathbb {R}}_{\ge }^{{\mathcal {\bar{S}}}}\) and \(\bar{k} \in {\mathbb {R}}_{>}^{{\mathcal {\bar{R}}}}\).

-

(ii)

Let \(x^{*}\) be a fixed point of (8) that is mapped by \(\varPsi \) to fixed point \(\bar{x}^{*} \in {\mathbb {R}}_{\ge }^{{\mathcal {\bar{S}}}}\) of the system of kinetic equations (1) with \({\mathcal {K}}(\bar{x}; \, \bar{k})\) on its RHS. Let also the eigenvalue of the Jacobian matrix of \({\mathcal {P}}(x; \, k)\), \(J(x^{*}; \, k)\), denoted by \(\lambda _{n}\), be mapped to the eigenvalue of Jacobian of \({\mathcal {K}}(\bar{x}; \, \bar{k})\), \(J_{\varPsi }(\bar{x}^{*}; \, \bar{k})\), which is denoted by \(\bar{\lambda }_{n}\), for \(n = 1, 2, \ldots , S\). Then, for every such fixed point \(x^{*}\), it must be true that \({\mathrm {sign}}({\mathrm {Re}}(\lambda _{n})) = {\mathrm {sign}}({\mathrm {Re}}(\bar{\lambda }_{n}))\) (preservation of the fixed point stability), \({\mathrm {sign}}({\mathrm {Im}}(\lambda _{n})) = {\mathrm {sign}}({\mathrm {Im}}(\bar{\lambda }_{n}))\) (preservation of the fixed point orientability), \(n = 1, 2, \ldots , S\), and, if there are any additional eigenvalues \(\bar{\lambda }_{n}\), \(n = S+1, S+2, \ldots , \bar{S}\), they must satisfy \({\mathrm {sign}}({\mathrm {Re}}(\bar{\lambda }_{n})) < 0\) (stability of the fixed point along the added dimensions), where \({\mathrm {Re}}(\cdot )\) and \({\mathrm {Im}}(\cdot )\) denote the real and imaginary part of a function.

If any of the condition (i)–(ii) is not true, \(\varPsi \) is called a nonkinetic transformation.

Put more simply, given an input polynomial function, a kinetic transformation must (i) map the input polynomial function into an output kinetic function, and (ii) the output function must be linearly locally topologically equivalent to the input function in the neighbourhood of the corresponding fixed points, and the fixed points are asymptotically stable along any additional dimensions of the output function (corresponding to the additional species), preventing the state of the system from escaping the fixed point neighbourhood along the added dimensions. Let us note that the output function is defined only in the nonnegative orthant, so that the topological equivalence must hold only near the fixed points of the input function that are mapped to the nonnegative orthant under kinetic transformations.

One may wish to impose a set of constraints on an output function, such as requiring that a predefined region of interest in the phase space of the input function is mapped to the positive orthant of the corresponding output function. The subset of constraints is now defined that can be formulated purely in terms of the reaction rate constants.

Definition 3.3

Let \({\mathcal {P}}(x; \, k) \in {\mathbb {P}}_{m}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\), \(k \in {\mathbb {R}}^{{\mathcal {R}}}\). Let also \(\phi _j : {\mathbb {R}}^{{\mathcal {R}}} \rightarrow {\mathbb {R}}\) be a continuous function, mapping set k into \(\phi _j(k) \in {\mathbb {R}}\), \(j = 1, 2, \ldots , J\). Then, set \(\varPhi \equiv \{\phi _j(k) \ge 0 : j = 1, 2, \ldots , J\}\) is called a set of constraints.

There are two sets of kinetic transformations. The first, and the preferred, set of possible kinetic transformations are affine transformations, which are discussed in Sect. 3.2.1. Affine transformations may be used, not only as possible kinetic transformations, but also to satisfy a set of constraints. The second set, necessarily used when affine transformations fail, are nonlinear transformations that replace cross-negative terms, with x-factorable terms (see Definition 2.10), without introducing new cross-negative terms, and two such transformations are discussed in Sects. 3.2.2 and 3.2.3. In choosing a nonlinear transformation, one generally chooses between obtaining, on the one hand, lower-dimensional kinetic functions with higher-degree of nonlinearity [i.e. lower \(\bar{S}/S\) and higher \(\bar{m}/m\) in Definition 3.2(i)] and/or higher numbers of the nonlinear terms, and, on the other hand, higher-dimensional kinetic functions with lower degree of nonlinearity (i.e. higher \(\bar{S}/S\) and lower \(\bar{m}/m\)) and/or lower numbers of the nonlinear terms.

Before describing the transformations in a greater detail, the usual vector notation is introduced and related to the formal sum notation from Sect. 2. The vector notation is used when ODE systems are considered in matrix form, while the formal sum notation is used when ODE systems are considered component-wise.

Notation

Let \(|{\mathcal {S}}| = S\), \(|{\mathcal {C}}| = C\) and \(|{\mathcal {R}}| = R\), and suppose \({\mathcal {S}}\), \({\mathcal {C}}\) and \({\mathcal {R}}\) are each given a fixed ordering with indices being \(n = 1, 2, \ldots , S\), \(i = 1, 2, \ldots , C\), and \(l = 1, 2, \ldots , R\), respectively, i.e. one can identify the ordered components of formal sums with components of Euclidean vectors. Let also the indices \(s_n\) be denoted by n, \(n = 1, 2, \ldots , S\), for brevity. Then, the kinetic equations under the mass action kinetics in the formal sum notation are given by (1). In this section, we start with equations which have more general polynomial, and not necessarily kinetic, functions on the RHS, i.e. the ODE system is written in the formal sum notation as (8), while in the usual vector notation by

where \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, {\mathbf {k}}) \in {\mathbb {P}}_{m}({\mathbb {R}}^{S}; \, {\mathbb {R}}^{S})\), \({\mathbf {x}} \in {\mathbb {R}}^{S}_{\ge }\), and \({\mathbf {k}} \in {\mathbb {R}}^{R}\).

3.2.1 Affine transformation

Definition 3.4

Consider applying an arbitrary nonsingular matrix \(A \in {\mathbb {R}}^{S \times S}\) on Eq. (9), resulting in:

where \(\bar{{\mathbf {x}}} = A {\mathbf {x}}\), and \(\bar{{\mathbf {k}}}\) is a vector of new rate constants obtained from \({\mathbf {k}}\) by rewriting the polynomial on the RHS of (10) into the mass action form. Then \(\varPsi _A : {\mathbb {P}}_{m}({\mathbb {R}}^{S}; \, {\mathbb {R}}^{S}) \rightarrow {\mathbb {P}}_{m}({\mathbb {R}}^{S}; \, {\mathbb {R}}^{S})\), mapping \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \,{\mathbf {k}})\) to \((\varPsi _A \varvec{{\mathcal {P}}})(\bar{{\mathbf {x}}}; \, \bar{{\mathbf {k}}})\), is called a centroaffine transformation (also known as a linear transformation). If A is an orthogonal matrix, then \(\varPsi _A\) is called an orthogonal transformation.

Definition 3.5

Consider substituting \(\bar{{\mathbf {x}}} = {\mathbf {x}} + \varvec{{\mathcal {T}}}\) in Eq. (9), where \(\varvec{{\mathcal {T}}} \in {\mathbb {R}}^{S}\), which results in:

where \(\bar{{\mathbf {k}}}\) is a vector of the new rate constants obtained from \({\mathbf {k}}\) by rewriting the polynomial on the RHS of (11) into the mass action form. Then \(\varPsi _{{\mathcal {T}}}: {\mathbb {P}}_{m}({\mathbb {R}}^{S}; \, {\mathbb {R}}^{S}) \rightarrow {\mathbb {P}}_{m}({\mathbb {R}}^{S}; \, {\mathbb {R}}^{S})\), mapping \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, {\mathbf {k}})\) to \((\varPsi _{\mathcal {T}} \varvec{{\mathcal {P}}})(\bar{{\mathbf {x}}}; \, \bar{{\mathbf {k}}})\), is called a translation transformation.

A composition of a translation and a centroaffine transformation, \(\varPsi _{A,{\mathcal {T}}} = \varPsi _{A} \circ \varPsi _{{\mathcal {T}}}\), i.e. an affine transformation, may be used as a possible kinetic transformation (see Definition 3.2). Let us note that condition (ii) in Definition 3.2 is necessarily satisfied for all affine transformation, i.e. affine transformations preserve the topology of the phase space, as well as the polynomial degree of the functions being mapped [25]. For these reasons, affine transformations are preferred over the alternative nonlinear transformations, discussed in the next two sections. However, affine transformations do not necessarily satisfy condition (i) in Definition 3.2, so that they are generally nonkinetic transformations. However, despite being generally nonkinetic, affine transformations map sets k into new sets \(\bar{k}\) [see Eqs. (10), (11)], so that they may be used for satisfying a given set of constraints imposed on the output function (see Definition 3.3). This motivates the following definition.

Definition 3.6

Let \({\mathcal {P}}(x; \, k) \in {\mathbb {P}}_{m}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\). If there exist \(A \in {\mathbb {R}}^{S \times S}\), \({\mathcal {T}} \in {\mathbb {R}}^{S}\), such that simultaneously \((\varPsi _{A} \circ \varPsi _{{\mathcal {T}}} {\mathcal {P}})(\bar{x}; \, \bar{k})\) is a kinetic function, and a given set of constraints \(\varPhi \equiv \{\phi _j(\bar{k}) \ge 0 : j = 1, 2, \ldots , J\}\) is satisfied, then it is said that \({\mathcal {P}}(x; \, k)\) and the corresponding Eq. (8) are affinely kinetic, given the constraints. Otherwise, they are said to be affinely nonkinetic, given the constraints.

If the set of constraints in Definition 3.3 is empty, affinely nonkinetic functions are called essentially nonkinetic, while those that are affinely kinetic are called removably nonkinetic. Such labels emphasize that, if a function is essentially nonkinetic, a kinetic function that is globally topologically equivalent cannot be obtained, while if a function is removably nonkinetic, a globally topologically equivalent kinetic function can be obtained.

Explicit sufficient conditions for a polynomial function \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \,{\mathbf {k}})\) to be affinely kinetic, or nonkinetic, are generally difficult to obtain. Even in the simpler case \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \,{\mathbf {k}}) \in {\mathbb {P}}_{2}({\mathbb {R}}^{2}; \, {\mathbb {R}}^{2})\), such conditions are complicated, and cannot be easily generalized for higher-dimensional systems and/or systems with higher degree of nonlinearity [25]. In [19], based on the polar and spectral decomposition theorems, it has been argued that if no orthogonal transformation is kinetic, then no centroaffine transformation is kinetic. The result is reproduced in this paper using the more concise singular value decomposition theorem, and is generalized to the case when the set of constraints is nonempty. Loosely speaking, the theorem states that “orthogonally nonkinetic” functions are affinely nonkinetic as well, given certain constraints.

Theorem 3.1

If \({\mathcal {P}}(x; \, k) \in {\mathbb {P}}_{m}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}})\) is nonkinetic under \(\varPsi _{Q} \circ \varPsi _{{\mathcal {T}}}\), given a set of constraints \(\varPhi \), for all orthogonal matrices \(Q \in {\mathbb {R}}^{S \times S}\) and for all \({\mathcal {T}} \in {\mathbb {R}}^{S}\), then \({\mathcal {P}}(x; \, k)\) is also affinely nonkinetic, given \(\varPhi \), provided the following condition holds: \({\mathrm {sign}}(\phi _j(k)) = {\mathrm {sign}}(\phi _j(\bar{k}))\), \(j = 1, 2, \ldots , J\), for all diagonal and positive definite matrices \(\varLambda \in {\mathbb {R}}^{S \times S}\), with \(\varPsi _{\varLambda } {\mathcal {P}} = (\varPsi _{\varLambda } \,{\mathcal {P}})(\bar{x}; \, \bar{k})\).

Proof

By the singular value decomposition theorem, nonsingular matrices \(A \in {\mathbb {R}}^{S \times S}\) can be written as \(A = Q_1 \varLambda Q_2\), where \(Q_1, Q_2 \in {\mathbb {R}}^{S \times S}\) are orthogonal, and \(\varLambda \in {\mathbb {R}}^{S \times S}\) diagonal and positive definite. Cross-negative terms are invariant under transformation \(\varPsi _{\varLambda }\) for all \(\varLambda \) [19]. If \(\varPhi \) from Definition 3.3 is such that functions \({\mathrm {sign}}(\phi _j(k))\), \(j = 1, 2, \ldots , J\), are invariant under all positive definite diagonal matrices \(\varLambda \in {\mathbb {R}}^{S \times S}\), the statement of the theorem follows. \(\square \)

3.2.2 X-factorable transformation

Definition 3.7

Consider multipling the RHS of Eq. (9) by a diagonal matrix \({\mathcal {X}}({\mathbf {x}}) = {\mathrm {diag}}(x_1, x_2, \ldots , x_S)\), resulting in

Then \(\varPsi _{{\mathcal {\mathcal {X}}}}: {\mathbb {P}}_{m}({\mathbb {R}}^{S}; \, {\mathbb {R}}^{S}) \rightarrow {\mathbb {P}}_{m + 1}({\mathbb {R}}^{S}; \, {\mathbb {R}}^{S})\), mapping \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, {\mathbf {k}})\) to \((\varPsi _{{\mathcal {X}}} \varvec{{\mathcal {P}}})({\mathbf {x}}; \, {\mathbf {k}})\), is called an x-factorable transformation. If \({\mathcal {X}}\) is diagonal and its nonzero elements are

where \({\mathcal {S}}^{\prime } \subset {\mathcal {S}}\), \({\mathcal {S}}^{\prime } \ne \emptyset \), then the transformation is denoted \(\varPsi _{{\mathcal {X}}_{{\mathcal {S}}^{\prime }}}\), and is said to be \(x_{{\mathcal {S}}^{\prime }}\)-factorable.

When \({\mathcal {X}} \in {\mathbb {R}}^2\) is \(x_1\)-factorable, i.e. \({\mathcal {X}}(x_1) = {\mathrm {diag}}(x_1,1)\), we write \(\varPsi _{{\mathcal {X}}_{1}} \equiv \varPsi _{{\mathcal {X}}_{\{1\}}}\).

Theorem 3.2

\((\varPsi _{{\mathcal {X}}} \varvec{{\mathcal {P}}})({\mathbf {x}}; \, {\mathbf {k}})\) from Defnition 3.7 is a kinetic function, i.e. \((\varPsi _{{\mathcal {X}}} \varvec{{\mathcal {P}}})({\mathbf {x}}; \, {\mathbf {k}}) \in {\mathbb {P}}^{{\mathcal {K}}}_{m + 1}({\mathbb {R}}_{\ge }^{S}; \, {\mathbb {R}}^{S})\).

Proof

See [21]. \(\square \)

Functions \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, {\mathbf {k}})\) and \((\varPsi _{{\mathcal {X}}} \varvec{{\mathcal {P}}})({\mathbf {x}}; \, {\mathbf {k}})\) are not necessarily topologically equivalent due to two overlapping artefacts that \(\varPsi _{{\mathcal {X}}}\) can produce, so that \(\varPsi _{{\mathcal {X}}}\) is generally a nonkinetic transformation. Firstly, the fixed points of the former system can change the type and/or stability under \(\varPsi _{{\mathcal {X}}}\), and, secondly, the latter system has an additional finite number of boundary fixed points. The following theorem specifies the details of the artefacts for two-dimensional systems.

Theorem 3.3

Let us consider the ODE system (9) in two dimensions with RHS \( \varvec{{\mathcal {P}}}({\mathbf {x}}; \, {\mathbf {k}}) = ({\mathcal {P}}_1({\mathbf {x}}; \, {\mathbf {k}}), {\mathcal {P}}_2({\mathbf {x}}; \, {\mathbf {k}}))^{\top }. \) The following statements are true for all the fixed points \({\mathbf {x}}^{*}\) of the two-dimensional system (9) in \({\mathbb {R}}_{>}^2\) under \(\varPsi _{{\mathcal {X}}_{{\mathcal {S}}^{\prime }}}\), \({\mathcal {S}}^{\prime } \subseteq {\mathcal {S}}\), \({\mathcal {S}}^{\prime } \ne \emptyset \):

-

(i)

All the saddle fixed points are unconditionally invariant, i.e. saddle points of (9) correspond to saddle points of (12).

-

(ii)

A sufficient condition for stability of a fixed point \({\mathbf {x}}^{*}\) to be invariant is:

$$\begin{aligned} \frac{\partial {\mathcal {P}}_1({\mathbf {x}}; \, {\mathbf {k}})}{\partial x_1}\left| _{{\mathbf {x}} = {\mathbf {x}}^{*}} \frac{ \partial {\mathcal {P}}_2({\mathbf {x}}; \, {\mathbf {k}}) }{\partial x_2}\right| _{{\mathbf {x}} = {\mathbf {x}}^{*}} \ge 0. \end{aligned}$$ -

(iii)

A sufficient condition for the type of a fixed point \({\mathbf {x}}^{*}\) to be invariant is:

$$\begin{aligned} \frac{\partial {\mathcal {P}}_1({\mathbf {x}}; \, {\mathbf {k}}) }{\partial x_2}\left| _{{\mathbf {x}} = {\mathbf {x}}^{*}} \frac{\partial {\mathcal {P}}_2({\mathbf {x}}; \, {\mathbf {k}}) }{\partial x_1}\right| _{{\mathbf {x}} = {\mathbf {x}}^{*}} \ge 0. \end{aligned}$$

Assume that the ODE system (9) does not have fixed points on the axes of the phase space. Nevertheless, the two-dimensional system (12) can have additional fixed points on the axes of the phase space, called boundary fixed points, denoted \({\mathbf {x}}_{b}^{*} \in {\mathbb {R}}_{\ge }^{2}\). The boundary fixed points can be either nodes or saddles, and the following statements are true:

-

(iv)

If system (12) is x-factorable, then the origin is a fixed point, \({\mathbf {x}}_{b}^{*} = {\mathbf {0}}\), with eigenvalues \(\lambda _i = {\mathcal {P}}_i({\mathbf {x}}_{b}^{*}; \, {\mathbf {k}}) \ne 0\), \(i = 1,2\), and the corresponding eigenvectors along the phase space axes.

-

(v)

For \(x_{b, i}^{*} = 0\), \(x_{b, j}^{*} \ne 0\), \({\mathbf {x}}_{b}^{*} \in {\mathbb {R}}_{\ge }^2\), \(i,j =1,2\), \(i \ne j\), a boundary fixed point is a node if and only if

$$\begin{aligned} {\mathcal {P}}_i({\mathbf {x}}_{b}^{*}; \, {\mathbf {k}}) \frac{ \partial {\mathcal {P}}_{j}({\mathbf {x}}; \, {\mathbf {k}}) }{\partial x_{j}}|_{{\mathbf {x}} = {\mathbf {x}}_{b}^{*}} > 0, \end{aligned}$$with the node being stable if \({\mathcal {P}}_i({\mathbf {x}}_{b}^{*}; \, {\mathbf {k}}) < 0\), and unstable if \({\mathcal {P}}_i({\mathbf {x}}_{b}^{*}; \, {\mathbf {k}}) > 0\), \(i,j = 1, 2\), \(i \ne j\). Otherwise, the fixed point is a saddle.

Proof

Without loss of generality, we consider two forms of the system (12) with \(S = 2\):

where \(p \in \{0,1\}\), so that system (13)–(14) is x-factorable for \(p = 1\), but only \(x_1\)-factorable for \(p = 0\). The results derived for an \(x_1\)-factorable system hold when the system is \(x_2\)-factorable, if the indices are swapped. By writing \({\mathcal {P}}_i({\mathbf {x}}; \, {\mathbf {k}}) = {\mathcal {P}}_i\), \(i = 1,2\), the Jacobian of (13)–(14), \(J_{{\mathcal {X}}}\), is for \(p \in \{0,1\}\) given by

First, consider how fixed points of \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, {\mathbf {k}})\) are affected by transformation \(\varPsi _{{\mathcal {X}}_{{\mathcal {S}}^{\prime }}}\). Denoting the Jacobian of two-dimensional system (9) by J, and assuming the fixed points are not on the axes of the phase space (i.e. \({\mathbf {x}}^{*} \in {\mathbb {R}}_{>}^2\)), the Jacobians evaluated at \({\mathbf {x}}^{*}\) are given by:

Comparing the trace, determinant and discriminant of \(J({\mathbf {x}}^{*})\) and \(J_{{\mathcal {X}}}({\mathbf {x}}^{*})\), we deduce (i)–(iii).

To prove (iv)–(v), we evaluate \(J_{{\mathcal {X}}}\) at the boundary fixed points of the form \({\mathbf {x}}_{b}^{*} = (0,x_{b, 2}^{*})\) to get

If \(p = 1\), then one of the boundary fixed points is \({\mathbf {x}}_{b}^{*} = {\mathbf {0}}\), and the Jacobian becomes a diagonal matrix, so that condition (iv) holds. If \(x_{b, 2}^{*} \ne 0\), then \({\mathcal {P}}_2(0,x_{b, 2}^{*};{\mathbf {k}})=0\) in (15), and comparing the trace, determinant and discriminant of \(J({\mathbf {x}}^{*})\) and \(J_{{\mathcal {X}}}({\mathbf {x}}_b^{*})\), we deduce (v). \(\square \)

Theorem 3.3 can be used to find conditions that an x-factorable transformation given by \(\varPsi _{{\mathcal {\mathcal {X}}}}: {\mathbb {P}}_{m}({\mathbb {R}}^{2}; \, {\mathbb {R}}^{2}) \rightarrow {\mathbb {P}}_{m + 1}({\mathbb {R}}^{2}; \, {\mathbb {R}}^{2})\) is a kinetic transformation. While conditions (ii)–(iii) in Theorem 3.3 may be violated when \(\varPsi _{{\mathcal {X}}}\) is used, so that \(\varPsi _{{\mathcal {X}}}\) is a nonkinetic transformation, a composition of an affine transformation and an x-factorable transformation, i.e. \(\varPsi _{{\mathcal {X}}, A,{\mathcal {T}}} = \varPsi _{{\mathcal {X}}} \circ \varPsi _{A} \circ \varPsi _{{\mathcal {T}}}\), may be kinetic. Furthermore, such a composite transformation may also be used to control the boundary fixed points introduced by \(\varPsi _{{\mathcal {X}}}\). Finding an appropriate A and \({\mathcal {T}}\) to ensure the topological equivalence near the fixed points typically means that the region of interest in the phase space has to be positioned at a sufficient distance from the axes. However, since the introduced boundary fixed points may be saddles, this implies that the phase curves may be significantly globally changed, regardless of how far away from the axes they are. The most desirable outcome of controlling the boundary fixed points is to eliminate them, or shift them outside of the nonnegative orthant. The former can be attempted by ensuring that the nullclines of the original ODE system (9) do not intersect the axes of the phase space, while the latter by using the Routh–Hurwitz theorem [26].

An alternative transformation, which is always kinetic, that also does not change the dimension of an ODE system is the time-parametrization transformation [27]. However, while \(\varPsi _{{\mathcal {X}}}\) increases the polynomial degree by one, and introduces only a finite number of boundary fixed points which are given as solutions of suitable polynomials, the time-parameterization transformation generally increases the nonlinearity degree more than \(\varPsi _{{\mathcal {X}}}\), and introduces infinitely many boundary fixed points.

3.2.3 The quasi-steady state transformation

The quasi-steady state assumption (QSSA) is a popular constructive method for reducing the dimension of ODE systems by assuming that, at a given time-scale, some of the species reach a quasi-steady state, so that they can be described by algebraic, rather than differential equations. The QSSA is based on Tikhonov’s theorem [28, 29] that specifies conditions ensuring that the solutions of the reduced system are asymptotically equivalent to the solutions of the original system. The original system is referred to as the total system, and it consists of the reduced subsystem, referred to as the degenerate system, and the remaining subsystem, called the adjoined system, so that the QSSA consists of replacing the total system with the degenerate one, by eliminating the adjoined system. Korzukhin’s theorem [28, 29] is an existence result ensuring that, given any polynomial degenerate system, there exists a corresponding total system that is kinetic.

Thus, Tikhonov’s theorem can be seen as a constructive direct asymptotic dimension reduction procedure, while Korzukhin’s theorem as an inverse asymptotic dimension expansion existence result. Korzukhin’s theorem has an important implication that an application of the QSSA can result in a degenerate system that is structurally different than the corresponding total system. In this paper, the QSSA is assumed to necessarily be compatible with Tikhonov’s theorem. If this is not the case, then it has been demonstrated in [27, 30] that application of a QSSA can create dynamical artefacts, i.e. it can result in degenerate systems, not only structurally different, but also dynamically different from the total systems. The artefacts commonly occur due to the asymptotic parameters in Tikhonov’s theorem not being sufficiently small. For example, it has been shown that exotic phenomena such as multistability and oscillations can exist in a degenerate system, while not existing in the corresponding total system [27, 30].

Using Korzukhin’s and Tikhonov’s theorems, a family of kinetic total systems for an arbitrary nonkinetic polynomial degenerate system can be constructed, as is now shown. For simplicity, we denote \( x^c = \prod _{s \in S} x_s^{c_s}, \) for any \(x \in {\mathbb {R}}^{{\mathcal {S}}}\) and \(c \in {\mathbb {N}}^{{\mathcal {S}}}\).

Definition 3.8

Consider Eq. (8), and assume that the reaction set is partitioned, \({\mathcal {R}} = {\mathcal {R}}_1 \cup {\mathcal {R}}_2\), \({\mathcal {R}}_1 \cap {\mathcal {R}}_2 = \emptyset \), so that (8), together with the initial conditions, can be written as

where \(x \in {\mathbb {R}}^{{\mathcal {S}}}\), \(\alpha _{s r} \in {\mathbb {N}}^{{\mathcal {S}}}\), \(\beta _{s r} \in {\mathbb {N}}^{{\mathcal {S}}}\), \(\alpha _{s r} \ne \beta _{s r}\), \(a_{s r} \in {\mathbb {R}}_{\ge }\) and \(b_{s r} \in {\mathbb {R}}_{\ge }\) for all \(s \in {\mathcal {S}}\) and \(r \in {\mathcal {R}}\). Assume further that the species set is partitioned, \({\mathcal {S}} = {\mathcal {S}}_1 \cup {\mathcal {S}}_2\), \({\mathcal {S}}_1 \cap {\mathcal {S}}_2 = \emptyset \), so that equations for species \(s \in {\mathcal {S}}_1\) are kinetic, while those for species \(s \in {\mathcal {S}}_2\) are nonkinetic. Consider the following total system, consisting of a degenerate system given by

which satisfies the initial condition (17) with \(x_s^0 > 0\) for \(s \in {\mathcal {S}}_2\), and an adjoined system given by

where \(\mu > 0\), \(\omega _s > 0\) are parameters, and p(x) is a polynomial function of x satisfying \(p(x) \in {\mathbb {P}}_{m_0}({\mathbb {R}}_{\ge }^{{\mathcal {S}}}; \, {\mathbb {R}}_{>}^{{\mathcal {S}}_2})\) for \(m_0 \in {\mathbb {N}}\). Then \(\varPsi _{{\mathrm {QSSA}}} : {\mathbb {P}}_{m}({\mathbb {R}}^{{\mathcal {S}}}; \, {\mathbb {R}}^{{\mathcal {S}}}) \rightarrow {\mathbb {P}}_{\bar{m}}({\mathbb {R}}_{\ge }^{{\mathcal {S}} + {\mathcal {S}}_2}; \, {\mathbb {R}}^{{\mathcal {S}} + {\mathcal {S}}_2})\), mapping the RHS of differential equations in system (16)–(17), denoted \({\mathcal {P}}(x; \, k)\), to the RHS of differential equations of system (18)–(21), denoted \((\varPsi _{{\mathrm {QSSA}}} {\mathcal {P}})(\{x, y\}; \, \bar{k})\), with the constraint that \(x_s > 0\) for \(s \in {\mathcal {S}}_2\), is called a quasi-steady state transformation. Here, \(\bar{m} \le m + m_0 + 2\), and \(\bar{k}\) is a vector of the new rate constants obtained from k by rewriting the polynomial \((\varPsi _{{\mathrm {QSSA}}} {\mathcal {P}})(\{x, y\}; \, \bar{k})\) into the mass action form.

Theorem 3.4

Solutions of systems (16)–(17) and (18)–(21), corresponding to \({\mathcal {P}}(x;k)\) and \((\varPsi _{{\mathrm {QSSA}}} {\mathcal {P}})(\{x, y\}; \, \bar{k})\), are asymptotically equivalent in the limit \(\mu \rightarrow 0\), and \((\varPsi _{{\mathrm {QSSA}}} {\mathcal {P}})(\{x, y\}; \, \bar{k})\) is a kinetic function.

Proof

Fixed points of system (20) satisfy \(y_s^{*} = \omega _s (x_s p_s(x) )^{-1}\). The fixed points are isolated, and, since (from Definition 3.8) \(x_s > 0\) and \(p_s(x) > 0\), \(\forall x \in {\mathbb {R}}_{\ge }^{{\mathcal {S}}}\), \(\forall s \in {\mathcal {S}}_2\), it follows that the fixed points are globally stable. Thus, the conditions of Tikhonov’s theorem [28] are satisfied by the total system (18)–(21). Then, by applying the theorem, i.e. substituting \(y_s^{*}\) into (19), one recovers the corresponding degenerate system given by (16)–(17). Finally, the total system (18)–(21) is kinetic, as can be verified by using Definition 2.7. \(\square \)

Corollary 3.1

The quasi-steady state transformation \(\varPsi _{{\mathrm {QSSA}}}\), defined in Definition 3.8, is a kinetic transformation in the limit \(\mu \rightarrow 0\).

An alternative transformation, for which condition (i) in Definition 3.2 is also always satisfied, and that also expands the dimension of an ODE system, is an incomplete Carleman embedding [2, 31]. However, condition (ii) in Definition 3.2 is satisfied for the incomplete Carleman embedding only provided initial conditions for the adjoined system are appropriately constrained, and, furthermore, the transformation generally results in an adjoined system with a higher nonlinearity degree when compared to \(\varPsi _{{\mathrm {QSSA}}}\). In fact, Theorem 3.4 can be seen as an asymptotic alternative to the incomplete Carleman embedding, i.e. instead of requiring adjoined variables to satisfy \(y_{s}(t) = \omega _s \, x_s^{-1}(t) \, p_s^{-1}(x(t))\), one requires them to satify \(\lim _{\mu \rightarrow 0} y_{s}(t) = \omega _s \, x_s^{-1}(t) \, p_s^{-1}(x(t))\). The theorem can also be seen as an extension of using the QSSA to represent reactions of more than two molecules as a limiting case of bimolecular reactions [32] to the case of using the QSSA to represent cross-negative terms as a limiting case of kinetic ones.

4 Construction of reaction systems undergoing a supercritical homoclinic bifurcation

In this section, a brief review of a general bifurcation theory, and a more specific homoclinic bifurcation, is presented. This is followed by applying the framework developed in Sect. 3 to construct specific reaction systems displaying the homoclinic bifurcation.

Variations of parameters in a parameter dependent ODE system may change topology of the phase space, e.g. a change may occur in the number of invariant sets or their stability, shape of their region of attraction or their relative position. At values of the bifurcation parameters at which the system becomes topologically nonequivalent it is said that a bifurcation occurs, and the bifurcation is characterized by two sets of conditions: bifurcation conditions defining the type of bifurcation, and genericity conditions ensuring that the system is generic, i.e. can be simplified near the bifurcation to a normal form [33]. If it is sufficient to analyse a small neighbourhood of an invariant set to detect a bifurcation, the bifurcation is said to be local. Otherwise, it is called global, and the analysis becomes more challenging. Bifurcations are common in kinetic equations, where, in the case of the mass action kinetics, the rate constants play the role of bifurcation parameters [4, 5, 7, 34, 35]. In this paper, focus is placed on a global bifurcation giving rise to stable oscillations, called a supercritical homoclinic bifurcation [4, 33, 36].

Definition 4.1

Suppose \({\mathbf {x}}^{*}\) is a fixed point of system (9). An orbit \(\gamma ^{*}\) starting at a point \({\mathbf {x}}\) is called homoclinic to the fixed point \({\mathbf {x}}^{*}\) if its \(\alpha \)-limiting and \(\omega \)-limiting sets are both equal to \({\mathbf {x}}^{*}\).

Put more simply, a homoclinic orbit connects a fixed point to itself. An example of a homoclinic orbit to a saddle fixed point can be seen in Fig. 1b on page 20, where the homoclinic orbit is shown as the purple loop, while the saddle as the blue dot at the origin.

If a homoclinic orbit to a hyperbolic fixed point is present in an ODE system, then the system is structurally unstable, i.e. small perturbations to the equations can destroy the homoclinic orbit and change the structure of the phase space, so that a bifurcation can occur. For two-dimensional systems, the bifurcation and genericity conditions are completely specified by the Andronov-Leontovich theorem [33] given in Appendix 2. In summary, the theorem demands that the sum of the eigenvalues corresponding to the saddle at the bifurcation point, called the saddle quantity, must be nonzero (nondegeneracy condition), and that the so-called Melnikov integral at the bifurcation point evaluated along the homoclinic orbit must be nonzero (transversality condition).

4.1 The inverse problem formulation

Construction of reaction systems with prescribed properties is an inverse problem which we will solve by applying kinetic transformations described in Sect. 3. Our goal is to find a reaction system with the mass action kinetics (see Definition 2.6) such that the kinetic equations satisfy assumptions of Andronov-Leontovich theorem in Appendix 2, i.e. they must contain a homoclinic orbit defined on a two-dimensional manifold in the nonnegative orthant, and undergo the homoclinic bifurcation in a generic way. The output of this inverse problem will be a canonical reaction network which corresponds to the constructed ODE system. Thus the inverse problem is solved in three steps given in Algorithm 1. The first step is solved by using results of Sandstede [36] which leads to a set of polynomial functions satisfying the first three conditions of Andronov-Leontovich theorem in Appendix 2. An additional transformation is then performed ensuring that the final condition of Andronov-Leontovich theorem is satisfied. In this paper, nonlinear kinetic transformations are applied on the resulting polynomial function (using Step (2), case (b), in Algorithm 1).

4.2 Step (1): construction of polynomial function \({\mathcal {P}}(x; \, k)\)

Definition 4.2

A version of a plane algebraic curve called Tschirnhausen cubic (also known as Catalan’s trisectrix, and l’Hospital’s cubic) [37] given by:

is referred to as the alpha curve. The part of the curve with \(x_2 < 0\) is called the alpha loop, while the part with \(x_2 > 0\) is called the alpha branches, with the branch for \(x_1 < 0\) being the negative alpha branch, and for \(x_1 > 0\) being the positive alpha branch. Solutions \(x_1\) of Eq. (22) are denoted \(x_1^{\pm } = \pm x_2 \sqrt{1 + x_2}\).

a The alpha curve (22), with branch \(x_1^{-}\) plotted as the solid purple curve, and branch \(x_1^{+}\) as the dashed green curve. b Phase plane diagram of system (23)–(24) for \(a = -0.8\), with the stable node, the saddle, and the unstable spiral represented as the green, blue and red dots, respectively. The alpha curve is shown in purple, while the vector field as gray arrows (Color figure online)

The \(\alpha \) curve is shown in Fig. 1a, with \(x_1^{-}\) plotted as the solid purple curve, and \(x_1^{+}\) as the dashed green curve. It can be seen that the curve consists of the tear-shaped alpha loop located in region \([-2 \sqrt{3}/9,2 \sqrt{3}/9 ]\times [-1,0 ]\), and the positive and negative alpha branches in the first and second quadrant, respectively. As is done in [36], the alpha curve is mapped into a system of polynomial ODEs.

Lemma 4.1

The two-dimensional polynomial ODE system

contains the alpha curve (22) as phase plane orbits, with the alpha loop as a homoclinic orbit to the fixed point \({\mathbf {x}}^{*} = {\mathbf {0}}\), provided \(a^2 < 1\). If \(a \in (-1, 0)\), the alpha loop is stable from the inside, and system (23)–(24) has three fixed points: a saddle at the origin, an unstable spiral inside the alpha loop, and a stable node on the positive alpha branch.

Proof

Setting \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, {\mathbf {k}}) = ({\mathcal {P}}_1({\mathbf {x}}; \, {\mathbf {k}}), {\mathcal {P}}_2({\mathbf {x}}; \, {\mathbf {k}}))\) in system (9) to be a polynomial function of \({\mathbf {x}} =(x_1,x_2)\) with undetermined coefficients \({\mathbf {k}}\), and requiring \(\varvec{{\mathcal {P}}} \cdot \nabla H = 0\), one obtains system (23)–(24), as was done in [36]. As there is only one free parameter, denoted a, we write: \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, {\mathbf {k}}) = \varvec{{\mathcal {P}}}({\mathbf {x}}; \, a)\). System (23)–(24) has three fixed points: \(\big (0, 0 \big )\), \(\big (2a/9, -2/3\big )\), and \(\big (a^{-1} (1 - a^{-2}), \, -1 + a^{-2} \big )\). The condition \(a^2 < 1\) ensures that fixed points \(\big (2a/9, -2/3\big )\) and \(\big (a^{-1} (1 - a^{-2}), \, -1 + a^{-2} \big )\) are not on the alpha loop. The Jacobian \(J = \nabla {\varvec{{\mathcal {P}}}}({\mathbf {x}}; \, a)\) is given by

Let the determinant and trace of J be denoted by \({\mathrm {det}}(J)\) and \({\mathrm {tr}}(J)\), respectively. Fixed point \(\big (0, 0 \big )\) is a saddle, since \({\mathrm {det}}(J) = a^2 -1 < 0\). The saddle quantity from Andronov-Leontovich theorem in Appendix 2 is given by \(\sigma _0 = \lambda _1 + \lambda _2 = (a - 1) + (a + 1) = 2 a\), were \(\lambda _1\) and \(\lambda _2\) are the saddle eigenvalues. The alpha loop is stable from the inside provided \(\sigma _0 < 0\), implying \(a < 0\). It then follows that \(\big (2/9 a, -2/3\big )\) is an unstable spiral, and \(\big (a^{-1} (1 - a^{-2}), \, -1 + a^{-2} \big )\) a stable node. \(\square \)

A representative phase plane diagram of system (23)–(24) is shown in Fig. 1b. Note that a part of the positive alpha branch \(x_1^{+}\) is a heteroclinic orbit connecting the saddle and the node. The distance between the saddle and the node is given by \(d(a) = a^{-3} (a^2 - 1) \sqrt{1 + a^2}\), so that \(\lim _{a \rightarrow 0} d(a) = + \infty \) and \(\lim _{a \rightarrow -1} d(a) = 0\), i.e. increasing a increases length of the heteroclinic orbit by sliding the node along \(x_1^{+}\).

System (23)–(24) satisfies the first three conditions of Andronov-Leontovich theorem in Appendix 2. In order to satisfy the final condition, a set of perturbations must be found that destroy the alpha loop in a generic way, and this is ensured by the Melnikov condition (43). The bifurcation parameter controlling the existence of the alpha loop is denoted as \(\alpha \in {\mathbb {R}}\). Note that \({\mathcal {P}}({\mathbf {x}}; \, a)\) perturbed by a function of the form \(\alpha \nabla H(x_1,x_2) = \alpha (-2 x_1, 2 x_2 + 3 x_2^2)\) satisfies the Melnikov condition [36], but \({\mathcal {P}}({\mathbf {x}}; \, a) + \alpha \nabla H(x_1,x_2)\) has three terms dependent on \(\alpha \). In the following theorem, a simpler set of perturbations is found, introducing only one \(\alpha \) dependent term in system (23)–(24).

Theorem 4.1

If a perturbation of the form \((\alpha f(x_1),0)^T\), where \(\alpha \in {\mathbb {R}}\), is added to the RHS of system (23)–(24), and if \(f(x_1)\) is an odd function, \(f(-x_1) = - f(x_1)\), and \(f(x_1) \ne 0\), \(\forall x_1 \in [-2 \sqrt{3}/9,0)\), then the perturbed system undergoes a supercritical homoclinic bifurcation in a generic way as \(\alpha \) is varied in the neighbourhood of zero.

Proof

Consider the perturbed version of system (23)–(24):

Melnikov integral (43) for system (26)–(27) is given by

Using (27), we have \({\mathcal {P}}_2(x_1, x_2; \, a) {\mathrm {d}} t = {\mathrm {d}} x_2\). Thus we can express M(0) in terms of \(x_2\) as follow:

where \(t^{+}(x_2)\) (resp. \(t^{-}(x_2)\)) is the dependence of time t on \(x_2\) along the positive (resp. negative) alpha branch for the trajectory which is at point \((x_1,x_2) = (0,-1)\) at time \(t=0\) (for \(\alpha =0\)). Since f is odd and \(\varphi (t^{\pm }) > 0\), we deduce

\(\square \)

For further simplicity of (26)–(27), function \(f(x_1)\) is set to \(f(x_1) = x_1\) in the rest of this paper.

4.3 Step (2): construction of kinetic function \({\mathcal {K}}(\bar{x}; \, \bar{k})\)

The RHS of system (26)–(27), \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, a, \alpha )\), is a kinetic function. However, the alpha loop, which is the region of interest, is not located in the nonnegative orthant. In order to position the loop into the positive orthant, we will apply affine transformations. First, we show that system (26)–(27) with the homoclinic orbit in nonnegative orthant is nonkinetic under all translation transformations for \(a \in (-1,0)\), \(\alpha \in {\mathbb {R}}\).

Lemma 4.2

Function \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, a, \alpha )\), given by the RHS of (26)–(27), is nonkinetic under all translation transformations \(\varPsi _{{\mathcal {T}}}\), for \(a \in (-1,0)\) and \(\alpha \in {\mathbb {R}}\), given the condition that the homoclinic orbit is mapped into \({\mathbb {R}}^2_{>}\).

Proof

Let us apply the translation transformation \(\varPsi _{{\mathcal {T}}}\) (see Definition 3.5), \(\varvec{{\mathcal {T}}} = ({\mathcal {T}}_1,{\mathcal {T}}_2) \in {\mathbb {R}}^2\), on function \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, a, \alpha )\), given by the RHS of (26)–(27), resulting in:

with \(\bar{{\mathbf {x}}} = {\mathbf {x}} + \varvec{T}\), and coefficients \(\bar{{\mathbf {k}}} = \bar{{\mathbf {k}}}(a, \alpha , \varvec{{\mathcal {T}}})\) given by

Consider the point \({\mathbf {x}}_{\mathbf{0}} = (0,-1)\), which is on the alpha loop. It is mapped by \(\varPsi _{{\mathcal {T}}}\) to the point \(\bar{{\mathbf {x}}}_{\mathbf{0}} = ({\mathcal {T}}_1,-1 + {\mathcal {T}}_2)\). Requiring that the alpha loop is mapped to \({\mathbb {R}}^2_{>}\) implies that we must have \(\bar{{\mathbf {x}}}_{\mathbf{0}} \in {\mathbb {R}}_{>}^2\), so that the following set of constraints (see Definition 3.3) must be satisfied:

Using the fact that \(a \in (-1,0)\) and the constraints (30), it follows that \(\bar{k}_0^2\) from (29) is negative, \(\bar{k}_0^2< 0\). Thus, \((\varPsi _{{\mathcal {T}}} \varvec{{\mathcal {P}}})(\bar{{\mathbf {x}}}; \, \bar{{\mathbf {k}}})\) has a cross-negative term, and the statement of the theorem follows. \(\square \)

One can also readily prove that \(\varvec{{\mathcal {P}}}({\mathbf {x}}; \, a, \alpha )\) is nonkinetic under all affine transformations for \(|a| \ll 1\), and \(|\alpha | \ll 1\). Thus, in the next two sections, we follow Step (2), case (b), in Algorithm 1, applying transformations \(\varPsi _{{\mathcal {X}}}\) and \(\varPsi _{{\mathrm {QSSA}}}\) on the kinetic function \((\varPsi _{{\mathcal {T}}} \varvec{{\mathcal {P}}})(\bar{{\mathbf {x}}}; \, \bar{{\mathbf {k}}})\) given by (28). We require the following conditions to be satisfied:

with the set of constraints \(\varPhi \) ensuring that the homoclinic orbit is in \({\mathbb {R}}^2_{>}\). The reason for requiring \(|\alpha | \ll 1\) is that then the following results are more readily derived, and the condition is sufficient for studying system (28) near the bifurcation point \(\alpha = 0\). A representative phase plane diagram corresponding to the ODE system with RHS (28) is shown in Fig. 2a, with fixed points, the alpha curve, and the vector field denoted as in Fig. 1b, and with the red segments on the axes corresponding to the phase plane regions where the cross-negative effect exists (see Definition 2.9).

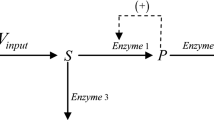

Phase plane diagrams of a ODE system with RHS (28); b ODE system with RHS (32); and c ODE system with RHS (37). The stable node, the saddle, and the unstable spiral are represented as the green, blue and red dot, respectively. The alpha curve is shown in purple, and the vector field as gray arrows. On each plot it is indicated which kinetic transformation is applied to system (26)–(27). Red segments of the phase plane axes in (a) denote the regions where the cross-negative effect exists. In b and c, boundary fixed points are represented as the black dots, purple curves with a coarser dashing represent the saddle manifolds that asymptotically approach an axis, while with a finer dashing those that are outside of \({\mathbb {R}}_{\ge }^2\). d Phase plane diagram of a system for which x-factorable transformation significantly globally influences the phase curves in such a way that a pure translation cannot resolve the artefacts. For more details, see the text. The parameters are fixed to \(a = -0.8\), \(\alpha = 0\), \({\mathcal {T}}_1 = 2\), \({\mathcal {T}}_2 = 2\) (Color figure online)

4.3.1 X-factorable transformation

Let us apply the x-factorable transformation \(\varPsi _{{\mathcal {X}}}\) on system (28). Letting \(\varPsi _{{\mathcal {X}}, {\mathcal {T}} } \equiv \varPsi _{{\mathcal {X}}} \circ \varPsi _{{\mathcal {T}}}\), the resulting kinetic function \(\varvec{{\mathcal {K}}}_{{\mathcal {X}}, {\mathcal {T}}}(\bar{{\mathbf {x}}}; \, \bar{{\mathbf {k}}}) \equiv (\varPsi _{{\mathcal {X}}, {\mathcal {T}}} \varvec{{\mathcal {P}}}) (\bar{{\mathbf {x}}}; \, \bar{{\mathbf {k}}})\) is given by

Theorem 4.2

ODE systems with RHSs (28) and (32) are topologically equivalent in the neighbourhood of the fixed points in \({\mathbb {R}}_{>}^2\), with the homoclinic orbit in \({\mathbb {R}}^2_{>}\), and a saddle at the origin being the only boundary fixed point in \({\mathbb {R}}_{\ge }^2\), if:

Proof

Let us assume \(\alpha = 0\).

From statement (i) of Theorem 3.3 it follows that the saddle fixed point of (28) is preserved under \(\varPsi _{{\mathcal {X}}}\). Denoting the node and spiral fixed points of (28) by \(\bar{{\mathbf {x}}}^{*}_{{\mathrm {nd}}}\) and \(\bar{{\mathbf {x}}}^{*}_{{\mathrm {sp}}}\), respectively, one finds that the Jacobian is given by:

Conditions (ii) and (iii) of Theorem 3.3 are both satisfied for the node, but only condition (ii) is satisfied for the spiral. The condition for the spiral to remain invariant is obtained by demanding \({\mathrm {disc}}(J_{{\mathcal {X}}, {\mathcal {T}}}|_{\bar{{\mathbf {x}}} = \bar{{\mathbf {x}}}^{*}_{{\mathrm {sp}}}}) < 0\), where \(J_{{\mathcal {X}}, {\mathcal {T}}}\) is the Jacobian of (32), and, taking into consideration (31), this leads to

Boundary fixed points are given by (0, 0), \(({\mathcal {T}}_1 + a^{-1} {\mathcal {T}}_2,0)\) and \((0, 1/2(1 \pm \sqrt{1 + 4 a^{-1} {\mathcal {T}}_1}) + {\mathcal {T}}_2)\). The second fixed point can be removed from the nonnegative quadrant by demanding \({\mathcal {T}}_2 > - a {\mathcal {T}}_1\), while the pair of the last ones always has nonzero imaginary part due to (31). Statement (iv) of Theorem 3.3 implies that the eigenvalues at the origin are given by \(\lambda _1 = k_0^1 =3/2({\mathcal {T}}_2 - 2/3) (a {\mathcal {T}}_1 + {\mathcal {T}}_2) > 0\) and \(\lambda _2 = k_0^2 = -{\mathcal {T}}_1 + a {\mathcal {T}}_2 ({\mathcal {T}}_2 - 1) < 0\), so origin is a saddle fixed point.

As \(\alpha \) can be chosen arbitrarily close to zero, and as \(\varvec{{\mathcal {K}}}_{{\mathcal {X}}, {\mathcal {T}}}(\bar{{\mathbf {x}}}; \, \bar{{\mathbf {k}}})\) is a continuous function of \(\alpha \), the theorem holds for sufficiently small \(|\alpha | \ne 0\), as well. \(\square \)

A representative phase plane diagram corresponding to the ODE system with RHS (32) is shown in Fig. 2b, where the saddle fixed point at the origin is shown as the black dot. It can be seen that one of the stable manifolds of the nonboundary saddle, represented as a dashed purple curve, approaches \(x_2\)-axis asymptotically, instead of crossing it as in Fig. 2a.

The homoclinic orbit of the ODE system with RHS (32) is positioned below the node in the phase plane. Suppose the relative position of the stable sets is reversed by, say, applying an improper orthogonal matrix with the angle fixed to \(3\pi /2\), \(\varPsi _{Q_{3\pi /2 -}}\), with a representative phase plane shown in Fig. 2d. In this case, one can straightforwardly show that the boundary fixed point given by \(\big ( {\mathcal {T}}_1 + 1/2 (1 + \sqrt{1 - 4 a^{-1} {\mathcal {T}}_2}), 0 \big )\), shown as the black dot in Fig. 2d, cannot be removed from \({\mathbb {R}}_{\ge }^2\), and is always a saddle. The same conclusions are true for other similar configurations of the stable sets obtained by rotations only. This demonstrates that x-factorable transformation can produce boundary artefacts that have a significant global influence on the phase curves, that cannot be eliminated by simply translating a region of interest sufficiently far away from the axes. In order to eliminate the particular boundary artefact, a shear transformation may be applied. Consider applying \(\varPsi _{{\mathcal {X}}, {\mathcal {T}}, {\mathcal {Q}}_{3\pi /2 -}, {\mathcal {S}}_2} = \varPsi _{{\mathcal {X}}} \circ \varPsi _{{\mathcal {T}}} \circ \varPsi _{{\mathcal {Q}}_{3\pi /2 -}} \circ \varPsi _{{\mathcal {S}}_2}\) on (26)–(27), where

and \({\mathcal {T}}_1 = {\mathcal {T}}_2 \equiv {\mathcal {T}} \in {\mathbb {R}}\), for simplicity, leading to

where the coefficients \(\bar{{\mathbf {k}}} = \bar{{\mathbf {k}}}(a, \alpha , {\varvec{{\mathcal {T}}}})\) are given by:

together with \(a \in (-1,0)\), \(|\alpha | \ll 1\) and \(\varPhi = \{ {\mathcal {T}} > 1 \}\).

Theorem 4.3

ODE systems with RHSs (26)–(27) and (37) are topologically equivalent in the neighbourhood of the fixed points in \({\mathbb {R}}_{>}^2\), with the homoclinic orbit in \({\mathbb {R}}^2_{>}\), and a saddle at the origin and a saddle with coordinates \((0, 1/2 {\mathcal {T}} a^{-1} (1 + 2 a))\) being the only boundary fixed points in \({\mathbb {R}}_{\ge }^2\), if:

Proof

Following the same procedure as in the proof of Theorem 4.2, and noting that the saddle, node and spiral fixed points are given by \(\big ( {\mathcal {T}}, {\mathcal {T}} \big )\), \(\big ( {\mathcal {T}} -2 a^{-2} (1-a^2), {\mathcal {T}} + a^{-3} (1-a^2) \big )\), and \(\big ( {\mathcal {T}} + 2/9 (3 + a^2), {\mathcal {T}} - 2/9 a \big )\), respectively, while the five boundary fixed points are (0, 0), \(({\mathcal {T}} + 1/2 \big ( 5{\mathcal {T}} a \pm \sqrt{{\mathcal {T}} (8 a^{-1}(1-a^2) + 9 {\mathcal {T}} a^2)}\big ),0)\), \((0, 1/2 {\mathcal {T}} a^{-1} (1 + 2 a))\), \((0, a^{-1} \big (2/3 + {\mathcal {T}} (1 + a) \big ))\), one finds (39). \(\square \)

Note that as \(\alpha \rightarrow -\frac{1}{2}\), the only boundary fixed point in \({\mathbb {R}}_{\ge }^2\) is a saddle at the origin, and it is connected via a heteroclinic orbit to the saddle in \({\mathbb {R}}_{>}^2\). A representative phase plane diagram corresponding to the ODE system with RHS (37) is shown in Fig. 2c.

While systems (32) and (37) contain specific variations of the specific homoclinic orbit given by (22), they, nevertheless, indicate possible phase plane topologies of the kinetic equations containing homoclinic orbits of shapes similar to (22). With a fixed shape and orientation of a homoclinic loop which is similar to (22), three possible orientations of a corresponding saddle manifold in \({\mathbb {R}}_{>}^2\) are: it may extend in \({\mathbb {R}}_{>}^2\) without asymptotically approaching a phase plane axis, it may asymptotically approach an axis, or it may cross an axis at a fixed point. In Fig. 2b, a combination of the first and second orientation is displayed, while in Fig. 2c of the first and third orientation.

4.3.2 The quasi-steady state transformation

In Lemma 4.2, it was demonstrated that system (26)–(27) has at least one cross-negative term under translation transformations. It can be readily shown that system (26)–(27) in fact has minimally two cross-negative terms under translation transformations, \(\bar{k}_2^1 < 0\) and \(\bar{k}_0^2 < 0\), and this is the case when \(a \in (-1,0)\), \(\varPhi = \{{\mathcal {T}}_1 \in (2 \sqrt{3}/9, -{\mathcal {T}}_2 a), {\mathcal {T}}_2 > 1\}\). Let us apply a quasi-steady state transformation \(\varPsi _{{\mathrm {QSSA}}}\) on system (28) that eliminates the two cross-negative terms, i.e. two new variables are introduced, \(\bar{y}_1, \bar{y}_2 \in {\mathbb {R}}_{>}^{2}\), and we take \(p_1(\bar{x}) = p_2(\bar{x}) = 1\), in Definition 3.8. Letting \(\varPsi _{{\mathrm {QSSA}}, {\mathcal {T}}} \equiv \varPsi _{{\mathrm {QSSA}}} \circ \varPsi _{{\mathcal {T}}}\), the resulting kinetic function \({\mathcal {K}}_{{\mathrm {QSSA}}, {\mathcal {T}}}(\{ \bar{x}, \bar{y} \}; \, \bar{k}, \omega , \mu ) \equiv \big (\varPsi _{{\mathrm {QSSA, {\mathcal {T}}}}} {\mathcal {P}}) (\{ \bar{x}, \bar{y} \}; \, \bar{k}, \omega , \mu )\) is given by

with \(\bar{x}_n (0) > 0\), \(\omega _n > 0\), \(n = 1, 2\), and \(\mu \rightarrow 0\).

In (40), \(\lim _{x_n \rightarrow 0} \lim _{\mu \rightarrow 0} y_n = + \infty \), \(n = 1,2\), and a geometrical implication is that, say, the saddle manifold crossing the \(x_2\)-axis in Fig. 2a, instead asymptotically approaches the \(y_1\)-axis. The asymptotic behavior of the saddle manifolds is achieved by the additional (boundary) fixed points in (32) displayed in Fig. 2b, and by additional phase space dimensions in (40).