Abstract

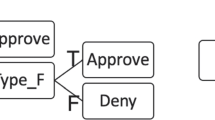

Decision trees have been a popular class of predictive models for decades because they are interpretable and perform well on categorical features. However, they are not always robust and tend to overfit the data. In addition, they lose interpretability if allowed to grow large. In this paper, we present a mixed integer programming formulation for constructing optimal decision trees of a prespecified size. We take the special structure of categorical features into account and allow combinatorial decisions (based on subsets of the values of a feature) at each node. Our approach can also handle numerical features via thresholding. We show that very good accuracy can be achieved with small trees using moderately sized training sets. The optimization problems we solve are tractable with modern solvers.
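To make the idea of a combinatorial decision at a node concrete, the following minimal sketch finds the best single-node split on one categorical feature by enumerating every subset of its values, and shows one way a numerical feature could be bucketed by thresholds. It is a brute-force illustration, not the mixed integer programming formulation of the paper. The function names `best_subset_split` and `threshold_feature`, the restriction to binary 0/1 labels, and the toy data are all assumptions made for this example.

```python
from itertools import combinations


def best_subset_split(values, labels):
    """Brute-force the best one-node split on a single categorical feature.

    A sample goes left if its value is in the chosen subset and right
    otherwise; each side predicts its majority label. Labels are assumed
    to be 0 or 1 (an assumption of this sketch).
    """
    categories = sorted(set(values))
    best_subset, best_correct = frozenset(), -1
    # Enumerate every subset of category values (exponential in the
    # number of categories, so only sensible for tiny examples).
    for size in range(len(categories) + 1):
        for subset in combinations(categories, size):
            chosen = set(subset)
            left = [y for v, y in zip(values, labels) if v in chosen]
            right = [y for v, y in zip(values, labels) if v not in chosen]
            # Samples classified correctly when each non-empty side
            # predicts its majority label.
            correct = sum(
                max(side.count(0), side.count(1))
                for side in (left, right)
                if side
            )
            if correct > best_correct:
                best_subset, best_correct = frozenset(chosen), correct
    return best_subset, best_correct / len(labels)


def threshold_feature(xs, cutoffs):
    """Map each numerical value to the number of cutoffs it exceeds,
    so the result can be treated as a categorical feature."""
    return [sum(x > c for c in cutoffs) for x in xs]


# Hypothetical toy data: value "b" always has label 0, the others label 1.
colors = ["a", "b", "c", "a", "b", "c"]
target = [1, 0, 1, 1, 0, 1]
subset, accuracy = best_subset_split(colors, target)
buckets = threshold_feature([1.0, 5.0, 10.0], [2.0, 7.0])
```

Enumeration scales exponentially with the number of category values, which is one reason an optimization formulation solved by a modern solver is attractive for anything beyond toy inputs.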




Additional information


The work of Katya Scheinberg was partially supported by NSF Grant CCF-1320137. Part of this work was performed while Katya Scheinberg was on sabbatical leave at IBM Research, Google, and University of Oxford, partially supported by the Leverhulme Trust.


Cite this article

Günlük, O., Kalagnanam, J., Li, M. et al. Optimal decision trees for categorical data via integer programming. J Glob Optim 81, 233–260 (2021). https://doi.org/10.1007/s10898-021-01009-y

