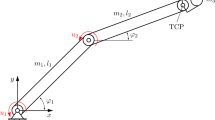

In an optimal control problem, one seeks a time-varying input to a dynamical system that stabilizes a given target trajectory while minimizing a particular cost function. That is, for any initial condition, one tries to find a control that drives the state to this target trajectory in the cheapest way. We consider the inverted pendulum on a moving cart as an ideal example for investigating the solution structure of a nonlinear optimal control problem. Because the pendulum system is low-dimensional, illustrations can be used to clarify the geometry of the solution set. We are interested in the value function, that is, the optimal cost associated with each initial condition, as well as the control input that achieves this optimum. We consider different representations of the value function that include both globally and locally optimal solutions. Via Pontryagin’s maximum principle, we relate the optimal control inputs to trajectories on the smooth stable manifold of a Hamiltonian system. By combining these results, we can make firm statements about the existence and smoothness of the solution set.
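The link between optimal controls and the stable manifold of a Hamiltonian system is easiest to see in the linear-quadratic approximation near the upright equilibrium. There, minimizing the Pontryagin Hamiltonian over `u` turns the state/costate equations into a linear system, its stable manifold is the stable eigenspace, which is the graph `p = P x`, and the value function is `V(x) = 0.5 x^T P x` with `P` solving the algebraic Riccati equation. The sketch below is only an illustration under stated assumptions: the normalized pendulum model `theta'' = sin(theta) - u cos(theta)`, the weights `Q` and `R`, and all function names are hypothetical and not taken from the paper, and the code does not reproduce the paper's nonlinear computations.

```python
import numpy as np

# ASSUMPTION: hypothetical normalized pendulum-on-cart model
# theta'' = sin(theta) - u*cos(theta), linearized about the upright
# equilibrium (theta, theta') = (0, 0). This is NOT the paper's model.
A = np.array([[0.0, 1.0],
              [1.0, 0.0]])
B = np.array([[0.0],
              [-1.0]])

# ASSUMPTION: hypothetical running cost 0.5 * (x^T Q x + u^T R u).
Q = np.eye(2)
R = np.array([[1.0]])


def hamiltonian_matrix(A, B, Q, R):
    """Matrix of the linear Hamiltonian system in z = (x, p).

    With H(x, p, u) = 0.5*(x^T Q x + u^T R u) + p^T (A x + B u),
    minimizing H over u gives u = -R^{-1} B^T p; substituting into
    x' = dH/dp and p' = -dH/dx yields z' = Ham @ z.
    """
    S = B @ np.linalg.solve(R, B.T)
    return np.block([[A, -S],
                     [-Q, -A.T]])


def stable_subspace_graph(A, B, Q, R):
    """Return P such that the stable eigenspace of the Hamiltonian
    matrix is the graph p = P x (the linear stable manifold)."""
    n = A.shape[0]
    eigvals, eigvecs = np.linalg.eig(hamiltonian_matrix(A, B, Q, R))
    stable = eigvecs[:, eigvals.real < 0]      # expect exactly n columns
    X1, X2 = stable[:n, :], stable[n:, :]
    P = np.real(X2 @ np.linalg.inv(X1))
    return 0.5 * (P + P.T)                      # remove round-off asymmetry


def riccati_residual(P):
    """Max-norm of A^T P + P A - P B R^{-1} B^T P + Q (zero for the LQ value function)."""
    res = A.T @ P + P @ A - P @ B @ np.linalg.solve(R, B.T @ P) + Q
    return np.max(np.abs(res))


def simulated_cost(x0, P, dt=1e-2, T=20.0):
    """Cost of the feedback u = -R^{-1} B^T P x along the linear closed loop (RK4)."""
    K = np.linalg.solve(R, B.T @ P)
    Acl = A - B @ K
    M = Q + K.T @ R @ K          # x^T M x = x^T Q x + u^T R u when u = -K x

    def rhs(z):
        x = z[:-1]               # state; last entry accumulates the cost
        return np.concatenate([Acl @ x, [0.5 * x @ M @ x]])

    z = np.concatenate([np.asarray(x0, dtype=float), [0.0]])
    for _ in range(int(round(T / dt))):
        k1 = rhs(z)
        k2 = rhs(z + 0.5 * dt * k1)
        k3 = rhs(z + 0.5 * dt * k2)
        k4 = rhs(z + dt * k3)
        z = z + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return z[-1]


P = stable_subspace_graph(A, B, Q, R)
x0 = np.array([0.1, 0.0])
print("P =", P)
print("Riccati residual:", riccati_residual(P))
print("V(x0) = 0.5 x0^T P x0:", 0.5 * x0 @ P @ x0)
print("simulated cost from x0:", simulated_cost(x0, P))
```

In this linear setting the Riccati residual is at round-off level and the simulated closed-loop cost agrees with `V(x0)`, so the graph of the stable eigenspace encodes both the value function and the optimal feedback. Away from the equilibrium, the stable manifold of the nonlinear Hamiltonian system is in general curved, which is where the geometric questions raised above arise.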




About this article

Cite this article

Osinga, H.M., Hauser, J. The Geometry of the Solution Set of Nonlinear Optimal Control Problems. J Dyn Diff Equat 18, 881–900 (2006). https://doi.org/10.1007/s10884-006-9051-0


DOI: https://doi.org/10.1007/s10884-006-9051-0

Keywords

- Hamilton–Jacobi–Bellman equation

- Hamiltonian system

- optimal control

- Pontryagin’s maximum principle

- global stable manifolds