Abstract

In this work, we revisit the global denoising framework recently introduced by Talebi and Milanfar. We analyze the asymptotic behavior of its mean-squared error restoration performance in the oracle case when the image size tends to infinity. We introduce precise conditions on both the image and the global filter to ensure and quantify this convergence. We also make a clear distinction between two different levels of oracle that are used in that framework. By reformulating global denoising with the classical formalism of diagonal estimation, we conclude that the second-level oracle can be avoided by using Donoho and Johnstone’s theorem, whereas the first-level oracle is mostly required in the sequel. We also discuss open issues concerning the most challenging aspect, namely the extension of these results to the case where neither oracle is required.

Notes

For instance, for NL-means we would have \(K_{i,j} = \mathrm{e}^{-\frac{\Vert P_i-P_j\Vert ^2}{2h^2}}\), with \(P_i\) and \(P_j\) the patches centered at i and j and h a parameter.
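As an illustration of this kernel, the following is a minimal sketch that builds the full matrix \(K\) for a small image. The function name `nlmeans_kernel`, the reflective padding at the borders, the patch radius and the value of `h` are assumptions made for the example; they are not specified in the text.

```python
import numpy as np

def nlmeans_kernel(image, patch_radius=1, h=0.5):
    """Return the N x N matrix K with K[i, j] = exp(-||P_i - P_j||^2 / (2 h^2)).

    Border handling (reflective padding) is an assumption of this sketch.
    """
    padded = np.pad(image, patch_radius, mode="reflect")
    size = 2 * patch_radius + 1
    rows, cols = image.shape
    # One flattened patch per pixel, in row-major pixel order.
    patches = np.array([
        padded[r:r + size, c:c + size].ravel()
        for r in range(rows)
        for c in range(cols)
    ])
    # Squared Euclidean distance between every pair of patches.
    diff = patches[:, None, :] - patches[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=-1)
    return np.exp(-sq_dist / (2.0 * h ** 2))

rng = np.random.default_rng(0)
image = rng.random((6, 6))          # toy image with values in [0, 1]
K = nlmeans_kernel(image, patch_radius=1, h=0.5)
```

The dense \(N \times N\) construction is only practical for very small images; it is used here to make the definition explicit.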

From now on, we will write \(b_j^N\) instead of \(b_j\) as a reminder that the behavior of these coefficients strongly depends on the image size N.

References

Alvarez, L., Gousseau, Y., Morel, J.M.: The Size of Objects in Natural and Artificial Images, vol. 111. Elsevier, Amsterdam (1999). doi:10.1016/S1076-5670(08)70218-0

Awate, S., Whitaker, R.: Image denoising with unsupervised information-theoretic adaptive filtering. In: International Conference on Computer Vision and Pattern Recognition (CVPR 2005), pp. 44–51 (2004)

Brand, M.: Fast low-rank modifications of the thin singular value decomposition. Linear Algebra Appl. 415(1), 20–30 (2006). doi:10.1016/j.laa.2005.07.021. http://linkinghub.elsevier.com/retrieve/pii/S0024379505003812

Brodatz, P.: Textures: A Photographic Album for Artists and Designers. Dover Pubns, New York (1966)

Buades, A., Coll, B., Morel, J.M.: A non-local algorithm for image denoising. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2005. CVPR 2005, vol. 2, pp. 60–65. IEEE (2005)

Chan, S.H., Zickler, T., Lu, Y.M.: Demystifying symmetric smoothing filters. arXiv preprint arXiv:1601.00088 (2016)

Dabov, K., Foi, A., Katkovnik, V., Egiazarian, K.: Image denoising by sparse 3-d transform-domain collaborative filtering. IEEE Trans. Image Process. 16(8), 2080–2095 (2007)

Donoho, D.L., Johnstone, I.M., et al.: Ideal denoising in an orthonormal basis chosen from a library of bases. Comp. Rendus l’Acad. Sci. Ser. Math. 319(12), 1317–1322 (1994)

Donoho, D.L., Johnstone, I.M.: Ideal spatial adaptation by wavelet shrinkage. Biometrika 81(3), 425–455 (1994)

Duval, V., Aujol, J.F., Gousseau, Y.: A bias-variance approach for the nonlocal means. SIAM J. Imaging Sci. 4(2), 760–788 (2011)

Facciolo, G., Almansa, A., Aujol, J.F., Caselles, V.: Irregular to regular sampling, denoising, and deconvolution. SIAM MMS 7(4), 1574–1608 (2009). doi:10.1137/080719443

Guichard, F., Moisan, L., Morel, J.M.: A review of PDE models in image processing and image analysis. In: Journal de Physique IV (Proceedings), vol. 12, pp. 137–154. EDP sciences (2002)

Halko, N., Martinsson, P.G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011). doi:10.1137/090771806

Lebrun, M., Buades, A., Morel, J.M.: A nonlocal bayesian image denoising algorithm. SIAM J. Imaging Sci. 6(3), 1665–1688 (2013). doi:10.1137/120874989

Levin, A., Nadler, B., Durand, F., Freeman, W.T.: Patch complexity, finite pixel correlations and optimal denoising. In: ECCV 2012, LNCS, vol. 7576 (Part 5), pp. 73–86 (2012). doi:10.1007/978-3-642-33715-4_6

Mallat, S.: A Wavelet Tour of Signal Processing: The Sparse Way. Academic press, London (2008)

Milanfar, P.: A tour of modern image filtering: New insights and methods, both practical and theoretical. Signal Process. Mag. IEEE 30(1), 106–128 (2013)

Milanfar, P.: Symmetrizing smoothing filters. SIAM J. Imaging Sci. 6(1), 263–284 (2013)

Ordentlich, E., Seroussi, G., Verdu, S., Weinberger, M., Weissman, T.: A discrete universal denoiser and its application to binary images. In: Proceedings of 2003 International Conference on Image Processing, 2003. ICIP 2003, vol. 1, pp. I–117. IEEE (2003)

Pati, Y.C., Rezaiifar, R., Krishnaprasad, P.: Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition. In: 1993 Conference Record of the Twenty-Seventh Asilomar Conference on Signals, Systems and Computers, 1993. pp. 40–44. IEEE (1993)

Pierazzo, N., Rais, M., Morel, J.M., Facciolo, G.: DA3D: fast and data adaptive dual domain denoising. In: 2015 IEEE International Conference on Image Processing (ICIP), pp. 432–436. IEEE (2015)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 60(1), 259–268 (1992)

Talebi, H., Milanfar, P.: Global denoising is asymptotically optimal. In: International Conference on Image Processing (ICIP), pp. 818–822. IEEE. Paris (2014)

Talebi, H., Milanfar, P.: Global image denoising. IEEE Trans. Image Process. 23(2), 755–768 (2014)

Talebi, H., Milanfar, P.: Asymptotic performance of global denoising. SIAM J. Imaging Sci. 9(2), 665–683 (2016)

Weissman, T., Ordentlich, E., Seroussi, G., Verdú, S., Weinberger, M.J.: Universal discrete denoising: known channel. IEEE Trans. Inf Theory 51(1), 5–28 (2005)

Yaroslavsky, L.: Digital Picture Processing: An Introduction. Springer, New York (2012)

Yu, G., Sapiro, G., Mallat, S.: Solving inverse problems with piecewise linear estimators: from Gaussian mixture models to structured sparsity. IEEE Trans. Image Process. 21(5), 2481–2499 (2012). doi:10.1109/TIP.2011.2176743. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6104390. http://www.di.ens.fr/mallat/papiers/SSMS-journal-subm.pdf. http://www.ncbi.nlm.nih.gov/pubmed/22180506

Additional information

The authors would like to thank H. Talebi and P. Milanfar for encouraging and insightful discussions and the anonymous reviewers for critical observations that helped to clarify and improve the paper. This work has been partially funded by the French Research Agency (ANR) under Grant No. ANR-14-CE27-001 (MIRIAM). Most of this work was done while A. Almansa worked with CNRS LTCI at Télécom ParisTech.

Appendices

Appendix 1: Global Denoising for Gaussian Textures on Fourier Basis

Proposition 3

Let \( {\mathbf {u}}= {\mathbf {h}} * {\mathbf {m}}\) be a Gaussian texture where \(m_i \sim {\mathcal {N}}(0,\tau ^2)\) iid, and the kernel \({\mathbf {h}}\in \ell ^1 ({\mathbb {Z}}^2)\) has a smooth Fourier transform \(\widehat{{\mathbf {h}}}\in L^1\left( \left[ -\frac{\pi }{2},\frac{\pi }{2}\right] ^2\right) \cap C^\infty \). Then, when V is chosen as a Fourier or DCT basis and \(\lambda \) as the optimal oracle eigenvalues for that basis, the MSE for global denoising is upper-bounded by

Asymptotically, we have a strictly positive MSE bound

Proof

Consider for simplicity square images of size \(N=n^2\) with \(n\in {\mathbb {N}}\).

Now consider \({\mathbf {m}}^N\), the restriction of \({\mathbf {m}}\) to \(I_N = \left[ -\frac{n}{2},\frac{n}{2}\right) ^2 \cap {\mathbb {Z}}^2\).

In the truncated case, the convolution is understood in the periodic sense, so that we can write

with \(\widehat{{\mathbf {h}}_N}(k) = {\hat{h}}(\frac{2 \pi k}{n})\).
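To make the periodic convention concrete, here is a minimal sketch that synthesizes a truncated Gaussian texture by periodic convolution, computed through the discrete Fourier transform, and compares it with a direct circular sum. The box kernel, the grid size `n = 16` and the function name `periodic_gaussian_texture` are assumptions chosen for the example only.

```python
import numpy as np

def periodic_gaussian_texture(kernel, n, tau, rng):
    """Return (u, m) with u = kernel * m in the periodic sense on an n x n grid."""
    # White Gaussian noise with m_i ~ N(0, tau^2), iid.
    m = rng.normal(0.0, tau, size=(n, n))
    # Zero-pad the kernel to the grid size so both DFTs have the same shape.
    h = np.zeros((n, n))
    kh, kw = kernel.shape
    h[:kh, :kw] = kernel
    # Periodic convolution is a pointwise product in the DFT domain.
    u = np.fft.ifft2(np.fft.fft2(h) * np.fft.fft2(m)).real
    return u, m

rng = np.random.default_rng(0)
kernel = np.ones((3, 3)) / 9.0      # hypothetical smoothing kernel
u, m = periodic_gaussian_texture(kernel, n=16, tau=1.0, rng=rng)

# Direct periodic convolution for comparison: u[x] = sum_s kernel[s] * m[x - s].
direct = sum(
    kernel[a, b] * np.roll(m, shift=(a, b), axis=(0, 1))
    for a in range(3)
    for b in range(3)
)
```

Both computations agree up to floating-point error, which is the discrete counterpart of writing the truncated texture as a product in the Fourier domain.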

Now if we decompose \({\mathbf {u}}_N\) in the Fourier basis \(V=F^*\), then

So the upper bound given in Eq. (14) for the optimal MSE is

In the asymptotic case \(N\rightarrow \infty \), a simple Riemann sum argument, based on the regularity of \({\hat{h}}\) and on the known value of \(\int _{\mathrm{d}I} |{\hat{m}}| = |\mathrm{d}I|\, {\mathbb {E}}(|\widehat{m_N}(k)|) = \tau \sqrt{\frac{2}{\pi }}|\mathrm{d}I|\) over a small interval \(\mathrm{d}I\) (the mean absolute deviation of a Gaussian), leads to the conclusion that the upper bound tends to a strictly positive constant.

\(\square \)
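The constant \(\tau \sqrt{2/\pi }\) used in the proof is the mean absolute value of a centered Gaussian variable with standard deviation \(\tau \). The following sketch checks this identity numerically for a real scalar Gaussian; it does not model the Fourier coefficients themselves, and the value `tau = 2.0` and the sample size are arbitrary choices for the example.

```python
import numpy as np

tau = 2.0                                   # arbitrary standard deviation
rng = np.random.default_rng(0)
samples = rng.normal(loc=0.0, scale=tau, size=1_000_000)

# Monte Carlo estimate of E|X| for X ~ N(0, tau^2).
empirical = np.mean(np.abs(samples))
# Closed-form value: tau * sqrt(2 / pi).
theoretical = tau * np.sqrt(2.0 / np.pi)
```

With one million samples, the two values should agree to about two decimal places.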

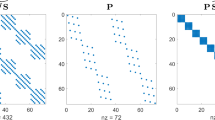

Left column: decay of the \(|b_j^N|\) for each size, with the result of the model fitting (dotted lines), for the image simpson in the different bases (from top to bottom: DCT, wavelet and O-NLM). Right column: decay of \({{\mathrm{MSE}}}^\star \) (blue), the upper bound from (14) (orange) and the fitted bound (yellow) (Color figure online)

Left column: decay of the \(|b_j^N|\) for each size, with the result of the model fitting (dotted lines), for the image bricks in the different bases (from top to bottom: DCT, wavelet and O-NLM). Right column: decay of \({{\mathrm{MSE}}}^\star \) (blue), the upper bound from (14) (orange) and the fitted bound (yellow) (Color figure online)

Left column: decay of the \(|b_j^N|\) for each size, with the result of the model fitting (dotted lines), for the image sparse in the different bases (from top to bottom: DCT, wavelet and O-NLM). Right column: decay of \({{\mathrm{MSE}}}^\star \) (blue), the upper bound from (14) (orange) and the fitted bound (yellow) (Color figure online)

Left column: decay of the \(|b_j^N|\) for each size, with the result of the model fitting (dotted lines), for the image synthetic in the different bases (from top to bottom: DCT, wavelet and O-NLM). Right column: decay of \({{\mathrm{MSE}}}^\star \) (blue), the upper bound from (14) (orange) and the fitted bound (yellow) (Color figure online)

Left column: decay of the \(|b_j^N|\) for each size, with the result of the model fitting (dotted lines), for the image man in the different bases (from top to bottom: DCT, wavelet and O-NLM). Right column: decay of \({{\mathrm{MSE}}}^\star \) (blue), the upper bound from (14) (orange) and the fitted bound (yellow) (Color figure online)

Appendix 2: An Oracle Filter that Provides Asymptotically Zero MSE

Proposition 4

Consider an oracle filter that denoises \({\tilde{\mathbf {u}}}\) by averaging, at each pixel i, all values \({\tilde{\mathbf {u}}}_j\) such that \(|u_i - u_j| \le \varepsilon \), for a given threshold \(\varepsilon >0\) and an infinite image \({\mathbf {U}}\) with values in [0, 1]. Then, the value \({{\mathrm{MSE}}}(\widehat{{\mathbf {u}}^N} | {{\mathbf {u}}^N})\) converges to a limit smaller than \(\varepsilon ^2\).

Proof

Indeed, let \(n = \lceil \frac{1}{\varepsilon } \rceil \), for \(0\le k <n\) let \(C_k = \{i \,{\in }\, \varOmega ;\; u_i^N \,{\in }\, \left[ \frac{k}{n}, \frac{k+1}{n} \right] \}\), and for every pixel i let \(A_i^{\varepsilon } = \{j; \; |u_i^N-u_j^N|\le \varepsilon \}\). It is clear that if \(i \in C_k\), then \(C_k \subset A_i^{\varepsilon }\). We can write

For a given value of \(\varepsilon \), this term converges to \(\varepsilon ^2\) when \(N\,{\rightarrow }\, \infty \). \(\square \)
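The oracle filter of Proposition 4 can be sketched as follows on a finite image. This is only an illustration under stated assumptions: the clean image is drawn uniformly at random in [0, 1], the noise is Gaussian with standard deviation `sigma`, and the names `oracle_level_set_filter`, `eps` and `sigma` are introduced for the example. The sets \(A_i^{\varepsilon }\) are computed from the clean image, which is what makes the filter an oracle.

```python
import numpy as np

def oracle_level_set_filter(clean, noisy, eps):
    """For each pixel i, average noisy[j] over all j with |clean[i] - clean[j]| <= eps."""
    u = clean.ravel()
    v = noisy.ravel()
    # weights[i, j] = 1 when pixel j belongs to A_i^eps, 0 otherwise.
    weights = (np.abs(u[:, None] - u[None, :]) <= eps).astype(float)
    # Each row contains at least pixel i itself, so the row sums are >= 1.
    estimate = (weights @ v) / weights.sum(axis=1)
    return estimate.reshape(clean.shape)

rng = np.random.default_rng(0)
sigma, eps = 0.1, 0.05                      # assumed noise level and threshold
clean = rng.random((40, 40))                # toy image with values in [0, 1]
noisy = clean + rng.normal(0.0, sigma, size=clean.shape)

denoised = oracle_level_set_filter(clean, noisy, eps)
mse_noisy = np.mean((noisy - clean) ** 2)
mse_oracle = np.mean((denoised - clean) ** 2)
```

At each pixel the bias of the estimate is at most `eps` in absolute value, and its variance is `sigma**2` divided by the number of averaged pixels, so the variance term vanishes as the image grows while the squared bias stays below `eps**2`.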

Appendix 3: Additional Experiments

Figures 12, 13, 14, 15 and 16 show the detailed asymptotic convergence results for the images in Fig. 7 and Table 1.

About this article

Cite this article

Houdard, A., Almansa, A. & Delon, J. Demystifying the Asymptotic Behavior of Global Denoising. J Math Imaging Vis 59, 456–480 (2017). https://doi.org/10.1007/s10851-017-0716-6


DOI: https://doi.org/10.1007/s10851-017-0716-6