Abstract

Let \(\mathcal {A}\) be a Weyl arrangement in an \(\ell \)-dimensional Euclidean space. The freeness of restrictions of \(\mathcal {A}\) was first settled by a case-by-case method by Orlik and Terao (Tôhoku Math J 52: 369–383, 1993), and later by a uniform argument by Douglass (Represent Theory 3: 444–456, 1999). Prior to this, Orlik and Solomon (Proc Symp Pure Math Amer Math Soc 40(2): 269–292, 1983) had completely determined the exponents of these arrangements by exhaustion. A classical result due to Orlik et al. (Adv Stud Pure Math 8: 461–77, 1986) asserts that the exponents of any \(A_1\) restriction, i.e., the restriction of \(\mathcal {A}\) to a hyperplane, are given by \(\{m_1,\ldots , m_{\ell -1}\}\), where \(\exp (\mathcal {A})=\{m_1,\ldots , m_{\ell }\}\) with \(m_1 \le \cdots \le m_{\ell }\). As a next step towards a conceptual understanding of the restriction exponents, we investigate the \(A_1^2\) restrictions, i.e., the restrictions of \(\mathcal {A}\) to the subspaces of type \(A_1^2\). In this paper, we give a combinatorial description of the exponents and describe bases for the modules of derivations of the \(A_1^2\) restrictions in terms of the classical notion of related roots by Kostant (Proc Nat Acad Sci USA 41:967–970, 1955).


1 Introduction

Assume that \(V=\mathbb {R}^\ell \) with the standard inner product \((\cdot ,\cdot )\). Denote by \(\Phi \) an irreducible (crystallographic) root system in V and by \(\Phi ^+\) a positive system of \(\Phi \). Let \(\mathcal {A}\) be the Weyl arrangement of \(\Phi ^+\). Denote by \(L(\mathcal {A})\) the intersection poset of \(\mathcal {A}\). For each \(X\in L(\mathcal {A})\), we write \({\mathcal {A}}^{X}\) for the restriction of \({\mathcal {A}}\) to X. Set \(L_p(\mathcal {A}):=\{X \in L(\mathcal {A}) \mid \mathrm{codim}(X)=p\}\) for \(0 \le p \le \ell \). Let W be the Weyl group of \(\Phi \) and let \(m_1, \ldots , m_\ell \) with \(m_1 \le \cdots \le m_\ell \) be the exponents of W.

Notation 1.1

If \(X \in L_p(\mathcal {A})\), then \(\Phi _X := \Phi \cap X^{\perp }\) is a root system of rank p. A positive system of \(\Phi _X\) is taken to be \(\Phi ^+_X:=\Phi ^+\cap \Phi _X\). Let \(\Delta _X\) be the base of \(\Phi _X\) associated with \(\Phi ^+_X\).

Definition 1.2

A subspace \(X\in L(\mathcal {A})\) is said to be of type T (or T for short) if \(\Phi _X\) is a root system of type T. In this case, the restriction \(\mathcal {A}^{X}\) is said to be of type T (or T).

Weyl arrangements are important examples of free arrangements; that is, for a Weyl arrangement \(\mathcal {A}\), the module \(D(\mathcal {A})\) of \(\mathcal {A}\)-derivations is a free module. Furthermore, the exponents of \(\mathcal {A}\) coincide with the exponents of W, i.e., \(\exp ({\mathcal {A}})=\{m_1,\ldots , m_{\ell }\}\) (e.g., [23]). Orlik and Solomon [19] showed, using the classification of finite reflection groups, that the characteristic polynomial of the restriction \({\mathcal {A}}^{X}\) (\(X \in L(\mathcal {A})\)) of an arbitrary Weyl arrangement \(\mathcal {A}\) factors completely. Orlik and the second author [22] proved the stronger statement that \(D(\mathcal {A}^X)\) is free by a case-by-case study. Soon afterwards, Douglass [8] gave a uniform proof of the freeness using the representation theory of Lie groups.

We are interested in studying the exponents of \(\mathcal {A}^X\) and bases for \(D(\mathcal {A}^X)\). For a general X, this is not an easy task. The only known general result, due to Orlik, Solomon and the second author [20], asserts that for each \(X\in \mathcal {A}\), i.e., X of type \(A_1\), \(\exp (\mathcal {A}^X)=\{m_1,\ldots , m_{\ell -1}\}\) and a basis for \(D(\mathcal {A}^X)\) consists of the restrictions to X of the corresponding basic derivations. In this paper, we consider the “next” general class of restrictions, namely those with X of type \(A_1^2\). We prove that \(\exp (\mathcal {A}^X)\) is obtained from \(\exp (\mathcal {A})\) by removing either the two largest exponents, or the largest and the middle exponent, depending on a combinatorial condition on X. Furthermore, similarly to the result of [20], our method produces an explicit basis for \(D(\mathcal {A}^X)\) in each case. The main combinatorial ingredient in our description is the following concept defined by Kostant:

Definition 1.3

[12] Two non-proportional roots \(\beta _1\), \(\beta _2\) are said to be related if

-

(a)

\((\beta _1,\beta _2)=0\),

-

(b)

for any \(\gamma \in \Phi {\setminus } \{\pm \beta _1,\pm \beta _2\}\), \((\gamma ,\beta _i)=0\) implies \((\gamma ,\beta _{3-i})=0\) for all \(i \in \{1,2\}\).

In this case, we call the set \(\{\beta _1,\beta _2\}\) relatedly orthogonal (RO), and the subspace \(X=H_{\beta _1} \cap H_{\beta _2}\) is said to be RO.

Remark 1.4

The relatedly orthogonal sets presumably first appeared in [12], wherein Kostant required \(\beta _1,\beta _2\) to have the same length and allowed a root to be related to itself and to its negative. Green called the relatedly orthogonal sets strongly orthogonal and defined strong orthogonality in a more general setting [9, Definition 4.4.1]. It should be noted that the notion of strongly orthogonal sets is probably better known with the definition that neither the sum nor the difference of the two roots is a root. For every \(\beta \in \Phi \), set \(\beta ^\perp :=\{\alpha \in \Phi \mid (\alpha ,\beta )=0\}\). Condition (b) in Definition 1.3 can then be written symbolically as (b’) \(\beta _1^{\perp }{\setminus } \{\pm \beta _2\}=\beta _2^{\perp } {\setminus } \{\pm \beta _1\}\).
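Since (b’) only involves the sets \(\beta _i^\perp \), the RO property is easy to test by machine. The following Python sketch is our own illustration, not part of the original argument; it assumes the standard coordinate realizations of \(D_n\) (roots \(\pm \epsilon _i\pm \epsilon _j\)) and \(B_n\) (additionally \(\pm \epsilon _i\)) as in [6], and all function names are hypothetical.

```python
from itertools import combinations


def roots_D(n):
    """All roots +-e_i +-e_j (i < j) of D_n, as integer tuples."""
    roots = []
    for i, j in combinations(range(n), 2):
        for si in (1, -1):
            for sj in (1, -1):
                v = [0] * n
                v[i], v[j] = si, sj
                roots.append(tuple(v))
    return roots


def roots_B(n):
    """Roots of B_n: the roots of D_n together with the short roots +-e_i."""
    roots = roots_D(n)
    for i in range(n):
        for s in (1, -1):
            v = [0] * n
            v[i] = s
            roots.append(tuple(v))
    return roots


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def neg(v):
    return tuple(-a for a in v)


def is_relatedly_orthogonal(b1, b2, roots):
    """Test condition (a) and the reformulation (b') of condition (b)."""
    if dot(b1, b2) != 0:  # (a); orthogonal roots are never proportional
        return False
    excluded = {b1, neg(b1), b2, neg(b2)}
    perp1 = {g for g in roots if dot(g, b1) == 0} - excluded
    perp2 = {g for g in roots if dot(g, b2) == 0} - excluded
    return perp1 == perp2  # (b')


print(is_relatedly_orthogonal((1, -1, 0, 0), (1, 1, 0, 0), roots_D(4)))           # True
print(is_relatedly_orthogonal((1, -1, 0, 0, 0), (0, 0, 1, -1, 0), roots_D(5)))    # False
```

Here \(\{\epsilon _1-\epsilon _2,\epsilon _1+\epsilon _2\}\) is RO in \(D_4\), while \(\{\epsilon _1-\epsilon _2,\epsilon _3-\epsilon _4\}\) is not RO in \(D_5\): the root \(\epsilon _3+\epsilon _5\) is orthogonal to \(\epsilon _1-\epsilon _2\) but not to \(\epsilon _3-\epsilon _4\).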

Let \(\mathrm{h}\) be the Coxeter number of W. For \(\phi \in D(\mathcal {A})\), let \(\phi ^X\) be the restriction of \(\phi \) to X. We now formulate our main results.

Theorem 1.5

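Assume that \(\ell \ge 3\). If \(X\in L(\mathcal {A})\) is of type \(A_1^2\), then \(\mathcal {A}^X\) is free with
$$\begin{aligned} \exp (\mathcal {A}^X)= {\left\{ \begin{array}{ll} \{m_1,\ldots , m_{\ell -2}\} & \text{ if } X \text{ is not RO},\\ \{m_1,\ldots , m_{\ell -1}\}{\setminus } \{\mathrm{h}/2\} & \text{ if } X \text{ is RO}. \end{array}\right. } \end{aligned}$$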

Theorem 1.6

Assume that \(\ell \ge 3\).

-

(i)

Suppose that X is \(A_1^2\) and not RO. Let \(\{\varphi _{1}, \ldots , \varphi _\ell \}\) be a basis for \(D(\mathcal {A})\) with \(\deg {\varphi }_j=m_j\) (\(1 \le j \le \ell \)). Then, \(\{ {\varphi }^X_1, \ldots , {\varphi }^X_{\ell -2}\}\) is a basis for \(D(\mathcal {A}^X)\).

-

(ii)

Suppose that X is both \(A_1^2\) and RO. Then, \(\Phi \) must be of type \(D_\ell \) with \(\ell \ge 3\). Furthermore, a basis for \(D(\mathcal {A}^X)\) is given by \(\{ \tau _1^X, \ldots , \tau _{\ell -2}^X\}\), where

$$\begin{aligned} \tau _i := \sum _{k=1}^\ell x_k^{2i-1}\partial /\partial x_k\,\, (1 \le i \le \ell -2). \end{aligned}$$
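As a quick numerical illustration (ours, not part of the original text), take \(\Phi =D_5\). Its exponents are \(1,3,4,5,7\) and \(\mathrm{h}=8\), so \(\mathrm{h}/2=4\). Removing the two largest exponents, respectively the largest exponent and the middle exponent \(\mathrm{h}/2\), as described in the introduction, gives
$$\begin{aligned} X \text{ not RO}:\ \{1,3,4\}, \qquad X \text{ RO}:\ \{1,3,5\}=\{\deg \tau _1,\deg \tau _2,\deg \tau _3\}, \end{aligned}$$
since \(\deg \tau _i=2i-1\); the RO case is thus consistent with the degrees of the basis in Theorem 1.6(ii).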

Theorem 1.5 gives a little extra information: when X is RO, half of the Coxeter number is an exponent of W (hence, it lies in the “middle” of the exponent sequence). We emphasize that, given [22], Theorem 1.5 can be verified by inspecting the numerical results in [19] (the case of type D is rather non-trivial). It is of interest to find a proof free of case-by-case considerations. In this paper, we provide a conceptual proof with minimal use of the classification of root systems: Theorem 1.5 holds trivially for \(\ell =3\), and we use the classification only up to rank 4 (in the proofs of some supporting arguments). As far as we are aware, a formula for the exponents of a restricted Weyl arrangement in terms of the RO condition is new. Our proof shows how the RO concept leads to the exponent description, and we hope that it will open a new direction for future research on the exponents through the combinatorial properties of the root system.

Theorem 1.6, however, seems less straightforward, even if one relies on the classification. In comparison with the classical result of [20], Theorem 1.6(i) gives a somewhat more flexible construction of a basis for \(D(\mathcal {A}^X)\). Namely, the desired basis is obtained by restricting to X any homogeneous basis for \(D(\mathcal {A})\), without the need for basic derivations. Nevertheless, the remaining part of the basis construction [Theorem 1.6(ii)] cannot avoid the classification. A basis for \(D(\mathcal {A})\) may contain more than one derivation of the same degree. Hence, some additional computation is required to determine which derivation vanishes after restriction to X (see Remark 2.10 and Example 5.2).

Beyond the \(A_1^2\) restrictions, a conceptual understanding of restrictions of higher codimension, or of irreducible type, is much harder to obtain (see Remarks 3.15 and 4.5). The numerical results of [19] indicate a similar formula for the exponents of any \(A_1^k\) restriction (\(k\ge 2\)) in terms of the strongly orthogonal sets of [9]. The details are left for future research.

The remainder of this paper is organized as follows. In Sect. 2, we first review preliminary results on free arrangements and their exponents, and a recent result (Combinatorial Deletion) relating freeness to the combinatorics of arrangements (Theorem 2.8). We also prove an important result of the paper, a construction of a basis for \(D(\mathcal {A}^H)\) (\(H\in \mathcal {A}\)) from a basis for \(D(\mathcal {A})\) when \(\mathcal {A}\) and \(\mathcal {A}{\setminus }\{H\}\) are both free (Theorem 2.9). We then review background on root systems, Weyl groups and Weyl arrangements. Based on the Combinatorial Deletion Theorem, we provide a slightly different proof of the result of [20] (Remark 2.15). In Sect. 3, we compute the cardinality of every \(A_1^2\) restriction (Proposition 3.13). This count can be expressed in terms of the local and global second smallest exponents of the Weyl groups (Remark 3.14). In Sect. 4, we first prove the freeness part of Theorem 1.5, i.e., the freeness of \(A_1^2\) restrictions (Theorem 4.4). The proof is different from (and more direct than) the proofs presented in [8, 22]. We then complete the proof of Theorem 1.5 by proving the exponent part (Theorem 4.6). This proof has two halves, presented in Theorems 4.8 and 4.28, respectively. We close the section with two results: local–global inequalities on the second smallest exponents and the largest coefficients of the highest roots (Corollary 4.34), and a result on the Coxeter number of any irreducible component of the subsystem orthogonal to the highest root in the simply laced cases (Corollary 4.35). In Sect. 5, we present the proof of Theorem 1.6 (Theorem 5.1 and Example 5.2). In Sect. 6, we give an alternative, bijective proof of Theorem 4.30, one of the key ingredients in the proof of the exponent part of Theorem 1.5.

2 Preliminaries

2.1 Free arrangements and their exponents

For basic concepts and results of free arrangements, we refer the reader to [21].

Let \(\mathbb {K}\) be a field and let \(V := \mathbb {K}^\ell \). A hyperplane in V is a subspace of V of codimension 1. An arrangement is a finite set of hyperplanes in V. We choose a basis \(\{x_1,\ldots , x_\ell \}\) for \(V^*\) and let \(S:= \mathbb { K}[x_1,\ldots , x_\ell ]\). Fix an arrangement \(\mathcal {A}\) in V. The defining polynomial \(Q(\mathcal {A})\) of \(\mathcal {A}\) is defined by
$$\begin{aligned} Q(\mathcal {A}):=\prod _{H\in \mathcal {A}}\alpha _H, \end{aligned}$$

where \( \alpha _H=a_1x_1+\cdots +a_\ell x_\ell \in V^*{\setminus }\{0\}\), \(a_i \in \mathbb {K}\) and \(H = \ker \alpha _H\). The number of hyperplanes in \(\mathcal {A}\) is denoted by \(|\mathcal {A}|\). It is easy to see that \(Q(\mathcal {A})\) is a homogeneous polynomial in S and \(\deg Q(\mathcal {A})=|\mathcal {A}|\).

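The intersection poset of \(\mathcal {A}\), denoted by \(L(\mathcal {A})\), is defined to be
$$\begin{aligned} L(\mathcal {A}):=\Big \{\bigcap _{H\in \mathcal {B}}H \;\Big |\; \mathcal {B}\subseteq \mathcal {A}\Big \}, \end{aligned}$$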

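where the partial order is given by reverse inclusion. We agree that \(V \in L(\mathcal {A})\) (the intersection over \(\mathcal {B}=\emptyset \)) is the unique minimal element. For each \(X \in L(\mathcal {A})\), we define the localization of \(\mathcal {A}\) on X by
$$\begin{aligned} \mathcal {A}_X:=\{H\in \mathcal {A}\mid X\subseteq H\}, \end{aligned}$$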

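and define the restriction \({\mathcal {A}}^{X}\) of \({\mathcal {A}}\) to X by
$$\begin{aligned} \mathcal {A}^X:=\{X\cap H\mid H\in \mathcal {A}{\setminus }\mathcal {A}_X,\ X\cap H\ne \emptyset \}. \end{aligned}$$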

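The Möbius function \(\mu : L(\mathcal {A}) \rightarrow \mathbb Z\) is defined recursively by
$$\begin{aligned} \mu (V)=1, \qquad \mu (X)=-\sum _{Y\in L(\mathcal {A}),\ X\subsetneq Y}\mu (Y)\quad (X\ne V). \end{aligned}$$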

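The characteristic polynomial \(\chi (\mathcal {A}, t)\) of \(\mathcal {A}\) is defined by
$$\begin{aligned} \chi (\mathcal {A},t):=\sum _{X\in L(\mathcal {A})}\mu (X)\,t^{\dim X}. \end{aligned}$$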

A derivation of S over \( \mathbb {K}\) is a \(\mathbb {K}\)-linear map \(\phi :S\rightarrow S\) such that \(\phi (fg)=f\phi (g)+g\phi (f)\) for all \(f,g \in S\). Let \(\mathrm{{Der}}(S)\) denote the set of derivations of S over \( \mathbb {K}\). Then, \(\mathrm{{Der}}(S)\) is a free S-module with basis \(\{\partial _1,\ldots , \partial _\ell \}\), where \(\partial _i:=\partial /\partial x_i\) for \(1 \le i \le \ell \). Define an S-submodule of \(\mathrm{{Der}}(S)\), called the module of \(\mathcal {A}\)-derivations, by
$$\begin{aligned} D(\mathcal {A}):=\{\phi \in \mathrm{Der}(S)\mid \phi (\alpha _H)\in \alpha _H S \text{ for all } H\in \mathcal {A}\}. \end{aligned}$$

A nonzero element \(\phi = f_1\partial _1+\cdots +f_\ell \partial _\ell \in \mathrm{Der}(S)\) is homogeneous of degree b if each nonzero polynomial \(f_i\in S\) (\(1 \le i \le \ell \)) is homogeneous of degree b. We then write \(\deg \phi =b\). The arrangement \(\mathcal {A}\) is called free if \(D(\mathcal {A})\) is a free S-module. If \(\mathcal {A}\) is free, then \(D(\mathcal {A})\) admits a basis \(\{\phi _1, \ldots , \phi _\ell \}\) consisting of homogeneous derivations [21, Proposition 4.18]. Such a basis is called a homogeneous basis. Although a homogeneous basis need not be unique, the degrees of its elements, counted with multiplicity and up to order, depend only on \(\mathcal {A}\) [21, Proposition A.24]. In this case, we call \(\deg \phi _1, \ldots , \deg \phi _\ell \) the exponents of \(\mathcal {A}\), store them in a multiset denoted by \(\exp (\mathcal {A})\) and write
$$\begin{aligned} \exp (\mathcal {A}):=\{\deg \phi _1,\ldots ,\deg \phi _\ell \}. \end{aligned}$$

By a result of the second author, when an arrangement is free, its exponents are precisely the roots of its characteristic polynomial.

Theorem 2.1

(Factorization) If \(\mathcal {A}\) is free with \(\exp (\mathcal {A}) = \{d_1, \ldots , d_\ell \}\), then
$$\begin{aligned} \chi (\mathcal {A},t)=\prod _{i=1}^{\ell }(t-d_i). \end{aligned}$$

Proof

See [27] or [21, Theorem 4.137]. \(\square \)

For \(\phi _1, \ldots , \phi _\ell \in D(\mathcal {A})\), we define the \((\ell \times \ell )\)-matrix \(M(\phi _1, \ldots , \phi _\ell )\) as the matrix with (i, j)th entry \(\phi _j(x_i)\). In general, it is difficult to determine whether a given arrangement is free or not. However, using the following criterion, we can verify that a candidate for a basis is actually a basis.

Theorem 2.2

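(Saito’s criterion) Let \(\phi _1, \ldots , \phi _\ell \in D(\mathcal {A})\). Then, \(\{\phi _1, \ldots , \phi _\ell \}\) forms a basis for \(D(\mathcal {A})\) if and only if
$$\begin{aligned} \det M(\phi _1, \ldots , \phi _\ell )=c\,Q(\mathcal {A}) \quad \text{ for some } c\in \mathbb {K}^{\times }. \end{aligned}$$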

In particular, if \(\phi _1, \ldots , \phi _\ell \) are all homogeneous, then \(\{\phi _1, \ldots , \phi _\ell \}\) forms a basis for \(D(\mathcal {A})\) if and only if the following two conditions are satisfied:

-

(i)

\(\phi _1, \ldots , \phi _\ell \) are independent over S,

-

(ii)

\(\sum _{i=1}^\ell \deg \phi _i = |\mathcal {A}|\).

Proof

See [21, Theorems 4.19 and 4.23]. \(\square \)
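For instance (an illustration of ours), consider the Weyl arrangement \(\mathcal {A}\) of type \(B_2\) in \(\mathbb {R}^2\), with \(Q(\mathcal {A})=x_1x_2(x_1-x_2)(x_1+x_2)\), and the derivations \(\theta _1=x_1\partial _1+x_2\partial _2\) and \(\theta _2=x_1^3\partial _1+x_2^3\partial _2\). Both lie in \(D(\mathcal {A})\), since \(x_1^3\pm x_2^3\) is divisible by \(x_1\pm x_2\), and
$$\begin{aligned} \det M(\theta _1,\theta _2)=\det \begin{pmatrix} x_1 & x_1^3\\ x_2 & x_2^3\end{pmatrix}=x_1x_2^3-x_1^3x_2=-Q(\mathcal {A}). \end{aligned}$$
By Theorem 2.2, \(\{\theta _1,\theta _2\}\) is a basis for \(D(\mathcal {A})\); consistently, \(\deg \theta _1+\deg \theta _2=1+3=4=|\mathcal {A}|\).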

In addition to Saito’s criterion, we have a way to check whether a set of derivations is part of a homogeneous basis. Here and below, the notation \({\{d_1, \ldots , d_\ell \}_{\le }}\) indicates that \(d_1\le \cdots \le d_\ell \).

Theorem 2.3

Let \(\mathcal {A}\) be a free arrangement with \(\exp (\mathcal {A}) =\{d_1, \ldots , d_\ell \}_{\le }\). If \(\phi _1, \ldots , \phi _k \in D(\mathcal {A})\) satisfy for \(1 \le i\le k\),

-

(i)

\(\deg \phi _i =d_i\),

-

(ii)

\(\phi _i \notin S\phi _1 +\cdots +S\phi _{i-1}\),

then \(\phi _1, \ldots , \phi _k\) may be extended to a basis for \(D(\mathcal {A})\).

Proof

See [21, Theorem 4.42]. \(\square \)

Definition 2.4

For \(X\in L(\mathcal {A})\), let \(I=I(X):=\sum _{H\in \mathcal {A}_X}\alpha _HS\) and \({\overline{S}}:=S/I\). For \(\phi \in D(\mathcal {A})\), define \(\phi ^X \in \mathrm{Der}({\overline{S}})\) by \(\phi ^X(f+I)=\phi (f)+I\). We call \(\phi ^X\) the restriction of \(\phi \) to X.

Proposition 2.5

If \(\phi \in D(\mathcal {A})\), then \(\phi ^X\in D(\mathcal {A}^X)\). If \(\phi ^X \ne 0\), then \(\deg \phi ^X=\deg \phi \).

Proof

See [20, Lemma 2.12]. \(\square \)

Fix \(H \in \mathcal {A}\) and denote \(\mathcal {A}':=\mathcal {A}{\setminus } \{H\}\) and \(\mathcal {A}'':=\mathcal {A}^H\). We call \((\mathcal {A}, \mathcal {A}', \mathcal {A}'')\) the triple with respect to the hyperplane \(H \in \mathcal {A}\).

Proposition 2.6

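Define \(h : D(\mathcal {A}') \rightarrow D(\mathcal {A})\) by \(h(\phi ) = \alpha _H\phi \) and \(q : D(\mathcal {A}) \rightarrow D(\mathcal {A}'')\) by \(q(\phi ) ={\phi }^H\). The sequence
$$\begin{aligned} 0\longrightarrow D(\mathcal {A}')\xrightarrow {\;h\;}D(\mathcal {A})\xrightarrow {\;q\;}D(\mathcal {A}'') \end{aligned}$$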

is exact.

Proof

See [21, Proposition 4.45]. \(\square \)

The freeness of any two members of the triple, under a certain condition on their exponents, implies the freeness of the third.

Theorem 2.7

(Addition–Deletion) Let \(\mathcal {A}\) be a non-empty arrangement and let \(H \in \mathcal {A}\). Then, two of the following imply the third:

-

(1)

\(\mathcal {A}\) is free with \(\exp (\mathcal {A}) = \{d_1, \ldots , d_{\ell -1}, d_\ell \}\).

-

(2)

\(\mathcal {A}'\) is free with \(\exp (\mathcal {A}')=\{d_1, \ldots , d_{\ell -1}, d_\ell -1\}\).

-

(3)

\(\mathcal {A}''\) is free with \(\exp (\mathcal {A}'') = \{d_1, \ldots , d_{\ell -1}\}\).

Moreover, all three hold if \(\mathcal {A}\) and \(\mathcal {A}'\) are both free.

Proof

See [26] or [21, Theorems 4.46 and 4.51]. \(\square \)
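A small illustration (ours): let \(\mathcal {A}\) be the Weyl arrangement of type \(B_2\) in \(\mathbb {R}^2\), defined by \(x_1x_2(x_1-x_2)(x_1+x_2)\), so that \(\exp (\mathcal {A})=\{1,3\}\), and take \(H=\ker (x_1+x_2)\). Then \(\mathcal {A}'\) consists of three lines through the origin, and \(\mathcal {A}''=\mathcal {A}^H\) is the single point \(\{0\}\) of the line H. Every central arrangement of n lines in the plane is free with exponents \(\{1,n-1\}\), and a single point in a line has exponent \(\{1\}\); hence
$$\begin{aligned} \exp (\mathcal {A})=\{1,3\},\qquad \exp (\mathcal {A}')=\{1,3-1\},\qquad \exp (\mathcal {A}'')=\{1\}, \end{aligned}$$
in agreement with (1), (2) and (3) for \(d_1=1\), \(d_2=3\).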

The Addition Theorem and Deletion Theorem above are rather “algebraic”, as they rely on conditions concerning the exponents. Recently, the first author has found “combinatorial” versions of these theorems [2,3,4]. In this paper, we focus on the Deletion Theorem.

Theorem 2.8

(Combinatorial deletion) Let \(\mathcal {A}\) be a free arrangement and \(H \in \mathcal {A}\). Then, \(\mathcal {A}'\) is free if and only if \(|\mathcal {A}_X| - |\mathcal {A}^H_X|\) is a root of \(\chi (\mathcal {A}_X, t)\) for all \(X \in L(\mathcal {A}^H)\).

Proof

See [2, Theorem 8.2]. \(\square \)

When \(\mathcal {A}\) and \(\mathcal {A}'\) are both free, one may construct a basis for \(D(\mathcal {A}'')\) from a basis for \(D(\mathcal {A})\). Although the following theorem is probably well-known among experts, we give a detailed proof for the sake of completeness.

Theorem 2.9

Let \(\mathcal {A}\) be a non-empty free arrangement and \(\exp (\mathcal {A})= \{d_1, \ldots , d_\ell \}_{\le }\). Assume further that \(\mathcal {A}'\) is also free. Let \(\{\varphi _{1}, \ldots , \varphi _\ell \}\) be a basis for \(D(\mathcal {A})\) with \(\deg {\varphi }_j=d_j\) for \(1 \le j \le \ell \). Then, there exists some p with \(1 \le p \le \ell \) such that \(\{ {\varphi }^H_1, \ldots , {\varphi }^H_\ell \} {\setminus } \{{\varphi }^H_{p}\}\) forms a basis for \(D(\mathcal {A}'')\).

Proof

By Theorem 2.7, \(\mathcal {A}''\) is also free and we may write \(\exp (\mathcal {A}'')=\exp (\mathcal {A}){\setminus }\{d_k\}\) for some k with \(1 \le k \le \ell \). Denote \({\tau }_i := {\varphi }_i\) (\(1 \le i \le k-1\)), and \({\tau }_j := {\varphi }_{j+1}\) (\(k \le j \le \ell -1\)).

If \({\tau }^H_i \notin {\overline{S}}{\tau }^H_1 + \cdots + {\overline{S}}{\tau }^H_{i-1}\) for all \(1 \le i \le \ell -1\), then by Theorem 2.3, \(\{{\tau }^H_1, \ldots , {\tau }^H_{\ell -1}\}=\{ {\varphi }^H_1, \ldots , {\varphi }^H_\ell \} {\setminus } \{{\varphi }^H_{k}\}\) forms a basis for \(D(\mathcal {A}'')\).

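If not, there exists some p, \(1 \le p \le \ell -1\), such that \({\tau }^H_p \in {\overline{S}}{\tau }^H_1 + \cdots + {\overline{S}}{\tau }^H_{p-1}\). Lifting the coefficients to S, the difference between \(\tau _p\) and the corresponding combination of \(\tau _1,\ldots ,\tau _{p-1}\) lies in the kernel of q, which equals the image of h by Proposition 2.6. Hence
$$\begin{aligned} \tau _p=\sum _{i=1}^{p-1}f_i\tau _i+\alpha _H\tau , \end{aligned}$$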

where \(f_i \in S\) (\(1 \le i \le p-1\)), \(\tau \in D(\mathcal {A}')\), \(\deg \tau =d_p-1\). By Theorem 2.2 (Saito’s criterion), \(\{{\tau }_{1},\ldots , {\tau }_{p-1}, \tau , {\tau }_{p+1}, \ldots , {\tau }_{\ell -1}, \varphi _k\}\) is a basis for \(D(\mathcal {A}')\). By Theorem 2.7, \(\exp (\mathcal {A}'') =\exp (\mathcal {A}){\setminus } \{d_p\}\). It means that \(d_k=d_p\). Note that if \(d_k\) appears only once in \(\exp (\mathcal {A})\), then we obtain a contradiction here and the proof is completed. Now, suppose \(d_k\) appears at least twice. Again by Theorem 2.2, \(\{{\tau }_{1},\ldots , {\tau }_{p-1},\alpha _H \tau , {\tau }_{p+1}, \ldots , {\tau }_{\ell -1}, \varphi _k\}\) is a basis for \(D(\mathcal {A})\). Since the map \(q: D(\mathcal {A}) \rightarrow D(\mathcal {A}'')\) is surjective [21, Proposition 4.57], \(D(\mathcal {A}'')\) is generated by \(\{ {\tau }^H_{1},\ldots , {\tau }^H_{p-1}, {\tau }^H_{p+1}, \ldots , {\tau }^H_{\ell -1}, {\varphi }^H_k\}\). This set is the same as \(\{ {\varphi }^H_1, \ldots , {\varphi }^H_\ell \} {\setminus } \{{\varphi }^H_{p}\}\) which indeed forms a basis for \(D(\mathcal {A}'')\) by [21, Proposition A.3]. It completes the proof. \(\square \)

Remark 2.10

If \(d_k\) appears only once in \(\exp (\mathcal {A})\), then Theorem 2.9 gives an explicit basis for \(D(\mathcal {A}'')\). However, if \(d_k\) appears at least twice, Theorem 2.9 may not be sufficient to derive an explicit basis for \(D(\mathcal {A}'')\). It requires some additional computation to examine which derivation vanishes after taking the restriction to H. This observation will be useful to construct an explicit basis for \(D(\mathcal {A}^X)\) when X is \(A_1^2\) and RO (Example 5.2).

2.2 Root systems, Weyl groups and Weyl arrangements

Our standard reference for root systems and their Weyl groups is [6].

Let \(V := \mathbb {R}^\ell \) with the standard inner product \((\cdot ,\cdot )\). Let \(\Phi \) be an irreducible (crystallographic) root system spanning V. The rank of \(\Phi \), denoted by \(\mathrm {rank}(\Phi )\), is defined to be dim(V). We fix a positive system \(\Phi ^+\) of \(\Phi \). We write \(\Delta :=\{\alpha _1, \ldots ,\alpha _\ell \}\) for the simple system (base) of \(\Phi \) associated with \(\Phi ^+\). For \(\alpha = \sum _{i=1}^\ell d_i \alpha _i\in \Phi ^+\), the height of \(\alpha \) is defined by \( \mathrm{ht}(\alpha ) :=\sum _{i=1}^\ell d_i\). There are four classical types: \(A_\ell \) (\(\ell \ge 1\)), \(B_\ell \) (\(\ell \ge 2\)), \(C_\ell \) (\(\ell \ge 3\)), \(D_\ell \) (\(\ell \ge 4\)) and five exceptional types: \(E_6\), \(E_7\), \(E_8\), \(F_4\), \(G_2\). We write \(\Phi =T\) if the root system \(\Phi \) is of type T; otherwise, we write \(\Phi \ne T\).

A reflection in V with respect to a vector \(\alpha \in V{\setminus }\{0\}\) is the mapping \(s_{\alpha }: V \rightarrow V\) defined by \( s_\alpha (x) := x -2\frac{( x,\alpha )}{( \alpha , \alpha )} \alpha .\) The Weyl group \(W:=W(\Phi )\) of \(\Phi \) is the group generated by the set \(\{s_{\alpha }\mid \alpha \in \Phi \}\). An element of the form \(c=s_{\alpha _1}\cdots s_{\alpha _\ell }\in W\), with the simple reflections taken in any order, is called a Coxeter element. Since all Coxeter elements are conjugate (e.g., [6, Chapter V, \(\S \)6.1, Proposition 1]), they have the same order, characteristic polynomial and eigenvalues. The order \(\mathrm{h}:=\mathrm{h}(W)\) of Coxeter elements is called the Coxeter number of W. For a fixed Coxeter element \(c\in W\), if its eigenvalues are of the form \(\exp (2\pi \sqrt{-1}m_1/\mathrm{h}),\ldots , \exp (2\pi \sqrt{-1}m_\ell /\mathrm{h})\) with \(0< m_1 \le \cdots \le m_\ell <\mathrm{h}\), then the integers \(m_1,\ldots , m_\ell \) are called the exponents of W (or of \(\Phi \)).

Theorem 2.11

For any irreducible root system \(\Phi \) of rank \(\ell \),

-

(i)

\(m_j + m_{\ell +1-j}=\mathrm{h}\) for \(1 \le j \le \ell \),

-

(ii)

\(m_1+m_2+\cdots +m_\ell =\ell \mathrm{h}/2\),

-

(iii)

\(1=m_1< m_2 \le \cdots \le m_{\ell -1} <m_\ell =\mathrm{h}-1\),

-

(iv)

\(\mathrm{h}= 2\left| \Phi ^+ \right| /\ell ,\)

-

(v)

\(\mathrm{h}=\mathrm{ht}(\theta )+1\), where \(\theta \) is the highest root of \(\Phi \).

-

(vi)

\(\mathrm{h} = 2 \sum _{\mu \in \Phi ^+}( \widehat{\gamma }, {\widehat{\mu }} )^2,\) for arbitrary \(\gamma \in \Phi ^+\). Here \({\widehat{x}} :=x/\Vert x\Vert \) denotes the unit vector in the direction of x.

Proof

See, e.g., [6, Chapter V, \(\S \)6.2 and Chapter VI, \(\S \)1.11]. \(\square \)
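Parts (iv) and (vi) are easy to test numerically for the classical types. The Python sketch below is our own check, not part of the cited proofs; it reads \(\widehat{x}\) as the unit vector \(x/\Vert x\Vert \) (with this normalization both sides of (vi) are invariant under rescaling \(\Phi \)), uses the standard coordinates of [6], and hard-codes the standard Coxeter numbers \(\ell +1\), \(2\ell \), \(2\ell \), \(2\ell -2\) of \(A_\ell \), \(B_\ell \), \(C_\ell \), \(D_\ell \). Exact rational arithmetic avoids floating-point square roots.

```python
from fractions import Fraction
from itertools import combinations

# Coxeter numbers of the classical types (standard values, hard-coded).
COXETER = {"A": lambda n: n + 1, "B": lambda n: 2 * n,
           "C": lambda n: 2 * n, "D": lambda n: 2 * n - 2}


def positive_roots(kind, n):
    """Positive roots of A_n (inside R^{n+1}) or of B_n, C_n, D_n (inside R^n)."""
    dim = n + 1 if kind == "A" else n
    pos = []
    for i, j in combinations(range(dim), 2):
        for s in ((-1,) if kind == "A" else (-1, 1)):  # e_i - e_j, and e_i + e_j
            v = [0] * dim
            v[i], v[j] = 1, s
            pos.append(tuple(v))
    if kind in ("B", "C"):
        for i in range(dim):  # e_i for B_n, 2e_i for C_n
            v = [0] * dim
            v[i] = 1 if kind == "B" else 2
            pos.append(tuple(v))
    return pos


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def check_theorem_2_11(kind, n):
    """Check (iv) and (vi), with the hat read as x / ||x||; return h."""
    pos = positive_roots(kind, n)
    h = COXETER[kind](n)
    assert Fraction(2 * len(pos), n) == h  # (iv)
    for gamma in pos:
        # (gamma_hat, mu_hat)^2 = (gamma, mu)^2 / ((gamma, gamma) (mu, mu))
        total = sum(Fraction(dot(gamma, mu) ** 2, dot(gamma, gamma) * dot(mu, mu))
                    for mu in pos)
        assert 2 * total == h  # (vi)
    return h


for kind, ranks in (("A", range(1, 6)), ("B", range(2, 6)),
                    ("C", range(3, 6)), ("D", range(4, 7))):
    for n in ranks:
        print(f"{kind}_{n}: h = {check_theorem_2_11(kind, n)}")
```

The check of (vi) would fail with the normalization \(x/(x,x)\), since the right-hand side would then change under rescaling of \(\Phi \).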

Let \(\Theta ^{(r)}\subseteq \Phi ^+\) be the set of positive roots of height r, i.e., \(\Theta ^{(r)}=\{\alpha \in \Phi ^+ \mid \mathrm{ht}(\alpha )=r\}\). The height distribution of \(\Phi ^+\) is defined as the multiset of positive integers
$$\begin{aligned} \mathcal {HD}(\Phi ^+):=\{t_1,t_2,\ldots ,t_{\mathrm{ht}(\theta )}\}, \end{aligned}$$

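where \(t_r := \left| \Theta ^{(r)}\right| \). The dual partition \({\mathcal {DP}}(\Phi ^+)\) of the height distribution of \(\Phi ^+\) is the multiset of non-negative integers
$$\begin{aligned} {\mathcal {DP}}(\Phi ^+):=\{(0)^{\ell -t_1},(1)^{t_1-t_2},\ldots ,(r)^{t_r-t_{r+1}},\ldots \}, \end{aligned}$$
where the notation \((a)^b\) means that the integer a appears exactly b times.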

Theorem 2.12

The exponents of W are given by \({\mathcal {DP}}(\Phi ^+)\).

Proof

See, e.g., [1, 13, 16, 24]. \(\square \)
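Theorem 2.12 is effective: the exponents can be read off from the positive roots alone. The Python sketch below is our own illustration; it builds \(\Phi ^+\) height by height from the Gram matrix of the simple roots using the standard root-string property, and then forms \({\mathcal {DP}}(\Phi ^+)\). The Gram matrices (Bourbaki numbering, rescaled to integers) are inputs we supply, and the function names are hypothetical.

```python
from collections import Counter


def positive_roots(gram):
    """Positive roots as coefficient vectors over the simple roots.

    gram[i][j] = (alpha_i, alpha_j), up to a common positive factor.  Roots
    are generated height by height: if the alpha_i-string through beta is
    beta - p*alpha_i, ..., beta + q*alpha_i, then p - q = <beta, alpha_i^vee>.
    """
    n = len(gram)
    simple = [tuple(int(j == i) for j in range(n)) for i in range(n)]
    roots, layer = set(simple), list(simple)
    while layer:
        next_layer = []
        for beta in layer:
            for i in range(n):
                # <beta, alpha_i^vee> = 2 (beta, alpha_i) / (alpha_i, alpha_i)
                pairing = 2 * sum(beta[j] * gram[j][i] for j in range(n)) // gram[i][i]
                p, down = 0, list(beta)
                while True:  # walk down the alpha_i-string inside Phi^+
                    down[i] -= 1
                    if tuple(down) not in roots:
                        break
                    p += 1
                if p - pairing > 0:  # q > 0, so beta + alpha_i is a root
                    up = list(beta)
                    up[i] += 1
                    up = tuple(up)
                    if up not in roots:
                        roots.add(up)
                        next_layer.append(up)
        layer = next_layer
    return roots


def exponents_from_heights(roots):
    """Dual partition of the height distribution: r occurs t_r - t_{r+1} times."""
    t = Counter(sum(beta) for beta in roots)  # t[r] = number of roots of height r
    exps = []
    for r in range(1, max(t) + 1):
        exps.extend([r] * (t[r] - t[r + 1]))
    return exps


# Gram matrices of the simple roots (Bourbaki numbering), scaled to integers.
GRAM = {
    "A3": [[2, -1, 0], [-1, 2, -1], [0, -1, 2]],
    "B3": [[2, -1, 0], [-1, 2, -1], [0, -1, 1]],
    "C3": [[2, -1, 0], [-1, 2, -2], [0, -2, 4]],
    "D4": [[2, -1, 0, 0], [-1, 2, -1, -1], [0, -1, 2, 0], [0, -1, 0, 2]],
    "F4": [[4, -2, 0, 0], [-2, 4, -2, 0], [0, -2, 2, -1], [0, 0, -1, 2]],
    "G2": [[2, -3], [-3, 6]],
}

for name, gram in GRAM.items():
    print(name, exponents_from_heights(positive_roots(gram)))
```

The printed lists are [1, 2, 3] for \(A_3\), [1, 3, 5] for \(B_3\) and \(C_3\), [1, 3, 3, 5] for \(D_4\), [1, 5, 7, 11] for \(F_4\) and [1, 5] for \(G_2\), in agreement with the known exponents of these Weyl groups.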

Let \(S^W\) denote the ring of W-invariant polynomials. Let \(\mathcal {F} = \{f_1, \ldots , f_\ell \}\) be a set of basic invariants with \(\deg f_1 \le \cdots \le \deg f_\ell \). Then, \(S^W=\mathbb {R}[f_1, \ldots , f_\ell ]\) and \(m_i=\deg f_i -1\) (\(1 \le i \le \ell \)). Let \({\mathcal {D}}=\{\theta _{f_1}, \ldots , \theta _{f_{\ell }}\}\) be the set of basic derivations associated with \(\mathcal {F} \) (see, e.g., [20, Definition 2.4] and [21, Definition 6.50]). The Weyl arrangement of \(\Phi ^+\) is defined by
$$\begin{aligned} \mathcal {A}=\mathcal {A}(\Phi ^+):=\{H_\alpha \mid \alpha \in \Phi ^+\},\qquad H_\alpha :=\{x\in V\mid (\alpha ,x)=0\}. \end{aligned}$$

Theorem 2.13

\(\mathcal {A}\) is free with \(\exp (\mathcal {A}) = \{m_1, \ldots , m_\ell \}\) and \(\{\theta _{f_1}, \ldots , \theta _{f_{\ell }}\}\) is a basis for \(D(\mathcal {A})\).

Proof

See, e.g., [21, Theorem 6.60] and [23]. \(\square \)
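Combined with Theorem 2.1, Theorem 2.13 gives \(\chi (\mathcal {A},t)=\prod _{i=1}^{\ell }(t-m_i)\) for a Weyl arrangement. The Python sketch below is our own brute-force check in small rank. Instead of building \(L(\mathcal {A})\) and \(\mu \), it uses Whitney's formula \(\chi (\mathcal {A},t)=\sum _{\mathcal {B}\subseteq \mathcal {A}}(-1)^{|\mathcal {B}|}t^{\ell -\mathrm {rank}\,\mathcal {B}}\), a standard identity for central arrangements (see [21]); the exponents of \(B_3\) and \(D_4\) are hard-coded from the standard tables, and the helper names are hypothetical.

```python
from itertools import combinations


def rank(vectors):
    """Rank of a list of integer vectors via fraction-free Gaussian elimination."""
    rows = [list(v) for v in vectors]
    r = 0
    for c in range(len(rows[0]) if rows else 0):
        pivot = next((k for k in range(r, len(rows)) if rows[k][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        p = rows[r][c]
        for k in range(r + 1, len(rows)):
            f = rows[k][c]
            if f:  # clear column c below the pivot, staying in the integers
                rows[k] = [p * a - f * b for a, b in zip(rows[k], rows[r])]
        r += 1
    return r


def characteristic_polynomial(normals, ell):
    """Coefficients (constant term first) of chi(A, t) by Whitney's formula."""
    coeffs = [0] * (ell + 1)
    for k in range(len(normals) + 1):
        for subset in combinations(normals, k):
            coeffs[ell - rank(subset)] += (-1) ** k
    return coeffs


def poly_from_roots(roots):
    """Coefficients (constant term first) of the product of (t - d)."""
    coeffs = [1]
    for d in roots:
        new = [0] * (len(coeffs) + 1)
        for k, c in enumerate(coeffs):
            new[k + 1] += c  # c * t
            new[k] -= d * c  # c * (-d)
        coeffs = new
    return coeffs


def weyl_normals(kind, n):
    """Positive roots of B_n or D_n: e_i - e_j, e_i + e_j (i < j), plus e_i for B_n."""
    normals = []
    for i, j in combinations(range(n), 2):
        for s in (-1, 1):
            v = [0] * n
            v[i], v[j] = 1, s
            normals.append(tuple(v))
    if kind == "B":
        normals += [tuple(int(k == i) for k in range(n)) for i in range(n)]
    return normals


print(characteristic_polynomial(weyl_normals("B", 3), 3))  # [-15, 23, -9, 1]
print(poly_from_roots([1, 3, 5]))                           # [-15, 23, -9, 1]
```

Both lines print the coefficients of \((t-1)(t-3)(t-5)=t^3-9t^2+23t-15\); the same comparison for \(D_4\) runs over \(2^{12}\) subsets and still finishes quickly.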

Recall from Proposition 2.6 the map \(q : D(\mathcal {A}) \rightarrow D(\mathcal {A}'')\) defined by \(q(\phi ) ={\phi }^H\).

Theorem 2.14

If \(H\in \mathcal {A}\), then \(\mathcal {A}^H\) is free with \(\exp (\mathcal {A}^H)=\{m_1,\ldots , m_{\ell -1}\}\). Furthermore, \(\{ {\theta }^H_{f_1}, \ldots , {\theta }^H_{f_{\ell -1}}\}\) is a basis for \(D(\mathcal {A}^H)\).

Proof

See [20, Theorem 1.12]. \(\square \)
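As a small illustration (ours), let \(\Phi =B_2\), with \(\mathcal {A}\) defined by \(x_1x_2(x_1-x_2)(x_1+x_2)\), so that \(m_1=1\) and \(m_2=3\); one may take \(f_1=x_1^2+x_2^2\) and \(f_2=x_1^4+x_2^4\) as basic invariants. Since the degree-1 part of \(D(\mathcal {A})\) is spanned by the Euler derivation, \(\theta _{f_1}\) is a nonzero multiple of \(x_1\partial _1+x_2\partial _2\), whatever normalization of basic derivations is used. For \(H=\ker (x_1-x_2)\), the ring \(\overline{S}=S/(x_1-x_2)\) is a polynomial ring in \(\overline{x}_1=\overline{x}_2\), and \(\mathcal {A}^H\) is the origin of the line H. Thus
$$\begin{aligned} \theta ^H_{f_1}(\overline{x}_1)=c\,\overline{x}_1 \ (c\ne 0), \qquad D(\mathcal {A}^H)=\overline{S}\,\theta ^H_{f_1}, \qquad \exp (\mathcal {A}^H)=\{1\}=\{m_1\}, \end{aligned}$$
as Theorem 2.14 predicts.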

Remark 2.15

Theorem 2.14 can be proved in a slightly different way. By [20, Theorem 3.7], \(|\mathcal {A}|-|\mathcal {A}^H|=m_\ell \). Thus, \(|\mathcal {A}|-|\mathcal {A}^H|\) is a root of \(\chi (\mathcal {A}, t)\) by Theorems 2.13 and 2.1. By Theorem 2.8, \(\mathcal {A}'\) is free (see also Theorem 4.4 for a similar and more detailed explanation). Thus, \(\mathcal {A}^H\) is free with \(\exp (\mathcal {A}^H)=\{m_1,\ldots , m_{\ell -1}\}\) which follows from Theorem 2.7. A basis for \(D(\mathcal {A}^H)\) can be constructed a bit more flexibly, without the need to introduce the basic derivations. Namely, let \(\{\varphi _{1}, \ldots , \varphi _\ell \}\) be any basis for \(D(\mathcal {A})\) with \(\deg {\varphi }_j=m_j\) for \(1 \le j \le \ell \). Note that \(m_{\ell }\) appears exactly once in \(\exp (\mathcal {A})\). Then, by Theorem 2.9, \(\{ {\varphi }^H_1, \ldots , {\varphi }^H_{\ell -1}\}\) is a basis for \(D(\mathcal {A}'')\).
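The identity \(|\mathcal {A}|-|\mathcal {A}^H|=m_\ell \) used above can be checked directly in small rank. In the following Python sketch (our own illustration; names are hypothetical and the values \(m_\ell =\mathrm{h}-1\) are taken from the standard tables), the restriction of \(H_\gamma \) to \(H=H_\alpha \) is the hyperplane of H whose normal is the orthogonal projection of \(\gamma \) onto H, so \(|\mathcal {A}^H|\) is the number of distinct nonzero projected directions.

```python
from fractions import Fraction
from itertools import combinations


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def weyl_normals(kind, n):
    """Positive roots of B_n or D_n: e_i - e_j, e_i + e_j (i < j), plus e_i for B_n."""
    normals = []
    for i, j in combinations(range(n), 2):
        for s in (-1, 1):
            v = [0] * n
            v[i], v[j] = 1, s
            normals.append(tuple(v))
    if kind == "B":
        normals += [tuple(int(k == i) for k in range(n)) for i in range(n)]
    return normals


def restriction_size(normals, alpha):
    """|A^H| for H = ker(alpha): project each normal onto H and count the
    distinct directions; alpha itself projects to 0 and is skipped."""
    lines = set()
    for gamma in normals:
        c = Fraction(dot(gamma, alpha), dot(alpha, alpha))
        g = [x - c * y for x, y in zip(gamma, alpha)]
        lead = next((x for x in g if x != 0), None)
        if lead is not None:  # proportional normals give the same hyperplane
            lines.add(tuple(x / lead for x in g))
    return len(lines)


def drops(kind, n):
    """The set of values |A| - |A^H| as H runs over the Weyl arrangement."""
    normals = weyl_normals(kind, n)
    return {len(normals) - restriction_size(normals, a) for a in normals}


# Expected single value m_ell = h - 1 in each case.
for kind, n, m_ell in (("B", 3, 5), ("D", 4, 5), ("B", 4, 7), ("D", 5, 7)):
    print(kind, n, drops(kind, n), m_ell)
```

For \(B_3\), for example, restricting to \(x_3=0\) leaves the four lines \(x_1,x_2,x_1\pm x_2\), and \(9-4=5=m_3\).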

A subset \(\Gamma \subseteq \Phi \) is called a (root) subsystem if it is a root system in \(\text{ span}_\mathbb {R}(\Gamma ) \subseteq V\). For any \(J \subseteq \Delta \), set \(\Phi (J):=\Phi \cap \text{ span }(J)\). Let W(J) be the group generated by \(\{s_{\delta }\mid \delta \in J\}\). By [7, Proposition 2.5.1], \(\Phi (J)\) is a subsystem of \(\Phi \), J is a base of \(\Phi (J)\) and the Weyl group of \(\Phi (J)\) is W(J). For any subset \(\Gamma \subseteq \Phi \) and \(w \in W\), denote \(w\Gamma :=\{w(\alpha ) \mid \alpha \in \Gamma \}\subseteq \Phi \). A parabolic subsystem is any subsystem of the form \(w\Phi (J)\); likewise, a parabolic subgroup of W is any subgroup of the form \(wW(J)w^{-1}\), where \(J \subseteq \Delta \) and \(w \in W\).

Theorem 2.16

For \(\Gamma \subseteq \Phi \), \(\Phi \cap \text{ span }(\Gamma )\) is a parabolic subsystem of \(\Phi \) and its Weyl group is a parabolic subgroup of W.

Proof

See, e.g., [10, Proposition 2.6] and [14, Lemma 3.2.3]. \(\square \)

Recall the notation \(\Phi _X\), \(\Phi ^+_X\), \(\Delta _X\) for \(X \in L(\mathcal {A})\) from Notation 1.1. Note that if \(X \in L_p(\mathcal {A})\), then \(\Phi _X\) is a parabolic subsystem of rank p (Theorem 2.16). For each \(X \in L(\mathcal {A})\), define the fixer of X by
$$\begin{aligned} W_X:=\{w\in W\mid w(x)=x \text{ for all } x\in X\}. \end{aligned}$$

Proposition 2.17

If \(X \in L(\mathcal {A})\), then

-

(i)

\(W_X\) is the Weyl group of \(\Phi _X\). Consequently, \(W_X\) is a parabolic subgroup of W.

-

(ii)

\({\mathcal {A}}_X\) is the Weyl arrangement of \(\Phi _X^+\).

Proof

-

(i)

The first statement follows from [7, Proposition 2.5.5]. The second statement follows from Theorem 2.16. (ii) follows from (i) and [6, Chapter V, \(\S \)3.3, Proposition 2].

\(\square \)

Definition 2.18

Two subsets \(\Phi _1, \Phi _2\subseteq \Phi \) (resp., two subspaces \(X_1, X_2\in L(\mathcal {A})\)) lie in the same W-orbit if there exists \(w\in W\) such that \(\Phi _1 = w\Phi _2\) (resp., \(X_1=w X_2\)). Two subgroups \(W_1, W_2\) of W are W-conjugate if there exists \(w\in W\) such that \(W_1 = w^{-1}W_2w\).

Lemma 2.19

Let \(X_1, X_2\) be subspaces in \(L(\mathcal {A})\). The following statements are equivalent:

-

(i)

\(\Phi _{X_1}\) and \(\Phi _{X_2}\) lie in the same W-orbit.

-

(ii)

\(\Delta _{X_1}\) and \(\Delta _{X_2}\) lie in the same W-orbit.

-

(iii)

\(X_1\) and \(X_2\) lie in the same W-orbit.

-

(iv)

\(W_{X_1}\) and \(W_{X_2}\) are W-conjugate.

Consequently, if any one of the statements above holds, then \(|\mathcal {A}^{X_1} | =|\mathcal {A}^{X_2}|\).

Proof

The equivalence of the statements follows from [19, Lemmas (3.4), (3.5)] (see also [11, Chapter VIII, 27-3, Proposition B]). The consequence is straightforward. \(\square \)

3 Enumerate the cardinalities of \(A_1^2\) restrictions

In this section, we take the first step towards a conceptual proof of the exponent formula in Theorem 1.5, the main result of this paper. When X is of type \(A_1^2\), we express the cardinality \(|\mathcal {A}^X|\) in terms of the Coxeter number \(\mathrm{h}\) and a certain sum of inner products of positive roots (Proposition 3.13).

Definition 3.1

A set \(\{\beta _1,\beta _2\}\subseteq \Phi \) with \(\beta _1\ne \pm \beta _2\) is called an \(A_1^2\) set if it spans a subsystem of type \(A_1^2\), i.e., \(\Phi \cap \mathrm {span}\{\beta _1,\beta _2\}=\{\pm \beta _1,\pm \beta _2\}\).

Thus, \(X \in L(\mathcal {A})\) is of type \(A_1^2\) if and only if \(\Delta _X\) is an \(A_1^2\) set (Notation 1.1).

Lemma 3.2

-

(i)

For any \(\beta =\sum _{\alpha \in \Delta }c_\alpha \alpha \in \Phi \), the set of \(\alpha \in \Delta \) such that \(c_\alpha \ne 0\) forms a non-empty connected induced subgraph of the Dynkin graph of \(\Phi \).

-

(ii)

If G is a non-empty connected subgraph of the Dynkin graph, then \(\sum _{\alpha \in G}\alpha \in \Phi \).

-

(iii)

If \(\{\beta _1,\beta _2\} \subseteq \Delta \) and \((\beta _1,\beta _2)=0\), then \(\{\beta _1,\beta _2\}\) is an \(A_1^2\) set.

Proof

Proofs of (i) and (ii) can be found in [6, Chapter VI, §1.6, Corollary 3 of Proposition 19]. (iii) is an easy consequence of (i). \(\square \)

Let \({\mathcal {T}}(A_1^2)\) (resp., \({\mathcal {T}}(RO)\)) be the set consisting of \(A_1^2\) (resp., RO) sets.

Proposition 3.3

-

(i)

If \(\{ \beta _1 ,\beta _2\}\) is \(A_1^2\) (resp., RO), then \(w\{ \beta _1 ,\beta _2\}\) is \(A_1^2\) (resp., RO) for all \(w \in W\).

-

(ii)

\({\mathcal {T}}(A_1^2)= {\mathcal {T}}(\Delta )\), where \({\mathcal {T}}(\Delta ):=\{w\{\alpha _{i},\alpha _j\} \mid \{\alpha _{i},\alpha _j\} \subseteq \Delta , (\alpha _i, \alpha _j)=0, w \in W\}\).

Proof

\(\square \)

Remark 3.4

By Proposition 3.3 and Definition 1.3, \({\mathcal {T}}(A_1^2) \ne \emptyset \) (resp., \({\mathcal {T}}(RO)\ne \emptyset \)) only when \(\dim (V)\ge 3\).

Remark 3.5

For the reader's information only, we collect some numerical facts about \({\mathcal {T}}(A_1^2)\) and \({\mathcal {T}}(RO)\). These facts are not used in any of the subsequent arguments supporting the proof of Theorem 1.5 or Theorem 1.6(i). Some of them will be mentioned again in the proof of Theorem 1.6(ii) (Example 5.2), the only part in which we are unable to avoid the classification of irreducible root systems. By a direct check, \({\mathcal {T}}(RO)\cap {\mathcal {T}}(A_1^2)\ne \emptyset \) if and only if \(\Phi =D_\ell \) with \(\ell \ge 3\) (\(D_3=A_3\)). The set \({\mathcal {T}}(RO) {\setminus } {\mathcal {T}}(A_1^2)\) may be non-empty; for example, when \(\Phi =B_\ell \) (\(\ell \ge 3\)), \(\{\epsilon _1-\epsilon _2,\epsilon _1+\epsilon _2\}\) is RO but spans a subsystem of type \(B_2\) (notation as in [6]). There is only one orbit of \(A_1^2\) sets, with the following exceptions:

(i) when \(\Phi =D_4\), \({\mathcal {T}}(RO)={\mathcal {T}}(A_1^2)\), and there are three different orbits;

(ii) when \(\Phi =D_\ell \) \((\ell \ge 5)\), \({\mathcal {T}}(RO) \subsetneq {\mathcal {T}}(A_1^2)\), and there are two different orbits: \({\mathcal {T}}(RO)=\{ w\{\epsilon _1-\epsilon _2,\epsilon _1+\epsilon _2\} \mid w \in W\}\) and \({\mathcal {T}}(A_1^2) {\setminus } {\mathcal {T}}(RO)\);

(iii) when \(\Phi \in \{B_\ell , C_\ell \}\) \((\ell \ge 4)\), \({\mathcal {T}}(RO)\cap {\mathcal {T}}(A_1^2)=\emptyset \), and there are two different orbits: \({\mathcal {T}}(\Delta ^{=}) :=\{ \{\alpha ,\beta \} \in {\mathcal {T}}(A_1^2) \mid \Vert \alpha \Vert = \Vert \beta \Vert \}\) and \({\mathcal {T}}(A_1^2) {\setminus }{\mathcal {T}}(\Delta ^{=})\).

Recall the notation \(\beta ^\perp =\{\alpha \in \Phi \mid (\alpha ,\beta )=0\}\) for \(\beta \in \Phi \).

Definition 3.6

For an \(A_1^2\) set \(\{ \beta _1, \beta _2\}\subseteq \Phi \), define

and for each \(i \in \{1,2\}\),

and

Proposition 3.7

\(\mathcal {N}_0\) is not empty.

Proof

For \(\beta _1, \beta _2 \in \Phi \), there exists \(\delta \in \Phi \) such that \((\delta ,\beta _1)\ne 0\) and \((\delta ,\beta _2)\ne 0\) (see, e.g., [10, Lemma 2.10]). Thus, \(\Phi \cap \text{ span }\{\beta _1, \beta _2, \delta \} \in \mathcal {N}_0\). \(\square \)

In the remainder of this section, we assume that \(X=H_{\beta _1} \cap H_{\beta _2}\) is an \(A_1^2\) subspace with \(\beta _1, \beta _2 \in \Phi ^+\). If \(Y \in \mathcal {A}^X\), then \(\Phi _Y\) is a subsystem of rank 3 and contains \(\Delta _X=\{\beta _1, \beta _2\}\).

Proposition 3.8

If \(X \in L_{2}(\mathcal {A})\) is an \(A_1^2\) subspace, then

Proof

We only give a proof for the first equality. The others follow by a similar method. Let \(\Psi \in \mathcal {N}_0\). There exists \(\delta \in \Phi ^+\) such that \(\Psi =\Phi \cap \text{ span }\{\beta _1, \beta _2, \delta \}\). Thus, \(\Psi =\Phi \cap Y^{\perp }\) where \(Y:=X\cap H_{\delta }\). Therefore, \(\Psi = \Phi _{Y}\) with \(Y \in \mathcal {A}^X\). \(\square \)

Lemma 3.9

Let \(X \in L(\mathcal {A})\).

(i) \(\mathcal {A}= \bigcup _{Y \in \mathcal {A}^X}\mathcal {A}_{Y}\).

(ii) If \(Y, Y' \in \mathcal {A}^X\) and \(Y \ne Y'\), then \(\mathcal {A}_{Y} \cap \mathcal {A}_{Y'}=\mathcal {A}_{X}\).

(iii) \(\mathcal {A}{\setminus } \mathcal {A}_X= \bigsqcup _{Y \in \mathcal {A}^X}(\mathcal {A}_{Y}{\setminus } \mathcal {A}_X)\) (disjoint union).

Proof

(i) is straightforward. For (ii), \(\mathcal {A}_{X} \subseteq \mathcal {A}_{Y} \cap \mathcal {A}_{Y'}\) since \(Y, Y' \subseteq X\). Since \(Y\) and \(Y'\) are distinct subspaces of \(X\) with \(\dim (X)-\dim (Y)=\dim (X)-\dim (Y')=1\), a dimension count yields \(X=Y+Y'\). Thus, if \(H \in \mathcal {A}_{Y} \cap \mathcal {A}_{Y'}\), then \(X=Y+Y' \subseteq H\), i.e., \(H \in \mathcal {A}_X\). (iii) follows immediately from (i) and (ii). \(\square \)

Corollary 3.10

Set \(\mathcal {N}:=\mathcal {N}_0\bigsqcup \mathcal {N}_{\beta _2}(\beta _1)\bigsqcup \mathcal {N}_{\beta _1}(\beta _2)\bigsqcup \mathcal {N}_3\), where

For \(\Psi \in \mathcal {N}\), set \(\Psi ^+:=\Phi ^+ \cap \Psi \). Then,

Proof

It follows from Proposition 2.17(ii), Proposition 3.8 and Lemma 3.9. \(\square \)

Proposition 3.11

For each \(i \in \{1,2\}\), set

Then, \(2(\mathcal {K}_0+\mathcal {K}_{\beta _{3-i}}(\beta _i)+1)=\mathrm{h}\). In particular, \(\mathcal {K}_{\beta _2}(\beta _1)=\mathcal {K}_{\beta _1}(\beta _2)\).

Proof

By Corollary 3.10,

which equals \(\mathrm{h}\) by Theorem 2.11(vi). \(\square \)

Proposition 3.12

Proof

It follows from items (iv) and (vi) of Theorem 2.11. \(\square \)

Proposition 3.13

If \(X=H_{\beta _1} \cap H_{\beta _2}\) is of type \(A_1^2\), then for each \(i \in \{1,2\}\)

Proof

The proof is similar in spirit to the proof of [20, Proposition 3.6]. By Corollary 3.10,

It is not hard to see that \(|\mathcal {A}^X|=|\mathcal {N}|\) (via the bijection \(Y \mapsto \Phi _Y\)). By Proposition 3.12,

By Theorem 2.14 and Proposition 3.11,

\(\square \)

Remark 3.14

(i) The conclusion of Proposition 3.13 can also be written as

$$\begin{aligned} |\mathcal {A}^{H_{\beta _i}}| - |\mathcal {A}^X|=m_\ell -\mathcal {K}_0. \end{aligned}$$

(ii) For each \(\Psi \in \mathcal {N}_0\), denote by \(\mathrm{h}(\Psi )\) the Coxeter number of \(\Psi \) and write \(m_1(\Psi ) \le m_2(\Psi )\le m_3(\Psi )\) for the exponents of \(\Psi \). In fact, \(\mathrm{{h}}(\Psi ) =2m_2(\Psi )\) since \(\mathrm {rank}(\Psi )=3\). Thus,

$$\begin{aligned} \mathcal {K}_0= \sum _{\Psi \in \mathcal {N}_0}\left( m_2(\Psi )-1 \right) . \end{aligned}$$In particular, if \(\ell = 3\), then \(\mathcal {N}_0=\{\Phi \}\) and \(\mathcal {K}_0=m_2-1\). In this case, \(|\mathcal {A}^{H_{\beta _i}}| - |\mathcal {A}^X| =m_2=\mathrm{h}/2\).
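As a quick sanity check of the case \(\ell =3\), the last identity can be recovered directly from part (i). The derivation uses only the standard facts \(m_1=1\), \(m_3=\mathrm{h}-1\) and \(m_2=\mathrm{h}/2\) for an irreducible root system of rank 3:

$$\begin{aligned} |\mathcal {A}^{H_{\beta _i}}| - |\mathcal {A}^X| = m_3-\mathcal {K}_0 = m_3-(m_2-1) = (\mathrm{h}-1)-\tfrac{\mathrm{h}}{2}+1 = \tfrac{\mathrm{h}}{2}. \end{aligned}$$

For instance, for \(A_3\) (\(\mathrm{h}=4\), exponents 1, 2, 3) this gives \(3-1=2\). For \(B_3\) (\(\mathrm{h}=6\), exponents 1, 3, 5) it gives \(5-2=3\).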

Remark 3.15

If \(\mathrm{codim}(X)>2\), for example, if X is of type \(A_1^k\) with \(k>2\), the calculation in Proposition 3.13 is expected to be more difficult. Classification-free arguments are also expected to be harder to find, since the calculation involves the rank \(k+1\) subsystems of \(\Phi \) containing \(\Phi _X\).

4 Proof of Theorem 1.5

Theorem 1.5 combines the two theorems below: Theorem 4.4 (the freeness part) and Theorem 4.6 (the exponent part).

We prove the freeness part as a consequence of the exponent part, and its proof is much simpler. We therefore give the proof of the freeness part first, assuming the exponent part. Let us recall some general facts on hyperplane arrangements.

Lemma 4.1

(i) If \(X, Y \in L(\mathcal {A})\), then \((\mathcal {A}^X)^{X \cap Y}=\mathcal {A}^{X \cap Y}\). Similarly, \((\phi ^X)^{X \cap Y}=\phi ^{X \cap Y}\) for any \(\phi \in D(\mathcal {A})\).

(ii) If \(H \in \mathcal {A}\) and \(X \in L(\mathcal {A}^H)\), then \((\mathcal {A}^H)_X=(\mathcal {A}_X)^{H}\). We will use the notation \(\mathcal {A}_X^H\) for this arrangement.

Proof

Straightforward. \(\square \)

Lemma 4.2

Let \((\mathcal {A}_i, V_i)\) be irreducible arrangements (\(1 \le i \le n\)). Let \(\mathcal {A}= \mathcal {A}_1 \times \cdots \times \mathcal {A}_n\) and \(V= \oplus _{i=1}^n V_i\). Then,

(i) \(\chi (\mathcal {A}, t)=\prod _{i=1}^n \chi (\mathcal {A}_i, t)\),

(ii) for \(H=V_1 \oplus \cdots \oplus V_{k-1} \oplus H_k \oplus V_{k+1} \oplus \cdots \oplus V_n \in \mathcal {A}\) (\(H_k \in \mathcal {A}_k\)),

$$\begin{aligned} \mathcal {A}^H=\mathcal {A}_1 \times \cdots \times \mathcal {A}_{k-1} \times \mathcal {A}_k^{H_k}\times \mathcal {A}_{k+1} \times \cdots \times \mathcal {A}_n. \end{aligned}$$

Proof

(i) is a well-known fact; see, e.g., [21, Lemma 2.50]. (ii) follows from the definition of restriction. \(\square \)

The freeness of every restriction was settled by a case-by-case study in [22] and by a uniform method in [8]. In Theorem 4.4, we give a different and more direct proof of the freeness of \(A_1^2\) restrictions. It uses the Addition–Deletion Theorem (Theorem 2.7) and the Combinatorial Deletion Theorem (Theorem 2.8). We also need the following immediate consequence of Theorems 2.14 and 4.6.

Proposition 4.3

If \(\{\alpha ,\beta \}\) is an \(A_1^2\) set, then \(|\mathcal {A}^{H_{\alpha }}| - |\mathcal {A}^{H_{\alpha } \cap H_{\beta }}|\) is a root of \(\chi (\mathcal {A}^{H_{\alpha }}, t)= \prod _{i=1}^{\ell -1} (t-m_i)\).

Theorem 4.4

If \(\{\alpha ,\beta \}\) is an \(A_1^2\) set, then the following statements are equivalent:

(1) \(\mathcal {A}^{H_{\alpha } \cap H_{\beta }}\) is free and \(\exp (\mathcal {A}^{H_{\alpha } \cap H_{\beta }})=\exp (\mathcal {A}){\setminus } \{ m_i,m_\ell \} \) for some i with \(1 \le i \le \ell -1\).

(2) \(|\mathcal {A}^{H_{\alpha }}| - |\mathcal {A}^{H_{\alpha } \cap H_{\beta }}|=m_i\).

Proof

By Theorem 2.14, \(\mathcal {A}^{H_{\alpha }}\) is free with \(\exp (\mathcal {A}^{H_{\alpha }})=\{ m_1, \ldots , m_{\ell -1}\}\). The number of hyperplanes of a free arrangement equals the sum of its exponents, so (1) gives \(|\mathcal {A}^{H_{\alpha }}| - |\mathcal {A}^{H_{\alpha } \cap H_{\beta }}|=m_i\), i.e., \((1) \Rightarrow (2)\). It is therefore sufficient to prove \((2) \Rightarrow (1)\). By Lemma 4.1, \(\mathcal {A}^{H_{\alpha } \cap H_{\beta }}=(\mathcal {A}^{H_{\alpha }})^{H_{\alpha }\cap H_{\beta }}\). Suppose we can prove that \(\mathcal {A}^{H_{\alpha }} {\setminus } \{H_{\alpha }\cap H_{\beta }\}\) is free. Then Theorem 2.7 and Condition (2) guarantee that \(\mathcal {A}^{H_{\alpha } \cap H_{\beta }}\) is free with \(\exp (\mathcal {A}^{H_{\alpha } \cap H_{\beta }})=\exp (\mathcal {A}){\setminus } \{ m_i,m_\ell \} \), where i is the index in Condition (2).

To show the freeness of \(\mathcal {A}^{H_{\alpha }} {\setminus } \{H_{\alpha }\cap H_{\beta }\}\), we use Theorem 2.8. We need to show that \(|\mathcal {A}^{H_{\alpha }}_X| - |\mathcal {A}^{H_{\alpha } \cap H_{\beta }}_X|\) is a root of \(\chi (\mathcal {A}^{H_{\alpha }}_X, t)\) for all \(X \in L(\mathcal {A}^{H_{\alpha } \cap H_{\beta }})\). This is clearly true by Proposition 4.3 provided that \(\mathcal {A}_X\) is irreducible. If \(\mathcal {A}_X\) is reducible, write \(\mathcal {A}_X = \mathcal {A}_1 \times \cdots \times \mathcal {A}_n\), where each \(\mathcal {A}_i\) is irreducible. By Lemma 4.2, there are two cases.

In the first case, \(|\mathcal {A}^{H_{\alpha }}_X| - |\mathcal {A}^{H_{\alpha } \cap H_{\beta }}_X|\) equals \(|\mathcal {A}^{H_k}_k| - |\mathcal {A}^{H_k \cap H'_k}_k|\). Here \(1 \le k \le n\), \(H_k, H'_k \in \mathcal {A}_k\), and \(H_k \cap H'_k\) is \(A_1^2\) with respect to \(\mathcal {A}_k\). This difference is a root of \(\chi (\mathcal {A}^{H_k}_k, t)\) by Proposition 4.3.

In the second case, the difference equals \(|\mathcal {A}_j| - |\mathcal {A}_j^{H_j}|\) for some \(j \ne k\). By Theorem 2.14, this is the largest exponent of \(\mathcal {A}_j\), hence a root of \(\chi (\mathcal {A}_j, t)\).

In either case, \(|\mathcal {A}^{H_{\alpha }}_X| - |\mathcal {A}^{H_{\alpha } \cap H_{\beta }}_X|\) is a root of \(\chi (\mathcal {A}^{H_{\alpha }}_X, t)=\chi (\mathcal {A}_1, t)\cdots \chi (\mathcal {A}^{H_k}_k, t)\cdots \chi (\mathcal {A}_j, t)\cdots \chi (\mathcal {A}_n, t)\). \(\square \)

Remark 4.5

Suppose the subsystem \(\Phi _{\{\alpha ,\beta \}}\subseteq \Phi \) spanned by \(\{\alpha ,\beta \}\) is not of type \(A_1^2\). Then Condition (2) in Theorem 4.4 may fail, i.e., the number \(|\mathcal {A}^{H_{\alpha }}| - |\mathcal {A}^{H_{\alpha } \cap H_{\beta }}|\) need not be an exponent of W. In this case, the Addition–Deletion Theorem (Theorem 2.7) cannot be used to derive \(\exp (\mathcal {A}^{H_{\alpha } \cap H_{\beta }})\). For example, let \(\Phi =E_6\) and \(\Phi _{\{\alpha ,\beta \}}\) be of type \(A_2\), or let \(\Phi =F_4\) and \(\Phi _{\{\alpha ,\beta \}}\) be of type \(B_2\) (see, e.g., [19, Tables V and VI]).

Theorem 4.6

If \(\{\beta _1, \beta _2\} \subseteq \Phi ^+\) is \(A_1^2\), then for each \(i \in \{1,2\}\)

The proof of Theorem 4.6 is divided into two halves: Theorems 4.8 and 4.28.

4.1 First half of Proof of Theorem 4.6

Lemma 4.7

Let \(C=(c_{ij})\) with \(c_{ij} = 2(\alpha _i, \alpha _j)/(\alpha _j, \alpha _j)\) be the Cartan matrix of \(\Phi \). The roots of the characteristic polynomial of C are \(2+2\cos (m_i\pi /\mathrm{h})\) (\(1 \le i \le \ell \)).

Proof

See, e.g., [5, Theorem 2]. \(\square \)
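Lemma 4.7 is easy to test numerically. The Python sketch below is an illustration only, not part of the argument; the selection of types and the hard-coded tables (Bourbaki numbering) are our own choices. It evaluates \(\det (xI_\ell - C)\) by Gaussian elimination, using the convention \(c_{ij} = 2(\alpha _i, \alpha _j)/(\alpha _j, \alpha _j)\) of Lemma 4.7. It then compares the result with \(\prod _i (x-2-2\cos (m_i\pi /\mathrm{h}))\) at a few sample points. Both sides are monic of degree \(\ell \le 4\) here, so agreement at four points forces equality.

```python
import math

def det(matrix):
    """Determinant by Gaussian elimination with partial pivoting (floating point)."""
    a = [[float(v) for v in row] for row in matrix]
    n = len(a)
    result = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0  # singular (up to rounding)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result

def char_poly_at(cartan, x):
    """Evaluate det(x I - C)."""
    n = len(cartan)
    return det([[(x if i == j else 0.0) - cartan[i][j] for j in range(n)] for i in range(n)])

def predicted_at(exponents, h, x):
    """Evaluate prod_i (x - 2 - 2 cos(m_i pi / h)), the polynomial predicted by Lemma 4.7."""
    value = 1.0
    for m in exponents:
        value *= x - 2 - 2 * math.cos(m * math.pi / h)
    return value

# (Cartan matrix with c_ij = 2(a_i, a_j)/(a_j, a_j), exponents, Coxeter number h)
EXAMPLES = {
    "A3": ([[2, -1, 0], [-1, 2, -1], [0, -1, 2]], [1, 2, 3], 4),
    "B3": ([[2, -1, 0], [-1, 2, -2], [0, -1, 2]], [1, 3, 5], 6),
    "D4": ([[2, -1, 0, 0], [-1, 2, -1, -1], [0, -1, 2, 0], [0, -1, 0, 2]], [1, 3, 3, 5], 6),
    "G2": ([[2, -1], [-3, 2]], [1, 5], 6),
}

def lemma_4_7_holds(name, samples=(-1.0, 0.5, 1.7, 3.2), tol=1e-9):
    """Compare both monic polynomials (degree <= 4) at four sample points."""
    cartan, exps, h = EXAMPLES[name]
    return all(abs(char_poly_at(cartan, x) - predicted_at(exps, h, x)) < tol for x in samples)

for name in EXAMPLES:
    print(name, lemma_4_7_holds(name))
```

Note that \(x=2\) is a root exactly when \(\mathrm{h}/2\) is an exponent. This is the case for \(A_3\), \(B_3\) and \(D_4\), but not for \(G_2\), where \(\det (2I-C)=-3\).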

We denote by \({{\mathcal {D}}}(\Phi )\) the Dynkin graph and by \({\widetilde{{\mathcal {D}}}}(\Phi )\) the extended Dynkin graph of \(\Phi \).

Theorem 4.8

Assume that there exists a set \(\{\beta _1, \beta _2\} \subseteq \Phi ^+\) such that \(\{\beta _1, \beta _2\}\) is both \(A_1^2\) and RO. Then, \(\mathrm{h}/2\) is an exponent of W. Moreover, for each \(i \in \{1,2\}\),

Proof

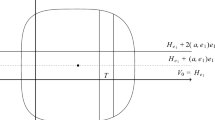

By Lemma 4.7, it suffices to prove that the characteristic polynomial of the Cartan matrix C has 2 as a root, since \(2+2\cos (m_i\pi /\mathrm{h})=2\) if and only if \(m_i=\mathrm{h}/2\). By Proposition 3.3, we may assume that \(\{\beta _1, \beta _2\} \subseteq \Delta \). Since the Dynkin graph \({\mathcal {D}}(\Phi )\) of \(\Phi \) is a tree, there is a unique path in \({\mathcal {D}}(\Phi )\) with endpoints \(\beta _1\) and \(\beta _2\). By the definition of RO sets, this path contains exactly one more vertex of \({\mathcal {D}}(\Phi )\), say \(\beta _3\), with \((\beta _1, \beta _3)\ne 0\) and \((\beta _2, \beta _3)\ne 0\). Moreover, the other vertices of \({\mathcal {D}}(\Phi )\), if any, connect to the path only at \(\beta _3\). The Cartan matrix has the following form:

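By Laplace's formula, it is easily seen that \(\det (xI_\ell - C)\) is divisible by \(x-2\). Indeed, \(\beta _1\) and \(\beta _2\) are adjacent only to \(\beta _3\). Hence the rows of \(2I_\ell - C\) indexed by \(\beta _1\) and \(\beta _2\) vanish outside the column indexed by \(\beta _3\). These two rows are therefore linearly dependent, and \(\det (2I_\ell - C)=0\).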

Assume that \(\mathcal {M}_{\beta _2}(\beta _1) \ne \emptyset \) and let \(\Lambda \in \mathcal {M}_{\beta _2}(\beta _1)\) (notation in Definition 3.6). Since \(\{\beta _1, \beta _2\}\) is RO, the fact that \(\Lambda \subseteq \beta _2^\perp \) implies that \((\beta _1, \alpha )=0\) for all \( \alpha \in \Lambda {\setminus } \{\pm \beta _1\}\). This contradicts the irreducibility of \(\Lambda \). Thus, \(\mathcal {K}_{\beta _2}(\beta _1)=0\). Proposition 3.13 completes the proof. \(\square \)
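The determinant step in the proof above can be checked on a concrete case. In the sketch below, the choice of \(D_5\) and \(A_4\) and the Bourbaki numbering are our own illustrative assumptions. For \(D_5\), the pair \(\{\alpha _4, \alpha _5\}\) plays the role of \(\{\beta _1, \beta _2\}\) and \(\alpha _3\) that of \(\beta _3\); by Remark 3.5 such a pair is both \(A_1^2\) and RO. The two rows of \(2I-C\) indexed by \(\alpha _4, \alpha _5\) coincide, so \(\det (2I-C)=0\). This agrees with \(\mathrm{h}(D_5)/2=4\) being an exponent (the exponents of \(D_5\) are 1, 3, 4, 5, 7). For contrast, \(A_4\) has no such configuration, and its determinant is non-zero.

```python
from fractions import Fraction

def det_exact(matrix):
    """Exact determinant over the rationals by fraction-based Gaussian elimination."""
    a = [[Fraction(v) for v in row] for row in matrix]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result

def two_minus_cartan(cartan):
    """Return the integer matrix 2I - C."""
    n = len(cartan)
    return [[(2 if i == j else 0) - cartan[i][j] for j in range(n)] for i in range(n)]

# D5: a1 - a2 - a3, with a3 joined to both a4 and a5 (indices 3 and 4 below).
D5 = [[2, -1, 0, 0, 0],
      [-1, 2, -1, 0, 0],
      [0, -1, 2, -1, -1],
      [0, 0, -1, 2, 0],
      [0, 0, -1, 0, 2]]

# A4: a simple chain, used for comparison.
A4 = [[2, -1, 0, 0],
      [-1, 2, -1, 0],
      [0, -1, 2, -1],
      [0, 0, -1, 2]]

M = two_minus_cartan(D5)
print(M[3], M[4])                       # [0, 0, 1, 0, 0] [0, 0, 1, 0, 0]
print(det_exact(M))                     # 0
print(det_exact(two_minus_cartan(A4)))  # 1
```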

4.2 Root poset, Dynkin diagram and exponents

The second half of the proof of Theorem 4.6 is complicated, but it uses nothing other than combinatorial properties of root systems. We need to recall and prove several (technical) properties of the root poset, the Dynkin diagram and the exponents. This subsection is devoted to doing so, and every statement will be given in full detail.

First, we study the positive roots of height \(\ge m_{\ell -1}\) in the root poset and a certain set of vertices in the extended Dynkin diagram. For \(\alpha \in V\), \(\beta \in V{\setminus }\{0\}\), denote \(\langle \alpha ,\beta \rangle := \frac{2( \alpha ,\beta ) }{( \beta , \beta )}\). Let \(\theta := \sum _{i=1}^\ell c_{\alpha _i}\alpha _i\) be the highest root of \(\Phi \), and we call \(c_{\alpha _i} \in \mathbb {Z}_{>0}\) the coefficient of \(\theta \) at the simple root \(\alpha _i\). Denote by \(c_{\max }:=\max \{c_{\alpha _i}\mid 1 \le i\le \ell \}\) the largest coefficient.
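For orientation, the coefficients \(c_{\alpha _i}\) and \(c_{\max }\) can be tabulated. The sketch below hard-codes the standard highest-root coefficients in Bourbaki numbering for a few types; the choice of types is ours. It checks the classical identity \(\mathrm{h}=1+\sum _{i}c_{\alpha _i}\), which underlies the equation \(\sum _{\lambda \in \Lambda }c_{\lambda }+\sum _{\lambda \in \Delta {\setminus } \Lambda }c_{\lambda } = \mathrm{h}\) in the proof of Lemma 4.21.

```python
# Highest-root coefficients c_{alpha_i} (Bourbaki numbering) and the Coxeter number h.
HIGHEST_ROOT = {
    "A4": ([1, 1, 1, 1], 5),
    "B4": ([1, 2, 2, 2], 8),
    "C4": ([2, 2, 2, 1], 8),
    "D5": ([1, 2, 2, 1, 1], 8),
    "E6": ([1, 2, 2, 3, 2, 1], 12),
    "E7": ([2, 2, 3, 4, 3, 2, 1], 18),
    "E8": ([2, 3, 4, 6, 5, 4, 3, 2], 30),
    "F4": ([2, 3, 4, 2], 12),
    "G2": ([3, 2], 6),
}

def c_max(name):
    """Largest coefficient of the highest root."""
    return max(HIGHEST_ROOT[name][0])

def coxeter_identity_holds(name):
    """Check the classical identity h = 1 + sum_i c_{alpha_i}."""
    coeffs, h = HIGHEST_ROOT[name]
    return h == 1 + sum(coeffs)

for name in HIGHEST_ROOT:
    print(name, "c_max =", c_max(name), "| h = 1 + sum c:", coxeter_identity_holds(name))
```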

Proposition 4.9

Let \(\Phi \) be an irreducible root system in \(\mathbb {R}^\ell \). Let \(\theta \) be the highest root of \(\Phi \), and denote \(\lambda _0 := -\theta \), \(c_{\lambda _0}:=1\). Suppose that the elements of a fixed base \(\Delta =\{\lambda _1, \ldots , \lambda _\ell \}\) are labeled so that \(\Lambda :=\{\lambda _0, \lambda _1, \ldots , \lambda _q\}\) is a set of minimal cardinality such that \(c_{\max }=c_{\lambda _q}\) and \((\lambda _s,\lambda _{s+1}) < 0\) for \(0 \le s \le q -1\).

(i) Then, \(c_{\lambda _s}=s + 1\) for \(0\le s \le q\) and \(|\Lambda |=c_{\max }\).

(ii) Assume that \(c_{\max }\ge 2\). Then, \((\lambda _0,\lambda _1, \ldots , \lambda _{q-1})\) is a simple chain of \({\widetilde{{\mathcal {D}}}}(\Phi )\) connected to the other vertices only at \(\lambda _{q-1}\).

Proof

See, e.g., [18, Lemma B.27, Appendix B] and [28, Proposition 3.1]. \(\square \)

Remark 4.10

Proposition 4.9 was first formulated and proved in terms of coroots in [25, Lemma 1.5] under the name “lemma of the string”. The proof of [18, Lemma B.27, Appendix B] contains a small error, which was corrected in [28, Proposition 3.1].

Corollary 4.11

(i) If \(c_{\max }=1\), then all roots of \(\Phi \) have the same length. In addition, if \(\ell \ge 2\), then \({{\mathcal {D}}}(\Phi )\) is a simple chain and \(-\theta \) is connected only to the two terminal vertices of \({{\mathcal {D}}}(\Phi )\).

(ii) If \(c_{\max }\ge 2\), then \(-\theta \) is connected to exactly one vertex \(\lambda \) of \({{\mathcal {D}}}(\Phi )\); moreover, \(\langle \theta ,\lambda \rangle \in \{1,2\}\) and \(c_{\lambda }=2\). In addition, if \(\lambda _i \in \Delta \) with \(c_{\lambda _i}=1\) is connected to \(\lambda _j \in \Delta \) with \(c_{\lambda _j}\ge 2\), then \(\lambda _i\) must be a terminal vertex of \({{\mathcal {D}}}(\Phi )\) and \(\langle \lambda _j,\lambda _i\rangle =-1\).

Proof

The second statement of (ii) follows from the equation \(\langle \theta ,\lambda _i \rangle =0\). The other statements can be found in [28, Proposition 3.1 and Remark 3.2]. \(\square \)

Corollary 4.12

Assume that \(c_{\max }\ge 2\). Either \(\langle \lambda _{q-1},\lambda _{q}\rangle \in \{-2,-3\}\) or \(\lambda _{q}\) is a ramification point of \({\widetilde{{\mathcal {D}}}}(\Phi )\).

Proof

See [28, Corollary 3.3]. \(\square \)

Denote \(\mathcal {U}:=\{\theta _i\in \Phi ^+ \mid \mathrm{ht}(\theta _i) >m_{\ell -1}\}\), and set \(m:=|\mathcal {U}|\). By Theorems 2.12 and 2.11(i), (iii), we have \(m=m_{\ell }-m_{\ell -1}=m_{2}-1>0\). Suppose that the elements of \(\mathcal {U}\) are labeled so that \(\theta _1\) is the highest root and \(\xi _i=\theta _i-\theta _{i+1} \in \Delta \) for \(1 \le i \le m-1\). We also adopt the convention \(\xi _0:=-\theta _1\). Set \(\Xi :=\{\xi _i \mid 0 \le i \le m-1\}\). Note that \(\Xi \) is a multiset, not necessarily a set. For a finite multiset \(S=\{(a_1)^{b_1},\ldots , (a_n)^{b_n}\}\), write \({\overline{S}}\) for the underlying set of S, i.e., \({\overline{S}}=\{a_1,\ldots , a_n\}\). We distinguish the following two cases:

Case 1: there is an integer t such that \(1 \le t \le m-1\) and \(\langle \theta _t,\xi _t \rangle = 3\).

Case 2: the negation of Case 1.
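The set \(\mathcal {U}\), the integer m and the dichotomy between Case 1 and Case 2 can be explored by computer. The Python sketch below is illustrative only; its helper names are hypothetical. It builds the positive roots from a Cartan matrix (convention of Lemma 4.7) by the standard root-string algorithm, processing one height at a time. It then recovers the exponents from the heights. This step assumes that Theorem 2.12 is the classical height-partition statement: the number of positive roots of height r equals \(|\{i \mid m_i \ge r\}|\). Finally, it lists \(\mathcal {U}\) and tests whether \(\langle \theta _t,\xi _t \rangle = 3\) for some t.

```python
from collections import Counter

def positive_roots(cartan):
    """Positive roots as coefficient tuples; cartan[i][j] = 2(a_i, a_j)/(a_j, a_j)."""
    n = len(cartan)
    simple = [tuple(int(k == i) for k in range(n)) for i in range(n)]
    roots, layer = set(simple), list(simple)
    while layer:  # roots of height h + 1 are obtained from roots of height h
        nxt = set()
        for beta in layer:
            for i in range(n):
                pairing = sum(beta[j] * cartan[j][i] for j in range(n))  # <beta, a_i^vee>
                p = 0  # length of the a_i-string below beta
                while tuple(b - (p + 1) * int(k == i) for k, b in enumerate(beta)) in roots:
                    p += 1
                if p - pairing > 0:  # q = p - <beta, a_i^vee> > 0, so beta + a_i is a root
                    nxt.add(tuple(b + int(k == i) for k, b in enumerate(beta)))
        roots |= nxt
        layer = list(nxt)
    return roots

def exponents_from_heights(roots):
    """Dual partition of the height distribution (assumed form of Theorem 2.12)."""
    counts = Counter(sum(r) for r in roots)
    exps = []
    for k in range(1, max(counts) + 1):
        exps += [k] * (counts[k] - counts[k + 1])
    return exps

def top_roots(roots, exps):
    """U = positive roots of height > m_{l-1}, highest root first."""
    return sorted((r for r in roots if sum(r) > exps[-2]), key=sum, reverse=True)

def is_case_1(cartan, U):
    """Case 1: <theta_t, xi_t> = 3 for some t, where xi_t = theta_t - theta_{t+1}."""
    n = len(cartan)
    for t in range(len(U) - 1):
        xi = [a - b for a, b in zip(U[t], U[t + 1])]
        i = xi.index(1)  # xi_t is a simple root
        if sum(U[t][j] * cartan[j][i] for j in range(n)) == 3:
            return True
    return False

CARTAN = {
    "B3": [[2, -1, 0], [-1, 2, -2], [0, -1, 2]],
    "D4": [[2, -1, 0, 0], [-1, 2, -1, -1], [0, -1, 2, 0], [0, -1, 0, 2]],
    "G2": [[2, -1], [-3, 2]],
}

for name, C in CARTAN.items():
    roots = positive_roots(C)
    exps = exponents_from_heights(roots)
    U = top_roots(roots, exps)
    print(name, exps, "m =", len(U), "Case 1:", is_case_1(C, U))
```

On these examples, \(m=m_2-1\) in each case, and only \(G_2\) falls into Case 1.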

Proposition 4.13

(i) If Case 1 occurs, then \(t=m-2\) and \(\Xi =\{\xi _0, \xi _1, \ldots , (\xi _{m-2})^2\}\) with \(\xi _i \ne \xi _j\) for \(0 \le i < j \le m-2\). As a result, \(|\overline{\Xi }|=m_2-2\).

(ii) If Case 2 occurs, then \(\Xi =\{\xi _0, \xi _1, \ldots , \xi _{m-1}\}\) with \(\xi _i \ne \xi _j\) for \(0 \le i < j \le m-1\). As a result, \(|\Xi |=m_2-1\).

Proof

See [28, Propositions 3.10 and 3.11]. \(\square \)

Theorem 4.14

With the notation of Propositions 4.9–4.13, we have \(q=m-1\) and \(\lambda _i = \xi _i\) for all \(1 \le i \le q\). In particular, \(\overline{\Xi }=\Lambda \) (as sets). Moreover, if Case 1 occurs, then \(m_2 = c_{\max }+2\); and if Case 2 occurs, then \(m_2 = c_{\max }+1\).

Proof

See [28, Theorem 4.1]. \(\square \)
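Theorem 4.14 and the relation \(m=m_\ell -m_{\ell -1}=m_2-1\) can be compared with the standard tables. The sketch below hard-codes the exponents and \(c_{\max }\) for a selection of types of our own choosing. It reports \(m_2-c_{\max }\), which the theorem predicts to be 2 exactly in Case 1 (by Corollary 4.16, \(\Phi =G_2\)) and 1 otherwise.

```python
# Exponents (increasing) and the largest highest-root coefficient c_max.
DATA = {
    "A4": ([1, 2, 3, 4], 1),
    "B4": ([1, 3, 5, 7], 2),
    "C4": ([1, 3, 5, 7], 2),
    "D4": ([1, 3, 3, 5], 2),
    "D5": ([1, 3, 4, 5, 7], 2),
    "E6": ([1, 4, 5, 7, 8, 11], 3),
    "E7": ([1, 5, 7, 9, 11, 13, 17], 4),
    "E8": ([1, 7, 11, 13, 17, 19, 23, 29], 6),
    "F4": ([1, 5, 7, 11], 4),
    "G2": ([1, 5], 3),
}

def offset(name):
    """m_2 - c_max: 1 in Case 2 and 2 in Case 1, according to Theorem 4.14."""
    exps, cmax = DATA[name]
    return exps[1] - cmax

def m_value(name):
    """m = m_l - m_{l-1}, which should equal m_2 - 1."""
    exps, _ = DATA[name]
    return exps[-1] - exps[-2]

for name in DATA:
    print(name, "m_2 - c_max =", offset(name), "| m =", m_value(name),
          "| m_2 - 1 =", DATA[name][0][1] - 1)
```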

Corollary 4.15

(i) If Case 1 occurs, then \(\theta _i-\theta _j \in \Phi ^+\) for \(1 \le i<j \le m\), \(\{i,j\} \ne \{m-2,m\}\), and \(\theta _{m-2}-\theta _m \in 2\Delta \).

(ii) If Case 2 occurs, then \(\theta _i-\theta _j \in \Phi ^+\) for \(1 \le i<j \le m\).

Proof

See [28, Corollary 3.12]. \(\square \)

Corollary 4.16

The following statements are equivalent: (i) Case 1 occurs, (ii) \({\text {rank}}(\Phi )=2\), (iii) \(\Phi =G_2\), (iv) \(c_{\max }=m_2-2\).

Proof

See [28, Theorem 4.2]. \(\square \)

Recall the notation \(\Theta ^{(r)}=\{\alpha \in \Phi ^+ \mid \mathrm{ht}(\alpha )=r\}\).

Proposition 4.17

There is always a long root in \(\Theta ^{(m_{\ell -1})}\).

Proof

We may assume that \(c_{\max }\ge 2\): if \(c_{\max }=1\), then by Corollary 4.11(i) all roots of \(\Phi \) have the same length, and the assertion is trivial. If Case 1 occurs, then \(\Phi =G_2\) by Corollary 4.16, and the assertion is again trivial.

Now, we may assume that Case 2 occurs. By Proposition 4.13, \(\xi _i \ne \xi _j\) for \(0 \le i < j \le m-1\). If \(\langle \xi _{m-2},\xi _{m-1}\rangle =-2\), then \(\langle \theta _{m-1},\xi _{m-1}\rangle =2\). Thus, \(\theta _{m-1}-2\xi _{m-1} \in \Theta ^{(m_{\ell -1})}\), which is a long root, and we are done. It remains to consider the case \(\langle \xi _{m-2},\xi _{m-1}\rangle =-1\). By Corollary 4.12, \(\xi _{m-1}\) is connected to at least two vertices of \({\widetilde{{\mathcal {D}}}}(\Phi )\) apart from \(\xi _{m-2}\), say \(\mu _1,\ldots , \mu _k\) (\(k\ge 2\)).

We claim that there exists \(\mu _i\) such that \(\langle \xi _{m-1},\mu _i\rangle =-1\). The proofs of the claim for \(m=2\) (i.e., \(c_{\max }=2\)) and for \(m\ge 3\) use very similar techniques; the case \(m=2\) is in fact Lemma 4.23(2). We only give a proof for \(m\ge 3\). We use four facts: \(\langle \theta , \xi _{m-1}\rangle =0\) (it equals 1 if \(m=2\)), \(c_{\xi _{m-1}}=c_{\max }\), \(c_{\xi _{m-2}}=c_{\max }-1\), and \(\xi _{m-1}\) is a long root. Together they give \(c_{\max }+1-\sum _{i=1}^k c_{\mu _i}=0\). Suppose to the contrary that \(\langle \xi _{m-1},\mu _i\rangle \le -2\) for all \(1 \le i \le k\). From \(0=\langle \theta , \mu _i\rangle \le 2c_{\mu _i}+c_{\max }\langle \xi _{m-1}, \mu _i\rangle \), we obtain \(c_{\mu _i}=c_{\max }\). Thus, \(c_{\max }+1-kc_{\max }=0\), which is impossible since \(k\ge 2\) and \(c_{\max }\ge 2\). So we can choose \(\mu _i\) so that \(\langle \xi _{m-1},\mu _i\rangle =-1\). Therefore, \(\langle \theta _m, \mu _i\rangle =-\langle \xi _{m-1},\mu _i\rangle =1\), and \(\theta _{m}-\mu _i \in \Theta ^{(m_{\ell -1})}\), which is a long root. \(\square \)

Lemma 4.18

Suppose \(\beta _1, \beta _2, \beta _3 \in \Phi \) with \(\beta _1+ \beta _2+ \beta _3 \in \Phi \) and \(\beta _i+ \beta _j \ne 0\) for \(i \ne j\). Then, at least two of the three partial sums \(\beta _i+ \beta _j\) belong to \( \Phi \).

Proof

See, e.g., [15, §11, Lemma 11.10]. \(\square \)

Proposition 4.19

If \(\gamma \in \Theta ^{(m_{\ell -1})}\), then \(\theta _i - \gamma \in k\Phi ^+\) with \(k\in \{1,2,3\}\) for every \(1 \le i \le m\).

Proof

To avoid trivial cases, we assume that Case 2 occurs and \(1 \le i \le m-1\). Denote \(\mu := \theta _m -\gamma \in \Delta \).

Assume that \( \mu =\xi _{m-1}\). Then, \(\theta _{m-1}- \gamma =2\xi _{m-1} \in 2\Delta \). We have \(\langle \theta _{m-1},\xi _{m-1} \rangle =\langle \gamma ,\xi _{m-1} \rangle +4\). It follows that \(\langle \theta _{m-1},\xi _{m-1} \rangle =2\). Fix i with \(1 \le i \le m-2\), and set \(\alpha := \theta _i -\theta _{m-1}\in \Phi ^+\) (by Corollary 4.15). Since \( \theta _i = \theta _1 - (\xi _1+\cdots +\xi _{i-1})\), we have \(\langle \theta _i,\xi _{m-1} \rangle =0\). Thus, \(\langle \alpha ,\xi _{m-1} \rangle =-\langle \theta _{m-1},\xi _{m-1} \rangle =-2\). Then, \(\theta _i -\gamma =\alpha +2\xi _{m-1}\in \Phi ^+\).

Assume that \( \mu \ne \xi _{m-1}\). Then, \(\theta _{m-1}= \gamma +\mu +\xi _{m-1}\). By Lemma 4.18, \(\mu +\xi _{m-1}\in \Phi ^+\) since \(\gamma +\xi _{m-1}\notin \Phi ^+\). Thus, \(\mu \) and \(\xi _{m-1}\) are adjacent on \({{\mathcal {D}}}(\Phi )\). If \(\mu \ne \xi _{m-2}\) (of course, \(\mu \ne \xi _i\) for all \(1 \le i \le m-3\) since \({{\mathcal {D}}}(\Phi )\) is a tree), then by Lemma 3.2(ii), \(\theta _i -\gamma =\xi _i+\cdots +\xi _{m-1}+\mu \in \Phi ^+\) for each \(1 \le i \le m-1\). If \(\mu = \xi _{m-2}\), then \(\theta _{m-2}= \gamma +\xi _{m-1}+2\xi _{m-2}\). Thus, \(\langle \theta _{m-2},\xi _{m-2} \rangle =\langle \gamma ,\xi _{m-2} \rangle +\langle \xi _{m-1} ,\xi _{m-2} \rangle +4\). Using the fact that \(\xi _{m-2}\) is a long root, we obtain a contradiction since the left-hand side is at most 1, while the right-hand side is at least 2. \(\square \)

Corollary 4.20

If \(\ell \ge 5\), then \(m_{\ell -2}<m_{\ell -1}\).

Proof

By Theorem 2.12, it suffices to prove that there are exactly two roots of height \(m_{\ell -1}\), i.e., \(|\Theta ^{(m_{\ell -1})}|=2\). To avoid trivial cases, we assume that Case 2 occurs and \(c_{\max }\ge 2\). We need to consider two cases: \(\langle \xi _{m-2},\xi _{m-1}\rangle =-2\) or \(\langle \xi _{m-2},\xi _{m-1}\rangle =-1\). Since the proofs are very similar, we only give a proof for the latter (slightly harder) case.

Suppose to the contrary that \(\Theta ^{(m_{\ell -1})}=\{\gamma _1,\ldots ,\gamma _k\}\) with \(k \ge 3\). By Proposition 4.19, \(\theta _{m-1}-\gamma _i =\xi _{m-1}+\mu _i \in \Phi ^+\), where \(\mu _i \in \Delta \) (\(1 \le i \le k\)). Thus, \(\mu _i\) is adjacent to \(\xi _{m-1}\) on \({\widetilde{{\mathcal {D}}}}(\Phi )\). By the same argument as the one used at the end of the proof of Proposition 4.19, we obtain \(\mu _i \ne \xi _{m-2}\) for all \(1 \le i \le k\). If \(m\ge 3\), then the same argument as in the proof of Proposition 4.17 gives \(c_{\max }+1=\sum _{i=1}^k c_{\mu _i}\). From \(0=\langle \theta , \mu _i\rangle \le 2c_{\mu _i}-c_{\max }\), we obtain \(c_{\mu _i}\ge c_{\max }/2\) for all \(1 \le i \le k\). This forces \(k=3\). But then \(3c_{\max }/2 \le c_{\max }+1\), i.e., \(c_{\max }\le 2\), so \(m\le 2\), a contradiction. Now, consider \(m=2\). A similar argument as above shows that \(k=3\) and \(c_{\mu _1}=c_{\mu _2}=c_{\mu _3}=1\). The second statement of Corollary 4.11(ii) implies that \(\mu _1,\mu _2,\mu _3\) are all terminal vertices. Thus, \(\ell =4\), a contradiction. \(\square \)
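Corollary 4.20, and the failure of its conclusion for \(D_4\), can be checked against the standard exponent tables. The sketch below assumes, as above, that Theorem 2.12 is the height-partition statement \(|\Theta ^{(r)}|=|\{i \mid m_i \ge r\}|\). The function names and the range of ranks tested are our own illustrative choices.

```python
def exponents(series, rank):
    """Exponents (increasing) of the classical types and of E6, E7, E8."""
    if series == "A":
        return list(range(1, rank + 1))
    if series in ("B", "C"):
        return list(range(1, 2 * rank, 2))
    if series == "D":
        return sorted(list(range(1, 2 * rank - 2, 2)) + [rank - 1])
    if series == "E":
        return {6: [1, 4, 5, 7, 8, 11],
                7: [1, 5, 7, 9, 11, 13, 17],
                8: [1, 7, 11, 13, 17, 19, 23, 29]}[rank]
    raise ValueError(series)

def roots_of_height(exps, r):
    """|Theta^(r)| under the assumed height-partition form of Theorem 2.12."""
    return sum(1 for m in exps if m >= r)

def corollary_4_20(exps):
    """m_{l-2} < m_{l-1}, equivalently exactly two positive roots of height m_{l-1}."""
    return exps[-3] < exps[-2] and roots_of_height(exps, exps[-2]) == 2

cases = [(s, l) for s in "ABCD" for l in range(5, 13)] + [("E", 6), ("E", 7), ("E", 8)]
print(all(corollary_4_20(exponents(s, l)) for s, l in cases))  # True
print(corollary_4_20(exponents("D", 4)))  # False: D4 has exponents 1, 3, 3, 5
```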

From now on, some arguments require the classification of root systems of rank at most 4. Each use of the classification in rank 3 or 4 will be announced explicitly. The classification in rank 2 has been used, and will continue to be used, without mention.

Lemma 4.21

If \(m_2=\mathrm{h}/2\), then \(\ell \le 4\). More specifically, when \(\ell =4\), \(m_2=\mathrm{h}/2\) if and only if \(\Phi =D_4\).

Proof

If \(m_2 = c_{\max }+2\), then by Corollary 4.16, \(\Phi =G_2\). However, \(m_2>\mathrm{h}/2\) by a direct check. Now, assume that \(m_2 = c_{\max }+1\). Recall from Proposition 4.9 that the coefficients of \(\theta \) at the elements of \(\Lambda =\{\lambda _0, \lambda _1, \ldots , \lambda _q\}\) form an arithmetic progression, starting with \(c_{\lambda _0}=1\) and ending with \(c_{\lambda _q}= c_{\max }=q+1\). Each of the \(\ell -q=\ell -c_{\max }+1\) simple roots in \(\Delta {\setminus } \Lambda \) has coefficient at least 1. Hence, from \(\sum _{\lambda \in \Lambda }c_{\lambda }+\sum _{\lambda \in \Delta {\setminus } \Lambda }c_{\lambda } = \mathrm{h}=2m_2\), we have

$$\begin{aligned} \frac{c_{\max }(c_{\max }+1)}{2}+(\ell -c_{\max }+1) \le 2(c_{\max }+1). \end{aligned}$$
Thus, \(\ell \le (-c_{\max }^2+5c_{\max } +2)/2\). Therefore, \(\ell \le 4\). The second statement is clear from the classification of irreducible root systems of rank 4. \(\square \)
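Both steps of this proof can be checked mechanically: the bound is at most 4 for every integer \(c_{\max }\ge 1\), and the standard exponent tables confirm which types satisfy \(m_2=\mathrm{h}/2\). The sketch below is illustrative; the rank cut-off of 10 and the function names are our own. It uses \(\mathrm{h}=m_\ell +1\).

```python
from fractions import Fraction

def rank_bound(cmax):
    """The bound l <= (-c^2 + 5c + 2)/2 from the proof of Lemma 4.21, with c = c_max."""
    return Fraction(-cmax * cmax + 5 * cmax + 2, 2)

def all_types(max_rank=10):
    """Exponents (increasing) of the irreducible types of rank 2..max_rank."""
    types = {}
    for l in range(2, max_rank + 1):
        types["A%d" % l] = list(range(1, l + 1))
        types["B%d" % l] = list(range(1, 2 * l, 2))
        if l >= 3:  # C2 = B2
            types["C%d" % l] = list(range(1, 2 * l, 2))
        if l >= 4:  # D3 = A3
            types["D%d" % l] = sorted(list(range(1, 2 * l - 2, 2)) + [l - 1])
    types.update({
        "E6": [1, 4, 5, 7, 8, 11],
        "E7": [1, 5, 7, 9, 11, 13, 17],
        "E8": [1, 7, 11, 13, 17, 19, 23, 29],
        "F4": [1, 5, 7, 11],
        "G2": [1, 5],
    })
    return types

def middle_exponent_types(max_rank=10):
    """Types with m_2 = h/2, using h = m_l + 1."""
    return sorted(name for name, exps in all_types(max_rank).items()
                  if 2 * exps[1] == exps[-1] + 1)

print(max(rank_bound(c) for c in range(1, 50)))  # 4
print(middle_exponent_types())                   # ['A3', 'B3', 'C3', 'D4']
```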

Remark 4.22

The first statement of Lemma 4.21 is also an easy consequence of the well-known fact that every exponent of \(\Phi \) appears at most twice. A uniform proof of this fact is probably known to experts.

Now, we investigate the “local” picture of the extended Dynkin graph around the subgraph induced by \(-\theta \) (the negative of the highest root) and the simple root adjacent to it, and relate this picture to the RO property.

Lemma 4.23

Assume that \(\ell \ge 2 \) and \(c_{\max }\ge 2\). Let \(\lambda \) be the unique simple root connected to \(-\theta \) (Corollary 4.11). Denote by \(\gamma _1,\ldots , \gamma _k\) (\(k\ge 1\)) the simple roots connected to \(\lambda \). Then, there are the following possibilities:

(1) If \(\langle \theta ,\lambda \rangle =2\), then \(k=1\), \(c_{\gamma _1}\in \{1,2\}\).

(2) If \(\langle \theta ,\lambda \rangle =1\) (in particular, \(\lambda \) is long), then one of the following holds:

(2a) \(k=3\), \(c_{\gamma _1}=c_{\gamma _2}=c_{\gamma _3}=1\) (i.e., \(\Phi =D_4\));

(2b) \(k=2\), \(c_{\gamma _1}=2\), \(c_{\gamma _2}=1\) (\(\gamma _2\) is terminal and long);

(2c) \(k=1\), \(c_{\gamma _1}=3\).

Proof

We have \(\langle \theta ,\lambda \rangle =2c_{\lambda }+ \sum _{i=1}^k c_{\gamma _i}\langle \gamma _i,\lambda \rangle \le 4- \sum _{i=1}^k c_{\gamma _i}\). Since \(\langle \theta ,\lambda \rangle \ge 1\), we have \(\sum _{i=1}^k c_{\gamma _i} \in \{1,2,3\}\). It then remains to list all possibilities and rule out the impossible ones. For example, suppose \(\langle \theta ,\lambda \rangle =1\) and \(\sum _{i=1}^k c_{\gamma _i} =2\). Then either (i) \(k=1\), \(c_{\gamma _1}=2\), or (ii) \(k=2\), \(c_{\gamma _1}=c_{\gamma _2}=1\). For (i), it follows that \(1=\langle \theta ,\lambda \rangle =4+2\langle \gamma _1,\lambda \rangle \), which is a contradiction since \(\lambda \) is long. For (ii), the second statement of Corollary 4.11(ii) implies that \(\gamma _1,\gamma _2\) must both be terminal. Thus, \(\ell =3\). However, such a root system does not exist by the classification of irreducible root systems of rank 3. Similarly, to conclude that \(\Phi =D_4\) in (2a), we need the classification of irreducible root systems of rank 4. \(\square \)
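The case distinction in Lemma 4.23 can be observed on small examples. The Python sketch below is our own illustration; the choice of types and the hard-coded data from the standard tables in Bourbaki numbering are assumptions. It computes \(\langle \theta ,\alpha _i\rangle =\sum _j c_{\alpha _j}\langle \alpha _j,\alpha _i\rangle \) from the Cartan matrix and locates the simple root \(\lambda \) attached to \(-\theta \). It then reports \(\langle \theta ,\lambda \rangle \), \(c_\lambda \) and the coefficients of the neighbors of \(\lambda \). The types \(C_3\), \(D_4\), \(B_4\) and \(F_4\) realize Possibilities (1), (2a), (2b) and (2c), respectively.

```python
# Cartan matrices (c_ij = <a_i, a_j> = 2(a_i, a_j)/(a_j, a_j)) and highest-root coefficients.
TYPES = {
    "B4": ([[2, -1, 0, 0], [-1, 2, -1, 0], [0, -1, 2, -2], [0, 0, -1, 2]], [1, 2, 2, 2]),
    "C3": ([[2, -1, 0], [-1, 2, -1], [0, -2, 2]], [2, 2, 1]),
    "D4": ([[2, -1, 0, 0], [-1, 2, -1, -1], [0, -1, 2, 0], [0, -1, 0, 2]], [1, 2, 1, 1]),
    "F4": ([[2, -1, 0, 0], [-1, 2, -2, 0], [0, -1, 2, -1], [0, 0, -1, 2]], [2, 3, 4, 2]),
}

def local_picture(name):
    """Return (<theta, lambda>, c_lambda, sorted coefficients of the neighbors of lambda)."""
    cartan, coeffs = TYPES[name]
    n = len(cartan)
    # <theta, a_i> = sum_j c_j <a_j, a_i> = sum_j c_j * cartan[j][i]
    pairings = [sum(coeffs[j] * cartan[j][i] for j in range(n)) for i in range(n)]
    attached = [i for i in range(n) if pairings[i] != 0]
    if len(attached) != 1:
        raise ValueError("expected c_max >= 2, so that -theta has a single neighbor")
    lam = attached[0]
    neighbors = sorted(coeffs[j] for j in range(n) if j != lam and cartan[j][lam] != 0)
    return pairings[lam], coeffs[lam], neighbors

for name in TYPES:
    print(name, local_picture(name))
```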

It is known that \(\theta ^\perp \) is the standard parabolic subsystem of \(\Phi \) generated by \(\{\alpha \in \Delta \mid (\alpha ,\theta )=0\}\). In general, \(\theta ^\perp \) may be reducible, in which case it decomposes into mutually orthogonal irreducible components.

Corollary 4.24

If \(\theta ^\perp \) is reducible, then either Possibility (2a) or (2b) occurs.

Proof

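If \(c_{\max }=1\), then \(\theta ^\perp \) is irreducible by Corollary 4.11(i). If \(c_{\max }\ge 2\), then \(\theta ^\perp \) is generated by \(\Delta {\setminus } \{\lambda \}\). It is therefore reducible exactly when \(\lambda \) has at least two neighbors in \({{\mathcal {D}}}(\Phi )\), i.e., \(k\ge 2\). By Lemma 4.23, this happens only in Possibilities (2a) and (2b). \(\square \)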

Corollary 4.25

When \(\ell =4\), there exists a set that is both \(A_1^2\) and RO if and only if \(\Phi =D_4\). Moreover, if \(\Phi =D_4\), then every \(A_1^2\) set is RO.

Proof

Use the classification of irreducible root systems of rank 4. \(\square \)

Proposition 4.26

Assume that \(\ell \ge 4\). If \(\theta ^\perp \) is reducible and there exists an \(A_1^2\) set that is not RO, then Possibility (2b) in Lemma 4.23 occurs. In particular, \(\theta ^\perp = \{\pm \gamma _2\} \times \Omega \), where \(\gamma _2\) is a long simple root and \(\Omega \) is irreducible with \(\mathrm {rank}(\Omega )\ge 2\).

Proof

If \(c_{\max }=1\), then Corollary 4.11(i) implies that \(\theta ^\perp \) is irreducible and of rank at least 2, a contradiction. Now, consider \(c_{\max }\ge 2\). By Corollary 4.24, either Possibility (2a) or (2b) occurs. However, Corollary 4.25 ensures that Possibility (2a) cannot occur because a non-RO \(A_1^2\) set exists. Thus, Possibility (2b) must occur. Then, \(\theta ^\perp = \{\pm \gamma _2\} \times \Omega \) where \(\Omega \) is irreducible with \(\mathrm {rank}(\Omega )\ge 2\). \(\square \)

Proposition 4.27

Assume that \(\ell \ge 4\). Suppose that \(\{\theta , \alpha \}\) is an \(A_1^2\) set with \(\alpha \in \Delta \). If \(\{\pm \alpha \}\) is a component of \(\theta ^\perp \), then \(\{\theta , \alpha \}\) is RO.

Proof

We may assume that \(c_{\max }\ge 2\). If not, Corollary 4.11(i) implies that \(\theta ^\perp \) is irreducible and of rank at least 2, a contradiction. Since \(-\theta \) is connected to only one vertex \(\lambda \) of \({{\mathcal {D}}}(\Phi )\), \(\theta ^\perp \) is generated by the \(\ell -1\ge 3\) simple roots other than \(\lambda \). Hence \(\theta ^\perp \) must be reducible; otherwise, \(\theta ^\perp =\{\pm \alpha \}\), a contradiction. Now let \(\beta \in \theta ^\perp {\setminus } \{\pm \alpha \}\). Then \(\{\beta , \alpha \}=w\{\alpha ', \alpha \}\) for some \(w \in W\) and \(\alpha ' \in \Delta \) such that \((\alpha ', \alpha )=0\), so \(\{\beta , \alpha \}\) is \(A_1^2\). With the notation in Definition 3.6 and Proposition 3.11, we have \(\mathcal {M}_\theta (\alpha )=\emptyset \) and \(\mathcal {K}_\theta (\alpha )=0\).

Note that \(\theta ^\perp {\setminus } \{\pm \alpha \} \subseteq \alpha ^\perp {\setminus } \{\pm \theta \}\) since \(\{\pm \alpha \}\) is a component of \(\theta ^\perp \). Suppose to the contrary that \(\{\theta , \alpha \}\) is not RO. Then there exists \(\beta \in \alpha ^\perp {\setminus } \{\pm \theta \}\) such that \((\beta , \theta )\ne 0\). Since \(\{\theta , \alpha \}\) is an \(A_1^2\) set and \(\beta \notin \{\pm \theta , \pm \alpha \}\), we have \(\beta \notin \mathrm {span}\{\theta , \alpha \}\). Hence \(\Gamma :=\Phi \cap \mathrm {span}\{\beta , \theta , \alpha \}\) is a subsystem of rank 3.

\(\Gamma \) must be irreducible; otherwise, \(\Gamma \in \mathcal {M}_\alpha (\theta )\) and \(\mathcal {K}_\alpha (\theta )\ne 0\), which contradicts Proposition 3.11 (recall that \(\mathcal {K}_\alpha (\theta )=\mathcal {K}_\theta (\alpha )=0\)). We now rely on the classification of rank-3 irreducible root systems and on two facts: (i) \(\{\theta , \alpha \}\) is \(A_1^2\) in \(\Gamma \), and (ii) \(\alpha ^\perp \cap \Gamma \) is an irreducible subsystem of rank 2 of \(\Gamma \) (as it contains \(\beta \) and \(\theta \)). Together these show that \(\Gamma \) is of type \(B_3\) and \(\alpha \) is the unique short simple root of \(\Gamma \).

Since \(\theta ^\perp \) is reducible, either Possibility (2a) or (2b) occurs by Corollary 4.24. Possibility (2b) must occur, since \(\Phi \) contains the subsystem \(\Gamma \) of type \(B_3\). Moreover, \(\{\pm \alpha \}\) is a component of \(\theta ^\perp \) and \(\alpha \) is short. With the notation in Possibility (2b), this gives \(\Delta =\{\gamma _2, \lambda , \alpha \}\). Thus, \(\ell =3\), a contradiction. \(\square \)

4.3 Second half of Proof of Theorem 4.6

In this subsection, we complete the proof of Theorem 4.6 by proving its second half, Theorem 4.28.

Theorem 4.28

If \(\{\beta _1, \beta _2\} \subseteq \Phi ^+\) is \(A_1^2\) but not RO, then for each \(i \in \{1,2\}\)

The first key ingredient is Theorem 4.30. It asserts that Theorem 4.28 is always true for a special class of \(A_1^2\) sets, which can be described by the height function without requiring the RO condition. We were recently informed that Mücksch and Röhrle [17] study a property of Weyl arrangement restrictions similar to the non-RO case, which they call accuracy, via the MAT-free techniques of [1]. Their main result, together with Corollary 4.20, indeed gives a proof of Theorem 4.30. We will use this proof here. However, our original method gives a different, bijective proof, and we refer the interested reader to the Appendix for more details.

Unless otherwise stated, we assume that \(\ell \ge 3\) in the remainder of the paper.

Lemma 4.29

If \(\{\gamma _1,\gamma _2\} \subseteq \Theta ^{(r)}\) with \(\gamma _1\ne \gamma _2\) and \(r \ge \left\lfloor {m_\ell /2 }\right\rfloor +1\), where \(\lfloor \cdot \rfloor \) denotes the floor function, then \(\{\gamma _1,\gamma _2\}\) is \(A_1^2\). In particular, the assertion holds for \(r=m_{\ell -1}\).

Proof

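We have \(\gamma _1+\gamma _2 \notin \Phi \), since its height \(2r\) exceeds \(m_\ell \), the height of the highest root. Moreover, neither \(\gamma _1-\gamma _2\) nor \(\gamma _2-\gamma _1\) lies in \(\sum _{\alpha \in \Delta }\mathbb Z_{\ge 0}\alpha \), since \(\gamma _1-\gamma _2\ne 0\) has height 0. The assertion then follows from the classification of irreducible root systems of rank 2. \(\square \)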
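Lemma 4.29 can be spot-checked by machine on small cases. The Python sketch below is our own illustration, not part of the proof. It generates the positive roots from an integer multiple of the Gram matrix of the simple roots (Bourbaki labelling) using the standard root-string algorithm. It then reads off \(m_1,\ldots ,m_\ell \) from the heights, using the classical fact that the number of positive roots of height k equals \(\#\{i \mid m_i\ge k\}\). Finally, it checks that any two distinct roots of a common height \(r\ge \lfloor m_\ell /2\rfloor +1\) span a subsystem whose only positive roots are the pair itself, i.e., a subsystem of type \(A_1^2\). We assume here that \(\Theta ^{(r)}\) denotes the set of positive roots of height r. All function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations


def gram_matrix(kind, n):
    """A positive multiple of the Gram matrix ((alpha_i, alpha_j)), Bourbaki labelling."""
    if kind == "F":  # F4: alpha_1, alpha_2 long; alpha_3, alpha_4 short
        return [[4, -2, 0, 0], [-2, 4, -2, 0], [0, -2, 2, -1], [0, 0, -1, 2]]
    if kind == "E":  # E6, E7, E8: node 2 is attached to node 4
        edges = [(0, 2), (1, 3), (2, 3), (3, 4)] + [(k, k + 1) for k in range(4, n - 1)]
    elif kind == "D":
        edges = [(k, k + 1) for k in range(n - 2)] + [(n - 3, n - 1)]
    else:  # A, B, C: the Dynkin diagram is a path
        edges = [(k, k + 1) for k in range(n - 1)]
    g = [[2 if i == j else 0 for j in range(n)] for i in range(n)]
    for i, j in edges:
        g[i][j] = g[j][i] = -1
    if kind == "B":  # alpha_n = e_n is short
        g[n - 1][n - 1] = 1
    elif kind == "C":  # alpha_n = 2 e_n is long
        g[n - 1][n - 1] = 4
        g[n - 2][n - 1] = g[n - 1][n - 2] = -2
    return g


def positive_roots(g):
    """Positive roots as coefficient tuples w.r.t. the simple roots (root-string algorithm)."""
    n = len(g)
    layer = [tuple(int(k == i) for k in range(n)) for i in range(n)]
    roots = set(layer)
    while layer:  # `layer` holds all positive roots of one height
        new_layer = []
        for beta in layer:
            for j in range(n):
                # Cartan integer <beta, alpha_j^vee> = 2 (beta, alpha_j) / (alpha_j, alpha_j)
                pairing = 2 * sum(beta[i] * g[i][j] for i in range(n)) // g[j][j]
                # p = how many times alpha_j can be subtracted from beta inside Phi
                p, down = 0, list(beta)
                down[j] -= 1
                while tuple(down) in roots:
                    p += 1
                    down[j] -= 1
                up = beta[:j] + (beta[j] + 1,) + beta[j + 1:]
                # beta + alpha_j is a root iff q = p - <beta, alpha_j^vee> > 0
                if p - pairing > 0 and up not in roots:
                    roots.add(up)
                    new_layer.append(up)
        layer = new_layer
    return roots


def exponents(roots):
    """m_1 <= ... <= m_l, using #{positive roots of height k} = #{i : m_i >= k}."""
    counts = Counter(sum(r) for r in roots)
    return [k for k in range(1, max(counts) + 1) for _ in range(counts[k] - counts[k + 1])]


def rank(rows):
    """Rank over Q by exact Gaussian elimination."""
    mat = [[Fraction(x) for x in row] for row in rows]
    rk = 0
    for col in range(len(mat[0])):
        piv = next((r for r in range(rk, len(mat)) if mat[r][col] != 0), None)
        if piv is None:
            continue
        mat[rk], mat[piv] = mat[piv], mat[rk]
        for r in range(len(mat)):
            if r != rk and mat[r][col] != 0:
                f = mat[r][col] / mat[rk][col]
                mat[r] = [a - f * b for a, b in zip(mat[r], mat[rk])]
        rk += 1
    return rk


def equal_height_pairs_are_a1_squared(kind, n):
    """Check Lemma 4.29 for one type: equal heights r >= m_l // 2 + 1 give A_1^2 pairs."""
    roots = positive_roots(gram_matrix(kind, n))
    threshold = exponents(roots)[-1] // 2 + 1
    for g1, g2 in combinations(roots, 2):
        if sum(g1) != sum(g2) or sum(g1) < threshold:
            continue
        # positive roots in span{g1, g2}; type A_1^2 means exactly g1 and g2
        inside = {b for b in roots if rank([g1, g2, b]) == 2}
        if inside != {g1, g2}:
            return False
    return True


for kind, n in [("A", 5), ("B", 4), ("C", 4), ("D", 5), ("E", 6), ("F", 4)]:
    print(f"{kind}{n}:", equal_height_pairs_are_a1_squared(kind, n))
```

Only positive roots are tested, since \(\Phi \cap \mathrm {span}\{\gamma _1,\gamma _2\}\) is symmetric under \(\beta \mapsto -\beta \).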

Theorem 4.30

If \(\gamma _1,\gamma _2\in \Theta ^{(m_{\ell -1})}\) with \(\gamma _1\ne \gamma _2\), then for each \(i \in \{1,2\}\)

Proof

The formula holds true trivially for \(\ell =3\), and we use the classification of irreducible root systems for \(\ell =4\). Assume that \(\ell \ge 5\). Then, by Corollary 4.20, there are exactly two roots of height \(m_{\ell -1}\). The rest follows from [17, Theorem 4.3]. \(\square \)
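The two facts about the top heights used in this section can be spot-checked on examples. These are Corollary 4.20 (for \(\ell \ge 5\) there are exactly two roots of height \(m_{\ell -1}\), and \(m_{\ell -1}\) occurs only once among the exponents) and Proposition 4.17 (\(\Theta ^{(m_{\ell -1})}\) contains a long root). The Python sketch below is illustrative only and repeats hypothetical root-generation helpers so that it runs on its own. As before, it assumes that \(\Theta ^{(r)}\) is the set of positive roots of height r. For \(D_4\) it finds three roots of height \(m_3=3\), which illustrates why the hypothesis \(\ell \ge 5\) is needed.

```python
from collections import Counter


def gram_matrix(kind, n):
    """A positive multiple of the Gram matrix ((alpha_i, alpha_j)), Bourbaki labelling."""
    if kind == "F":  # F4: alpha_1, alpha_2 long; alpha_3, alpha_4 short
        return [[4, -2, 0, 0], [-2, 4, -2, 0], [0, -2, 2, -1], [0, 0, -1, 2]]
    if kind == "E":  # E6, E7, E8: node 2 is attached to node 4
        edges = [(0, 2), (1, 3), (2, 3), (3, 4)] + [(k, k + 1) for k in range(4, n - 1)]
    elif kind == "D":
        edges = [(k, k + 1) for k in range(n - 2)] + [(n - 3, n - 1)]
    else:  # A, B, C: the Dynkin diagram is a path
        edges = [(k, k + 1) for k in range(n - 1)]
    g = [[2 if i == j else 0 for j in range(n)] for i in range(n)]
    for i, j in edges:
        g[i][j] = g[j][i] = -1
    if kind == "B":  # alpha_n = e_n is short
        g[n - 1][n - 1] = 1
    elif kind == "C":  # alpha_n = 2 e_n is long
        g[n - 1][n - 1] = 4
        g[n - 2][n - 1] = g[n - 1][n - 2] = -2
    return g


def positive_roots(g):
    """Positive roots in simple-root coordinates (root-string algorithm)."""
    n = len(g)
    layer = [tuple(int(k == i) for k in range(n)) for i in range(n)]
    roots = set(layer)
    while layer:
        new_layer = []
        for beta in layer:
            for j in range(n):
                pairing = 2 * sum(beta[i] * g[i][j] for i in range(n)) // g[j][j]
                p, down = 0, list(beta)
                down[j] -= 1
                while tuple(down) in roots:  # walk down the alpha_j-string through beta
                    p += 1
                    down[j] -= 1
                up = beta[:j] + (beta[j] + 1,) + beta[j + 1:]
                if p - pairing > 0 and up not in roots:  # q > 0: beta + alpha_j is a root
                    roots.add(up)
                    new_layer.append(up)
        layer = new_layer
    return roots


def exponents(roots):
    """m_1 <= ... <= m_l from the numbers of positive roots of each height."""
    counts = Counter(sum(r) for r in roots)
    return [k for k in range(1, max(counts) + 1) for _ in range(counts[k] - counts[k + 1])]


def top_height_data(kind, n):
    """Return (exponents, |Theta^(m_{l-1})|, whether Theta^(m_{l-1}) has a long root)."""
    g = gram_matrix(kind, n)
    roots = positive_roots(g)
    m = exponents(roots)
    # Assumption: Theta^(r) is the set of positive roots of height r
    level = [r for r in roots if sum(r) == m[-2]]

    def norm(r):  # (r, r), up to the common scaling of g
        return sum(r[i] * g[i][j] * r[j] for i in range(n) for j in range(n))

    longest = max(norm(r) for r in roots)
    return m, len(level), any(norm(r) == longest for r in level)


for kind, n in [("B", 5), ("C", 5), ("D", 4), ("D", 6), ("E", 8)]:
    m, size, has_long = top_height_data(kind, n)
    print(f"{kind}{n}: m = {m}, |Theta| = {size}, long root present: {has_long}")
```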

The second key ingredient is Proposition 4.31. In general, the \(A_1^2\) sets described in Theorem 4.30 do not generate all W-orbits of \(A_1^2\) sets (cf. Remark 3.5). Notice, however, that Theorem 4.28 concerns only non-RO \(A_1^2\) sets. Although Theorem 4.30 alone is not enough to prove Theorem 4.28, it settles the problem whenever the non-RO \(A_1^2\) set in question lies in the same W-orbit as a pair of roots of height \(m_{\ell -1}\).

Proposition 4.31

If there exists an \(A_1^2\) set that is not RO, then the set \(\mathcal {S}: = \{ \{\gamma _1,\gamma _2\}\subseteq \Theta ^{(m_{\ell -1})} \mid \text{ at } \text{ least } \text{ one } \text{ of } \gamma _1,\gamma _2\hbox { is a long root}\}\) contains a non-RO set.

Proof

Note that \(\mathcal {S}\ne \emptyset \) since \(\Theta ^{(m_{\ell -1})}\) always contains a long root (Proposition 4.17). The case \(\ell = 3\) is checked directly using the classification. Assume that \(\ell \ge 4\), and suppose to the contrary that every element of \(\mathcal {S}\) is RO. Take \(\{\gamma _1,\gamma _2\} \in \mathcal {S}\) with \(\gamma _1\) long. By Theorems 4.8 and 4.30, we have \(m_{\ell -1}=\mathrm{h}/2\). By Lemma 4.21, \(\ell =4\). By Corollary 4.25, \(\Phi =D_4\), and all \(A_1^2\) sets must be RO. This contradicts the hypothesis of the proposition. \(\square \)

Of course, if \(\ell \ge 5\), then Corollary 4.20 implies that \(\mathcal {S}\) contains only one element. However, the present statement suffices for our purposes.

The third (and final) key ingredient is Corollary 4.32. From the preceding discussion, we will be in a situation where there are two non-RO \(A_1^2\) sets that may lie in different W-orbits, so we need a relation between them.

Corollary 4.32

Assume that \(\ell \ge 4\). If there are two \(A_1^2\) sets \(\{\theta , \lambda _1\}\), \(\{\theta , \lambda _2\}\) that are both non-RO, then both \(\lambda _1\) and \(\lambda _2\) lie in the unique irreducible component \(\Omega \) of \(\theta ^\perp \) with \(\mathrm {rank}(\Omega )\ge 2\).

Proof

The statement is trivial if \(\theta ^\perp \) is irreducible. If \(\theta ^\perp \) is reducible, then the statement follows from Propositions 4.26 and 4.27. \(\square \)

We need one more simple lemma.

Lemma 4.33

If \(\{\beta _1, \beta _2\} \subseteq \Phi ^+\) contains a long root and \((\beta _1, \beta _2)=0\), then \(\{\beta _1, \beta _2\}\) lies in the same W-orbit as \(\{\theta , \mu \}\) for some \(\mu \in \Delta \).

Proof

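This is well known. We may assume that \(\beta _1\) is long. Since W acts transitively on the long roots of \(\Phi \), there exists \(u \in W\) with \(u(\beta _1)=\theta \). Then \(\gamma :=u(\beta _2)\) is orthogonal to \(\theta \), so \(\gamma \) lies in some irreducible component \(\Psi \subseteq \theta ^\perp \), and \(\{\beta _1, \beta _2\}\) lies in the same W-orbit as \(\{\theta , \gamma \}\). There exist \(\mu \in \Delta \cap \Psi \) and \(w \in W(\Psi )\) such that \(\gamma = w(\mu )\). Moreover, this w fixes \(\theta \), i.e., \( \theta = w( \theta )\), since \(W(\Psi )\) is generated by reflections in roots orthogonal to \(\theta \). Thus, \(\{\beta _1, \beta _2\}\) lies in the same W-orbit as \(\{\theta ,\mu \}\). \(\square \)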

Now, we are ready to prove Theorem 4.28.

Proof of Theorem 4.28

It suffices to prove the theorem when the \(A_1^2\) set \(\{\beta _1, \beta _2\}\) contains a long root; otherwise, we pass to the dual root system \(\Phi ^\vee \), in which short roots become long roots. By Lemma 4.33, \(\{\beta _1, \beta _2\}\) lies in the same W-orbit as \(\{\theta , \lambda _1\}\) for some \(\lambda _1 \in \Delta \). We may also assume that \(\ell \ge 4\), since the case \(\ell =3\) is treated in Remark 3.14(ii). By Remark 3.14(i), proving Theorem 4.28 is equivalent to showing that \(\mathcal {K}_\theta (\lambda _1)=m_{\ell -1}-\mathrm{h}/2\) (with the notation of Proposition 3.11).

By Proposition 4.31, we can find a non-RO set \(\{\gamma _1,\gamma _2\}\subseteq \Theta ^{(m_{\ell -1})}\) in which \(\gamma _1\) is a long root. Again by Lemma 4.33, \(\{\gamma _1,\gamma _2\}\) lies in the same W-orbit as \(\{\theta , \lambda _2\}\) for some \(\lambda _2 \in \Delta \). By Proposition 3.3(i), \(\{\theta , \lambda _1\}\) and \(\{\theta , \lambda _2\}\) are both \(A_1^2\) and non-RO. Corollary 4.32 implies that \(\lambda _1\) and \(\lambda _2\) lie in the unique irreducible component \(\Omega \) of \(\theta ^\perp \) with \(\mathrm {rank}(\Omega )\ge 2\). By Theorem 4.30, Theorem 4.28 already holds for \(\{\theta , \lambda _2\}\), i.e., \(\mathcal {K}_\theta (\lambda _2)=m_{\ell -1}-\mathrm{h}/2\). Hence, it remains to prove that

\[\mathcal {K}_\theta (\lambda _1)=\mathcal {K}_\theta (\lambda _2). \qquad (1)\]

If \(\Vert \lambda _1\Vert =\Vert \lambda _2\Vert \), then \(\lambda _1\) and \(\lambda _2\) are conjugate under \(W(\Omega )\), which fixes \(\theta \). Hence, \(\{\theta , \lambda _1\}\) and \(\{\theta , \lambda _2\}\) lie in the same W-orbit, and Formula (1) follows. Now, suppose that \(\Vert \lambda _1\Vert \ne \Vert \lambda _2\Vert \). Since at most two root lengths occur in \(\Omega \), they are \(\Vert \lambda _1\Vert \) and \(\Vert \lambda _2\Vert \). Then, the fact that \(\{\theta , \lambda _1\}\) and \(\{\theta , \lambda _2\}\) are both \(A_1^2\) implies that \(\{\theta , \beta \}\) is \(A_1^2\) for all \(\beta \in \Omega \). For \(\beta \in \Omega \), with the notation of Definition 3.6 applied to the \(A_1^2\) set \(\{\theta , \beta \}\), we have

Indeed, if \(\Lambda \subseteq \theta ^\perp \) and \(\Lambda \) is irreducible, then \(\Lambda \subseteq \Omega \). We further make the following definition

Using Proposition 3.11 and Theorem 2.11(vi), we compute

We claim that \(\mathcal {M}'_\theta (\beta )= \emptyset \) for every \(\beta \in \Omega \). Suppose not and let \(\Lambda \in \mathcal {M}'_\theta (\beta )\). Note that \(\Gamma :=\Phi \cap \text{ span }(\{\theta \} \cup \Lambda )\) admits \(\theta \) as the highest root in its positive system \(\Phi ^+\cap \text{ span }(\{\theta \} \cup \Lambda )\). By a direct check on all rank-3 irreducible root systems and using the fact that \(\{\theta , \beta \}\) is \(A_1^2\) for all \(\beta \in \Lambda \subseteq \Gamma \cap \Omega \), we obtain a contradiction. Thus, the claim is proved and we have \(\mathcal {M}'_\theta (\lambda _1)=\mathcal {M}'_\theta (\lambda _2)= \emptyset \). By the computation above, \(\mathcal {K}_\theta (\lambda _1)=\mathcal {K}_\theta (\lambda _2)=\mathrm{h}(\Omega )/2- 1\). This completes the proof. \(\square \)

We close Sect. 4 with two corollaries.

Corollary 4.34

(Local–global inequalities) Assume that a set \(\{ \beta _1, \beta _2\}\subseteq \Phi \) is \(A_1^2\). Recall the notation \(\mathcal {N}_0=\mathcal {N}_0(\{ \beta _1,\beta _2\})\) in Definition 3.6. For each \(\Psi \in \mathcal {N}_0\), denote by \(c_{\max }(\Psi )\) the largest coefficient of the highest root of the subsystem \(\Psi \). Then,

(a) \(\sum _{\Psi \in \mathcal {N}_0}\left( m_2(\Psi )-1 \right) \ge m_2-1,\)

(b) \(\sum _{\Psi \in \mathcal {N}_0}c_{\max }(\Psi )\ge c_{\max }\).

The equality in (a) [resp., (b)] occurs if and only if either (i) \(\ell \le 4\), or (ii) \(\ell \ge 5\) and \(\{ \beta _1, \beta _2\}\) is not RO.

Proof

Since \(\ell \ge 3\), \(m_2 = c_{\max }+1\) by Theorem 4.14 and Corollary 4.16. Thus, (a) and (b) are essentially equivalent. The left-hand sides of these inequalities are equal to \(\mathcal {K}_0\) by Remark 3.14. By Theorem 4.6 and Remark 3.14,

Thus, the inequalities follow. If \(\ell =3\), the equalities always occur since \(\mathrm{h}/2=m_2\). If \(\ell = 4\), we need only consider the case where \(\{ \beta _1, \beta _2\}\) is both \(A_1^2\) and RO. This condition forces \(\Phi =D_4\) by Corollary 4.25, and again \(\mathrm{h}/2=m_2\). So, the equalities always occur if \(\ell \le 4\). If \(\ell \ge 5\), then \(\mathrm{h}/2>m_2\) by Lemma 4.21. Thus, the equalities occur if and only if \(\{ \beta _1, \beta _2\}\) is not RO. \(\square \)
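The numerical inputs of this proof can be checked on examples: \(m_2=c_{\max }+1\) for \(\ell \ge 3\), \(\mathrm{h}/2=m_2\) for \(\ell =3\) and for \(D_4\), and \(\mathrm{h}/2>m_2\) for \(\ell \ge 5\). The Python sketch below is a minimal illustration, not the argument of the paper. It generates positive roots from a multiple of the Gram matrix of the simple roots (Bourbaki labelling) by the root-string algorithm, takes the highest root to be the unique root of maximal height, and uses \(\mathrm{h}=m_\ell +1\). The function names are hypothetical.

```python
from collections import Counter


def gram_matrix(kind, n):
    """A positive multiple of the Gram matrix ((alpha_i, alpha_j)), Bourbaki labelling."""
    if kind == "F":  # F4: alpha_1, alpha_2 long; alpha_3, alpha_4 short
        return [[4, -2, 0, 0], [-2, 4, -2, 0], [0, -2, 2, -1], [0, 0, -1, 2]]
    if kind == "E":  # E6, E7, E8: node 2 is attached to node 4
        edges = [(0, 2), (1, 3), (2, 3), (3, 4)] + [(k, k + 1) for k in range(4, n - 1)]
    elif kind == "D":
        edges = [(k, k + 1) for k in range(n - 2)] + [(n - 3, n - 1)]
    else:  # A, B, C: the Dynkin diagram is a path
        edges = [(k, k + 1) for k in range(n - 1)]
    g = [[2 if i == j else 0 for j in range(n)] for i in range(n)]
    for i, j in edges:
        g[i][j] = g[j][i] = -1
    if kind == "B":  # alpha_n = e_n is short
        g[n - 1][n - 1] = 1
    elif kind == "C":  # alpha_n = 2 e_n is long
        g[n - 1][n - 1] = 4
        g[n - 2][n - 1] = g[n - 1][n - 2] = -2
    return g


def positive_roots(g):
    """Positive roots in simple-root coordinates (root-string algorithm)."""
    n = len(g)
    layer = [tuple(int(k == i) for k in range(n)) for i in range(n)]
    roots = set(layer)
    while layer:
        new_layer = []
        for beta in layer:
            for j in range(n):
                pairing = 2 * sum(beta[i] * g[i][j] for i in range(n)) // g[j][j]
                p, down = 0, list(beta)
                down[j] -= 1
                while tuple(down) in roots:  # walk down the alpha_j-string through beta
                    p += 1
                    down[j] -= 1
                up = beta[:j] + (beta[j] + 1,) + beta[j + 1:]
                if p - pairing > 0 and up not in roots:  # q > 0: beta + alpha_j is a root
                    roots.add(up)
                    new_layer.append(up)
        layer = new_layer
    return roots


def exponents(roots):
    """m_1 <= ... <= m_l from the numbers of positive roots of each height."""
    counts = Counter(sum(r) for r in roots)
    return [k for k in range(1, max(counts) + 1) for _ in range(counts[k] - counts[k + 1])]


def m2_cmax_h(kind, n):
    """Return (m_2, c_max, h), c_max being the largest coefficient of the highest root."""
    roots = positive_roots(gram_matrix(kind, n))
    m = exponents(roots)
    theta = max(roots, key=sum)  # the highest root is the unique root of maximal height
    return m[1], max(theta), m[-1] + 1  # h = m_l + 1


for kind, n in [("A", 3), ("B", 3), ("D", 4), ("F", 4), ("D", 5), ("E", 8)]:
    m2, cmax, h = m2_cmax_h(kind, n)
    print(f"{kind}{n}: m_2 = {m2}, c_max + 1 = {cmax + 1}, h/2 = {h / 2}")
```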

Corollary 4.35

Let \(\Omega \) be an irreducible component of \(\theta ^\perp \). If \(\Phi \) is simply-laced, then the Coxeter number of \(\Omega \) is given by

Proof

We only need to prove the second line. Note that by Lemma 4.23, there exists at most one irreducible component \(\Omega \) of \(\theta ^\perp \) satisfying \(\mathrm {rank}(\Omega )\ge 2\). For every \(\beta \in \Omega \), \(\{\theta , \beta \}\) is \(A_1^2\) since \(\Phi \) is simply laced. Moreover, \(\{\theta , \beta \}\) is not RO for rank reasons. With the notation of the proof of Theorem 4.28, \(\mathcal {M}'_\theta (\beta )=\emptyset \), so the computation there gives \(\mathcal {K}_\theta (\beta )=\mathrm{h}(\Omega )/2-1\), while Theorem 4.28 gives \(\mathcal {K}_\theta (\beta )=m_{\ell -1}-\mathrm{h}/2\). This completes the proof. \(\square \)
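For simply-laced \(\Phi \), comparing \(\mathcal {K}_\theta (\beta )=\mathrm{h}(\Omega )/2-1\) (from the proof of Theorem 4.28) with \(\mathcal {K}_\theta (\beta )=m_{\ell -1}-\mathrm{h}/2\) (Theorem 4.28 and Remark 3.14(i)) gives \(\mathrm{h}(\Omega )=2m_{\ell -1}-\mathrm{h}+2\) for the component \(\Omega \) of rank at least 2. The Python sketch below is our own illustration, with hypothetical function names, and tests this relation for several types. It computes \(\theta ^\perp \) directly and splits it into irreducible components, taken as the connected components of the non-orthogonality graph. It then uses \(\mathrm{h}(\Omega )=2|\Omega ^+|/\mathrm{rank}(\Omega )\), where the rank is counted as the number of simple roots of \(\Omega ^+\).

```python
from collections import Counter


def cartan_simply_laced(kind, n):
    """Cartan (= Gram) matrix of A_n, D_n or E_n in Bourbaki labelling."""
    if kind == "E":
        edges = [(0, 2), (1, 3), (2, 3), (3, 4)] + [(k, k + 1) for k in range(4, n - 1)]
    elif kind == "D":
        edges = [(k, k + 1) for k in range(n - 2)] + [(n - 3, n - 1)]
    else:  # A_n
        edges = [(k, k + 1) for k in range(n - 1)]
    g = [[2 if i == j else 0 for j in range(n)] for i in range(n)]
    for i, j in edges:
        g[i][j] = g[j][i] = -1
    return g


def positive_roots(g):
    """Positive roots in simple-root coordinates (root-string algorithm)."""
    n = len(g)
    layer = [tuple(int(k == i) for k in range(n)) for i in range(n)]
    roots = set(layer)
    while layer:
        new_layer = []
        for beta in layer:
            for j in range(n):
                pairing = sum(beta[i] * g[i][j] for i in range(n))  # simply laced: (beta, alpha_j)
                p, down = 0, list(beta)
                down[j] -= 1
                while tuple(down) in roots:  # walk down the alpha_j-string through beta
                    p += 1
                    down[j] -= 1
                up = beta[:j] + (beta[j] + 1,) + beta[j + 1:]
                if p - pairing > 0 and up not in roots:  # q > 0: beta + alpha_j is a root
                    roots.add(up)
                    new_layer.append(up)
        layer = new_layer
    return roots


def exponents(roots):
    """m_1 <= ... <= m_l from the numbers of positive roots of each height."""
    counts = Counter(sum(r) for r in roots)
    return [k for k in range(1, max(counts) + 1) for _ in range(counts[k] - counts[k + 1])]


def component_rank(comp):
    """Number of simple roots of an irreducible positive system: the members b
    such that b - c is not a member for any member c."""
    members = set(comp)
    return sum(1 for b in comp
               if not any(tuple(x - y for x, y in zip(b, c)) in members for c in comp))


def big_component_coxeter_number(kind, n):
    """Coxeter number of the irreducible component of theta^perp of rank >= 2."""
    g = cartan_simply_laced(kind, n)
    roots = positive_roots(g)

    def ip(x, y):  # W-invariant inner product in simple-root coordinates
        return sum(x[i] * g[i][j] * y[j] for i in range(n) for j in range(n))

    theta = max(roots, key=sum)
    perp = [b for b in roots if ip(b, theta) == 0]  # positive roots of theta^perp
    components, seen = [], set()
    for start in perp:  # connected components of "b and c are not orthogonal"
        if start in seen:
            continue
        comp, stack = [], [start]
        seen.add(start)
        while stack:
            b = stack.pop()
            comp.append(b)
            for c in perp:
                if c not in seen and ip(b, c) != 0:
                    seen.add(c)
                    stack.append(c)
        components.append(comp)
    big = [c for c in components if component_rank(c) >= 2]
    assert len(big) == 1  # at most one such component (Lemma 4.23); here exactly one
    return 2 * len(big[0]) // component_rank(big[0])  # h = 2 |positive roots| / rank
```

For \(E_8\), for instance, \(\theta ^\perp \) is of type \(E_7\), and both sides of the relation equal 18.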

5 Proof of Theorem 1.6

Theorem 1.6 follows from Theorem 5.1 and Example 5.2.

Theorem 5.1

Assume that \(X=H_1 \cap H_2\) is \(A_1^2\) but not RO. Let \(\{\varphi _{1}, \ldots , \varphi _\ell \}\) be a basis for \(D(\mathcal {A})\) with \(\deg {\varphi }_j=m_j\) (\(1 \le j \le \ell \)). Then, \(\{ {\varphi }^X_1, \ldots , {\varphi }^X_{\ell -2}\}\) is a basis for \(D(\mathcal {A}^X)\).

Proof

The statement is checked case by case when \(\ell \le 4\). If \(\ell \ge 5\), then \(m_{\ell -1}\) appears exactly once in \(\exp (\mathcal {A})\) (Corollary 4.20). By Remark 2.15, \(\mathcal {A}^{H_1}\) is free and \(\{ {\varphi }^{H_1}_1, \ldots , {\varphi }^{H_1}_{\ell -1}\}\) is a basis for \(D(\mathcal {A}^{H_1})\). By Theorem 4.6, \(|\mathcal {A}^{H_1}| - |\mathcal {A}^X|=m_{\ell -1}\). By the proof of Theorem 4.4, \(\mathcal {A}^{H_1} {\setminus } \{X\}\) is also free. Theorem 2.9 completes the proof. \(\square \)

Example 5.2

Assume that \(X\in L(\mathcal {A})\) is both \(A_1^2\) and RO. By Remark 3.5, \(\Phi =D_\ell \) with \(\ell \ge 3\) (\(D_3=A_3\)). Suppose that

where \(\{x_1,\ldots , x_\ell \}\) is an orthonormal basis for \(V^*\). Let \(H_1 = \ker (x_1+x_2)\), \(H_2 = \ker (x_1-x_2)\), and \(X=H_1 \cap H_2\). Then, again by Remark 3.5, X is \(A_1^2\) and RO. Define

Then, it is known that \(\tau _1,\ldots , \tau _{\ell -1},\eta \) form a basis for \(D(\mathcal {A})\). Let

Then, it is not hard to verify that \(\varphi \in D(\mathcal {A}{\setminus }\{H_1\})\), and thus \((x_1+x_2)\varphi \in D(\mathcal {A})\). Using Saito’s criterion, one can show that \(\tau _1,\ldots , \tau _{\ell -2},\eta , (x_1+x_2)\varphi \) also form a basis for \(D(\mathcal {A})\) and that \(\tau _1,\ldots , \tau _{\ell -2},\eta , \varphi \) form a basis for \(D(\mathcal {A}{\setminus }\{H_1\})\). Therefore, \(\{ \tau _1^{H_1}, \ldots , \tau _{\ell -2}^{H_1}, \eta ^{H_1}\}\) is a basis for \(D(\mathcal {A}^{H_1})\). This basis may contain two elements of the same degree; for example, \(\deg \eta = \deg \tau _{\ell /2}=\ell -1\) when \(\ell \) is even. However, it is easy to check that \(\eta ^X=0\) in every case. Hence, \(\{ \tau _1^X, \ldots , \tau _{\ell -2}^X\}\) is a basis for \(D(\mathcal {A}^X)\) and \(\exp (\mathcal {A}^X)=\{1,3, 5,\ldots , 2\ell -5\}\), as predicted by Theorem 2.9. This is also consistent with the fact that \(\mathcal {A}^X\) is exactly the Weyl arrangement of type \(B_{\ell -2}\).
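The cardinalities in this example can be confirmed combinatorially. The Python sketch below is our own sanity check, with hypothetical function names. It restricts the \(D_\ell \) arrangement to \(X=H_1\cap H_2=\{x_1=x_2=0\}\) and to \(H_1\), identifying linear forms up to nonzero scalars. It confirms that \(|\mathcal {A}^X|=(\ell -2)^2=1+3+\cdots +(2\ell -5)\), the sum of the exponents of \(B_{\ell -2}\). It also confirms that \(|\mathcal {A}^{H_1}|=\ell ^2-3\ell +3=m_1+\cdots +m_{\ell -1}\), since \(|\Phi ^+|=\ell (\ell -1)\) and \(m_\ell =2\ell -3\) for \(D_\ell \). The difference is therefore \(\ell -1\), which equals \(\mathrm{h}/2\) for \(D_\ell \).

```python
from itertools import combinations
from math import gcd


def normalize(v):
    """Primitive integer representative of the line spanned by v, or None if v = 0."""
    d = 0
    for c in v:
        d = gcd(d, c)
    if d == 0:
        return None
    w = [c // d for c in v]
    sign = 1 if next(c for c in w if c != 0) > 0 else -1
    return tuple(sign * c for c in w)


def d_arrangement(l):
    """Normal vectors of the D_l Weyl arrangement: x_i - x_j and x_i + x_j for i < j."""
    normals = []
    for i, j in combinations(range(l), 2):
        for s in (1, -1):
            v = [0] * l
            v[i], v[j] = 1, s
            normals.append(v)
    return normals


def restrict_to_X(normals):
    """X = {x_1 = x_2 = 0}: a form restricts to X by dropping its x_1 and x_2 terms."""
    return {w for w in (normalize(v[2:]) for v in normals) if w is not None}


def restrict_to_H1(normals):
    """H_1 = ker(x_1 + x_2), with coordinates (x_2, ..., x_l) and x_1 = -x_2."""
    return {w for w in (normalize([v[1] - v[0]] + v[2:]) for v in normals) if w is not None}


for l in range(4, 8):
    A = d_arrangement(l)
    print(l, len(restrict_to_H1(A)), len(restrict_to_X(A)))
```

Forms that vanish identically on the subspace (for example \(x_1+x_2\) on \(H_1\)) restrict to the zero vector and are discarded, as they do not define hyperplanes of the restriction.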

References

Abe, T., Barakat, M., Cuntz, M., Hoge, T., Terao, H.: The freeness of ideal subarrangements of Weyl arrangements. J. Eur. Math. Soc. 18, 1339–1348 (2016)

Abe, T.: Plus-one generated and next to free arrangements of hyperplanes. Int. Math. Res. Not. (2019). https://doi.org/10.1093/imrn/rnz099

Abe, T.: Addition-deletion theorem for free hyperplane arrangements and combinatorics. arXiv preprint (2018). arXiv:1811.03780

Abe, T.: Deletion theorem and combinatorics of hyperplane arrangements. Math. Ann. 373(1–2), 581–595 (2019)

Berman, S., Lee, Y.S., Moody, R.V.: The spectrum of a Coxeter transformation, affine Coxeter transformations, and the defect map. J. Algebra 121, 339–357 (1989)

Bourbaki, N.: Groupes et Algèbres de Lie. Chapitres 4, 5 et 6, Hermann, Paris (1968)

Carter, R.W.: Simple Groups of Lie Type. Wiley-Interscience, New York (1972)

Douglass, J.M.: The adjoint representation of a reductive group and hyperplane arrangements. Represent. Theory 3, 444–456 (1999)

Green, R.M.: Combinatorics of Minuscule Representations. Cambridge Tracts in Mathematics. Cambridge University Press, Cambridge (2013)