Abstract

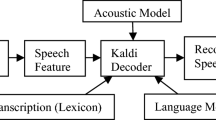

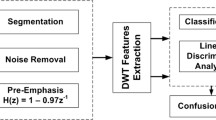

Automatic speech recognition (ASR) is a challenging task because speech input varies widely: the recording environment, the language spoken, and the speaker's emotion, age, and gender all affect the signal. The two main steps in ASR are converting the audio file into features and classifying those features appropriately. The basic unit of speech sound is the phoneme, and the list of phonemes is language dependent. In Indian languages, the basic unit of the language is the akshara, i.e., the alphabet, which is known to be an alphasyllabary unit. In this work, we analyze the behavior of two acoustic features, Mel-frequency cepstral coefficients (MFCC) and linear predictive coding (LPC), for various aksharas using visualization, the probability density function (pdf), the Q–Q plot, and the F-ratio. Two classifiers, the support vector machine (SVM) and the hidden Markov model (HMM), are used to classify the recorded audio into the corresponding aksharas, and their classification performance is compared.
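As an illustration of the F-ratio mentioned above, the following is a minimal sketch under one common definition: the variance of the per-class means divided by the average within-class variance of a single feature. The abstract does not state the exact formula used in the paper, so this definition, the function name `f_ratio`, and the toy values are assumptions made for illustration only.

```python
from statistics import mean, pvariance


def f_ratio(samples_by_class):
    """F-ratio of one scalar feature (assumed definition, not taken from the paper).

    samples_by_class maps a class label (e.g. an akshara) to a list of
    values of a single feature coefficient observed for that class.
    """
    groups = list(samples_by_class.values())
    # Between-class spread: variance of the class means.
    between = pvariance([mean(g) for g in groups])
    # Within-class spread: average of the per-class variances.
    within = mean([pvariance(g) for g in groups])
    return between / within


# Hypothetical toy values for one coefficient over two classes.
well_separated = {"a": [1.0, 2.0, 3.0], "i": [7.0, 8.0, 9.0]}
overlapping = {"a": [1.0, 2.0, 3.0], "i": [2.0, 3.0, 4.0]}

ratio_separated = f_ratio(well_separated)
ratio_overlapping = f_ratio(overlapping)
```

A larger value suggests that the feature separates the classes better relative to its spread within each class, which is why the ratio is often used to rank individual coefficients. The sketch divides by the within-class variance, so it assumes that at least one class has non-constant values.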

Cite this article

Hegde, S., Achary, K.K. & Shetty, S. Statistical analysis of features and classification of alphasyllabary sounds in Kannada language. Int J Speech Technol 18, 65–75 (2015). https://doi.org/10.1007/s10772-014-9250-8

DOI: https://doi.org/10.1007/s10772-014-9250-8