Abstract

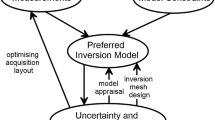

This review paper addresses the development of numerical modeling of electromagnetic fields in geophysics with a focus on recent finite element simulation. It discusses ways of estimating errors of our solutions for a perfectly matched modeling domain and the problems that arise from its insufficient representation. After a brief outline of early methods and modeling approaches, the paper mainly discusses the capabilities of the finite element method formulated on unstructured grids and the advantages of local h-refinement allowing for both a flexible and largely accurate representation of the geometries of the multi-scale geomaterial and an accurate evaluation of the underlying functions representing the physical fields. In summary, the accuracy of the solution depends on the geometric mapping, the choice of the mathematical model, and the spatial discretization. Although the available error estimators do not necessarily provide reliable error bounds for our complex geomodels, they are still useful to guide grid refinement. Therefore, an overview of the most common a posteriori error estimators is given. It will be shown that the sensitivity is the most important function in both guiding the geometric mapping and the local refinement.

Similar content being viewed by others

Article Highlights

-

Modern finite element simulations of electromagnetic fields are able to incorporate complex close-to-reality geometries in the geo-models

-

A posteriori error estimators provide efficient tools to guide the adaptive refinement of unstructured meshes

-

The sensitivity function governs both the level of geometric detailedness and goal-oriented mesh refinement

1 Introduction

The goal of electromagnetic (EM) modeling in geophysics is to find mathematical and physical models that represent as well as possible the reality of our perceived geo-environment and allow us to predict and understand the behavior of EM fields in space and time. As a matter of fact, these models are always an abstract and incomplete representation of this reality. They therefore contain a multitude of sources of error and are subject to a limited range of validity. Particularly, the geometry of our physical models in the Earth sciences is extremely challenging due to its multi-scale nature.

On a small scale, we may end up in microscopic, possibly self-similar material structures (Pape et al. 1982, 1998a, b; Weiss et al. 2020) where a variety of pore-scale and inner-surface electrochemical processes are usually not covered anymore by our chosen mathematical model. Electric conductivity can become complex-valued, frequency dependent and nonlinear. Moreover, texture or heterogeneity below a certain spatial scale can appear as anisotropy, leading to a matrix representation of electromagnetic parameters in the equations to be discretized (Weidelt 1999; Li and Pek 2008; Yan et al. 2016; Wang et al. 2018; Li et al. 2021). Much of this is subject to current petrophysical research, which gives us new insights into the fundamental properties of these parameters (e.g., Börner et al. 2013, 2017).

On medium and large scales - and this is actually the main focus of this article - there are sources of error associated with the representation of macroscopic geometry and its discretization. Whereas geometry modeling started with homogeneous and layered halfspaces with flat surface topography and only a handful of parameters in the first half of the twentieth century and up to the end of the 1960s (e.g., Wait 1953; Gosh 1971), we were able to approximate rough 2D and 3D geometries in the 1970s (Jones and Pascoe 1971; Schmucker 1971; Brewitt-Taylor and Weaver 1976; Dey and Morrison 1979) and 1980s (Scriba 1981; Oristaglio and Hohmann 1984) using rectangular building blocks to construct geometric models in a brick-like and conforming fashion mostly using finite difference (FD) methods. These are simple and easy to handle but sometimes also inefficient, particularly when it comes to adopting to the geometric idiosyncrasies of our geo-reality. Staircase-like structures associated with rectangular Cartesian grids tend to introduce artifacts into the model response once the configuration of sources and receivers generates sensitivity toward the artificial geometric features. Figure 1 shows an example for the DC resistivity case. Note, however, that this applies in general to EM methods, especially but not exclusively, if they apply point sources or receivers. Find another very illustrative example in fig. 7 of Li and Pek (2008) for the marine magnetotelluric method in 2D, where the rectangular discretization of a triangularly shaped sea mount leads to an erroneous oscillating response for the FD method.

Virtual DC resistivity experiment across a ramp-like topography (left-hand subplot) showing the influence of the insufficient geometric representation of the slope using tensor product grids. The yellow dots in the inset panel show apparent resistivities for a receiver located in the inner vertex of a stair, whereas the red dots indicate the response at the outer vertex. The blue line shows the response for an exact match of the topography using the finite element method with an unstructured grid. Note that the deviation of the response between inner and outer observation points vanishes with distance of the receiver to the ramp structure which is due to the decreasing sensitivity of the measurement configuration with respect to the artificial geometric features. For more details of the DC sensitivity patterns see Spitzer (1998)

Therefore, more elegant and accurate ways of representing geometry evolved during the last 20 years incorporating unstructured and non-conforming grids or, recently, even meshless approaches (Wittke and Tezkan 2014; Wittke 2017).

Before we start discretizing, however, we need to describe the geometry itself which is a major problem to tackle. We need tools to disassemble the computational domain into ‘water-tight’ subdomains with arbitrary shapes. These domains may encompass a large range of spatial scales. Boreholes, mining tunnels, bathymetry, or the topography of the Earth’s surface, for example, can all significantly influence the electromagnetic response within a specific frequency range or time interval at certain locations. This ultimately means that we have to deal with a multi-scale issue which requires an appropriate level of geometric detailedness due to the particular sensitivity pattern of the experiment carried out to account for the true physical response once a reliable mathematical model has been chosen. In other words, the geometry has to be mapped onto the model in great detail where the sensitivity of the experiment is high and can be less detailed in regions of low sensitivity. See Zehner et al. (2015) for a variety of approaches to geometry modeling and Börner et al. (2015a, 2015b) for a series of virtual electromagnetic experiments using one geometric model described by non-uniform rational B-splines (NURBS) for different EM methods.

In the following, we consider the case where the geometry is perfectly matched. Even in this case, however, there remain sources of error. Since there are no closed-form solutions of Maxwell’s equations with arbitrarily varying coefficients, discrete solutions inevitably lead to approximation errors, the magnitude of which is generally difficult to estimate even when the computational domain is perfectly geometrically consistent. FE error analyses, which began with the important work of Babuška and Rheinboldt (1978), nowadays provide us with a-priori and, even better and practically more relevant, a posteriori error estimation that give us certain means to estimate the accuracy of the computed solution or at least to bring the properties of the used discretization to a desired level (Sect. 3). Particularly, we will see that the mesh refinement is driven by goal-oriented error estimators that are basically also governed by the sensitivity function (Sect. 3.3.3).

Very instructive and worth reading reviews on EM modeling have been published by Avdeev (2005), Börner (2010), Everett (2012), Newman (2014), Pankratov and Kuvshinov (2016), and Ren and Kalscheuer (2020) focusing on different aspects of the development of electromagnetic modeling including uncertainty issues and computational efficiency. Here, I will focus on the numerical and computational side of modeling mainly during the last decade where finite element (FE) methods became the methods of choice for many research groups in geo-electromagnetics (geo-EM) due to their inherent flexibility and power to represent complex geometries, especially when they are formulated on unstructured grids.

Having appropriately solved the forward problem we then would need to address the geometry in the inverse problem by trying to find a meaningful parametrization for the inverse solution. This, however, goes beyond the scope of this article. The paper much more reviews some attempts to gain control over the error sources in the forward process, particularly with respect to error estimators defined within the theory of FE (Sect. 3). Before doing so, Sect. 2 gives a brief overview how the different discretization methods, mainly FD (Sect. 2.1) and FE (Sects. 2.2 and 2.2.1), have evolved in mathematical research over time. I will then quickly browse through the different types of FE (Sect. 2.2.2) used in our approaches to modeling vector electromagnetic fields and scalar potentials, and discuss the most important types of meshes underlying the discretization schemes with respect to their ability to represent arbitrary geometry. Section 2.2.3 reviews the work that has been done particularly in geo-EM where I have organized the subsection according to the chronological evolution of the simulation strategies starting with early nodal and edge elements on structured grids and moving on to their implementation on unstructured and non-conforming grids.

I will not address the inverse problem and efforts to quantify uncertainty in a Bayesian sense. Moreover, I will not discuss the idiosyncrasies of quasi-static, frequency or time domain methods and their particular applications. However, the methods discussed range from quasi-static to diffusive. The main focus is on the spatial part of those partial differential equations that are underlying our electromagnetic problems. Particularly, I will address discretization schemes of the partial differential operators of the divergence and the curl that lead to their numerical representations. I will not address specific time integration approaches (e.g., Druskin and Knizhnerman 1988, 1994; Commer 2003; Commer and Newman 2004; Börner et al. 2008, 2015c; Zimmerling et al. 2018), integral equation techniques (e.g., Raiche 1974; Weidelt 1975; Hohmann 1975; Zhdanov 1988; Wannamaker 1991; Xiong and Kirsch 1992; Avdeev et al. 2002; Pankratov and Kuvshinov 2016; Chen et al. 2021), and will rather briefly touch finite difference methods.

The emphasis of this review is on the FE technique in its different variants and its solid mathematical background that leads to the attempt of quantifying errors in the solution which, in turn, drive the adaptive mesh refinement. Consequently, Sect. 3 starts with some remarks on a-priori error estimation in FE. After a brief historical outline of the evolution of error estimation in Sect. 3.1, Sect. 3.2 presents the basics of the variational problem including its weak formulation by means of a simple model problem, which is used to introduce three different popular a posteriori error estimators in Sect. 3.3: the residual-based (Sect. 3.3.1), the recovery-based (Sect. 3.3.2), and the goal-oriented error estimators (Sect. 3.3.3). The insights from Sects. 3.2 and 3.3 are subsequently transferred from the scalar model problem to geo-EM problems using a typical vectorial frequency-domain secondary field approach in the form of the curl–curl equation for the electric field as an example in Sect. 3.4. Sections 3.4.1 to 3.4.3 summarize the main work done in the geo-EM community for the different types of error estimation, each in a chronological order with illustrative example figures.

There are mainly three types of refinement that are discussed in the literature: h-, p- and r-refinement. h-refinement stands for the increase of the spatial resolution of the grid by decreasing the size of the grid cells. Cells are earmarked if the estimated local error of the solution exceeds a certain value relative to the largest estimated error and subsequently refined in a way that is briefly outlined in Sect. 4. p-refinement denotes the increase of the order p of the basis functions. Finally, r-refinement is very similar to h-refinement and rearranges a given number of grid nodes for a given order p of the basis functions. The latter is rather uncommon in geo-EM research and the exclusive p-refinement is also quite rarely used. It leads to the class of spectral FE methods (e.g., Zhu et al. 2020; Weiss et al. 2022), which will not be discussed further here. In the practice of geo-EM applications, h-refinement with moderate order basis functions (i.e., linear to second or third order) has become essentially the prevailing method, with quadratic basis functions as the best compromise between accuracy and numerical effort (Schwarzbach et al. 2011; Grayver and Kolev 2015). Examples of joint h- and p-refinement are described by Pardo et al. (2006) for the 2D simulation of EM logging-while-drilling measurements on structured grids and by Pardo et al. (2011) in the context of marine 2.5D CSEM simulation.

Section 4 closes with some thoughts on alternative strategies of representing spatial structures and an appendix gives some more details on the energy norm and the derivation of the variational problem.

2 Discretization Techniques and Meshes

This section is dedicated to the discretization methods and their development over time. Thereby, I restrict myself as far as possible to FE methods. Due to the practical relevance, I briefly browse through FD techniques and the closely related finite volume (FV) methods. Concerning the grids I will address structured (rectangular, Cartesian, tetrahedral, hexahedral), unstructured (tetrahedral) and non-conforming grids (quad- and octrees). In general, all methods can be formulated on all grids. Nevertheless, e.g., FD methods are mainly associated with structured rectangular (2D) or hexahedral (3D) grids with only a few exceptions [e.g., triangular grids for DC resistivity by Erdogan et al. (2008), Demirci et al. (2012) and Penz et al. (2013) going back to Liszka and Orkisz (1980)]. FE methods were formulated on both structured and unstructured grids. However, they develop their full strength only on unstructured grids due to the geometric flexibility of the mesh, the solid theoretical foundation and the resulting elaborated error analysis.

This section is divided into a brief outline of FD methods in geo-EM (Sect. 2.1) followed by a more comprehensive discussion of the FE technique and its application to geo-EM research (Sect. 2.2).

2.1 Finite Differences and Finite Volumes

In FD, a very early reference goes back to a work done by the German-American mathematician Richard Courant (1888–1972) in Göttingen in which he presented basic FD formulations (Courant et al. 1928). Even earlier Lewis Fry Richardson (1881–1953), a British meteorologist and peace researcher, came up with FD formulations in meteorology (Richardson 1922) but was not successful right away due to the limited computational facilities (i.e., performing calculations using paper and pencil) and the resulting inaccurate results in predicting the weather for a day. This alleged failure was interpreted by his peer group as the impossibility of calculating weather forecasts. A major breakthrough was achieved only decades later when moderate electronic computational facilities became available. Yee (1966) introduced a staggered grid cell to account for the different locations at which the electric and magnetic fields are calculated when a curl operator is discretized (Fig. 2).

The so-called Yee cell has been the blueprint for generations of FD modelers in geo-EM for discretizing the curl operator on a staggered grid (after Yee 1966)

Also in geo-EM, FD has a long history and important developments were made from the beginning of the 1970s (see the references in Sect. 1). In the 1990s, computing facilities became more powerful and iterative equation solvers like the preconditioned conjugate gradient method were able to solve large systems of equations with moderate memory requirements and reasonable run times (Mackie et al. 1993; Wang and Hohmann 1993; Druskin and Knizhnerman 1994; Spitzer 1995; Newman and Alumbaugh 1995; Smith 1996b; Newman and Alumbaugh 1997; Spitzer and Wurmstich 1999). In the end, millions of rectangular building blocks were used to construct geometric models in a brick-like and conforming fashion. FD methods are still widely used today for practical data interpretation (Xiong et al. 2000; Sasaki 2001; Siripunvaraporn et al. 2002; Davydycheva et al. 2003; Sasaki 2004; Streich 2009; Streich and Becken 2011; Egbert and Kelbert 2012; Grayver et al. 2013). They are implemented in forward and/or inverse modeling schemes and compete with upcoming FE techniques. The problem of incorporating topography using FD is usually solved by either applying appropriate boundary conditions at the staircase-shaped earth’s surface or assigning high resistivities to the air cells. Resolution of both geometry and grid is usually controlled only by the modeler’s experience. Systematic studies on the grid quality are rare (see, e.g., Ingerman et al. 2000), and a rigorous investigation of the influence of the stair-like topography is still missing.

Using a singularity removal procedure according to Lowry et al. (1989), more geometric flexibility on structured grids can be achieved by detaching the source positions from the grid nodes (Spitzer et al. 1999; Spitzer and Chouteau 1997, 2003). Singh and Dehiya (2023) use a coarse mesh to calculate boundary conditions for a smaller fine mesh to improve the condition number of the system matrix.

In addition to these well-known FD techniques used in EM, relatively few FV approaches were reported, some on structured rectangular grids (Haber et al. 2000; Haber and Ascher 2001; Mulder 2006; Plessix et al. 2007) and others on more flexible meshes like quad-/octrees or unstructured tetrahedral meshes. The latter allow for local refinement and will be discussed in more detail later in the context of FE formulations. Haber et al. (2007) presented FV approaches using octrees for forward and inverse problems in EM. Parallel inversion of large-scale airborne time-domain EM data on multiple octree meshes for FV or FE were outlined by Haber and Schwarzbach (2014). FV implementations on 3D unstructured tetrahedral/Voronoi grids for a total-field A-phi Coulomb-gauged formulation (i.e., the magnetic vector potential \(\varvec{A}\) and the scalar electric potential \(\phi\)) were made by Jahandari and Farquharson (2015).

2.2 Finite Elements

In this section, FE techniques will be illuminated in more detail. For this purpose, we look at the historic development of FE techniques at first and briefly sketch the underlying concepts of variational problems leading to the weak formulation of the problem where equalities are typically defined in the sense of inner products with test functions. Because it usually takes a while for mathematical innovations to find their way into the fields of application I have included the use of FE in geo-EM in a separate Sect. 2.2.3.

2.2.1 A Short Historical Overview of the Development of the Finite Element Method

The variational problem dates back to 1696, when Johann Bernoulli (1644–1748) formulated the so-called brachistochrone problem, in which a curve AMB was sought that gives the shortest possible time for a body to slide from A to B under gravity, where A and B are two fixed points on a vertical plane. In 1744, Euler formulated a general solution to the variational problem in the form of a differential equation that was a decade later justified by Joseph Louis de Lagrange (1736–1813). The origins of the FE method date back to the early nineteenth century when Karl Heinrich Schellbach (1805–1892), a German mathematician, solved a minimum surface area problem with some steps typical for FE (Schellbach 1851). Later, in the early twentieth century, Walter Ritz (1878–1909), a Swiss mathematician and physicist in Göttingen, minimized a quadratic functional in a finite-dimensional functional space (Ritz 1909). Whereas Ritz used eigen functions, Boris Grigoryevich Galerkin (1871–1945) and Ivan Grigoryevich Bubnov (1872–1919), two Russian mathematicians and engineers, later used polynomials or trigonometric functions to solve engineering problems. Their method is called the method of weighted residuals, Galerkin or Galerkin-Bubnov method (Galerkin 1915). Again, Richard Courant used ansatz functions with small support (hat functions) for the first time in the mid-twentieth century (Courant 1943). From the 1950s onward, the engineering community discovered FE techniques. In mechanical engineering, solids were disassembled into a finite number of elements and the displacements were calculated under given loads in the nodes of the FE. First practical applications came up in the aerospace and automotive industry (Turner et al. 1956; Clough 1960). In the 1960s, there was support coming from the Math community and very important monographs by Zienkiewicz and Cheung (1967) and Strang and Fix (1973) appeared. Furthermore, the computer program NASTRAN, that was originally developed for NASA, was commercialized by the MacNeal-Schwendler Corporation at the end of the 1960s. The method was extended to vector functions that are particularly important for the solution of electromagnetic problems by Raviart and Thomas (1977) and Nédélec (1980). Very good and comprehensive text books on the FE technique for Maxwell’s equations were published by Jin (2002) and Monk (2003). An excellent historical review is given by Gander and Wanner (2012).

While initially FE methods in geo-EM were formulated on structured grids just like FD methods, they were later mostly used in conjunction with unstructured triangular (2D) or tetrahedral (3D) meshes which develop their strengths mainly with respect to the flexible adaptation to arbitrary geometries. Since nodal or Lagrange elements enforce continuity of the scalar function over element boundaries, they were only applicable to those cases where components of the electromagnetic fields or potentials behave in such a way. As we know from EM theory, some components like the normal component of the electric field \(\varvec{E}\) or the tangential component of the magnetic field \(\varvec{H}\) are discontinuous at parameter jumps. In this case, the simulation with Lagrange elements becomes erroneous, which was not remedied until the introduction of vector FE in the late 1970s/early 1980s (Raviart and Thomas 1977; Nédélec 1980).

2.2.2 Types of Finite Elements

In the following, I will introduce several types of FE, but will discuss only three of them in more detail because of their particular importance to EM modeling. These are Lagrangian, Nédélec, and Raviart–Thomas FE. In Table 1, there is a compilation of various FE types including the type of basis functions they are associated with (scalar, vector, matrix).

Lagrange elements (Fig. 3, left-hand subplot) are chosen if a piecewise smooth scalar function v as, e.g., in the case of the scalar electric potential is considered that lives in the Sobolev space \({\mathcal{H}}^{1}(\Omega ):= \left\{ v\in L^{2} (\Omega ):\nabla v\in [L^{2}(\Omega )]^{3}\right\}\) with \(L^{2}(\Omega ):= \left\{ v:\Omega \rightarrow {\mathbb{C}}^{3}:\int _{\Omega } |v|^{2} dx < \infty \right\}\), i.e., v is square-integrable and its gradient exists. Lagrange elements are also called nodal elements because their degrees of freedom (dof) live at the nodes of the mesh. They enforce continuity of v across element boundaries. Using 2D triangular meshes and linear basis functions (\(p=1\)), three dof are associated with a Lagrange element (\(n=3\)). Increasing the order of the basis functions to \(p=2\) (quadratic), the number of dof n rises to 6 where the additional dof are associated with the center of each edge. In case of three dimensions and tetrahedral meshes, \(n=4\) for \(p=1\) and \(n=10\) for \(p=2\).

The most important element type for EM modeling in 3D is the Nédélec FE (Nédélec 1980) (Fig. 3, right-hand subplot). For a piecewise polynomial to be \({\mathcal{H}} (\text{curl})\)-conforming, the tangential component of \({{\varvec{v}}}\) must be continuous. \({{\varvec{v}}}\in {\mathcal{H}} (\text{curl};\Omega ):= \left\{ {\varvec{v}}\in [L^{2} (\Omega )]^{3}:\nabla \times {\varvec{v}}\in [L^{2} (\Omega )]^{3}\right\}\), i.e., \({\varvec{v}}\) is square-integrable and its curl exists. In case of \(p=1\), they are also called edge elements because their vectorial basis functions \({\varvec{v}}\) are situated along the edges of the elements. Consequently, \(n=3\) in 2D and \(n=6\) in 3D. For \(p=2\), additional dof are associated with the element face centers giving \(n=8\) in 2D and \(n=20\) in 3D.

The third and final type of element introduced here is the mixed FE according to Raviart and Thomas (1977) (Fig. 3, center subplot). It guarantees the normal component of \({\varvec{v}}\) to be continuous and is mainly used for fluid flow and transport modeling. In geo-EM, it is important in conjunction with the regularization of the inverse problem (Schwarzbach and Haber 2013; Blechta and Ernst 2022). The corresponding Sobolev space is \({\mathcal{H}} ({\text{div}};\Omega ):= \left\{ {\varvec{v}}\in [L^{2} (\Omega )]^{3}:\nabla \cdot {\varvec{v}}\in L^{2} (\Omega )\right\}\), i.e., \({\varvec{v}}\) is square-integrable and its divergence exists.

2.2.3 Finite Elements in Geo-electromagnetics

In this section, I will review the course that FE modeling has taken in geo-EM starting with nodal elements on structured grids in the 1970s, edge elements on structured grids in the 1990s, nodal elements on unstructured grids in the 2000s and Nédélec elements of different order on unstructured grids from the end of the 2000s until today. Note that all approaches presented in this section are not subject to any kind of quantitative error estimation and use meshes defined by the modeler. Yet, these meshes show regions of refinement usually around sources and/or receivers and/or at specific geometric features. But these refined regions are identified through the experience of the modeler in conjunction with a mesh generator that serves for mesh quality (see Sect. 3.4.4). It is not until Sect. 3 that I introduce different kinds of error estimation and present work that employs these techniques for the adaptive refinement of grids.

2.2.3.1 Structured Grids and Nodal Elements

There were a number of early works based on structured rectangular/triangular/tetrahedral grids using nodal FE. As early as 1971, Coggon (1971) published a secondary field 2D EM/DC/IP approach for a line source on a small structured triangular grid (Fig. 4). Reddy et al. (1977) reported the application of FE to the 3D MT and magnetovariational (MV) methods and already recognized that by enforcing the continuity of the normal component of the electric field, an erroneous transition zone is created in the vicinity of conductivity jumps. Later, Pridmore et al. (1981) used FE on a rectangular grid for the discretization of the 3D equation of continuity in a DC resistivity application. The 2D MT method was tackled by Wannamaker et al. (1987), and Gupta et al. (1989) presented a 3D time-domain (TD) code on a rectangular grid. 2.5D EM simulations for a horizontal electric dipole (HED) were carried out by Unsworth et al. (1993). The same year Livelybrooks (1993) came up with 3D nodal element simulations for a secondary plane-wave and electric dipole field. He introduced so-called discontinuity terms to correct for the errors resulting from the violation of the continuity conditions. The 3D DC resistivity case was formulated on a structured tetrahedral grid by Sasaki (1994). Everett and Schultz (1996) used a 3D nodal A-phi formulation on tetrahedrals for the simulation of induction phenomena in a heterogeneous conducting sphere excited by an external source current. A 3D secondary field Coulomb-gauged A-phi approach to induction logging was performed by Badea et al. (2001) for controlled-source EM (CSEM) in cylindrical coordinates using linear nodal basis functions on tetrahedra. Li (2002) developed a 2D simulation code for MT in anisotropic electric media using triangular grids. Shortly thereafter, Li and Spitzer (2002) compared linear FE and 7-stencil FD simulations in 3D for the DC case on rectangular hexahedral grids and came up with the conclusion that for this particular setup, the two approaches were comparable in their performance. The accuracy was slightly higher with FE, however, they were also a bit more expensive in numerical terms. The investigated FE approach gives 27 entries per row of the system matrix whereas it is only 7 for FD. Later Li and Spitzer (2005) extended the DC approach to cases with arbitrary anisotropy. Erdogan et al. (2008) presented 2D DC simulations on structured triangular grids with simple topography using both FD and FE.

2D structured triangular grid for the simulation of the EM, DC resistivity and IP method. The mesh is designed to include a vertical dike structure in the center part and an overburden (taken from Coggon 1971)

2.2.3.2 Structured Grids and Edge Elements

Already Sugeng (1998) came up with a 3D edge FE code on structured hexahedral grids. Mitsuhata and Uchida (2004) used Lagrangian and edge elements for discretizing a 3D T-Omega formulation (with \({\varvec{T}}\) as the electric vector potential and \(\Omega\) as the magnetic scalar potential) on a hexahedral grid. Isoparametric hexahedral grids were used by Nam et al. (2007) for 3D MT with simple topography. They implemented edge elements and solved the resulting system of equations using a biconjugate gradient method with a Jacobian preconditioner. Farquharson and Miensopust (2011) solved the 3D MT problem on rectangular hexahedral grid and applied a divergence correction scheme according to Smith (1996a) within the stabilized conjugate gradient solver BICGSTAB. For this purpose, a scalar electric potential is calculated from the divergence of the electric current density to correct for numerical inaccuracies of the electric field. This correction potential is repeatedly applied to the electric field after a number of steps within the iterative equation solver. The electric field was discretized with linear Nédélec elements, the scalar electric potential with nodal elements. Another 3D CSEM secondary field approach using edge elements on hexahedral grids solved by a multi-frontal direct solver was outlined by Da Silva et al. (2012). Cai et al. (2014) used edge elements on isoparametric hexahedral grids for 3D CSEM in anisotropic environments with simple topography. Finally, Kordy et al. (2016) described a 3D magnetotelluric inversion scheme including topography using deformed hexahedral edge FE and direct solvers parallelized on symmetric multiprocessor computer architectures.

2.2.3.3 Unstructured Grids and Nodal Elements

While FE and FD on structured grids did not seem to differ significantly in performance, the breakthrough came with the introduction of unstructured meshes. A very early work goes back to Rücker et al. (2006) for 3D DC secondary field simulations on unstructured tetrahedral meshes including realistic topography inferred from a digital terrain model (Fig. 5).

An early work of incorporating a digital terrain model into a 3D model using an unstructured tetrahedral grid (Merapi volcano, Indonesia) for the simulation of the DC resistivity method (b). The mesh yields 566,736 dof for second order basis functions. It is fine around the electrodes (red dots) and becomes coarser with increasing distance from them. The top subplot a shows a projection of the mesh onto a plane (taken from Rücker et al. 2006)

Due to the topography, there is no simple analytical solution to the reference field so that it was accurately calculated on a very fine grid. Only Penz et al. (2013) showed later that this was actually not necessary if the no-flow boundary condition at the surface is adopted correspondingly. Blome et al. (2009) reported on advances in three-dimensional geoelectric forward modeling by calculating the primary field by fast multipole-based boundary element methods and applying infinite elements at boundaries. Udphuay et al. (2011) carried out three-dimensional resistivity tomography in extreme coastal terrain using unstructured tetrahedral grids. A Lagrange element secondary field A-phi approach to 3D CSEM modeling on unstructured tetrahedral meshes and anisotropic conductivities was presented by Puzyrev et al. (2013). The inversion of 2D MT and radiomagnetotellurics (RMT) data using FE on unstructured grids was communicated by Özyildirim et al. (2017). An example of an underground mining tunnel that was incorporated into a 3D DC resistivity inversion was given by Pötschke (2017). The tunnel surface was captured with great accuracy using a laser scanner technology. Electrodes were placed along the tunnel wall. It turned out that, in regions of high sensitivity, it is extremely important to match the true locations of the electrodes very accurately and to incorporate the tunnel geometry with a high level of detailedness to avoid artifacts in the inversion results (Fig. 6).

A 3D meshed geometry model of a tunnel in the Reiche Zeche Mine in Freiberg, Germany, gives an illustrative example of mapping interior geometry onto a model using unstructured tetrahedral grids. Electrode locations are depicted by red dots (a). The results of a DC resistivity inversion are shown in (b) (taken from Pötschke 2017)

2.2.3.4 Unstructured Grids and Nédélec Elements

Very early work using Nédélec elements in 3D on unstructured tetrahedral grids and second-order basis functions goes back to Franke et al. (2007a, 2007c) and Kütter et al. (2010). The latter already included the topography and bathymetry around Stromboli volcano, Italy, using a digital elevation model (Fig. 7). Um et al. (2012) addressed a 3D iterative first-order Nédélec FE time-domain method formulated on unstructured tetrahedral meshes. To account for the ineffective time integration through time stepping, they introduced an adaptive time step doubling. A 3D CSEM modeling algorithm using edge elements on unstructured tetrahedral grids was published by Mukherjee and Everett (2011). They included both the magnetic permeability and electrical conductivity for a secondary field A-phi approach. An adaptation to geometry or topography was, however, not performed. Schwarzbach and Haber (2013) reported a FE-based inversion for time-harmonic electromagnetic problems using unstructured tetrahedral meshes and edge elements. Nédélec elements on 3D unstructured tetrahedral grids were used by Um et al. (2013) for a frequency domain E-field formulation. The system of linear equations was solved by the QMR iterative solver with an ILU preconditioning. A 3D Coulomb-gauged A-phi formulation on unstructured grids using Nédélec and nodal elements and electric and magnetic sources was reported by Ansari and Farquharson (2013) and Ansari et al. (2017). The latter apply their scheme to a realistic geophysical scenario using a model of the Ovoid ore deposit at Voisey’s Bay, Labrador, Canada, for helicopter-borne EM (Fig. 8). Usui (2015) presented a 3D MT inversion code based on linear edge elements and unstructured tetrahedral grids written in C++. Jahandari et al. (2017) compare two FE and three FV schemes on unstructured tetrahedral grids. They conclude that both FE and FV schemes are comparable in terms of accuracy and computational resources. However, the FE schemes are slightly more accurate but also more expensive than the FV schemes. Cai et al. (2017) used an anisotropic total field approach for the CSEM method on a 3D unstructured tetrahedral grid. It was implemented on a parallel computer architecture using linear Nédélec elements and solved by a multifrontal direct solver. Käufl et al. (2018) investigated the influence of 3D topography on the magnetotelluric (MT) response using high-order Nédélec FE. Note that they have formulated the problem on locally refined octrees. A 3D marine CSEM inversion scheme including anisotropic conductivities was presented by Wang et al. (2018). The C++ code uses an unstructured tetrahedral grid Nédélec forward modeling code based on the FEMSTER library (Schwarzbach et al. 2011; Castillo et al. 2005). A 3D secondary field marine CSEM solution with linear Nédélec elements on a tetrahedral unstructured mesh was reported by Castillo-Reyes et al. (2018). They used the PETSc library to solve the resulting system of linear equations and refined the meshes due to the skin effect. The code was written in Python and implemented on high-performance computing (HPC) facilities. A 3D secondary field frequency domain parallel higher-order Nédélec FE approach for the marine CSEM forward problem with isotropic conductivities discretized on tetrahedral unstructured meshes was reported by Castillo-Reyes et al. (2019). Their mesh refinement was roughly guided by the skin effect. Rochlitz et al. (2019) presented a further total and secondary field frequency domain approach for CSEM based on the open-source library FEnics using linear and quadratic edge elements on unstructured tetrahedral grids for the E-, H-, and A-phi formulations. An open-source 3D magnetotelluric forward modeling code on unstructured meshes using higher order edge elements and being optimized for both HPC and non-HPC architectures was outlined by Gallardo-Romero and Ruiz-Aguilar (2022), Finally, Blechta et al. (2022) presented a generic Matlab-based forward modeling and inversion library at the 25th International Workshop on Electromagnetic Induction in the Earth held in Çeşme, Turkey, in 2022 including higher order Lagrange, Nédélec, and Raviart–Thomas elements covering basically all frequency domain geo-EM methods including DC resistivity and induced polarization (IP).

3D model of Stromboli volcano, Sicily, and its bathymetric surrounding using a digital terrain model of the area mapped onto a tetrahedral grid of 546,759 elements (a). The MT response for a homogeneous resistive Earth and a conductive ocean is simulated in the frequency domain along profiles running along the seafloor and crossing the volcano in the EW and NS directions (b, c top). As an example, apparent resistivities (c center) and phases (c bottom) in xy- and yx-polarization at a period of \(T = 1000\)s are shown for the xN- and xS-profiles. Note that the terrain effect is extremely significant changing the apparent resistivity by two orders of magnitude (taken from Kütter et al. 2010)

3D model of the Ovoid ore body, a massive sulfide lens in the Voisey’s Bay nickel–copper–cobalt deposit in Labrador, Canada, for the simulation of a HEM field survey. The tetrahedral mesh consists of 954,092 cells (taken from Ansari et al. 2017)

3 Error Estimation

In this section, I am going to review the most important a posteriori error estimators used in the geo-EM community. Basically, a priori error analysis is useful too because it provides information about the convergence behavior of the solution. However, it is very expensive in terms of numerical work. In general, the FE theory states that an arbitrarily exact solution can be obtained if the discretization width h goes toward zero. There are two very important and fundamental theorems on which this statement is based. According to the theorem of Lax–Milgram (Lax and Milgram 1954), the solution exists and is unique. Céa’s lemma (Céa 1964) states that the Galerkin method yields the best solution with respect to the underlying FE subspace. The error of the numerical solution can theoretically be estimated in terms of the \(L^{2}\)-norm \(\left\Vert e_{h}\right\Vert _{L^{2}}=\left\Vert u - u_{h}\right\Vert _{L^{2}}\le C N^\alpha\), where C is a constant depending on the regularity of the solution k, the polynomial degree p of the basis functions, the modeling domain \(\Omega\), and the triangulation \({\mathcal{T}}\) but does not depend on the exact solution u itself and the number N of degrees of freedom. The asymptotic rate of convergence (or simply convergence rate) is then defined by

(Babuška and Aziz 1972; Strang and Fix 1973). To estimate the convergence rate in practice, solutions must be computed for a series of refinements and, with \(h \rightarrow 0\), the numerical effort becomes very large (Franke-Börner 2012). Therefore, while such error analyses are informative, they are of limited use in practice.

In contrast, a posteriori error estimators, which indicate the magnitude of the error and can theoretically estimate error bounds, are very helpful and numerically cheap. A posteriori error estimators are generally based on the convergence theory and require local convergence, which is not always the case as the work of Franke-Börner et al. (2013) shows. This has far-reaching consequences, since in cases of highly inhomogeneous discretization and complex high-contrast parameter models (as is usually the case in geo-EM modeling), neither the convergence rate nor the error bounds can be reliably predicted. According to Grätsch and Bathe (2005) all the error estimators generally do not provide guaranteed bounds so that the estimation technique most useful is probably the method that works efficiently in general analyses. They actually conclude that the practical error estimation techniques do not provide mathematically proven bounds on the error and need to be used with care. Also, Schwarzbach and Haber (2013) note that the mesh-dependent convergence rate determines the accuracy of the numerical solution only up to a mesh-independent constant factor. This factor is essentially determined by the smoothness of the true solution which is generally difficult to quantify as it varies with problem-dependent parameters like, for example, discontinuities in electrical conductivity or magnetic permeability, source type and geometry. Still error estimators are the best option we have for getting an idea of the accuracy of our solutions. They are therefore definitely helpful tools when it comes to adaptive meshing.

After a short historical outline, we are going to look at a simple model problem in the form of Poisson’s equation and subsequently transfer the findings to the induction (curl–curl) problems that we are facing in geo-EM research.

3.1 A Short Historical Overview of Error Estimators

The beginning of modern a posteriori error estimation dates back to the end of the 1970s and beginning of the 1980s when Babuška and Rheinboldt (1978) defined the error in the energy norm (see Appendix 1). In the 1980s, element residual methods were established by Demkowicz et al. (1984) and Bank and Weiser (1985) where typically the weak residual was exploited to derive quantitative error measures. At the end of 1980s a new type of error estimator, the recovery-based method, was formulated taking into account the behavior of the gradient of the solution on a patch of elements (Zienkiewicz and Zhu 1987, 1992a, b). In the early 1990s, the basic principles of error estimation were established for scalar functions. The extension to vector FE was achieved in the mid 1990s for elliptic problems and Raviart–Thomas elements by Braess and Verfürth (1996), for parabolic problems by Eriksson and Johnson (1991) and Maxwell’s equations with edge elements by Beck and Hiptmair (1999). Goal-oriented error estimation takes into account the adjoint problem using any of the previous error estimators (Prudhomme and Oden 1999; Oden and Prudhomme 2001). An extensive overview was given by Ainsworth and Oden (2000) and an informative review article by Grätsch and Bathe (2005).

3.2 The Variational Problem and the Weak Formulation

I demonstrate the principles of error estimation in FE using a simple model boundary value problem for a scalar function u with appropriate boundary conditions. The so-called strong formulation of Poisson’s equation reads

and represents an elliptic linear boundary value problem on a domain \(\Omega \in {\mathbb{R}}^{3}\) that is Lipschitz-bounded, i.e., the data \(f\in L^{2}(\Omega )\) and \(g\in L^{2}(\Gamma _{\textrm{N}})\) are sufficiently smooth and square-integrable. \({\varvec{n}}\) denotes the outward normal on the boundary \(\Gamma =\Gamma _{\textrm{D}} \cup \Gamma _{\textrm{N}}\) (split into a Dirichlet and a Neumann part). Multiplying both sides with test functions \(v\in {\mathcal{V}} =\left\{ v\in {\mathcal{H}}^{1}(\Omega ):v=0 {\text{ on }} \Gamma _{\textrm{D}}\right\}\) yields, after some rearrangement, the weak formulation

See Appendix 2 for more details. There are infinitely many functions in \({\mathcal{V}}\); therefore, one restricts oneself to finite dimensional spaces \({\mathcal{V}}_{h}\), which are easier to deal with. \({\mathcal{V}}\) contains the exact solution, i.e. any residual becomes 0. \({\mathcal{V}}_{h}\) is contained in \({\mathcal{V}}\) and therefore functions from \({\mathcal{V}}_{h}\) cannot make the residual vanish. Reducing \({\mathcal{V}}\) to \({\mathcal{V}}_{h} \subset {\mathcal{V}}\) we end up with finding \(u_{h}\in {\mathcal{V}}_{h}\) so that

This is the Galerkin equation with \({\mathcal{V}}_{h}\) as the FE subspace of cellwise polynomial functions of order p over the FE partition \({\mathcal{T}}_{h}\), the latter of which denotes a mesh consisting of individual cells. Galerkin or Rayleigh–Ritz methods are a class of methods for converting a continuous problem in terms of a differential equation in its weak formulation, e.g., according to Eq. (2), into a discrete problem yielding a linear system of equations. The Galerkin method uses the basis functions \(\phi \in {\mathcal{V}}^{h}\) as test functions, a finite weighted sum of which approximates the sought solution \(u_{h}=\sum _{i=1}^{n} u_{i}\phi _{i}\). The coefficient values \(u_{i}\) associated with each test function are determined such that the error between the linear combination of the test functions and the solution is minimized in a chosen norm yielding the linear system of equations

with \(A_{ij}=\displaystyle \int _{\Omega }\nabla \phi _{i}\cdot \nabla \phi _{j} \, {\textrm{d}}\Omega\) defining the elements of the system matrix and \({\varvec{f}}\) as the right-hand side vector.

3.3 A Posteriori Error Estimation

In this section, using the scalar boundary value problem introduced above, I briefly outline the principles of three important a posteriori error estimators used in the geo-EM community. These are the so-called residual-based, recovery-based and goal-oriented error estimators. After that, I will explain how error estimation is applied to Maxwell’s equations and used for our geo-EM problems.

3.3.1 Residual-Based Error Estimation

Generally, in a posteriori error estimation we want to estimate the discretization error while not knowing the exact solution u. The error is therefore defined as the difference between the unknown solution u and the FE solution \(u_{h}\): \(e_{h}=u-u_{h}\). It satisfies the error representation

with \({\mathscr {R}}_{h}\) as the weak residual. The key property of the Galerkin approach is that the error is orthogonal to the chosen subspaces. Since \({\mathcal{V}}_{h} \subset {\mathcal{V}}\), we can use \(v_{h}\) as a test vector in the original equation. Subtracting the two gives

which is called the Galerkin orthogonality.

Explicit error estimates are determined by the direct computation of interior element residuals and jumps at element boundaries (Babuška and Rheinboldt 1978). The global error representation over the whole domain is therefore split up into the sum over elemental integrals

Integration by parts and rearranging gives

with

as the interior element residual R in element K and J as the jump of the gradient across the element face \(\gamma\):

In the first case where \(\gamma\) is not part of an outer boundary (\(\gamma \not \subseteq \Gamma\)), \(\gamma\) separates two elements \(K_{1}\) and \(K_{2}\). Using interpolation theory and the Cauchy–Schwarz inequality elementwise, this directly leads to a local error indicator for element K

with constants \(c_{1},c_{2}\in {\mathbb{R}}\) and \(h_{K}\) as the circumdiameter of element K. For more details see, e.g., Grätsch and Bathe (2005) and Ainsworth and Oden (2000).

3.3.2 Recovery-Based Error Estimation

Recovery-based error estimators consider an ‘improved’, i.e., generally smoothed gradient of the solution \({\mathscr {M}}[ u_{h} ]\) and compare it to the original unsmoothed gradient. Large differences point toward large errors of the solution and provide some estimate of the true error because, in general, gradients across element boundaries tend to be discontinuous. The a posteriori error estimator according to Zienkiewicz and Zhu (1987, 1992a, 1992b) therefore reads

and constitutes a rather heuristic approach. In practical application, the error estimate is calculated with respect to each element

with \(\eta _{K}^{2}=\Vert \nabla u_{h}^{\star } - \nabla u_{h}\Vert ^{2}_{L^{2}(K)}\) and \(\nabla u_{h}^{\star }\) being a ‘recovered’ gradient in element K. It reads

and can be obtained by smoothing over a patch of elements \(\Lambda\) surrounding element K. \({\varvec{P}}\) is a vector polynomial defined on \(\Lambda\), \(\phi _{i}\) are the basis functions, and \(\xi _{i}\) and n are the coordinates and the number of vertices in element K. The assumption that variations in the gradient indicate errors and that smooth solutions stand for accurate solutions is, however, not strictly valid. Therefore, the method might fail in cases of intrinsically smooth solutions.

Another approach of recovery-based error estimation goes back to Beck and Hiptmair (1999) and is formulated directly for time-harmonic Maxwell’s equations. They determine the magnetic field using basis functions from different Sobolev spaces, i.e., \({\hat{\varvec{H}}}_{\textrm{s}}\in {\mathcal{H}}_{\textrm{curl}}\) and \(\tilde{\varvec{H}}_{\textrm{s}}\notin {\mathcal{H}}_{\textrm{curl}}\) giving the error indicator

where \(\tilde{\varvec{H}}_{\textrm{s}}\) serves as the ‘improved’ quantity in the sense above. The asterisk denotes the complex conjugate here.

3.3.3 Goal-Oriented Error Estimators

The last type of error estimator we consider here is called a goal-directed error estimator, which means that we take into account not only the physical field being modeled, but also the fact that the field is observed exclusively at a limited number of locations. Basically, we are not interested in the solution being accurate in regions where we have no observations. Consequently, the global energy norm or total error is not necessarily the most important quantity we are interested in.

For understanding the concept of goal-oriented error estimation we consider the parallels that exist with respect to the solution of the inverse problem. There, we have functions that, in some way, take into account the source and the receiver locations at the same time. The function is called the sensitivity function, and it is defined by the Jacobian matrix consisting of derivatives of the data with respect to the model parameters. There are several ways to determine its spatial behavior (Spitzer 1998). One is called the sensitivity theorem (Lanczos 1961; Geselowitz 1971) that allows for the determination of the sensitivity function \(\Phi _{lmn}\) by forming an inner product of the solution of the actual physical field \({\varvec{F}}^{l}\) excited by a source at location l and a virtual field \({\varvec{F}}^{m}\) excited by a virtual source at the receiver location m integrated over a volume \(\tau _{n}\) at location n. It reads

In mathematics, the virtual problem is called the adjoint or dual problem. It is solved as an additional auxiliary problem using the original system matrix with a different right-hand side. The adjoint field \({\varvec{F}}^{m}\) can be viewed as a weighting function.

The underlying idea of using the solution of the adjoint problem as a weighting function is also the basis of goal-oriented error estimation (Ainsworth and Oden 2000). In FE terminology the virtual weighting function is called an influence function and serves for a more accurate determination of the so-called quantity of interest. With goal-oriented adaptivity, refinements are directed toward a most beneficial increase of the accuracy of this quantity of interest Q(u). It typically yields fine meshes around sources and receivers. Note that the nature of the reciprocity principle is closely related to self-adjointness that finds expression in a symmetric or Hermitian system matrix.

Let again \(u_{h}\in {\mathcal{H}}_{0}^{1}\) denote the FE approximation of the solution of the model problem

and let \(Q:{\mathcal{V}}\rightarrow {\mathbb{R}}\) be a bounded, linear functional with \(w^{Q}\) as the solution of the adjoint problem

Then the error in estimating Q(u) using the FE approximation \(Q(u_{h})\) is

where e and \(e^{Q}\) are the errors of the FE approximation of u and \(w^{Q}\), respectively, and \(\Vert e^{Q}\Vert \le \eta ^{Q}\) and \(\Vert e\Vert \le \eta\). This means the true solution of the quantity of interest is bounded by \(Q(u_{h})-\eta \eta ^{Q}\le Q(u)\le Q(u_{h})+\eta \eta ^{Q}\). The errors of the primal and dual problems e and \(e^{Q}\) can be estimated using any of the error estimators presented above or possibly also others not mentioned here.

A very illustrative example is given by Ainsworth and Oden (2000) for our model problem (1) where the function

is defined as the right hand side f and homogeneous boundary conditions are given by

The solution of function (7) displays steep gradients as can be seen in Fig. 9a. Using a global energy norm the original rectangular mesh is strongly refined within the area of these gradients (Fig. 9b) using a non-conforming mesh to achieve the given target accuracy. The goal-oriented algorithm refines also around the given point \({\varvec{x}}_{0}=(0.6,0.4)\) (Fig. 9c) referring to the quantity of interest Q(u) to reach the target accuracy with less numerical effort and a steeper convergence rate.

3.4 Error Estimation in Geo-electromagnetics

In this section, we are going to transfer the findings from the general case above to geo-EM starting with Maxwell’s classical equations

and discussing a typical geo-EM case in terms of the curl–curl equation for the secondary electric field in the frequency domain. We assume a time-harmonic factor \(e^{i\omega t}\) (see, e.g., Schwarzbach et al. 2011; Grayver and Bürg 2014; Grayver 2015) yielding

which is a type of vector Helmholtz equation subject to, e.g., a homogeneous Dirichlet boundary condition as stated in Eq. (14). Equation (13) is derived from Maxwell’s equations by taking the curl of Faraday’s law stated in Eq. (10) and substituting the resulting curl of the magnetic field by Ampère’s law Eq. (9). Furthermore, we have considered the constitutive relations \(\varvec{B}=\mu \varvec{H}\), \(\varvec{D}= \varepsilon \varvec{E}\) and Ohm’s law \({\varvec{j}}=\sigma \varvec{E}\) implying the material to be linear and isotropic. In order, \(\varvec{B}, \varvec{H}, \varvec{D}\), and \(\varvec{E}\) are the complex-valued EM fields in terms of the magnetic flux density, the magnetic field, the dielectric displacement and the electric field. Note that the lower case letters in Eqs. (9) to (12) refer to the corresponding fields in the time domain. \(\varvec{E}^{p}\) is the primary electric field associated with a background conductivity model \(\sigma ^{p}\). \(\varvec{E}^{s}\) is the secondary electric field due to the anomalous conductivity \(\sigma ^{a}=\sigma -\sigma ^{p}\). \(\mu\) is the scalar magnetic permeability, \(\varepsilon\) the scalar electric permittivity, \({\varvec{j}}\) the current density, \(\omega\) the angular frequency, and i the imaginary unit. \(\varvec{D}\) is usually neglected in typical induction problems considered in geo-EM but has to be taken into account for sufficiently high frequencies especially in resistive environments.

Now, Eq. (13) is multiplied by vectorial test functions \({\varvec{v}} \in {\mathcal{H}}_{0}({\text{curl}},\Omega ):=\left\{ {\varvec{v}}\in {\mathcal{H}}({\text{curl}};\Omega ):n \times {\varvec{v}}=0{\text{ on }}\partial \Omega \right\}\) and integrated over the computational domain \(\Omega\) giving the weak formulation of Eq. (13)

We again apply integration by parts and convert the integrals into sums over the elements and faces. In the manner of Eqs. (3) and (4), the elemental residual- and jump-based terms boil down to

with elemental residuals

and jumps over elemental faces

where \(h_{K}\) is a measure of the size of element K (e.g., the circumdiameter of a cell), e is an interior face of element K with \(e\cap \Gamma =\emptyset\), \(h_{e}\) is the circumdiameter of e, and \({\varvec{n}}_{e}\) is the outward normal on it. Brackets denote the jump of the quantity across element boundaries. The residual-based term \(\eta _{R,K}^{2}\) vanishes if both field equation and its divergence are exactly fulfilled. The jump-based term \(\eta _{J,K}^{2}\) vanishes if the tangential components of the magnetic field and the normal component of the current density are continuous. The continuity of these quantities is not guaranteed by the weak formulation.

In most cases, error estimation in geo-EM has been used in conjunction with unstructured 2D triangular and 3D tetrahedral grids. However, there were also some cases where quad- and octrees have been used as we will see below.

3.4.1 Residual-Based Error Estimators in Geo-EM

A 2D MT residual-based adaptive FE unstructured triangular grid approach was described by Franke et al. (2007b). The 2D and 3D MT boundary value problem has been investigated by Franke et al. (2007a), Franke-Börner et al. (2011), and Franke-Börner (2012) in terms of (1) the electric field (2) the magnetic field, (3) the magnetic vector potential and the electric scalar potential (\({\varvec{A}}-\phi\)), (4) the magnetic vector potential only, and (5) the anomalous magnetic vector potential in a secondary field formulation using a residual-based adaptive unstructured tetrahedral discretization with nodal and vector elements. Franke-Börner (2012) also did extensive research on the a-priori convergence behavior of these FE solutions.

3.4.2 Recovery-Based Error Estimators in Geo-EM

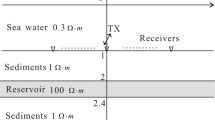

Li and Key (2007) and Li and Constable (2007) used nodal FE and recovery-based refinement strategies for the 2D secondary-field marine CSEM case on triangular unstructured grids (Fig. 10). Ren and Tang (2010) introduced recovery-based error estimators for the 3D DC resistivity case. The 3D secondary-field CSEM case was tackled by Schwarzbach et al. (2011) using a recovery-based adaptive technique according to Beck and Hiptmair (1999) on unstructured tetrahedral grids and higher order Nédélec elements (Fig. 11). The code allows for topography and arbitrary conductivity, magnetic permeability and dielectric permittivity. Another 3D recovery-based adaptive FE modeling code for marine CSEM with seafloor topography on the basis of secondary magnetic vector potentials was outlined by Ye et al. (2018).

2D Model of a complex oil reservoir and salt diapir and a linear array of 200 receivers positioned along the seafloor. The unstructured triangular mesh is adaptively refined using a recovery-based error estimator. Starting model grid containing 1461 elements (a), fifteenth refinement containing 8818 elements (b), and final grid after 31 refinement steps containing 80,233 elements (c) (taken from Li and Key 2007)

3D Model of a marine conductivity environment including seabed topography (bathymetry) in terms of a fixed digital terrain model (two times vertically exaggerated) (a), the coarse unstructured tetrahedral starting mesh including 380,809 elements (b), and the final adaptively refined mesh using a recovery-based error estimator yielding 542,425 elements after the 6th iteration (c). Note that the refinement has mainly taken place around the focus of the secondary sources just beneath the receiver at the seafloor in the central part of the model yielding smallest edge lengths of 5.5 m (taken from Schwarzbach et al. 2011)

3.4.3 Goal-Oriented Error Estimators in Geo-EM

Key and Weiss (2006) used a goal-oriented gradient recovery operator for the adaptive refinement of an unstructured triangular grid in 2D MT modeling. Adaptive FE modeling of 2D magnetotelluric fields in general anisotropic media using a goal-oriented gradient recovery operator for the refinement of an unstructured triangular grid was published by Li and Pek (2008). The 2D marine CSEM case was treated in a similar manner by Li and Dai (2011). A parallel goal-oriented recovery-based adaptive FE method for 2.5-D EM was outlined by Key and Ovall (2011). Three different a posteriori goal-oriented residual-based error estimators were investigated by Ren et al. (2013) for the 3D MT case (Fig. 12). These are based on the nonzero residuals of the electric field and the continuity of both the normal component of the current density and the tangential component of the magnetic field across interior elemental interfaces. The FE formulation uses linear Nédélec elements on unstructured tetrahedral grids with arbitrary conductivity, magnetic permeability and dielectric permittivity.

Goal-oriented refinement for a 3D trapezoidal hill model in the MT case. The colors denote a relative residual-based error indicator in the form of Eq. (6) based on the jump of the normal component of the current density [Eq. (16)] shown along a vertical slice of the 3D model for a frequency of 2 Hz. The initial model is displayed in (a), the third refinement stage in (b), and the final 7th refinement stage in (c) (taken from Ren et al. 2013)

Another goal-oriented adaptive unstructured tetrahedral grid approach was outlined by Ren and Tang (2014) for the 3D DC resistivity case using secondary fields and boundary conditions according to Penz et al. (2013) but driving the refinement by a superconvergent patch recovery-based a posteriori error estimator. A residual-based a posteriori error estimator for 3D MT on unstructured non-conforming hexahedral meshes (octrees) was presented by Grayver and Bürg (2014) using direct solvers or conjugate gradient type methods pre-conditioned with an auxiliary space technique based on highly efficient algebraic multigrid methods. The code is based on the open-source software deal.II (Bangerth et al. 2007), and parallelization was established using the message passing interface MPI. Grayver and Kolev (2015) reported a 3D EM adaptive high-order FE method using fully parallel and distributed robust and scalable linear solvers based on optimal block-diagonal and auxiliary space preconditioners. Adaptivity is implemented through residual-based goal-oriented adaptive mesh refinement (Fig. 13).

Goal-oriented refinement for 3D MT based on the residuals and jumps according to Eqs. (15) and (16) for an octree mesh and a digital terrain model of Kronotsky volcano on the Kamchatka Peninsula, Russia. The initial (a) and adaptively refined meshes (b) include 11,752 and 169,966 cells, respectively. Receivers are indicated by white rectangles. The top air layer is not shown (taken from Grayver and Kolev 2015)

A 3D inversion for MT based on a Nédélec unstructured grid forward operator and goal-oriented residual-based adaptivity was presented by Grayver (2015). Another 2D inversion code for MT and CSEM called MARE2DEM was published by Key (2016) where a recovery-based goal-oriented adaptivity scheme was implemented on unstructured triangular grids. Likewise on unstructured triangular grids, adaptive FE modeling of DC resistivity in 2D generally anisotropic structures was described by Yan et al. (2016) using a gradient recovery-based scheme. The 3D airborne EM case was tackled using a goal-oriented jump-based adaptive edge FE algorithm implemented by Yin et al. (2016) on unstructured tetrahedral grids. Anisotropic conductivities were incorporated in the 3D MT problem by Liu et al. (2018) using linear Nédélec FE on unstructured tetrahedral grids. Adaptivity was achieved by a goal-oriented residual-based error estimator. The resulting system of linear equation was solved using direct solvers from the MUMPS library (Amestoy et al. 2000). Recently, Rulff et al. (2021) reported a 3D CSEM total electric field forward operator in the frequency domain using first-order Nedelec elements and a residual-based goal-oriented error estimator. And finally, a 3D marine CSEM forward modeling code for general anisotropic structures was presented by Li et al. (2021) using goal-oriented residual-based adaptivity and Nédélec FE.

3.4.4 Mesh Generation and Refinement, FE Toolboxes and Equation Solver Libraries

Mesh generators, FE toolboxes, equation solvers and the actual procedure of mesh refinement, referred to in FE terminology as enrichment of the FE space, are beyond the scope of this article. This section will nevertheless present some key features and facilities used in the geo-EM community for these purposes. Besides the fineness of the grid, the accuracy of the solution is also influenced by geometric properties of the cells, independent of the function to be modeled. This so-called mesh quality can be steered via different options in the mesh generator. The mesh generator is usually an external tool to set up meshes with appropriate properties and features which affect the mesh quality and, in return, the accuracy of the solution of the PDE. According to Shewchuk (1997), we can generally and very briefly state that

-

small dihedral angles in cells cause poor conditioning of the system matrix,

-

large angles cause discretization errors,

-

fewer elements yield faster solutions, and

-

more elements yield higher accuracy.

High-quality meshes should not include poorly-shaped elements (needles, wedges, caps, and slivers), should be as small as possible, and should be locally refined with moderate grading from small to large. See Dey et al. (1992), Shewchuk (2002), and Bern et al. (2017) for more details. The mesh refinement itself is a wide field of research of the meshing community and is only perceived as a utility by geo-EM modelers. There are a multitude of different criteria and ways to split up elements into smaller ones, each affecting the solution of the PDE in an individual way.

Especially simple is the refinement of rectangular cells in a non-conforming way into quad- and octrees. Most widespread for unstructured triangular/tetrahedral meshes is the regular refinement where, for 2D, four new triangles are created from an old one by bisecting each edge (Fig. 14a) or the longest-edge bisection that creates two new triangles from an old one (Fig. 14b). The minimum inner angle of the elements can be used as a criterion. According to Bern et al. (2017), another geometric criterion for splitting up a cell is the circumradius-to-shortest edge ratio of a triangle or tetrahedron, where the circumradius refers to a circle or sphere through all vertices. Any triangle whose circumradius-to-shortest edge ratio is larger than a given value is split up by inserting a new vertex at its circumcenter (Fig. 14c, d). Theoretically, meshing algorithms still cannot guarantee all tetrahedra to include good angles. But, in practice, it is possible to create meshes with sufficient quality even if the process is tedious.

Refinement techniques. Regular refinement (a) and longest-edge bisection (b). Creation of new triangles in case of an element that violates the circumradius-to-shortest edge ratio. Initial situation with triangle in red and circumradius with circumcenter in green (c), refined mesh with new node in the circumcenter (d)

Very often, if the new mesh is not supposed to contain any hanging nodes, it has to be regenerated from scratch, which is time-consuming. Quad- and octrees avoid this circumstance by creating a hierarchical set of meshes but leave the modeler with the treatment of hanging nodes. Hanging nodes appeared as early as the 1930s in a work by Shortley and Weller (1938). There is one approach to refine also unstructured tetrahedral grids by the introduction of hanging edges (the equivalent of hanging nodes) for time-domain EM problems. This technique was presented by Schneider et al. (2022) and is able to rapidly adopt the mesh, e.g., to the temporal change of a decaying electric field.

Sometimes mesh generators are also integrated into FE toolboxes (libraries) that include the assembly of the system matrix. The computation of FE coefficients for unstructured meshes requires a high administrative effort, but is highly automatable once the underlying PDE is identified, the boundary conditions to be implemented are set, and appropriate basis functions as well as the desired mesh type are selected. This is the reason for the increasing number of ready-to-use and nearly all-purpose FE libraries that can be tailored toward the intended application. 2D and 3D mesh generators or FE toolboxes frequently used in the geo-EM community are, e.g., Gmsh (Geuzaine and Remacle 2009), Tetgen (Si 2015), Triangle (Shewchuk 1996), NetGen (Schöberl 1997), libmesh (Kirk et al. 2006), FEniCS (Alnæs et al. 2015), Dune (Blatt et al. 2016), OpenFOAM (Weller et al. 1998), deal.II (Bangerth et al. 2007), and MFEM (Anderson et al. 2021).

Some popular and powerful libraries for the direct or iterative numerical solution of large sparse systems of linear algebraic equations are, e.g., the shared-memory multiprocessing parallel direct sparse solver library PARDISO (Schenk and Gärtner 2004; PARDISO Solver Project 2023; Bollhöfer et al. 2020), the multifrontal massively parallel sparse direct solver MUMPS (Amestoy et al. 2000), and the portable, extensible toolkit for scientific computation PETSc (Balay et al. 2022).

4 Concluding Remarks

In this paper, I have addressed some important lines of development of geo-EM modeling including their historical evolution in mathematics. The key innovations, in my opinion, are the introduction of FE in the context of unstructured grids including their local and adaptive refinement, which allow in an elegant and flexible way to integrate complex multi-scale structures, as we find them in the Earth sciences, into our conductivity models. The underlying and comprehensive finite element theory enables us to roughly assess the accuracy of the solution using a posteriori error estimators which, in turn, are mainly employed to adaptively control and optimize the grids, even if they are not able to provide definite and helpful bounds. Fast direct and iterative equation solvers as well as powerful mesh generators are indispensable tools without which we would not be able to obtain solutions for close-to-reality models, even if they basically always remain abstract and incomplete.

I have pointed out that the sensitivity function plays an important role when it comes to goal-oriented error estimation. It guides the refinement of the meshes with respect to the quantity of interest. The sensitivity is likewise important to determine the level of detailedness of geometric features of the model such as topography, bathymetry or any other interior structure.

There are certainly other strategies to build realistic models, both on the physical and mathematical side. Primarily, it is the technical framework and developments in neighboring scientific fields that initiate and enable streams of development. In our case, computer architectures and new mathematical approaches and algorithms are of the utmost importance. Parallel computer architectures and distributed systems have been with us for a while and we are not yet fully exploiting their possibilities in geo-EM.

Moreover, there are alternative approaches to describe structures in a modified form to better represent the multi-scale properties. There is, for example, the hierarchical parameterization as introduced by Weiss (2017) and Beskardes and Weiss (2018). It is able to efficiently include one- and two-dimensional structures such as lines (representing, e.g., boreholes) or surfaces (e.g., for fissures) in the modeling of large volumes via separate integrals over edges and faces of the finite elements. Equivalent materials associated with coordinate transformations allow simplification of complicated structures and mapping to regular grids, which in turn leads to simplification of model handling (Kamm et al. 2020). Wavelet expansions can represent spatial structures with variable accuracy, which can help to reduce the number of free parameters (Nittinger and Becken 2018).

Creating a high-quality unstructured tetrahedral mesh that meets all the requirements for solution accuracy and mapping fidelity of the geometry in a practical simulation task takes a significant amount of time and is still always a matter of hands-on tailoring. On the contrary, FD methods impress by their simplicity and straightforward implementation on conforming grids, which greatly simplifies the overall handling and facilitates techniques such as domain decomposition (see, e.g., Bohlen (2002) for seismic modeling). If we look at the development in consumer electronics, we can, for example, see that higher resolution of screens is not achieved by completely new approaches, but by the huge number of pixels (which are comparable to the parameter cells here). Over the decades, the evolution went from VGA (640\(\times\)480 pixels) over HD (920 \(\times\)1080 pixels) to currently 4K (4096 \(\times\) 2160) without changing the concept of the pixel. 8K and 16K will be reached in the not too distant future. Transferring this to our modeling approaches, one could use simple structured grids for extremely high resolution, if only one can provide enough cells. Of course, this is only possible if there is sufficiently large and adequate computing power. All the techniques we use unfold their strength and power only in connection with the currently available resources. Sometimes moving forward can mean to go back one step first.

References

Ainsworth M, Oden JT (2000) A posteriori error estimation in finite element analysis. In: Pure and applied mathematics. Wiley, New York

Alnæs MS, Blechta J, Hake J et al (2015) The FEniCS project version 1.5. Arch Numer Softw 3(100):9–23

Amestoy P, Duff I, l’Excellent JY (2000) Multifrontal parallel distributed symmetric and unsymmetric solvers. Comput Methods Appl Mech Eng 184(2–4):501–520

Anderson R, Andrej J, Barker A et al (2021) MFEM: a modular finite element methods library. Comput Math Appl 81:42–74

Ansari S, Farquharson CG (2013) 3D finite-element forward modeling of electromagnetic data using vector and scalar potentials and unstructured grids. Geophysics 79(4):E149–E165

Ansari SM, Farquharson CG, MacLachlan SP (2017) A gauged finite-element potential formulation for accurate inductive and galvanic modelling of 3-D electromagnetic problems. Geophys J Int 210(1):105–129

Avdeev DB (2005) Three-dimensional electromagnetic modelling and inversion from theory to application. Surv Geophys 26(6):767–799

Avdeev DB, Kuvshinov AV, Pankratov OV et al (2002) Three-dimensional induction logging problems, part I: an integral equation solution and model comparisons. Geophysics 67:413–426

Babuška I, Aziz AK (1972) Survey lectures on the mathematical foundations of the finite element method with applications to partial differential equations. In: Aziz AK (ed) The mathematical foundations of the finite element method with applications to partial differential equations. Academic Press, New York

Babuška I, Rheinboldt WC (1978) A posteriori error estimates for the finite element method. Int J Numer Methods Eng 12(10):1597–1615

Badea EA, Everett ME, Newman GA et al (2001) Finite-element analysis of controlled-source electromagnetic induction using Coulomb-gauged potentials. Geophysics 66(3):786–799

Balay S, Abhyankar S, Adams MF et al (2022) PETSc web page. https://petsc.org/. Accessed January 2023

Bangerth W, Hartmann R, Kanschat G (2007) deal.II–a general-purpose object-oriented finite element library. ACM Trans Math Softw 33(4):24

Bank RE, Weiser A (1985) Some a posteriori error estimators for elliptic partial differential equations. Math Comput 44(170):283–301

Beck R, Hiptmair R (1999) Multilevel solution of the time-harmonic maxwell’s equations based on edge elements. Int J Numer Methods Eng 45(7):901–920

Bern M, Shewchuk JR, Amenta N (2017) Triangulations and mesh generation. In: Goodman JE, O’Rourke J, Toth CD (eds) Handbook of discrete and computational geometry, 3rd edn. Chapman and Hall, London, pp 763–786

Beskardes GD, Weiss CJ (2018) Modelling DC responses of 3-D complex fracture networks. Geophys J Int 214(3):1901–1912

Blatt M, Burchardt A, Dedner A et al (2016) The distributed and unified numerics environment, version 2.4. Arch Numer Softw 4(100):13–29

Blechta J, Ernst OG (2022) Efficient solution of parameter identification problems with h1 regularization. https://doi.org/10.48550/ARXIV.2209.02815. arXiv:2209.02815

Blechta J, Börner RU, Ernst O et al (2022) A MATLAB FE library for the simulation and inversion of EM problems. In: 25th international workshop on electromagnetic induction in the earth, Çeşme, Turkey, 11–17 September 2022, 1 p

Blome M, Maurer HR, Schmidt K (2009) Advances in three-dimensional geoelectric forward solver techniques. Geophys J Int 176(3):740–752

Bohlen T (2002) Parallel 3-D viscoelastic finite difference seismic modelling. Comput Geosci 28(8):887–899

Bollhöfer M, Schenk O, Janalik R et al (2020) State-of-the-art sparse direct solvers. In: Grama A, Sameh AH (eds) Parallel algorithms in computational science and engineering. Springer, Cham, pp 3–33

Börner RU (2010) Numerical modelling in geo-electromagnetics: advances and challenges. Surv Geophys 31(2):225–245

Börner RU, Ernst OG, Spitzer K (2008) Fast 3D simulation of transient electromagnetic fields by model reduction in the frequency domain using Krylov subspace projection. Geophys J Int 173:766–780

Börner JH, Herdegen V, Repke JU et al (2013) The impact of CO2 on the electrical properties of water bearing porous media—laboratory experiments with respect to carbon capture and storage. Geophys Prospect 61(SUPPL.1):446–460