Abstract

In the past twenty years, satellite gravimetry missions have successfully provided data for the determination of the Earth static gravity field (GOCE) and its temporal variations (GRACE and GRACE-FO). In particular, the possibility to study the evolution in time of Earth masses allows us to monitor global parameters underlying climate changes, water resources, flooding, melting of ice masses and the corresponding global sea level rise, all of which are of paramount importance, providing basic data on, e.g. geodynamics, earthquakes, hydrology or ice sheets changes. Recently, a large interest has developed in novel technologies and quantum sensing, which promise higher sensitivity, drift-free measurements, and higher absolute accuracy for both terrestrial surveys and space missions, giving direct access to more precise long-term measurements. Looking at a time frame beyond the present decade, in the MOCAST+ study (MOnitoring mass variations by Cold Atom Sensors and Time measures) a satellite mission based on an “enhanced” quantum payload is proposed, with cold atom interferometers acting as gravity gradiometers, and atomic clocks for optical frequency measurements, providing observations of differences of the gravitational potential. The main outcomes are the definition of the accuracy level to be expected from this payload and the accuracy level needed to detect and monitor phenomena identified in the Scientific Challenges of the ESA Living Planet Program, in particular Cryosphere, Ocean and Solid Earth. In this paper, the proposed payload, mission profile and preliminary platform design are presented, with end-to-end simulation results and assessment of the impact on geophysical applications.

Similar content being viewed by others

Article Highlights

-

We present the proposal of an innovative gravimetry mission (MOCAST+) based on a quantum payload (atomic interferometers + atomic clocks)

-

From simulations, in specific mission configurations MOCAST+ can improve the knowledge of the Earth gravity field and its time variations

-

Improvements with respect to GRACE can be expected in detecting and monitoring deglaciation processes, hydrologic basins and lakes, earthquakes

1 Introduction

In the past twenty years, space missions like GOCE (Drinkwater et al. 2003; Floberghagen et al. 2011), GRACE (Tapley and Reigber 2001; Kvas et al. 2019) and GRACE-FO (Kornfeld et al. 2019; Landerer et al. 2020) have successfully demonstrated their capability to deliver high-resolution and high-accuracy global models of the Earth gravity field (GOCE) and of its temporal variations (GRACE and GRACE-FO). In particular, studies and results from the last two missions fostered the building of a well-organized science user community tracking the Earth mass movement to study environmental changes on a global scale using data from satellite gravity measurements. In fact, monitoring global parameters underlying climate change, water resources, flooding, melting of ice masses and the corresponding global sea level rise is of paramount importance (Pail 2015a). Remote sensing of the changes of the Earth gravitational field provides basic data on, e.g., geodynamics, earthquakes, hydrology or ice sheets changes. In fact, considering the five macro-areas of the Scientific Challenges of the ESA Living Planet Program (Drinkwater et al. 2003), the gravity field is fundamental in studies regarding Cryosphere, Ocean and Solid Earth.

Since classical sensors have reached a high level of maturity with a limited potential for further improvement, a large interest has developed in novel technologies based on quantum sensing (Carraz et al. 2014). These technologies promise to offer higher sensitivity and drift-free measurements, and higher absolute accuracy for terrestrial surveys as well as space missions, thus giving direct access to more precise long-term measurements and comparisons. Europe is at the forefront of quantum technologies, and a call has been issued (HORIZON-CL4-2021-SPACE-01-62: Quantum technologies for space gravimetry) aiming at the development of EU technologies and components for a space quantum gravimeter or gradiometer, as a first step towards the deployment within this decade of a gravimeter pathfinder mission based on cold atom interferometry. This will in turn pave the way for a fully fledged quantum space gravimetry mission by the end of the next decade.

Quantum sensors show high sensitivity and allow precision measurements for fundamental physics experiments and applications (Tino and Kasevich 2014). In the field of gravitational physics, atomic interferometry (Chu 1998) is used for precise measurements of the Earth gravitational acceleration (Kasevich and Chu 1992) and gravitational gradients (McGuirk et al. 2002), of gravity at small distances (Ferrari 2006), and of the gravitational constant (Rosi et al. 2014). Atomic interferometers have also been developed as accelerometers (Abend et al. 2016), gradiometers (Sorrentino et al. 2014) and gyroscopes (Dutta et al. 2016) for various practical applications in the fields of geodesy, geophysics, geological prospecting and inertial navigation (Geiger et al. 2011, Cheiney et al. 2018, Bidel et al. 2018). At the same time, there has been a rapid development of optical clock technology (Takamoto et al. 2020), which has led to an improvement of about two orders of magnitude in the accuracy of time / frequency measurements in the time frame of a decade. The technologies of atomic interferometers and optical clocks have been the object of several studies and technological developments for space applications (Becker et al. 2018; Douch et al. 2018). As part of various ESA, ASI, CNES, DLR programs, designs of space instruments and prototypes of high Technical Readiness Level (TRL) components have already been developed (ESA 2015). Atomic clocks provide time measurements, hence if mounted as payload on two different satellites can provide observations of the gravitational potential differences at two points along the satellite orbit (Flury 2016).

In this paper, we present the results of the MOCAST+ (MOnitoring mass variations by Cold Atom Sensors and Time measures study), based on the proposal of a future satellite gravimetry mission providing quantum measurements of the Earth gravity field and of its temporal variations, with the aim of assessing under which conditions it will be possible to improve the knowledge and understanding of several geophysical phenomena identified as priorities in the ESA Living Planet Program, in particular, those regarding the determination of the mass of glaciers and lakes and its variations over time. Other investigated key issues are the monitoring of tectonic processes in the marine environment, such as submarine volcanoes and mega-fault movements in subduction areas, the study of variations in the hydrological cycle and of the relative mass exchange between atmosphere, oceans, cryosphere and solid Earth. The MOCAST+ project relies on the heritage from the previous MOCASS (Mass Observation with Cold Atom Sensors in Space) study (Migliaccio et al. 2019; Reguzzoni et al. 2021, Pivetta et al. 2021) which was based on the proposal of an innovative space mission for the acquisition of observations of the Earth gravitational field with accuracy and resolution higher than those already achieved by successfully completed missions such as GRACE and GOCE. During the MOCASS study, the characteristics of a cold atom interferometer used as a gradiometer were analysed; in particular, the sensitivity of the interferometer was evaluated by considering an appropriate transfer function (Fourier transform of the sensitivity function) (Cheinet et al. 2008) and subsequent calculation of the power spectral density (PSD) of the instrument. From a geophysical point of view, the MOCASS project provided very promising results, as a mission of this type could have great potential for monitoring specific geophysical phenomena. However, several aspects of the proposed mission needed to be further investigated, including the interferometer design and the experimental activity aimed at verifying the level of maturity reached by the technology of cold atom interferometry in preparation for a future space mission, the possibility to design a different mission profile and the need to consider the accommodation of the instrument on the spacecraft.

Furthermore, the basic innovative idea of the MOCAST+ study was to propose an “enhanced” cold atom interferometer, capable not only of functioning as a gradiometer but also of providing time measurements, leading to the possibility of improving the estimation of gravity models even at very low harmonic degrees, with consequent advantages in the modelling of mass transport and its global variations.

Moreover, numerical simulations have been performed in order to assess the possible mission scenarios, considering the cases of a single pair satellite formation and of a “Bender” double pair satellite formation (Bender et al. 2008), with payload for each satellite represented by a single-axis atomic gradiometer and an atomic clock, plus an optical link between the two satellites of a pair. The direction of the gradiometer axis has been investigated, considering the xx, the yy and the zz directions (x = along-track, y = out-of-plane, z = radial), keeping in mind that in the case of a quantum gravimetry mission like MOCAST+ the goal is to improve the estimate of the low frequencies of the gravity model. For the end-to-end simulations, the quantities simulated along the orbit have been treated by applying the space-wise strategy for the data analysis and derivation of gravity field models (Migliaccio et al. 2004).

2 Mission objectives and observation requirements

The MOCAST+ study is based on the idea that quantum interferometers and quantum clocks can represent a powerful payload in a satellite gravimetry mission, enabling to perform measurements of the gravitational gradients and gravitational potential differences in space with high accuracy and stability.

For the study of glaciers and water masses, the contribution of a gravitational mission is fundamental, because the multispectral images provided for example by Landsat and Sentinel satellites can be used to determine the surface extension of glaciers and lakes, but not the mass. The temporal evolution from multispectral observations can also provide the loss in the surface extension of a lake, and, in combination with the exposed topography, the estimation of the volume of lost water, but there is no information on the variation of water mass in the subsoil. Using existing global or regional publicly available databases of glacier and lake extent (e.g., Zhang et al. 2021; Pfeffer et al. 2014) combined with estimates of the temporal variations of the water level/ice thickness (e.g., Gardner et al. 2013; Zhang et al. 2017), it is possible to simulate the gravitational signal at satellite altitude for selected areas of the Earth surface and accomplish the sensitivity analysis of the proposed mission. One of the goals of the study is to evaluate the improvement achievable by a QSG (Quantum Space Gravimetry) mission in retrieving the coverage of glaciers, compared to existing results obtained from GRACE and GRACE-FO. The first simulations of the glaciers in Tibet-Himalayas were carried out as part of the MOCASS project (Pivetta et al. 2021), an experience that has been the basis of the present study, which extended the simulations to the Andes. Furthermore, the simulations were also extended to the hydrosphere, in particular considering the variations of water masses of endorheic lakes (Zhang et al. 2013), (Zhang et al. 2017), as potentially this is an effect that interferes with the ice signal. The simulation of the glacio-hydrological signal is fulfilled in the Andean chain which has an extensive glacial area (> 25,000 km2) and is subjected to significant mass variations (Gardner et al. 2013).

The problem of determining water and glacial masses and their variations over time has been recognized through the IUGG resolution of 2015, to be improved through future gravity missions (Pail et al. 2015a, 2015b). Among the identified challenges, we consider here the following ones: C1: Regional and seasonal distribution of sea-ice mass, C2: Mass balance of grounded ice sheets, ice caps and glaciers, their relative contribution to sea level change, their current stability, and their current stability to climate change, C3: Individual sources of mass transport in the Earth system at various spatial and temporal scales.

Regarding the tectonic effects, the MOCAST+ simulations focus on gravity signals generated by fault movements, which are of particular interest for the underwater environment, due to its difficult accessibility. While in continental areas the observations of ground displacements by Global Navigation Satellite System (GNSS) and Differential Interferometric Synthetic Aperture Radar (DInSAR) techniques allow to identify the rate of crustal deformation, and thus, the tectonic movements related to the seismic cycle, in subduction areas this is not possible, as monitoring would require the observation of movements that typically occur on the ocean floor. At plate edges, it is essential to measure the rate of relative motion on both plate edges, and estimate the motion on the fault, in order to distinguish a creeping, aseismic fault from a blocked fault, which is accumulating elastic energy. The mega-faults, estimated from a fault length larger than 300 km, are candidates to be observable from a gravitational mission (Wiese et al. 2011; Cambiotti et al. 2020). To estimate the resolving power of the satellite for the part of the seismic cycle including the co-seismic to post-seismic signal, the simulation must be carried out using a stratified and viscoelastic half-space in which the fault is immersed (Wang et al. 2017). Another tectonic signal to be evaluated is the one linked to marine volcanism, which produces submarine volcanoes. The existing database of submarine volcanoes (Wessel et al. 2010) allows to simulate the signal produced at satellite level, both for static and growing volcanoes. A growing marine volcano presents a navigation hazard, and the gravimetric method is the only suitable one for observing growth before it reaches the ocean surface. Unfortunately, although the signal is very small, at least based on the mass variations which can be found in literature, it cannot be excluded that greater mass variations could be present, but this requires a dedicated in-depth study.

The theme of mega-earthquakes and marine volcanoes is part of the ESA Challenges: G1 (Physical processes associated with volcanoes, earthquakes, tsunamis, and landslides in order to better asses the natural hazards), G2 (Individual sources of mass transport in Earth system at various spatio-temporal scales).

3 Payload concept

In order to fulfil the objectives of the proposed mission, the payload must allow us to observe the Earth gravity field at a level of precision and spatial resolution that is higher than the GOCE, GRACE, and GRACE-FO missions. The new technologies inherent to the observation of the gravitational gradients with a cold atom gradiometer and of the gravitational potential through atomic clocks constitute an innovative payload that has not yet been exploited on board a satellite. Ground-based technology is in an advanced state, and first measurements of the absolute gravity field, vertical gravity gradient, and potential difference have been already performed with cold atom technology (Tino et al. 2013; Takamoto et al. 2020).

The payload under study in MOCAST+ represents an evolution of the system designed in MOCASS, with the addition of the time measurement functionality of an optical clock, which allows for the reconstruction of geopotential profiles. This is possible through the use of new techniques based on interferometry with alkaline-earth atoms, in particular, Strontium (Grotti 2018).

In fact, although in most cases atomic interferometry sensors are based on alkali atoms (mainly Rubidium, using two-photon Raman or Bragg transitions, see, e.g., Kasevich 1992, Altin et al. 2013), there is a growing interest in the development of atomic interferometers involving metastable states coupled through narrow single-photon transitions of alkaline-earth atoms, in particular Strontium (Yu 2011), (Graham 2013). An experimental test of this new interferometric scheme was carried out for the first time at the University of Florence using the clock transition at 698 nm of 88Sr of atoms in a gradiometric configuration (Hu 2017). Interferometers based on Bragg transitions on 88Sr have been also developed (Mazzoni et al. 2015), where the atomic wave packet lies in the ground state during the free evolution time. Unlike Rubidium, the 88Sr ground state is almost insensitive to external magnetic fields, a characteristic that may be crucial for field and space applications.

Optical atomic clocks are currently the most accurate instruments for measuring time (Bothwell et al. 2019). Unlike microwave atomic clocks, which use hyperfine transitions in the ground state of alkali atoms (Cs or Rb) as a frequency reference, optical clocks use inter-combination optical transitions in alkaline-earth atoms (Ca, Sr, Yb) as a frequency reference. The optical reference frequencies are larger by a factor of the order of 105 than the microwave reference frequencies, while the uncertainty on the frequency (the line width) in the Fourier limit is comparable in the two cases. As a consequence, the relative precision, given by the ratio between frequency and line width, is much higher in optical clocks. An optical clock is obtained by exploiting a sample of ultra-cold alkaline-earth atoms, trapped in the nodes of an optical lattice to eliminate the Doppler effect, photonic recoil and interatomic collisions (Lamb-Dicke regime); the atoms are interrogated by an ultra-stable laser, with a line width of less than 1 Hz, in resonance with an optical inter-combination transition of the atomic species.

Given that from the point of view of the necessary technologies and the clock hardware Rubidium and Strontium interferometers are equivalent, MOCAST+ will define an innovative scheme to optimize its integration. In this respect, the MOCAST+ project investigated how the proposed Strontium gravitational gradiometer integrated with the optical clock can be made operational in space.

Regarding the atom gradiometer, the same interferometer geometry proposed in the previous MOCASS study (Migliaccio et al. 2019) has been adopted also for the Strontium case. The main differences lie in the laser setup: while for Rubidium only few laser wavelengths are necessary (780 and 1560 nm) for Strontium manipulation several independent laser sources must be employed (461, 689, 707, 679 and 698 nm), leading to a further complexity of the instrument. However, it is important to highlight that the overall laser system can be shared with the optical clock.

In the case of the Strontium gradiometer, the Bragg transitions are realized with 461 nm radiation that produces a larger split of the atomic wave packet. Consequently, the shot noise limited sensitivity is improved by a factor 780/461 ≈ 1.7.

Unfortunately, unlike Rubidium, a high flux (106/s) of ultracold Strontium atoms has not been yet demonstrated. However, recent efforts in producing degenerate Strontium gases in a continuous way (Chen et al. 2022) and the realization of novel apparatus specifically designed for delta-kick cooling technique implementation (Abe et al. 2021) will make such kind of values attainable in the near future. In any case, the sensitivity gain is only virtual: sources of noise related to inertial effects (such as rotations and accelerations of the satellite, e.g., Lan et al. 2012; Roura 2017), which are instrument-independent, can easily surpass the shot noise level. During this study, the disturbances due to the satellite attitude have been included in the gradiometer PSD: it was found that angular rotations (\(\varOmega\)), through the centrifugal term, put a serious limitation in the measurement of the gravity gradient in the orbital plane. In Fig. 1, different Strontium gradiometer noises are reported depending on the level of the spacecraft attitude control, in terms of Amplitude Spectral Density (ASD, i.e. the square root of PSD). Spectra have been calculated by evaluating in the frequency domain the residual centrifugal term \({\varOmega }^{2}-{\varOmega }_{m}^{2}\), where \({\varOmega }_{m}\) is the applied rotation compensation (see Trimeche et al. 2019), under different conditions: without real-time compensation (\({\varOmega }_{m}\;=\;const)\) and with active, real-time compensation (\({\varOmega }_{m}\cong \varOmega\)) assuming various levels of accuracy. The difference between Rubidium and Strontium ASDs in the case of fully compensated angular rotations (i.e. shot noise only) is shown too. It is evident that the shot noise limit can be approached only with a real-time active compensation at the level of 0.1 nrad/s/sqrt(Hz). Whereas effective compensation actuators can be conceived for the atom interferometer, this requirement is well beyond the current state-of-the-art of gyroscopes (Dutta et al. 2016). Consequently, we conclude that gradiometer performances can be fully exploited only in the direction perpendicular to the orbit plane, where the centrifugal term is negligible. In the other directions, the degradation due to a limited compensation of the angular rotations must be taken into account.

Gradiometer noise ASD, for different levels of active rotation compensation: no correction for the satellite residual rotations is applied (blue line); real-time active compensation at the level of 1 nrad/s/sqrt(Hz) and 0.1 nrad/s/sqrt(Hz) (yellow and red lines, respectively); ideal case with full compensation (purple and green lines for the Rb and Sr atoms, respectively). Note that the ASD is independent from the atomic species, when angular rotations are not fully compensated, because this effect dominates the noise. Calculations have been performed starting from simulated satellite attitude data (see Sect. 4)

Regarding the sensitivity to magnetic fields, the 88Sr isotope presents a ground state which is virtually immune to them. This is an important feature since for the Rb gradiometer the need to magnetically shield the large interferometer volume is quite demanding in terms of payload mass. The robustness of the Sr gradiometer was experimentally investigated against magnetic gradients: we observed differential phase shifts which are about 103 time less that for a Rb gradiometer.

While the atom gradiometer payload concept was already developed in the MOCASS study (cf. Migliaccio et al. 2019), an effective scheme to retrieve the gravitational potential from clock measurements had to be conceived. Since the local gravitational potential modifies the clock transition frequency ω0, the clock fringes will present a phase shift \(\phi ={\delta \omega }_{0}T\) that can be linked to the gravity potential difference \(\varDelta U\) by

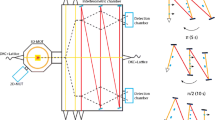

As a clock interrogation scheme, a Ramsey π/2 - π/2 scheme was chosen with an interrogation time \(T\) of 10 s and a number of atoms equal to 104. With these parameters, the expected shot noise level for a single clock is 3.7 × 10− 19/shot corresponding to a sensitivity on gravitational potential equal to 0.047 m2s− 2/shot. Since only gravitational potential differences can be detected, it is necessary to compare at least two clocks in two different locations, that in this case correspond to two satellites separated by a given baseline. The main issues to fully exploit the instrument sensitivity are constituted by phase noise of the local oscillator (clock laser) and baseline fluctuations. A strategy based on the use of three clocks in combination with an optical link in heterodyne has been identified to better mitigate them. The idea (represented in Fig. 2) is to perform a phase lock loop (PLL) between the clock laser M1 (source of the clock pulses) on board of the first satellite (Master) and the clock laser M2 on board the second satellite (Slave), and to follow a second phase lock loop between the clock laser M3 (a third clock on board the master satellite) and the clock laser M2. Through appropriate timing of the pulses, the phase noise of M1 + forward baseline noise can be copied on the Clock 2 output, while twice the baseline noise + the phase noise of M1 will be projected on Clock 3 output. The gravitational potential phase shift \({\Delta }\phi\) can be thus retrieved by the double differential measurement \(\Delta \phi = \Delta \phi _{{12}} - \Delta \phi _{{13}}\) where \({{\Delta }\phi }_{12}\) is the differential phase between Clock 2 on the Slave satellite and Clock 1 on Master satellite, while \({{\Delta }\phi }_{13}\) represents the differential shift between Clock 3 and Clock 1 on the Master satellite. In this way, it will be possible to reject both the laser phase noise and the baseline noise. The latter rejection, however, is not perfect as the possible fluctuations of the optical path due to the movement of the master satellite plus residual atmospheric turbulence during the time of the laser pulse are neglected. Such noise can be expressed using the following relation

where \(y\left(t\right)\) is the position of the master satellite, \({t}_{d}\) is the travel time of the light and \(k\) the light wave vector. To mitigate this problem, a heterodyne optical link is introduced using the local oscillators (LO) present on each satellite, enslaved to ultra-stable optical cavities. The goal is to use the stability in length of these cavities over short time periods (of the order of hundreds of microseconds) to correct the fast baseline fluctuations. For this purpose, two beat note signals (BN1 and BN2) are recorded and combined in the following way

where \({\phi }_{n}\) is the noise of the LO. Ultimately the last quantity determines how good the short-term fluctuations can be tracked.

In addition, a mathematical model that allows us to evaluate the residual effect of these fluctuations on the atomic optical clock signal has been developed, quantitatively identifying the correction band based on the propagation time and the noise of the ultra-stable cavity. It is also important to put in evidence that the beat notes are also fundamental for the real-time compensation of the Doppler frequency shift: indeed, the velocity difference between satellites can be huge (several m/s) with the risk of putting out of resonance the incoming pulse from the master satellite. The beat note signal provides a frequency correction to be added/subtracted to avoid such kind of condition.

The MOCAST+ clock measurement scheme. The first clock laser on the Master satellite (M1), while performing the clock interrogation sequence given by a pulse generator (IMP1), is sent towards the Slave satellite. In order to common-mode suppress the M1 phase noise, a phase lock loop (PLL1) with the clock laser on the Slave satellite (M2) is realized, while a second pulse generator (IMP2), synchronized with the previous one, generates the clock interrogation sequence. The scheme is repeated for the second optical clock on the Master satellite. In parallel, an optical link between local oscillators (LO1 and LO2) is employed to provide Doppler compensation and to monitor short-term baseline fluctuations

In short, based on the previously described clock configuration, the overall nominal measurement scheme is as follows:

-

two satellites, at a distance of about 100 km, carrying on board atomic samples interrogated by the same clock laser (noise of the local oscillator in common);

-

introduction of a third clock on the first satellite in order to cancel out the propagation noise;

-

atomic species: 87Sr (clock) in optical lattice, 88Sr (gradiometer) in free fall;

-

optical link based on remote phase locking;

-

introduction of a second optical link to track baseline length variations;

-

in order to avoid dead time in the instrumental cycle, an interleaved operation scheme can be a envisioned: during the Clock 1 free evolution time (10 s), another atomic sample (Clock 1′) is prepared in a separated chamber (not shown in Fig. 1) and then transferred in the clock cell by moving lattice technique;

-

afterwards, the atomic sample is adiabatically loaded in the clock optical lattice formed by retro-reflection from the flat rear surface of a corner cube and then prepared for the clock interrogation; in this way, the first π/2 pulse of Clock 1′ will correspond to the last clock pulse of Clock 1; finally, the detection phase can be performed by placing back the atoms in the preparation chamber.

Using the proposed scheme, we can achieve a sensitivity up to 0.21 m2s− 2/sqrt(Hz). It is important to remark that in principle the repetition rate (0.1 Hz) can be enhanced by interleaving the clock interrogation on more atomic samples; this can be done at the expense of a further complexity of the vacuum system, since in this case the number of independent atomic sources to be integrated in the clock cell must be proportionally increased. However, since there is no need of a large volume like in the gradiometer case, an appropriate instrument engineering could lead to a flux gain of up to a factor of ten.

As previously mentioned, the baseline length or, more specifically, the light propagation time between satellites constitutes a limit in the rejection/correction of the baseline fluctuation. In Fig. 3, the noise spectra for three different baseline lengths are shown. They are obtained using the phase noise of one of the most stable clock laser reported in the scientific literature up to date (Oelker et al. 2019). As expected, the longer is the baseline, the higher will be the residual noise.

We can also integrate the so obtained PSD in order to evaluate the maximum correction band. The noise limit is defined by the clock shot noise (σ = 10 mrad). We obtained a correction band of 175 Hz for 100 km which, as expected, is reduced down to 80 Hz for 300 km length. As a consequence, we can state that baseline length can be increased from the nominal value (100 km) to some hundreds of km, exploiting the robustness of the optical link (which allows for band correction in the tens of Hz range) and PPL for signal regeneration (no need of high optical power), without changing significantly the accuracy of the computed potential differences.

Finally, in Table 1 the error budget is reported in terms of technical requirements for both instruments. The most demanding ones are related to the optical clock: the black body radiation temperature and the magnetic fields stability. This one is particularly demanding, fortunately only in the time scale of interrogation time. Drifts on a longer time scale can be cancelled by switching between Zeeman states.

4 Mission configuration and orbit simulations

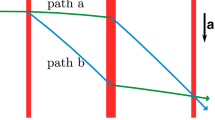

The proposal for a mission scenario starts from the identification of all ingredients participating to the design of a new mission with scientific objectives in terms of space and time resolution and, at the same time, impacting on the system design and associated costs and risks, mainly in terms of on-board resources and technological implications (Panet et al. 2013; Gruber et al. 2014). The instrumental design indicated that the optical clocks must be put in at least two different locations and then properly synchronized among them. This directed the solution towards pairs of satellites, as in the so-called NGGM (Next Generation Gravity Mission), see, e.g., Gruber et al. 2014. This configuration is represented in Fig. 4a. This is the simplest mission scenario with two satellites flying on moving along the same orbit, with different true anomalies, sampling the gravity field in the along-track direction only. Placed on a polar orbit, this formation is more sensitive to gravity field variations (and mass transport) in the North–South than in the East–West direction, which is reflected in anisotropic signal structures leading to the well-known North–South striations noted in the GRACE solutions (Cesare et al. 2016).

a) Single pair in-line formation, b) Bender configuration (Cesare et al. 2016)

Moreover, it is well understood that a single orbit (even a polar one, spanning all latitudes, as in GRACE) does not achieve a full and uniform coverage of the Earth's surface in short time periods. Consequently, it is not possible to generate frequent gravity filed solutions, and the resulting time series of the geopotential and of its related product (like the geoid) are affected by aliasing of high frequency phenomena (e.g., tides, atmosphere dynamics) onto lower frequencies due to under-sampling (Wiese et al. 2009; Visser et al. 2010). This is today one of the major limitations to the field reconstruction and not yet fully investigated. To mitigate the temporal aliasing issue, the most effective solution consists in increasing the number of satellite pairs (flying on different orbits) involved in the sampling of the gravity field, so as to decrease the time needed to achieve the global coverage of the Earth surface. The Bender constellation (Bender et al. 2008), see Fig. 4b, consisting of two in-line satellite pairs on circular orbits at polar and medium inclinations, is the simplest scenario enabling the simultaneous sampling of the gravity fields in North–South and East–West directions at higher temporal frequency, thus leading to a substantial reduction in spatial and temporal aliasing and of the anisotropic errors in the retrieval of gravity field models. Of course, the quality of the results can be further improved by enhancing this basic constellation with other satellite pairs (Pail et al. 2019) or considering more than two in-line satellites.

Orbits in the 350–550 km range are affected by air drag forces of a magnitude that depends on the surface areas and on the altitude and solar activity. The latter is difficult to predict; normally models parametrised on solar activity are used, and activity predictions are issued at different confidence levels. Assuming, for the sake of the argument, 1 m2 cross section, one can calculate forces ranging from several mN (350 km, high solar activity) to some µN (550 km, low solar activity), i.e. a dynamic range > 1000.

Taking into account the previously described mission configurations, the following aspects have been considered for the simulations:

-

implementation of the Bender constellation, that is two pairs of satellites in complementary orbits, polar and inclined, to improve Earth coverage and remove part of the aliasing as a source of error (Wiese et al. 2009);

-

implementation of a fine altitude control, as was practised with GOCE, to realize a controlled ground track pattern and place the data points on a fine regular grid without large gaps, constant in time;

-

low orbit altitude, as low as allowed by the spacecraft resources, to maximise the power of the gravity signal;

-

small satellite cross section always kept oriented towards the flight direction, to minimise the magnitude of the drag force on the satellite;

-

three-axis linear and angular active drag control (DFACS) in the measurement band, to avoid the satellite drag accelerations coupling into the gravity signal and extend mission duration;

-

electrostatic accelerometer of high quality, equal or better than that of GOCE, to support DFACS operations;

-

proportional thrusters to actuate the attitude and drag control without injecting noise;

-

stable temperature environment with very low thermal fluctuations in the measurement band.

The algorithms implemented in the simulator employed for MOCAST+ are the heritage of the Thales Alenia Space NGGM E2E Simulator, previously derived from GOCE, which can compute the overall force and torque acting on the spacecraft as the resultants of the environmental disturbances, i.e. the interaction between the spacecraft and the outside world, and of the control actions of the actuators. Elements included in the orbital simulator are reported in Tables 2 and 3.

Data have been printed at 20 s steps, with a simulated mission duration of 115 days. In fact, the simulation internal step was 0.05 s and the output data have been filtered before down-sampling to avoid aliasing. The first 20 days in the simulation output are to be considered as settling time (transient) of the controllers; hence, for the scientific performance analysis, only the subsequent data points are considered.

5 Simulated data analysis by the space-wise approach

Three types of mission scenarios have been considered during the study (see also Rossi et al. 2022):

-

nominal scenario: the starting scenario was a GRACE-like configuration with two in-line satellites; each satellite also accommodates an atomic clock to perform measurements of potential differences between two points.

-

Bender scenario: the nominal scenario was generalized by considering a Bender configuration (similar to the NGGM proposal), with the advantage of improving the spatial resolution and time resolution of the observations, as well as reducing the aliasing effects.

-

Bender scenario with six satellites: this more complex scenario with two in-line formations each with three satellites was also tested, implying an obvious improvement of the performances at the cost of a more expensive implementation.

The orbits of the Bender configuration were simulated at mean altitudes equal to 371 km and 347 km, corresponding to inclinations of 88° and 66°, respectively, starting with a nominal inter-satellite distance of 100 km for a time span of 1 month. The solution with a single pair of satellites was easily obtained by disregarding the inclined orbit, while the solution with two in-line formations of three satellites was obtained by adding a third satellite on the same orbits, by introducing a time shift but interpreting it as a spatial shift. This “trick” was also adopted for the simulations with increased inter-satellite distance.

As for the simulated signal, the static part was taken from the EGM2008 coefficients (Pavlis et al. 2012), consistently with the orbits, while the non-tidal time-variable part was derived from the 3-hourly updated ESA Earth System Model (ESM) (Dobslaw et al. 2015). The latter was used to investigate the effect of non-tidal temporal variations due to atmosphere, oceans, continental ice sheets, groundwater, and solid Earth on the estimated solution. Note that to perform a realistic simulation the de-aliasing model provided with the ESM model has been considered, along with its perturbations due to the uncertainty in the knowledge of atmosphere and ocean models (AOerr).

As for the simulated noise, this was randomly sampled from the given PSDs. In the case of potential differences, the PSD is white with a noise standard deviation of 0.2 m2/s2 for the atomic clock, considering this value as a limit under which the simulation would not be realistic enough. In the case of gravity gradients, the different noise PSDs presented in Sect. 3 (see Fig. 1) were considered, depending on the used atomic species and the level of the spacecraft attitude control.

The simulated data have been analysed according to the “space-wise” approach, which basically consists of a filter–gridding–harmonic analysis scheme that is repeated for several Monte Carlo samples extracted for the same simulated scenario to produce a sample estimate of the covariance matrix of the error of the spherical harmonic coefficients (or at least a sample estimate of their error variances).

The space-wise approach is based on the idea of estimating the spherical harmonic coefficients of the geopotential model by exploiting the spatial correlation of the Earth gravity field (Rummel et al. 1993; Kaula 1966). For this purpose, a collocation solution is applied (Sansò 1986), modelling the covariance of the signal as a function of the spatial distance and not of the temporal distance, as is done for the covariance of the noise. In this way, data that are close in space but far in time can be filtered together, thus overcoming the problems associated with the strong temporal correlation of observation noise. A single collocation solution, although theoretically possible, is not feasible from the computational point of view due to the amount of data to be analysed (Pail et al. 2011). The size of the system to be solved would in fact be equal to the number of processed data. For this reason, the space-wise approach is implemented as a multi-step procedure (Migliaccio et al. 2004; Reguzzoni and Tselfes 2009). In the following, the main steps of the procedure are described.

-

Application of a Wiener filter (Papoulis 1984; Reguzzoni 2003; Albertella et al. 2004) along orbit to reduce the temporal correlation of the measurement noise. A generalization of the MOCASS deconvolution filter (Migliaccio et al. 2019), also considering correlations between clock and gradiometer data, could be developed. Nevertheless, this solution was not implemented to leave these two types of observations as independent as possible before applying the gridding procedure.

-

Interpolation of the observations to obtain one or more data grids at the average altitude of the satellites, applying a collocation procedure to local data “clouds” (Migliaccio et al. 2007; Reguzzoni et al. 2014); since the observations of the potential contribute to the low harmonic degrees, while the gravitational gradients to the high degrees, it is necessary to optimize the size of the local areas, or perform several steps focusing firstly on the lower frequencies and then on the higher ones (such as in a multi-resolution analysis); more attention has to be paid to the modelling of the local covariance of the signal (which must be adapted to both the second radial derivative of the potential and the potential); finally, it is also necessary to model any spatial (in the signal) and temporal (in the noise) correlation between gradients and potential. This theoretically complete scheme has been then simplified by using the spherical harmonic coefficients estimated from the clock data only to reduce the filtered gravity gradients along the orbits and then applying the gridding procedure to the gradient residuals. In this way, there is no need of implementing a multi-resolution approach, or at least it might be required only to split medium and high frequencies, both mainly derived from the gradient information.

-

Spherical harmonic analysis of the data of the grid (or grids) by means of numerical integration (Colombo 1981) to obtain the estimates of the geopotential coefficients. If several grids are analysed, each of them related to a different functional of the gravity field, the obtained spherical harmonic coefficients are combined on the basis of the Monte Carlo error variances, neglecting information from error covariances. If more satellites are considered, each of them with a gradiometer on board, the combination of their data is instead performed at the stage of the gridding where all correlations are taken into account by collocation inside each local patch.

The fundamental space-wise approach scheme described above has been maintained for this study; however, since the aim here was mainly to recover low-resolution information from clock data, a global data processing was introduced and so the data analysis scheme was adapted as shown in Fig. 5.

The potential observations were processed by a Least Squares adjustment for the estimation of low harmonic degrees; afterwards, the gravitational gradient observations were reduced by the contribution given by the potential and then, processed to produce the spherical harmonic estimates. This strategy offers advantages from a computational point of view, but it neglects any correlation between the two different types of observations, which could reasonably lead to recovering some additional information on the Earth gravitational field.

Once the methodological scheme has been devised, the performance of the proposed sensors must be assessed by means of numerical simulations. The assessment in this case relied on a Monte Carlo approach, consisting of simulating signal and noise time series according to the given deterministic/stochastic models, applying the space-wise approach to each simulated sample, and then computing sample statistics (error covariance matrices, or at least error variances) of the estimated spherical harmonic coefficients. The pros and cons of different mission scenarios can be highlighted by comparing the corresponding spectral responses (e.g., in terms of error degree variances). Note that, in the spirit of the stochastic collocation approach, also the static part of the gravity field was considered as a random field, using the EGM2008 coefficients as mean values and applying a random perturbation from EGM2008 error degree variances to produce different signal samples. In other words, the noiseless signal of each Monte Carlo sample is different and takes into account the variability of the actual knowledge of the gravity field (Migliaccio et al. 2009). As for the time-variable part, this variability was not applied because there is no available information of the Earth System Model error. Therefore, it was taken as a purely deterministic signal.

5.1 Clock only solution

The first set of simulations investigated the performance of a clock-only system, without any gradiometer onboard the satellites. The impact of different mission scenarios was considered, also changing the sampling frequency of the clocks, orbit altitude and inter-satellite distance, and the results were compared to the performance of GRACE (computed by averaging the coefficient error variances of the monthly ITSG-GRACE 2018 solutions (Kvas et al. 2019) over a time span of about 14 years). By increasing to three, the number of in-line satellites along each orbit at the maximum allowable distance (i.e. about 1000 km each), a remarkable result was obtained (see Fig. 6): in fact, working at 1 Hz sampling rate the solution is basically equivalent to GRACE below degree 40 (above degree 40 the time variable signal power would be anyway quite low and therefore the gravity estimates less interesting).

Error degree variances of different 1-month clock-only solutions considering the Bender configuration with two or three satellites per orbit, changing the sampling frequency and the inter-satellite distance D; in case of three in-line satellites on the same orbit, the potential differences between the first and last satellite of the in-line formation are not measured

The fact that in this scenario there are no gradiometers on board means that the overall cost of the mission could be significantly reduced, especially if the physical size of the atomic clock can be further decreased in the future. Of course, the possibility of using longer in-line formations of satellites may further improve the quality of the solution.

5.2 Combined clock and gradiometer solution

The second set of simulations investigated the performances of adding a single-axis gradiometer as payload to the clock-only configuration, so the first question to answer regards the gradiometer direction for each satellite of the constellation. To this aim, different simulations were implemented, considering only one satellite (either on the polar or on the inclined orbit) with a single-axis gradiometer oriented in the x direction (along-track) or in the y direction (out-of-plane) or in the z direction (radial). In all the simulations, the gravity gradients were reduced exploiting a Bender clock-only solution based on two pairs of satellites with nominal 100 km satellite distance, 0.1 Hz sampling frequency (even if in the gridding procedure, only one satellite was processed).

Considering the results of these simulations, the following solutions combining clock and gradiometer information were considered: (i) for the Bender scenario with two pairs of satellites, Tzz gradiometers only in the case of optimal PSD (fully compensated angular rotations) and Tyy gradiometers only in the case of degraded PSD (that is actually equivalent to the optimal PSD because the degradation does not play any role in the y direction, at least as a first approximation, see Sect. 3); (ii) for the Bender scenario with two in-line formations of three satellites, the same two solutions proposed for the two pairs, adding also other solutions by orienting the gradiometers on board the three satellites along three different directions (namely Txx, Tyy and Tzz) in order to test whether the combination of different information can somehow contribute to improve the overall solution.

For the sake of completeness, the results with four satellites (i) are shown in Fig. 7. However, we will focus on the configuration with six satellites (ii), which of course performs better (see Fig. 8). It is quite clear that the gradient contribution allows a better estimate of the middle-high spherical harmonic degrees up to about degree 200 with one month of data, that is a quite remarkable results with respect to GRACE and GOCE performances. As for the lowest degrees, one could say that about below degree 10 the solution is mostly controlled by the clock information, while about above degree 30 the gradiometers only are playing a role. In comparison with GRACE, the achieved performances are superior at all degrees when considering the six-satellite formation, while the proposed solution appears weaker with respect to the expected outcomes of the NGGM/MAGIC mission as presented in Purkhauser et al. 2020). In other words, the proposed MOCAST+ quantum technology mission can contribute to improve the current knowledge of the gravity field and of its time variation, provided that the mission configuration is made more complex by considering 1 Hz (instead of 0.1 Hz) clock observations, longer inter-satellite distances (about 1000 km instead of 100 km) and three satellites for each of the two orbits in a Bender configuration. Without these “complications”, the mission profile would not really be competitive with GRACE at low-medium harmonic degrees.

Error degree variances for the Bender configuration with three satellites per orbit in the optimal and degraded gradiometer noise PSD scenarios (spherical harmonic degree up to 50) with an inter-satellite distance D is 1000 km; D is the distance between two consecutive satellites, meaning that the distance between the first and the third satellite along each orbit is about 2000 km

5.3 Commission errors at ground level

For the sake of completeness, the cumulative geoid undulation and gravity anomaly commission errors at ground level (in spherical approximation) were computed and are shown in Figs. 9 and 10 for some relevant scenarios, namely the best clock-only solution, the best clock + gradio solutions in the Bender configuration with two or three satellites per orbit, and a reasonable solution orienting the gradiometer arms in the three possible directions with degraded noise PSDs.

5.4 Assessment of the effect of introducing the time-variable signal into the simulation

To conclude the discussion regarding the simulations, a preliminary analysis was performed to assess the impact on the estimation accuracy of monthly solutions when also considering the time-variable gravity signal due to non-tidal mass variations generated by the variability in the atmosphere (A component), oceans (O component), continental ice sheets (I component), groundwater (H component), and solid Earth (S component). This under-sampled signal inevitably introduces aliasing effects into the solution because it includes variations with period shorter than 1 month, e.g., from hours to days in atmosphere and oceans.

In this preliminary analysis, the overall effect due to AOHIS was added to the static gravity field and reduced with the de-aliasing model (DEAL) provided along with the updated ESM. To properly account for model errors in the AO components of DEAL, this term was corrected by the AOerr term also provided with the updated ESM model, thus simulating a realistic scenario with a consistent dataset (Dobslaw et al. 2016). Moreover, we rely on the fact that the Bender configuration is more robust against these aliasing effects than the simpler GRACE-like configuration (Visser et al. 2010).

The result of the application of the space-wise approach to this dataset is presented in Fig. 11, which shows that the aliasing only affects spherical harmonic degrees lower than 20, as expected by looking at the degree variances of the non-tidal time-variable signal. On the other hand, the degradation is quite small, namely the error is at most doubled (compare solid and dashed lines in Fig. 11). This might be due to the processing strategy, although this explanation requires further investigations to be confirmed.

Assessment of non-tidal time-variable signal effect into the simulation: error degree variances for Bender configuration with two or three satellites per orbit in the case of optimal gradiometer noise PSD. Solid lines represent solutions including the non-tidal time-variable signal, whereas dashed lines represent solutions including the static gravity signal only. Purple line represents the power of the non-tidal time-variable signal

6 Impact on the detection of geophysical phenomena

The geophysical phenomena that have been evaluated are the contribution of thickness variation in glaciers and movements of water masses, the effect of earthquakes and the effect of submarine volcanoes. The simulations focus on orogenic areas, including the Tibetan plateau and surrounding orogens, and the Andean range, and include the definition of the masses and time variations from literature or databases, the calculation of the spectral signatures of the simulated phenomena, and their comparison with the spectral error curves of the MOCAST+ mission. The Randolph Glacier Inventory (RGI) is a comprehensive inventory of glacier contours and is currently a supplement to the Global Land Ice Measurements from Space Initiatives (GLIMS) multi-temporal database. While GLIMS is a multi-temporal database that includes several attributes, the RGI is intended as an instant representation of the world’s glaciers as they appeared at the beginning of the twenty-first century. In particular, the RGI database reports 15,908 glaciers covering a total surface of 29.4 × 103 km2 in the “Southern Andes” area number 17. Additional glaciers are found in the “Low Latitudes” area number 16, covering 4.1 × 103 km2 of frozen surface. For the Andes, thickness variations of selected glaciers are available from the WGMS (World Glacier Monitoring Service) database (WGMS 2021). We constructed the yearly mass change models by combining these two independent databases.

Regarding lakes, according to the work of Zhang et al. (2013) in the Tibetan plateau there are 4345 lakes covering a total area of 4.07 × 104 km2. Tibetan lakes are experiencing systematic level rising in recent years, as testified by Zhang et al. (2013) or by the recent DAHITI database (Schwatke et al. 2015), which reports the time-series of lake water level for the whole world. South America also hosts several large lakes, some of them natural, but there are also some extended artificial reservoirs employed for hydroelectric power production. Some of them can be quite large, with areal extension exceeding 8 × 103 km2; especially the artificial reservoirs are associated with large water level oscillations which can exceed 10 m in just one year. To construct the temporal mass changes models of the lakes, we used the outlines of lakes from two databases (Lehner and Döll 2004; Zhang et al. 2021) and the water level time-series reported in the DAHITI database (Schwatke et al. 2015).

As for South America, we additionally evaluated the gravity changes induced by sub-surface water storage variations calculated from the GLDAS (Global Land Data Assimilation System) model (Rodell et al. 2004). We chose three significant hydrologic basins, with different areal extents and water mass variations, i.e. the Amazon, Paraná and Orinoco ones.

Regarding earthquakes, we simulate the gravity signal of a multitude of earthquakes, testing the dependency of the gravity field spectrum on earthquake magnitude, fault mechanism, and size of the fault plane for a finite fault rupture model.

Regarding volcanos, we have identified the Hunga Tonga submarine volcano which erupted in 2014, creating a new island, which vanished with the unrest of 2022; we used the documentation from previous studies, to obtain an estimate of the mass change, and the corresponding gravity signal.

The sensitivity level of MOCAST+ to resolve the simulated phenomena was evaluated by comparison of the signal spectral curves with the mission error curves, both expressed as spherical harmonic degree variances. A signal is observable if its spectral signal curve, or part of it, is above the noise curve. This detectability criterion is independent of the ability to isolate the given signal from the observation, a problem which depends on the knowledge of the background models due to atmosphere, hydrology, ocean mass variations, and known past earthquakes. Since the latter is a rapidly improving topic, we think it is justified to consider the relation between signal and satellite observation noise curves. A spatio-localization strategy was applied for the spectral analysis of the signals as defined by Wieczorek and Simons (2005; 2007). The goal of this method is to overcome the problematic assessment of the signal of localized phenomena using global spherical harmonics (Sneeuw 2000). In addition to that, localized analysis allows to involve portions of global models in the forward gravity modelling step, resulting in substantial reduction in computational resources. It also allows to isolate geographical areas of interest, simulating a realistic strategy of geophysical modelling and isolation from other contributions that cannot be otherwise reduced. As we show below, even the signal due to a single seamount eruption (its extent being confined within a radius of less than 1 km) has most of its power at wavelengths much larger than the body size.

The size of some of the modelled bodies can be smaller than the wavelengths at which their gravity effect may be sensed by the satellite-borne measurements under assessment. Also, if gravity forward modelling was to be carried out in the spherical harmonic domain, computational criticalities would arise due to the large maximum degree requirements. To avoid this issue, forward modelling of local phenomena was carried out in the spatial domain, following discretization of the modelled masses in elementary bodies (e.g., spherical tesseroids, Uieda et al. 2016).

6.1 Glaciers, lakes and surface hydrology in South America and Tibet

The present section discusses the sensitivity of MOCAST+ to mass changes in lakes and glaciers in South America and Tibet, and the improvement in comparison with GRACE in detecting smaller basins and glaciers, and smaller lake level changes. The improvement in detecting mass change rates also translates in the detection of possible change rate accelerations, an aspect important in detecting nonlinear mass changes due to climate change. Here, a few representative lakes and glaciers are presented, for a more exhaustive selection of examples we refer to Pivetta et al. (2022). The annual gravity field change rates were modelled for selected glacierized areas of South America, for the Tibetan lakes, for the subsurface hydrologic mass variation in major South American basins, and the change rates induced by two artificial hydrologic reservoirs in South America. The gravity change rates have been calculated starting from the mass changes estimated for each of the glaciers, lakes, basins or reservoirs, discretizing the mass changes with tesseroids (Uieda et al. 2016), and then finally computing the gravity field change rates. Figure 12 shows four examples of the simulated gravity fields, of which three are related to South America and one to the Tibetan lakes.

Generally, we see that the largest variations in terms of both mass change and gravity anomaly are due to subsurface hydrology. Long period trends for this component can be quite extended in terms of spatial scale. Apart from the Amazon basin, we considered two additional hydrologic basins which are smaller in terms of both areal extension and involved mass changes during the hydrologic year. The two basins are the Paraná basin and the Orinoco basin. The subsurface hydrology, as it is evident, involves continuous and widespread mass variations occurring over large areas, while the other considered phenomena, such as mass variations of glaciers or lakes, are generally localized or occurring in clusters. For instance, the endorheic lakes of Tibet are present diffusely in the whole plateau and most of them are showing coherent long-term mass increase (Zhang et al. 2017). The origin of this mass variation can be ascribed to a combination of increase in the average yearly precipitation and to the deglaciation of the peripheral glaciers, which drain their waters into the plateau (Zhang et al. 2017). This coherent mass increase in the lakes (cumulatively larger than about 8 Gt/yr) causes a large positive gravity anomaly; via the superposition effect, the anomaly extends over a very large area which is circumscribed by a spherical cap with radius of about 15°. The amplitude of the gravity signal is about 0.0003 mGal/yr. The effect of the single lakes is obviously smaller; one of the largest signals is due to the Qinghai which peaks up to about 0.0001 mGal/yr.

Long-term simulated gravity fields in South America (SA) and Tibetan plateau; in all plots, the circles bound the regions included for the spectral analysis; the deformation of the circles is due to the map projection. a) yearly gravity change rate due to glaciers thickness variation; b) yearly gravity change rate due to rise in water level of lakes in Tibet, black areas lakes according to Zhang et al. (2021); c) yearly rate according to GLDAS model for the Amazon basin; the other basins outlines considered in the study are reported in grey. d) long period gravity rates for an artificial reservoir (Sobradinho) and the Titicaca Lake; 250 km calculation height

Important localized mass variations due to deglaciation occur in Patagonia where a cluster of glaciers is experiencing a coherent and systematic mass loss (Wouters et al. 2019) causing remarkable yearly rate anomalies of 0.001 mGal/yr. The cluster of glaciers loses about 20–30 Gt/yr mostly localized inside an area of radius equal to 8°.

The lakes and artificial reservoirs considered for South America do not present coherent mass variations as those observed in Tibet. Hence, here we mostly observe the effect of a single lake/reservoir. Despite being small in terms of areal extension, some artificial reservoirs in South America experience very large seasonal mass variations (> 10 Gt) and pluri-annual trends of few Gt/yr. Seasonal gravity signals can peak up to 0.001 mGal, but over smaller areas with respect to the Patagonian glaciers. Long period trends are smaller in amplitude by over an order of magnitude.

6.1.1 Earthquake modelling

The challenge of an improved gravity mission is to detect smaller earthquakes than presently possible. The importance relies particularly in those fault movements that are difficult to be observed with terrestrial observations, as faults in oceanic areas. Our objective is to define the spectral signal curves of characteristic earthquakes, representative of different fault mechanisms and magnitudes, so as to give feedback to the amount of improved sensitivity of the MOCAST+ mission, depending on different mission and payload characteristics. The GRACE/GRACE-FO, and MOCASS spectral error curves are taken as comparison with evaluate relative performance. An ensemble of earthquake gravity signals was computed, through numerical forward modelling of the gravity effect of a set of reference synthetic earthquakes. By comparing these products with the error estimates of the satellite payloads and mission designs, it was then assessed how different earthquake source parameters affect detectability. We show that the involved energy (i.e. the seismic moment) is in a direct relationship with positive detectability, a trivial relationship in a first-order approximation. However, the investigation of varying earthquake source mechanism and depth in the framework of a double-couple model shows how the same energy release results in significantly different power distribution in the gravity signal spectra. This needs to be considered in the detectability assessment of an earthquake.

The QSSPSTATIC modelling code by Wang et al. (2017) was adopted. It is based on the QSSP code, which the authors describe as an “all-in-one” tool for the efficient computation of synthetic seismograms on a radially layered spherical Earth model. QSSPSTATIC, a stand-alone extension, allows modelling of longer-term deformation, including viscoelastic post-seismic deformation. With that code, they implemented a hybrid approach, overcoming the specific issues affecting both analytical and numerical strategies of degeneration and consequent loss-of-precision. The core of their hybrid approach lies in the separate solution of long-wavelength spheroidal modes, where the gravity effect on the deformation is significant, using numerical integration, while an analytical integration method using the Haskell propagator (Wang et al. 2017) is used for solving the small-wavelength spheroidal modes and the complete toroidal modes. The underlying theory stems from the analytical solutions by Takeuchi and Saito (1972). An example of the coseismic gravity field is shown in Fig. 13.

Modelled co-seismic gravity field change due to an earthquake source: the September 16, 2015, M 8.3 earthquake, occurred south of Illapel, Chile, modelled as a centroid point source in QSSPSTATIC. The signal is shown in the 0 to 70 spherical harmonic degree range. Data from USGS (https://earthquake.usgs.gov/earthquakes/eventpage/us20003k7a/finite-fault)

The fault is modelled as an extended fault plane, discretized with single point sources. The fault mechanism produces different gravity signals, in both amplitude and spectral characteristics, for the same magnitude. Therefore, when defining the threshold magnitude of a satellite mission, the depth and fault plane mechanism, and fault length should be mentioned as well, to give an accurate sensitivity estimate. Due to the viscosity of the mantle, an earthquake generates both a static co-seismic gravity change and a post-seismic gravity change which lasts for years and typically reduces the co-seismic signal due to viscous relaxation. A useful property is the scaling of the gravity signal by the seismic moment (unit Nm), which is a linear scaling property. The linearity originates from the linear relationship between the body forces arising from a given moment tensor and the moment tensor itself, in the case of the point source approximation. The scaling law applies to the degree variances of the gravity field, which scale proportionally to the square of the ratio of the seismic moments of two earthquakes of homogeneous mechanism and depth. This scaling allows to trivially obtain the degree variance spectra of earthquakes with different seismic moments, by modelling only one reference earthquake. The equivalent point source of the finite fault is obtained from a slip-weighted fault plane centroid, with a slip weighted rake angle, and the published seismic moment.

6.1.2 MOCAST+ improved detection of mass variation rates in lakes and glaciers

The performance of the proposed MOCAST+ mission was assessed by comparing localized spectra of the yearly gravity rates with the error curves for the mission after 1 year of observations, see Fig. 14a, where the GRACE error curve is also shown for comparison, still using the average errors of all the monthly GRACE geopotential solutions ITSG-GRACE 2018. Regarding the MOCAST+ curves, four different possible scenarios were considered (see Table 4), properly rescaling the curves reported in Sect. 5 to account for the longer time span of the solutions. Some phenomena can already be seen clearly by GRACE observations, while other signals can only be resolved by the more performant MOCAST+ mission.

We consider a phenomenon to be detectable, if part of the signal curve is above the noise curve. In general, the higher degrees of the signal fall below the noise curve, limiting the highest degree resolvable by the mission, and therefore, the spatial resolution of the phenomenon. For the phenomena which can already be detected by GRACE, the improvement of MOCAST+ is therefore defined in terms of the higher spatial resolution achievable. The signal curves give the yearly gravity change rate, or the gravity change after one year. We consider integrating the time interval of the change rate over k years, to enhance the signal amplitude, and determine whether a given phenomenon can be observed over a longer time interval of k years, in the case when it is too small to be observed in one year. Another way to discuss the relative performance of the MOCAST+ mission against GRACE is to scale the given signal curve by a factor b, to determine which theoretical increase in change rate could be detected, if the actual signal is below the noise curve. Considering the increase in spatial resolution by MOCAST+, the Patagonia deglaciation (light grey curve with stars in Fig. 14a) is observed at a higher spatial resolution compared to GRACE, and this increase in the resolution is relevant in achieving observation of the anomaly up to almost degree and order 55 in the spherical harmonic expansion for the best scenario, while GRACE stops at degree 32. This corresponds to a leap ahead in spatial resolution from about 600 km to 400 km in terms of half-wavelength. Figure 14b and c shows this improvement for the Patagonian glaciers in the spatial domain. Similar considerations can be made for the Amazon basin, where the spectra corresponding to the two blue anomalies seen in Fig. 12c are reported in green solid and green decorated lines. Compared to the glaciers, the improvement is less marked but still appreciable, the minimum observable half wavelength being reduced from about 720 to 620 km.

a) Spectral analysis of the long period geophysical phenomena considered in comparison with MOCAST+ error curves, GRACE and NGGM. b) and c) improvement in spatial resolution of MOCAST+ mission in depicting the mass variations in Patagonia. All spectra and gravity fields are computed at 250 km height

The lower error curve of MOCAST+ with respect to GRACE also implies that smaller yearly mass change rates occurring in both the Amazon basin and the Patagonian glaciers can be monitored. In fact, if for instance we scale the signal spectra of the Patagonian glaciers by a factor b = 1/10, we reach the noise level of MOCAST+, while with just a factor b = 1/2 we approach the GRACE error curve. This means that with a mission like MOCAST+ we can monitor annual deglaciation rates in Patagonia which are less than half those presently resolvable by GRACE.

The contribution of the MOCAST+ mission design is more evident when considering smaller amplitude signals such as the Paraná basin, which is less than than half the size of the Amazon basin in terms of areal extent, or the Tibetan lakes, which cover a quite extended area but present smaller mass rates and corresponding gravity changes than those observed in the Amazon. Here, the signal spectra are above the MOCAST+ noise level, implying that these signals can be detected just after one year of observations, while in the case of GRACE at least two years of observations are necessary, which corresponds to multiplying the signal curves by a factor two, and the degree variances by a factor four (not shown in Fig. 14a). Furthermore, considering two years of data integration, MOCAST+ is capable of imaging the Tibetan lakes anomaly pattern at higher resolution compared to GRACE, because the intersection of the signal and noise curves shifts to higher degrees; the improvement is not outstanding but anyway a less blurred picture of the water mass variation is obtained. Considering a two-year solution, also the long-term variations of the artificial reservoir “Sobradinho” in South America, at the limit of resolution in the yearly rate, would become detectable by MOCAST+, while GRACE could not offer a sufficient resolution to depict the anomaly, as the signal would remain below its noise level. Long-term variations in large natural lakes as the Titicaca (> 8,000 km2) can only be seen after five years of observations of MOCAST+.

Finally, also the MOCAST+ sensitivity to seasonal signals in monitoring both sub-surface hydrology variations (GLDAS) and seasonal lakes mass variations was evaluated (Fig. 15). The detectability threshold was assessed by comparing monthly error curves with the geophysical signal curve corresponding to the month when the seasonal hydrologic component is maximum. The contribution of MOCAST+ here is again the possibility of recovering the anomalies at higher spatial resolution. The improvement is, as expected, more pronounced for the smaller basins, such as the Orinoco and Paraná, with respect to the Amazon: for instance, the Orinoco is seen up degree and order 53 with MOCAST+, while GRACE stops at degree 35.

Apart from the increase in resolution, MOCAST+ would be able to detect smaller amplitude seasonal signals with respect to GRACE. For instance, we can lower the Orinoco signal curve by two orders of magnitude to reach the noise level of MOCAST+ while for GRACE the factor is “only” 10. Since the seasonal mass variation of the Orinoco is about 80 Gt, MOCAST+ would be able to monitor a basin with similar area extension but with a lower seasonal mass variation, down to about 10 Gt. For GRACE the threshold is higher, so that a basin with seasonal mass variations of about 25 Gt is observable.

6.2 MOCAST+ improved detection of earthquakes

The discussion of the MOCAST+ sensitivity to an earthquake must take into account not only the earthquake magnitude, but also its fault mechanism, the fault inclination, and to minor importance the fault depth. We have tested these aspects with a synthetic earthquake fault, for which the parameters as magnitude, fault inclination, fault slip, are varied. The localized spectrum of the earthquake field was compared to the spectral error curves of MOCAST+ and GRACE. Signal and error estimates were compared on consistent timescales: one month of cumulative co-seismic and post-seismic signal against the errors of a geopotential solution obtained from one month of simulated data (in the case of MOCAST+) or the average errors of all the monthly GRACE geopotential solutions.

Ranging from a thrust to a strike slip fault, the greatest signal is obtained for the thrust fault. The strike-slip fault on a dipping fault plane gives a slightly smaller signal, and the vertical strike slip fault results in the smallest signal among the three, all with the same earthquake magnitude. The improvement of the MOCAST+ error curve with respect to GRACE from degrees 15 onwards is beneficial in retrieving the earthquake signal. Considering a magnitude M 8.5 but with different fault mechanisms leads to earthquakes which are not visible with GRACE after a 1-month observation period, whereas the pure thrust and the dipping strike slip mechanisms would be visible by MOCAST+.

The earthquake magnitude is clearly an important parameter controlling the detectability of an event and acts as a scaling parameter acting over the entire spectrum. Considering a point source representing a thrust earthquake, it can be seen how the earthquake spectra are rapidly pushed downwards for decreasing earthquake magnitude (Fig. 16), with a magnitude M 8.6 being just below the limit of detectability by GRACE, magnitude M 8.5 to M 8.4 being only detectable by MOCAST+, and magnitude M 8.3 at the limit of being observed. Besides, considering earthquakes of large magnitudes, above Mw = 8.7, which can be detected by both missions, the covered interval of detectable spherical harmonic degrees is much wider in the MOCAST+ case.

Degree variance spectra of the 1-month gravity change (expressed as potential \(T\)) for the same earthquake focal mechanism, at different moment magnitudes. The error degree variances for MOCAST+ and GRACE are superimposed. Signal and errors are all expressed at ground level. The signal was localized with a spherical cap window with radius = 8°, centered at the earthquake epicenter

Detectability of example earthquakes with respect to one of the MOCAST+ configurations (“MOCAST+ Bender 2 couples nominal” in Table 4). The lines show the field of magnitude and resolution combinations that result in a SNR greater than one. In panels a, b, and c, the sensitivity to three source parameters (dip, depth, and strike-slip components, respectively) is shown. a) 30 km deep thrust, dip varies; b) 15° dipping thrust, depth varies; c) 30 km deep thrust and strike slip sources (dipping and vertical). d) presents the effect of the observation timespan, where an earthquake signal reduction due to post-seismic relaxation occurs concurrently with improvement in error curves, for a 30 km deep, 22.5° dipping thrust

Figure 17 presents the effect of source parameters and of the covered post-seismic timespan on the detectability of an earthquake. The signal-to-noise ratio (SNR) is computed according to the definition adopted by Han and Ditmar (2008), as the ratio between the degree-RMS spectra of the signals and the error-degree-RMS spectra of the mission error estimates. The depth of the epicentre (panel b) is a parameter, which has a nonlinear effect, being influenced by the depth of the density variation in the crust and mantle, and the changing rheology with depth.

It was found that, if the earthquake depth is below the model crustal thickness (35 km), the curves increase the gravity signal with increasing depth. The same is found for the superficial crustal earthquakes, for depths above the Moho, but a sudden decrease in the gravity signal is found for depths in the lower crust, as the 30 km depth curve shows.