Abstract

Some test amplification tools extend a manually created test suite with additional test cases to increase code coverage. The technique is effective, in the sense that it suggests strong and understandable test cases that are generally adopted by software engineers. Unfortunately, the current state of the art for test amplification relies heavily on program analysis techniques which benefit greatly from the explicit type declarations present in statically typed languages. In dynamically typed languages, such type declarations are not available, and as a consequence test amplification has yet to find its way to programming languages like Smalltalk, Python, Ruby and Javascript. We propose to exploit profiling information, readily obtainable by executing the associated test suite, to infer the type information needed to create special test inputs with corresponding assertions. We evaluated this approach on 52 selected test classes from 13 mature projects in the Pharo ecosystem containing approximately 400 test methods. We observe an improvement in killing new mutants and in mutation coverage in at least 28 out of 52 test classes (≈ 53%). Moreover, these generated tests are understandable by humans: 8 out of 11 submitted pull requests were merged into the main code base (≈ 72%). These results are comparable to the state of the art, hence we conclude that test amplification is feasible for dynamically typed languages.


1 Introduction

Modern software projects contain a considerable amount of hand-written tests which ensure that the code does not regress as the system under test evolves. Indeed, several researchers reported that test code is sometimes larger than the production code under test (Daniel et al. 2009; Tillmann and Schulte 2006; Zaidman et al. 2011). More recently, during a large-scale attempt to assess the quality of test code, Athanasiou et al. reported six systems where test code makes up more than 50% of the complete codebase (Athanasiou et al. 2014). Moreover, Stack Overflow posts mention that test-to-code ratios between 3:1 and 2:1 are quite common (Agibalov 2015).

Test amplification is a field of research which exploits the presence of these manually written tests to strengthen existing test suites (Danglot et al. 2019a). The main motivation for test amplification is the observation that manually written test cases mainly exercise the default scenarios and seldom cover corner cases. Nevertheless, experience has shown that strong test suites must cover those corner cases in order to effectively reveal failures (Li and Offutt 2016). Test amplification therefore automatically transforms test cases in order to exercise the boundary conditions of the system under test.

Danglot et al. conducted a literature survey on test amplification, identifying a range of papers that take an existing test suite as the seed for generating additional tests (Fraser and Arcuri 2012; Rojas et al. 2016; Yoo and Harman 2012). This culminated in a tool named DSpot, which represents the state of the art in the field (Baudry et al. 2015; Danglot et al. 2019b). In these papers, the authors demonstrate that DSpot is effective, in the sense that the tool is able to automatically improve 26 test classes (out of 40) by triggering new behaviors and adding valuable assertions. Moreover, test cases generated with DSpot are well perceived by practitioners: 13 (out of 19) pull requests with amplified tests have been incorporated into the main branch of existing open source projects (Danglot et al. 2019b).

Unfortunately, the current state of the art for test amplification relies heavily on program analysis techniques which benefit greatly from the explicit type declarations present in statically typed languages. Not surprisingly, previous research has been confined to statically typed programming languages such as Java, C, C++, C# and Eiffel (Danglot et al. 2019a). In dynamically typed languages, performing static analysis is difficult since the source code does not include type annotations for variables. As a consequence, test amplification has yet to find its way to dynamically typed programming languages such as Smalltalk, Python, Ruby and Javascript.

In this paper, we demonstrate that test amplification is feasible for dynamically typed languages by exploiting profiling information readily available from executing the test suite. As a proof of concept, we present Small-Amp, which amplifies test cases for the dynamically typed language Pharo (Black et al. 2010; Bergel et al. 2013), a variant of Smalltalk (Goldberg and Robson 1983). We argue that Pharo is a good vehicle for such a feasibility study because it is purely object-oriented and comes with a powerful program analysis infrastructure based on metalinks (Costiou et al. 2020). Pharo uses a minimal computation model based on objects and message passing, which reduces the risk of biases caused by particular or unusual language constructs. Moreover, Pharo has a growing and active community with several open source projects welcoming pull requests from outsiders. We replicate the experimental set-up of DSpot (Danglot et al. 2019b), including a quantitative and a qualitative analysis of the improved test suite.

This paper is an extension of a previous paper presenting the proof-of-concept to the Pharo community (Abdi et al. 2019b). As such, we make the following contributions:

- Small-Amp, a test amplification algorithm and tool, implemented in Pharo Smalltalk. To the best of our knowledge, this is the first test amplification tool for a dynamically typed language.

- A demonstration of dynamic type profiling as a substitute for type declarations within a system under test.

- A quantitative evaluation of our test amplification for the Pharo dynamic programming language on 13 mature projects with good testing and maintenance practices. We repeated the experiment three times. For 28 out of 52 test classes, we see an improvement in killing new mutants and consequently in the mutation score. Our evaluation shows that the generated test methods are focused (i.e. they do not overwhelm the developer) and that all amplification steps are necessary to obtain strong and understandable tests.

- A qualitative evaluation of our approach by submitting pull requests containing amplified tests to 11 active projects. 8 of them (≈ 72%) were accepted and merged into the main branch.

- We contribute to open science by releasing our tool as an open-source package under the MIT license (https://github.com/mabdi/small-amp). The experimental data is publicly available as a replication package (https://github.com/mabdi/SmallAmp-evaluations).

The remainder of this paper is organised as follows. Section 2 provides the necessary background information on test amplification and the Pharo ecosystem. Sections 3 and 4 explain the inner workings of Small-Amp, including the use of dynamic profiling as a substitute for static type information. Section 5 discusses the quantitative and qualitative evaluation performed on 13 mature open source projects, a replication of what is reported by Danglot et al. (2019b). Section 6 enumerates the threats to validity, Section 7 discusses related work and Section 8 lists limitations and future work. Section 9 summarizes our contributions and concludes the paper.

2 Background

2.1 Test amplification

In their survey paper, Danglot et al. define test amplification as follows:

Test amplification consists of exploiting the knowledge of a large number of test cases, in which developers embed meaningful input data and expected properties in the form of oracles, in order to enhance these manually written tests with respect to an engineering goal (e.g., improve coverage of changes or increase the accuracy of fault localization). Danglot et al. (2019a)

Test amplification is not a replacement for other test generation techniques and should be considered a complementary solution. The main difference between test generation and test amplification is the use of an existing test suite. Most work on test generation accepts only the program under test or a formal specification as input and ignores the original test suite written by an expert.

A typical test amplification tool is based on two complementary steps.

(i) Input amplification. The existing test code is altered in order to force previously untested paths. This involves changing the set-up of the object under test and providing parameters that represent boundary conditions. Additional calls to state-changing methods of the public interface are injected as well.

(ii) Assertion amplification. Extra assert statements are added to verify the expected output of the previously untested path. The system under test is then used as an oracle: while executing the test, the algorithm inspects the state of the object under test and asserts the corresponding values.

The input amplification step is typically governed by a series of amplification operators. These operators represent syntactic changes to the test code that are likely to force new paths in the system under test. To verify that this is indeed the case, the amplification tool compares the (mutation) coverage before and after applying the amplification operator. It is beyond the scope of this paper to explain the details of mutation coverage; we refer the interested reader to the survey by Papadakis et al. (2019).

We illustrate the input and assertion amplification steps via an example based on SmallBank (Footnote 1) and its test class SmallBankTest in Listing 1. In this example, testWithdraw is the original test method, while testWithdrawAll and testWithdrawOnZero are two new test methods derived from it. In testWithdrawAll, input amplification has replaced the literal value 100 with 30 (line 19), and the assertion amplification step has regenerated the assertions on the balance (line 20) and added a missing assertion on the status of the operation (line 22). The testWithdrawAll test method thus verifies the boundary condition of withdrawing an amount equal to the balance. In testWithdrawOnZero, on the other hand, an input amplifier has removed the call to the deposit: method in line 11. This test method now verifies the boundary condition that withdrawing an amount greater than zero when the balance is zero is not allowed, as shown by the extra assertions in lines 29 and 30.
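To make the two steps concrete, here is a minimal Python analogue of the SmallBank example. Listing 1 itself is Pharo code and is not reproduced here, so the class body and the exact values are assumptions; in particular, `withdraw` returning a success flag mirrors the "status of the operation" assertion described above.

```python
class SmallBank:
    """Hypothetical Python stand-in for the Pharo SmallBank class."""

    def __init__(self):
        self.balance = 0

    def deposit(self, amount):
        self.balance += amount

    def withdraw(self, amount):
        # Guard mirrors "balance >= amount" as described for withdraw:.
        if self.balance >= amount:
            self.balance -= amount
            return True
        return False


def test_withdraw():
    # Original, hand-written test: exercises the default scenario only.
    bank = SmallBank()
    bank.deposit(100)
    bank.withdraw(30)
    assert bank.balance == 70


def test_withdraw_all():
    # Input amplification: literal 100 replaced by 30.
    # Assertion amplification: balance assertion regenerated, status assertion added.
    bank = SmallBank()
    bank.deposit(30)
    status = bank.withdraw(30)
    assert bank.balance == 0
    assert status is True


def test_withdraw_on_zero():
    # Input amplification: the deposit call was removed.
    bank = SmallBank()
    status = bank.withdraw(30)
    assert bank.balance == 0
    assert status is False
```

Both derived tests pass against the current implementation, which is exactly what regression-style assertion amplification produces.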

2.2 Pharo

Pharo [http://www.pharo.org/] is a pure object-oriented language based on Smalltalk (Black et al. 2010; Bergel et al. 2013). It is dynamically typed: there are no static type declarations for variables, parameters or return values; instead, at run time the environment ensures that every object has a type and responds only to the messages that are part of its interface. It includes a run-time engine and an integrated development environment with code browsers and live debugging. Pharo users work in a live environment called the Pharo image, where writing code and executing it are seamlessly tied together.

Invoking a method in Pharo is called message sending. As a pure object-oriented language, Pharo achieves every action by sending messages to objects. There are no predefined operators, like + or -, nor control structures like if or while. Instead, a Pharo program sends the message #+ or #- to a number object, an #ifTrue:ifFalse: message to a boolean object, or the message #whileTrue: to a block object that returns a boolean. Any message can be sent to any object. If the message is not part of the object's interface, the system does not report a compile-time error; instead, it raises a MessageNotUnderstood exception at run time. Thus, when transforming test code, a test amplification tool must take care not to create faulty test code.

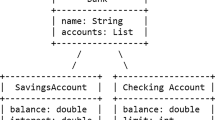

Like Java, all ordinary classes inherit from the class Object and every class can add instance variables and methods. Unlike Java, all instance variables are private and all methods are public. Pharo encourages programmers to write short methods with intention revealing names so that the code becomes self explanatory.

Protocols

Pharo, and Smalltalk in general, features protocols to organize the methods defined in classes. A protocol is a tag attached to a method and acts as metadata provided by the integrated development environment. As such, classifying a method under a particular protocol has no impact on its behavior.

Since all instance variables are private in Pharo, accessor methods must be provided to make them accessible to the outside world; these are typically grouped into the protocol accessing. In a similar vein, all methods used to set the content of an object upon initialization are grouped into the protocol instance creation. Long-lived classes that evolve over time use the deprecated protocol to signal that these methods will be removed from the public interface in the near future. And while all methods are public, Pharo uses the protocol private to mark methods which are not expected to be used from the outside. However, as mentioned earlier, protocols are only tags, and Pharo does not prevent access to a private method.

The concepts most similar to protocols in other languages are naming conventions, annotations and access modifiers. For instance, the Java equivalent of methods in the accessing protocol is a naming convention such as setVar() and getVar(). In a similar vein, Java uses the @Deprecated annotation to identify deprecated methods. The Python equivalent of the private protocol is the convention of prefixing the names of private methods with an underscore, whereas Java uses access modifiers for this purpose.
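Because a protocol is only a tag, it can be mimicked in Python with a decorator that attaches metadata to a function without altering its behavior. The `protocol` decorator and the `methods_in` helper below are hypothetical illustrations, not part of Pharo or of any Python library.

```python
def protocol(name):
    """Attach a Pharo-style protocol tag to a function (metadata only)."""
    def tag(func):
        func.protocol = name
        return func
    return tag


def methods_in(cls, name):
    """Return the sorted names of the methods of cls tagged with protocol `name`."""
    return sorted(
        attr for attr, value in vars(cls).items()
        if callable(value) and getattr(value, "protocol", None) == name
    )


class Point:
    def __init__(self, x):
        self._x = x

    @protocol("accessing")
    def x(self):
        return self._x

    @protocol("private")
    def reset(self):
        # Tagged private, yet still callable from outside: the tag blocks nothing.
        self._x = 0
```

As in Pharo, `Point(3).reset()` runs normally; a tool can only use the tag as a hint when deciding which methods to call.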

2.3 Coding Conventions in Dynamically Typed Languages

In this section, we describe typical coding conventions that programmers use to compensate for the lack of type declarations. When we transform code (as we do when amplifying tests), special care must be taken to adhere to such coding conventions; otherwise the code will look artificial and is less likely to be adopted by test engineers. Our perspective comes from Pharo / Smalltalk (as documented in Ducasse 2019), but similar coding conventions must be adhered to when amplifying tests in Python, Ruby or Javascript.

Untyped Parameters

In dynamically typed languages, when defining a method which accepts a parameter, the type of the parameter is not specified. However, it is a convention to name the parameter after the class one expects or the role it plays. This is illustrated by the code snippet in Listing 2. Line 1 specifies that this is a method drawOn: defined on the class Morph, which expects one parameter. The parameter itself is represented by an untyped variable aCanvas; however, the name of the variable suggests that the method expects an instance of the class Canvas or one of its subclasses. Line 7, on the other hand, specifies that the method withdraw: expects one parameter whose role is to be an amount. There is no clue about the type of the parameter (integer, long integer, float, …); all we can infer from the code is that it must be acceptable as an argument to the messages >= (line 8) and - (line 10) sent to balance.

⇒ When passing a parameter to a method, a test amplification tool has no guaranteed way of knowing the expected type. The name of the parameter only hints at the expected type, hence special care must be taken during input amplification.
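A tool can still mine the naming convention as a weak hint. The sketch below is a hypothetical heuristic, not part of Small-Amp: it strips the indefinite article from a parameter name such as `aCanvas` or `anInteger` and returns the suggested class name, or `None` for role names such as `amount`. As the text notes, the result is only a hint and would still need confirmation, for example by observing actual arguments at run time.

```python
import re

# Pharo convention: "a" or "an" followed by a capitalised class name.
_TYPE_HINT = re.compile(r"^an?([A-Z][A-Za-z0-9]*)$")


def type_hint(param_name):
    """Return the class name suggested by a parameter name, or None."""
    match = _TYPE_HINT.match(param_name)
    return match.group(1) if match else None
```

For `withdraw:`, whose parameter is named after its role, the heuristic yields nothing, which is precisely the case where type information must come from elsewhere.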

No Return Types

In dynamically typed languages, there is no explicit declaration of the return type of a method. In Pharo, all computation is expressed as objects sending messages, and a message send always returns an object. By default, a method returns the receiving object, which is the equivalent of the void return type in Java. However, a program can explicitly return another value using ^ followed by an expression.

This is illustrated in Listing 3, showing the methods printOn: (which displays the receiver on a given stream) and printString (which returns a string representation of the receiver). printOn: is the equivalent of a void call and thus returns the receiving object; however, the method is declared on Object, so the receiver can be anything. printString, on the other hand, returns the result of sending the message contents to aStream. The exact type of what is returned is difficult to infer via static code analysis. Smalltalk programmers would assume that the return type is a String because of the intention-revealing name of the method. However, there is no guarantee that this is indeed the case. Thus, when a test amplification tool manipulates the result of a method, it cannot easily infer the type of what is returned.

⇒ The lack of explicit return types makes it hard to manipulate the result of a method call while ensuring that no MessageNotUnderstood exceptions will be thrown.

Different Return Types

In addition to the lack of return type declarations, it is also possible to write a method that returns values of different types. For example, in Listing 4 the method someMethod: can return an instance of the class Integer, Boolean or Object (the default return value is self).

As a result, removing the return operator (a common mutation operator) does not cause a syntax error, yet may change the type of the value a method returns. For example, if mutation testing removes the return operator from the method width in Listing 4 (lines 5 and 6), the return value changes from a number to a Shape object.

⇒ Methods in dynamically typed languages can return various types. Test amplification tools must be aware that a small change in the code may lead to changes in the returned type. Consequently, assertions verifying the result of a method call must be adapted.
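The effect is easy to reproduce. In this Python sketch the class and method names are hypothetical; `width_mutant` plays the role of the mutant whose return operator was removed. Since a Pharo method without an explicit return answers the receiver, the mutant explicitly returns `self` to imitate that default (a plain Python function would return `None` instead).

```python
class Shape:
    """Hypothetical stand-in for the Shape class of Listing 4."""

    def __init__(self, width):
        self._width = width

    def width(self):
        return self._width          # original: answers a number

    def width_mutant(self):
        self._width                 # mutant: the "^" has been removed ...
        return self                 # ... so, as in Pharo, the receiver is answered


def observed_type(value):
    """Name of the class of a value, as a profiler would record it."""
    return type(value).__name__
```

An assertion such as `assert shape.width() == 5` kills this mutant, whereas code that blindly sends numeric messages to the result would instead crash at run time.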

Accessor Methods

In Pharo, all instance variables are private and only accessible by the object itself. If one wants to manipulate the internal state of an object one should implement a method for it, as illustrated in Listing 5 which shows the setter method x: and the getter method x. In Pharo, such accessor methods are typically collected in the protocol accessing and are a convenient way for programmers to look for ways to read or write the internal state of an object.

Such accessor methods are especially relevant for all test generation algorithms (Fischer et al. 2020). For test amplification in particular, the setter methods are necessary in the input amplification step to force the object into a state corresponding to a boundary condition. The getter methods are necessary in the assertion amplification step to verify whether the object is in the appropriate state. However, there is no explicit declaration for the type of the parameter passed to the setter method x: nor for the type to be returned by the getter method x.

⇒ When manipulating the state of an object, one cannot rely on type declarations to infer which parameter to pass to a setter method and which result to expect from a getter method.

Pass-by-reference

In dynamically typed languages such as Pharo, all arguments are passed by reference when sending messages. Consequently, a method may or may not change the state of the objects it receives. This is illustrated by the method r in Listing 6, which returns the radius in polar coordinates. This involves some calculation (the invocation of dotProduct:) which passes the receiver object as a reference. There is no "pass-by-value" type declaration for dotProduct:, so one cannot know whether the internal state is changed or not. If dotProduct: does not alter the internal state, r may be used as a pure accessor method anywhere in the test during assertion amplification. However, if the accessor method does change the internal state, the order in which the accessor methods are called affects the outcome of the test.

⇒ Pass-by-reference parameter passing makes it difficult to identify pure accessor methods. Pure accessor methods can be inserted anywhere during assertion amplification; for accessor methods that change the internal state, one must take the calling order into account.
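One way to recover some of the missing information dynamically is to snapshot the object before and after calling a candidate accessor and compare the two. The sketch below is our illustration, not necessarily how Small-Amp proceeds; it assumes that an object's state is fully captured by its instance attributes (`vars`) and that those attributes can be deep-copied and compared with `==`.

```python
import copy


def is_pure_call(obj, method_name, *args):
    """Heuristically check whether obj.method_name(*args) leaves obj unchanged."""
    before = copy.deepcopy(vars(obj))
    getattr(obj, method_name)(*args)
    return vars(obj) == before


class Counter:
    def __init__(self):
        self.count = 0

    def value(self):          # pure accessor
        return self.count

    def next_value(self):     # accessor with a side effect
        self.count += 1
        return self.count
```

Note that a single observation can refute purity but never prove it: a method may only mutate state on paths the current test does not reach.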

Cascading

Listing 7 shows the archetypical Hello World example. Line 2 specifies that this is a method helloWorld defined on a class HelloWorld. Lines 4 and 6 each send the message cr (a message without any parameters) to the global variable Transcript, which emits a carriage return on the console. Line 5 sends the message show: with the string 'hello world' as argument to the global variable Transcript, which writes out the expected message.

However, a Pharo programmer would never write this code that way. When a series of messages is sent to the same receiver, this can be expressed more succinctly as a cascade. The receiver is specified just once, and the messages are separated by semicolons, as illustrated on lines 7–10.

Instance Creation

Cascading is frequently used when creating instances of a class, as illustrated by the createBorder example on the left of Listing 8. Line 2 creates a new SimpleBorder object, which is then initialised with the color blue (line 3) and width 2 (line 4). During input amplification we need to change the internal state of the object under test, hence it is tempting to inject extra calls into such a cascade. However, because we cannot distinguish between state-changing and state-accessing methods, we risk injecting errors. The code snippet on the right illustrates that injecting an extra isComplex call (a call to a state-accessing method) at the end of the cascade erroneously returns a boolean instead of an instance of SimpleBorder. This will eventually result in a run-time type error, signalled as a MessageNotUnderstood exception, when the program tries to use the result of createBorderErroneous.

⇒ When injecting additional calls during instance creation, one runs the risk of returning an inappropriate value.
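Python has no cascade syntax, so the hypothetical `cascade` helper below emulates its semantics: every message goes to the same receiver, and the value of the whole expression is the result of the last message. It reproduces the createBorderErroneous problem and shows the conventional Pharo remedy of ending a cascade with `yourself`, which answers the receiver. `SimpleBorder` here is a stand-in, not Pharo's class.

```python
class SimpleBorder:
    """Hypothetical stand-in for Pharo's SimpleBorder."""

    def __init__(self):
        self.color, self.width = None, 0

    def set_color(self, color):
        self.color = color            # a Pharo setter answers self by default
        return self

    def set_width(self, width):
        self.width = width
        return self

    def is_complex(self):             # state-accessing method: answers a boolean
        return False

    def yourself(self):               # Pharo idiom: answer the receiver
        return self


def cascade(receiver, *messages):
    """Send each (selector, args) pair to the same receiver; answer the last result."""
    result = receiver
    for selector, args in messages:
        result = getattr(receiver, selector)(*args)
    return result


def create_border(extra=()):
    """Build a border via a cascade, optionally appending extra (selector, args) sends."""
    return cascade(SimpleBorder(),
                   ("set_color", ("blue",)),
                   ("set_width", (2,)),
                   *extra)
```

`create_border([("is_complex", ())])` answers `False` rather than a border, while appending `("yourself", ())` after the injected send restores the expected result.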

3 Small-Amp Design

In this section, we explain the design of Small-Amp, which is an adaptation and extension of DSpot (Baudry et al. 2015; Danglot et al. 2019b) for the Pharo ecosystem. DSpot is an open-source (Footnote 2) test amplification tool for Java programs. Our Small-Amp implementation is also publicly available on GitHub (Footnote 3).

3.1 Main Algorithm

The main amplification algorithm is presented in Algorithm 1 and represents a search-based test amplification algorithm. The algorithm accepts a class under test (CUT) and its related test class (TC) and returns the set of amplified test methods (ATM). In addition, the algorithm needs a set of input amplification operators (AMPS) and is governed by a series of hyperparameters:

- Niteration – This parameter specifies the number of iterations and hence the maximum number of transformations applied to a test input. The default value for this parameter is 3.

- NmaxInputs – This parameter specifies the maximum number of generated test inputs that the algorithm keeps; the other test inputs are discarded. The default value for this parameter is 10.

Initially, the code of CUT and TC is instrumented to allow for dynamic profiling. The test class is executed, all required information is collected, and then the instrumentation is removed again. This extra information, including type information, allows us to perform input amplification more efficiently and to circumvent the lack of type information in the source code (line 2). We discuss profiling in Section 4.1.

The main loop of the algorithm amplifies all test methods one by one (line 3). V is the set of test inputs, i.e. test methods without assertion statements. Initially, V has only one element, which is obtained by removing the assertion statements from the original test method (line 4). U is the set of test methods generated by adding new assertion statements to the elements of V (line 5). Then the coverage is calculated using the generated test methods accumulated in U, and the tests that increase the coverage are added to the final result; Small-Amp uses the mutation score as coverage criterion (line 6).

In the inner loop of the algorithm (lines 7 to 15), Small-Amp generates additional tests by repeating the following steps Niteration times:

1. Small-Amp applies different input amplification operators to V (the current test inputs) to create new variants of test methods, accumulated in the variable TMP (line 10). We discuss input amplification in Section 3.2.

2. Small-Amp reduces TMP by keeping only NmaxInputs of the current inputs and discarding the rest; the kept inputs become the new V (line 12). We discuss input reduction in Section 4.2.

3. Small-Amp injects assertions into the remaining test inputs in V and stores the result in U (line 13). We discuss assertion amplification in Section 3.3.

4. Small-Amp selects all test methods in U that increase the mutation score and adds them to the final result ATM (line 14). We discuss test selection in Section 3.4.

After both loops have terminated, Small-Amp applies a set of post-processing steps to increase the readability of the generated tests (line 17). We discuss these steps in Section 4.3.
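The control flow of Algorithm 1 can be summarised in a short Python sketch. All helper callables (`profile`, `strip_assertions`, `amplify_assertions`, `reduce_inputs`, `select`, `post_process`) are hypothetical parameters standing in for the steps named above; they are not Small-Amp's API. The comments refer to the Algorithm 1 line numbers cited in the text.

```python
N_ITERATION = 3      # default number of iterations (Niteration)
N_MAX_INPUTS = 10    # default number of test inputs kept (NmaxInputs)


def amplify(cut, test_methods, amps, *, profile, strip_assertions,
            amplify_assertions, reduce_inputs, select, post_process,
            n_iteration=N_ITERATION, n_max_inputs=N_MAX_INPUTS):
    """Skeleton of the search loop: amplify inputs, add assertions, keep winners."""
    test_methods = list(test_methods)
    info = profile(cut, test_methods)                 # line 2: dynamic profiling
    atm = []                                          # amplified test methods
    for t in test_methods:                            # line 3: one test at a time
        v = [strip_assertions(t)]                     # line 4: initial test input
        u = amplify_assertions(v, info)               # line 5
        atm += select(u, atm)                         # line 6: keep tests raising the score
        for _ in range(n_iteration):                  # lines 7-15: inner loop
            tmp = [new for amp in amps                # line 10: apply every operator
                   for inp in v
                   for new in amp(inp, info)]
            v = reduce_inputs(tmp, n_max_inputs)      # line 12: keep NmaxInputs inputs
            u = amplify_assertions(v, info)           # line 13
            atm += select(u, atm)                     # line 14
    return post_process(atm)                          # line 17: readability steps
```

With toy string-based helpers the skeleton can be exercised directly; passing `n_iteration=0` reduces it to pure assertion amplification, as discussed in Section 3.3.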

Algorithm 1 is heavily inspired by DSpot, but not entirely the same. In particular, we have added a pre-processing step (line 2) to collect the necessary information about CUT and TC before entering the main loop. We have also added a post-processing step (line 17) to make the output more readable. We discuss these additions to the DSpot algorithm in Section 4.

3.2 Input Amplification

During input amplification, existing test code is altered to force previously untested paths. Input amplification involves changing the set-up of the object under test, passing arguments which represent boundary conditions. Additional calls to state-changing methods of the public interface are injected as well. Such changes are bound to fail the original assertions of TC, therefore Small-Amp removes all assertions from a test t in TC.

The test code itself is transformed via a series of Input Amplification Operators. These change the code in such a way that they are likely to force untested paths and cover boundary conditions. Input amplification operators are based on the genetic operators introduced in Evolutionary Test Classes (Tonella 2004). Below we explain the Input Amplification Operators adopted from DSpot.

Amplifying Literals

This input amplifier scans the test input source code to find literal tokens (numbers, booleans, strings). Then it transforms each literal into a new literal based on its type, according to Table 1. For example, the test input shown in Listing 9 is transformed into testVectorGreater_L by altering the second element of the literal array.
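Since Table 1 is not reproduced here, the transformation set in the sketch below is an assumption, loosely modeled on DSpot-style literal amplifiers (numbers: plus one, minus one, doubled, halved, zero; booleans: negation; strings: empty string and one appended character). It operates on Python test source using the standard `ast` module (`ast.unparse` requires Python 3.9+) and yields one variant per literal occurrence and per transformation.

```python
import ast


def literal_variants(value):
    """Assumed transformation table: candidate replacements for one literal."""
    if isinstance(value, bool):               # test bool first: bool is a subclass of int
        return [not value]
    if isinstance(value, int):
        return [value + 1, value - 1, value * 2, value // 2, 0]
    if isinstance(value, str):
        return ["", value + "x"]
    return []                                 # other literals are left untouched


class _CollectLiterals(ast.NodeVisitor):
    """Record literal values in the same depth-first order NodeTransformer uses."""

    def __init__(self):
        self.values = []

    def visit_Constant(self, node):
        self.values.append(node.value)


class _ReplaceLiteral(ast.NodeTransformer):
    """Replace the target-th literal (depth-first order) with a new value."""

    def __init__(self, target, new_value):
        self.target, self.new_value, self.seen = target, new_value, -1

    def visit_Constant(self, node):
        self.seen += 1
        if self.seen == self.target:
            new_node = ast.Constant(value=self.new_value, kind=None)
            return ast.copy_location(new_node, node)
        return node


def amplify_literals(source):
    """Yield variants of `source` in which exactly one literal was changed."""
    collector = _CollectLiterals()
    collector.visit(ast.parse(source))
    for index, old in enumerate(collector.values):
        for new in dict.fromkeys(literal_variants(old)):   # de-duplicate, keep order
            if new == old:
                continue                                    # skip no-op rewrites
            tree = _ReplaceLiteral(index, new).visit(ast.parse(source))
            yield ast.unparse(tree)
```

For instance, `amplify_literals("v = Vector([1, 2])")` yields six variants, among them `v = Vector([1, 3])`, which changes the second array element much like testVectorGreater_L.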

Amplifying Method Calls

This input amplifier scans the test input source code to find the method invocations on an object. Then it transforms the source code by duplicating or removing these method invocations. It also adds new method invocations on the objects. If the method requires arguments, the amplifier creates new objects. For primitive parameters, a random value is chosen from the profiled values. For object parameters, the default constructor is used, i.e. it creates a new instance by sending the #new message to the class. Small-Amp ignores private and deprecated methods (based on their protocol) when it adds a new method call. The type information required to safely apply these transformations is obtained in the profiling step explained in Section 4.1.
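The sketch below models a test input as a list of `(receiver, selector, args)` message sends, with arguments kept as source text, and shows the three operators: remove, duplicate and add. The layout of the `profile` dictionary (variable types, methods per type with protocol and argument types, observed primitive values) is an assumption for illustration. Restricting new sends to selectors recorded for the receiver's profiled type is what keeps the amplifier from producing sends that would end in a `MessageNotUnderstood` error in Pharo.

```python
import random

PRIMITIVE_TYPES = {"int", "float", "str", "bool"}


def remove_call(stmts, i):
    """Drop the i-th message send."""
    return stmts[:i] + stmts[i + 1:]


def duplicate_call(stmts, i):
    """Repeat the i-th message send right after itself."""
    return stmts[:i + 1] + stmts[i:]


def add_call(stmts, i, profile, rng=random):
    """Insert, after statement i, a new send to the same receiver.

    Candidates come from the receiver's profiled type only; private and
    deprecated methods are skipped, mirroring the protocol-based filter.
    """
    receiver = stmts[i][0]
    receiver_type = profile["var_types"][receiver]
    candidates = [m for m in profile["methods"][receiver_type]
                  if m["protocol"] not in ("private", "deprecated")]
    if not candidates:
        return None
    method = rng.choice(candidates)
    args = []
    for arg_type in method["arg_types"]:
        if arg_type in PRIMITIVE_TYPES:
            # Primitive parameter: reuse a value observed during profiling.
            args.append(repr(rng.choice(profile["values"][arg_type])))
        else:
            # Object parameter: analogue of sending #new to the class.
            args.append(f"{arg_type}()")
    new_send = (receiver, method["name"], tuple(args))
    return stmts[:i + 1] + [new_send] + stmts[i + 1:]
```

Passing a seeded `random.Random` instance as `rng` makes the generated variants reproducible.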

3.3 Assertion Amplification

During the assertion amplification step, we inject assertion statements which verify the state of the object under test. The object under test is then used as an oracle: while executing the test the algorithm inspects the state of the object under test and asserts the corresponding values. The assertion amplification step is based on Regression Oracle Checking (Xie 2006).

Note that assertion amplification is applied twice during the amplification algorithm (Algorithm 1, lines 5 and 13). There are two reasons for this seemingly redundant design. (1) It ensures that the original test method is assertion-amplified as well: since the test inputs are reduced in line 12, the original test method may be discarded and never reach the assertion amplification in line 13. (2) We can run only assertion amplification by setting Niteration = 0. This way no new tests are generated, but existing tests may become stronger because they check more conditions.

Observing State Changes Via Object Serialisation

Small-Amp manipulates the test code and surrounds each statement with a series of what we call “observer meta-statements” (see Listing 10). Such meta-statements include a surrounding block to capture possible exceptions (lines 19–20 and 24–25) and calls to observer methods to capture the state of the receiver (line 17 and line 18) and the return value (line 18 and line 23). When necessary, temporary variables are added to capture intermediate return values (tmp1 on line 21 and line 23).

After manipulating the test method, Small-Amp runs the test so that the observer methods capture the values. Small-Amp serializes objects by capturing the values returned by their accessor methods. If the return value of an accessor method is another object, that object is serialized recursively, up to a depth of Nserialization. Nserialization is configurable (default value 3). The output of this step of assertion amplification is a set of trace logs which reflect the object states.
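A minimal sketch of this serialisation follows. It assumes a caller-supplied function `accessors_of(obj)`, hypothetical here, that returns the names of an object's accessor methods; primitives are recorded as-is, and once the depth budget is exhausted only the class name is kept.

```python
N_SERIALIZATION = 3    # default recursion depth (Nserialization)
PRIMITIVES = (int, float, str, bool, type(None))


def serialize(obj, accessors_of, depth=N_SERIALIZATION):
    """Snapshot `obj` through its accessor methods, recursing at most `depth` levels."""
    if isinstance(obj, PRIMITIVES):
        return obj                                   # primitives are recorded as-is
    if depth == 0:
        return {"class": type(obj).__name__}         # depth limit: class name only
    if isinstance(obj, (list, tuple)):
        return [serialize(item, accessors_of, depth - 1) for item in obj]
    state = {"class": type(obj).__name__}
    for name in accessors_of(obj):
        state[name] = serialize(getattr(obj, name)(), accessors_of, depth - 1)
    return state
```

The resulting nested dictionaries play the role of the trace logs: one snapshot per observation point in the instrumented test.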

Identifying Accessor Methods

Small-Amp relies on the Pharo/Smalltalk coding conventions and therefore selects methods that belong to the protocols #accessing or #testing, or whose name is identical to that of one of the instance variables. From the selected methods, all methods lacking an explicit return statement and all methods in the protocols #private or #deprecated are rejected; the remaining ones are considered accessors.
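The heuristic reduces to two filters. The sketch below assumes that the method metadata (selector, protocol, and whether the body contains an explicit `^` return) has already been extracted from the class; `MethodInfo` is a hypothetical record type, not part of Pharo.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MethodInfo:
    """Hypothetical summary of one method of the class under test."""
    name: str
    protocol: str
    has_explicit_return: bool


def accessors(methods, instance_variables):
    """Select candidate accessors, then reject the unsuitable ones."""
    selected = [m for m in methods
                if m.protocol in ("accessing", "testing")
                or m.name in instance_variables]
    return [m.name for m in selected
            if m.has_explicit_return
            and m.protocol not in ("private", "deprecated")]
```

A typical setter such as `balance:` lands in #accessing but has no explicit return, so it is rejected, while a method named after an instance variable is accepted even outside #accessing.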

Preventing Flaky Tests Via Trace Logs

A flaky test is a test that may non-deterministically pass (green) or fail (red) on the same code. This may happen if the test asserts a non-deterministic value. Small-Amp tries to detect non-deterministic values before making assertions on them. The assertion amplification module collects the trace logs Nflakiness times (default value 10) and then compares the observed values. If a value is not identical across all collected logs, Small-Amp marks it as non-deterministic.
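Assuming each trace log is flattened into a dictionary from an observation key (for example `bank.timestamp`) to the observed value, the comparison amounts to the following sketch; `collect_trace` is a hypothetical callable that reruns the instrumented test once and returns its log.

```python
N_FLAKINESS = 10    # default number of repeated runs (Nflakiness)


def non_deterministic_keys(collect_trace, runs=N_FLAKINESS):
    """Run collect_trace() `runs` times; return keys whose values are not all identical."""
    traces = [collect_trace() for _ in range(runs)]
    keys = set().union(*traces)
    return {key for key in keys
            if any(t.get(key) != traces[0].get(key) for t in traces[1:])}
```

A key missing from some runs is also treated as non-deterministic, since `get` then returns `None` for those runs.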

Recursive Assertion Generation

Based on the type of the observed value, zero, one or more assert statements are generated. If the value is a collection or an object that contains other internal values, the assertion generator recursively builds valid assertion statements. For non-deterministic values, the value itself is not asserted; only its type is. The output of the assertion amplification step is a passing (green) test with extra assertions.
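A sketch of the recursion follows, assuming the serialised state is a nested dictionary (with a `class` entry) or a list, and that non-deterministic observations are identified by the expression that produced them. It emits Python `assert` statements as text; Small-Amp emits Pharo assertions instead, so the output format here is illustrative only.

```python
def generate_assertions(expr, value, flaky=frozenset()):
    """Return assertion lines (as text) for `value`, observed via expression `expr`."""
    if expr in flaky:
        # Non-deterministic observation: assert only its type.
        type_name = value["class"] if isinstance(value, dict) else type(value).__name__
        return [f"assert type({expr}).__name__ == {type_name!r}"]
    if isinstance(value, dict):
        # Serialised object: one type check, then recurse into each accessor.
        lines = []
        for name, sub in value.items():
            if name == "class":
                lines.append(f"assert type({expr}).__name__ == {sub!r}")
            else:
                lines += generate_assertions(f"{expr}.{name}()", sub, flaky)
        return lines
    if isinstance(value, list):
        # Collection: check its size, then each element.
        lines = [f"assert len({expr}) == {len(value)}"]
        for index, item in enumerate(value):
            lines += generate_assertions(f"{expr}[{index}]", item, flaky)
        return lines
    return [f"assert {expr} == {value!r}"]
```

For a snapshot `{"class": "SmallBank", "balance": 70, "timestamp": 1712}` with `bank.timestamp()` marked flaky, the generator asserts the class, the exact balance, and only the type of the timestamp.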

Intended Values Versus Actual Values

During assertion amplification, the assertion statements should verify the expected value, which is deduced from an oracle. We assume that the current implementation of the program is correct, and therefore we deduce the oracle from the current state of the object under test. However, when there is a defect in the method under test, the generated assertions verify against an incorrect oracle. This is an inherent limitation of both DSpot and Small-Amp, inherited from Regression Oracle Checking (Xie 2006).

Example

Listing 11 shows an example of a trace log collected by line 22 of Listing 10 (left) and the corresponding recursive assertion statements (right). In this example, the method timestamp is an accessor method of the SmallBank class which returns a timestamp value. Since this value differs between executions, it has been marked as a flaky value (line 8); hence only its type is asserted (line 24).

3.4 Test Selection – Prefer Focussed Tests

During each iteration of the inner loop (lines 7 to 15 in Algorithm 1 – p. 11), Small-Amp generates N_maxInputs new tests with their corresponding assertions. In the test selection step (lines 6 and 14), the algorithm selects those tests that kill mutants not killed by other tests.

First, Small-Amp performs a mutation testing analysis on CUT and TC and creates a list of live and uncovered mutants. Then, Small-Amp selects those test methods from U (the set of amplified test methods) that increase the mutation score, i.e. that kill a previously live or uncovered mutant. If multiple tests kill the same mutant, the shortest test is chosen. If several tests are equally short, the test with the fewest changes is chosen. The DSpot paper uses a similar heuristic, which its authors refer to as Focused Test Cases Selecting.
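The selection rule can be sketched in Python. The sketch iterates over the newly killed mutants and keeps, for each one, the shortest killing test, breaking ties by the number of changes. The `AmplifiedTest` record and its fields are hypothetical. "Length" is approximated by the number of statements, and "changes" by the number of transformations applied to the original test.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AmplifiedTest:
    name: str
    num_statements: int   # proxy for the test's length
    num_changes: int      # transformations applied to the original test
    killed: frozenset     # identifiers of the mutants this test kills

def select_focused(candidates, already_killed):
    """For each newly killed mutant, keep the shortest test (ties: fewest changes)."""
    selected = {}
    new_mutants = set().union(*(t.killed for t in candidates)) - set(already_killed)
    for mutant in sorted(new_mutants):
        killers = [t for t in candidates if mutant in t.killed]
        best = min(killers, key=lambda t: (t.num_statements, t.num_changes))
        selected[best.name] = best  # a test chosen for several mutants is kept once
    return list(selected.values())

tests = [
    AmplifiedTest("testDeposit_amp1", 6, 2, frozenset({"m1", "m2"})),
    AmplifiedTest("testDeposit_amp2", 4, 1, frozenset({"m2"})),
    AmplifiedTest("testWithdraw_amp1", 5, 3, frozenset({"m3"})),
    AmplifiedTest("testWithdraw_amp2", 5, 1, frozenset({"m3", "m0"})),
]
print([t.name for t in select_focused(tests, {"m0"})])
# ['testDeposit_amp1', 'testDeposit_amp2', 'testWithdraw_amp2']
```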

4 Small-Amp Extras Compared to DSpot

While the design of Small-Amp was inspired by DSpot, the lack of explicit type information forced us to make major changes but also permitted us to make improvements. This section describes additional and diverging aspects of Small-Amp compared to DSpot.

4.1 Dynamic Profiling to Collect Type Information

At the very beginning of the main algorithm (Algorithm 1 line 2), dynamic type profiling is done only once by executing the original test methods and observing the actual type information of variables.

In dynamically typed languages like Pharo, type annotations are not provided in the source code, which makes static analyses that depend on types challenging. In the context of Small-Amp, the most important step that relies on static code analysis is input amplification. The other steps either rely on dynamic analysis (assertion amplification) or depend on a third-party library (selection based on mutation testing).

In input amplification, we can group operators into two classes:

1. Type-sensitive operators. These operators depend heavily on type information; without it, they are ineffective or cannot be applied at all. An important type-sensitive input amplifier in Small-Amp is method call addition. To add a valid method call, it must know the types of the variables defined in the test method as well as the types of the parameters of the newly called method.

2. Type-insensitive operators. These are all operators that remain applicable without type information, for example the operators amplifying literals. These operators are easy to adapt to a dynamic language because literals can be recognized directly in the token representation of the source code.
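To illustrate why literal amplification needs no type profile, here is a Python sketch of a literal amplifier. It assumes the literals have already been picked out of the source tokens. The concrete mutations (±1, doubling, halving, zero, negation, and truncating or duplicating strings) are our assumption, in the spirit of DSpot's literal amplifiers, and are not Small-Amp's exact operator set.

```python
def amplify_literal(value):
    """Return mutated variants of a literal; no type profile is required."""
    if isinstance(value, bool):          # check bool before int (bool is an int)
        return [not value]
    if isinstance(value, int):
        variants = [value + 1, value - 1, value * 2, value // 2, 0, -value]
    elif isinstance(value, str):
        variants = ["", value[1:], value[:-1], value + value]
    else:
        return []
    # Drop duplicates and the unchanged original while keeping the order.
    seen, result = {value}, []
    for variant in variants:
        if variant not in seen:
            seen.add(variant)
            result.append(variant)
    return result

print(amplify_literal(5))            # [6, 4, 10, 2, 0, -5]
print(amplify_literal("foo.image"))  # ['', 'oo.image', 'foo.imag', 'foo.imagefoo.image']
```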

To obtain accurate type information, we rely on the presence of manually written tests, which should be representative of the normal behaviour of the program under test. We exploit profiling tools (commonly available in modern programming environments) to extract accurate type information for the variables present in the program. The profiler is configured to attach hooks to the relevant elements of the code. When these elements are executed, the hooks are triggered, and the profiler reads the information from the program state and logs it.

In Small-Amp, we rely on two distinct profilers:

- A Method-proxy profiler, which collects the types of the parameters of the methods of the class under test.

- A Metalink-based profiler, which collects the types of the variables in the test methods.

To apply test amplification to other dynamically typed languages, one needs comparable profiling technology. Some languages provide reflective facilities that can be exploited. Python metaclasses, for example, allow one to transparently insert proxy objects into the code, similar to the method proxies adopted in Small-Amp. If such reflective facilities are not available, one can resort to debugger APIs to inspect the values of variables at run time.

Profiling by Method-Proxies

To gather the types of method parameters, Small-Amp uses method proxies (Ducasse 1999; Peck et al. 2015). Proxies wrap the methods of the class under test and are triggered instead of the original methods. They first log the arguments and then pass control to the original method (Listing 12).
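Following the remark on Python above, the same idea can be approximated with function wrappers. The sketch below replaces each public method of a class with a proxy that logs the types of its positional arguments and then passes control to the original method. It is a Python analogue, not Small-Amp's Pharo MethodProxies. The names `install_type_proxies` and `_make_proxy` are hypothetical, and keyword arguments are not logged.

```python
import functools
import inspect
from collections import defaultdict

def _make_proxy(class_name, selector, method, type_log):
    @functools.wraps(method)
    def proxy(self, *args, **kwargs):
        # Log the type of each positional argument, then run the original method.
        for position, arg in enumerate(args):
            type_log[(class_name, selector, position)].add(type(arg).__name__)
        return method(self, *args, **kwargs)
    return proxy

def install_type_proxies(cls, type_log):
    """Wrap every public method defined in cls with a type-logging proxy."""
    for selector, method in list(vars(cls).items()):
        if selector.startswith("_") or not inspect.isfunction(method):
            continue
        setattr(cls, selector, _make_proxy(cls.__name__, selector, method, type_log))

class SmallBank:
    def __init__(self):
        self.balance = 0

    def deposit(self, amount):
        self.balance += amount
        return self

type_log = defaultdict(set)
install_type_proxies(SmallBank, type_log)
SmallBank().deposit(10).deposit(2.5)
print(sorted(type_log[("SmallBank", "deposit", 0)]))  # ['float', 'int']
```

As with Small-Amp's profiler, a method that the tests never call leaves no entry in `type_log`.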

The main drawback of the Method-proxy profiler is that when a method is not covered by the test class, it will not be profiled. Small-Amp reports the list of such uncovered methods as one of its outputs. Using this report, a developer can decide to add new tests for uncovered methods, make them private (using an adequate protocol / method tag), or remove them.

Profiling by Metalinks

Pharo provides Metalinks as a fine-grained behavioral reflection solution (Denker et al. 2007; Costiou et al. 2020). For collecting the type of variables in the test method, Small-Amp uses Metalinks.

A metalink specifies an action to perform, defined by a meta-object, a selector, and a control. Metalinks can be installed on one or more nodes of the abstract syntax tree. Listing 13 shows how a metalink is defined and installed on all variable nodes of the test method.

Lines 1 to 5 show how the metalink is initialized: after the AST node carrying this link has been executed, the method logNode:context:object: is called with the following arguments:

- node: The static representation of the AST node. It is used to obtain information such as the name and the position in the code.

- context: The execution context, including the dynamic values of the variables and the stack. It is used to access the values of temporary variables.

- object: The object on which the metalink is installed (in this case, the test class instance). It is used to access the values of instance variables.

In lines 7 to 10, all variable nodes in the test method are selected and the link is installed on them. After the metalinks are installed, the test method is executed. Whenever execution passes a variable node, the metalink is triggered and the logger method is called. The logger method extracts the type information from the context, logs it and returns. Execution of the test method then continues until it ends or another metalink is triggered.
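Python has no metalinks. The debugger-API fallback mentioned earlier can approximate this profiler, however, with the standard `sys.settrace` hook, which reports every executed line of the test function. The sketch below is an analogue under that assumption, not Small-Amp's implementation. It records types per local variable rather than per AST variable node, and it only sees local variables, not instance variables. The function name `profile_variable_types` is hypothetical.

```python
import sys
from collections import defaultdict

def profile_variable_types(test_function):
    """Run test_function and record the types of its local variables."""
    observed = defaultdict(set)
    code = test_function.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace callees
        if event in ("line", "return"):
            for name, value in frame.f_locals.items():
                observed[name].add(type(value).__name__)
        return tracer  # keep tracing this frame line by line

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        test_function()
    finally:
        sys.settrace(previous)
    return observed

def test_deposit():
    account = {"balance": 0}
    amount = 10
    account["balance"] += amount
    assert account["balance"] == 10

types = profile_variable_types(test_deposit)
print(sorted((name, sorted(t)) for name, t in types.items()))
# [('account', ['dict']), ('amount', ['int'])]
```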

How the Collected Data is Used

The data collected by each profiler is stored as a dictionary that maps the identifier of the profiled element to its type and a list of sample values (only for primitive types). Small-Amp thus maintains two dictionaries: one for the types of method parameters and one for the types of variable nodes. During input amplification, the corresponding dictionary is consulted whenever type information is needed.
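As an illustration of how a type-sensitive operator might consult these two dictionaries, the Python sketch below proposes new message sends for the typed variables of one test method. The key formats (`"<test>:<variable>"` and `"<Class>>><selector>"`), the profile contents and the helper name are all hypothetical. Calls whose parameters have no recorded sample value (for example, non-primitive types) are simply skipped here.

```python
# Hypothetical profiles in the shape described above:
# "<test>:<variable>" -> observed class, and
# "<Class>>><selector>" -> one (class, sample values) pair per parameter.
variable_types = {"testDeposit:bank": "SmallBank", "testDeposit:amount": "SmallInteger"}
parameter_types = {
    "SmallBank>>deposit:": [("SmallInteger", [10, 100])],
    "SmallBank>>transfer:to:": [("SmallInteger", [5]), ("SmallBank", [])],
    "SmallBank>>balance": [],
}

def method_call_additions(test_name, variable_types, parameter_types):
    """Propose new message sends (Pharo syntax) on typed variables of a test."""
    statements = []
    prefix = test_name + ":"
    for key, var_type in variable_types.items():
        if not key.startswith(prefix):
            continue
        variable = key[len(prefix):]
        for method_key, params in parameter_types.items():
            class_name, selector = method_key.split(">>")
            if class_name != var_type:
                continue
            if not params:  # unary message
                statements.append(f"{variable} {selector}.")
            elif all(samples for _, samples in params):
                keywords = selector.split(":")[:-1]
                parts = [f"{kw}: {samples[0]}" for kw, (_, samples) in zip(keywords, params)]
                statements.append(f"{variable} {' '.join(parts)}.")
            # otherwise a parameter has no sample value, so the call is skipped
    return statements

print(method_call_additions("testDeposit", variable_types, parameter_types))
# ['bank deposit: 10.', 'bank balance.']
```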

4.2 Test Input Reduction

The input amplification step quickly produces a large number of new test inputs in the inner loop of Algorithm 1 – p. 11 – lines 7 to 15. For instance, if the number of inputs in the first iteration is |v|, this number grows to |v|×|v| in the second iteration and reaches |v|^i in iteration i. We refer to this problem as test-input explosion. Because the number of test inputs grows exponentially, there are two options. Either the number of transformations (N_iteration) must be kept small enough that all generated test inputs can be tried, or the number of inputs must be reduced with a heuristic that selects a limited number of them.

Small-Amp uses a random selection heuristic that maximises diversity in order to select at most N_maxInputs test inputs. This selection differs from the selection by mutation score (Section 3.4); we call it reduction.

Small-Amp's reduction combines two techniques:

- Competitive selection: a portion of the test inputs (by default half of N_maxInputs) is selected completely at random from the output of all input amplifiers.

- Balanced selection: for the remaining portion, Small-Amp ensures that all input amplifiers contribute by selecting from their outputs according to an assigned weight. In Small-Amp, every input amplifier is assigned a weight (1 by default for all amplifiers). This maintains diversity in the selected test inputs.
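The two techniques can be combined in a Python sketch. Half of the budget is sampled uniformly from all outputs, and the rest is filled in weighted round-robin over the amplifiers, so that no amplifier is left out. The round-robin scheme, the integer weights and the function name `reduce_inputs` are our assumptions about how "selecting according to a weight" could be realized. They do not describe Small-Amp's exact procedure.

```python
import random

def reduce_inputs(outputs_by_amplifier, n_max_inputs, weights=None, rng=None):
    """Select at most n_max_inputs test inputs: half uniformly at random,
    the rest in weighted round-robin over the input amplifiers."""
    rng = rng or random.Random()
    weights = weights or {name: 1 for name in outputs_by_amplifier}
    pool = [(name, t) for name, tests in outputs_by_amplifier.items() for t in tests]
    if len(pool) <= n_max_inputs:
        return [t for _, t in pool]

    # Competitive selection: uniform sample from the union of all outputs.
    competitive = rng.sample(pool, n_max_inputs // 2)
    taken = set(competitive)
    remaining = n_max_inputs - len(competitive)

    # Balanced selection: each amplifier contributes `weight` inputs per round.
    queues = {}
    for name, tests in outputs_by_amplifier.items():
        left = [(name, t) for t in tests if (name, t) not in taken]
        rng.shuffle(left)
        queues[name] = left
    balanced = []
    while len(balanced) < remaining and any(queues.values()):
        for name, queue in queues.items():
            for _ in range(max(1, weights.get(name, 1))):  # weights >= 1 ensure progress
                if queue and len(balanced) < remaining:
                    balanced.append(queue.pop())
    return [t for _, t in competitive + balanced]

outputs = {
    "statement-removal": [f"rm{i}" for i in range(5)],
    "method-call-addition": [f"add{i}" for i in range(100)],
}
selected = reduce_inputs(outputs, 10, rng=random.Random(42))
print(len(selected))  # 10
```

With purely random sampling, the statement-removal outputs (5 of 105) would rarely appear. The balanced half guarantees that some of them are kept.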

Why Is Diversity Important?

Each input-amplifier algorithm performs transformations based on different considerations. As a result, input amplifiers generate different numbers of tests. If the test inputs are selected purely at random, the result will be dominated by the tests from the amplifiers that generate more outputs. Therefore, the outputs of the amplifiers must be balanced.

As an example, we compare the number of new test inputs produced by a statement-removal amplifier and a statement-addition amplifier. The former has O(S) complexity, where S is the number of statements in the test method: as the number of statements in the test increases, the number of new test inputs generated by this amplifier grows linearly. The latter has O(S × M) complexity, where M is the number of methods in the class under test: its output grows not only with the number of statements in the test, but also with the number of methods in the class under test. Hence, if we select generated test methods purely at random, outputs of the latter operator are more likely to be selected, and the result will be dominated by the second input amplifier.

4.3 Improving Readability Via Post-Processing

In order to make the generated tests more readable, Small-Amp adds a few steps after finishing the main loop of the algorithm (line 17 in Algorithm 1 – p. 11). These steps do not have any effect on the mutation score of the amplified test suite; they only make the test cases more readable for Small-Amp users.

Assertion Reduction

As described in Section 3.3, Small-Amp generates all possible assertions for all observation points. Consequently, the generated test methods easily include hundreds of new assertions most of which appear redundant. The assertion reducer is a post-processing step that discards all assertions that do not affect the mutation score.

Each amplified test method carries the identifiers of all the mutants it newly kills. Small-Amp wraps every assertion statement in an exception handling block to catch exceptions, in particular the AssertionFailure raised by failing assertions. Then, the mutation testing framework is run on the newly killed mutants only. Whenever an AssertionFailure is caught, the identifier of the assertion is logged as important. Finally, Small-Amp keeps only the important assertions and removes all other assertion statements.

In some cases, an assertion may call an impure accessor method, i.e. an accessor method that alters the internal state of the object. When such an assertion is removed, some of the subsequent assertions may fail. Small-Amp therefore runs each test method after removing the unnecessary assertions, to confirm that it remains green and still kills the mutants. If this confirmation fails, the assertion reduction is considered unsuccessful and all removed assertions are reinserted.
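The reduction and its confirmation step can be sketched in Python. In this sketch, a test is a list of statements, mutants are simulated by a factory that builds a (possibly mutated) object under test, and failing assertions are caught and logged as important. `Stmt`, `reduce_assertions`, `Counter` and the mutant `"m1"` are all hypothetical. The sketch also assumes that mutants are detected through assertion failures only.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Stmt:
    ident: str
    is_assertion: bool
    action: Callable[[object], None]  # assertions raise AssertionError on failure

def failing_assertions(stmts, obj):
    """Run stmts on obj, catching assertion failures; return the failed ids."""
    failed = set()
    for stmt in stmts:
        try:
            stmt.action(obj)
        except AssertionError:
            if not stmt.is_assertion:
                raise
            failed.add(stmt.ident)
    return failed

def reduce_assertions(stmts, make_object, new_mutants):
    """Keep assertions that fail on a newly killed mutant, then confirm."""
    important = set()
    for mutant in new_mutants:
        important |= failing_assertions(stmts, make_object(mutant))
    reduced = [s for s in stmts if not s.is_assertion or s.ident in important]
    green = not failing_assertions(reduced, make_object(None))
    kills = all(failing_assertions(reduced, make_object(m)) for m in new_mutants)
    return reduced if green and kills else stmts  # reinsert all on failed confirmation

def expect(condition):
    if not condition:
        raise AssertionError

class Counter:
    def __init__(self, step):
        self.value, self.step, self.reads = 0, step, 0
    def increment(self):
        self.value += self.step
    def read(self):  # impure accessor: it also counts reads
        self.reads += 1
        return self.value

def make_counter(mutant):
    return Counter(step=2 if mutant == "m1" else 1)  # "m1" mutates the increment

plain = [Stmt("s1", False, lambda c: c.increment()),
         Stmt("a1", True, lambda c: expect(c.reads == 0)),
         Stmt("a2", True, lambda c: expect(c.value == 1))]
impure = [Stmt("s1", False, lambda c: c.increment()),
          Stmt("b1", True, lambda c: expect(c.read() >= 0)),
          Stmt("b2", True, lambda c: expect(c.reads == 1 and c.value == 1))]
print([s.ident for s in reduce_assertions(plain, make_counter, ["m1"])])   # ['s1', 'a2']
print([s.ident for s in reduce_assertions(impure, make_counter, ["m1"])])  # ['s1', 'b1', 'b2']
```

In the second test, removing the impure assertion `b1` makes `b2` fail on the original code, so the confirmation fails and all assertions are kept.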

Comply with Coding Conventions

Before processing a test method, Small-Amp breaks complex statements (chains of method invocations and cascades) into an explicit sequence of message sends (see Listing 10 – p. 14). This is necessary to observe state changes during assertion amplification. In this post-processing step, Small-Amp removes all unused temporary variables and chooses better names for the remaining variables based on their types. Where possible, it reconstructs message chains and cascades to make the source code more readable and to conform to Pharo coding conventions.

5 Evaluation

To evaluate Small-Amp, we replicated the experimental protocol introduced for DSpot (Danglot et al. 2019b). We conducted a qualitative experiment by sending pull-requests on GitHub to evaluate whether the generated tests are relevant to developers (RQ1). Next, we use a quantitative experiment to evaluate the effectiveness of Small-Amp (RQ2, RQ3 and RQ4). RQ1 to RQ4 follow exactly the same order as in Danglot et al. (2019b) to facilitate comparison; the order does not reflect the importance of the research questions. In RQ5, we make a detailed comparison of our results with those of the original experiment. Finally, in RQ6, we report the time cost of running Small-Amp, with special attention to the performance penalty induced by the additional steps (profiling and oracle reduction).

RQ1

Pull Requests. Would developers be ready to permanently accept amplified test cases into the test repository? We create pull-requests on mature and active open-source projects in the Pharo ecosystem. Each pull request on GitHub proposes either an improvement to an existing test (typically due to assertion amplification) or a new test (typically the result of input plus assertion amplification). We inspect the statements that kill extra mutants in order to explain manually why the pull request is an improvement. The main contributors then review, discuss and decide to merge, reject or ignore the pull request. The ratio of accepted pull requests indicates whether developers would permanently accept amplified test cases into the test repository. More importantly, the discussions raised during the review of the pull requests provide qualitative evidence on the perceived value of the amplified tests.

RQ2

Focus. To what extent are improved test methods focused? We assess whether the amplified tests do not overwhelm developers by examining how many extra mutants they kill. Ideally, an amplified test method kills only a few extra mutants, in which case we consider the test focused (cf. Section 3.4 – p. 16). We present and discuss the proportion of focused tests among all proposed amplified tests. An amplified test case is considered focused if, compared to the original, at least 50% of its newly killed mutants are located in a single method.

RQ3

Mutation Coverage. To what extent do improved test classes kill more mutants than developer-written test classes? We assess whether the amplified tests cover corner cases by means of a proxy: the improvement in mutation score measured with the mutation testing tool mutalk (Wilkinson et al. 2009). We first run mutalk on the original class under test (CUT) as tested by the test class (TC) to compute the original mutation score. We distinguish between strong and weaker tests by sorting the test classes according to their mutation score and splitting the set in half. Next, we amplify the test class and compute the new mutation score. We report the relative improvement (in percentage).

RQ4

Amplification Steps. What is the contribution of input amplification and assertion amplification (the main steps in the test amplification algorithm) to the effectiveness of the generated tests? Here as well, we use the mutation score as a proxy for the added value of the input and assertion amplification steps, and we distinguish between strong and weak test classes. Concretely, we compare the relative improvement (in percentage) of assertion amplification alone against the relative improvement of input and assertion amplification combined. We also report which amplification operators have the most impact, paying special attention to the type-sensitive ones.

RQ5

Comparison. How does Small-Amp compare against DSpot? To analyse the differences in results between Small-Amp and DSpot, we compare the qualitative and quantitative results reported in the DSpot paper against the results we obtained for RQ1 to RQ4.

RQ6

Time Costs. What is the time cost of running Small-Amp and each of its steps? To study the applicability of Small-Amp, we analyse the time cost of all runs in the quantitative analysis. We compare the relative time cost of each step, paying special attention to the extra overhead of profiling and oracle reduction.
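The focus criterion of RQ2 (at least 50% of the newly killed mutants located in a single method) reduces to a small computation. The following Python sketch assumes that each newly killed mutant is given as a pair of a mutant identifier and the name of the mutated method. The function name `is_focused` is hypothetical.

```python
from collections import Counter

def is_focused(new_kills, threshold=0.5):
    """new_kills: (mutant id, mutated method) pairs for the newly killed mutants."""
    if not new_kills:
        return False
    per_method = Counter(method for _, method in new_kills)
    return max(per_method.values()) / len(new_kills) >= threshold

print(is_focused([("m1", "deposit:"), ("m2", "deposit:"), ("m3", "withdraw:")]))  # True
print(is_focused([("m1", "a"), ("m2", "b"), ("m3", "c")]))                        # False
```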

5.1 Dataset and Metrics

Selecting a Dataset

First, we collected candidate projects from different sources: (1) the projects used in a recent paper focusing on testing in Pharo (Delplanque et al. 2019); (2) the projects presented in the “Innovation Technology Awards” section of the ESUG conference from the year 2014; (3) Pharo projects hosted on GitHub with more than 10 forks and 20 stars, found with the GitHub API.

Then, we applied a set of inclusion and exclusion criteria. Projects need to be hosted on GitHub and written in Pharo. They should include a test suite written in SUnit and run in Pharo 8 (the stable version). To avoid being overwhelmed by dependency resolution, they need to support installation with Metacello and must not depend on system-level packages such as databases or special installation services. We discarded all libraries that are part of the Pharo system, such as collections or the compiler.

Based on these criteria, we randomly selected 20 projects. We then rejected projects having fewer than 4 green test classes with a known class under test and a mutation coverage below 100%.

Following the experimental protocol of DSpot (Danglot et al. 2019b), we randomly select 4 test classes per project: 2 with high mutation coverage and 2 with low mutation coverage. If a project has fewer than 2 test classes with a high (or low) mutation score, we select from the lower (or higher) covered classes instead. As a result, we have 52 test classes, 27 of them considered strong (high mutation score) and 25 considered weaker (lower mutation score).

Table 2 shows the descriptive statistics of the selected projects with a short description, area of usage, number of test classes and test methods and their version based on git commit id, and selected test classes (a superscript h is used to indicate a test class with high mutation coverage, and l is used to indicate low mutation coverage).

Detecting the Class Under Test

Small-Amp needs a test class and its class under test as inputs. Finding a mapping between a test and a class can be challenging. As the default mapping heuristic, we rely on the pattern that the Pharo IDE uses to find the test class of a class. The Pharo code browser finds the unit test for a class as follows: it appends the suffix "Test" to the name of the class. If such a class is loaded in the system and is a subclass of TestCase, it is considered the unit test class. If a project does not follow this convention, one can explicitly define the class under test by overriding a hook method in the test classes.
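The naming convention and its inversion can be sketched in Python over sets of class names. This is a sketch under simplifying assumptions: class lookup is reduced to set membership, and the `override` argument is a hypothetical stand-in for the hook method that a test class can override.

```python
def unit_test_for(class_name, test_case_classes):
    """Pharo browser convention: <ClassName>Test, if it is a loaded TestCase subclass."""
    candidate = class_name + "Test"
    return candidate if candidate in test_case_classes else None

def class_under_test(test_class, loaded_classes, test_case_classes, override=None):
    """Find the class whose conventional unit test is test_class."""
    if override is not None:  # explicit mapping defined by the test class
        return override
    for name in loaded_classes:
        if unit_test_for(name, test_case_classes) == test_class:
            return name
    return None

loaded = {"ZnRequest", "ZnResponse"}
tests = {"ZnRequestTest", "ZnIntegrationTest"}
print(class_under_test("ZnRequestTest", loaded, tests))      # ZnRequest
print(class_under_test("ZnIntegrationTest", loaded, tests))  # None
```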

Metrics

We adopt the same metrics used in the experimental protocol in Danglot et al. (2019b):

- All killed mutants (#Mutants.killed): The absolute number of mutants killed by a test class in a given class under test.

- Mutation score (%M.Score): The ratio (in percentage) of killed mutants over the number of all mutants injected in the class under test.

$$ \%M.Score = 100 \times \frac{\#Mutants.killed}{\#Mutants.All} $$

- Newly killed mutants (#Mutants.killed_new): The number of new mutants that are killed by the amplified version of the test class.

$$\#Mutants.killed_{new} = \#Mutants.killed_{amplified} - \#Mutants.killed_{original}$$

- Increase killed (%Inc.killed): The ratio (in percentage) of newly killed mutants over the number of mutants killed by the original test class.

$$ \%Inc.killed = 100 \times \frac{\#Mutants.killed_{new}}{\#Mutants.killed_{original}} $$
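The three formulas can be checked with a short Python computation on made-up counts: 80 injected mutants, 40 killed by the original test class and 50 after amplification. The numbers are purely illustrative and are not taken from the evaluation.

```python
def mutation_score(killed, all_mutants):
    """%M.Score: percentage of injected mutants that are killed."""
    return 100 * killed / all_mutants

def newly_killed(killed_amplified, killed_original):
    """#Mutants.killed_new, computed as a difference of counts."""
    return killed_amplified - killed_original

def increase_killed(killed_amplified, killed_original):
    """%Inc.killed: newly killed mutants relative to the originally killed ones."""
    return 100 * newly_killed(killed_amplified, killed_original) / killed_original

print(mutation_score(40, 80), mutation_score(50, 80))  # 50.0 62.5
print(newly_killed(50, 40), increase_killed(50, 40))   # 10 25.0
```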

5.2 RQ1 — Pull Requests

In this experiment, we choose an amplified test method for each project and send a pull-request on GitHub. Before the experiment, we sent a pilot pull-request to learn how developers deal with external contributions. We first describe the pilot pull-request and then each of the other pull-requests in turn. Table 3 shows the status as well as the URL of each pull-request per project.

5.2.1 Pull-Requests Preparation

Each pull-request contains a single amplified test method (footnote 4). To attract the developers' interest, we try to select a test method that tests an important class or method. We select the class under test by scanning the class names and relating them to the context of the project. For example, since the project Zinc is an HTTP component, the class ZnRequest is likely a core class. We run the tool on the selected test class and then scan the generated test methods to select one of them. When selecting an amplified test method, we also take into account the vocabulary in the name of the original test method. We also prioritize tests that kill more mutants.

In addition, we need to explain why a test is valuable in each pull-request. Since some developers may not be familiar with the concept of mutation testing, we need to understand the test in advance and explain it in simpler words.

We inspect the selected test method, try to understand the effect of the killed mutant and come up with an easy-to-understand explanation. Examples of explanations are: increasing branch coverage (PolyMath), raising an exception (pharo-project, DataFrame), covering new state-revealing methods (Bloc, Zinc), and reducing technical debt (GraphQL).

After selecting an amplified test method, we make small corrections to the generated code, as an ordinary Pharo developer would do when confronted with auto-generated code. These corrections include choosing more meaningful names for the test method, variables and string constants, deleting superfluous lines, and adding comments with short hints.

All preparation steps are performed by the first author and reviewed by the second and third authors. At the time of the experiment, the first author's familiarity with the projects was limited to reading parts of their README descriptions on GitHub. He was thus totally unfamiliar with the projects: he had not contributed to any of them and had never reviewed their code. This shows that, although experts might find more interesting tests, an ordinary Pharo developer with limited knowledge of the projects is able to review the output and identify useful test methods that get merged into the projects. The preparation of the tests was quite straightforward and normally took no more than one hour per project.

5.2.2 Pilot Pull-Request

Initially, we sent a pull-request (footnote 5) to the Seaside project, suggesting a set of new lines for an existing test method. The main goal of this pull-request was to learn how developers deal with pull-requests from strangers.

Since Seaside is a framework for web application development, we scanned the class names and selected WARequestTest, expecting this test class to target a core class under test that handles HTTP requests. Then, we amplified the test class and selected the test method that killed the most mutants.

The selected test method killed 6 new mutants and resulted from the cooperation between input amplification (Section 3.2) and assertion amplification (Section 3.3). We merged parts of the amplified test into the original test method (#testPostFields). The test is shown in Fig. 1. Lines 5 to 10 were produced by assertion amplification on the original test method (#testPostFields). Line 15 was added by the method-call-adder input amplifier.

We wrote a description for the pull-request explaining why this test is useful. We also stated that the test method was produced by a tool, because it is important to inform developers in advance that they are participating in an experiment.

After a few days the test was merged by one of the project’s developers. Moreover, the developer left a valuable comment containing the following points:

- The suggestions do not fit this test method: the developer said “I expected the testPostfields unit test method to focus on testing the postFields”. We agree with this remark. If the suggested changes are not semantically related to the original test method, they should be moved to another test or to a new one. We took this advice into account in the subsequent pull-requests.

- Usefulness of the results for refactoring the tests: the developer also stated “the result of the test amplification makes me evaluate the existing unit tests and refactor them to improve the test coverage and test factorization”. This shows that even if the immediate results of test amplification are not tidy enough, they still help to refactor existing tests.

5.2.3 Pull-Request Details

In the following, we describe the pull-request sent to each project in detail.

PolyMath

We sent a pull-request (footnote 6) to this project suggesting a new test method in the test class PMVectorTest. The suggested test method is shown in Fig. 2.

This test method is testing the call of the method #householder on two different vectors. Before this test, the method #householder was not covered in this test class.

The method-call-adder input amplifier adds calls to existing methods in the public interface of a class to force the object under test into a new state. We merged two such generated tests into a new test method that executes two different branches of #householder (based on the condition x ≤ 0). The first vector (line 75 in Fig. 2) forces the ifTrue branch and the second vector (line 80 in Fig. 2) forces the ifFalse branch. Note that the comment text (line 75) was added manually to increase the readability of the test.

The original test method included two assertions to confirm the type of the returned value of the method (self assert: w class equals: Array). The developers asked us to omit these assertion statements. We changed the pull request accordingly and it was merged immediately.

Pharo-Launcher

We sent a pull-request (footnote 7) to this project suggesting a new test method in the test class PhLImportImageCommandTest. The suggested test method is shown in Fig. 3.

This test is derived from the original test method testCanImportAnImage, which verifies that an image can be imported using a valid filename. Small-Amp applies a literal mutation to the file name ('foo.image' changed to 'fo.image'). This yields an invalid filename and consequently raises a FileDoesNotExistException error. While preparing the test for the pull-request, we renamed the test method and the file to make them more meaningful.

The pull-request was merged on the same day with this comment: “Indeed, the test you are adding has a value. Good job SmallAmp”.

DataFrame

We sent a pull-request (footnote 8) to this project suggesting a new test method in the test class DataFrameTest. The suggested test method is shown in Fig. 4.

The variable df is an instance variable initialized in the #setUp method. It holds tabular data mixing numbers and text. The initial amplified test method was generated by adding a call to the method #range as the first statement of one of the original test methods. We considered the remaining statements superfluous and removed all of them. We also added a comment describing the exception.

This test method makes it explicit that calling the method #range on a DataFrame object containing non-numerical columns throws an exception. With this new test it becomes an explicit part of the contract for DataFrame.

The pull-request was merged after a few weeks. A developer of the project commented: “Small-amp seems to be a very valuable tool!”

Bloc

We sent a pull-request (footnote 9) to this project suggesting a new assertion in an existing test method of the test class BlKeyboardProcessorTest. The suggested test method is shown in Fig. 5.

By calling the state-revealing method #keystrokesAllowed, the assertion verifies the correctness of the object state after a #processKeyDown: event. This test is the result of combining assertion amplification with oracle reduction. The assertion amplification step normally generates many assertions, and the oracle reduction module removes all assertion statements that do not kill any mutant. The test code therefore did not need any special preparation; we only needed to add a comment explaining the test method.

The pull-request was also merged after a few weeks with a positive comment.

GraphQL

We sent a pull-request (footnote 10) to this project suggesting a new test method in the test class GQLSSchemaNodeTest. The suggested test method is shown in Fig. 6.

This test method verifies the return value of directives in a schema object. The returned value is generated in the method GQLSSchemaNode >> initializeDefaultDirectives, which contains technical debt. This test method guards against future changes that may break assumptions made by clients. We chose a meaningful name for the test and wrote a comment. We also added back some of the assertions removed by the oracle reduction step. The pull-request was merged after a few days.

Zinc

We sent a pull-request (footnote 11) to this project suggesting a new test method in the test class ZnRequestTest. The suggested test method is shown in Fig. 7.

This test method calls the method #setAcceptEncodingGzip on a request object and then calls #acceptsEncodingGzip to verify the change. Neither of these methods was covered by this test class before.

This test was built through the cooperation of three Small-Amp modules. First, the method-call-adder input amplifier adds a new method call. Then, assertion amplification inserts a set of new assertions after the added method call. Finally, after the main amplification loop has finished, the oracle reduction step removes all superfluous assertion statements. This test method did not need much preparation; we only chose a meaningful name for it. The pull-request was merged on the same day.

DiscordSt

We sent a pull-request (footnote 12) to this project suggesting a new test method in the test class DSEmbedImageTest. The suggested test method is shown in Fig. 8.

The test covers the method #extent, which was not covered by the test class before. This test method did not need much preparation; we only chose a meaningful name for it. The pull-request was merged after a few days.

MaterialDesignLite

We sent a pull-request (footnote 13) to this project suggesting two new test methods in the test class MDLCalendarTest. The suggested test methods are shown in Fig. 9.

Both test methods are similar and were created by adding a new method call to the test input. The tests target the Calendar widget and verify the correctness of the #selectPreviousYears and #selectNextYears methods. In these test methods, the oracle reduction step removed most of the assertions and preserved only the first assertion killing the mutant: self assert: calendar yearsInterval fourth equals: 2006. We replaced the assertions with more human-readable ones (asserting the first and last elements of the interval). The pull-request was merged the day after.

PetitParser2

We sent a pull-request (footnote 14) to this project suggesting a new test method in the test class PP2NoopVisitorTest. The suggested test method is shown in Fig. 10.

The test method checks the value of currentContext in the result object. It resulted from assertion amplification combined with oracle reduction. The test had two assertions: self deny: visitor isRoot and self assert: visitor currentContext class equals: PP2NoopContext. We added back some of the removed assertions relating to currentContext and removed self deny: visitor isRoot to make the test more focused. The pull-request had not been merged at the time of writing (July 5, 2022).

OpenPonk

We sent a pull-request (footnote 15) to this project suggesting a set of new lines in an existing test method of the test class OPDiagramTest. The suggested test method is shown in Fig. 11.

The original test method is presented in Listing 14.

Small-Amp split the statement at line 4 of Listing 14 into two statements (visible in lines 104 and 108 of Fig. 11) and then added a series of assertions. Since this test is dedicated to testing model, we kept all assertions reflecting the state of model and removed the others. Thus, the assertions in lines 104 to 107 verify the state of a freshly initialized OPDiagram object (where model is nil), and the assertions in lines 109 to 112 verify the public API through the accessor methods. The pull-request had not been merged at the time of writing this paper.

Telescope

We sent a pull-request (Footnote 16) to this project suggesting a new test method for the test class TLNodeCreationStrategyTest. The suggested test method is shown in Fig. 12.

The test method verifies the state of the object returned by copyAsSimpleStrategy. This method was never covered by the test class, and it also contains technical debt. The call to copyAsSimpleStrategy was added by the method-call-addition amplifier, and the state of the returned value is asserted via assertion amplification. We kept all assertions related to the returned value and removed all other superfluous lines to make the test more readable. The pull-request had not been merged at the time of writing this paper.

5.3 RQ2 — Focus

We use the results in Table 4 to answer the next research questions. This table presents the results of test amplification on all test classes selected in our dataset. In Table 4, the first 104 rows represent test amplification for test classes with high mutation coverage, while the remaining rows show the test classes with poor mutation coverage. The Small-Amp algorithm (Algorithm 1) is stochastic; in particular, test input reduction (Section 4.2) depends heavily on randomness. Therefore, we ran the algorithm three times on each test class to observe the effect of randomness on the results.

In addition, we ran the algorithm once more with the profiling and the type-sensitive operator disabled, to investigate the effectiveness of the type profiler (denoted by ∘∘∘).

The columns in this table indicate:

- Id: Used as a reference for the row in the table.
- Class: The name of the test class to be amplified.
- # Test methods original: The number of test methods in the test class before test amplification.
- # loc CUT: The number of lines of code in the class under test.
- % Mut. score: The mutation score (percentage) of the test class before test amplification.
- # New test methods: The absolute number of newly generated test methods after test amplification.
- # Focused methods: The absolute number of focused methods among the generated test methods.
- # Killed mutants original: The absolute number of mutants killed by the test class before test amplification.
- # Newly killed amplified: The absolute number of mutants newly killed by the test class after test amplification.
- % Increase killed amplified: The relative increase (in percentage) of killed mutants after test amplification.
- # Newly mutant A-amp: The absolute number of mutants newly killed only by assertion amplification (N_iteration = 0 in Algorithm 1).
- % Increase killed only A-amp: The relative increase (in percentage) of killed mutants due to assertion amplification only.
- # Newly killed type aided: The absolute number of mutants newly killed by type-sensitive input amplifiers.
- % Increase killed type aided: The relative increase (in percentage) of killed mutants due to type-sensitive input amplifiers.
- Time: The duration of the test amplification process in hours:minutes:seconds (h:m:s) format.
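To make the relationship between the raw counts and the percentage columns explicit, the following sketch computes % Mut. score and % Increase killed amplified for one row. It assumes that the mutation score is the share of all generated mutants killed by the original test class and that the increase is measured relative to the originally killed mutants; the class `AmplificationRow` and its field `total_mutants` (not a column of the table) are hypothetical, and the counts are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AmplificationRow:
    """Raw counts for one test class (one row of the results table)."""
    total_mutants: int    # all mutants generated for the class under test (assumed)
    killed_original: int  # "# Killed mutants original"
    newly_killed: int     # "# Newly killed amplified"

    def mutation_score(self) -> Optional[float]:
        """% Mut. score before amplification; None when no mutant was generated."""
        if self.total_mutants == 0:
            return None
        return 100.0 * self.killed_original / self.total_mutants

    def increase_killed(self) -> Optional[float]:
        """% Increase killed amplified, relative to the originally killed mutants."""
        if self.killed_original == 0:
            return None
        return 100.0 * self.newly_killed / self.killed_original


row = AmplificationRow(total_mutants=60, killed_original=48, newly_killed=1)
print(row.mutation_score())             # 80.0
print(round(row.increase_killed(), 2))  # 2.08
```

Returning None instead of dividing by zero covers classes for which the mutation tool generates no mutant at all.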

RQ2: To what extent are improved test methods considered as focused?

To answer this research question, we use the values in the column # Focused methods. We use the same definition of focused methods as DSpot:

Focus is defined as where at least 50% of the newly killed mutants are located in a single method. Danglot et al. (2019b)

Generating focused tests is important because a focused test is easier to analyse (most of its killed mutants reside in the same method under test) and hence should take developers less time to review. To calculate this value, we use the annotations that Small-Amp generates on the new test methods, which detail the mutants killed by each method.

We see that almost all amplified tests are focused. Only in two cases (BlInsetsTest, #117 and #118) are some of the generated methods not focused.
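The focus criterion quoted above can be expressed as a small check. The sketch assumes that, for each newly killed mutant, the annotation gives the name of the method under test that contains it; `is_focused` and the example selectors other than `#extent` are hypothetical.

```python
from collections import Counter
from typing import Iterable


def is_focused(mutant_locations: Iterable[str], threshold: float = 0.5) -> bool:
    """True if at least `threshold` of the newly killed mutants lie in one method."""
    locations = list(mutant_locations)
    if not locations:
        return False  # no newly killed mutant: nothing to be focused on
    _, top_count = Counter(locations).most_common(1)[0]
    return top_count / len(locations) >= threshold


# One entry per newly killed mutant: the method under test that contains it.
print(is_focused(["#extent", "#extent", "#other"]))  # True
print(is_focused(["#a", "#b", "#c"]))               # False
```

With the 50% threshold, a test whose killed mutants are split evenly between two methods still counts as focused.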

5.4 RQ3 — Mutation Coverage

RQ3: To what extent do improved test classes kill more mutants than developer-written test classes?

In 86/156 cases (55.12%), Small-Amp successfully amplified an existing test class. The distributions of the number of killed mutants and of the increase in killed mutants are presented in Figs. 13 and 14 for all test classes, both highly and poorly covered. The number of newly killed mutants in these classes (column # Newly killed amplified) varies from 1 up to 50 mutants (case #119). For test classes with high coverage, Small-Amp amplifies 38 out of 78 executions (48.7%), while for test classes with poor coverage it amplifies 48 out of 78 (61.5%). Therefore, we see more amplification in the classes with poor coverage. The relative increase in mutation score (column % Increase killed amplified) varies from 2.08% (cases 149-151) up to 200% (cases 105-107). These metrics also show that amplification is more successful on classes with poor coverage.

Surprisingly, despite running MuTalk with all its mutation operators, the mutation testing framework did not manage to create any mutant for the class tested by TLLegendTest (cases 177-179). MuTalk mutation operators work statically, and the tool provides only a limited set of well-known transformations.

The Effect of Randomness

In this section, we report the effect of randomness on the results. According to Algorithm 1, randomness mainly arises during the input-amplification (Line 10) and test input reduction (Line 12) steps. Therefore, we expect minimal differences in the results generated by assertion amplification (Line 5). Column 11 (# Newly mutant A-amp) shows the absolute number of mutants killed only by assertion amplification. These values are identical across executions for all classes except case 81 (TLExpandCollapseNodesActionTest). The reason for this exception is that, in one run, a specific mutant may be killed by input amplification while amplifying one test method, whereas in another run, if input amplification does not kill it, the same mutant is killed by assertion amplification while amplifying the next test method. Based on Table 4, and setting execution time aside, different executions achieve the same results for 43 out of 52 test classes.
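The exception for case 81 follows from how kills are credited when mutants that are already killed are not counted again. The sketch below models this under the assumption, suggested by the explanation above, that the set of killed mutants is shared by all test methods of a class and that, for each test method, assertion amplification runs before input amplification; `attribute_kills` and the mutant id `m1` are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def attribute_kills(steps: List[Tuple[Set[str], Set[str]]]) -> Dict[str, Set[str]]:
    """Credit each mutant to the first step that kills it.

    `steps` holds, per test method in processing order, the mutants killed by
    assertion amplification and by input amplification, respectively.
    """
    killed: Set[str] = set()  # shared across all test methods of the class
    credit: Dict[str, Set[str]] = {"a_amp": set(), "input_amp": set()}
    for a_amp_kills, input_amp_kills in steps:
        new_a = a_amp_kills - killed      # assertion amplification runs first
        credit["a_amp"] |= new_a
        killed |= new_a
        new_i = input_amp_kills - killed  # then the (random) input amplification
        credit["input_amp"] |= new_i
        killed |= new_i
    return credit


# Run 1: input amplification on the first test method happens to kill "m1".
print(attribute_kills([(set(), {"m1"}), ({"m1"}, set())]))
# {'a_amp': set(), 'input_amp': {'m1'}}
# Run 2: it does not, so assertion amplification on the next method gets the credit.
print(attribute_kills([(set(), set()), ({"m1"}, set())]))
# {'a_amp': {'m1'}, 'input_amp': set()}
```

The total number of killed mutants is the same in both runs; only the attribution to the A-amp column changes.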

For a deeper investigation, we randomly select 5 test classes from the cases affected by randomness and 5 test classes from the cases without an observable change. We then run Small-Amp 10 times on each of these classes (10 classes × 10 runs = 100 runs). Table 5 shows the results of this experiment. In column X10, we report the number of newly killed mutants for each run in order, which is the most important metric for amplification. In column X3, we repeat the values from column # Newly killed amplified in Table 4 to ease comparison. The next two columns compare the median and average values of these two columns. The first 5 rows of this table are the cases affected by randomness, and the next 5 rows are cases without an observable effect.

When we compare the values in column X10 with X3, we still do not see any visible effect of randomness in rows 6 to 10. In the first 5 rows, the median and average values of both experiments are similar. In three cases (rows 2, 3 and 4), the X3 runs did not reach the maximum number of killed mutants seen in X10. In one case (row 1), some X10 runs lack any improvement, while all X3 runs show a successful amplification. To sum up, randomness has an effect on the results, but the impact is minimal and does not invalidate the findings. Moreover, repeating the analysis 3 times is justifiable, since running it 10 times adds little extra information at the cost of a large increase in processing time.
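The comparison of columns X10 and X3 boils down to a few summary statistics per test class. The following helper is a sketch of that comparison; the function name and the input lists are hypothetical and do not reproduce the values of Table 5.

```python
from statistics import mean, median
from typing import Dict, List


def summarize_runs(newly_killed: List[int]) -> Dict[str, float]:
    """Summarize '# Newly killed' values over repeated runs of one test class."""
    return {
        "median": median(newly_killed),
        "average": mean(newly_killed),
        "max": max(newly_killed),
        "runs_without_improvement": sum(1 for n in newly_killed if n == 0),
    }


# Hypothetical values for one test class, not taken from Table 5.
x10 = [0, 4, 4, 5, 4, 0, 4, 5, 4, 4]
x3 = [4, 4, 5]
print(summarize_runs(x10))
print(summarize_runs(x3))
```

Looking at the maximum and at the runs without improvement, in addition to median and average, is what reveals the two patterns described above: X3 missing the best X10 result, and X10 containing runs with no improvement.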

5.5 RQ4 — Amplification Steps

RQ4: What is the contribution of input amplification and assertion amplification (the main steps in the test amplification algorithm) to the effectiveness of the generated tests?

As we reported in Section 5.4, in 86/156 cases (55.12%), the improvements result from the cooperation of input amplification and assertion amplification. For this research question, we study the results generated only by assertion amplification, as well as those generated with the help of the type-sensitive operators.

Contribution of the Assertion-Amplification Step

In this section, we select all amplified test methods that are generated only by assertion amplification. In other words, we only count the improvements from amplified test methods selected from the first assertion amplification (Line 5 in Algorithm 1). Column 11 (# Newly mutant A-amp) shows the absolute number of mutants killed only by assertion amplification; column 12 (% Increase killed only A-amp) shows the relative increase. The results reported in Table 4 show that in 61/156 cases (39.1%), at least 1 mutant is killed only by the assertion-amplification step. Improvements in four classes (cases 53-55, 65-67, 73-75 and 149-151) were achieved solely by adding new assertions.

Contribution of the Type Sensitive Input Amplifiers

Here, we select all amplified test methods in which a type-sensitive input amplifier (in our case, method-call-adder) is used in at least one transformation. Since type-sensitive operators use the information provided by the dynamic profiling step (Section 4.1), their contribution matters: it shows the effectiveness of dynamic profiling.

Column 13 (# Newly killed type aided) shows the absolute number of mutants newly killed by the type-sensitive input amplifiers, and column 14 (% Increase killed type aided) shows the relative increase. In 30/156 cases (19.2%), the type-sensitive input amplifiers contribute to the result. In particular, for 2 test classes (WebGrammarTest, rows 37-39, and ZnStatusLineTest, rows 125-127), Small-Amp was able to amplify the tests only through the type-sensitive input amplifiers.

To assess the impact of type profiling, we quantified the effect of the steps that rely on it. We therefore extended the analysis with an additional evaluation step in which we disabled the type profiler as well as the type-sensitive input amplifier (the method-call-addition amplifier) and ran the tool on all test classes. The results of this experiment are reported in the fourth row for each test class in Table 4 (denoted by ∘∘∘). We focus on the cases in which type-sensitive input amplifiers improved the coverage in at least two of three runs (10 test classes; cases starting with 33, 37, 101, 109, 113, 117, 125, 165, 173 and 185). In 8 of these cases, disabling the profiling and the type-sensitive operator reduces the improvements; only in two cases do we see no difference (case 101) or a slight improvement (case 117).
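As a rough illustration of why a method-call adder needs type information, the following sketch appends one unary message send per profiled variable, choosing selectors by the class the profiler observed for that variable. It is a simplified model under stated assumptions: statements are Smalltalk source strings, only unary selectors are considered, and the class name `CalendarWidget` and the function `add_method_calls` are hypothetical; the selectors come from the MaterialDesignLite example above.

```python
from typing import Dict, List


def add_method_calls(
    statements: List[str],
    profiled_types: Dict[str, str],
    unary_selectors: Dict[str, List[str]],
) -> List[List[str]]:
    """Return new test inputs, each extending `statements` with one unary call
    on a variable whose class was observed by the (assumed) type profiler."""
    variants: List[List[str]] = []
    for variable, class_name in sorted(profiled_types.items()):
        # Without a profiled type there is no way to pick valid selectors.
        for selector in unary_selectors.get(class_name, []):
            # Smalltalk unary message send, e.g. 'calendar selectNextYears.'
            variants.append(statements + [f"{variable} {selector}."])
    return variants


variants = add_method_calls(
    ["calendar := CalendarWidget new."],
    {"calendar": "CalendarWidget"},
    {"CalendarWidget": ["selectPreviousYears", "selectNextYears"]},
)
for variant in variants:
    print(variant)
```

Each variant would then go through assertion amplification, so that the state reached after the added call gets asserted.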
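Columns 11 to 14 can be derived from the provenance of each amplified test method. The sketch assumes that every amplified test method records the input amplifiers applied to it (an empty list meaning it stems from the first assertion amplification) together with the mutants it newly kills; `AmplifiedTest`, `contributions` and the amplifier name `literal-amplifier` are hypothetical, and `method-call-adder` is treated as the only type-sensitive amplifier, as in the text.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

TYPE_SENSITIVE = {"method-call-adder"}  # the only type-sensitive amplifier here


@dataclass
class AmplifiedTest:
    newly_killed: Set[str]  # ids of mutants newly killed by this test method
    # Input amplifiers applied to obtain this test; empty = first A-amp step only.
    transformations: List[str] = field(default_factory=list)


def contributions(tests: List[AmplifiedTest],
                  killed_original: int) -> Tuple[int, float, int, float]:
    """Return (# only A-amp, % only A-amp, # type aided, % type aided).

    Assumes killed_original > 0.
    """
    a_amp_only: Set[str] = set()
    type_aided: Set[str] = set()
    for test in tests:
        if not test.transformations:
            a_amp_only |= test.newly_killed
        if TYPE_SENSITIVE.intersection(test.transformations):
            type_aided |= test.newly_killed

    def percent(n: int) -> float:
        return 100.0 * n / killed_original

    return (len(a_amp_only), percent(len(a_amp_only)),
            len(type_aided), percent(len(type_aided)))


tests = [
    AmplifiedTest({"m1"}),
    AmplifiedTest({"m2", "m3"}, ["method-call-adder"]),
    AmplifiedTest({"m4"}, ["literal-amplifier"]),
]
print(contributions(tests, killed_original=20))  # (1, 5.0, 2, 10.0)
```

Using sets avoids counting a mutant twice when several amplified tests kill it.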

5.6 RQ5 — Comparison

RQ5: How does Small-Amp compare against DSpot?

In this research question, we compare the results of our quantitative and qualitative studies with the corresponding DSpot results reported in Danglot et al. (2019b).

Table 6 compares the results of Small-Amp and DSpot. Small-Amp is validated against 52 test classes; it successfully amplified 28, 29 and 29 of them in the three runs (≈ 55%), while DSpot was validated against 40 test classes, of which 26 were improved (65%). The most notable differences between Small-Amp and DSpot are the number of mutants in the two ecosystems and, consequently, the number of newly generated test methods (denoted by ∗ in Table 6). These differences can be attributed to the use of different mutation testing frameworks in two different languages: Small-Amp uses MuTalk, which has notably fewer mutation operators than DSpot's counterpart, PITest. To reduce the effect of the mutation testing framework, we calculate the relative increase in killed mutants within the two ecosystems as follows:

This value is shown in row 12 of Table 6 for both experiments: it is 14.03% in total for Small-Amp and 20.26% for DSpot.

We also submitted 11 pull-requests based on Small-Amp outputs, and developers merged 8 of them (≈ 72%), while Danglot et al. submitted 19 pull-requests derived from DSpot output, of which 13 were merged (68%).

Finally, note that Small-Amp is configured with N_maxInput = 10, which means the reduction step (Algorithm 1, line 12) selects 10 test inputs in each iteration. The corresponding value for DSpot is not reported in its paper. Increasing this hyperparameter may improve the results, but it may also significantly increase the execution time.
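The role of N_maxInput in the reduction step can be sketched as a uniform random sample of the candidate test inputs. Uniform sampling is an assumption made for illustration; Small-Amp's actual selection policy may differ, and `reduce_inputs` is a hypothetical name. Passing an explicit `random.Random` instance makes a run reproducible, which is one way to study the run-to-run variation discussed in Section 5.4.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def reduce_inputs(candidates: Sequence[T], n_max_input: int = 10,
                  rng: Optional[random.Random] = None) -> List[T]:
    """Keep at most n_max_input candidate test inputs, chosen uniformly at random."""
    rng = rng if rng is not None else random.Random()
    if len(candidates) <= n_max_input:
        return list(candidates)  # nothing to drop
    return rng.sample(list(candidates), n_max_input)


# Two runs with different seeds usually keep different subsets of the inputs.
candidates = [f"input-{i}" for i in range(40)]
print(reduce_inputs(candidates, rng=random.Random(1)))
print(reduce_inputs(candidates, rng=random.Random(2)))
```

A larger `n_max_input` keeps more candidates alive per iteration, which raises the chance of killing extra mutants but multiplies the number of inputs that must be executed and assertion-amplified.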

5.7 RQ6 — Time Costs

RQ6: What is the time cost of running Small-Amp, including its steps?