Abstract

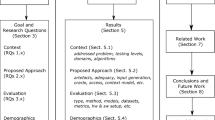

The evolution of a new technology depends upon a good theoretical basis for developing the technology, as well as upon its experimental validation. In order to provide for this experimentation, we have investigated the creation of a software testbed and the feasibility of using the same testbed for experimenting with a broad set of technologies. The testbed is a set of programs, data, and supporting documentation that allows researchers to test their new technology on a standard software platform. An important component of this testbed is the Unified Model of Dependability (UMD), which was used to elicit dependability requirements for the testbed software. With a collection of seeded faults and known issues of the target system, we are able to determine if a new technology is adept at uncovering defects or providing other aids proposed by its developers. In this paper, we present the Tactical Separation Assisted Flight Environment (TSAFE) testbed environment for which we modeled and evaluated dependability requirements and defined faults to be seeded for experimentation. We describe two completed experiments that we conducted on the testbed. The first experiment studies a technology that identifies architectural violations and evaluates its ability to detect the violations. The second experiment studies model checking as part of design for verification. We conclude by describing ongoing experimental work studying testing, using the same testbed. Our conclusion is that even though these three experiments are very different in terms of the studied technology, using and re-using the same testbed is beneficial and cost effective.

Acknowledgements

This work is sponsored by NSF grant CCF0438933, “Flexible High Quality Design for Software” and by the NASA High Dependability Computing Program under cooperative agreement NCC-2-1298. We thank our HDCP team members at the University of Southern California, especially Dr. Barry Boehm for fruitful collaboration. We also thank the reviewers for insightful comments on the paper and Jen Dix for proof-reading.

Access to the testbed

Please contact Mikael Lindvall at mlindvall@fc-md.umd.edu for further information on how to obtain access to the testbed and related artifacts. Several versions of the testbed exist and determining exactly which one is the most appropriate depends on the purpose of the experiment.

About this article

Cite this article

Lindvall, M., Rus, I., Donzelli, P. et al. Experimenting with software testbeds for evaluating new technologies. Empir Software Eng 12, 417–444 (2007). https://doi.org/10.1007/s10664-006-9034-0

DOI: https://doi.org/10.1007/s10664-006-9034-0