Abstract

Online collaborative learning (OCL) has been a mainstream pedagogy in the field of higher education. However, learners often produce off-topic information and engage less during online collaborative learning compared to other approaches. In addition, learners often cannot converge in knowledge, and they often do not know how to coregulate with peers. To cope with these problems, this study proposed an immediate analysis of interaction topics (IAIT) approach through deep learning technologies. The purpose of this study is to examine the effects of the IAIT approach on group performance, knowledge convergence, coregulation, and cognitive engagement in online collaborative learning. In total, 60 undergraduate students participated in this quasi-experimental study. They were assigned to either the experimental or the control groups. The students in the experimental groups conducted online collaborative learning with the IAIT approach, and the students in the control groups conducted online collaborative learning only without any particular approach. The whole study lasted for three months. Both qualitative and quantitative methods were adopted to analyze data. The results indicated that the IAIT approach significantly promoted group performance, knowledge convergence, coregulated behaviors, and cognitive engagement. The IAIT approach did not increase learners’ cognitive load. The results, together with the implications for teachers, practitioners and researchers, are also discussed in depth.

1 Introduction

Online collaborative learning (OCL) refers to a pedagogy in which learners who are geographically separated learn together online to complete tasks and solve problems (Reeves et al., 2004). As an effective pedagogy, online collaborative learning can engage students in learning together anytime and anywhere (Lampe et al., 2011). The advantages of online collaborative learning are well known, including improving learning performance (Ng et al., 2022), social skills (Meijer et al., 2020), and problem-solving abilities (Rosen et al., 2020). During online collaborative learning, learners often generate a large amount of online discussion content. Chen et al. (2021) found that learners often become lost among large amounts of online discussion content because there is lack of an informative presentation format for online discussion content. Gao et al. (2013) also revealed that the effectiveness of online discussion would decrease if there was a lack of visual cues and real-time feedback during online collaborative learning. Furthermore, Peng et al. (2020) revealed that learners often cannot locate and track interaction topics during online discussion. There is often off-topic information or shallow discussion during online collaborative learning, which results in deviation from collaborative learning tasks and low learning performance (Wu, 2022).

To cope with these problems, previous studies have adopted manual coding or traditional machine learning methods to detect topics, especially off-topic information (Wu, 2022). However, the accuracies of traditional machine learning methods were very low (Wu, 2022). With the rapid development of artificial intelligence technologies, deep learning technologies have emerged and been widely adopted in the field of education. Deep learning technologies are based on learning architectures called deep neural networks (DNNs) that can overcome the shortcomings of shallow networks (Shrestha & Mahmood, 2019). DNNs are conceptualized as the neural networks used in deep learning (Sze et al., 2017). Liu et al. (2017) revealed that DNNs can yield more competitive results than traditional machine learning methods. Nevertheless, few studies have adopted DNNs to analyze interaction topics immediately in an online collaborative learning context. In fact, automated topic detection helps both teachers and students to quickly grasp the main content during online discussion (Ahmad et al., 2022). The automatic analysis of interaction topics can contribute to formative assessment of collaborative learning processes (Wong et al., 2021). Therefore, it is necessary to analyze interaction topics in real time during online collaborative learning.

This study aims to propose an innovative approach to the immediate analysis of interaction topics through DNNs to promote group performance, knowledge convergence, cognitive engagement, and coregulation in the OCL context. Group performance is conceptualized as the quantity or quality of the products yielded by group members (Weldon & Weingart, 1993). Knowledge convergence is defined as learners having knowledge of the same concepts as their peers (Weinberger et al., 2007). Cognitive engagement is defined as the extent to which one is thinking about the learning activity (Ben-Eliyahu et al., 2018). Coregulation refers to dynamic regulatory processes through which individuals internalize social and cultural influences (Volet et al., 2009). In addition, Wu et al. (2018) found that the use of technology may increase cognitive load. Thus, the research questions are addressed as follows:

1. Can the immediate analysis of interaction topic (IAIT) approach improve group performance in comparison with the traditional online collaborative learning (TOCL) approach?
2. Can the IAIT approach promote knowledge convergence?
3. Can the IAIT approach enhance cognitive engagement?
4. Can the IAIT approach promote coregulation?
5. Can the IAIT approach increase cognitive load?

2 Literature review

2.1 Topic detection in online learning

A topic refers to a set of activities that are strongly related to seminal real-world events (Allan, 2002). Topic detection is defined as an automatic technique for finding topically related material in streams of data (Wayne, 1997). Recently, topic detection has been widely used in the field of online learning. The purpose of topic detection is to detect latent topics in online learning and help teachers guide and intervene with learners to improve learning performance (Peng et al., 2020). Typically, there are two approaches to detecting topics in the online learning field. One approach is to utilize traditional methods; the other approach is to utilize deep learning techniques.

Many studies have adopted traditional methods to detect topics in the field of online learning. For example, Li et al. (2015) employed key terms to detect hot topics in online learning communities. Chen et al. (2021) adopted Latent Dirichlet Allocation (LDA) to detect topics in asynchronous online discussion to help learners grasp discussion topics. Wong et al. (2019) integrated unsupervised and supervised methods to extract topics from Massive Open Online Course (MOOC) discussion forums. Wu (2022) combined traditional machine learning methods and manual methods to detect off-topic information in online discussion. Shahzad and Wali (2022) utilized word mover distance, word embedding, and a random forest algorithm to detect off-topic essays.

Recently, deep learning techniques, especially deep neural network models, have been utilized for topic detection. A DNN is a neural network modeled as a multilayer perceptron trained with algorithms to learn representations without any manual design of feature extractors (Shrestha & Mahmood, 2019). Deep neural networks are able to achieve higher performance than shallow neural networks and traditional machine learning methods since deep neural networks can extract complex and high-level features (Sze et al., 2017). Previous studies have employed deep neural network models to detect MOOC and social media topics. For example, Xu and Lynch (2018) adopted a deep neural network model, namely, BiLSTM (bidirectional long short-term memory model), to detect question topics in MOOC forums. Asgari-Chenaghlu et al. (2020) employed bidirectional encoder representations from transformers (BERT) to detect topics in social media. BERT was proposed by Devlin et al. (2019), who revealed that BERT is empirically powerful since it can be fine-tuned to capture the semantic relationships among labels. However, very few studies have adopted BERT to detect interaction topics in the online collaborative learning context. This study aims to close this research gap by examining the effectiveness of utilizing BERT to detect interaction topics in an online collaborative learning context.

2.2 Knowledge convergence

Knowledge convergence is a main goal of collaborative learning (Mercier, 2017). Roschelle and Teasley (1995) believed that the mission of collaborative learning is to construct a shared understanding of interaction topics through knowledge convergence. However, learners often cannot converge in knowledge during online collaborative learning (Chen, 2017). For instance, learners often discuss irrelevant or off-topic information during online collaborative learning, which results in diverging knowledge. Learners cannot grasp the whole group’s interaction topics, and they often become lost during online discussion (Chen et al., 2021).

To promote knowledge convergence, different strategies have been employed in previous studies, including online reciprocal peer feedback (Chen, 2017), collaborative learning activities (Draper, 2015), collaborative concept maps (Chen et al., 2018), and learning goals (Mercier, 2017). In addition, Hernández-Sellés et al. (2020) found that cognitive, social, and organizational interactions contribute to reaching knowledge convergence in collaborative learning. Zheng et al. (2022a) adopted a learning analytics-based method to promote knowledge convergence in the CSCL context. Nevertheless, there is a paucity of studies analyzing interaction topics in real time to facilitate knowledge convergence.

2.3 Cognitive engagement

Cognitive engagement plays a very important role in online learning (Richardson & Newby, 2006). It also represents the level of mental involvement in learning (Li et al., 2021). Furthermore, cognitive engagement can also reflect students’ knowledge construction level when completing a learning task, and it denotes lower-level and higher-level cognitive strategies (Liu et al., 2022). Previous studies have adopted traditional or automated measurement methods to measure cognitive engagement. Traditional measurement methods include self-report scales (Greene, 2015), interviews, observations, and ratings (Li et al., 2021). Automated measurement methods include using log files, physiological or neurological sensors, computer vision, and machine learning methods to automatically measure cognitive engagement (Whitehill et al., 2014).

Furthermore, cognitive engagement is closely related to productive learning outcomes (Chi & Wylie, 2014), but learners often cognitively engage less during the process of online collaborative learning compared to other learning processes (Lin et al., 2020). Previous studies have adopted different strategies to promote cognitive engagement. For example, Wen (2021) adopted augmented reality technologies to enhance cognitive engagement. Ouyang and Chang (2019) proposed that empowering learners to take leadership roles contributes to enhancing cognitive engagement in online discussion. Several studies have also adopted learning analytics intervention to promote cognitive engagement (Kew & Tasir, 2022; Chen et al., 2022); however, there is a paucity of empirical research on instantly analyzing interaction topics to promote cognitive engagement. This study aims to close this research gap to immediately analyze interaction topics to increase cognitive engagement in the online collaborative learning context.

2.4 Coregulation

Coregulation is an interactive process in which regulatory activity is shared and embedded in the interactions (Hadwin & Oshige, 2011). It is composed of emergent social interactions mediated by setting goals, making plans, monitoring, and evaluating (Zheng et al., 2016). Coregulation focuses on mutuality and shared representation in a collaborative learning context. It is crucial for successful collaborative learning, and it provides temporary support for each other’s self-regulated learning (Schoor et al., 2015). However, learners often do not know how to regulate each other during collaborative learning (Borge et al., 2022).

Previous studies have adopted different strategies to promote coregulation. For example, Miller and Hadwin (2015) adopted scripts or awareness tools to facilitate coregulation. Zheng et al. (2022b) adopted personalized feedback to promote coregulation. However, there is a paucity of studies promoting coregulation through immediate analysis of interaction topics in online collaborative learning. To address this problem, this study aims to enhance coregulation using an immediate analysis of interaction topics approach in an online collaborative learning context.

3 Methodology

3.1 An innovative approach to the immediate analysis of interaction topics in OCL

The present study proposed an innovative approach to immediately analyzing interaction topics and providing feedback in online collaborative learning. The proposed approach included three steps. The first step was to conduct online collaborative learning and collect online discussion transcripts through the online collaborative learning platform. Figure 1 shows the online collaborative learning platform.

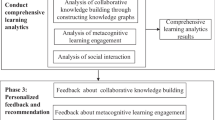

The second step was to automatically analyze interaction topics through a deep neural network model. The deep neural network model is bidirectional encoder representations from transformers (BERT), which involves pre-training and fine-tuning (Devlin et al., 2019). In the pre-training phase, the model is trained with an unlabeled corpus, and in the fine-tuning phase, the model is fine-tuned using labeled data (González-Carvajal & Garrido-Merchán, 2020). BERT is thus a pre-trained model that is trained by masked language modeling and recovers the masked tokens using a bidirectional transformer (Minaee et al., 2021). In this study, the Chinese BERT-base was adopted with 12 layers, a hidden size of 768, 12 self-attention heads, and 110 M parameters based on Devlin et al. (2019). Before this study, the first author collected 10,559 online collaborative learning records from college students who completed the same collaborative learning tasks as those used in this study. In total, these 10,559 records were used to train, validate, and test BERT. In this study, 70% of the data were the training set, 20% of the data were the validation set, and 10% of the data were the test set. In addition, other competing models, including LSTM (long short-term memory), support vector machine (SVM), logistic regression (LR), and naive Bayes (NB), were also compared. As shown in Table 1, BERT achieved the highest accuracy among all competing models. Therefore, this study selected BERT to automatically analyze interaction topics for one group and all groups. The third step was to provide personalized feedback and to recommend learning resources based on the analysis results. Figure 2 shows the topic analysis results and feedback for one group. Figure 3 shows the topic analysis results of all groups and feedback.
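The 70/20/10 partition described above can be illustrated with a minimal sketch. The study does not report how the records were shuffled or split, so the function name `split_records`, the fixed seed, and the placeholder record strings below are assumptions made purely for illustration.

```python
import random


def split_records(records, train=0.7, val=0.2, seed=42):
    """Shuffle records and split them into train/validation/test partitions.

    The test partition receives whatever remains after the train and
    validation partitions are taken, so no record is dropped.
    """
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # seed is an arbitrary assumption
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


# Placeholder strings stand in for the 10,559 discussion records.
records = [f"record-{i}" for i in range(10559)]
train_set, val_set, test_set = split_records(records)
print(len(train_set), len(val_set), len(test_set))  # 7391 2111 1057
```

With truncating integer arithmetic, the three partitions contain 7,391, 2,111, and 1,057 records, which is one plausible reading of the reported proportions.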

3.2 Participants

This study enrolled 60 college students from a top-10 public university in China. The average age of participants was 22 years old. There were 53 females and 7 males, which is in line with the population distribution of this university. The participants were majoring in fields such as literature, education, psychology, politics, mathematics, and geography. All participants had prior knowledge and had experienced collaborative learning before this study. In total, 30 students were assigned to the experimental groups, and another 30 students were assigned to the control groups. Participants were not randomly assigned into the experimental and control groups since this study was a quasi-experimental study. Furthermore, there was no significant difference in prior knowledge between the experimental and control groups (t = 0.075, p = .941). Although there were more females than males, there was no significant difference in gender distribution between the experimental and control groups (χ² = 1.456, p = .228). Informed written consent was obtained from all participants, and they all agreed to participate in this study.

3.3 Experimental procedure

This study adopted a mixed research design to compare the impacts of the IAIT approach on group performance, knowledge convergence, coregulated behavioral patterns, cognitive engagement, and cognitive load. The whole experiment lasted for three months. There were six phases during this experiment, as shown in Fig. 4. The first phase was to conduct a pretest for 15 min to examine the previous knowledge of all participants. The second phase was to introduce how to use the online collaborative learning platform and the IAIT approach. The third phase was to conduct online collaborative learning in different time slots and complete the same collaborative learning task for the same duration. The students in the ten experimental groups conducted online collaborative learning with the IAIT approach. The students in the ten control groups conducted online collaborative learning without obtaining any analysis results about collaborative learning processes and outcomes. The topic of online collaborative learning tasks focused on the cultivation of abilities. More specifically, the task included four subtasks, namely, how to define abilities; what are the relationships among abilities, skills, and knowledge; what kinds of abilities are crucial for experts in all professions and trades; and how can learners’ innovative abilities be fostered? Each group collaboratively completed an online document about the solutions to collaborative learning tasks as a group product. The fourth phase was to conduct the posttest and postquestionnaire for 30 min. The fifth phase was to interview each experimental group face-to-face for 30 min. The last phase was to complete a delayed posttest three days later.

3.4 Instruments

The pretest, posttest, delayed posttest, and cognitive load questionnaire were adopted as instruments in this study. The purpose of the pretest was to examine the participants’ prior knowledge. The pretest with a perfect score of 100 consisted of ten multiple-choice items, two short-answer questions, and two essay questions. The pretest, posttest, and delayed posttest were developed and rated by two experienced experts. The reliability of the pretest reached 0.81, indicating high internal reliability (Cohen, 1988). The posttest with a perfect score of 100 consisted of ten multiple-choice items, three short-answer questions, and two essay questions. The reliability of the posttest reached 0.85, indicating high internal reliability (Cohen, 1988). The items used in the delayed posttest were the same as those used in the original posttest. The reliability of the delayed posttest reached 0.83, indicating high internal reliability (Cohen, 1988). In addition, the cognitive load questionnaire was adapted from Hwang et al. (2013). The questionnaire consisted of five items measuring mental load and three items measuring mental effort. The reliability of the cognitive load questionnaire calculated by Cronbach’s alpha reached 0.86, indicating high internal reliability (Cohen, 1988).

3.5 Data analysis methods

The collected datasets in this study included 60 pretests, 60 posttests, 60 delayed posttests, and 60 cognitive load questionnaires, online discussion transcripts of 20 groups, 20 group products, and interview records of 10 groups. The computer-assisted knowledge graph analysis method, content analysis method, and lag sequential analysis method were employed in this study.

First, online discussion transcripts of 20 groups were analyzed through the computer-assisted knowledge graph analysis method proposed by Zheng et al. (2015). The computer-assisted knowledge graph analysis method includes three steps, namely, drawing the target knowledge graph, coding online discussion transcripts based on predefined rules, and calculating the knowledge convergence level through the developed analytic tool. Two coders analyzed all online discussion transcripts. The interrater reliability calculated through kappa statistics reached 0.8, indicating high interrater reliability (Cohen, 1988). The knowledge convergence level was equal to the sum of the number of activations of each vertex on the common knowledge graph, which has been validated by Zheng (2017).
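As a rough illustration of the convergence measure, the sketch below sums activation counts over the vertices of a common knowledge graph. The text does not specify how the analytic tool determines which vertices are common, so this sketch assumes that a vertex is common when every group member has activated it at least once. The input format, the function name `knowledge_convergence`, and the example vertex names are likewise hypothetical.

```python
def knowledge_convergence(member_activations):
    """Sum activation counts over vertices that every member activated.

    member_activations: one dict per group member, mapping a vertex name
    to the number of times that member activated it (hypothetical format).
    Assumption: a vertex is "common" if all members activated it.
    """
    if not member_activations:
        return 0
    # Vertices with a positive count for the first member.
    common = {v for v, c in member_activations[0].items() if c > 0}
    # Keep only vertices that every other member also activated.
    for acts in member_activations[1:]:
        common &= {v for v, c in acts.items() if c > 0}
    # Add up all members' activations on the common vertices.
    return sum(acts[v] for acts in member_activations for v in common)


# Made-up activation counts for a three-member group.
group = [
    {"ability": 2, "skill": 1},
    {"ability": 1, "knowledge": 3},
    {"ability": 1, "skill": 2},
]
print(knowledge_convergence(group))  # 4
```

In this made-up example only the vertex "ability" is shared by all three members, so the level is 2 + 1 + 1 = 4.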

Second, online discussion transcripts of 20 groups were analyzed using the content analysis method and the lag sequential analysis method to examine coregulated behavioral patterns. The coregulated behaviors include orienting goals (OG), making plans (MP), enacting strategies (ES), monitoring and controlling (MC), evaluating and reflecting (ER), and adapting metacognition (AM). The coding scheme of coregulated behaviors originated from Zheng et al. (2022b), and it can recognize coregulated behaviors more effectively than other coding schemes. The interrater reliability calculated through kappa statistics reached 0.9, indicating high interrater reliability (Cohen, 1988). Then, the lag sequential analysis method was adopted to analyze coregulated behavioral transitions. GSEQ 5.1 software was employed to perform behavioral sequence analysis to detect the coregulated behavioral transitions (Quera et al., 2007).

Third, online discussion transcripts of 20 groups were analyzed through a content analysis method to examine cognitive engagement. Cognitive engagement was classified into memory, understanding, application, evaluation, and off-topic information, adapted from Bloom et al. (1956). Two coders analyzed cognitive engagement independently, and the interrater reliability calculated through kappa statistics reached 0.9, indicating high interrater reliability (Cohen, 1988).
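The kappa statistic reported for the coding steps above corrects the raw agreement between two coders for the agreement expected by chance. A minimal sketch of Cohen's kappa for two coders is given below. The function name `cohens_kappa` and the label sequences are illustrative assumptions and are not the study's data.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two coders who labeled the same units."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must label the same non-empty set of units.")
    n = len(codes_a)
    # Proportion of units on which the two coders agree.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Agreement expected by chance from each coder's marginal frequencies.
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Made-up codes using the cognitive engagement categories from the text.
coder_1 = ["memory", "understanding", "application", "evaluation", "off-topic"]
coder_2 = ["memory", "understanding", "application", "evaluation", "off-topic"]
print(cohens_kappa(coder_1, coder_2))
```

Identical label sequences give a kappa of 1, whereas agreement no better than chance gives a value near 0.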

Fourth, 20 group products were evaluated independently based on the assessment criteria that were adapted from Zheng et al. (2022a). The assessment criteria included five dimensions with a total score of 100, namely, correctness (25), rationality (20), originality (25), completeness (20), and format (10). The Cohen’s kappa value achieved 0.84, indicating high interrater reliability (Cohen, 1988).

Last, the interview records of the experimental groups were analyzed according to thematic analysis methods (Braun & Clarke, 2006) to detect four themes, namely, improving group performance, improving knowledge convergence, improving group learning engagement, and promoting coregulation. The two coders analyzed all of the interview transcripts independently, and the interrater reliability achieved 0.9. All discrepancies were resolved via face-to-face discussion.

4 Results

4.1 Analysis of group performance

Group performance was measured using posttests, delayed posttests, and group products. First, the Shapiro-Wilk test was performed to examine the normality of all data. The results indicated that all of the datasets were normally distributed (p > .05). Second, a homogeneity of variance test was performed through Levene’s test, and the results indicated that homogeneity of variance was not violated (p > .05). Third, the homogeneity of regression slopes was tested before one-way analysis of covariance (ANCOVA), and the results indicated that ANCOVA can be performed to analyze the difference in posttests (F = 2.39, p = .12), delayed posttests (F = 0.87, p = .35), and group products (F = 1.67, p = .21). Finally, ANCOVA was performed, and Table 2 shows the analysis results. The findings revealed that there were significant differences in posttests (F = 8.11, p = .006), delayed posttests (F = 5.98, p = .01), and group products (F = 10.97, p = .004) between the experimental and control groups. In addition, the proposed approach had a large effect size for group products (η² = 0.39) and a medium effect size for posttests (η² = 0.13) and delayed posttests (η² = 0.10) according to Cohen (1988).
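The effect size labels follow the commonly cited benchmarks attributed to Cohen (1988), in which η² values of about 0.01, 0.06, and 0.14 mark small, medium, and large effects. As a hedged illustration, partial η² can be recovered from an F statistic and its degrees of freedom. The text does not report degrees of freedom, so the values used below (1 and 17 for the group-level analysis of 20 groups with one covariate) are assumptions, as are the function names.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohen_label(eta_sq):
    """Label an eta squared value using the 0.01 / 0.06 / 0.14 benchmarks."""
    if eta_sq >= 0.14:
        return "large"
    if eta_sq >= 0.06:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "negligible"


# Assumed degrees of freedom: 1 for the group factor and 20 - 2 - 1 = 17 for error.
eta = partial_eta_squared(10.97, 1, 17)
print(round(eta, 2), cohen_label(eta))  # 0.39 large
```

Under these assumed degrees of freedom, the group products result (F = 10.97) corresponds to a partial η² of about 0.39, which agrees with the reported value.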

4.2 Analysis of knowledge convergence

First, the Shapiro-Wilk test was performed to examine the normality of all data. The results indicated that all of the datasets were normally distributed (p > .05). Second, a homogeneity of variance test was performed through Levene’s test, and the results indicated that homogeneity of variance was not violated (p > .05). Third, the homogeneity of regression slopes was tested before one-way analysis of covariance (ANCOVA), and the results indicated that ANCOVA can be performed to analyze the difference in knowledge convergence (F = 0.25, p = .63). Finally, ANCOVA was performed, and Table 3 shows the analysis results. The findings revealed that there was a significant difference in knowledge convergence (F = 20.41, p < .001) between the experimental and control groups. In addition, the proposed approach had a large effect size for knowledge convergence (η² = 0.55) according to Cohen (1988).

4.3 Analysis of coregulated behavioral patterns

To analyze the differences in coregulated behavioral patterns between the experimental groups and control groups, the adjusted residuals of the groups were calculated using GSEQ 5.1 software. The adjusted residual refers to whether target behaviors occur significantly more or less often than expected after given behaviors (Bakeman & Quera, 2011). If the adjusted residual is larger than 1.96, it means that target behavior occurs significantly more often than expected by chance (Bakeman & Quera, 2011). Tables 4 and 5 show the adjusted residuals of the experimental groups and control groups, respectively. Figure 5 shows the coregulated behavioral sequence of the experimental groups and control groups. The numbers indicate the adjusted residuals.
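To make the adjusted residual concrete, the sketch below counts lag-1 transitions in a coded behavior sequence and computes the standard adjusted residual for each cell: the observed count minus the expected count, divided by the square root of the expected count times (1 − row proportion) times (1 − column proportion). This is a simplified illustration and not a reproduction of GSEQ 5.1; the function names and the example sequence are assumptions.

```python
import math

CODES = ["OG", "MP", "ES", "MC", "ER", "AM"]


def transition_counts(sequence, codes=CODES):
    """Count lag-1 transitions (given behavior -> target behavior)."""
    index = {code: i for i, code in enumerate(codes)}
    table = [[0] * len(codes) for _ in codes]
    for given, target in zip(sequence, sequence[1:]):
        table[index[given]][index[target]] += 1
    return table


def adjusted_residuals(table):
    """Adjusted residual for every cell of a transition frequency table."""
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    result = []
    for i, row in enumerate(table):
        out = []
        for j, observed in enumerate(row):
            if n == 0:
                out.append(0.0)
                continue
            expected = row_totals[i] * col_totals[j] / n
            variance = expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n)
            # Cells with zero variance carry no information; report 0.0 for them.
            out.append((observed - expected) / math.sqrt(variance) if variance > 0 else 0.0)
        result.append(out)
    return result


# Made-up coded sequence for one group.
sequence = ["OG", "MP", "MP", "MC", "ES", "MC", "ES", "ER", "AM", "ER"]
z = adjusted_residuals(transition_counts(sequence))
significant = [
    (CODES[i], CODES[j])
    for i in range(len(CODES))
    for j in range(len(CODES))
    if z[i][j] > 1.96
]
```

Cells whose adjusted residual exceeds 1.96 correspond to the transitions treated as significant in the tables.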

The findings revealed that there were eight coregulated behavioral transition sequences and two repeated behavioral sequences in the experimental groups. As shown in Fig. 5, OG→MP indicates that learners make plans after orientating goals. MP→OG indicates that learners orientate goals again after making plans. MP→MP reveals that learners make plans continually. MP→MC denotes that learners monitor and control collaborative learning progress after they make plans. ES→ES indicates that learners enact strategies continually. ES→MC indicates that learners monitor and control the collaborative learning process after they enact strategies. MC→MP indicates that learners make plans after monitoring and controlling. MC→ES indicates that learners enact strategies after monitoring and controlling. ER→AM indicates that learners adapt metacognition after they evaluate and reflect. AM→ER indicates that learners evaluate and reflect after they adapt metacognition.

The control groups, by contrast, had only three coregulated behavioral transition sequences and five repeated behavioral sequences. As shown in Fig. 5, OG→OG indicates orientating goals repeatedly, and OG→MP indicates making plans after orientating goals. MP→MP indicates making plans repeatedly. MP→MC denotes that learners monitor and control collaborative learning progress after they make plans. ES→ES represents enacting strategies repeatedly. MC→MP indicates that learners make plans after monitoring and controlling. ER→ER represents evaluating and reflecting repeatedly. AM→AM represents adapting metacognition repeatedly.

Furthermore, Table 6 shows that there were five significant coregulated behavioral transition sequences that only occurred in the experimental groups, namely, MP→OG, ES→MC, MC→ES, ER→AM, and AM→ER. The findings revealed that orientating goals, monitoring and controlling, enacting strategies, evaluating and reflecting, and adapting metacognition were crucial coregulated behaviors for successful collaborative learning.

4.4 Analysis of cognitive engagement and cognitive load

First, the Shapiro-Wilk test was performed to examine the normality of all data, and the results indicated that all datasets were normally distributed (p > .05). Table 7 shows the independent-samples t test results for cognitive engagement and cognitive load. The results revealed that there was a significant difference in cognitive engagement (t = 2.12, p = .04) between the experimental and control groups. The experimental groups demonstrated more cognitive engagement than the control groups. Hence, the proposed approach enhanced learners’ cognitive engagement. In addition, the results revealed that there were no significant differences in cognitive load (t = 0.46, p = .65), mental load (t = 0.77, p = .44) or mental effort (t = 0.12, p = .91). Hence, the proposed approach did not increase learners’ cognitive load.

4.5 Analysis of interview results

To obtain learners’ perceptions of the IAIT approach, face-to-face interviews were conducted, and Table 8 shows the interview results. The findings indicated that the proposed approach could significantly promote group performance, knowledge convergence, cognitive engagement, and coregulation. With regard to improving group performance, interviewees believed that they often revised and refined group products based on the analysis results (90%) and that they could acquire new knowledge and skills through the proposed approach (90%). With respect to promoting knowledge convergence, interviewees believed that the proposed approach promoted more shared understanding (90%). The proposed approach reminded them to focus on the collaborative learning task and avoid off-topic information (90%). Concerning facilitating coregulation, all of the interviewees believed that the proposed approach stimulated coregulating with each other (100%), monitoring the online collaborative learning progress (100%), reflecting and evaluating based on topic analysis results (100%), and adapting plans and strategies (100%). Regarding promoting cognitive engagement, interviewees noted that the proposed approach increased cognitive engagement overall (90%) and promoted engaging in the understanding, application, and evaluation of collaborative learning tasks (90%).

5 Discussion and conclusions

5.1 Discussion of main findings

The results indicated that the IAIT approach can significantly promote group performance, knowledge convergence, coregulated behaviors, and cognitive engagement. The IAIT approach did not increase learners’ cognitive load. The main contribution of this study is to propose and validate the impacts of the immediate analysis of interaction topics approach.

With respect to improving group performance, two possible reasons might explain the results. First, the IAIT approach immediately demonstrated the intergroup and intragroup interaction topics, which served as a group awareness tool and stimulated awareness of the latest intergroup and intragroup progress. Yilmaz and Yilmaz (2019) found that the group awareness tool contributes to improving group performance. Peng et al. (2022) revealed that intragroup information contributes to improving group performance. Second, the IAIT approach automatically provided real-time feedback and recommended learning resources, which contributed to improving group performance. This finding also echoed Al Hakim et al. (2022) and Zheng et al. (2022a), who also revealed that real-time feedback could significantly improve learning performance.

With respect to promoting knowledge convergence, there were two possible explanations for this result. First, the IAIT approach automatically displayed the interaction topics, and learners could be aware of whether they were off-topic. If there was off-topic information, learners would immediately refocus on collaborative learning tasks and return to the topic, which promoted knowledge convergence. Praharaj et al. (2022) also found that on-topic conversation contributed to higher knowledge convergence than off-topic conversation. Second, the IAIT approach provided real-time feedback based on the analysis results of interaction topics, which also facilitated knowledge convergence. This finding was consistent with Chen (2017), who believed that providing feedback promoted the occurrence of knowledge convergence.

Concerning the enhancement of coregulated behaviors, there are three possible reasons. First, the IAIT approach automatically presented the intergroup and intragroup interaction topics, which served as a learning analytics dashboard to facilitate coregulation among group members. Sedrakyan et al. (2020) found that learning analytics dashboards could effectively support coregulation. Second, the IAIT approach immediately presented the intergroup and intragroup interaction topics, which served as a group awareness tool. Schnaubert and Bodemer (2022) found that group awareness tools can promote coregulation in collaborative learning settings. Third, the IAIT approach provided real-time feedback for each group, which also facilitated coregulation. This result was in line with Zheng et al. (2022b), who found that real-time feedback could support coregulation. In addition, the interview results confirmed that participants could monitor, control, reflect on, and evaluate the online collaborative learning process and outcomes based on the analysis results. They also adjusted plans and strategies based on the analysis results.

The main reason for increased cognitive engagement was that the IAIT approach provided immediate analysis results about interaction topics and real-time feedback, which promoted frequent interactions among participants. Lee et al. (2022) revealed that peer interaction could promote cognitive engagement. Furthermore, real-time feedback could increase cognitive engagement (Al Hakim et al., 2022). The interview results also showed that participants who used the IAIT approach believed that the feedback provided was very useful. This finding echoed Mayordomo et al. (2022), who found that the positive perception of feedback could promote cognitive engagement in an online learning environment.

Furthermore, the IAIT approach did not increase cognitive load. The primary reason was that participants in the experimental groups obtained useful information to complete tasks efficiently. Redifer et al. (2021) believed that useful information could reduce cognitive load. In addition, participants in the experimental groups and control groups completed the same collaborative learning task for the same duration. Therefore, there was no significant difference in cognitive load between the experimental and control groups.

5.2 Implications

The present study has several implications for teachers, practitioners, and researchers. First, the IAIT approach is very helpful for learners to improve their learning performance, knowledge convergence, coregulated behavior patterns, and cognitive engagement. The IAIT approach is also useful for teachers and practitioners to quickly obtain a better understanding of online discussion content, which contributes to reducing workload and improving efficiency. In addition, teachers can make use of the analysis results to evaluate what students have learned. The analysis results are also useful for identifying the problems during online collaborative learning. Teachers and practitioners can also provide real-time feedback and intervention based on analysis results to improve collaborative learning performance. Therefore, it is recommended that teachers and practitioners pay attention to the immediate analysis of interaction topics in online collaborative learning.

Second, the topic analysis results produced by the IAIT approach can be used to optimize the design of online collaborative learning tasks. For example, if there are few discussions on a particular topic, learners might find the topic boring or difficult. In that case, teachers and practitioners should revise the topic and task accordingly. In addition, teachers and practitioners can optimize learning resources, interactive strategies, and assessment methods to stimulate learners’ interest in the topics and help them overcome difficulties in online collaborative learning.

Third, this study found that deep learning technologies, especially deep neural network models, are very efficient in topic analysis. Deep neural networks are better able to extract semantic relationships from texts than traditional machine learning methods (Li & Mao, 2019). Therefore, practitioners can adopt deep neural networks to automatically analyze topics in online learning. It is highly recommended that researchers develop innovative deep neural network models to achieve higher performance in the future.

5.3 Limitations and future studies

This study was constrained by several limitations, and caution should be taken when generalizing the findings to other contexts. First, the sample size of this study was small due to the COVID-19 pandemic. Future studies should examine the IAIT approach using a larger sample size. Second, the current study only engaged participants in completing one collaborative learning task. Future studies should engage learners in completing other tasks in other learning domains. Third, this study only investigated the effects of the IAIT approach on group performance, knowledge convergence, coregulation, and cognitive engagement. Future studies should examine the impacts of the IAIT approach on other variables, such as knowledge elaboration, problem-solving skills, and behavioral engagement. Fourth, the current study did not examine the possible moderating effect of gender on group performance, knowledge convergence, coregulation, and cognitive engagement. Future studies should investigate the moderating effect of gender on the dependent variables.

Data availability

The data of this study cannot be made openly available due to ethical concerns but are available from the corresponding author on reasonable request.

References

Ahmad, M., Junus, K., & Santoso, H. B. (2022). Automatic content analysis of asynchronous discussion forum transcripts: a systematic literature review. Education and Information Technologies, 1–56. https://doi.org/10.1007/s10639-022-11065-w

Al Hakim, V. G., Yang, S. H., Liyanawatta, M., Wang, J. H., & Chen, G. D. (2022). Robots in situated learning classrooms with immediate feedback mechanisms to improve students’ learning performance. Computers & Education, 182, 104483. https://doi.org/10.1016/j.compedu.2022.104483

Allan, J. (2002). Introduction to topic detection and tracking. In Topic detection and tracking (pp. 1–16). Springer, Boston, MA. https://link.springer.com/chapter/10.1007/978-1-4615-0933-2_1. Accessed 15 June 2022.

Asgari-Chenaghlu, M., Feizi-Derakhshi, M. R., Balafar, M. A., & Motamed, C. (2020). Topicbert: A transformer transfer learning based memory-graph approach for multimodal streaming social media topic detection. arXiv preprint arXiv:2008.06877. https://doi.org/10.48550/arXiv.2008.06877

Bakeman, R., & Quera, V. (2011). Sequential analysis and observational methods for the behavioral sciences. Cambridge University Press.

Ben-Eliyahu, A., Moore, D., Dorph, R., & Schunn, C. D. (2018). Investigating the multidimensionality of engagement: affective, behavioral, and cognitive engagement across science activities and contexts. Contemporary Educational Psychology, 53, 87–105. https://doi.org/10.1016/j.cedpsych.2018.01.002

Bloom, B. S., Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (1956). Taxonomy of educational objectives. Handbook I: cognitive domain. David McKay.

Borge, M., Aldemir, T., & Xia, Y. (2022). How teams learn to regulate collaborative processes with technological support. Educational Technology Research and Development, 1–30. https://doi.org/10.1007/s11423-022-10103-1

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Chen, C. M., Li, M. C., Chang, W. C., & Chen, X. X. (2021). Developing a topic analysis instant feedback system to facilitate asynchronous online discussion effectiveness. Computers & Education, 163, 104095. https://doi.org/10.1016/j.compedu.2020.104095

Chen, S., Ouyang, F., & Jiao, P. (2022). Promoting student engagement in online collaborative writing through a student-facing social learning analytics tool. Journal of Computer Assisted Learning, 38(1), 192–208. https://doi.org/10.1111/jcal.12604

Chen, W. (2017). Knowledge convergence among pre-service mathematics teachers through online reciprocal peer feedback. Knowledge Management & E-Learning: An International Journal, 9(1), 1–18. https://doi.org/10.34105/j.kmel.2017.09.001

Chen, W., Allen, C., & Jonassen, D. (2018). Deeper learning in collaborative concept mapping: a mixed methods study of conflict resolution. Computers in Human Behavior, 87, 424–435. https://doi.org/10.1016/j.chb.2018.01.007

Chi, M. T., & Wylie, R. (2014). The ICAP framework: linking cognitive engagement to active learning outcomes. Educational Psychologist, 49(4), 219–243. https://doi.org/10.1080/00461520.2014.965823

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Erlbaum. https://doi.org/10.4324/9780203771587

Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (pp. 4171–4186). Association for Computational Linguistics. https://doi.org/10.48550/arXiv.1810.04805

Draper, D. C. (2015). Collaborative instructional strategies to enhance knowledge convergence. American Journal of Distance Education, 29(2), 109–125. https://doi.org/10.1080/08923647.2015.1023610

Gao, F., Zhang, T., & Franklin, T. (2013). Designing asynchronous online discussion environments: recent progress and possible future directions. British Journal of Educational Technology, 44(3), 469–483. https://doi.org/10.1111/j.1467-8535.2012.01330.x

González-Carvajal, S., & Garrido-Merchán, E. C. (2020). Comparing BERT against traditional machine learning text classification. arXiv preprint arXiv:2005.13012. https://arxiv.org/pdf/2005.13012.pdf

Greene, B. A. (2015). Measuring cognitive engagement with self-report scales: reflections from over 20 years of research. Educational Psychologist, 50(1), 14–30. https://doi.org/10.1080/00461520.2014.989230

Hadwin, A., & Oshige, M. (2011). Self-regulation, coregulation, and socially shared regulation: exploring perspectives of social in self-regulated learning theory. Teachers College Record, 113(2), 240–264. https://doi.org/10.1177/016146811111300204

Hernández-Sellés, N., Muñoz-Carril, P. C., & González-Sanmamed, M. (2020). Interaction in computer supported collaborative learning: an analysis of the implementation phase. International Journal of Educational Technology in Higher Education, 17(1), 1–13. https://doi.org/10.1186/s41239-020-00202-5

Hwang, G. J., Yang, L. H., & Wang, S. Y. (2013). A Concept map-embedded educational computer game for improving students’ learning performance in natural science courses. Computers & Education, 69, 121–130. https://doi.org/10.1016/j.compedu.2013.07.008

Kew, S. N., & Tasir, Z. (2022). Developing a learning analytics intervention in e-learning to enhance students’ learning performance: a case study. Education and Information Technologies, 1–36. https://doi.org/10.1007/s10639-022-10904-0

Lampe, C., Wohn, D. Y., Vitak, J., Ellison, N. B., & Wash, R. (2011). Student use of Facebook for organizing collaborative classroom activities. International Journal of Computer-Supported Collaborative Learning, 6(3), 329–347. https://doi.org/10.1007/s11412-011-9115-y

Lee, J., Park, T., & Davis, R. O. (2022). What affects learner engagement in flipped learning and what predicts its outcomes? British Journal of Educational Technology, 53(2), 211–228. https://doi.org/10.1111/bjet.12717

Li, P., & Mao, K. (2019). Knowledge-oriented convolutional neural network for causal relation extraction from natural language texts. Expert Systems with Applications, 115, 512–523. https://doi.org/10.1016/j.eswa.2018.08.009

Li, S., Lajoie, S. P., Zheng, J., Wu, H., & Cheng, H. (2021). Automated detection of cognitive engagement to inform the art of staying engaged in problem-solving. Computers & Education, 163, 104114. https://doi.org/10.1016/j.compedu.2020.104114

Li, Y., Zheng, Y., Bao, H., & Liu, Y. (2015). Towards better understanding of hot topics in online learning communities. Smart Learning Environments, 2(1), 1–14. https://doi.org/10.1186/s40561-015-0019-6

Lin, Y. T., Wu, C. C., Chen, Z. H., & Ku, P. Y. (2020). How gender pairings affect collaborative problem solving in social-learning context: the effects on performance, behaviors, and attitudes. Educational Technology & Society, 23(4), 30–44.

Liu, S., Liu, S., Liu, Z., Peng, X., & Yang, Z. (2022). Automated detection of emotional and cognitive engagement in MOOC discussions to predict learning achievement. Computers & Education, 181, 104461. https://doi.org/10.1016/j.compedu.2022.104461

Liu, W., Wang, Z., Liu, X., Zeng, N., Liu, Y., & Alsaadi, F. E. (2017). A survey of deep neural network architectures and their applications. Neurocomputing, 234, 11–26. https://doi.org/10.1016/j.neucom.2016.12.038

Mayordomo, R. M., Espasa, A., Guasch, T., & Martínez-Melo, M. (2022). Perception of online feedback and its impact on cognitive and emotional engagement with feedback. Education and Information Technologies, 1–25. https://doi.org/10.1007/s10639-022-10948-2

Meijer, H., Hoekstra, R., Brouwer, J., & Strijbos, J. W. (2020). Unfolding collaborative learning assessment literacy: a reflection on current assessment methods in higher education. Assessment & Evaluation in Higher Education, 45(8), 1222–1240. https://doi.org/10.1080/02602938.2020.1729696

Mercier, E. M. (2017). The influence of achievement goals on collaborative interactions and knowledge convergence. Learning and Instruction, 50, 31–43. https://doi.org/10.1016/j.learninstruc.2016.11.006

Miller, M., & Hadwin, A. (2015). Scripting and awareness tools for regulating collaborative learning: changing the landscape of support in CSCL. Computers in Human Behavior, 52, 573–588. https://doi.org/10.1016/j.chb.2015.01.050

Minaee, S., Kalchbrenner, N., Cambria, E., Nikzad, N., Chenaghlu, M., & Gao, J. (2021). Deep learning–based text classification: a comprehensive review. ACM Computing Surveys (CSUR), 54(3), 1–40. https://doi.org/10.1145/3439726

Ng, P. M., Chan, J. K., & Lit, K. K. (2022). Student learning performance in online collaborative learning. Education and Information Technologies, 1–17. https://doi.org/10.1007/s10639-022-10923-x

Ouyang, F., & Chang, Y. H. (2019). The relationships between social participatory roles and cognitive engagement levels in online discussions. British Journal of Educational Technology, 50(3), 1396–1414. https://doi.org/10.1111/bjet.12647

Peng, X., Han, C., Ouyang, F., & Liu, Z. (2020). Topic tracking model for analyzing student-generated posts in SPOC discussion forums. International Journal of Educational Technology in Higher Education, 17(1), 1–22. https://doi.org/10.1186/s41239-020-00211-4

Praharaj, S., Scheffel, M., Schmitz, M., Specht, M., & Drachsler, H. (2022). Towards collaborative convergence: quantifying collaboration quality with automated co-located collaboration analytics. In LAK22: 12th International Learning Analytics and Knowledge Conference (pp. 358–369). https://doi.org/10.1145/3506860.3506922

Quera, V., Bakeman, R., & Gnisci, A. (2007). Observer agreement for event sequences: methods and software for sequence alignment and reliability estimates. Behavior Research Methods, 39(1), 39–49. https://doi.org/10.3758/BF03192842

Redifer, J. L., Bae, C. L., & Zhao, Q. (2021). Self-efficacy and performance feedback: impacts on cognitive load during creative thinking. Learning and Instruction, 71, 101395. https://doi.org/10.1016/j.learninstruc.2020.101395

Reeves, T. C., Herrington, J., & Oliver, R. (2004). A development research agenda for online collaborative learning. Educational Technology Research and Development, 52(4), 53–65. https://doi.org/10.1007/BF02504718

Richardson, J. C., & Newby, T. (2006). The role of students’ cognitive engagement in online learning. American Journal of Distance Education, 20(1), 23–37. https://doi.org/10.1207/s15389286ajde2001_3

Roschelle, J., & Teasley, S. (1995). The construction of shared knowledge in collaborative problem solving. In C. O’Malley (Ed.), Computer-supported collaborative learning (pp. 69–97). Springer-Verlag. https://doi.org/10.1007/978-3-642-85098-1_5

Rosen, Y., Wolf, I., & Stoeffler, K. (2020). Fostering collaborative problem solving skills in science: the Animalia project. Computers in Human Behavior, 104, 105922. https://doi.org/10.1016/j.chb.2019.02.018

Schnaubert, L., & Bodemer, D. (2022). Group awareness and regulation in computer-supported collaborative learning. International Journal of Computer-Supported Collaborative Learning, 1–28. https://doi.org/10.1007/s11412-022-09361-1

Schoor, C., Narciss, S., & Körndle, H. (2015). Regulation during cooperative and collaborative learning: a theory-based review of terms and concepts. Educational Psychologist, 50(2), 97–119. https://doi.org/10.1080/00461520.2015.1038540

Sedrakyan, G., Malmberg, J., Verbert, K., Järvelä, S., & Kirschner, P. A. (2020). Linking learning behavior analytics and learning science concepts: Designing a learning analytics dashboard for feedback to support learning regulation. Computers in Human Behavior, 107, 105512. https://doi.org/10.1016/j.chb.2018.05.004

Shahzad, A., & Wali, A. (2022). Computerization of off-topic essay detection: a possibility? Education and Information Technologies, 27(4), 5737–5747. https://doi.org/10.1007/s10639-021-10863-y

Shrestha, A., & Mahmood, A. (2019). Review of deep learning algorithms and architectures. IEEE Access, 7, 53040–53065. https://doi.org/10.1109/ACCESS.2019.2912200

Sze, V., Chen, Y. H., Yang, T. J., & Emer, J. S. (2017). Efficient processing of deep neural networks: A tutorial and survey. Proceedings of the IEEE, 105(12), 2295–2329. https://doi.org/10.1109/JPROC.2017.2761740

Volet, S., Summers, M., & Thurman, J. (2009). High-level co-regulation in collaborative learning: how does it emerge and how is it sustained? Learning and Instruction, 19(2), 128–143. https://doi.org/10.1016/j.learninstruc.2008.03.001

Wayne, C. L. (1997). Topic detection and tracking (TDT). In workshop held at the University of Maryland, 27, 28–30. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.27.2955&rep=rep1&type=pdf. Accessed 15 June 2022.

Weinberger, A., Stegmann, K., & Fischer, F. (2007). Knowledge convergence in collaborative learning: concepts and assessment. Learning and Instruction, 17(4), 416–426. https://doi.org/10.1016/j.learninstruc.2007.03.007

Weldon, E., & Weingart, L. R. (1993). Group goals and group performance. British Journal of Social Psychology, 32(4), 307–334. https://doi.org/10.1111/j.2044-8309.1993.tb01003.x

Wen, Y. (2021). Augmented reality enhanced cognitive engagement: designing classroom-based collaborative learning activities for young language learners. Educational Technology Research and Development, 69(2), 843–860. https://doi.org/10.1007/s11423-020-09893-z

Whitehill, J., Serpell, Z., Lin, Y. C., Foster, A., & Movellan, J. R. (2014). The faces of engagement: automatic recognition of student engagement from facial expressions. IEEE Transactions on Affective Computing, 5(1), 86–98. https://doi.org/10.1109/TAFFC.2014.2316163

Wong, A. W., Wong, K., & Hindle, A. (2019). Tracing forum posts to MOOC content using topic analysis. arXiv preprint arXiv:1904.07307. https://arxiv.org/pdf/1904.07307.pdf. Accessed 15 June 2022.

Wong, G. K., Li, Y. K., & Lai, X. (2021). Visualizing the learning patterns of topic-based social interaction in online discussion forums: an exploratory study. Educational Technology Research and Development, 69(5), 2813–2843. https://doi.org/10.1007/s11423-021-10040-5

Wu, S. Y. (2022). Construction and evaluation of an online environment to reduce off-topic messaging. Interactive Learning Environments, 30(3), 455–469. https://doi.org/10.1080/10494820.2019.1664594

Wu, T. T., Huang, Y. M., Su, C. Y., Chang, L., & Lu, Y. C. (2018). Application and analysis of a mobile e-book system based on project-based learning in community health nursing practice courses. Educational Technology & Society, 21(4), 143–156.

Xu, Y., & Lynch, C. F. (2018). What do you want? Applying deep learning models to detect question topics in MOOC forum posts? In Wood-stock’18: ACM Symposium on Neural Gaze Detection (pp. 1–6). ACM, New York, NY. http://ml4ed.cc/attachments/Xu.pdf. Accessed 15 June 2022.

Yilmaz, F. G. K., & Yilmaz, R. (2019). Impact of pedagogic agent-mediated metacognitive support towards increasing task and group awareness in CSCL. Computers & Education, 134, 1–14. https://doi.org/10.1016/j.compedu.2019.02.001

Zheng, L. (2017). Knowledge building and regulation in computer-supported collaborative learning. Springer.

Zheng, L., & Huang, R. (2016). The effects of sentiments and co-regulation on group performance in computer supported collaborative learning. The Internet and Higher Education, 28, 59–67. https://doi.org/10.1016/j.iheduc.2015.10.001

Zheng, L., Huang, R., Hwang, G. J., & Yang, K. (2015). Measuring knowledge elaboration based on a computer-assisted knowledge map analytical approach to collaborative learning. Journal of Educational Technology & Society, 18(1), 321–336. https://www.jstor.org/stable/jeductechsoci.18.1.321

Zheng, L., Niu, J., & Zhong, L. (2022a). Effects of a learning analytics-based real-time feedback approach on knowledge elaboration, knowledge convergence, interactive relationships and group performance in CSCL. British Journal of Educational Technology, 53, 130–149. https://doi.org/10.1111/bjet.13156

Zheng, L., Zhong, L., & Niu, J. (2022b). Effects of personalised feedback approach on knowledge building, emotions, co-regulated behavioural patterns and cognitive load in online collaborative learning. Assessment & Evaluation in Higher Education, 47(1), 109–125. https://doi.org/10.1080/02602938.2021.1883549

Acknowledgements

This study is funded by the International Joint Research Project of Huiyan International College, Faculty of Education, Beijing Normal University (ICER202101).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zheng, L., Zhong, L. & Fan, Y. An immediate analysis of the interaction topic approach to promoting group performance, knowledge convergence, cognitive engagement, and coregulation in online collaborative learning. Educ Inf Technol 28, 9913–9934 (2023). https://doi.org/10.1007/s10639-023-11588-w

DOI: https://doi.org/10.1007/s10639-023-11588-w