Abstract

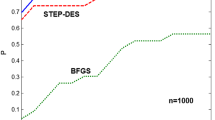

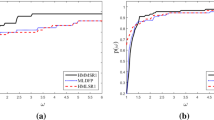

In this work we introduce and study novel quasi-Newton minimization methods based on a Hessian approximation Broyden class-type updating scheme, where a suitable matrix \(\tilde{B}_k\) is updated instead of the current Hessian approximation \(B_k\). We identify conditions that imply the convergence of the algorithm and, if exact line search is chosen, its quadratic termination. By a remarkable connection between the projection operation and Krylov spaces, such conditions can be ensured using low-complexity matrices \(\tilde{B}_k\) obtained by projecting \(B_k\) onto algebras of matrices diagonalized by products of two or three Householder matrices adaptively chosen step by step. Experimental tests show that the adaptive criterion, which theoretically guarantees convergence, considerably improves the robustness of the minimization schemes when compared with a non-adaptive choice; moreover, they show that the proposed methods could be particularly suitable for large-scale problems where L-BFGS does not deliver satisfactory performance.


References

Al-Baali, M.: Analysis of a family of self-scaling quasi-Newton methods. Department of Mathematics and Computer Science, United Arab Emirates University, Technical Report (1993)

Al-Baali, M.: Global and superlinear convergence of a restricted class of self-scaling methods with inexact line searches, for convex functions. Comput. Optim. Appl. 9(2), 191–203 (1998)

Andrei, N.: A double-parameter scaling Broyden-Fletcher-Goldfarb-Shanno method based on minimizing the measure function of Byrd and Nocedal for unconstrained optimization. J. Optim. Theory Appl. 178(1), 191–218 (2018)

Bortoletti, A., Di Fiore, C., Fanelli, S., Zellini, P.: A new class of quasi-Newtonian methods for optimal learning in MLP-networks. IEEE Trans. Neural Netw. 14(2), 263–273 (2003)

Bottou, L., Curtis, F.E., Nocedal, J.: Optimization methods for large-scale machine learning. SIAM Rev. 60(2), 223–311 (2018)

Byrd, R.H., Hansen, S.L., Nocedal, J., Singer, Y.: A stochastic quasi-Newton method for large-scale optimization. SIAM J. Optim. 26(2), 1008–1031 (2016)

Byrd, R.H., Nocedal, J.: A tool for the analysis of quasi-Newton methods with application to unconstrained minimization. SIAM J. Numer. Anal. 26(3), 727–739 (1989)

Byrd, R.H., Nocedal, J., Yuan, Y.X.: Global convergence of a class of Quasi-Newton methods on convex problems. SIAM J. Numer. Anal. 24(5), 1171–1190 (1987)

Cai, J.F., Chan, R.H., Di Fiore, C.: Minimization of a detail-preserving regularization functional for impulse noise removal. J. Math. Imaging Vis. 29(1), 79–91 (2007)

Caliciotti, A., Fasano, G., Roma, M.: Novel preconditioners based on quasi-Newton updates for nonlinear conjugate gradient methods. Optim. Lett. 11(4), 835–853 (2017)

Cipolla, S., Di Fiore, C., Tudisco, F.: Euler-Richardson method preconditioned by weakly stochastic matrix algebras: a potential contribution to Pagerank computation. Electron. J. Linear Algebra 32, 254–272 (2017)

Cipolla, S., Di Fiore, C., Tudisco, F., Zellini, P.: Adaptive matrix algebras in unconstrained minimization. Linear Algebra Appl. 471, 544–568 (2015)

Cipolla, S., Di Fiore, C., Zellini, P.: Low complexity matrix projections preserving actions on vectors. Calcolo 56(2), 8 (2019)

Cipolla, S., Durastante, F.: Fractional PDE constrained optimization: an optimize-then-discretize approach with L-BFGS and approximate inverse preconditioning. Appl. Numer. Math. 123, 43–57 (2018)

Di Fiore, C.: Structured matrices in unconstrained minimization methods. In: Contemporary Mathematics, pp. 205–219 (2003)

Di Fiore, C., Fanelli, S., Lepore, F., Zellini, P.: Matrix algebras in Quasi-Newton methods for unconstrained minimization. Numer. Math. 94(3), 479–500 (2003)

Di Fiore, C., Fanelli, S., Zellini, P.: Low-complexity minimization algorithms. Numer. Linear Algebra Appl. 12(8), 755–768 (2005)

Di Fiore, C., Fanelli, S., Zellini, P.: Low complexity secant quasi-Newton minimization algorithms for nonconvex functions. J. Comput. Appl. Math. 210(1–2), 167–174 (2007)

Di Fiore, C., Lepore, F., Zellini, P.: Hartley-type algebras in displacement and optimization strategies. Linear Algebra Appl. 366, 215–232 (2003)

Di Fiore, C., Zellini, P.: Matrix algebras in optimal preconditioning. Linear Algebra Appl. 335(1–3), 1–54 (2001)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Dunlavy, D.M., Kolda, T.G., Acar, E.: Poblano v1.0: a MATLAB toolbox for gradient-based optimization. Sandia National Laboratories, Albuquerque, NM and Livermore, CA, Technical Report SAND2010-1422 (2010)

Ebrahimi, A., Loghmani, G.: B-spline curve fitting by diagonal approximation BFGS methods. Iran. J. Sci. Technol. Trans. A Sci. 1–12

Eldén, L.: Numerical linear algebra in data mining. Acta Numer. 15, 327–384 (2006)

Horn, R.A., Johnson, C.R.: Matrix Analysis, 2nd edn. Cambridge University Press, Cambridge (2013)

Jiang, L., Byrd, R.H., Eskow, E., Schnabel, R.B.: A preconditioned L-BFGS algorithm with application to molecular energy minimization. Technical Report, Colorado University at Boulder Dept. of Computer Science (2004)

Kolda, T.G., O’Leary, D.P., Nazareth, L.: BFGS with update skipping and varying memory. SIAM J. Optim. 8(4), 1060–1083 (1998)

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

Li, D.H., Fukushima, M.: A modified BFGS method and its global convergence in nonconvex minimization. J. Comput. Appl. Math. 129(1), 15–35 (2001)

Liu, C., Vander Wiel, S.A.: Statistical Quasi-Newton: a new look at least change. SIAM J. Optim. 18(4), 1266–1285 (2007)

Liu, D.C., Nocedal, J.: On the limited memory BFGS method for large scale optimization. Math. Program. 45(1–3), 503–528 (1989)

Nazareth, L.: A relationship between the BFGS and conjugate gradient algorithms and its implications for new algorithms. SIAM J. Numer. Anal. 16(5), 794–800 (1979)

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, Berlin (2006)

Oren, S.S., Luenberger, D.G.: Self-scaling variable metric (SSVM) algorithms: Part I: criteria and sufficient conditions for scaling a class of algorithms. Manag. Sci. 20(5), 845–862 (1974)

Powell, M.J.D.: Some global convergence properties of a variable metric algorithm for minimization without exact line searches. Nonlinear Program SIAM-AMS Proc. 9, 53–72 (1976)

Saad, Y.: Analysis of some Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 29(1), 209–228 (1992)

Saad, Y.: Numerical methods for large eigenvalue problems. SIAM (2011). https://doi.org/10.1137/1.9781611970739

Acknowledgements

The authors thank the anonymous referees for their thorough reading of the manuscript and for their many suggestions to improve its readability. They also thank the Associate Editor for his/her valuable comments and for suggesting the introduction of the scaling factor as in Sect. 4. S.C. and C.D.F. are members of the INdAM Research group GNCS, which partially supported this work. C.D.F. acknowledges the partial support of the Italian mathematics Research Institute INdAM-GNCS and of the MIUR Excellence Department Project awarded to the Dept. of Mathematics, Univ. of Rome “Tor Vergata”, CUP E83C18000100006.


Appendices

Appendix 1: Householder matrices

The results contained in this section are borrowed from [13] and we refer the interested reader there for more details.

Definition 1

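(Householder Orthogonal Matrix) Given a vector \({\mathbf {p}}\in \mathbb {R}^n\) define

\[\mathcal {H}({\mathbf {p}}) = I - \frac{2}{\Vert {\mathbf {p}}\Vert ^2}\,{\mathbf {p}}{\mathbf {p}}^T \quad \hbox {for } {\mathbf {p}}\ne \mathbf {0}, \qquad \mathcal {H}(\mathbf {0}) = I.\]

Consider two vectors \({\mathbf {v}},\, {\mathbf {z}}\in \mathbb {R}^n\). From direct computation one can check that defining \({\mathbf {p}}= {\mathbf {v}}- \frac{\Vert {\mathbf {v}}\Vert }{\Vert {\mathbf {z}}\Vert }{\mathbf {z}}\) with \({\mathbf {z}}\ne 0,\) we have

\[\mathcal {H}({\mathbf {p}})\,{\mathbf {v}} = \frac{\Vert {\mathbf {v}}\Vert }{\Vert {\mathbf {z}}\Vert }\,{\mathbf {z}}.\]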
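The reflection property above can be checked numerically. The following is a minimal sketch, assuming the standard Householder definition \(\mathcal {H}({\mathbf {p}}) = I - 2{\mathbf {p}}{\mathbf {p}}^T/\Vert {\mathbf {p}}\Vert ^2\) together with the convention \(\mathcal {H}(\mathbf {0}) = I\); the function names `householder` and `reflect_onto` and the sample vectors are hypothetical and chosen only for illustration.

```python
import numpy as np

def householder(p):
    """Return I - 2 p p^T / (p^T p); the identity when p = 0 (assumed convention)."""
    p = np.asarray(p, dtype=float)
    norm_sq = p @ p
    if norm_sq == 0.0:
        return np.eye(p.size)
    return np.eye(p.size) - (2.0 / norm_sq) * np.outer(p, p)

def reflect_onto(v, z):
    """Build H(p) with p = v - (||v|| / ||z||) z, so that H(p) v is parallel to z."""
    v = np.asarray(v, dtype=float)
    z = np.asarray(z, dtype=float)
    target = (np.linalg.norm(v) / np.linalg.norm(z)) * z
    return householder(v - target), target

# Illustrative data (not taken from the paper).
v = np.array([3.0, 4.0, 0.0])
z = np.array([1.0, 1.0, 1.0])
H, target = reflect_onto(v, z)

print(np.allclose(H @ v, target))  # H(p) v equals (||v|| / ||z||) z
```

The matrix is formed explicitly only to keep the check readable; in practice one would apply \(\mathcal {H}({\mathbf {p}})\) to a vector in \(O(n)\) operations without building it.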

Lemma 5

([13]) Consider \(W=[{\mathbf {w}}_1|\dots |{\mathbf {w}}_s] \in \mathbb {R}^{n\times s}, V=[{\mathbf {v}}_1|\dots |{\mathbf {v}}_s] \in \mathbb {R}^{n\times s}\) of full rank and such that \(s \le n\), \(W^TW=V^TV\). Then there exist \(\,{\mathbf {h}}_1, \dots ,{\mathbf {h}}_{s} \in \mathbb {R}^n\), \(\Vert {\mathbf {h}}_i\Vert =\sqrt{2}\), such that the orthogonal matrix \(U=\mathcal {H}({\mathbf {h}}_s)\cdots \mathcal {H}({\mathbf {h}}_1)\), product of \(s\) Householder matrices, satisfies the following identities

The vectors \({\mathbf {h}}_i\) for \(i\in \{1,\dots ,s\}\) can be obtained by setting:

(where we set \({\mathbf {h}}_0={\mathbf {w}}_0={\mathbf {v}}_0=\varvec{0}\)). If \(s=n\) we have \({\mathbf {h}}_n=\mathbf {0}\) or \({\mathbf {h}}_n=\frac{\sqrt{2}}{\Vert {\mathbf {v}}_n\Vert }{\mathbf {v}}_n.\) The cost of the computation of the \({\mathbf {h}}_i\) for \(i=1,\dots ,s\) is:

Observe that when \({\mathbf {w}}_i={\mathbf {e}}_{k_i}\) for \(i=1,\dots ,s\), that is, when \({\mathbf {v}}_1, \dots , {\mathbf {v}}_s \) are orthonormal and we are interested in constructing an orthogonal U with s columns fixed as \({\mathbf {v}}_1, \dots , {\mathbf {v}}_s \), it is possible to save \((s-1)n \hbox { mult.}\) and \( (3s-2)n \hbox { add.}\)

Proof

The explicit expression of the \({\mathbf {h}}_i\) in (65) is obtained by applying the techniques for their construction introduced in [13]. \(\square \)
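To make the statement of Lemma 5 concrete, the following sketch builds the vectors \({\mathbf {h}}_i\) by a standard sequential reflection argument. It is not the explicit formula (65) from [13]. It assumes that the identities satisfied by \(U\) are \(U{\mathbf {w}}_i={\mathbf {v}}_i\) for \(i=1,\dots ,s\), and that \(\mathcal {H}({\mathbf {h}})=I-{\mathbf {h}}{\mathbf {h}}^T\) when \(\Vert {\mathbf {h}}\Vert =\sqrt{2}\), with \(\mathcal {H}(\mathbf {0})=I\). The function names and the random test data are hypothetical.

```python
import numpy as np

def householder_vectors(W, V, tol=1e-12):
    """Return h_1..h_s (each of norm sqrt(2), or zero) with H(h_s)...H(h_1) w_i = v_i.

    Assumes W^T W = V^T V. Step i reflects the already-transformed w_i onto v_i;
    the Gram condition makes the reflection vector orthogonal to v_1..v_{i-1},
    so the earlier identities are left untouched.
    """
    W = np.asarray(W, dtype=float)
    V = np.asarray(V, dtype=float)
    n, s = W.shape
    hs = []
    for i in range(s):
        a = W[:, i].copy()
        for h in hs:                      # apply H(h_1), then H(h_2), ...
            a -= h * (h @ a)
        p = a - V[:, i]
        norm_p = np.linalg.norm(p)
        if norm_p <= tol * max(1.0, np.linalg.norm(a)):
            hs.append(np.zeros(n))        # nothing to do: H(0) = I
        else:
            hs.append(np.sqrt(2.0) * p / norm_p)
    return hs

def apply_U(hs, X):
    """Apply U = H(h_s)...H(h_1) to the columns of a 2-D array X."""
    Y = np.array(X, dtype=float, copy=True)
    for h in hs:
        Y -= np.outer(h, h @ Y)
    return Y

# Illustrative data: W = Q V with Q orthogonal, so that W^T W = V^T V.
rng = np.random.default_rng(0)
V = rng.standard_normal((6, 3))
Q, _ = np.linalg.qr(rng.standard_normal((6, 6)))
W = Q @ V
hs = householder_vectors(W, V)

print(np.allclose(apply_U(hs, W), V))  # U w_i = v_i for every column
```

Each application of \(U\) to a vector costs \(O(sn)\) operations in this form, which is the reason products of a few Householder matrices are attractive as low-complexity transforms.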

Appendix 2: Details on Theorem 1

In order to prove inequality (34) it is enough to prove the following:

Lemma 6

There exists \(c_3\), constant with respect to j and depending only on s and M, such that

(of course, such \(c_3\) turns out to be greater than 1).

In fact, once Lemma 6 is proved, the constant \(c_2\) (constant with respect to j) for which (34) is verified will be \(c_2=2c_1c_3/(1-\beta )\) (note that \(c_2\) depends only on s, M and \(\beta \), but not on j).

Proof

Fix \(\tilde{c}_3>1\). Note that the sequence of positive numbers

converges to zero as \(j \rightarrow +\infty \); thus there exists \(j^{*}\ge s\) (depending on s, M and \(\tilde{c}_3\)) s.t.

Note also that for all \(j \in \{s+1, \dots , j^{*}-1\}\) we have

and consider \(\hat{j} \ge j^{*}\) s.t. \(\gamma (\hat{j}-s+1)^n>1\) (\(\hat{j}\) depends on s, M, \(\gamma \) and \(\tilde{c}_3\)). From (66) we have

for all \(j \in \{s,s+1, \dots , j^{*}-1\}\).

Collecting the above results, we can conclude that

where \(c_3:=\max \{ \tilde{c}_3, \gamma (\hat{j}-s+1)^n\}\) (\(c_3>1\) and depends on s, M and \(\tilde{c}_3\)).

Finally note that, once \(\tilde{c}_3\) is fixed, it is clear that \(c_3\) depends only on s, M. \(\square \)

In order to prove inequality (\(34_{1}\)), define \(a_k:=(1-\phi -\psi _k\phi )\Vert {\mathbf {g}}_k\Vert ^2/{\mathbf {s}}_k^{T}(-{\mathbf {g}}_k)>0\). We know that \(\lim _{k \rightarrow +\infty }a_k=+\infty \) and we have to show that there exists \(j^{*}\ge s\) such that

If \(a_k\ge c_2\) for all \(k \ge s\), then the claim is immediate, since \(a_k>c_2\) must hold for infinitely many indices k. So assume that there exists some index k such that \(a_k < c_2\). Let \(r \ge s\) be such that \(a_k>c_2\) for all \(k>r\). Note that \(c_2>\min _{k=s, \dots , r}a_k\). Set

Let \(j^{*}>r+1\) be such that \(a_k \ge t c_2\) for all \(k\ge j^{*}\). Then we have

i.e., \(\prod _{k=s}^{j^{*}}a_k>c_2^{j^*-s+1}\). Thus we obtain (\(34_{1}\hbox {bis}\)) since \(a_k\ge tc_2>c_2\) for \(k> j^{*}\).
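The argument above can be illustrated numerically: for a sequence \(a_k\) that eventually exceeds \(c_2\) and diverges, the partial products \(\prod _{k=s}^{j}a_k\) overtake \(c_2^{\,j-s+1}\) from some index \(j^{*}>r+1\) onward. The sketch below is only an illustration under assumptions: the sequence `a`, the value of `c2`, the finite truncation, and the helper name `find_j_star` are all hypothetical and are not taken from the paper.

```python
import math

def find_j_star(a, c2, s=0):
    """Smallest j > r + 1 with prod_{k=s}^{j} a_k > c2**(j - s + 1), or None.

    r is the last index of the finite sample with a_k <= c2 (s - 1 if none).
    Logarithms are accumulated so the comparison does not overflow.
    """
    r = max((k for k in range(s, len(a)) if a[k] <= c2), default=s - 1)
    log_excess = 0.0                      # log(prod a_k) - (j - s + 1) * log(c2)
    for j in range(s, len(a)):
        log_excess += math.log(a[j] / c2)
        if j > r + 1 and log_excess > 0.0:
            return j
    return None

# Hypothetical diverging sequence that starts below c2.
a = [0.5 + 0.25 * k for k in range(40)]
c2 = 2.0
j_star = find_j_star(a, c2)

# Once the product dominates and a_k > c2 for all later k, it keeps dominating.
holds_afterwards = all(
    math.prod(a[: j + 1]) > c2 ** (j + 1) for j in range(j_star, len(a))
)
print(j_star, holds_afterwards)
```

Requiring \(j^{*}>r+1\) mirrors the proof: beyond that index every new factor \(a_k\) is larger than \(c_2\), so the inequality, once reached, cannot be lost.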

Cite this article

Cipolla, S., Di Fiore, C. & Zellini, P. A variation of Broyden class methods using Householder adaptive transforms. Comput Optim Appl 77, 433–463 (2020). https://doi.org/10.1007/s10589-020-00209-8


DOI: https://doi.org/10.1007/s10589-020-00209-8

Keywords

- Unconstrained minimization

- Quasi-Newton methods

- Matrix algebras

- Matrix projections preserving directions