Abstract

With adverse industrial effects on the global landscape, climate change is urging the global economy to adopt sustainable solutions. Ongoing efforts toward energy efficiency rely on massive data collection and Artificial Intelligence (AI) for big data analytics. Moreover, within the emerging Internet of Energy (IoE) paradigm, edge computing plays a growing role in freeing private data from cloud centralization. In this direction, a creative visual approach to understanding energy data is introduced. Building upon micro-moments, which are time series of small contextual data points, the power of pictorial representations to encapsulate rich information in a small two-dimensional (2D) space is harnessed through a novel Gramian Angular Fields (GAF) classifier for energy micro-moments. The classifier is designed with edge computing efficiency in mind: in current tests on the ODROID-XU4, it classifies up to 7 million GAF-converted data points with ~90% accuracy in less than 30 s, paving the way towards industrial adoption of edge IoE.

1 Introduction

Energy efficiency has been considered a fundamental theme in addressing global climate change caused by inefficient energy systems, including heating, ventilation, and air-conditioning [1]. Consequently, numerous research contributions, as well as interactive studies, have discussed tools and methods to classify and analyze power consumption data effectively and accurately [2]. In particular, energy efficiency applications employ data analytics to obtain useful insights into power usage patterns. Eco-feedback systems have also been employed to motivate sustainable behavioral adjustments among less knowledgeable occupants [3].

From another perspective, cloud computing servers are powerful computational platforms that can run Machine Learning (ML) and Deep Learning (DL) algorithms cost-efficiently and at scale [4, 5]. However, relying solely on cloud computing for Artificial Intelligence (AI) raises many challenges, such as data privacy, networking latency, and energy efficiency [6,7,8]. Edge AI has therefore emerged as a solution in which ML algorithms run at high performance by securely leveraging local computational resources. Edge AI is generally defined as the distributed implementation of ML and/or DL algorithms on resource-constrained devices with specialized hardware that is optimized for computational performance and efficiency for specific purposes. The term ‘edge’ indicates that the computations are conducted at the network’s edge, i.e., locally, near the data collection site. Rather than replacing cloud computing, edge computing and edge AI complement existing cloud-based infrastructures by offloading some cloud computations to local devices, optimizing for a number of parameters, including data privacy and computational performance.

State-of-the-art research has also focused largely on Building Energy Management Systems (BEMSs), as buildings are a major energy-consuming sector, accounting for around 40% of the world’s consumed energy. Other research has indicated that heating and cooling operations constitute 60% of a building’s total energy consumption [9]. The energy consumption of buildings therefore has a major impact on climate change, which makes studying building energy efficiency crucial [10, 11].

Consequently, recent research on BEMSs widely recognizes the importance of reducing losses and environmental impact in order to develop sustainable approaches to building energy consumption. For this purpose, the deployment of the Internet of Energy (IoE) has been investigated [12]. From both the IoE and Internet of Things (IoT) perspectives, a plethora of smart objects are interconnected to exchange services and data over an Internet-based architecture. A variety of IoT applications have been widely adopted in the literature, such as power distribution, military applications, and weather forecasting [13].

Being Internet-based as well, IoE shares many of the properties of IoT. Several applications depend on IoE, such as buildings, electric vehicles, and distributed energy sources, in which energy networks can be monitored and controlled over the Internet [14]. Moreover, IoE-facilitated BEMSs deliver useful information supported by data processing and smart metering [15], thereby helping to reduce energy losses and promote sustainable energy use by steering consumption toward acceptable levels.

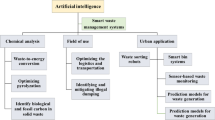

Unlocking the potential of edge AI for IoE opens a wide range of research areas, which fall into the following main sub-themes: (a) IoE data collection; (b) IoE data analytics; and (c) IoE decision making and integration. Throughout these processes, data are first collected using sensors, wireless communication, and backend servers, and then analyzed with edge AI through pre-processing, classification, and data visualization. Finally, based on customized preferences, decisions are made using intelligent recommendation systems so that a suitable energy-saving solution is constructed.

When integrated with ML, pictorial representations of data can yield impactful results and insights [2, 3]. One tool for converting one-dimensional (1D) data into higher-dimensional representations is the Gramian Angular Field (GAF) [16]. To create a GAF representation, 1D time-series data are converted into polar coordinates [17, 18] as follows: a time-series vector \(x = \{x_{1}, x_{2}, x_{3}, \ldots, x_{N}\}\) with N samples is first normalized to [0, 1] using Eq. (1).

Next, the time stamps are divided by a regularizing constant factor, R, and the value of each element in the series is encoded using the angular cosine, as shown in Eq. (2).
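For orientation, the commonly used form of these two steps (min-max normalization followed by polar encoding) can be written as below. The symbols \(\tilde{x}_i\), \(\phi_i\), \(r_i\), and \(t_i\) are assumed notation and may differ from that of the authors' Eqs. (1) and (2):

```latex
\tilde{x}_i = \frac{x_i - \min(x)}{\max(x) - \min(x)} \in [0, 1], \qquad i = 1, \ldots, N

\phi_i = \arccos\left(\tilde{x}_i\right) \in \left[0, \tfrac{\pi}{2}\right], \qquad r_i = \frac{t_i}{R}
```

Because arccos is bijective on [0, 1], the angular encoding can be inverted to recover each normalized value, while the radius \(r_i\) preserves the temporal order of the samples.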

Two main types of GAF representations can then be generated: the Gramian Angular Summation Field (GASF), based on the cosine function, as in Eqs. (3) and (4), and the Gramian Angular Difference Field (GADF), based on the sine function, as in Eqs. (5) and (6) [19].

In these equations, \(x^{\prime}\) denotes the transformed image dataset. When combined with DL image classification algorithms, 2D GAF visualizations are believed to allow complex ML problems involving multidimensional data to be handled more efficiently [16, 20].
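As an illustration, the sketch below computes both fields with NumPy using the standard definitions \(\mathrm{GASF}_{ij} = \cos(\phi_i + \phi_j)\) and \(\mathrm{GADF}_{ij} = \sin(\phi_i - \phi_j)\), where \(\phi_i = \arccos(\tilde{x}_i)\) is the angle of the i-th sample after min-max normalization to [0, 1]. The function names are hypothetical, and this is not the authors' released converter.

```python
import numpy as np

def to_polar(x, R=None):
    """Min-max normalize a 1D series to [0, 1] and encode it in polar coordinates."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    # A constant series has no range; map it to 0 to avoid dividing by zero (assumption).
    x_norm = (x - x.min()) / span if span > 0 else np.zeros_like(x)
    phi = np.arccos(np.clip(x_norm, 0.0, 1.0))  # the angle encodes the value
    t = np.arange(1, len(x) + 1)
    R = len(x) if R is None else R
    r = t / R                                   # the radius encodes the time stamp
    return phi, r

def gasf(x):
    """Gramian Angular Summation Field: cos(phi_i + phi_j)."""
    phi, _ = to_polar(x)
    return np.cos(phi[:, None] + phi[None, :])

def gadf(x):
    """Gramian Angular Difference Field: sin(phi_i - phi_j)."""
    phi, _ = to_polar(x)
    return np.sin(phi[:, None] - phi[None, :])
```

The outer sum and difference (`phi[:, None] + phi[None, :]`) build the full N × N matrix without Python loops, so a 24-sample daily profile yields a 24 × 24 field. GASF is symmetric, whereas GADF is antisymmetric with a zero diagonal.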

In this work, a novel edge GAF classifier for energy micro-moment data is presented for industrial IoE applications. The contributions of this article can be summarized as follows:

1. The novel use of GAF representations to convert large 1D time-series data into high-resolution images that encapsulate rich information and enable further 2D classification possibilities;

2. A GAF classifier built upon DNNs and optimized for edge computing performance; and

3. Implementation of the classifier on the ODROID-XU4 edge computing board, along with a streamlined data workflow that involves a hybrid edge-cloud infrastructure.

The general data workflow in this article is depicted in Fig. 1.

The remainder of this paper is organized as follows. Section 2 provides a concise summary of related literature. Section 3 presents the general concept of the system that the authors are developing. Section 4 discusses data collection and the novel GAF converter. Section 5 presents the employed intelligence scheme and performance optimization considerations for edge devices. Section 6 presents the study’s findings and discusses the performance and limitations of the presented workflow. Section 7 concludes the article.

2 Related work

To provide concise background for the proposed work, this section reviews recent literature on (a) edge AI and (b) the use of GAF in data processing applications. Starting with edge AI platforms, their production has grown steadily, and they have been adopted by international research and industrial institutions [21]. While edge AI accelerators share common characteristics, such as form factor, compatible ML frameworks, and algorithm execution process, they differ in architecture, processing power, memory, and overall cost.

The Single-Board Computer (SBC) ODROID-XU4, for instance, is known for its low power consumption and compact form factor. It combines an Advanced RISC Machines (ARM) Cortex-A15 and Cortex-A7 big.LITTLE Central Processing Unit (CPU) with an ARM Mali-T628 Graphics Processing Unit (GPU), and it supports several open-source operating systems, such as Linux and Android. Another well-known AI accelerator is Nvidia’s Jetson Nano, which has a 128-core Maxwell GPU and is used in a variety of edge AI applications with an average electric power consumption of 5–10 W. Similarly, the Nvidia Jetson-TX1 edge AI accelerator features 4 ARM cores and 256 Compute Unified Device Architecture (CUDA) Maxwell cores, whereas the Jetson-TX2 embeds 6 ARM cores and 256 CUDA Pascal cores.

Edge accelerators build on specialized System-on-Chip (SoC) designs that execute DL models efficiently on edge platforms, offering desirable qualities such as ultra-low latency, responsive data security, and high availability, and opening many opportunities for developing real-world sensory systems [5]. Indeed, the deployment of edge accelerators is restructuring how several sensor-based applications, including IoT, smart health, and energy efficiency, are built.

Focusing on the low-power Mali-T628 GPU of the ODROID-XU4, Gibson [22] presents several optimization techniques for improving the execution time of Deep Neural Networks (DNNs). The work discusses the forward pass of MobileNet, a compact architecture of lightweight deep CNNs that enables mobile and embedded vision deployments, on the ODROID-XU4 board, and improves performance by exploiting the architectural parameters of the platform.

To deploy low-cost SBC and IoT devices for smart building infrastructures in the field of Building Energy Management (BEM), the selection and evaluation of embedded systems and software optimization are critical. For instance, building activity can be monitored and traced by managing IoT devices in buildings with the ODROID-XU4, a low-cost and feasible solution for conserving energy.

From another perspective, Sánchez et al. [23] have introduced the design of AI-enabled Personal Protective Equipment (PPE) to be worn by staff during the Coronavirus (COVID-19) pandemic. The PPE scheme helps staff members remain protected by notifying them of irregularities predicted in their environments. This is achieved by employing AI with edge computing, a Multi-Agent Device, and the Robot Operating System (ROS).

Furthermore, edge computing plays an important role in accelerating the adoption of DL in IoT applications. Zhu et al. [24] propose using edge computing to reduce the power consumption of AI computing systems for the Industrial Internet of Things (IIoT). The authors offload most AI workloads from servers using a novel scheduling scheme in order to improve energy efficiency. Simulation results indicate that the proposed online scheduling technique uses less than 80% of the energy consumed by static scheduling techniques.

Relatedly, the concept of data lakes is described in [25], where an efficient IoT system is developed on a three-layer architecture comprising edge, cloud, and application layers. To boost both computational performance and energy efficiency, cloud-based data lakes are combined with an autoencoder running on an edge device, and the results show a performance gain when edge computing-based IoT frameworks are used together with the employed AI approach.

Besides GASF and GADF [26], alternative transforms exist, including Markov transition matrix heatmaps [27] and Markov transition field encoding maps [28]. In this work, the authors have chosen GASF owing to its wide availability and support in the literature, coupled with its untapped potential for enhancing energy data classification performance.

Moving towards more application-oriented GAF literature, Hong et al. [17] employ GAF to improve power systems by predicting day-ahead solar irradiation: time-based data streams are transformed into GAF representations, and a Convolutional Long Short-Term Memory (LSTM) model then produces next-day predictions.

In the same context, Seon et al. [29] showcase a method for categorizing appliances based on user consumption data by combining the GAF approach, which transforms 1D data into a 2D matrix, with convolutional neural networks. The authors use the Reference Energy Disaggregation Dataset (REDD) and apply both GASF and GADF transforms. According to the simulation findings, both models achieved 94% accuracy on binary-state (i.e., on/off) appliances, while on multi-state appliances GASF achieved 93.5% accuracy, 3% higher than GADF.

Another study presents DeTECLoad, an automated abnormality detection approach for excessive load carrying, which employs GAF to transform sensor data into images. The images are then analyzed with a hybrid Convolutional Neural Network (CNN)-LSTM model to identify load-carrying modes, with load classification accuracy reaching up to 96% [20].

From another perspective, related research has classified power quality disturbances using GASF with a CNN [30]. After the GAF representations are generated, a CNN extracts features and classifies the images through 2D convolutional, pooling, and batch-normalization layers, capturing multi-scale features of the power quality disturbances while reducing overfitting. The classification analysis is further supported by experimental data acquired from a photovoltaic (PV) system prototype.

Finally, a rare work that employs edge AI for Non-Intrusive Load Monitoring (NILM) is presented by Tito et al. [31]. The work proposes two event detection algorithms based on image segmentation, built on K-Means clustering and thresholding, which operate on images generated from time-series streams using GASF. The suggested technique is evaluated and validated using real-world load measurements from the Almanac of Minutely Power dataset, and extensive simulations have been performed on the Raspberry Pi 3B+ edge computing board. The resulting event detection findings are considered satisfactory, signifying potential for further research in NILM and energy data classification.

3 The grand scheme: overview of the IoE framework

In this section, an overview of the framework that the authors are developing to advance research on domestic energy efficiency is presented, with emphasis on edge AI and IoE. As illustrated on the right side of Fig. 2, the IoE framework comprises an edge board connected to a multitude of sensors that collect power consumption data (e.g., aggregated via a smart meter or at appliance level via smart plugs) as well as contextual ambient environmental conditions (e.g., temperature, humidity, light level, barometric pressure, and occupancy). As data are collected, they are mirrored to a cloud database, where further operations are carried out. On the other side, a mobile application showcases descriptive and behavior-changing visualizations of power consumption patterns, along with energy-saving recommendations that aim to gradually transform end-user behavior and raise contextual awareness of the benefits of sustainability.

Data operations are divided into three categories: (a) edge operations, including collecting and storing sensor data, pre-processing (cleaning, normalization, filling missing data, etc.), and executing classification models on unseen data; (b) hybrid edge-cloud operations that can run on either the cloud or the edge side, such as converting 1D time-series data into 2D GAF pictorial representations; and (c) cloud operations, including classification model training and validation on large training datasets. The hybrid edge-cloud architecture suits large amounts of data that require intensive computational power to train an accurate DL classification model in the cloud, where hardware resources are readily and economically available. Conversely, the edge is used where it performs best, namely data collection, data processing, and model execution, which also improves overall data privacy. Furthermore, depending on the size of the data, GAF generation can be carried out on either the cloud or the edge, giving the system hybrid flexibility.
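The three-way split of operations can be expressed as a placement table. The sketch below is hypothetical: the stage names, the `Tier` enumeration, and the `edge_point_limit` threshold that decides where GAF generation runs are illustrative assumptions, since the article only states that the choice depends on data size.

```python
from enum import Enum

class Tier(Enum):
    EDGE = "edge"
    CLOUD = "cloud"
    HYBRID = "hybrid"

# Placement of each pipeline stage, following categories (a) to (c) above.
STAGE_PLACEMENT = {
    "collect_and_store": Tier.EDGE,
    "preprocess": Tier.EDGE,
    "run_classifier": Tier.EDGE,
    "gaf_generation": Tier.HYBRID,
    "train_and_validate": Tier.CLOUD,
}

def resolve_tier(stage: str, n_points: int, edge_point_limit: int = 5_000_000) -> Tier:
    """Return where a stage should run; hybrid stages are resolved by data size.

    edge_point_limit is a hypothetical threshold, not a value from the article.
    """
    tier = STAGE_PLACEMENT[stage]
    if tier is Tier.HYBRID:
        return Tier.EDGE if n_points <= edge_point_limit else Tier.CLOUD
    return tier
```

Keeping the placement in one table makes it easy to move a stage between tiers without touching the pipeline code itself.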

To improve the understanding of the collected data, Energy Micro-Moments (EMMs) are introduced. Originally coined by Google as a marketing concept [32, 33], micro-moments were recently adapted into a benchmarking tool for energy consumption by dividing consumption into five classes, as shown in Table 1: normal consumption (EMM 0), appliance state change (EMM 1), using an appliance while not occupying the space (EMM 2), excessive consumption while inside the space (EMM 3), and extremely excessive power consumption (EMM 4). Together, EMMs can be used to classify energy consumption and detect abnormalities.
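To make the five classes concrete, the sketch below encodes them as an enumeration together with a toy rule-based labeler. The inputs (a power reading, a reference level, occupancy, and a state-change flag) and the thresholds `excess_ratio` and `extreme_ratio` are hypothetical assumptions; the authoritative class definitions are those of Table 1.

```python
from enum import IntEnum

class EMM(IntEnum):
    """The five energy micro-moment classes of Table 1."""
    NORMAL = 0
    STATE_CHANGE = 1
    UNOCCUPIED_USE = 2
    EXCESSIVE_OCCUPIED = 3
    EXTREMELY_EXCESSIVE = 4

def label_emm(power_w, reference_w, occupied, state_changed,
              excess_ratio=1.5, extreme_ratio=3.0):
    """Toy rule-based EMM labeler; both ratio thresholds are hypothetical."""
    if reference_w <= 0:
        raise ValueError("reference_w must be positive")
    ratio = power_w / reference_w
    if ratio >= extreme_ratio:
        return EMM.EXTREMELY_EXCESSIVE   # EMM 4, regardless of occupancy
    if state_changed:
        return EMM.STATE_CHANGE          # EMM 1: appliance switched on or off
    if power_w > 0 and not occupied:
        return EMM.UNOCCUPIED_USE        # EMM 2: consumption in an empty space
    if ratio >= excess_ratio:
        return EMM.EXCESSIVE_OCCUPIED    # EMM 3: excessive use while occupied
    return EMM.NORMAL                    # EMM 0
```

The order of the rules matters: here, extreme consumption takes precedence over every other class, which is one possible design choice rather than the one prescribed by the EMM scheme.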

In terms of intelligence, DL is heavily employed to classify big data and to develop convincing recommendations for the energy end-user. Equally important, the hardware that runs the algorithms must be not only compatible but also high-performing, which makes hardware selection an instrumental part of developing this framework. In this work, the ODROID-XU4 board is chosen as the edge computing device; it is equipped with an ARM Cortex-A15 and Cortex-A7 big.LITTLE CPU and an ARM Mali-T628 GPU. Beyond serving as a DL module, the ODROID-XU4 acts as a data hub that is connected to the IoE sensors and mirrors their data to the cloud.

Hereafter, this article focuses on the processing and classification of energy consumption data. This is a multi-stage process that involves pre-processing, converting time-series data into pictorial representations known as GAF images, and classifying the GAF dataset into the corresponding classes. For the purposes of this article, a basic EMM scheme called Binary Energy Micro-Moments (BEMMs) is suggested, which simplifies micro-moments into only two classes: abnormal consumption and normal consumption. The BEMM scheme is best suited to abnormality detection applications and is the scheme used in this study.

4 Data: collection and GAF representations

This section introduces the workflow of data collection, pre-processing, and the generation of GAF representations from 1D time-series data. To begin, Table 2 describes the data collection sites and the properties of the datasets built at each site. At De Montfort University (DMU), two testbeds have been installed, one at the Energy Lab and one at the AI Lab. At each site, an ODROID-XU4 serves as the edge computing device and data collection hub. At the AI Lab, only environmental data are collected, comprising temperature, humidity, light level, barometric pressure, and carbon dioxide (CO2) level. The Energy Lab setup, on the other hand, collects the same environmental data plus power consumption data through two smart plugs. Due to Internet connection instability, as well as the differing acquisition intervals of the sensors, the sampling interval varies between 5 s and 2 min at the two labs. Figure 3 depicts the AI Lab and Energy Lab data collection sites, respectively.

As data are collected, the ODROID-XU4 mirrors them to a cloud database (the Firebase Database in this case) for further processing. A cloud solution is chosen because it provides a high-performance computational platform for training the deep learning model on big data. Given the sensitivity of the collected information, an anonymization script should be run to remove any personal information from the collected data in order to protect the end-user’s privacy.
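A minimal sketch of such an anonymization step is shown below. The field names (`name`, `email`, `address`, `phone`, `device_id`) and the salted SHA-256 pseudonymization of device identifiers are assumptions for illustration; the article does not specify what the script does.

```python
import hashlib

# Hypothetical personal fields to drop before records leave the edge device.
PERSONAL_FIELDS = {"name", "email", "address", "phone"}

def pseudonymize(value: str, salt: str) -> str:
    """Replace an identifier with a salted SHA-256 digest (truncated to 16 hex chars)."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]

def anonymize_record(record: dict, salt: str) -> dict:
    """Return a copy of a sensor record with personal fields removed."""
    clean = {k: v for k, v in record.items() if k not in PERSONAL_FIELDS}
    if "device_id" in clean:
        # Records from the same device stay linkable without exposing the raw ID.
        clean["device_id"] = pseudonymize(str(clean["device_id"]), salt)
    return clean
```

For the pseudonyms to resist guessing, the salt would need to stay secret on the edge device rather than being uploaded with the data.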

In addition, pre-processing operations are conducted on the edge side, such as cleaning, removing duplicates, filling missing data points, and converting the data into a compatible format for further processing in the cloud, i.e., either JavaScript Object Notation (JSON) or Comma-Separated Values (CSV), as necessary.
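Because readings arrive at irregular intervals (5 s to 2 min), a pre-processing pass typically deduplicates time stamps and resamples onto a fixed grid before export. The standard-library sketch below assumes `(timestamp_seconds, value)` pairs, a hypothetical 60-s grid, and linear interpolation for missing points; the article does not state which filling method is used.

```python
import csv
import io

def preprocess(readings, step_s=60):
    """Deduplicate, sort, and linearly resample (t, value) readings onto a fixed grid."""
    dedup = dict(readings)          # a later duplicate time stamp overwrites an earlier one
    times = sorted(dedup)
    if not times:
        return []
    grid, j, t = [], 0, times[0]
    while t <= times[-1]:
        # Advance j so that times[j] <= t < times[j + 1].
        while j + 1 < len(times) and times[j + 1] <= t:
            j += 1
        if j + 1 == len(times):
            v = dedup[times[j]]     # t is exactly the last time stamp
        else:
            t0, t1 = times[j], times[j + 1]
            v = dedup[t0] + (dedup[t1] - dedup[t0]) * (t - t0) / (t1 - t0)
        grid.append((t, v))
        t += step_s
    return grid

def to_csv(rows):
    """Serialize resampled rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["timestamp_s", "value"])
    writer.writerows(rows)
    return buf.getvalue()
```

For example, `preprocess([(0, 10.0), (120, 30.0), (120, 30.0), (240, 50.0)])` fills the gaps at 60 s and 180 s with 20.0 and 40.0. A JSON export could be produced from the same rows with `json.dumps`.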

Regarding the benefits of 2D over 1D classification, many contributions in the literature classify time-series data directly, using conventional ML methods, DL algorithms, or other techniques such as transfer learning or genetic algorithms. Although this approach is perfectly valid, processing 1D data directly can be limiting, as well as computationally intensive. Raising the data to a higher dimension can therefore open new possibilities for classifying time-series data. This is where GAFs come in: classifying 2D GAF representations can not only reveal novel insights but potentially do so faster and more efficiently. For example, new power consumption patterns can be discerned from a pictorial representation of 24-h consumption summaries over a 12-month period that indicates periods of excessive consumption (e.g., TV consumption patterns during a given week, considering standby, normal, and elevated consumption levels correlated with occupancy levels). Accordingly, a GAF conversion scheme is employed to turn a large number of time-series data points (e.g., on the order of millions) into several thousand images, which considerably accelerates processing.

Figure 4 outlines the GAF dataset creation workflow. Following data acquisition and pre-processing, the GAF conversion process commences, which includes (a) normalizing the data points; (b) converting the normalized time series from Cartesian coordinates into the polar coordinate system; and (c) producing GAF images from the normalized polar data points [16]. In practice, the data are sliced into multiple 24-h fragments whose power consumption is normalized, and a simple threshold on each fragment’s average normalized value (i.e., whether it exceeds 1) separates abnormal segments from normal ones. A red–green–blue (RGB) color map is used to color the GAF images, where red indicates maximum consumption levels and blue indicates minimum consumption levels. Once the GAF images are generated, a program tags each image as normal or abnormal depending on its average daily normalized value: if the daily average is more than 1, the image is tagged as abnormal; otherwise, it is considered normal. The theory behind the GAF conversion process is described in detail in a prior publication [16]. A sample of generated 24-h averaged GAF images for 25 days is shown in Fig. 5. As mentioned earlier, images with more ‘red’ pixels are considered abnormal, whereas those with more ‘blue’ or ‘green’ pixels are tagged as normal.
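The slicing and tagging rule can be sketched as follows. The article does not state what the consumption is normalized against; this sketch assumes normalization by the mean over all complete days, so that a daily average above 1 means above-typical consumption. The input is assumed to be already averaged to hourly values, and the function name is hypothetical.

```python
import numpy as np

def tag_days(hourly_power, hours_per_day=24, threshold=1.0):
    """Slice an hourly power series into days and tag each day as normal or abnormal.

    Assumption: each value is normalized by the mean of all complete days, so a
    daily average above `threshold` marks above-typical consumption.
    """
    p = np.asarray(hourly_power, dtype=float)
    n_days = len(p) // hours_per_day              # an incomplete trailing day is dropped
    days = p[: n_days * hours_per_day].reshape(n_days, hours_per_day)
    normalized = days / days.mean()
    daily_avg = normalized.mean(axis=1)
    labels = ["abnormal" if avg > threshold else "normal" for avg in daily_avg]
    return normalized, labels
```

Each row of `normalized` is one 24-h fragment that could then be passed to a GAF converter, with the matching label used as the image's class.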

The GAF conversion program was written in Python and can be customized to work with any relevant power consumption dataset of considerable size. In this implementation, each day’s data (i.e., 24 h) are summarized into averaged and normalized 1-h snapshots, which are then used to generate the GAF images. Hence, each GAF image in this work represents a 24-h period summarized at 1-h resolution.
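The daily summarization and the image size reported in Sect. 6 can be connected with a little arithmetic: 24 hourly snapshots give a 24 × 24 field, and 648 = 24 × 27, so each field cell would map to a 27 × 27 pixel block if the images were upscaled by pixel replication. The sketch below assumes 6-s samples (600 per hour) and nearest-neighbour upscaling with `np.kron`; both are assumptions, since the article does not describe how the images are rendered at 648 × 648 or how the RGB color map is applied.

```python
import numpy as np

def hourly_profile(samples, samples_per_hour=600):
    """Average one day of evenly spaced samples into 24 hourly snapshots."""
    s = np.asarray(samples, dtype=float)
    if s.size != 24 * samples_per_hour:
        raise ValueError("expected exactly one day of samples")
    return s.reshape(24, samples_per_hour).mean(axis=1)

def gasf_image(profile, pixels=648):
    """GASF of a daily profile, upscaled by pixel replication to pixels x pixels."""
    p = np.asarray(profile, dtype=float)
    span = p.max() - p.min()
    x = (p - p.min()) / span if span > 0 else np.zeros_like(p)
    phi = np.arccos(x)
    field = np.cos(phi[:, None] + phi[None, :])       # 24 x 24 GASF
    factor, rem = divmod(pixels, field.shape[0])
    if rem:
        raise ValueError("pixels must be a multiple of the profile length")
    return np.kron(field, np.ones((factor, factor)))   # 648 x 648 when factor == 27
```

A color map (for instance, one from matplotlib) could then turn the single-channel field into the RGB images described above.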

5 Intelligence: deep learning on the hybrid edge-cloud

Figure 6 provides a concise overview of the data processing employed in this article. Data collection and GAF generation, described in the previous sections, correspond to the first two blocks of the flowchart. This section describes classification model selection, training, validation, and testing on the cloud and on the edge (i.e., the third and fourth blocks in Fig. 6).

First, the TensorFlow (TF) Python library is employed for ML and DL model development (Footnote 1). The library includes a lightweight counterpart, TensorFlow Lite (TFLite) (Footnote 2), designed to load and run TFLite models, a process known as inference. Using TF, a model is created and trained on the provided data; it is then validated and exported as a TFLite model to be imported and executed on a compatible edge computing device.
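The create, export, and infer cycle can be sketched with TF's public converter and interpreter APIs (`tf.lite.TFLiteConverter.from_keras_model` and `tf.lite.Interpreter`), assuming a TF 2.x installation. The tiny CNN below is a hypothetical stand-in for the EfficientNet-B0-based model, and training is omitted to keep the example short. On a device with only the `tflite_runtime` package installed, `tflite_runtime.interpreter.Interpreter` plays the same role as `tf.lite.Interpreter`.

```python
import numpy as np
import tensorflow as tf

def build_model(size=64):
    """Hypothetical stand-in for the GAF classifier: a tiny two-class CNN."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(size, size, 3)),
        tf.keras.layers.Conv2D(8, 3, activation="relu"),
        tf.keras.layers.GlobalAveragePooling2D(),
        tf.keras.layers.Dropout(0.2),              # active only during training
        tf.keras.layers.Dense(2, activation="softmax"),
    ])

def export_tflite(model):
    """Convert a Keras model into a TFLite flatbuffer (bytes)."""
    return tf.lite.TFLiteConverter.from_keras_model(model).convert()

def classify(tflite_model, images):
    """Run each image through the TFLite interpreter and return class indices."""
    interpreter = tf.lite.Interpreter(model_content=tflite_model)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]
    preds = []
    for img in images:
        # The converted model expects a batch of one image.
        interpreter.set_tensor(inp["index"], img[np.newaxis].astype(np.float32))
        interpreter.invoke()
        preds.append(int(np.argmax(interpreter.get_tensor(out["index"])[0])))
    return preds
```

In a real pipeline, `export_tflite` would run in the cloud after `model.fit`, and the resulting bytes would be written to a `.tflite` file and copied to the edge device for `classify`.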

Second, the TFLite Model Maker companion library is used to create and export the TFLite model [34]. It is a recently developed library by the TF team and is currently undergoing Beta testing. In this work, a modified version of the EfficientNet-B0 model is utilized [35]; it is a computationally efficient CNN-based model that can carry out transfer learning on a variety of image datasets, capturing prominent features and building a mature model accordingly. EfficientNet-B0 is a member of the EfficientNet model family and one of its most computationally efficient versions. According to Tan and Le, EfficientNets are sophisticated, multi-stage neural networks consisting of a stem layer, a final layer, and a number of intermediate layers in between, depending on the model used. Figure 7 describes the architecture of EfficientNet-B0. It spans seven blocks that carry out different numbers of operations, including convolution, normalization, zero padding, rescaling, and addition. The EfficientNet-B0 model has been employed in this study owing to (a) its well-documented implementation for image classification and (b) its edge-compatible architecture, which can be used for edge-based classification. Other models could be used for this study; however, they may require elaborate programmatic changes to be compatible with the edge computing platform used in this work.

Notably, model training, validation, and export are carried out on a cloud server so that large amounts of time-series data can be processed economically and with sufficient speed.

After the model is chosen and configured, data are supplied to the model instance for training. The data are split into three sub-datasets, namely the training, validation, and test datasets, in proportions of 80%, 10%, and 10%, respectively. The model is fitted for a specified number of epochs. During training, high-consumption snapshots are classified as abnormal and all others as normal. Following training, the model is validated using the validation dataset for a specified number of epochs. The model is then exported as a TFLite model, which is downloaded to the edge computing device for inference.
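The 80/10/10 split can be reproduced with a seeded shuffle, sketched below with the standard library. The seed and the rounding rule (any remainder goes to the test split) are assumptions. The TFLite Model Maker image classification tutorial achieves a similar split with `data.split(0.8)` followed by `split(0.5)` on the remainder.

```python
import random

def split_dataset(items, train=0.8, val=0.1, seed=42):
    """Shuffle items and split them into train/validation/test lists (80/10/10 by default)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # a fixed seed keeps the split reproducible
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])
```

Shuffling before splitting avoids placing all images from one season in the same subset when the images are stored in chronological order.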

On the edge side, the downloaded TFLite model is imported into a Python interface, and the test dataset is run through the lightweight model and classified accordingly. After testing, the TFLite model can classify any input image with near real-time performance.
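Near real-time claims are usually backed by timing the inference loop. The sketch below is a generic, standard-library timing helper; `classify_one` is a hypothetical callable (for instance, a wrapper around a TFLite interpreter's `set_tensor`, `invoke`, and `get_tensor` calls), and the number of warm-up runs is an assumption.

```python
import time
from statistics import mean, median

def benchmark(classify_one, inputs, warmup=2):
    """Time classify_one on each input and report total and per-item latency (ms)."""
    for x in inputs[:warmup]:
        classify_one(x)                     # warm-up runs are excluded from the statistics
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        classify_one(x)
        latencies.append((time.perf_counter() - t0) * 1000.0)
    total_s = time.perf_counter() - start
    return {
        "total_s": total_s,
        "mean_ms": mean(latencies),
        "median_ms": median(latencies),
        "throughput_per_s": len(inputs) / total_s if total_s > 0 else float("inf"),
    }
```

Reporting the median alongside the mean helps separate steady-state latency from occasional slow runs, for example when the big.LITTLE scheduler moves work between CPU clusters.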

6 Results and Discussion

This section describes the implementation of the proposed GAF classifier in a realistic case scenario. To illustrate the performance of the model at scale, this workflow employs the UK Domestic Appliance-Level Electricity (UK-DALE) dataset owing to its large size and multi-year duration [36]. All data generated or analyzed during this study are included in [36]. The dataset described in Sect. 4 is richer in parameters but is still under construction. The UK-DALE dataset records power consumption data from five houses in the UK, where, in each household, aggregated power consumption is collected along with appliance-level data every six seconds. In our implementation, we have used aggregated power consumption data from one house. Note that the BEMM scheme (described in Sect. 3) has been utilized to classify the input data as either abnormal or normal.

Table 3 describes the experimental dataset splits. As outlined, the 70 million data points are converted into 1,630 GAF images, a 1D-to-2D ratio of 42,945 (i.e., about 42,945 data points per image). Each image has a resolution of 648 by 648 pixels. In this implementation, TensorFlow 2.7.0 is used both for cloud operations, run on Google Colab (with GPU), and for edge operations on the ODROID-XU4 board. After multiple tests, 35 epochs was chosen as the optimal number of epochs for the EfficientNet-B0 model, as overfitting is observed for higher numbers of epochs. Model accuracy and loss curves are plotted in Fig. 8. A minor gap between training and evaluation accuracy can be noted; it is attributed to the Dropout layer in the model, which is active during training and automatically deactivated during evaluation.
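The reported dataset figures can be checked with simple arithmetic. The sketch below uses only numbers stated in this section; the image counts per subset are derived from the 80/10/10 ratios of Sect. 5 and are not read from Table 3.

```python
TOTAL_POINTS = 70_000_000   # aggregated UK-DALE data points (as reported)
N_IMAGES = 1_630            # generated GAF images (as reported)

# 1D-to-2D ratio: raw data points summarized by each GAF image.
points_per_image = TOTAL_POINTS / N_IMAGES

# Image counts implied by an 80/10/10 train/validation/test split.
split = [round(N_IMAGES * f) for f in (0.8, 0.1, 0.1)]

print(f"points per image: {points_per_image:,.0f}")
print(f"train/val/test images: {split}")
```

The first line rounds to the 42,945 ratio quoted above, which confirms that the ratio is simply the total point count divided by the image count.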

Following training and validation in the cloud, the model is exported, downloaded, imported, and tested on the ODROID-XU4. The TFLite model is also tested in the cloud for accuracy and computational performance benchmarking. Table 4 thus compares the model testing performance of the ODROID-XU4 edge device running the TFLite model, Google Colab running the TF model, and the cloud TFLite implementation. In terms of computational speed, the cloud implementation excels given its high-performance GPU. Nevertheless, the ODROID-XU4 performs well, with a time of 28.5 s, which is approximately 6.9 times faster than the cloud TFLite implementation. In terms of accuracy, the model scores consistently across platforms, with an average of 89.4%. To provide more perspective, the ODROID-XU4 can classify a GAF image that represents ~42,000 data points in less than 17.5 ms, which is very close to real-time performance.

Focusing on the ODROID-XU4 classification performance, Table 5 and Fig. 9 show the model evaluation metrics and the confusion matrix, respectively. As the data indicate, the model provides adequate classification accuracy without overfitting, thanks to evaluating the model over multiple epochs. For example, the model’s F-score (F1), the harmonic mean of precision and recall, reaches an average of ~91%, which indicates high precision and recall.
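For reference, the metrics in Table 5 follow from the confusion matrix counts, as sketched below. The counts in the usage line are made up for illustration and are not the values in Fig. 9. Because F1 is a harmonic mean, an F1 of 0.91 implies that neither precision nor recall can fall below about 0.83 (the bound 0.91 / (2 − 0.91), reached when the other metric equals 1).

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 for the positive ('abnormal') class."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Illustrative counts only (not the article's confusion matrix).
print(binary_metrics(tp=80, fp=10, fn=10, tn=63))
```

Averaging the per-class F1 scores of the normal and abnormal classes gives the macro-averaged F1, which is one common way to report an "average" F-score.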

The edge implementation also excels in terms of power consumption, averaging less than 10 W, which is considerably lower than most conventional cloud clusters (e.g., a minimum of 350 W per GPU) [37]. Figure 10 shows examples of false positive, false negative, true positive, and true negative GAF classifications.

Regarding current limitations, the proposed work can be improved in the following respects:

1. Increasing classification accuracy to more than 95%, which can be achieved by further tuning the model or by using a larger, higher-capacity version of the EfficientNet classifier;

2. Training the model with the multi-class EMM scheme to classify a wider variety of power consumption patterns; and

3. Employing the data collected at the DMU labs to incorporate ambient environmental data as well as appliance-level power consumption.

To summarize, the hybrid edge-cloud data pipeline (Fig. 1) has been shown to be a novel and efficient method of collecting and classifying power consumption data. Balancing the delegation of data collection and model execution to the edge against the assignment of GAF generation and model training to the cloud is crucial to ensuring an efficient data processing workflow that offers high performance and efficiency while keeping end-user privacy in mind.

The outcomes of the classifier can be used as input to a recommender system that produces personalized, context-driven suggestions to motivate sustainable energy behavior. The data can also drive novel and meaningful visualizations that highlight consumption patterns and abnormalities. The source code of the proposed system is available on GitHub (Footnote 3).

7 Conclusions

This work proposed a GAF classifier for EMM data, with a case study on an edge computing device. EMM data is collected and pre-processed on the edge, while a classification model is fitted and evaluated on a cloud server, then exported and tested on the edge device, namely the ODROID-XU4. In this implementation, a novel customizable 1D-to-2D GAF generator is presented and run on the UK-DALE dataset to convert 70 million datapoints into 1630 GAF images. A DL classifier based on the EfficientNet-B0 model is developed in TF and exported as a lightweight TFLite version for execution on the ODROID-XU4. It shows adequate classification performance and high computational efficiency, taking as little as 17.5 ms per classified GAF image.
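To make the 1D-to-2D conversion concrete, here is a minimal NumPy sketch of the standard Gramian Angular Field encoding described by Wang and Oates. It is not the authors' customizable M2GAF generator. The function names, the PAA downsampling step, the 64 × 64 image size and the synthetic input are all illustrative assumptions. An n-sample window yields an n × n matrix, so long windows such as the ~42,000-point ones discussed above have to be shortened first. Piecewise aggregate approximation (PAA), as used by Wang and Oates, is one common choice.

```python
from typing import Optional

import numpy as np


def paa(series: np.ndarray, n_segments: int) -> np.ndarray:
    """Piecewise aggregate approximation: mean of ~equal-length segments."""
    return np.array([seg.mean() for seg in np.array_split(series, n_segments)])


def rescale(x: np.ndarray) -> np.ndarray:
    """Min-max rescale to [-1, 1] so that arccos is defined."""
    lo, hi = x.min(), x.max()
    if hi == lo:  # constant window: map to 0 to avoid division by zero
        return np.zeros_like(x)
    return np.clip((2 * x - hi - lo) / (hi - lo), -1.0, 1.0)


def gramian_angular_field(series, image_size: Optional[int] = None,
                          kind: str = "summation") -> np.ndarray:
    """Encode a 1D series as a GASF (cos(phi_i + phi_j)) or GADF (sin(phi_i - phi_j))."""
    x = np.asarray(series, dtype=float)
    if image_size is not None and image_size < x.size:
        x = paa(x, image_size)  # an n-sample series yields an n x n image
    phi = np.arccos(rescale(x))  # angular (polar) encoding of each value
    if kind == "summation":
        return np.cos(phi[:, None] + phi[None, :])
    if kind == "difference":
        return np.sin(phi[:, None] - phi[None, :])
    raise ValueError("kind must be 'summation' or 'difference'")


# Usage with a hypothetical 1000-sample power window (synthetic values in W).
rng = np.random.default_rng(0)
power_w = rng.uniform(50.0, 3000.0, size=1000)
gasf = gramian_angular_field(power_w, image_size=64)
print(gasf.shape)  # (64, 64)
```

The summation field is symmetric, and its main diagonal equals 2x̃² − 1 for the rescaled series x̃. This is why the diagonal preserves the original (downsampled) values, while off-diagonal entries capture pairwise temporal correlations.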

The current implementation can be further improved by enhancing the model’s accuracy, classifying multi-class micro-moment data, and employing the richer multi-parameter dataset described in Sect. 4.

To the best of the authors’ knowledge, this is the first work to introduce a lightweight 2D energy consumption classifier running on the ODROID-XU4, and it thus paves the way towards deeper edge computing integration with industrial IoE applications.

Data availability

The datasets analyzed during the current study are available in the United Kingdom Energy Research Centre (UKERC) Energy Data Centre: Data Catalogue.

References

Ruiz, L.G.B., Pegalajar, M.C., Molina-Solana, M., Guo, Y.-K.: A case study on understanding energy consumption through prediction and visualization (VIMOEN). Journal of Building Engineering 30, 101315 (2020). https://doi.org/10.1016/j.jobe.2020.101315

Al-Kababji, A., et al.: Energy data visualizations on smartphones for triggering behavioral change: Novel vs. conventional. In: 2020 2nd Global Power, Energy and Communication Conference (GPECOM), pp. 312–317 (2020). https://doi.org/10.1109/GPECOM49333.2020.9247901

Xu, L., Francisco, A., Taylor, J.E., Mohammadi, N.: Urban energy data visualization and management: Evaluating community-scale eco-feedback approaches. J. Manag. Eng. 37(2), 04020111 (2021). https://doi.org/10.1061/(ASCE)ME.1943-5479.0000879

Badar, M.S., Shamsi, S., Haque, M.M.U., Aldalbahi, A.S.: Applications of AI and ML in IoT. In: Sharma, S.K., Bhushan, B., Kumar, R., Khamparia, A., Debnath, N.C. (eds.) Integration of WSNs into Internet of Things. CRC Press (2021)

Antonini, M., Vu, T.H., Min, C., Montanari, A., Mathur, A., Kawsar, F.: Resource characterisation of personal-scale sensing models on edge accelerators. In: Proceedings of the First International Workshop on Challenges in Artificial Intelligence and Machine Learning for Internet of Things, pp. 49–55, New York (2019). https://doi.org/10.1145/3363347.3363363

Mothukuri, V., Parizi, R.M., Pouriyeh, S., Huang, Y., Dehghantanha, A., Srivastava, G.: A survey on security and privacy of federated learning. Futur. Gener. Comput. Syst. 115, 619–640 (2021). https://doi.org/10.1016/j.future.2020.10.007

Li, H., Yu, J., Zhang, H., Yang, M., Wang, H.: Privacy-preserving and distributed algorithms for modular exponentiation in IoT with edge computing assistance. IEEE Internet Things J. 7(9), 8769–8779 (2020). https://doi.org/10.1109/JIOT.2020.2995677

Chi, J., et al.: Privacy partition: A privacy-preserving framework for deep neural networks in edge networks. In: 2018 IEEE/ACM Symposium on Edge Computing (SEC), pp. 378–380 (2018). https://doi.org/10.1109/SEC.2018.00049

Lizana, J., Chacartegui, R., Barrios-Padura, A., Valverde, J.M.: Advances in thermal energy storage materials and their applications towards zero energy buildings: A critical review. Appl. Energy 203, 219–239 (2017). https://doi.org/10.1016/j.apenergy.2017.06.008

Santamouris, M.: Innovating to zero the building sector in Europe: Minimising the energy consumption, eradication of the energy poverty and mitigating the local climate change. Sol. Energy 128, 61–94 (2016). https://doi.org/10.1016/j.solener.2016.01.021

Elbes, M., Alrawashdeh, T., Almaita, E., AlZubi, S., Jararweh, Y.: A platform for power management based on indoor localization in smart buildings using long short-term neural networks. Trans Emerging Tel Tech (2022). https://doi.org/10.1002/ett.3867

Deng, S., Wang, R.Z., Dai, Y.J.: How to evaluate performance of net zero energy building—A literature research. Energy 71, 1–16 (2014). https://doi.org/10.1016/j.energy.2014.05.007

Bui, N., Castellani, A.P., Casari, P., Zorzi, M.: The internet of energy: a web-enabled smart grid system. IEEE Network 26(4), 39–45 (2012). https://doi.org/10.1109/MNET.2012.6246751

Kafle, Y.R., Mahmud, K., Morsalin, S., Town, G.E.: Towards an internet of energy. In: 2016 IEEE International Conference on Power System Technology (POWERCON), pp. 1–6 (2016). https://doi.org/10.1109/POWERCON.2016.7754036

Kolokotsa, D.: The role of smart grids in the building sector. Energy and Buildings 116, 703–708 (2016). https://doi.org/10.1016/j.enbuild.2015.12.033

Alsalemi, A., Amira, A., Malekmohamadi, H., Diao, K., Bensaali, F.: Elevating energy data analysis with M2GAF: micro-moment driven Gramian angular field visualizations. In: International Conference on Applied Energy (2021). https://dora.dmu.ac.uk/handle/2086/21303. Accessed 27 Dec 2021.

Hong, Y.-Y., Martinez, J.J.F., Fajardo, A.C.: Day-ahead solar irradiation forecasting utilizing gramian angular field and convolutional long short-term memory. IEEE Access 8, 18741–18753 (2020). https://doi.org/10.1109/ACCESS.2020.2967900

Thanaraj, K.P., Parvathavarthini, B., Tanik, U.J., Rajinikanth, V., Kadry, S., Kamalanand, K.: Implementation of deep neural networks to classify EEG signals using gramian angular summation field for epilepsy diagnosis. arXiv:2003.04534 (2020). http://arxiv.org/abs/2003.04534. Accessed 22 Sep 2021.

Wang, Z., Oates, T.: Imaging time-series to improve classification and imputation (2015). https://www.aaai.org/ocs/index.php/IJCAI/IJCAI15/paper/view/11082. Accessed 22 Sep 2021.

Lee, H., Yang, K., Kim, N., Ahn, C.R.: Detecting excessive load-carrying tasks using a deep learning network with a Gramian Angular Field. Autom. Constr. 120, 103390 (2020). https://doi.org/10.1016/j.autcon.2020.103390

Khan, W.Z., Ahmed, E., Hakak, S., Yaqoob, I., Ahmed, A.: Edge computing: A survey. Futur. Gener. Comput. Syst. 97, 219–235 (2019). https://doi.org/10.1016/j.future.2019.02.050

Gibson, P.: Deep learning on a low power GPU. MInf Project (Part 1) Report, University of Edinburgh (2018). https://project-archive.inf.ed.ac.uk/ug4/20181261/ug4_proj.pdf

Sánchez, S.M., et al.: Edge computing driven smart personal protective system deployed on NVIDIA Jetson and integrated with ROS. In: Highlights in Practical Applications of Agents, Multi-Agent Systems, and Trust-worthiness. The PAAMS Collection, pp. 385–393. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-51999-5_32

Zhu, S., Ota, K., Dong, M.: Green AI for IIoT: Energy efficient intelligent edge computing for industrial Internet of Things. IEEE Trans. Green Commun. Netw. 6(1), 79–88 (2022). https://doi.org/10.1109/TGCN.2021.3100622

Yu, W., Liu, Y., Dillon, T.S., Rahayu, W.: Edge computing-assisted IoT framework with an autoencoder for fault detection in manufacturing predictive maintenance. IEEE Trans Ind Inform (2022). https://doi.org/10.1109/TII.2022.3178732

Yang, C.-L., Chen, Z.-X., Yang, C.-Y.: Sensor classification using convolutional neural network by encoding multivariate time series as two-dimensional colored images. Sensors 20(1), 168 (2020). https://doi.org/10.3390/s20010168

Padron-Manrique, C., et al.: mb-PHENIX: Diffusion and supervised uniform manifold approximation for denoising microbiota data. Bioinformatics (2022). https://doi.org/10.1101/2022.06.23.497285

He, C., Ge, D., Yang, M., Yong, N., Wang, J., Yu, J.: A data-driven adaptive fault diagnosis methodology for nuclear power systems based on NSGAII-CNN. Ann. Nucl. Energy 159, 108326 (2021). https://doi.org/10.1016/j.anucene.2021.108326

Seon, J.-H., et al.: Classification method of multi-state appliances in non-intrusive load monitoring environment based on Gramian Angular Field. J. Instit. Internet Broadcasting Commun. 21(3), 183–191 (2021). https://doi.org/10.7236/JIIBC.2021.21.3.183

Shukla, J., Panigrahi, B.K., Ray, P.K.: Power quality disturbances classification based on Gramian angular summation field method and convolutional neural networks. Int Trans Electr Energ Syst (2021). https://doi.org/10.1002/2050-7038.13222

Tito, S.R., et al.: Image segmentation-based event detection for non-intrusive load monitoring using Gramian Angular Summation Field. In: 2021 IEEE Industrial Electronics and Applications Conference (IEACon), pp. 185–190. Penang, Malaysia (2021). https://doi.org/10.1109/IEACon51066.2021.9654789

How micro-moments are changing the rules. Think with Google. https://www.thinkwithgoogle.com/marketing-resources/micro-moments/how-micromoments-are-changing-rules/. Accessed 3 May 2018.

Alsalemi, A., Sardianos, C., Bensaali, F., Varlamis, I., Amira, A., Dimitrakopoulos, G.: The role of micro-moments: A survey of habitual behavior change and recommender systems for energy saving. IEEE Syst. J. 13(3), 3376–3387 (2019)

TensorFlow Lite Model Maker. TensorFlow. https://www.tensorflow.org/lite/guide/model_maker. Accessed 27 Dec 2021.

Tan, M., Le, Q.V.: EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv:1905.11946 (2019). https://arxiv.org/abs/1905.11946v5. Accessed 27 Dec 2021.

Kelly, J., Knottenbelt, W.: The UK-DALE dataset, domestic appliance-level electricity demand and whole-house demand from five UK homes. Scientific Data 2, 150007 (2015). https://doi.org/10.1038/sdata.2015.7

Zhang, A., Lipton, Z.C., Li, M., Smola, A.J.: Dive into deep learning. arXiv:2106.11342 (2021). https://arxiv.org/abs/2106.11342v2. Accessed 31 Dec 2021.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information


Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by AA. The first draft of the manuscript was written by AA and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical approval

This research obtained ethical approval from the De Montfort University Faculty of Computing, Engineering and Media (reference no. 414200), valid until November 22, 2024.

Informed consent

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material: Supplementary file 2 (MP4, 64,488 KB).

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Alsalemi, A., Amira, A., Malekmohamadi, H. et al. Lightweight Gramian Angular Field classification for edge internet of energy applications. Cluster Comput 26, 1375–1387 (2023). https://doi.org/10.1007/s10586-022-03704-1


DOI: https://doi.org/10.1007/s10586-022-03704-1