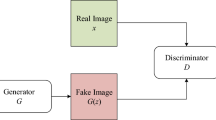

Shale reservoirs contain abundant nano- to micron-scale pores, break easily along well-developed bedding, and make it difficult to prepare intact core samples. Three-dimensional reconstruction of digital cores has therefore become an important means of studying the microstructure of shale. For the shale matrix of tight reservoirs, existing deep-learning-based reconstruction methods suffer from high cost and low accuracy, mainly because the networks extract nano-micron pore features poorly and the reconstructed cores have low resolution. To predict the nano-micron pore structure of shale accurately, we propose a shale 3D reconstruction method based on context-aware generative adversarial networks and high-resolution optical flow estimation (COFRnet-3DWGAN). The method incorporates context awareness into a 3DWGAN (3D Wasserstein GAN) and into a high-resolution optical flow estimation network. This strengthens the feature extraction of both networks so that they learn the nano-micron pores of the core more fully, and it raises core resolution by increasing the resolution of the optical flow estimated between sequential core images, thereby improving reconstruction accuracy. The results show that, compared with WGAN, the proposed method produces reconstructions closer to the true core in porous-media morphological functions, porosity and permeability distributions, and pore structure parameters, indicating that it offers advantages for improving the accuracy of shale reconstruction.
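The evaluation above compares reconstructed and true cores through porosity and porous-media morphological functions. As a minimal sketch, assuming each core is a binary voxel volume stored as a NumPy array (1 = pore, 0 = matrix), the snippet below computes porosity and the two-point correlation function S2(r) along one axis, one widely used morphological statistic. The function names, the non-periodic boundary handling, and the mean-absolute-difference score are illustrative assumptions, not the paper's implementation, and the random volumes only stand in for real CT data.

```python
import numpy as np

# Assumption: volumes are binary arrays where 1 marks pore voxels and 0 marks matrix.

def porosity(volume: np.ndarray) -> float:
    """Fraction of voxels labelled as pore."""
    return float(volume.mean())

def two_point_correlation(volume: np.ndarray, max_lag: int, axis: int = 0) -> np.ndarray:
    """S2(r): probability that two voxels r apart along `axis` are both pore.

    Non-periodic boundaries: only voxel pairs lying fully inside the volume are counted.
    S2(0) equals the porosity.
    """
    v = volume.astype(bool)
    n = v.shape[axis]
    if max_lag >= n:
        raise ValueError("max_lag must be smaller than the volume size along axis")
    s2 = np.empty(max_lag + 1)
    for r in range(max_lag + 1):
        a = np.take(v, np.arange(0, n - r), axis=axis)  # voxels at position i
        b = np.take(v, np.arange(r, n), axis=axis)      # voxels at position i + r
        s2[r] = np.mean(a & b)
    return s2

def s2_discrepancy(true_vol: np.ndarray, recon_vol: np.ndarray,
                   max_lag: int, axis: int = 0) -> float:
    """Hypothetical score: mean absolute difference between two S2 curves."""
    s_true = two_point_correlation(true_vol, max_lag, axis)
    s_recon = two_point_correlation(recon_vol, max_lag, axis)
    return float(np.mean(np.abs(s_true - s_recon)))

# Usage with synthetic stand-in volumes (not real shale data).
rng = np.random.default_rng(0)
true_core = (rng.random((64, 64, 64)) < 0.10).astype(np.uint8)
recon_core = (rng.random((64, 64, 64)) < 0.12).astype(np.uint8)
print(porosity(true_core), porosity(recon_core))
print(s2_discrepancy(true_core, recon_core, max_lag=20))
```

A smaller S2 discrepancy means the reconstruction better reproduces how pore voxels are spatially correlated, which porosity alone cannot capture; computing the curve along all three axes would also expose anisotropy such as bedding.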

Acknowledgements

The authors gratefully acknowledge financial support from the National Natural Science Foundation of China project "The mechanism and method of reinforcement by plugging in shale water-based drilling fluid" (No. 51974270) and from the Science and Technology Cooperation Project of the CNPC-SWPU Innovation Alliance "Research on matching technology to reduce complicated conditions and downhole accidents in long horizontal sections" (No. 2020CX040201).

Additional information

Translated from Khimiya i Tekhnologiya Topliv i Masel, No. 3, pp. 83–92, May–June, 2023.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Pingquan, W., Chao, R., Junlin, S. et al. Shale 3D Reconstruction Method Based on Context-Aware Generative Adversarial Networks and High-Resolution Optical Flow Estimation. Chem Technol Fuels Oils 59, 517–533 (2023). https://doi.org/10.1007/s10553-023-01553-1

DOI: https://doi.org/10.1007/s10553-023-01553-1