Abstract

The theme of the present paper is numerical integration of \(C^r\) functions using randomized methods. We consider variance reduction methods that consist in two steps. First the initial interval is partitioned into subintervals and the integrand is approximated by a piecewise polynomial interpolant that is based on the obtained partition. Then a randomized approximation is applied on the difference of the integrand and its interpolant. The final approximation of the integral is the sum of both. The optimal convergence rate is already achieved by uniform (nonadaptive) partition plus the crude Monte Carlo; however, special adaptive techniques can substantially lower the asymptotic factor depending on the integrand. The improvement can be huge in comparison to the nonadaptive method, especially for functions with rapidly varying rth derivatives, which has serious implications for practical computations. In addition, the proposed adaptive methods are easily implementable and can be well used for automatic integration.

Similar content being viewed by others

1 Introduction

Adaption is a useful tool to improve performance of algorithms. The problems of numerical integration and related to it \(L^1\) approximation are not exceptions, see, e.g., [9] for a survey of theoretical results on the subject. If an underlying function possesses some singularities and is otherwise smooth, then using adaption is necessary to localize the singular points and restore the convergence rate typical for smooth functions, see, e.g., [6, 12,13,14]. For functions that are smooth in the whole domain, adaptive algorithms usually do not offer a better convergence rate than nonadaptive algorithms; however, they can essentially lower asymptotic constants. This is why adaptive quadratures are widely used for numerical integration, see, e.g., [1, 3, 7]. Their superiority over nonadaptive quadratures is rather obvious, but precise answers to the quantitative question of “how much adaption helps” are usually missing. This gap was partially filled by recent results of [2, 10, 11], where best asymptotic constants of deterministic algorithms that use piecewise polynomial interpolation were determined for integration and \(L^1\) approximation of r-times continuously differentiable functions \(f:[a,b]\rightarrow {\mathbb {R}}.\) In this case, adaption relies on adjusting the partition of the interval [a, b] to the underlying function. While the convergence rate is of order \(N^{-r},\) where N is the number of function evaluations used, it turns out that the asymptotic constant depends on f via the factor of \((b-a)^r\big \Vert f^{(r)}\big \Vert _{L^1}\) for uniform partition, and \(\big \Vert f^{(r)}\Vert _{L^{1/(r+1)}}\) for best adaptive partition.

In the current paper, we present the line of thinking similar to that of the aforementioned papers. The difference is that now we want to carry out the analysis and obtain asymptotic constants for randomized algorithms.

Our goal is the numerical approximation of the integral

It is well known that for \(f\in L^2(a,b)\) the crude Monte Carlo,

returns an approximation with expectation \({\mathbb {E}}(M_Nf)=Sf\) and error (standard deviation)

Suppose that the function enjoys more smoothness, i.e.,

Then a much higher convergence rate \(N^{-(r+1/2)}\) can be achieved using various techniques of variance reduction, see, e.g., [4]. One way is to apply a randomized approximation of the form

where \(L_{m,r}\) is the piecewise polynomial interpolation of f of degree \(r-1\) using a partition of the interval [a, b] into m subintervals, \(M_n\) is a Monte Carlo type algorithm using n random samples of f, and N is the total number of function evaluations used (for arguments chosen either deterministically or randomly). The optimal rate is already achieved for uniform (nonadaptive) partition and crude Monte Carlo. Then, see Theorem 2.1 with \(\beta =0,\) the error asymptotically equals

where c depends only on the choice of the interpolation points within subintervals. The main result of this paper relies on showing that with the help of adaption the asymptotic error of the methods (1.4) can be reduced to

see Theorems 3.1 and 4.1. Observe that the gain can be significant, especially when the derivative \(f^{(r)}\) drastically changes. For instance, for \(r=4,\) \([a,b]=[0,1],\) and \(f(x)=1/(x+d),\) adaption is asymptotically roughly \(5.7*10^{12}\) times better than nonadaption if \(d=10^{-4},\) and \(1.8*10^{29}\) times if \(d=10^{-8}.\)

We construct two randomized algorithms, denoted \({\overline{M}}_{N,r}^{\,*}\) and \({\overline{M}}_{N,r}^{\,**},\) that achieve the error (1.5). Although they use different initial approaches; namely, stratification versus importance sampling, in the limit they both reach essentially the same partition, such that the \(L^1\) errors of Lagrange interpolation in all subintervals are equalized. However, numerical tests of Sect. 5 show that the algorithm \({\overline{M}}_{N,r}^{\,*}\) achieves the error (1.5) with some delay, which makes \({\overline{M}}_{N,r}^{\,**}\) worth recommending rather than \({\overline{M}}_{N,r}^{\,*}\) in practical computations.

Other advantages of \({\overline{M}}_{N,r}^{\,**}\) are that it is easily implementable and, as shown in Sect. 6, it can be successfully used for automatic Monte Carlo integration.

Our analysis has been so far restricted to one-dimensional integrals only. In a future work it will be extended and corresponding adaptive Monte Carlo algorithms will be constructed for multivariate integration, where randomization finds its major application. The current paper is the first step in this direction.

In the sequel, we use the following notation. For two functions of N we write \(g_1(N)\lessapprox g_2(N)\) iff \(\limsup _{N\rightarrow \infty }g_1(N)/g_2(N)\le 1,\) and we write \(g_1(N)\approx g_2(N)\) iff \(\lim _{N\rightarrow \infty }g_1(N)/g_2(N)=1.\) Similarly, for functions of \(\varepsilon \) we write \(h_1(\varepsilon )\lessapprox h_2(\varepsilon )\) iff \(\limsup _{\varepsilon \rightarrow 0^+}h_1(\varepsilon )/h_2(\varepsilon )\le 1,\) and \(h_1(\varepsilon )\approx h_2(\varepsilon )\) iff \(\lim _{\varepsilon \rightarrow 0^+}h_1(\varepsilon )/h_2(\varepsilon )=1.\)

2 Variance reduction using Lagrange interpolation

We first derive some general error estimates for the variance reduction algorithms of the form (1.4), where the standard Monte Carlo is applied for the error of piecewise Lagrange interpolation. Specifically, we divide the interval [a, b] into m subintervals using a partition \(a=x_0<x_1<\cdots <x_m=b,\) and on each subinterval \([x_{j-1},x_j]\) we approximate f using Lagrange interpolation of degree \(r-1\) with the interpolation points

where

are fixed (independent of the partition). Denote such an approximation by \(L_{m,r}f.\) Then \(f=L_{m,r}f+R_{m,r}f\) with \(R_{m,r}f=f-L_{m,r}f.\) The integral Sf is finally approximated by

where \(M_n\) is the crude Monte Carlo (1.2). We obviously have \({\mathbb {E}}({\overline{M}}_{m,n,r}f)=Sf.\) Since

by (1.3) we have

Note that

is the squared \(L^2\)-error of the applied (piecewise) polynomial interpolation, while

is the error of the quadrature \({\overline{Q}}_{m,r}f=S(L_{m,r}f).\)

From now on we assume that f is not a polynomial of degree smaller than or equal to \(r-1,\) since otherwise \({\overline{M}}_{m,n,r}f=Sf.\) Define the polynomial

We first consider the interpolation error \(\Vert f-L_{m,r}f\Vert _{L^2(a,b)}.\) Let

For each j, the local interpolation error equals

Hence

In particular, for the equispaced partition, in which case \(h_j=(b-a)/m,\) we have

Now, we consider the quadrature error \(Sf-{\overline{Q}}_{m,r}f.\) Let

The local integration errors equal

Choose arbitrary \(\zeta _j\in [x_{j-1},x_j]\) for \(1\le j\le m.\) Then

where \(\omega \) is the modulus of continuity of \(f^{(r)}.\) We also have

Hence \(Sf-{\overline{Q}}_{m,r}f\,=\,X_m\,+\,Y_m,\) where

In particular, for the equispaced partition,

Suppose that \(\beta \ne 0\) and \(\int _a^bf^{(r)}(x)\,\mathrm dx\ne 0.\) Then \(X_m\approx \frac{\beta }{r!}(b-a)^r\Big (\int _a^bf^{(r)}(x) \mathrm dx\Big )m^{-r}.\) Since \(\omega (h)\) goes to zero as \(h\rightarrow 0^+,\) the component \(X_m\) dominates \(Y_m\) as \(m\rightarrow +\infty .\) Hence

On the other hand, if \(\beta =0\) or \(\int _a^bf^{(r)}(x)\,\mathrm dx=0\) then the quadrature error converges to zero faster than \(m^{-r},\) i.e.

Note that \(\beta =0\) if and only if the quadrature \({\overline{Q}}_{m,r}\) has the degree of exactness at least r, i.e., it is exact for all polynomials of degree r or less. Obviously, the maximal degree of exactness equals \(2r-1.\)

We see that for the equidistant partition of the interval [a, b] the error \(\big ({\mathbb {E}}(Sf-{\overline{M}}_{m,n,r}f)^2\big )^{1/2}\) is asymptotically proportional to

regardless of the choice of points \(z_i\) in (2.1). Let us minimize \(\phi (m,n)\) assuming the total number of points used is at most N. We have two cases depending on whether both endpoints of each subinterval are used in interpolation. If so, i.e., if \(z_1=0\) and \(z_r=1\) (in this case \(r\ge 2\)) then \(N=(r-1)m+1+n.\) The optimal values are

for which

Otherwise we have \(N=rm+n.\) The optimal values are

for which

Denote by \({\overline{M}}_{N,r}\) the corresponding algorithm with the equidistant partition, where for given N the values of n and m equal correspondingly \(\lfloor n^*\rfloor \) and \(\lfloor m^*\rfloor .\) Our analysis is summarized in the following theorem.

Theorem 2.1

We have as \(N\rightarrow +\infty \) that

where

\(\alpha \) and \(\beta \) are given by (2.3) and (2.4), and

We add that the algorithm \({\overline{M}}_{N,r}\) is fully implementable since we assume that we have access to function evaluations at points from [a, b].

3 First adaptive algorithm

Now we add a stratification strategy to our algorithm of Theorem 2.1 to obtain an adaptive algorithm with a much better asymptotic constant. That is, we divide the initial interval [a, b] into k equal length subintervals \(I_i, \ 1\le i \le k,\) and on each subinterval we apply the approximation of Theorem 2.1 with some \(N_i,\) where

Denote such an approximation by \({\overline{M}}_{N,k,r}.\) (Note that \({\overline{M}}_{N,r}={\overline{M}}_{N,1,r}\).) Then, by Theorem 2.1, for fixed k we have as all \(N_i\rightarrow +\infty \) that

where

Minimization of \(\psi (N_1,\ldots ,N_k)=\left( \sum _{i=1}^kC_i^2N_i^{-(2r+1)}\right) ^{1/2}\) with respect to (3.1) gives

and then

Let \(\xi _i,\eta _i\in I_i\) satisfy \(\int _{I_i}\big |f^{(r)}(x)\big |^2\mathrm dx=h\big |f^{(r)}(\xi _i)\big |^2\) and \(\int _{I_i}f^{(r)}(x)\,\mathrm dx=hf^{(r)}(\eta _i).\) Then

and we have as \(k\rightarrow +\infty \) that

It is clear that we have to take \(N_i\) to be an integer and at least r, for instance

Then the corresponding number \(m_i\) of subintervals and number \(n_i\) of random points in \(I_i\) can be chosen as

where \(m_i^*\) and \(n_i^*\) are given by (2.5) and (2.6) with N replaced by \(N_i.\)

Denote by \({\overline{M}}^{\,*}_{N,r}\) the above constructed approximation \({\overline{M}}_{N,k_N,r}\) with \(k_N\) such that \(k_N\rightarrow +\infty \) and \(k_N/N\rightarrow 0\) as \(N\rightarrow +\infty .\) For instance, \(k_N=N^\kappa \) with \(0<\kappa <1.\) Our analysis gives the following result.

Theorem 3.1

We have as \(N\rightarrow +\infty \) that

The asymptotic constant of the approximation \({\overline{M}}^{\,*}_{N,r}\) of Theorem 3.1 is never worse than that of \({\overline{M}}_{N,r}\) of Theorem 2.1. Indeed, comparing both constants we have

where the first inequality follows from the Schwarz inequality and the second one from Hölder’s inequality for integrals. As shown in the introduction, the gain can be significant, especially when the derivative \(f^{(r)}\) drastically changes.

The approximation \({\overline{M}}^{\,*}_{N,r}\) possesses good asymptotic properties, but is not feasible since we do not have a direct access to the \(C_i\)s. In a feasible implementation one can approximate \(C_i\) using divided differences, i.e.,

and \(x_{i,j}\) are arbitrary points from \(I_i.\) Then

This works well for functions f for which the rth derivative does not nullify at any point in [a, b]. Indeed, then \(f^{(r)}\) does not change its sign and, moreover, it is separated away from zero. This means that

which is enough for the asymptotic equality of Theorem 3.1 to hold true.

If \(f^{(r)}\) is not separated away from zero then we may have problems with proper approximations of \(C_i\) in the intervals \(I_i\) where \(|f^{(r)}|\) assumes extremely small values or even zeros. A possible and simple remedy is to choose ‘small’ \(\Delta >0\) and modify \({\widetilde{C}}_i\) as follows:

Then, letting \(A_1=\big \{a\le x\le b:\,|f^{(r)}(x)|\ge \Delta \big \}\) and \(A_2=[a,b]\setminus A_1,\) we have as \(k\rightarrow +\infty \) that

Hence, the approximation of \(C_i\) by (3.3) results in an algorithm whose error is approximately upper bounded by

where

We obviously have \(\lim _{\Delta \rightarrow 0^+}\int _a^b\big |f_\Delta ^{(r)}(x)\big |^{1/(r+1)}\mathrm dx =\int _a^b\big |f^{(r)}(x)\big |^{1/(r+1)}\mathrm dx.\)

A closer look at the deterministic part of \({\overline{M}}^{\,*}_{N,r}\) shows that the final partition of the interval [a, b] tends to equalize the \(L^1\) errors in all of the m subintervals. As shown in [11], such a partition is optimal in the sense that it minimizes the asymptotic constant in the error \(\Vert f-L_{m,r}f\Vert _{L^1(a,b)}\) among all possible piecewise Langrange interpolations \(L_{m,r}\). A disadvantage of the optimal partition is that it is not nested. This makes it necessary to start the computations from scratch when N is updated to a higher value. Also, a proper choice of the sequence \(k_N=N^\kappa \) is problematic, especially when N is still relatively small. On one hand, the larger \(\kappa \) the better the approximation of \(C_i\) by \({\widetilde{C}}_i,\) but also the more far away the partition from the optimal one. On the other hand, the smaller \(\kappa \) the closer the partition to the optimal one, but also the worse the approximation of \(C_i.\) This trade–off significantly affects the actual behavior of the algorithm, which can be seen in numerical experiments of Sect. 5.

In the following section, we propose another adaptive approach leading to an easily implementable algorithm that produces nested partitions close to optimal and possesses asymptotic properties similar to that of \({\overline{M}}^{\,*}_{N,r}.\) As we shall see in Sect. 6, nested partitions are vital for automatic Monte Carlo integration.

4 Second adaptive algorithm

Consider a \(\rho \)-weighted integration problem

where the function \(\rho :[a,b]\rightarrow {\mathbb {R}}\) is integrable and positive a.e. and \(\int _a^b\rho (x)\,\mathrm dx=1.\) The corresponding Monte Carlo algorithm is

where \(\mu _\rho \) is the probability distribution on [a, b] with density \(\rho .\) Then

Now, the non-weighted integral (1.1) can be written as

Then

Let’s go further on and apply a variance reduction,

where Lf is an approximation to f. Then

The question is how to choose L and \(\rho \) to make the quantity

as small as possible.

Observe that if

then

and this error is even zero if \((f-Lf)(x)\) does not change its sign. This suggests the following algorithm.

Suppose that \(Lf=L_{m,r}f\) is based on a partition of [a, b] such that the \(L^1\) errors in all m subintervals \(I_i\) have the same value, i.e.,

Then we apply the variance reduction (4.1) with density

where \(h_i\) is the length of \(I_i.\) That is, for the corresponding probability measure \(\mu _\rho \) we have \(\mu _\rho (I_i)=\tfrac{1}{m}\) and the conditional distribution \(\mu _\rho (\cdot |I_i)\) is uniform on \(I_i.\)

We now derive an error formula for such an approximation. Let \(\gamma =\Vert P\Vert _{L^1(a,b)}=\int _0^1|P(z)|\,\mathrm dz.\) (Recall that P is given by (2.2).) We have

for some \(\xi _i,\zeta _i\in I_i.\) Denoting

(which is independent of i) we have as \(m\rightarrow +\infty \) that

To get an asymptotic formula for \(\int _a^b(f-L_{m,r}f)(x)\,\mathrm dx\) we use the analysis done in Sect. 2. If \(\beta =0\) then the integral decreases faster than \(m^{-r}.\) Let \(\beta \ne 0.\) Then

where \(\xi _i\)s are as in (4.4), and \(m_+\) and \(m_-\) are the numbers of indexes i for which \(f^{(r)}(\xi _i)\ge 0\) and \(f^{(r)}(\xi _i)<0,\) respectively. Let

Since \(A\approx \Vert f^{(r)}\Vert _{L^{1/(r+1)}(a,b)}m^{-(r+1)}\) and \(m_+A^{1/(r+1)}\approx \int _{D_+}|f^{(r)}(x)|^{1/(r+1)}\mathrm dx,\) we have

Thus

provided \(\int _a^b|f^{(r)}(x)|^{1/(r+1)}\textrm{sgn} f^{(r)}(x)\,\mathrm dx\ne 0,\) and otherwise the convergence is faster than \(m^{-r}.\)

Our analysis above shows that if m and n are chosen as in (2.5) and (2.6) then the error of the described algorithm asymptotically equals (as \(N\rightarrow +\infty \))

The factor at \(\beta ^2\) in (4.5) can be easily replaced by 1 with the help of stratified sampling. Indeed, instead of randomly sampling n times with density (4.3) on the whole interval [a, b], one can apply the same sampling strategy independently on k groups \(G_j\) of subintervals. Each group consists of \(s=m/k\) subintervals,

and the number of samples for each \(G_j\) equals n/k. As in the algorithm \({\overline{M}}^{\,*}_{N,r},\) we combine \(k=k_N\) and N in such a way that \(k_N\rightarrow +\infty \) and \(k_N/N\rightarrow 0\) as \(N\rightarrow \infty .\) Then the total number of points used in each \(G_j\) is \(N_j=N/k.\) Denoting

and using the fact that the factor at \(\beta ^2\) equals 1 if \(f^{(r)}\) does not change its sign, the error of such an approximation asymptotically equals

as claimed. (Note that \(N_j=N/k\) minimize the sum \(\sum _{j=1}^kC_j^2N_j^{-(2r+1)}\) with respect to \(\sum _{j=1}^kN_j=N;\) compare with the analysis in Sect. 3.)

Thus we obtained exactly the same error formula as in Theorem 3.1 for \({\overline{M}}_{N,r}^{\,*}.\)

It remains to show a feasible construction of a nested partition that is close to the one satisfying (4.2). To that end, we utilize the iterative method presented in [11], where the \(L^p\) error of piecewise Lagrange interpolation is examined.

We first consider the case when

In the following construction, we use a priority queue \({\mathscr {S}}\) whose elements are subintervals. For each subinterval \(I_i\) of length \(h_i,\) its priority is given as

where \(d_i\) is the divided difference (3.2). In the following pseudocode, \(\textrm{insert}({\mathscr {S}},I)\) and \(I:=\mathrm {extract\_max}({\mathscr {S}})\) implement correspondingly the actions of inserting an interval to \({\mathscr {S}},\) and extracting from \({\mathscr {S}}\) an interval with the highest priority.

\(\textbf{algorithm}\;\textrm{PARTITION}\)

\({\mathscr {S}}=\emptyset ;\;\textrm{insert}({\mathscr {S}},[a,b]);\)

\(\textbf{for}\; k=2:m\)

\(\quad [l,r]=\mathrm {extract\_max}({\mathscr {S}});\)

\(\quad c=(l+r)/2;\)

\(\quad \textrm{insert}({\mathscr {S}},[l,c]); \textrm{insert}({\mathscr {S}},[c,r]);\)

\(\textbf{endfor}\)

After execution, the elements of \({\mathscr {S}}\) form a partition into m subintervals \(I_i.\) Note that if the priority queue is implemented through a heap then the running time of \(\textrm{PARTITION}\) is proportional to \(m\log m.\)

Denote by \({\overline{M}}^{\,**}_{N,r}\) the corresponding algorithm that uses the above nested partition and density (4.3), and N is related to the number m of subintervals and the number n of random samples as in (2.5) and (2.6). We want to see how much worse is this algorithm than that using the (not nested) partition (4.2).

Let \(A=(A_1,A_2,\ldots ,A_m)\) with

and \(\Vert A\Vert _p=\big (\sum _{i=1}^mA_i^p\big )^{1/p}.\) For the corresponding piecewise Lagrange approximation \(L_{m,r}f\) and density \(\rho \) given by (4.3) we have

We also have \(\bigg (\int _a^b\big |f^{(r)}(x)\big |^{1/(r+1)}\mathrm dx\bigg )^{r+1}\approx \big (\sum _{i=1}^mA_i^{1/(r+1)}\big )^{r+1}=\Vert A\Vert _{\frac{1}{r+1}}.\) Hence

where

Observe that halving an interval results in two subintervals whose priorities are asymptotically (as \(m\rightarrow +\infty \)) \(2^{r+1}\) times smaller than the priority of the original interval. This means that \(K_{m,r}(A)\) is asymptotically not larger than

Thus we obtained the following result.

Theorem 4.1

If the function f satisfies (4.6) then we have as \(N\rightarrow +\infty \) that

where \(K^*(r)\) is given by (4.7).

We numerically calculated \(K^*(r)\) in some special cases. For instance, if the points \(z_i\) in (2.1) are equispaced, \(z_i=(i-1)/(r-1),\) \(1\le i\le r,\) then for \(r=2,3,4,5,6\) we correspondingly have

while for any \(z_i\)s satisfying \(\beta =\int _0^1(z-z_1)\cdots (z-z_r)\,\mathrm dz=0\) we have

If f does not satisfy (4.6) then the algorithm \({\overline{M}}^{**}_{N,r}f\) may fail. Indeed, it may happen that \(p_f(I_i)=0\) while \(f^{(r)}\not =0\) in \(I_i.\) Then this subinterval may never be further subdivided. In this case, we can repeat the same construction, but with the modified priority

where \(\Delta >0.\) Then the error is asymptotically upper bounded by

where \(\big |f_\Delta ^{(r)}(x)\big |\) is given by (3.4).

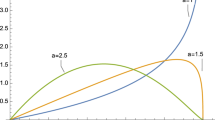

5 Numerical experiments

In this section, we present results of two numerical experiments that illustrate the performance of the nonadaptive Monte Carlo algorithm \({\overline{M}}_{N,r}\) and the adaptive algorithms \({\overline{M}}^{\,*}_{N,r}\) and \({\overline{M}}^{**}_{N,r}.\) Our test integral is

Since for \(r\in {\mathbb {N}}\) we have \((-1)^r f^{(r)}>0,\) the parameter \(\Delta \) is set to zero.

The three algorithms are verified for \(r=2\) and \(r=4\). In both cases, the interpolation nodes are equispaced, i.e., in (2.1) we take

In addition, for the first adaptive algorithm \({\overline{M}}_{N,r}^{\,*}\) we take \(k_N = N^\kappa \) with \(\kappa = 0.8.\) This exponent was chosen to ensure a trade–off as per our discussion in Sect. 3, and some empirical results. Also, for a fixed N we plot a single output instead of the expected value estimator. Therefore the error fluctuations are visible. For completeness, we also show the asymptotes corresponding to the theoretical errors from Theorems 2.1 and 3.1, and the upper bound from Theorem 4.1. The scale is logarithmic, \(-\log _{10}(\textrm{error})\) versus \(\log _{10}N.\)

The results for \(r=2\) are presented in Fig. 1.

As it can be observed, both adaptive algorithms significantly outperform the nonadaptive MC; however, the right asymptotic behaviour of the first adaptive algorithm is visible only for large N.

Similar conclusions can be inferred from validation performed for \(r=4,\) with all other parameters unchanged. We add that the results for N larger than \(10^{4.8}\) are not illustrative any more, since the process is disturbed by a serious reduction of significant digits when calculating divided differences in the partition part (Fig. 2).

Notably, both adaptive algorithms attain their asymptotic errors, but this is not the case for nonadaptive MC for which the output is not stable. Initially, the first adaptive algorithm does not leverage additional sampling since for all intervals \(I_i\) we have \(N_i/(2r+1)< 1.\) The Monte Carlo adjustments are visible only for \(N \ge 10^3\) and the error tends to the theoretical asymptote.

In conclusion, the numerical experiments confirm our theoretical findings and, in particular, superiority of the second adaptive algorithm \({\overline{M}}^{\,**}_{N,r}.\)

6 Automatic Monte Carlo integration

We now use the results of Sect. 4 for automatic Monte Carlo integration. The goal is to construct an algorithm that for given \(\varepsilon >0\) and \(0<\delta <1\) returns an \(\varepsilon \)-approximation to the integral Sf with probability at least \(1-\delta ,\) asymptotically as \(\varepsilon \rightarrow 0^+.\) To that end, we shall use the approximation \({\overline{M}}_{N,r}^{\,**}f\) with N determined automatically depending on \(\varepsilon \) and \(\delta .\)

Let \(X_i\) for \(1\le i\le n\) be independent copies of the random variable

where \(L_{m,r},\) n and \(\rho \) are as in \({\overline{M}}_{N,r}^{\,**}f.\) Then \({\mathbb {E}}(X)=0\) and

By Hoeffding’s inequality [5] we have

where \(B_m=\max _{a\le t\le b}|X(t)|.\) Hence we fail with probability at most \(\delta \) if

Now we estimate \(B_m.\) Let \(\lambda =\Vert P\Vert _{L^\infty (0,1)}=\max _{0\le t\le 1}|P(t)|,\) and

where \(\Delta =0\) if \(f^{(r)}>0\) or \(f^{(r)}<0,\) and \(\Delta >0\) otherwise. Let \(A=(A_1,A_2,\ldots ,A_m)\) with

where, as before, \(\{I_i\}_{i=1}^m,\) is the partition used by \({\overline{M}}_{N,r}^{\,**}f\) and \(h_i\) is the length of \(I_i.\) Since \(\Vert A\Vert _\frac{1}{r+1}=\big (\sum _{i=1}^mA_i^{1/(r+1)}\big )^{r+1}\lessapprox {\mathscr {L}}_r(f),\;\) for \(x\in I_i\) we have

We have the same upper bound for \(S(f-L_{m,r}f)\) since by mean-value theorem

Hence

Using the above inequality and the fact that \(\sqrt{n}\,m^r\approx N^{r+1/2}/(c_rr!)\) with \(c_r\) given by (2.7), we get

The last inequality and (6.1) imply that we fail to have error \(\varepsilon \) with probability at most \(\delta \) for

Now the question is how to obtain the random approximation \({\overline{M}}_{N,r}^{\,**}f\) for N satisfying (6.2).

One possibility is as follows. We first execute the iteration \(\textbf{for}\) in the algorithm \(\textrm{PARTITION}\) of Sect. 4 for \(k=2:m,\) where m satisfies \(\lim _{\varepsilon \rightarrow 0^+}m\,\varepsilon ^{\frac{1}{r+1/2}}=0,\) e.g.,

Let \(\{I_i\}_{i=1}^m\) be the obtained partition. Then we replace \({\mathscr {L}}_r(f)\) in (6.2) by its asymptotic equivalent

set

and continue the iteration for \(k=m+1:m_\varepsilon ,\) where \(m_\varepsilon \) is the number of subintervals corresponding to \(N_\varepsilon .\) Finally, we complete the algorithm by \(n_\varepsilon \) random samples.

Denote the final randomized approximation by \({\mathscr {A}}_{\varepsilon ,\delta }f.\) Then we have \({\mathscr {A}}_{\varepsilon ,\delta }f={\overline{M}}_{N_\varepsilon ,r}^{\,**}f\) and

A disadvantage of the above algorithm is that it uses a priority queue and therefore its total running time is proportional to \(N\log N.\) It turns out that by using recursion the running time can be reduced to N.

A crucial component of the algorithm with the running time proportional to N is the following recursive procedure, in which \({\mathscr {S}}\) is a set of intervals.

\(\textbf{procedure}\;\textrm{AUTO}\,(f,a,b,e)\)

\(\textbf{if}\;p_f([a,b])\le e\)

\(\quad \textrm{insert}({\mathscr {S}},[a,b]);\)

\(\textbf{else}\)

\(\quad c:=(a+b)/2;\)

\(\quad \textrm{AUTO}(f,a,c,e);\)

\(\quad \textrm{AUTO}(f,c,b,e);\)

\(\textbf{endif}\)

Similarly to \({\mathscr {A}}_{\varepsilon ,\delta },\) the algorithm consists of two steps. First \(\textrm{AUTO}\) is run for \(e=\varepsilon '\) satisfying \(\varepsilon '\rightarrow 0^+\) and \(\varepsilon /\varepsilon '\rightarrow 0^+\) as \(\varepsilon \rightarrow 0^+,\) e.g.,

Then \({\mathscr {L}}_r(f)\) in (6.2) is replaced by \(\widetilde{{\mathscr {L}}}_r(f)\) given by (6.3), and \(N_\varepsilon \) found from (6.4). The recursion is resumed with the target value \(e=\varepsilon '',\) where

The algorithm is complemented by the corresponding \(n_\varepsilon \) random samples.

Observe that the number \(m''\) of subintervals in the final partition is asymptotically at least \(m_\varepsilon .\) Indeed, for any function \(g\in C^{r}([a,b])\) with \(g^{(r)}(x)=\big |f_\Delta ^{(r)}(x)\big |\) we have \({\mathscr {L}}_r(g)={\mathscr {L}}_r(f)\) and

where the first inequality above follows from Proposition 2 of [11]. This implies

as claimed.

Denote the resulting approximation by \({\mathscr {A}}_{\varepsilon ,\delta }^*f.\) Observe that its running time is proportional to \(N_\varepsilon \) since recursion can be implemented in linear time.

Theorem 6.1

We have

Now we present outcomes of the second automatic procedure \({\mathscr {A}}_{\varepsilon ,\delta }^*\) for the test integral

Although the derivatives fluctuate and nullify many times in this case, we take \(\Delta =~0.\) We confront the outcomes for \(r=2\) and \(r=4.\) In each case, we compute the number of breaches (i.e. when the absolute error is greater than \(\varepsilon = 10^{-3}\)) based on \(K=10\,000\) independent executions of the code (Table 1). We also take \(\varepsilon ' = \varepsilon ^{1/2}.\) In our testing, we expect the empirical probability of the breach to be less than \(\delta =0.05.\) For completeness, we also present the maximum error from all executions together with obtained \(N_\varepsilon .\)

Note that in both cases we did not identify any exceptions. The magnitude of the maximum errors indicate a serious overestimation of \(N_\varepsilon ,\) but the results are satisfactory given the upper bound estimate of Theorem 6.1.

References

Davis, P., Rabinowitz, P.: Methods of Numerical Integration, 2nd edn. Academic Press, New York (1984)

Goćwin, M.: On optimal adaptive quadratures for automatic integration. BIT Numer. Math. 61, 411–439 (2021)

Gonnet, P.: A review of error estimation in adaptive quadrature. ACM Comput. Surv. 44, 1–36 (2012)

Heinrich, S.: Random approximation in numerical analysis. In: Bierstedt, K.D., et al. (eds.) Proceedings of the Functional Analysis Conference, Essen 1991, pp. 123–171. Marcel Dekker, New York (1993)

Hoeffding, W.: Probability inequalities for sums of bounded random variables. J. Am. Stat. Assoc. 58, 13–30 (1963)

Kacewicz, B., Przybyłowicz, P.: Complexity of the derivative-free solution of systems of IVPs with unknown singularity hypersurface. J. Complex. 31, 75–97 (2015)

Lyness, J.N.: Guidelines for automatic quadrature routines. In: Freeman, C.V. (ed.) Information Processing 71, vol. 2, pp. 1351–1355. North-Holland Publ. (1972)

Novak, E.: Deterministic and Stochastic Error Bounds in Numerical Analysis. Vol. 1349 of Lecture Notes in Math. Springer, Berlin (1988)

Novak, E.: On the power of adaption. J. Complex. 12, 199–237 (1996)

Plaskota, L.: Automatic integration using asymptotically optimal adaptive Simpson quadrature. Numer. Math. 131, 173–198 (2015)

Plaskota, L., Samoraj, P.: Automatic approximation using asymptotically optimal adaptive interpolation. Numer. Algorithms 89, 277–302 (2022)

Plaskota, L., Wasilkowski, G.W.: Adaption allows efficient integration of functions with unknown singularities. Numer. Math. 102, 123–144 (2005)

Plaskota, L., Wasilkowski, G.W., Zhao, Y.: The power of adaption for approximating functions with singularities. Math. Comput. 77, 2309–2338 (2008)

Przybyłowicz, P.: Adaptive Itô-Taylor algorithm can optimally approximate the Itô integrals of singular functions. J. Comput. Appl. Math. 235, 203–217 (2010)

Acknowledgements

The work of L. Plaskota and P. Przybyłowicz was partially supported by the National Science Centre, Poland, under project 2017/25/B/ST1/00945.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

All authors declare that they have no conflicts of interest.

Additional information

Communicated by Stefano De Marchi.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Below we present a crucial part of the code in the Python programming language, where all the algorithms were implemented. In addition, we provide relevant comments linked to particular fragments of the code.

-

(1)

The almost optimal partition main_nodes is derived out of this function in order to save computation time when the trajectories are computed subsequently. Moreover, node_type argument lets the user insert his own partitions, e.g. those based on Chebyshev polynomials of the second kind.

-

(2)

In order to minimize errors resulting from (possibly) adding relatively small adjustments to the estimated quadrature value, we use Decimal library. It enables us to increase the precision of intermediate computations, which is now set to 28 digits in decimal system.

-

(3)

In our case, the interpolating polynomial is based on equidistant mesh including endpoints of a subinterval \(I_i\). By np we understand the references to NumPy library.

-

(4)

Initializing the variables which control Monte Carlo adjustments for our quadrature. In particular, l stores the number of currently used random points, while we loop through the subintervals.

-

(5)

The program calculates all interpolation nodes in the interval \(I_i.\) For that reason, the function optimalt_uniform is executed to provide distinct \(z_1, \ldots , z_r \in [0,1].\)

-

(6)

Depending on the value of r, different formulas for (nonadaptive, deterministic) quadrature \(SL_{m,r}\) are leveraged.

-

(7)

Below, we calculate the Monte Carlo adjustment on interval \(I_i.\)

-

(8)

This code yields random points used for. MC_init function reports them in a from of a number from 0 to m. The integer part points the index i of subinterval, while the fractional part - its position within \(I_i.\) Both parameters are sourced by using math.modf function.

-

(9)

For stability reasons, the coefficients of interpolating polynomial in canonical base are not stored. Therefore, for every point, lagrange function is invoked separately.

-

(10)

We decided to add \(SL_{m,r}\) for each subinterval and then add the cumulative adjustments. Since latter are usually relatively much smaller than the quadrature values, this might result in neglecting the actual adjustment values. Please note that Decimal library was also used to address such constraints.

-

(11)

Ultimately, we add Monte Carlo result to the previous approximation.

As it can be observed, the current solution enables the user to insert own interpolation meshes, increase the precision of computations, as well as extend the method to arbitrary regularity \(r \in {\mathbb {N}}.\)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Plaskota, L., Przybyłowicz, P. & Stȩpień, Ł. Monte Carlo integration of \(C^r\) functions with adaptive variance reduction: an asymptotic analysis. Bit Numer Math 63, 32 (2023). https://doi.org/10.1007/s10543-023-00972-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10543-023-00972-0