Abstract

We present a new refinement strategy for locally refined B-splines which ensures the local linear independence of the basis functions. The strategy also guarantees the spanning of the full spline space on the underlying locally refined mesh. The resulting mesh has nice grading properties which grant the preservation of shape regularity and local quasi uniformity of the elements in the refining process.

Similar content being viewed by others

1 Introduction

Locally Refined (LR) B-splines have been introduced in [10] as generalization of the tensor product B-splines to achieve adaptivity in the discretization process. By allowing local insertions in the underlying mesh, the approximation efficiency is dramatically improved as one avoids the wasting of degrees of freedom by increasing the number of basis functions only where rapid and large variations occur in the analyzed object. Nevertheless, the adoption of LR B-splines for simulation purposes in the Isogeometric Analysis (IgA) framework [15] is complicated by the risk of linear dependence relations [21]. Several refinement strategies proposed in the literature, such as the minimum span, full span and structured refinement [16, 24], do not ensure linear independence of the LR B-splines. As a consequence, the so-called peeling algorithm [10, 21] has to be adopted in order to remove redundant basis functions, if any, and restore linear independence, after any refinement iteration. The peeling algorithm may, however, fail in detecting all the linear dependence relations and further, more computationally expensive, checks, such as the tensor expansion [16], are required to sort out such remaining cases.

Although a complete characterization of linear independence is still not available, the local linear independence of the basis functions is guaranteed when the underlying Locally Refined (LR) mesh has the so-called Non-Nested-Support (\(\hbox {N}_2\hbox {S}\)) property [2, 3]. The local linear independence not only is sufficient to avoid the hurdles of dealing with singular linear systems, but it also improves the sparsity of the matrices when assembling the numerical solution. Furthermore, it allows the construction of efficient quasi-interpolation schemes [22]. Such a strong property of the basis functions is a rarity, or at least it is quite cumbersome to gain, among the technologies used for adaptive IgA. For instance, it is not available for (truncated) hierarchical B-splines [11, 13] while it can be achieved for PHT-splines [9] and Analysis-suitable (and dual-compatible) T-splines [6], respectively, by imposing reduced regularity and by endorsing a considerable propagation in the refinement [1].

In this work we present a new refinement strategy to produce LR meshes with the \(\hbox {N}_2\hbox {S}\) property. In addition to the local linear independence of the associated LR B-splines, the strategy proposed has two further features: the space spanned coincides with the full space of spline functions and it guarantees smooth grading in the transitions between coarser and finer regions on the LR meshes produced. The former property boosts the approximation power with respect to the degrees of freedom as the spaces used for the discretization in the IgA context are in general just subsets of the spline space. Such a spanning completeness is more demanding to achieve in terms of meshing constraints and regularity, respectively, for (truncated) hierarchical B-splines and splines over T-meshes [3, 8, 12, 19]. The grading properties are instead required to theoretically ensure optimal algebraic rates of convergence in adaptive IgA methods [4, 5], even in presence of singularities in the PDE data or solution, similarly to what happens in Finite Element Methods (FEM) [20]. More specifically, the LR meshes generated by the proposed strategy satisfy the requirements listed in the axioms of adaptivity [5] in terms of grading and overall appearance. Such axioms constitute a set of sufficient conditions to guarantee convergence at optimal algebraic rate in adaptive methods. Furthermore, mesh grading has been assumed to prove robust convergence of solvers for linear systems arising in the adaptive IgA framework with respect to mesh size and number of iterations [14]. For these reasons, we have called the strategy Effective Grading (EG) refinement strategy.

The next sections are organized as follows. In Sect. 2 we recall the definitions of tensor product meshes and B-splines from a perspective that ease the introduction of LR meshes and LR B-splines. In the second part, we define the \(\hbox {N}_2\hbox {S}\) property for the LR meshes and provide the characterization for the local linear independence of the LR B-splines. In Sect. 3 we first define the EG strategy and then we prove that it has the \(\hbox {N}_2\hbox {S}\) property. The completeness of the space spanned and the grading of the LR meshes are discussed at the end of the section. Finally, in Sect. 4 we draw the conclusions and present the future research.

2 Preliminaries

In this section we recall the definition of Locally Refined (LR) meshes and B-splines and the conditions ensuring the local linear independence of the latter. We stick to the 2D setting for the sake of simplicity, however, many of the following definitions have a direct generalization to any dimension, see [10] for details. We assume the reader to be familiar with the definition and main properties of B-splines, in particular with the knot insertion procedure. An introduction to this topic can be found, e.g., in the review papers [17, 18] or in the classical books [7] and [23].

2.1 LR meshes and LR B-splines

LR meshes and related sets of LR B-splines are constituted simultaneously and iteratively from tensor meshes and sets of tensor B-splines. We therefore start by recalling the latter using a terminology which is proper of the LR B-spline theory. Thereby, we can easily introduce the new concepts by generalizing the tensor case. A tensor (product) mesh on an axes-aligned rectangular domain \(\varOmega \subseteq {\mathbb {R}}^2\) can be represented as a triplet \({\mathcal {N}}= ({\mathcal {M}}, \pmb {p}, \mu )\) where \({\mathcal {M}}\) is a collection (with repetitions) of meshlines, which are the segments connecting two (and only two) vertices of a rectangular grid on \(\varOmega \). \(\pmb {p}=(p_1,p_2)\) is a bidegree, that is, a pair of integers in \({\mathbb {N}}\), and \(\mu :{\mathcal {M}}\rightarrow {\mathbb {N}}^*\) is a map that counts the number of times any meshline \(\gamma \) appears in \({\mathcal {M}}\). \(\mu (\gamma )\) is called multiplicity of the meshline \(\gamma \). Furthermore, the following constraints are imposed on \({\mathcal {M}}\):

-

C1.

\(\mu (\gamma _1) = \mu (\gamma _2)\) if \(\gamma _1,\gamma _2\in {\mathcal {M}}\) are contiguous and aligned,

-

C2.

\(\mu (\gamma ) \le p_1+1\) if \(\gamma \in {\mathcal {M}}\) is vertical and \(\mu (\gamma ) \le p_2+1\) if \(\gamma \) is horizontal. In particular, we say that \(\gamma \) has full multiplicity if the equality holds.

A tensor mesh \({\mathcal {N}}\) is \(\mathbf{open} \) if the meshlines on \(\partial \varOmega \) have full multiplicities.

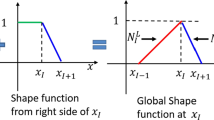

Given an open tensor mesh \({\mathcal {N}}=({\mathcal {M}}, \pmb {p}, \mu )\), consider another tensor mesh \({\mathcal {N}}_B := ({\mathcal {M}}_B, \pmb {p}, \mu _B)\) where \({\mathcal {M}}_B\) is a sub-collection of meshlines \({\mathcal {M}}_B \subseteq {\mathcal {M}}\) forming a rectangular grid in a sub-domain \(\varOmega _B\subseteq \varOmega \) of \(p_1+2\) vertical lines and \(p_2+2\) horizontal lines, where a line is counted m times if the meshlines in it have multiplicity m with respect to \(\mu _B\). The multiplicity \(\mu _B:{\mathcal {M}}_B \rightarrow {\mathbb {N}}^*\) is such that \(\mu _B(\gamma ) \le \mu (\gamma )\) for all \(\gamma \in {\mathcal {M}}_B\). Such vertical and horizontal lines can be parametrized as \(\{x_i\}\times [y_1, y_{p_2+2}]\) and \([x_1, x_{p_1+2}]\times \{y_j\}\) with \(\pmb {x}:=(x_i)_{i=1}^{p_1+2}\) and \(\pmb {y}=(y_j)_{j=1}^{p_2+2}\) such that \(x_i \le x_{i+1}\) and \(y_j \le y_{j+1}\) and with \(x_i, y_j\) appearing \(p_1+1\) and \(p_2+1\) times at most in \(\pmb {x}\) and \(\pmb {y}\), respectively, because of the constraint C2 on \({\mathcal {M}}\). On \(\pmb {x}\) and \(\pmb {y}\) we can define a tensor (product) B-spline, \(B=B[\pmb {x}, \pmb {y}]\). Then, we have that the support of B is \(\varOmega _B\) and hence \({\mathcal {N}}_B\) is a tensor mesh in \(\text {supp}\,B\). We say that B has minimal support on \({\mathcal {N}}\) if no line in \({\mathcal {M}}\backslash {\mathcal {M}}_B\) traverses \(\text {int}(\text {supp}\,B)\) entirely and \(\mu _B \equiv \mu \) on the meshlines of \({\mathcal {M}}_B\) in the interior of \(\text {supp}\,B\). The collection of all the minimal support B-splines on \({\mathcal {N}}\) constitutes the B-spline set on \({\mathcal {N}}\). If instead B has not minimal support on \({\mathcal {N}}\), then there exists a line in \({\mathcal {M}}\) entirely traversing \(\text {int}(\text {supp}\,B)\) which either is not in \({\mathcal {M}}_B\) or it is in \({\mathcal {M}}_B\) but its meshlines have a higher multiplicity with respect to \(\mu \) than \(\mu _B\). In both cases, such exceeding line corresponds to extra knots either in the x- or y-direction. One could then express B with B-splines of minimal support on \({\mathcal {N}}\) by performing knot insertions. An example of B-spline with no minimal support on a tensor mesh is reported in Fig. 1.

Example of B-spline with no minimal support on a tensor mesh. Let us consider the tensor mesh \({\mathcal {N}}=({\mathcal {M}},(2,2),1)\) as in figure (a). Let also \(B = B[\pmb {x}, \pmb {y}]\) be the B-spline of bidegree (2, 2) whose knot vectors are \(\pmb {x}=(x_i)_{i=1}^4, \pmb {y}=(y_j)_{j=1}^4\) and whose support and tensor mesh \({\mathcal {N}}_B = ({\mathcal {M}}_B, (2,2), 1)\) are highlighted in figure (a). B has not minimal support on \({\mathcal {N}}\) as the vertical line placed at value \({\hat{x}}\) is traversing \(\text {supp}\,B\) entirely while its meshlines in \(\text {supp}\,B\) are not contained in \({\mathcal {M}}_B\). However, by knot insertion of \({\hat{x}}\) in \(\pmb {x}\) we can express B in terms of two minimal support B-splines on \({\mathcal {N}}\), \(B[\pmb {x}^1,\pmb {y}]\) and \(B[\pmb {x}^2,\pmb {y}]\), with \(\pmb {x}^1=(x_i^1)_{i=1}^4, \pmb {x}^2=(x_i^2)_{i=1}^4\). The supports of the latter partially overlap horizontally and are represented in figure (b)

We will now extend the above notions and terminology to locally refined meshes, in order to introduce the LR B-splines. Given an open tensor mesh \({\mathcal {N}}=({\mathcal {M}}, \pmb {p}, \mu )\) and the corresponding B-spline set \({\mathcal {B}}\), assume that we either

-

R1.

raise by one the multiplicity of a set of contiguous and colinear meshlines in \({\mathcal {M}}\), which, however, still has to satisfy the constraints C1–C2,

-

R2.

insert a new axis-aligned line \(\gamma \) with endpoints on \({\mathcal {M}}\), traversing the support of at least one B-spline \(B \in {\mathcal {B}}\), and extend \(\mu \) to include the segments connecting the intersection points of \(\gamma \) and \({\mathcal {M}}\), by setting it equal to 1 for such new meshlines.

Let \({\mathcal {M}}'\) be the new collection of meshlines and \(\mu '\) be the multiplicity for \({\mathcal {M}}'\). By construction, there exists at least one B-spline \(B \in {\mathcal {B}}\) that does not have minimal support on \({\mathcal {N}}' = ({\mathcal {M}}', \pmb {p}, \mu ')\). By performing knot insertions we can, however, replace B in the collection \({\mathcal {B}}\) with B-splines of minimal support on \({\mathcal {N}}'\). This creates a new set \({\mathcal {B}}'\) of B-splines of minimal support defined on \({\mathcal {N}}'\). We are now ready to define (recursively) LR meshes and LR B-splines.

An LR mesh on \(\varOmega \) is a triplet \({\mathcal {N}}' = ({\mathcal {M}}',\pmb {p},\mu ')\) which either is a tensor mesh or it is obtained by applying the procedure R1 or R2 to \({\mathcal {N}}=({\mathcal {M}},\pmb {p},\mu )\) which, in turn, is an LR mesh. The LR B-spline set \({\mathcal {B}}'\) on \({\mathcal {N}}'\) is the B-spline set on \({\mathcal {N}}'\) if the latter is a tensor mesh or, in case \({\mathcal {N}}'\) is not a tensor mesh, it is obtained via knot insertions from the LR B-spline set \({\mathcal {B}}\) defined on \({\mathcal {N}}\).

In other words, we refine a coarse tensor mesh by inserting new lines (which possibly can have an endpoint in the interior of \(\varOmega \)), one at a time, or by raising the multiplicity of a line already on the mesh. On the initial tensor mesh we consider the tensor B-splines and whenever a B-spline in our collection has no longer minimal support during the mesh refinement process, we replace it by using the knot insertion procedure. The LR B-splines will be the final set of B-splines produced by this algorithm. In Fig. 2 we illustrate the evolution of an LR B-spline throughout such process.

Evolution of an LR B-spline throughout the refinement process of a tensor mesh. Consider the tensor mesh \({\mathcal {N}}=({\mathcal {M}},(2,2),1)\) reported in figure (a). Let \(B[\pmb {x},\pmb {y}]\) be the minimal support B-spline whose support and tensor mesh are highlighted in figure (a). Let us insert a first vertical line in \({\mathcal {N}}\) (dashed in figure (a)). This line does not traverses \(\text {supp}\,B\), hence B is preserved in the B-spline set on the new LR mesh, as shown in figure (b). We then insert an horizontal line (dashed in figure (b)). This time the line is traversing \(\text {supp}\,B\) and \(B[\pmb {x},\pmb {y}]\) is replaced by the B-splines \(B[\pmb {x},\pmb {y}^1]\) and \(B[\pmb {x},\pmb {y}^2]\) involved in the knot insertion. In figure (c) we see the supports and tensor meshes of the latter on the new LR mesh. In particular we see that \(B[\pmb {x},\pmb {y}^1]\) (the bottom B-spline in figure (c)) has not minimal support on the LR mesh as there is a vertical line traversing its support without being part of its tensor mesh. Thus \(B[\pmb {x},\pmb {y}^1]\) is replaced as well, via knot insertion, by two other B-splines \(B[\pmb {x}^1,\pmb {y}^1], B[\pmb {x}^2, \pmb {y}^1]\). Therefore, in the end, we move from \(B[\pmb {x}, \pmb {y}]\), on the tensor mesh, to \(B[\pmb {x}^1, \pmb {y}^1], B[\pmb {x}^2,\pmb {y}^1], B[\pmb {x}, \pmb {y}^2]\) on the final LR mesh. The supports and tensor meshes of the latter are represented in figure (d)

We conclude this section with a short list of remarks:

-

In general the mesh refinement process producing a given LR mesh is not unique, as the insertion ordering can often be changed. However, the final LR B-spline set is well defined because independent of such insertion ordering, as proved in [10, Theorem 3.4].

-

The LR B-spline set is in general only a subset of the set of minimal support B-spline defined on the LR mesh, although the two sets coincide on the initial tensor mesh. When inserting new lines the LR B-splines are the result of the knot insertion procedure, applied to LR B-splines defined on the previous LR mesh, while some minimal support B-splines could be created from scratch on the new LR mesh. Further details and examples can found in [21, Section 5].

-

We have introduced LR meshes and LR B-splines starting from open tensor meshes and related sets of tensor B-splines. For the sake of completeness, we mention that it is actually not necessary that the initial tensor mesh is open, as long as it is possible to define at least one tensor B-spline on it. However, we always assume the openness of the initial tensor meshes in this paper.

-

In the next sections, we always consider tensor and LR meshes with boundary meshlines of full multiplicity and internal meshlines of multiplicity 1, if not specified otherwise. In particular, this means that we update the LR meshes and LR B-spline sets only by performing the procedure R2.

2.2 Local linear independence and \(\hbox {N}_2\hbox {S}\)-property

The LR B-splines coincide with the tensor B-splines when the underlying LR mesh is a tensor mesh and in general the formulation of LR B-splines remains broadly similar to that of tensor B-splines even though the former address local refinements. As a consequence, in addition to making them one of the most elegant extensions to achieve adaptivity, this similarity implies that many of the B-spline properties are preserved by the LR B-splines. For example, they are non-negative, have minimal support, are piecewise polynomials and can be expressed by the LR B-splines on finer LR meshes using non-negative coefficients (provided by the knot insertion procedure). Furthermore, it is possible to scale them by means of positive weights so that they also form a partition of unity, see [10, Section 7].

However, as opposed to tensor B-splines, they could be not locally linearly independent. Actually, the set of LR B-splines can even be linearly dependent (examples can be found in [10, 21, 22]).

Nevertheless, in [2, 3] a characterization of the local linear independence of the LR B-splines has been provided in terms of meshing constraints leading to particular arrangements of the LR B-spline supports on the LR mesh. In this section we recall such characterization.

First of all, we introduce the concept of nestedness. Given an LR mesh \({\mathcal {N}}=({\mathcal {M}},\pmb {p},\mu )\), let \(B_1, B_2\) be two different LR B-splines defined on \({\mathcal {N}}\). We say that \(B_2\) is nested in \(B_1\) if

-

\(\text {supp}\,B_2 \subseteq \text {supp}\,B_1\),

-

\(\mu _{B_2}(\gamma ) \le \mu _{B_1}(\gamma )\) for all the meshlines \(\gamma \) of \({\mathcal {M}}\) in \(\partial \text {supp}\,B_1 \cap \partial \text {supp}\,B_2\).

An LR mesh where no LR B-spline is nested is said to have the Non-Nested-Support property, or in short the \(\mathbf{N} _{{2}}{} \mathbf{S} \) property. Figure 3 shows an example of an LR B-spline nested in another.

Example of nested LR B-splines on the LR mesh \({\mathcal {N}}=({\mathcal {M}},(2,2),\mu )\) shown in (a). All the meshlines of \({\mathcal {M}}\) have multiplicity 1 except those in the left edge, highlighted with a double line, which have multiplicity 2. In (b)–(d) three LR B-splines \(B_1,B_2,B_3\) defined on \({\mathcal {N}}\), represented by means of their supports and tensor meshes. All the meshlines in \({\mathcal {M}}_{B_1},{\mathcal {M}}_{B_2}\) and \({\mathcal {M}}_{B_3}\) have multiplicity 1 except those on the left edge in \({\mathcal {M}}_{B_3}\) which have multiplicity 2. Therefore, \(B_2\) is nested in \(B_1\) while \(B_3\) is not nested neither in \(B_2\) nor \(B_3\), despite that \(\text {supp}\,B_3 \subseteq \text {supp}\,B_2\) and \(\text {supp}\,B_3 \subseteq \text {supp}\,B_1\), because the shared meshlines in the left edge of \(\text {supp}\,B_3\), \(\text {supp}\,B_2\) and \(\text {supp}\,B_1\) have multiplicity 2 in \({\mathcal {M}}_{B_3}\) and multiplicity 1 in \({\mathcal {M}}_{B_2}\) and \({\mathcal {M}}_{B_1}\)

The next result, from [3, Theorem 4], relates the local linear independence of the LR B-splines to the \(\hbox {N}_2\hbox {S}\) property of the LR mesh. In order to present it, we recall that given an LR mesh \({\mathcal {N}}=({\mathcal {M}},\pmb {p},\mu )\), \({\mathcal {M}}\) induces a box-partition of \(\varOmega \), that is, a collection of axes-aligned rectangles, called boxes, with disjoint interiors covering \(\varOmega \). Hereafter, we will just call them boxes of \({\mathcal {M}}\), with an abuse of notation, instead of boxes in the box-partition induced by \({\mathcal {M}}\).

Theorem 2.1

Let \({\mathcal {N}}=({\mathcal {M}}, \pmb {p}, \mu )\) be an LR mesh and let \({\mathcal {L}}\) be the related LR B-spline set. The following statements are equivalent.

-

1.

The elements of \({\mathcal {L}}\) are locally linearly independent.

-

2.

\({\mathcal {N}}\) has the \(\hbox {N}_2\hbox {S}\) property.

-

3.

Any box \(\beta \) of \({\mathcal {M}}\) is contained in exactly \((p_1+1)(p_2+1)\) LR B-spline supports, that is,

$$\begin{aligned} \#\{B \in {\mathcal {L}}\,:\, \text {supp}\,B \supseteq \beta \} = (p_1+1)(p_2+1). \end{aligned}$$ -

4.

The LR B-splines in \({\mathcal {L}}\) form a partition of unity, without the use of scaling weights.

In the next section we present an algorithm to construct LR meshes with the \(\hbox {N}_2\hbox {S}\) property. The resulting LR meshes will furthermore show a nice gradual grading from coarser regions to finer regions, which avoids the thinning in some direction of the box sizes and the placing of small boxes side by side with large boxes.

3 The effective grading refinement strategy

In this section we present a refinement strategy to generate LR meshes with the \(\hbox {N}_2\hbox {S}\) property. We call it Effective Grading (EG) refinement strategy as the finer regions smoothly fade towards the coarser regions in the resulting LR meshes.

To the best of our knowledge, two other strategies have been proposed to build LR meshes with the \(\hbox {N}_2\hbox {S}\) property so far: the Non-Nested-Support-Structured (\(\mathbf{N} _{{2}}{} \mathbf{S} _{{2}}\)) mesh refinement [22] and the Hierarchical Locally Refined (HLR) mesh refinement [3]. The \(\hbox {N}_2\hbox {S}_2\) mesh refinement is a function-based refinement strategy, which means that at each iteration we refine those LR B-splines contributing more to the approximation error, in some norm. The \(\hbox {N}_2\hbox {S}_2\) mesh strategy does not require any condition on the LR B-splines selected for refinement to ensure the \(\hbox {N}_2\hbox {S}\) property of the resulting LR meshes. On the other hand, no grading has been proved on the final LR meshes and skinny elements may be present on them. The HLR refinement is instead a box-based strategy, which means that at each iteration the region to refine is identified by those boxes, in the box-partition induced by the LR mesh, in which a larger error is committed, in some norm. The HLR strategy produces nicely graded LR meshes but it requires that the regions to be refined and the maximal resolution have to be chosen a priori to ensure the \(\hbox {N}_2\hbox {S}\) property. Usually one does not know in advance where the error will be large and how fine the mesh has to be to reduce the error under a certain tolerance. Therefore, the conditions for the \(\hbox {N}_2\hbox {S}\) property constitute a drawback for the adoption of the HLR strategy in many practical purposes.

The EG refinement is a box-based strategy providing LR meshes very similar to those that one gets with the HLR strategy, when fixing the refinement regions and the number of iterations. As we shall show, the LR meshes generated will always have the \(\hbox {N}_2\hbox {S}\) property, with no requirements or assumptions.

3.1 Preliminary observations and generalized shadow map

In order to introduce the strategy, we need some preliminary considerations on the LR meshes produced by the algorithm. For the sake of simplicity, we assume our domain \(\varOmega \) to be a square \(\varOmega = [a,b]^2 \subseteq {\mathbb {R}}^2\). Given an LR mesh \({\mathcal {N}}=({\mathcal {M}}, \pmb {p},\mu )\) in \(\varOmega \), generated by several applications of EG strategy, and a box \(\beta \) in \({\mathcal {M}}\), we define the diameter of \(\beta \), denoted by \(\text {diam}(\beta )\), as the length of the diagonal of \(\beta \). As we shall show in Sect. 3.2, the boxes in \({\mathcal {M}}\) are either squares or rectangles with one side twice the other. Furthermore, such boxes are obtained by halving boxes in the previous mesh in one of the two directions, i.e., square boxes are refined in rectangles and rectangular boxes are refined in square boxes. In particular, the width L of the longest side of any given box of \({\mathcal {M}}\) has expression

This means that \(\beta \) is a square box in \({\mathcal {M}}\) if and only if

Whereas, \(\beta \) is a rectangular box in \({\mathcal {M}}\) if and only if

Hence, given \(\text {diam}(\beta )\) we can understand if \(\beta \) is a square or a rectangular box by looking at \(\frac{3(b-a)^2}{\text {diam}(\beta )^2} \mod 3\):

with the only exception of the square box \(\beta = \varOmega \), for which \(q=0\) and \(\frac{3(b-a)^2}{\text {diam}(\beta )^2} = \frac{3}{2}\).

There are two variants of the EG strategy, the “Horizontal-major” and the “Vertical-major”. In the Horizontal-major version, the boxes of the mesh, at any iteration, are squares or rectangles of width twice the height. Hence, square boxes are refined by halving them horizontally, while rectangular boxes are refined by halving them vertically. In the Vertical-major case it is the opposite: squares are refined in rectangles of height twice the width, by halving them vertically, and rectangular boxes are refined in square boxes, by halving them horizontally. In Fig. 4 we compare the two variants by refining along the same “bean curve”, using bidegree (2, 2) and 8 levels of refinement in each direction.

Comparison of the Horizontal-major and Vertical-major versions of the EG strategy. We consider bidegree (2, 2) and refine along a “bean curve” with 8 levels of resolution in each direction. In (a) the Horizontal-major variant of the strategy and in (b) the Vertical-major variant of the strategy. In the former the rectangular boxes have width twice the height. In the latter the rectangular boxes have height twice the width. We also show the number of LR B-splines defined in the meshes. Obviously the two cardinalities are different because the two meshes are not equal after flipping the axes, due to the asymmetry of the curve

In the description of the EG strategy in Sect. 3.2, we will just use the verb “to halve”, without specifying the direction, to treat the two variants at the same time.

Let \(\beta \) be a square box in the mesh of diameter \(\text {diam}(\beta ) = d\). \(\beta \) has been obtained by halving a box of diameter

Instead, if \(\beta \) is a rectangular box, it has been obtained by halving a box of diameter

In the description of the EG strategy in Sect. 3.2 we will denote by s the scaling factor to express \(d'\) in terms of d, i.e., \(d' = sd\), independently of the shape of the box at hand, that is,

Finally, we introduce the generalized shadow map of a set A in \(\varOmega \). As opposed to the shadow map [3, Definition 10] which is defined for tensor meshes, the generalized shadow map can be applied in locally refined meshes. The latter is consistent with the former, that is, the two maps are equivalent, when the underlying LR mesh is a tensor mesh and A consists of a bunch of boxes of the mesh, as we shall show in the appendix of this paper. Given an LR mesh \({\mathcal {N}}=({\mathcal {M}}, \pmb {p},\mu )\) and a set A in \(\varOmega \), the generalized shadow map of A in \({\mathcal {N}}\) defines a superset of A which is larger only along one of the two directions, as follows. We present only the horizontal shadow map for briefness, the procedure for the vertical is analogous. For the sake of simplicity, let us assume first that A has only one connected component. For any point \(\pmb {q} \in \partial A\) we consider the two horizontal half-lines from \(\pmb {q}\), \(r^1\) and \(r^2\). Let \(\pmb {q}_1^i, \ldots , \pmb {q}_{N_i}^i\) be the intersection points of \(r^i\) with the vertical meshlines of \({\mathcal {M}}\) (counting their multiplicites), where \(\pmb {q}_1^i\) is the closest to \(\pmb {q}\) and \(\pmb {q}_{N_i}^i\) the farthest. In particular, note that if \(\pmb {q}\) lies on a vertical line of \({\mathcal {M}}\), then \(\pmb {q}_1^i = \pmb {q}\). We define

The (horizontal) generalized shadow of A with respect to \({\mathcal {N}}\), denoted by \({\mathcal {S}}A\), are the boxes of \({\mathcal {M}}\) intersecting the points in the segments \(\overline{\pmb {q}_*^1\pmb {q}_*^2}\) for \(\pmb {q} \in \partial A\) or the points in A, that is,

If A has more connected components, \(A_1, \ldots , A_M\), then the generalized shadow \({\mathcal {S}}A\) will be the union of the generalized shadows of the connected components:

Examples of a horizontal generalized shadow map of different sets. All the meshlines in all the meshes have multiplicity one and \(p_1 =2\). The red regions are the sets considered and the unions of the red regions and the blue regions are the shadow of them (we refer to the online version of the paper for the colors). In (a)–(b) the underlying mesh is a tensor mesh. In (c)–(d) we consider LR meshes built using the minimum span strategy, proposed in [16], with bidegree (2, 2) to emphasize the difference of the generalized shadow map on meshes with local insertions with respect to the tensor case

In Fig. 5 we show four examples of horizontal generalized shadow maps for three different sets and degree \(p_1=2\). In particular the sets considered are unions of boxes of the underlying mesh. We made this choice because these are the kind of sets considered for refinement in practice.

In the EG strategy we will apply the generalized shadow map to sets composed of boxes of the same size and shape in the mesh. The direction of the shadow will be established by such shape: if the boxes are rectangles then the shadow is in the same direction of the long edges, if they are squares then it is in the other direction.

3.2 Definition of the strategy and proof of the \(\hbox {N}_2\hbox {S}\) property

Given a region \(\omega \subseteq \varOmega \) composed of a set of boxes to be refined, the EG strategy can be divided in two macro steps. In the first step new lines are inserted in order to refine \(\omega \). As we shall show, these lines halve boxes of the same shape and size and therefore they are all in the same direction, as we explained in Sect. 3.1. The new line insertions will in general spoil the \(\hbox {N}_2\hbox {S}\) property of the mesh. In the second step of the EG strategy we reinstate the \(\hbox {N}_2\hbox {S}\) property by suitably extending lines that were already on the mesh before such new insertions. This approach, of dividing the strategy into “refining step” and “\(\hbox {N}_2\hbox {S}\) property recovering step”, was already adopted in [22] for the \(\hbox {N}_2\hbox {S}_2\) mesh refinement. As it will be clear, restoring the \(\hbox {N}_2\hbox {S}\) property will also provide nice grading properties in the final mesh.

The refining step works as follows. Let \({\mathcal {N}}= ({\mathcal {M}}, \pmb {p}, \mu )\) be the LR mesh at hand, provided by several iterations of EG strategy, and let \({\mathcal {L}}\) be the corresponding set of LR B-splines. We define the subset \({\mathcal {L}}_\omega \subseteq {\mathcal {L}}\) as the set of those LR B-splines whose support is intersecting region \(\omega \). Then we compute the maximum of \(\text {diam}(\beta )\) over all the LR B-splines \(B \in {\mathcal {L}}_\omega \) and all the boxes \(\beta \) in the tensor meshes \({\mathcal {M}}_B\) associated to the knot vectors of B. We halve such maximal boxes. As all of them have same diameter, the new lines have all same direction. This concludes the refining step. The new lines inserted and the new extensions, provided by the re-establishing of the \(\hbox {N}_2\hbox {S}\) property, trigger a refinement in the LR B-spline set \({\mathcal {L}}\). We finally update \(\omega \) by removing those boxes of it that have been refined (if any). We repeat the procedure until all the boxes in \(\omega \) have been halved. The scheme of the EG refinement strategy is given in Algorithm 1.

When we recover the \(\hbox {N}_2\hbox {S}\) and grading properties we make sure that the shadow of each box of diameter d in the mesh contains only boxes of diameter sd or smaller, with the scaling value s defined as in Eq. (3.1). We proceed from the boxes with the smallest diameter to those with the largest diameter. The input is the LR mesh \({\mathcal {N}}= ({\mathcal {M}}, \pmb {p}, \mu )\) obtained after the refining step. Let \({\mathcal {E}}\) be the set of boxes of \({\mathcal {M}}\). At first, we set d as the diameter of the smallest boxes in \({\mathcal {M}}\) and \({\mathcal {E}}_d \subseteq {\mathcal {E}}\) as the set of boxes with diameter d. For each of such boxes \(\beta \in {\mathcal {E}}_d\) we check if there is a \(\beta ' \in {\mathcal {S}}\beta \) (the shadow map of \(\beta \)) with \(\text {diam}(\beta ') > sd\). If this is the case, we halve the closest to \(\beta \) of such larger boxes and we update the shadow of \(\beta \). We iterate this procedure until all the boxes in \({\mathcal {S}}\beta \) have diameter at most sd. After that, the next extensions will involve only boxes of diameter sd or larger. Hence, we remove \({\mathcal {E}}_d\) from \({\mathcal {E}}\), we update d as the smallest diameter of the boxes in such new collection. We iterate the procedure until \({\mathcal {E}}\) becomes empty. The \(\hbox {N}_2\hbox {S}\) property restoring step is schematized in Algorithm 2. In Fig. 6 we visually represent the steps of an iteration of the EG refinement on a given LR mesh. We remark that the LR meshes produced by the EG strategy have boundary meshlines of full multiplicity and internal meshlines of multiplicity 1.

Example of EG strategy iteration. The input is the LR mesh pictured in figure (a) and a set of boxes marked for refinement, highlighted with red dots. We collect all the LR B-splines on the mesh whose support intersects the marked boxes. The region given by the union of their supports is colored blue in figure (a). We halve the boxes of largest diameter in their support, in this case all the boxes in the colored region, and we get the LR mesh shown in figure (b). Such LR mesh may have not the \(\hbox {N}_2\hbox {S}\) property. We reinstate it as follows. We consider the smallest boxes on the mesh and we compute the generalized shadow of such region. We consider the Horizontal-major variant of the strategy for this example, therefore such shadow is horizontal, as shown in figure (c). We mark for refinement those boxes in the shadow that are too large as explained in Algorithm 2. These boxes are highlighted with red dots in figure (c). We halve those of them that are closer to the region and we update the shadow. At the end of the process we have the mesh in figure (d). We iterate the procedure over all the boxes from the smaller to the larger. The next boxes considered are those reported in red in figure (e) together with their (vertical) generalized shadow in blue. The boxes marked with a red dot are those that need to be halved. The final LR mesh is reported in figure (f). We refer to the online version of the paper for the colors

In order to prove that such LR meshes have the \(\hbox {N}_2\hbox {S}\) property, we rely on the following result [3, Theorem 11]. Let \(\{{\mathcal {N}}_\ell ^T = ({\mathcal {M}}^\ell , \pmb {p}, \mu )\}_{\ell \in {\mathbb {N}}}\) be a sequence of tensor meshes with \({\mathcal {M}}_0^T\) the boundary of \(\varOmega \) and \({\mathcal {M}}_\ell ^T\) obtained by halving the boxes in \({\mathcal {M}}_{\ell -1}^T\), alternating the directions of such splits. Let \(\varOmega _\ell \subseteq \varOmega \) be a union of boxes in \({\mathcal {M}}_\ell ^T\). Then [3, Theorem 11] states that if an LR mesh \({\mathcal {N}}=({\mathcal {M}},\pmb {p},\mu )\) can be written as \({\mathcal {M}}= \cup _{\ell \le L} {\mathcal {M}}_{\ell }^T|_{\varOmega _\ell }\) and the sequence \(\{\varOmega _\ell \}_{\ell \le L}\) is such that \(\varOmega _{\ell -1}\supseteq {\mathcal {S}}\varOmega _\ell \), then \({\mathcal {N}}\) has the \(\hbox {N}_2\hbox {S}\) property. We now show that the LR meshes produced by the EG strategy satisfy the hypotheses of [3, Theorem 11].

Theorem 3.1

Let \({\mathcal {N}}= ({\mathcal {M}},\pmb {p},\mu )\) be an LR mesh obtained via several iterations of the EG strategy. Then \({\mathcal {N}}\) has the \(\hbox {N}_2\hbox {S}\) property.

Proof

Let d be the minimal diameter over all the boxes of \({\mathcal {M}}\). Let \(\varOmega ^d\subseteq \varOmega \) be the region composed of all the boxes in \({\mathcal {N}}\) of diameter d. Let \(d' = sd\) and \(\varOmega ^{d'}\subseteq \varOmega \) be the region made of boxes of diameter \(d'\) or smaller. In the \(\hbox {N}_2\hbox {S}\) property restoring step of the EG strategy (Algorithm 2) we make sure that only boxes of diameter sd or smaller are in \({\mathcal {S}}\varOmega ^d\). Therefore, \(\varOmega ^{d'} \supseteq {\mathcal {S}}\varOmega ^d\). By iterating this procedure, replacing d with \(d'\) until \(\varOmega ^{d'} = {\mathcal {S}}\varOmega ^d = \varOmega \), we get a sequence \(\{\varOmega ^d\}_d\) for which \(\varOmega ^{d'} \supseteq {\mathcal {S}}\varOmega ^d\). Furthermore, by recalling that the boxes of diameter d are obtained by halving boxes of diamter \(d'\), it is clear that the sequence \(\{\varOmega ^d\}_d\) corresponds to a sequence \(\{\varOmega _\ell \}_{\ell \le L}\) as that considered in [3, Theorem 11] and \({\mathcal {M}}= \cup _{\ell \le L} {\mathcal {M}}_\ell ^T|_{\varOmega _\ell }\). This proves that \({\mathcal {N}}\) has the \(\hbox {N}_2\hbox {S}\) property thanks to [3, Theorem 11]. \(\square \)

In Fig. 7–8 we show iterations of the EG strategy and the adaptivity of it. From LR meshes obtained by performing 14 iterations (7 vertical and 7 horizontal insertions) of the EG strategy localized on some regions, we change completely the curve along which we perform further refinements. All the meshes shown (and many more) have been tested for the \(\hbox {N}_2\hbox {S}\) property to confirm the theoretical result of Theorem 3.1.

Example showing the adaptivity of the EG strategy. From a refinement localized along a diagonal, we perform iterations on the other diagonal to form an “X”, switching the region of refinement. The figure has to be read following the arrows which represent the iterations. The EG strategy guarantees local linear independence of the LR B-splines on each of the LR meshes in the process. The bidegree considered is \(\pmb {p}=(2,2)\)

Example showing the adaptivity of the EG strategy. From a refinement localized along a triangle, we perform iterations on the the circumscribed circle and then on the square in which the circle is inscribed, switching the regions of refinement. The figure has to be read following the arrows which represent the iterations. For a matter of space, we do not show the intermediate steps when moving from the circle to the square to get the final mesh. The EG strategy guarantees local linear independence of the LR B-splines in all the iterations. The bidegree considered is \(\pmb {p}=(2,2)\)

Remark 3.1

We highlight the importance of refining only the closest to \(\beta \) of the boxes in \({\mathcal {S}}\beta \) of diameter larger than sd in Algorithm 2 and updating the shadow. Halving only one box and updating the shadow avoids the presence of extra spurious lines in the mesh at the end of the process, as explained in Fig. 9.

Supporting figure to Remark 3.1. Let \(p_1 =2\). In figure (a) we represent the horizontal generalized shadow of the two right-most boxes. The two boxes on the left in such shadow are too large for the EG strategy. If we proceed as described in Algorithm 2, we halve only the closest to the boxes considered and we update the shadow before performing a further refinement. The result is represented in figure (b). If instead we halve both the large boxes in the shadow shown in figure (a) we have the mesh in figure (c) at the end of the process. As one can see, in figure (c) there is an extra vertical line. This vertical line may make the mesh be not an LR mesh anymore as it happens in figures (d)–(e). Figure (d) is an intermediate step while performing Algorithm 2 in the refining process shown in Figure 8. In particular, in this stage we are checking that the shadow highlighted is composed of boxes of the right size. The two boxes on the left are too large for the EG strategy. If we halve both of them before updating the shadow we obtain the mesh in figure (e) at the end of Algorithm 2. The small vertical line has not traversed any LR B-spline on the mesh in figure (d). Hence, the mesh in figure (e) is not an LR mesh anymore. Instead, if each time we refine only the closest box, we obtain the LR mesh at the center of Figure 8, which is an LR mesh with the \(\hbox {N}_2\hbox {S}\) property

3.3 Grading and spanning properties

In this section we present the further properties of the EG strategy. We first analyze the grading of the mesh and then we identify the space spanned by the related set of LR B-splines. More specifically, we show

-

bounds on the thinning of the boxes throughout the refinement,

-

bounds on the size ratio of adjacent boxes,

-

that the space spanned fills up the ambient space of the spline functions on the LR mesh.

Assume that \({\mathcal {N}}=({\mathcal {M}},\pmb {p},\mu )\) is an LR mesh built using the EG strategy schematized in Algorithm 1. Then the aspect ratio of a box of \({\mathcal {M}}\) is either 1 : 1 or 2 : 1 as rectangular boxes, of aspect ratio 2 : 1, are obtained from square boxes and vice-versa throughout the making of the mesh. Furthermore, we note that, because of the constraints imposed in the \(\hbox {N}_2\hbox {S}\) property restoring step of the strategy, reported in Algorithm 2, a box of size \(c_1\times c_2\) in \({\mathcal {M}}\) can be side by side only with boxes of same size or size double/half in one or both dimensions, i.e., boxes of sizes \(c_1\times c_2, (2^{{\pm } 1}c_1)\times c_2, c_1\times (2^{{\pm } 1}c_2)\) and \(2^{{\pm } 1}(c_1 \times c_2)\). More precisely, along the direction of the generalized shadow map, which is established by the shape of the box, there will only be boxes of the same size or with a scaling factor 2 in one of the two dimension. In the direction orthogonal to the shadow, we may find boxes of same size or of size double/half in both dimensions. These bounds on the box sizes and neighboring boxes avoid the thinning throughout the refinement process and guarantee smoothly grading transitions between finer and coarser regions of the LR meshes produced by the EG strategy. In particular, given two adjacent boxes \(\beta , \beta '\) of \({\mathcal {M}}\), called \(h_\beta \) the square root of the area of \(\beta \), it holds

Inequalities (A1)–(A2) show that the box-partition associated to \({\mathcal {M}}\) satisfies the shape regularity and local quasi uniformity conditions, which are two of the so-called axioms of adaptivity: a set of requirements which theoretically ensure optimal algebraic convergence rate in adaptive FEM and IgA, see [5] and [4, Sections 5–6] for details. In particular, conditions (A1)–(A2) is what is demanded in terms of grading and overall appearance of the mesh used for the discretization.

We now prove another important feature of the EG strategy: the space spanned is the entire spline space. The spline space on a given LR mesh \({\mathcal {N}}=({\mathcal {M}}, \pmb {p},\mu )\), denoted by \({\mathbb {S}}({\mathcal {N}})\), is defined as

In general, all the spaces spanned by generalizations of the B-splines addressing adaptivity, such as LR spline spaces, are just subspaces of the spline space on the underlying mesh. The next result ensures that when we are using LR meshes generated by the EG strategy, the span of the LR B-splines actually fills up the entire spline space.

Theorem 3.2

Let \({\mathcal {N}}= ({\mathcal {M}}, \pmb {p}, \mu )\) be an LR-mesh provided by several iterations of EG strategy and let \({\mathcal {L}}\) be the associated LR B-spline set. Then \(\text {span}\,{\mathcal {L}}= {\mathbb {S}}({\mathcal {N}})\).

Proof

If \({\mathcal {N}}\) is a tensor mesh, the LR B-spline set coincides with the tensor B-spline set and the statement is true by the Curry-Schoenberg Theorem. If instead there are local insertions in \({\mathcal {M}}\), we recall that during the refining steps of the EG strategy that yielded \({\mathcal {M}}\), we have always inserted new lines traversing the support of at least one LR B-spline. This means that each new line along the kth direction traversed at least \(p_k+2\) orthogonal meshlines when has been inserted. In the \(\hbox {N}_2\hbox {S}\) property recovering steps we then have further prolonged some of such lines. By [3, Theorem 12], this “length” of the lines in terms of intersections guarantees that \(\text {span}\,{\mathcal {L}}= {\mathbb {S}}({\mathcal {N}})\). \(\square \)

This spanning property is achieved also by using the HLR strategy [3].

4 Conclusion

We have presented a simple refinement strategy ensuring the local linear independence of the associated LR B-splines. Furthermore, the width of the regions refined at each iteration of the strategy guarantees that the span of the LR B-splines fills up the whole spline space on the LR mesh.

We have called it Effective Grading (EG) strategy as the transition between coarser and finer regions is rather gradual and smooth in the LR meshes produced, with strict bounds on the aspect ratio of the boxes and on the sizes of the neighboring boxes. Such a grading ensures that the requirements on the mesh appearance listed in the axioms of adaptivity [4, 5] are verified. The latter are a set of sufficient conditions on mesh grading, refinement strategy, error estimates and approximant spaces in adaptive numerical methods to theoretically guarantee optimal algebraic convergence rate of the numerical solution to the real solution. The verification of the remaining axioms will be the topic of future research.

References

Beirão da Veiga, L., Buffa, A., Sangalli, G., Vázquez, R.: Mathematical analysis of variational isogeometric methods. Acta Numer. 23, 157–287 (2014). https://doi.org/10.1017/S096249291400004X

Bressan, A.: Some properties of LR-splines. Comput. Aided Geom. Design 30(8), 778–794 (2013). https://doi.org/10.1016/j.cagd.2013.06.004

Bressan, A., Jüttler, B.: A hierarchical construction of LR meshes in 2D. Comput. Aided Geom. Design 37, 9–24 (2015). https://doi.org/10.1016/j.cagd.2015.06.002

Buffa, A., Gantner, G., Giannelli, C., Praetorius, D., Vázquez, R.: Mathematical foundations of adaptive isogeometric analysis. arXiv preprint arXiv:2107.02023 (2021)

Carstensen, C., Feischl, M., Page, M., Praetorius, D.: Axioms of adaptivity. Comput. Math. Appl. 67(6), 1195–1253 (2014). https://doi.org/10.1016/j.camwa.2013.12.003

Da Veiga, L.B.a., Buffa, A., Sangalli, G., Vázquez, R.: Analysis-suitable T-splines of arbitrary degree: definition, linear independence and approximation properties. Math. Models Methods Appl. Sci. 23(11), 1979–2003 (2013). https://doi.org/10.1142/S0218202513500231

de Boor, C.: A Practical Guide to Splines, Applied Mathematical Sciences, vol. 27. Springer-Verlag, New York-Berlin (1978)

Deng, J., Chen, F., Feng, Y.: Dimensions of spline spaces over \(T\)-meshes. J. Comput. Appl. Math. 194(2), 267–283 (2006). https://doi.org/10.1016/j.cam.2005.07.009

Deng, J., Chen, F., Li, X., Hu, C., Tong, W., Yang, Z., Feng, Y.: Polynomial splines over hierarchical T-meshes. Graphical Models 70, 76–86 (2008)

Dokken, T., Lyche, T., Pettersen, K.F.: Polynomial splines over locally refined box-partitions. Comput. Aided Geom. Design 30(3), 331–356 (2013). https://doi.org/10.1016/j.cagd.2012.12.005

Forsey, D.R., Bartels, R.H.: Hierarchical B-spline refinement. ACM Siggraph Computer Graphics 22, 205–212 (1988)

Giannelli, C., Jüttler, B.: Bases and dimensions of bivariate hierarchical tensor-product splines. J. Comput. Appl. Math. 239, 162–178 (2013). https://doi.org/10.1016/j.cam.2012.09.031

Giannelli, C., Jüttler, B., Speleers, H.: THB-splines: the truncated basis for hierarchical splines. Comput. Aided Geom. Design 29(7), 485–498 (2012). https://doi.org/10.1016/j.cagd.2012.03.025

Hofreither, C., Mitter, L., Speleers, H.: Local multigrid solvers for adaptive isogeometric analysis in hierarchical spline spaces. IMA Journal of Numerical Analysis (2021). https://doi.org/10.1093/imanum/drab041

Hughes, T.J.R., Cottrell, J.A., Bazilevs, Y.: Isogeometric analysis: CAD, finite elements, NURBS, exact geometry and mesh refinement. Comput. Methods Appl. Mech. Engrg. 194(39–41), 4135–4195 (2005). https://doi.org/10.1016/j.cma.2004.10.008

Johannessen, K.A., Kvamsdal, T., Dokken, T.: Isogeometric analysis using LR B-splines. Comput. Methods Appl. Mech. Engrg. 269, 471–514 (2014). https://doi.org/10.1016/j.cma.2013.09.014

Lyche, T., Manni, C., Speleers, H.: Foundations of spline theory: B-splines, spline approximation, and hierarchical refinement. In: Splines and PDEs: from approximation theory to numerical linear algebra, Lecture Notes in Math., vol. 2219, pp. 1–76. Springer, Cham (2018)

Manni, C., Speleers, H.: Standard and non-standard CAGD tools for isogeometric analysis: a tutorial. In: Isogeometric analysis: a new paradigm in the numerical approximation of PDEs, Lecture Notes in Math., vol. 2161, pp. 1–69. Springer, [Cham] (2016)

Mokriš, D., Jüttler, B., Giannelli, C.: On the completeness of hierarchical tensor-product \(B\)-splines. J. Comput. Appl. Math. 271, 53–70 (2014). https://doi.org/10.1016/j.cam.2014.04.001

Nochetto, R.H., Veeser, A.: Primer of adaptive finite element methods. In: Multiscale and adaptivity: modeling, numerics and applications, Lecture Notes in Math., vol. 2040, pp. 125–225. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-24079-9

Patrizi, F., Dokken, T.: Linear dependence of bivariate minimal support and locally refined B-splines over LR-meshes. Comput. Aided Geom. Design 77, 101803, 22 (2020). https://doi.org/10.1016/j.cagd.2019.101803

Patrizi, F., Manni, C., Pelosi, F., Speleers, H.: Adaptive refinement with locally linearly independent LR B-splines: theory and applications. Comput. Methods Appl. Mech. Engrg. 369, 113230, 20 (2020). https://doi.org/10.1016/j.cma.2020.113230

Schumaker, L.L.: Spline functions: basic theory, third edn. Cambridge Mathematical Library. Cambridge University Press, Cambridge (2007). https://doi.org/10.1017/CBO9780511618994

Skytt, V., Dokken, T.: Scattered data approximation by LR B-spline surfaces. A study on refinement strategies for efficient approximation. In: Geometric Challenges in Isogeometric Analysis, Springer INdAM Series, vol. 49. Springer, [Cham] (2022)

Acknowledgements

This work was partially supported by the European Council under the Horizon 2020 Project Energy oriented Centre of Excellence for computing applications - EoCoE, Project ID 676629. The author is member of Gruppo Nazionale per il Calcolo Scientifico, Istituto Nazionale di Alta Matematica.

Funding

Open Access funding enabled and organized by Projekt DEAL. Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Tom Lyche.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was partially supported by the European Council under the Horizon 2020 Project Energy oriented Centre of Excellence for computing applications - EoCoE, Project ID 676629. The author is member of Gruppo Nazionale per il Calcolo Scientifico, Istituto Nazionale di Alta Matematica.

Equivalence with the shadow map

Equivalence with the shadow map

In this appendix we show that the generalized shadow map is equivalent to the definition of shadow map, given in [3, Definition 10], when the underlying mesh is a tensor mesh and the set considered is constituted of a collection of boxes. In order to recall the latter, we introduce the separation distance. Given a direction \(k \in \{1,2\}\), let \({\mathcal {N}}=({\mathcal {M}},\pmb {p},\mu )\) be a tensor mesh and \({\mathcal {M}}_{3-k}\) be the subcollection in \({\mathcal {M}}\) of all the meshlines in the \((3-k)\)th direction. Given two points \(\pmb {p},\pmb {q} \in \varOmega \) the separation distance of \(\pmb {p}\) and \(\pmb {q}\) along direction k with respect to the tensor mesh \({\mathcal {N}}\) is defined as

with \(\gamma \) the segment along direction k between \(\pmb {p}\) and \(\pmb {q}\). Given a set \(A\subseteq \varOmega \) composed of boxes of \({\mathcal {M}}\), we define

The definition of shadow map along direction k with respect to the tensor mesh \({\mathcal {N}}\) given in [3] is then

We use the gothic symbol \({\mathfrak {S}}\) to distinguish it from the generalized shadow map, defined in Sect. 3.1. We now show that the two are equivalent. Let \(\beta \) be a box in A. Then, \(\text {sep}_k^{{\mathcal {N}}}(\pmb {p},A) = 0\) for all \(\pmb {p} \in \beta \) and \(\beta \subseteq {\mathfrak {S}}(A)\). Let instead \(\beta \subseteq {\mathcal {S}}A\setminus A\). Then \(\text {sep}_k^{{\mathcal {N}}}(\pmb {p},A) \le p_k+1\) for all \(\pmb {p} \in \beta \) and so \(\beta \subseteq {\mathfrak {S}}A\). Note that the “for all \(\pmb {p} \in \beta \)” is true because A is composed of boxes of \({\mathcal {M}}\). Otherwise, there could be points \(\pmb {p}\) in \(\beta \) that are not part of the shadow \({\mathfrak {S}}A\), see, e.g., [3, Figure 5]. We have proved that \({\mathcal {S}}A \subseteq {\mathfrak {S}}A\). We now show the opposite, that is, \({\mathcal {S}}A \supseteq {\mathfrak {S}}A\). Let \(\pmb {p} \in {\mathfrak {S}}A\). Then \(p_k +1 \ge \inf _{\pmb {q} \in A} \text {sep}_k^{{\mathcal {N}}}(\pmb {p},\pmb {q})\). If \(\pmb {p} \notin A\) then such infimum is reached for some \(\pmb {q} \in \partial A\) and so \(\pmb {p} \in \overline{\pmb {q}_*^1\pmb {q}_*^2}\), with \(\pmb {q}_*^1\) as defined in Eq. (3.2). Therefore \(\pmb {p}\) is contained in a box \(\beta \) of \({\mathcal {M}}\) intersecting \(\overline{\pmb {q}_*^1\pmb {q}_*^2}\) and \(\beta \subseteq {\mathcal {S}}A\). If instead \(\pmb {p} \in A\), there is nothing to prove as A is in \({\mathcal {S}}A\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Patrizi, F. Effective grading refinement for locally linearly independent LR B-splines. Bit Numer Math 62, 1745–1764 (2022). https://doi.org/10.1007/s10543-022-00929-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10543-022-00929-9