Abstract

Many complex applications require the solution of initial-value problems where some components change fast, while others vary slowly. Multirate schemes apply different step sizes to resolve different components of the system, according to their dynamics, in order to achieve increased computational efficiency. The stiff components of the system, fast or slow, are best discretized with implicit base methods in order to ensure numerical stability. To this end, linearly implicit methods are particularly attractive as they solve only linear systems of equations at each step. This paper develops the Multirate GARK-ROS/ROW (MR-GARK-ROS/ROW) framework for linearly-implicit multirate time integration. The order conditions theory considers both exact and approximative Jacobians. The effectiveness of implicit multirate methods depends on the coupling between the slow and fast computations; an array of efficient coupling strategies and the resulting numerical schemes are analyzed. Multirate infinitesimal step linearly-implicit methods, that allow arbitrarily small micro-steps and offer extreme computational flexibility, are constructed. The new unifying framework includes existing multirate Rosenbrock(-W) methods as particular cases, and opens the possibility to develop new classes of highly effective linearly implicit multirate integrators.

Similar content being viewed by others

1 Introduction

Multiphysics applications lead to initial-value problems in additively partitioned form

where the right-hand side \(\mathbf {f}: \mathbb {R}^{d} \rightarrow \mathbb {R}^{d}\) is split into \(\mathrm {N}\) different components based on, for example, stiffness (stiff/non-stiff), nonlinearity (linear/non-linear), dynamical behavior (fast/slow), and evaluation cost (cheap/expensive). Additive partitioning also includes the special case of component partitioning where the solution vector is split into disjoint sets [15].

Multimethods are effective numerical solvers for multiphysics and multiscale applications (1.1) that treat each component with an appropriate time discretization and time step, which are carefully coordinated such that the overall solution has the desired accuracy and stability properties. Examples of widely used multimethods include implicit-explicit [6, 7, 19, 40,41,42] and multirate schemes [4, 12, 24].

This paper focuses on partitioned systems (1.1) where some components are fast varying and have small evaluation costs, while other are slowly varying but their evaluation is expensive. Multirate schemes exploit this different dynamical behavior by applying small step sizes to the fast part and large step sizes to the slow part, while ensuring the order and stability of the overall numerical integration scheme. Significant computational savings are made possible by the less frequent evaluation of the slow expensive components. Multirate schemes have been developed using base methods such as Runge-Kutta [1, 2, 8, 21, 22, 24], Linear Multistep [12, 18, 30], Rosenbrock [13, 14, 35], and extrapolation [9,10,11, 31].

The General-structure Additive Runge-Kutta (GARK) formalism introduced in [33] defines a comprehensive framework for studying a large class of partitioned Runge-Kutta based schemes for solving (1.1). It allows for different stage values with different components of the right hand side. Though in principle equivalent to Additive Runge-Kutta schemes [5], “the advantage of the GARK formulation is that it clarifies the coupling between the various methods, in addition to eliminating zero quadrature weights in the ARK formalism, hence the analysis of special cases” [38]. Examples of partitioned methods developed in the GARK framework include [15, 27, 38]. MR-GARK and MRI-GARK frameworks [15, 16, 25, 26, 29, 34] define general classes of multirate Runge-Kutta methods based on the GARK formalism.

The stiff components of the system (1.1) are best discretized with implicit base methods in order to ensure numerical stability. Linearly implicit methods enjoy the same stability properties as the implicit schemes, but solve only linear systems of equations at each step. Rosenbrock-Wanner (ROS) methods [17, 28] are linearly implicit Runge–Kutta schemes that use the exact Jacobian of the right hand side function in the computational process; Rosenbrock-W methods [36] allow arbitrary approximations of the Jacobian. In a recent paper [32] the authors have generalized the GARK approach to partitioned linearly-implicit schemes based on using exact (GARK-ROS) and inexact (GARK-ROW) Jacobian information.

This paper proposes the Multirate GARK-ROS/ROW (MR-GARK-ROS/ ROW) framework for linearly-implicit multirate time integration. The new elements developed herein are as follows. MR-GARK-ROS/ROW provides a unifying formalism for linearly-implicit multirate schemes of Runge-Kutta type, that includes as particular cases existing methods such as [13, 14, 35]. A general order conditions theory is developed for methods with exact and with inexact Jacobian information. The effectiveness of implicit multirate methods hinges upon the manner in which the slow and fast system computations are coupled; to this end, an array of various efficient coupling approaches is proposed. Two particular coupling structures, which hold considerable promise for practical applications, are studied in detail: compound-first-step, where the macro-step is coupled with the first micro-step only, and step-predictor-corrector, where a macro-step carried out over the entire system is followed by a recalculation of the fast components using small steps. Finally, multirate infinitesimal step (MRI) methods [20, 26, 29, 37] that allow arbitrarily small micro-steps offer extreme computational flexibility, since the fast subsystem can be solved with any discretization method and sequence of step sizes. The work develops general MRI-GARK ROS/ROW schemes, as well as MRI step-predictor-corrector methods; order condition theories are provided for both families.

The paper is organized as follows. Section 2 reviews basic aspects of GARK-ROS/ROW methods. The new MR-GARK ROS/ROW formalism is defined in Sect. 3. Using the partitioned GARK ROS/ROW framework, general order conditions are derived in Sect. 4. A linear stability analysis is performed in Sect. 5. Various slow-fast coupling strategies for an efficient computation are discussed in Sect. 6. Compound-first-step schemes are analyzed in Sect. 7, and step-predictor-corrector (SPC) methods in Sect. 8. Multirate infinitesimal step (MRI-GARK ROS/ROW) schemes are developed in Sect. 9, and multirate infinitesimal step SPC methods in Sect. 10. Conclusions and an outlook of future work are given in Sect. 11.

2 Linearly implicit GARK schemes

2.1 GARK-ROS and GARK-ROW schemes

The class of linearly-implicit GARK-ROS and GARK-ROW schemes was developed in [32] , in analogy to the extension of (explicit) Runge-Kutta schemes to Rosenbrock-Wanner (ROS) and Rosenbrock-W (ROW) schemes. One GARK-ROS/ROW step applied to (1.1) reads:

here \(\varvec{1}_s \in \mathbb {R}^s\) is a vector of ones, \(\mathbf {I}_{s \times s}\in \mathbb {R}^{s \times s}\) is the identity matrix, \(\otimes \) is the Kronecker product, and the following matrix notation is used:

with  and

and

The matrices \(\pmb {\upalpha }^{\{q,m\}}\) are strictly lower triangular and \(\pmb {\upgamma }^{\{q,m\}}\) are lower triangular. Depending on the choice of matrices \(\mathbf {L}^{\{q\}} \in \mathbb {R}^{d\times d}\) one distinguishes several types of methods, as follows:

-

GARK-ROS schemes use the exact Jacobian information, i.e., \(\mathbf {L}^{\{q\}} :=\mathbf {f}_{\mathbf {y}}^{\{q\}}(\mathbf {y}_{n-1})\) are the Jacobians of the component functions evaluated at the current solution;

-

GARK-ROW schemes allow any approximation of the Jacobian, i.e., \(\mathbf {L}^{\{q\}}\) may be arbitrary;

-

In the case of GARK-ROS schemes with time-lagged Jacobians one has \(\mathbf {L}^{\{q\}}=\mathbf {f}_{\mathbf {y}}^{\{q\}}(\mathbf {y}_{n-1}) + \mathcal {O}(h)\).

The scheme (2.1) is characterized by the extended Butcher tableau (with \(\pmb {\upbeta }^{\{q,m\}} :=\pmb {\upalpha }^{\{q,m\}}+\pmb {\upgamma }^{\{q,m\}}\))

Remark 2.1

(GARK-ROS and GARK-ROW scheme structure)

-

Similar to GARK, the scheme (2.1) uses only one function \(\mathbf {f}^{\{q\}}\) evaluation for the increment \(\mathbf {k}^{\{q\}}\), and linear combinations of increments \(\mathbf {k}^{\{m\}}\) both as function arguments and as additive terms.

-

For all \(\pmb {\upgamma }^{\{q,m\}}=0\) (2.1) is an explicit GARK scheme.

-

If \(\pmb {\upgamma }^{\{q,m\}}=0\) for all \(m>q\) then increments can be computed sequentially in the following order: \(k_1^{\{1\}}, \ldots , k_1^{\{\mathrm {N}\}}, k_2^{\{1\}},\ldots , k_{s^{\{\mathrm {N}\}}}^{\{\mathrm {N}\}}\).

Theorem 2.1

(GARK-ROS order conditions [32]) The GARK-ROS order conditions Eq. (2.1) are the same as the Rosenbrock order conditions [17], except that the method coefficients are also labelled according to partition indices. In the order conditions, in each sequence of matrix multiplies, the partition indices are compatible according to matrix multiplication rules.

Let \(\varvec{1}^{\{n\}} \in \mathbb {R}^{s^{\{n\}}}\) be a vector of ones. For brevity of notation we define:

The GARK-ROS order four conditions read:

Theorem 2.2

(GARK-ROW order conditions [32]) The GARK-ROW order conditions Eq. (2.1) are the same as the Rosenbrock-W order conditions [17], except that the method coefficients are also labelled according to partition indices. In the order conditions, in each sequence of matrix multiplies, the partition indices are compatible according to matrix multiplication rules.

The GARK-ROW order four conditions read:

Remark 2.2

(Internal consistency [32]) The order conditions (2.5) and (2.6) simplify considerably for internally consistent GARK-ROS/ROW schemes, for which the vectors (2.4) satisfy:

3 Multirate GARK ROS/ROW methods

We aim at employing the GARK ROS/ROW formalism (2.1) to develop a class of multirate linearly-implicit schemes.

3.1 Multirate GARK-ROS/ROW for additive splitting

Multirate GARK schemes [15] seek to exploit the multiscale behavior given in different dynamics. Consider a two-way additively partitioned system (1.1) of the form

with a slow part  that is computationally expensive and a fast part

that is computationally expensive and a fast part  that is inexpensive to evaluate. Solving the slow expensive component with a large macro-step H, and the fast inexpensive one with \(\mathrm{M}\) micro-steps \(h=H/\mathrm{M}\), allows for reducing the overall computational cost.

that is inexpensive to evaluate. Solving the slow expensive component with a large macro-step H, and the fast inexpensive one with \(\mathrm{M}\) micro-steps \(h=H/\mathrm{M}\), allows for reducing the overall computational cost.

Definition 3.1

(Multirate GARK-ROS/ROW method) One step of a multirate GARK-ROS/ROW method (MR-GARK-ROS/ROW for short) applied to (3.1) computes the solution as follows. The slow component is discretized with a ROS/ROW method  and macro-step H. The fast component uses \(\mathrm{M}\) micro-steps \(h = H/\mathrm{M}\), and at each micro-step \(\lambda \) a (possibly different) ROS/ROW method

and macro-step H. The fast component uses \(\mathrm{M}\) micro-steps \(h = H/\mathrm{M}\), and at each micro-step \(\lambda \) a (possibly different) ROS/ROW method  is applied. The computational process reads:

is applied. The computational process reads:

The matrices \(\mathbf {L}^{\{a\}}\), \(a \in \{{\textsc {s}},{\textsc {f}}\}\), can be chosen as exact Jacobians (ROS schemes) or as arbitrary approximations to the Jacobians (ROW schemes).Footnote 1 Method (3.2) is a GARK ROS/ROW scheme with step H defined by the Butcher tableau (2.3):

and \(\varvec{\upbeta }^{\{a,b\}}\, :=\, \varvec{\upalpha }^{\{a,b\}} + \varvec{\upgamma }^{\{a,b\}}\) for \(a \in \{{\textsc {s}},{\textsc {f}}\}\).

Remark 3.1

(Intermediate micro-step solutions) Define the intermediate solutions at the end of the fast micro-steps as:

From (3.2a) the fast stages are computed as:

and from (3.2c) the next step solution as:

Remark 3.2

(Non-uniform micro-steps) Definition 3.1 can be immediately extended to accommodate nonuniform micro-steps \(h_\lambda \) with \(\sum _{\lambda =1}^\mathrm{M}h_\lambda = H\) by using \(h_\lambda \) in each fast step (3.2a), and scaling columns \(\lambda \) of the Butcher tableaus (3.3a) and (3.3b) by \(h_\lambda /H\) instead of \(1/\mathrm{M}\).

Remark 3.3

(Pure multirate approach) In this paper we focus on “pure” multirate methods where all micro-steps use the same fast base method, i.e.,

Nevertheless, the general structure of Definition 3.1 remains of interest as it allows to build special types of fast-slow couplings.

3.2 Multirate GARK-ROS/ROW for component-wise splitting

Consider a two-way component partitioned system (3.1) of the form

again, with a slow part  that is computationally expensive and a fast part

that is computationally expensive and a fast part  that is inexpensive to evaluate.

that is inexpensive to evaluate.

Definition 3.2

(Multirate GARK-ROS/ROW method for component partitioned systems) One macro-step of a MR-GARK-ROS/ROW method applied to (3.6) with macro-step H and \(\mathrm{M}\) equal micro-steps \(h = H/\mathrm{M}\) reads:

where  and

and  are the notation (2.2a) for

are the notation (2.2a) for  and

and  , respectively. In case of GARK-ROS methods we define

, respectively. In case of GARK-ROS methods we define  , \(a,b \in \{ {\textsc {f}}, {\textsc {s}}\}\), and in case of GARK-ROW methods these are arbitrary approximations of the Jacobian blocks.

, \(a,b \in \{ {\textsc {f}}, {\textsc {s}}\}\), and in case of GARK-ROW methods these are arbitrary approximations of the Jacobian blocks.

4 Order conditions for MR-GARK-ROS/ROW schemes

In the previous section we have seen that MR-GARK-ROS/ROW schemes can be interpreted as partitioned GARK-ROS/ROW schemes. Thus the order conditions of these multirate schemes can be easily derived form the underlying GARK-ROS/ROW schemes derived in [32]. In the following we focus on pure multirate methods (3.5). We consider only order conditions up to order four, as multirate schemes fit better to lower error tolerances and thus lower order schemes. Lower error tolerances are sufficient for many real-world applications, if they are in the range of the respective modeling and measurement errors of model parameters.

For exact Jacobians the order conditions for GARK-ROS schemes are (2.5). For GARK-ROS schemes with time-lagged Jacobian information, in addition to the order conditions (2.5a)–(2.5c), the order conditions

have to be fulfilled for order three. For general approximations of the Jacobians, the order conditions of GARK-ROW schemes are given by (2.6).

4.1 Internal consistency of MR-GARK-ROS/ROW schemes

We consider methods (3.2) of pure multirate type (3.5). The internal consistency conditions (2.7) read:

From here we have that:

In what follows it is convenient to use the following notation for the coupling coefficients averaged across all micro-steps Footnote 2:

with similar notations used for  ,

,  , and

, and  .

.

4.2 Order four conditions for internally consistent MR-GARK-ROS schemes

We consider internally consistent MR-GARK-ROS schemes (4.2). It is immediate that, if the base methods are second order ROS schemes, the MR-GARK-ROS has also second order (2.6b).

We turn our attention to the MR-GARK-ROS order three conditions (2.5c). Assume that each of the base methods is a third order ROS scheme. The remaining order three coupling conditions are as follows:

Remark 4.1

(Order three conditions for time-lagged Jacobian approach) Consider an internally consistent MR-GARK-ROS scheme of order three. The additional order three conditions for time-lagged Jacobians (4.1) reduce to:

The method maintains order three in the case of time-lagged Jacobians if and only if each base method satisfies the time-lagged Jacobian conditions above. It can be shown that equation  is equivalent to the order three MR-GARK-ROS condition

is equivalent to the order three MR-GARK-ROS condition  in case of internal consistency; therefore only one additional condition

in case of internal consistency; therefore only one additional condition  is necessary and sufficient to maintain order three when time-lagged Jacobians are used.

is necessary and sufficient to maintain order three when time-lagged Jacobians are used.

We consider the MR-GARK-ROS order four conditions (2.5d). Assume that each of the base methods is a fourth order ROS scheme. The remaining order four coupling conditions are as follows (with \(\times \) denoting the compontent wise multiplication of two vectors):

4.3 Order three conditions for internally consistent MR-GARK-ROW schemes

We consider internally consistent MR-GARK-ROW schemes (4.2).

Assume that the base methods are second order ROW schemes. The MR-GARK-ROW second order conditions (2.6b) are:

These conditions are automatically satisfied for internally consistent methods.

We consider the MR-GARK-ROW order three conditions (2.6c). Assume that each of the base methods is a third order ROW scheme. There are eight order three coupling conditions, as follows:

Remark 4.2

Order four coupling conditions for MR-GARK-ROW schemes can be derived in an analogous manner from the general order conditions (2.6d).

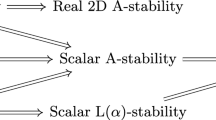

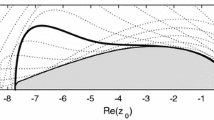

5 Linear stability

Consider the scalar test problem

Application of the MR-GARK-ROS method (3.2) to (5.1) leads to the same stability equation as the application of a Multirate GARK scheme. Using the notation (3.3) and defining

we obtain:

which is the same stability function as for a GARK scheme with tableau of coefficients \((\mathbf {b},{\mathbf {B}})\). The following definition extends immediately from GARK to MR-GARK-ROS schemes.

Definition 5.1

(Stiff accuracy) Let \(\delta _{s} \in \mathbb {R}^s\) be a vector with the last entry equal to one, and all other entries equal to zero. The MR-GARK-ROS method (3.2) is called stiffly accurate if

For a stiffly accurate MR-GARK-ROS/ROW scheme the stability function (5.2) becomes:

If  is nonsingular, then \(R(Z) \rightarrow 0\) when

is nonsingular, then \(R(Z) \rightarrow 0\) when  .

.

For component partitioned systems we consider the following model problem [21]:

Let  , and

, and  . Application of the MGARK-ROS method (2.7), regarded as a partitioned GARK-ROS scheme according to the Butcher tableau (3.3), advances over one step H via the recurrence:

. Application of the MGARK-ROS method (2.7), regarded as a partitioned GARK-ROS scheme according to the Butcher tableau (3.3), advances over one step H via the recurrence:

with the stability matrix:

One immediately sees that for one-sided coupled problems with  or

or  the stability of the base schemes guarantees the stability of the multirate schemes.

the stability of the base schemes guarantees the stability of the multirate schemes.

6 Coupling the fast and slow systems in a computationally-efficient manner

In traditional Rosenbrock methods the coefficient matrix \({\varvec{\alpha }}\) is strictly lower triangular, and the matrix \({\varvec{\gamma }}\) lower triangular with equal diagonal entries. Due to this structure the stages \(\mathbf {k}_i\) are evaluated sequentially in a decoupled manner, each stage computation is only implicit in the current stage.

Multirate GARK ROS/ROW schemes compute both slow and fast stage vectors. We call a stage computation “decoupled” if it is implicit in only the current stage  or

or  . We call computations “coupled” if one (or more) slow stages, and one (or more) fast stages are computed together by solving a single large system of linear equations. Computational efficiency of multirate methods relies on evaluating less frequently the expensive slow part. Consequently, an efficient multirate method keeps the coupling at a minimum.

. We call computations “coupled” if one (or more) slow stages, and one (or more) fast stages are computed together by solving a single large system of linear equations. Computational efficiency of multirate methods relies on evaluating less frequently the expensive slow part. Consequently, an efficient multirate method keeps the coupling at a minimum.

In this paper we construct multirate GARK ROS/ROW schemes where the base methods are Rosenbrock(-W) schemes with matrices  ,

,  strictly lower triangular, and matrices

strictly lower triangular, and matrices  and

and  lower triangular. From the Butcher tableau (3.3) compute the coupling structure matrix

lower triangular. From the Butcher tableau (3.3) compute the coupling structure matrix

where \(| \cdots |\) takes element-wise absolute values, and \(\times \) is the element-wise product. We make the following observations:

-

In order to compute stages sequentially, in a completely decoupled manner, it must hold that \(\mathbf {S} = \varvec{0}\).

-

The non-zero entries in this matrix \(\mathbf {S} \) correspond to slow and fast stages that are computed together, in a coupled manner. Specifically, if element in row \((\lambda ,i)\) and column j is non-zero then stages

and

and  are computed by solving a joint linear system.

are computed by solving a joint linear system. -

Note that internal consistency Eq. (2.7) for \(\mathbf {g}\) requires that at least one slow and one fast stage are computed together in a coupled manner.

Example 6.1

(Second order, two-rate method) Consider the following example using \(\mathrm{M}=2\) and two-stage base methods:

We conveniently choose  for all j and \(\lambda \) such as to satisfy the first internal consistency conditions. The coupling structure matrix (6.1) is:

for all j and \(\lambda \) such as to satisfy the first internal consistency conditions. The coupling structure matrix (6.1) is:

6.1 IMEX approach

If one chooses  and

and  then the fast component is integrated with a Runge-Kutta method; this method is explicit if

then the fast component is integrated with a Runge-Kutta method; this method is explicit if  are strictly lower triangular matrices. For a decoupled computation one needs to select the coupling coefficients

are strictly lower triangular matrices. For a decoupled computation one needs to select the coupling coefficients  ,

,  , and

, and  such that the matrix \(\mathbf {S} = \varvec{0}\). Using notation (3.4), the fast stages are computed as:

such that the matrix \(\mathbf {S} = \varvec{0}\). Using notation (3.4), the fast stages are computed as:

The corresponding Butcher tableau (3.3) is:

6.2 Compound-first-step approach: coupling the macro-step with the first micro-step

We consider base methods with the same number of stages  . Moreover, we set the coupling coefficients

. Moreover, we set the coupling coefficients  for \(\lambda = 2, \dots ,\mathrm{M}\).

for \(\lambda = 2, \dots ,\mathrm{M}\).

The resulting Butcher tableau (3.3) is:

The coefficient matrices  ,

,  ,

,  ,

,  are chosen strictly lower triangular. The coefficient matrices

are chosen strictly lower triangular. The coefficient matrices  ,

,  ,

,  , and

, and  are chosen lower triangular, with equal diagonal entries:

are chosen lower triangular, with equal diagonal entries:  for \(i=1,\dots ,s\).

for \(i=1,\dots ,s\).

In the structure matrix (6.1) the entries \(\mathbf {S}_{i,i} \ne 0\) for  . This means that each slow stage

. This means that each slow stage  and the corresponding fast stage of the first micro-step

and the corresponding fast stage of the first micro-step  are computed in a coupled manner, by solving a full coupled system of linear equations. Since all diagonal entries are equal to each other, only one LU decomposition of the compound matrix is necessary for computing all stage vectors

are computed in a coupled manner, by solving a full coupled system of linear equations. Since all diagonal entries are equal to each other, only one LU decomposition of the compound matrix is necessary for computing all stage vectors  and

and  for \(i=1,\dots ,s\).

for \(i=1,\dots ,s\).

Since all slow stages are known after the first micro-step,  and

and  can be full matrices for \(\lambda =2,\ldots ,\mathrm{M}\). For all remaining micro steps a single additional LU decomposition is necessary if

can be full matrices for \(\lambda =2,\ldots ,\mathrm{M}\). For all remaining micro steps a single additional LU decomposition is necessary if  is constant for all

is constant for all  and \(\lambda =2,\ldots ,\mathrm{M}\).

and \(\lambda =2,\ldots ,\mathrm{M}\).

A simple choice of coefficients for compound-first-step coupling is  ,

,  ,

,  ,

,  .

.

Example 6.2

Consider the scheme of Example 6.1 with the following coefficients:

The coupling matrix (6.2) reads:

which indicates that  and

and  are computed together, and so are

are computed together, and so are  and

and  .

.

Remark 6.1

The multirate ROW schemes introduced by Bartel and Günther [3] fall into the class of multirate GARK-ROW schemes. They consider the case of time-lagged Jacobians (which differ by a term of magnitude \({\mathcal {O}}(H)\) from the exact Jacobian). In addition, the same order p within the micro steps is demanded.

6.3 Coupling only the first fast and the first slow stage computations

The smallest amount of coupling that allows the construction of internally consistent implicit schemes is a lighter version of the strategy discussed in Sect. 6.2, where only the first fast stage  and the first slow stage

and the first slow stage  are computed together. It is possible to select coefficients such that all subsequent stage computations are implicit in either fast or slow stages, and are computed in a decoupled manner.

are computed together. It is possible to select coefficients such that all subsequent stage computations are implicit in either fast or slow stages, and are computed in a decoupled manner.

Example 6.3

Consider the scheme from Example 6.1 with the following coefficients:

The coupling matrix (6.2) has single non-zero element,  , corresponding to first computing stages

, corresponding to first computing stages  and

and  in a coupled manner. Next,

in a coupled manner. Next,  and

and  are computed in a decoupled manner, since they only depend on the known slow stage

are computed in a decoupled manner, since they only depend on the known slow stage  . After this,

. After this,  is computed in a decoupled manner as it does not depend on the (yet unknown) last fast stage

is computed in a decoupled manner as it does not depend on the (yet unknown) last fast stage  . Finally,

. Finally,  is evaluated using both slow stages.

is evaluated using both slow stages.

Remark 6.2

Note that the coupling approaches in Sects. 6.2 and 6.3 consider only the influence of the fast scale within the first micro step onto the slow variables. If the evolution of the fast part changes drastically after the first micro-step, this would only affect the slow part in the next macro step. However, the order conditions show that the overall scheme has order p at the macro step grid points. In addition, as a rule of thumb, the fast part has typically only a weak influence on the slow part, as otherwise the slow part might incorporate some fast dynamics, and require to be treated as fast, too. The compound-step approach idea traces back to Kvaerno and Rentrop [22] in 1999, and to our knowledge, no time-lag effect has been observed since then in any multirate scheme based on the compound-step approach.

6.4 Fully decoupled approach

In the completely decoupled approach each stage follows a regular Rosenbrock computation, implicit in either the fast or the slow stages, but not in both at the same time. In this case the second internal consistency conditions  do not hold unless this first stage is explicit. Consequently, the coupling order conditions for the entire method become more complex, but such methods are possible to construct.

do not hold unless this first stage is explicit. Consequently, the coupling order conditions for the entire method become more complex, but such methods are possible to construct.

Example 6.4

Consider the scheme from Example 6.1 with the following coefficients:

Note the complementary sparsity structure of the off-diagonal coupling blocks. The first fast stage is that of a classical Rosenbrock method:

Similarly, the first slow stage is computed in a decoupled manner:

and the decoupled computations continue alternating fast and slow stages.

6.5 Step-predictor-corrector approach

This approach starts with a “predictor” step where the slow Rosenbrock method is applied with step size H to the entire system, in a classical fashion. The slow components are sufficiently accurate, but the fast components are not; for this reason we keep only the computed  , but discard

, but discard  . The “corrector” re-computes

. The “corrector” re-computes  for all sub-steps \(\lambda \), with the small steps sizes h, and uses these values to construct the final solution. The Butcher tableaus (3.3) read:

for all sub-steps \(\lambda \), with the small steps sizes h, and uses these values to construct the final solution. The Butcher tableaus (3.3) read:

Step-predictor-corrector methods will be discussed in detail in Sect. 8.

7 Compound-first-step MR-GARK-ROS/ROW schemes

Consider a telescopic compound-first-step method (6.4) where the fast and the slow base methods coincide:  ,

,  ,

,  , and

, and  . Remember that compound-first-step methods are characterized by the relation

. Remember that compound-first-step methods are characterized by the relation  for \(\lambda >1\), see (6.4).

for \(\lambda >1\), see (6.4).

A natural choice for the fast/slow coupling coefficients is:

where we allow the additional coefficient matrices  , and

, and  for more flexibility. Here

for more flexibility. Here  is assumed to be strictly lower triangular, and

is assumed to be strictly lower triangular, and  lower triangular. For internal consistency (4.2) we ask that

lower triangular. For internal consistency (4.2) we ask that

Overall, this choice adds \(\mathrm{M}\,s\,(2s-3)\) degrees of freedom to coupling coefficients (7.1a).

A natural choice of slow/fast coupling for a compound first step is:

with  strictly lower triangular and

strictly lower triangular and  lower triangular, where for internal consistency we impose

lower triangular, where for internal consistency we impose

This choice adds \(s^2-2s\) degrees of freedom to the coupling coefficients (7.1c).

The particular choice of coupling coefficients (7.1) implies that:

where we have used the abbreviation

Assuming that the base ROS scheme has order three, the remaining MR-GARK-ROS order three conditions (4.5) read:

Assuming that the base ROW scheme has order three, the remaining MR-GARK-ROW order three conditions (4.7) are:

Assuming that the base ROS scheme has order three, the remaining MR-GARK-ROS order four conditions (4.6) are:

Remark 7.1

Order four coupling conditions for compound-first-step MR-GARK-ROW schemes can be derived in an analogous manner from the general order conditions (2.6d).

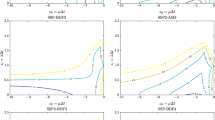

Using the framework of compound-first-step schemes we derive embedded MR-GARK-ROS methods of order (2)3 (main method of order three, with embedded scheme of order two) which fulfill the time-lagged Jacobian order conditions.

Example 7.1

(Implicit-implicit case) An embedded implicit-implicit MR-GARK-ROS scheme of order (2)3, which automatically fulfills the time-lagged Jacobian order conditions (4.1) due Remark 4.1, with \(\gamma \) and \(\beta _{2,1}\) as free parameters, is given by

Example 7.2

(Implicit-explicit case) For the implicit-explicit case we select  for \(\lambda =2,\ldots ,\mathrm{M}\), i.e., the base scheme for the last \(\mathrm{M}-1\) steps of the fast part is explicit. To obtain a fully-implicit compound step we set

for \(\lambda =2,\ldots ,\mathrm{M}\), i.e., the base scheme for the last \(\mathrm{M}-1\) steps of the fast part is explicit. To obtain a fully-implicit compound step we set  . We choose \({\varvec{\alpha }}, {\varvec{\beta }}, \texttt {b}, \hat{\texttt {b}}\) and the other coupling coefficients as in Example 7.1 above, but replace \(\hat{\alpha }\) with:

. We choose \({\varvec{\alpha }}, {\varvec{\beta }}, \texttt {b}, \hat{\texttt {b}}\) and the other coupling coefficients as in Example 7.1 above, but replace \(\hat{\alpha }\) with:

.

8 Step-predictor-corrector methods

We consider step predictor-corrector (SPC) methods described in Sect. 6.5. The computations associated with the Butcher tableau (6.5) proceed as follows. First, the “predictor” applies the slow base scheme  over a macro-step H to solve the entire coupled system:

over a macro-step H to solve the entire coupled system:

which gives the following values of the slow stages:

Next, the “corrector” applies the fast base scheme  over \(\mathrm{M}\) micro-steps of size h. The fast stages

over \(\mathrm{M}\) micro-steps of size h. The fast stages  are computed using formula (3.2a), and the next step solution \(\mathbf {y}_{n}\) using formula (3.2c). Note that the coupling matrices

are computed using formula (3.2a), and the next step solution \(\mathbf {y}_{n}\) using formula (3.2c). Note that the coupling matrices  and

and  do not need to be triangular, and can have any fill-in structure, since all

do not need to be triangular, and can have any fill-in structure, since all  are known before the start of the micro-steps.

are known before the start of the micro-steps.

8.1 Order conditions

8.1.1 Internal consistency

The internal consistency conditions (2.7) read:

The accuracy analysis in this section assumes internally consistent SPC-MR-GARK-ROS/ROW methods (8.2), where the slow and fast base methods are ROS/ROW schemes of the corresponding order. Therefore the analysis below focuses only on the remaining coupling conditions.

8.1.2 Second order conditions

For internally consistent SPC-MR-GARK-ROS/ROW methods the coupling conditions (2.5b) and (2.6b), respectively, are automatically satisfied.

8.1.3 Third order conditions

For SPC-MR-GARK-ROS methods there is a single remaining order three coupling condition (2.5c), as follows:

The third order conditions for time-lagged Jacobians (4.1) are automatically satisfied due to internal consistency.

For SPC-MR-GARK-ROW methods the order three coupling conditions (2.6c) are:

8.1.4 Fourth order conditions

For SPC-MR-GARK-ROS methods (8.2) there are four remaining order four coupling ROS conditions (2.5d), as follows:

Remark 8.1

Order four coupling conditions for SPC-MR-GARK-ROW schemes can be derived in an analogous manner from the general order conditions (2.6d).

8.2 Telescopic SPC methods

Consider a telescopic SPC method (6.5) where the fast and the slow base methods coincide, and are both equal to \((\texttt {b},{\varvec{\alpha }},{\varvec{\gamma }})\); the vectors (2.4) are \(\texttt {c}= {\varvec{\alpha }}\,\varvec{1}\), \(\texttt {g}= {\varvec{\gamma }}\,\varvec{1}\), and \(\texttt {e}= \texttt {c}+ \texttt {g}\).

The internal consistency Eq. (8.2) read for \(\lambda = 1, \dots , \mathrm{M}\):

We consider the following natural choice for the coupling coefficients:

where, in order to ensure internal consistency, we ask that

The second order coupling conditions for SPC-MR-GARK-ROS/ROW schemes are automatically satisfied due to internal consistency.

Using notation (7.2) and the coupling coefficients (8.6a) we have:

8.2.1 Third order conditions

The third order SPC-MR-GARK-ROS coupling condition (8.3) reads:

The third order SPC-MR-GARK-ROW coupling conditions (8.4) are:

The following choice of rank-one coupling matrix ensures third order:

where impose the internal consistency (8.6b), the coupling condition (8.8), and the second condition (8.9) by the following equations, respectively:

If the base method is a third order ROS scheme, then \(\mathbf {v}_1^T = 2\,\texttt {b}^T\, {\varvec{\beta }}\) offers a solution of these equations.

8.2.2 Fourth order conditions

The SPC-MR-GARK-ROS order four coupling conditions (8.5b) and (8.5d) are automatically satisfied by the choice of coupling coefficients (8.6). The remaining order four coupling conditions (8.5a) and (8.5c) are:

where \(\mathbf {f}(\lambda ) \, :=\, \mathbf {F}(\lambda )\,\texttt {e},\) \(\mathbf {f}(\Sigma _0) \, :=\, \mathbf {F}(\Sigma _0)\,\texttt {e},\) \(\mathbf {f}(\Sigma _1) \, :=\, \mathbf {F}(\Sigma _1)\,\texttt {e}\).

8.2.3 Polynomial coupling matrix

We build the coupling matrix \(\mathbf {F}(\lambda )\) as a polynomial with matrix coefficients:

Let \(K=2\). For internal consistency (8.6b) we require

The third order ROS coupling conditions (8.8) are:

and the third order ROW coupling conditions (8.9) read:

The fourth order ROS coupling conditions (8.10) are:

9 Multirate infinitesimal step methods

We now consider MR-GARK-ROS/ROW methods where the micro-steps can be arbitrarily small. We call these methods multirate “infinitesimal step”, or MRI-GARK-ROS/ROW for short; they offer extreme flexibility since they allow to solve the fast subsystem with any sufficiently accurate discretization method and sequence of step sizes.

Definition 9.1

(MRI-GARK-ROS/ROW methods) Consider a base slow ROS/ROW scheme  with non-decreasing abscissae

with non-decreasing abscissae  , and denote:

, and denote:

A multirate infinitesimal step GARK ROS/ROW method advances the solution of the fast-slow partitioned system (3.1) via the following computational process:

A modified fast ODE (9.1b) is integrated between consecutive stages of the base slow method. The slow components influence the fast dynamics via the time dependent coefficients  . The fast solutions impact the computation of the slow stages (9.1c) via the coupling coefficients

. The fast solutions impact the computation of the slow stages (9.1c) via the coupling coefficients  ,

,  . The next step solution (9.1d) combines the fast solution \(\widetilde{\mathbf {y}}_{n}\) and the slow solution increment given by the stages

. The next step solution (9.1d) combines the fast solution \(\widetilde{\mathbf {y}}_{n}\) and the slow solution increment given by the stages  .

.

To analyze the scheme (9.1) we start by discretizing each modified fast ODE (9.1b) with an explicit Runge-Kutta scheme  of arbitrary accuracy:

of arbitrary accuracy:

The fast discrete stages (9.2), together with the slow stages (9.1c) and the next step solution (9.1d), form an IMEX GARK ROS/ROW method (3.2) with  , as described in Sect. 6.1. The slow/fast coupling coefficients have the following particular structure:

, as described in Sect. 6.1. The slow/fast coupling coefficients have the following particular structure:

The Butcher tableau (3.3) of the resulting IMEX GARK ROS/ROW scheme is:

9.1 Internal consistency

The internal consistency conditions (4.1a) and (4.1d) are automatically satisfied. Conditions (4.1b) and (4.1c) read:

The following choice obeys the internal consistency condition:

We have that:

9.2 Third order conditions

The MRI-GARK-ROS coupling conditions (4.5) read:

The order three MRI-GARK-ROW coupling conditions (4.7) are:

Remark 9.1

Order four coupling conditions for infinitesimal step MR-GARK-ROS/ROW schemes can be derived in an analogous manner from the general order conditions (2.5d), (2.6d).

10 Infinitesimal step SPC methods

Consider a step-predictor-corrector method (6.5) with the slow base method  , and perform the compound step (8.1). Then proceed with the fast integration, but instead of applying the discrete formula 3.2a), solve the following modified fast ODE:

, and perform the compound step (8.1). Then proceed with the fast integration, but instead of applying the discrete formula 3.2a), solve the following modified fast ODE:

The next step solution, computed using (3.2c), is:

Remark 10.1

(Error estimation) Assume that the base method has an embedded scheme  for error estimation. Using

for error estimation. Using  in (10.2) gives an error estimate in the slow component, which can be used for macro-step error control. The fast components are solved with infinite accuracy, and the same fast integration (10.1) is used for both the main and the embedded solutions.

in (10.2) gives an error estimate in the slow component, which can be used for macro-step error control. The fast components are solved with infinite accuracy, and the same fast integration (10.1) is used for both the main and the embedded solutions.

Solving (10.1) with an arbitrarily accurate fast GARK-ROS/ROW method (2.1) with coefficients  gives the discrete solution:

gives the discrete solution:

The Butcher tableau (3.3) of the coupled scheme (8.1) and (10.3) reads:

The internal consistency conditions (2.7) are:

Without loss of generality we choose  , i.e., we solve the modified fast ODE with an arbitrarily accurate Runge-Kutta scheme. The corresponding continuous coupling coefficients are chosen accordingly as

, i.e., we solve the modified fast ODE with an arbitrarily accurate Runge-Kutta scheme. The corresponding continuous coupling coefficients are chosen accordingly as  .

.

Define the  coupling coefficients as polynomials in time:

coupling coefficients as polynomials in time:

The internal consistency conditions (10.5) are satisfied with:

10.1 SPC-MRI-GARK-ROS methods

The order three ROS condition (2.5c) reads:

The order four ROS conditions (2.5d) are:

An order four linear-in-time coupling can be constructed as follows:

Example 10.1

(Multirate Rodas) We build an SPC MRI version of Hairer and Wanner’s Rodas method [17, Chapter VI.4] by constructing a coupling of the form (10.6). The Rodas method has six stages, and the coupling (10.6) is defined by twelve coefficients (the entries of the six-dimensional vectors \(\varvec{\mu }_0\) and \(\varvec{\mu }_1\)). There are six ROS order four coupling conditions (10.6), and we also impose the order 3 ROW conditions (10.8). The coefficients depend on four free parameters:

The free parameters can be used to improve stability. By setting \(\theta _3 = \theta _4 = 0\) the last slow Rodas stage is not used for coupling.

10.2 SPC-MRI-GARK-ROW methods

The order three ROW conditions (2.6c) are:

and a third order coupling can be constructed as follows:

Example 10.2

Consider the third order, stiffly accurate ROW method ROS34PW2 of Rang and Angermann [23, Table 4.3]. The SPC MRI coupling coefficients (10.9) are defined in terms of one free parameter \(\theta \) that can be used to improve stability:

Remark 10.2

Order four coupling conditions for infinitesimal SPC multirate GARK-ROW schemes can be derived in an analogous manner from the general order conditions (2.6d).

11 Discussion

This paper proposes a general framework for linearly-implicit multirate time integration. Multirate GARK-ROS and GARK-ROW schemes, which make use of the exact or approximative Jacobian, respectively, are developed and analyzed. Order conditions up to order four are derived, with a focus on internally consistent schemes. We discuss several slow-fast coupling structures that lead to efficient computational processes. Such couplings include compound-first step schemes, step-predictor-corrector methods, and multirate infinitesimal step approaches. Coefficient sets for new specific methods are given to illustrate these coupling strategies.

The new MR(I)-GARK-ROS/ROW framework includes all existing multirate Rosenbrock(-W) methods as particular cases, and opens the possibility to develop new, high order, highly stable linearly implicit multirate schemes for a myriad of applications. The development and optimization of practical MR(I)-GARK-ROS/ROW methods, their efficient implementation [39], and extensive numerical testing in real applications will be presented in a forthcoming publication.

Notes

Note that in the GARK-ROS case, all Jacobians are evaluated at the current macro grid point \(t_{n-1}\) for two reasons: first of all, one saves computational and overhead costs. Secondly, we only aim at achieving the order of the method in the macro grid points, and not in addition in the micro grid points, as we regard our scheme as a partitioned scheme on the macro step size. The same point of view was taken in [3].

Here we have used the greek uppercase letter \(\Sigma \) to denote that we are summing over all greek lowercase letters \(\lambda \). The index k in \(\Sigma _k\) denotes that we are using the k-th polynomial of \(\lambda -1\) in the sums.

References

Andrus, J.F.: Numerical solution for ordinary differential equations separated into subsystems. SIAM J. Numer. Anal. 16, 605–611 (1979)

Andrus, J.F.: Stability of a multirate method for numerical integration of ODEs. Comput. Math. Appl. 25, 3–14 (1993)

Bartel, A., Günther, M.: A multirate W-method for electrical networks in state-space formulation. J. Comput. Appl. Math. 147, 411–425 (2002)

Bremicker-Trübelhorn, S., Ortleb, S.: On multirate GARK schemes with adaptive micro-step sizes for fluid-structure interaction: order conditions and preservation of the geometric conservation law. Aerospace 4, 8 (2017)

Kennedy, C.A., Carpenter, M.H.: Additive Runge-Kutta schemes for convection-diffusion-reaction equations. Appl. Numer. Math. 44, 139–181 (2003)

Cardone, A., Jackiewicz, Z., Sandu, A., Zhang, H.: Extrapolated IMEX Runge-Kutta methods. Math. Model. Anal. 19, 18–43 (2014)

Cardone, A., Jackiewicz, Z., Sandu, A., Zhang, H.: Extrapolation-based implicit-explicit general linear methods. Numer. Algorithms 65, 377–399 (2014)

Constantinescu, E.M., Sandu, A.: Multirate timestepping methods for hyperbolic conservation laws. J. Sci. Comput. 33, 239–278 (2007)

Constantinescu, E.M., Sandu, A.: On extrapolated multirate methods. In: Progress in Industrial Mathematics at ECMI 2008, Hans-Georg, B., Frank, H., Avner, F., Arvind, G., Helmut, N., William, R.P., Torgeir, R., Fadil, S., Anna-Karin, T., Vincenzo, C., Robert, M., Helmut, N., Otmar, S., Alistair, D.F., John N., Hilary, O., and Eddie, W. (eds.) vol. 15 of Mathematics in Industry, Springer Berlin Heidelberg, pp. 341–347 (2010)

Constantinescu, E.M., Sandu, A.: Extrapolated multirate methods for differential equations with multiple time scales. J. Sci. Comput. 56, 28–44 (2013)

Engstler, C., Lubich, C.: Multirate extrapolation methods for differential equations with different time scales. Computing 58, 173–185 (1997)

Gear, C.W., Wells, D.R.: Multirate linear multistep methods. BIT 24, 484–502 (1984)

Günther, M., Kværnø, A., Rentrop, P.: Multirate partitioned Runge-Kutta methods. BIT Numer. Math. 41, 504–514 (2001)

Günther, M., Rentrop, P.: Multirate ROW-methods and latency of electric circuits. Appl. Numer. Math. 13, 83–102 (1993)

Günther, M., Sandu, A.: Multirate generalized additive Runge-Kutta methods. Numer. Math. 133, 497–524 (2016)

Hachtel, C., Bartel, A., Günther, M., Sandu, A.: Multirate implicit Euler schemes for a class of differential–algebraic equations of index-1. J. Comput. Appl. Math. 387, 112499 (2021)

Hairer, E., Wanner, G.: Solving ordinary differential equations II: stiff and differential-algebraic problems. In: Springer Series in Computational Mathematics, 2nd edn. Springer, Berlin, Heidelberg (1996)

Kato, T., Kataoka, T.: Circuit analysis by a new multirate method. Electr. Eng. Jpn 126, 55–62 (1999)

Kennedy, C.A., Carpenter, M.H.: Higher-order additive Runge-Kutta schemes for ordinary differential equations. Appl. Numer. Math. 136, 183–205 (2019)

Knoth, O., Wolke, R.: Implicit-explicit Runge-Kutta methods for computing atmospheric reactive flows. Appl. Numer. Math. 28, 327–341 (1998)

Kværnø, A.: Stability of multirate Runge-Kutta schemes. Int. J. Diff. Equ. Appl. 1, 97–105 (2000)

Kværnø, A., Rentrop, P.: Low order multirate Runge-Kutta methods in electric circuit simulation. (1999)

Rang, Joachim, Angermann, L.: New Rosenbrock W-methods of order 3 for partial differential algebraic equations of index 1. BIT Numer. Math. 45, 761–787 (2005)

Rice, J.R.: Split Runge-Kutta methods for simultaneous equations. J. Res. Natl. Inst. Stand. Technol. 60, (1960)

Roberts, S., Loffeld, J., Sarshar, A., Woodward, C.S., Sandu, A.: Implicit multirate gark methods. J. Sci. Comput. 87, 4 (2021)

Roberts, S., Sarshar, A., Sandu, A.: Coupled multirate infinitesimal GARK methods for stiff differential equations with multiple time scales. SIAM J. Sci. Comput. 42, A1609–A1638 (2020)

Romer, U., Narayanamurthi, M., Sandu, A.: Goal-oriented a posteriori estimation of numerical errors in the solution of multiphysics systems. Submitted (2020)

Rosenbrock, H.H.: Some general implicit processes for the numerical solution of differential equations. Comput. J. 5, 329–330 (1963)

Sandu, A.: A class of multirate infinitesimal GARK methods. SIAM J. Numer. Anal. 57, 2300–2327 (2019)

Sandu, A., Constantinescu, E.M.: Multirate Adams methods for hyperbolic equations. J. Sci. Comput. 38, 229–249 (2009)

Sandu, A., Constantinescu, E.M.: Multirate time discretizations for hyperbolic partial differential equations. In: International Conference of Numerical Analysis and Applied Mathematics (ICNAAM 2009), vol. 1168-1 of American Institute of Physics (AIP) Conference Proceedings, pp. 1411–1414 (2009)

Sandu, A., Guenther, M., Roberts, S.B.: Linearly implicit GARK schemes. Appl. Numer. Math. 161, 286–310 (2021)

Sandu, A., Günther, M.: A generalized-structure approach to additive Runge-Kutta methods. SIAM J. Numer. Anal. 53, 17–42 (2015)

Sarshar, A., Roberts, S., Sandu, A.: Design of high-order decoupled multirate GARK schemes. SIAM J. Sci. Comput. 41, A816–A847 (2019)

Savcenco, V.: Construction of high-order multirate Rosenbrock methods for stiff ODEs, Tech. Report MAS-E0716, Centrum voor Wiskunde en Informatica (2007)

Steihaug, T., Wolfbrandt, A.: An attempt to avoid exact Jacobian and nonlinear equations in the numerical solution of stiff differential equations. Math. Comput. 33, 521 (1979)

Wensch, J., Knoth, O., Galant, A.: Multirate infinitesimal step methods for atmospheric flow simulation. BIT Numer. Math. 49, 449–473 (2009)

Zanna, A.: Discrete variational methods and symplectic generalized additive Runge–Kutta methods. (2020)

Zhang, H., Sandu, A.: FATODE: a library for forward, adjoint and tangent linear integration of stiff systems. SIAM J. Sci. Comput. 36, C504–C523 (2014)

Zhang, H., Sandu, A., Blaise, S.: Partitioned and implicit-explicit general linear methods for ordinary differential equations. J. Sci. Comput. 61, 119–144 (2014)

Zhang, H., Sandu, A., Blaise, S.: High order implicit-explicit general linear methods with optimized stability regions. SIAM J. Sci. Comput. 38, A1430–A1453 (2016)

Zharovsky, E., Sandu, A., Zhang, H.: A class of IMEX two-step Runge-Kutta methods. SIAM J. Numer. Anal. 53, 321–341 (2015)

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Christian Lubich.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The work of Sandu was supported by awards NSF CCF–1613905, NSF ACI–1709727, NSF CDS&E-MSS–1953113, DOE ASCR DE–SC0021313, and by the Computational Science Laboratory at Virginia Tech. The work of Günther was supported by the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie Grant Agreement No. 765374, ROMSOC.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Günther, M., Sandu, A. Multirate linearly-implicit GARK schemes. Bit Numer Math 62, 869–901 (2022). https://doi.org/10.1007/s10543-021-00898-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10543-021-00898-5

Keywords

- Multirate integration

- Generalized Additive Runge-Kutta (GARK) schemes

- Linear implicitness

- GARK ROS/ROW methods

- Stability

and

and  are computed by solving a joint linear system.

are computed by solving a joint linear system.