Abstract

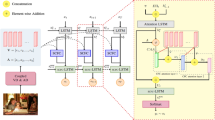

The image captioning task is among the most important tasks in computer vision. Most existing methods mine more useful contextual information from image features. Similarly, to mine more contextual information, this paper proposes a visual contextual relationship augmented transformer (VRAT) method for improving the correctness of image description statements. In VRAT, visual contextual features are enhanced by using a pre-trained visual contextual relationship augmented module (VRAM). In VRAM, we classify images into three categories: globe, object, and grid, and use encoders of CLIP and ResNext to encode images and text to supplement the original image descriptions with visual and textual features. Finally, a similarity retrieval model is constructed to match global features, object features, and grid features for contextual relationships. During model training, our model supplements the original image captioning model with global, object, and grid visual features and textual features. In addition, to improve the quality of the attention-focused image features, we propose an attention augmented module (AAM) that adds a compensated attention module to the original multi-head attention, which allows a large number of image features in the model to focus more on important information and filter out some unimportant attention information. To alleviate the imbalance of positive and negative samples during training, we propose a multi-label focal loss in the model and combine it with the original cross-entropy loss function to improve the performance of the model. Experiments on the MSCOCO image description benchmark dataset show that the proposed method can perform well and outperform many existing state-of-the-art methods. The improvement in the CIDEr score and BLEU-1 score over the baseline model was 7.7 and 1.5, respectively.

Graphical abstract

Similar content being viewed by others

Data Availability

The raw/processed data cannot be shared temporarily as the data also forms part of an ongoing study.

References

Anderson P, He X, Buehler C, Teney D, Johnson M, Gould S, Zhang L (2018) Bottom-up and top-down attention for image captioning and visual question answering. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6077–6086

Li G, Zhu L, Liu P, Yang Y (2019) Entangled transformer for image captioning. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 8928–8937

Ji J, Luo Y, Sun X, Chen F, Luo G, Wu Y, Gao Y, Ji R (2021) Improving image captioning by leveraging intra-and inter-layer global representation in transformer network. In: Proceedings of the AAAI conference on artificial intelligence, pp 1655–1663

Cornia M, Stefanini M, Baraldi L, Cucchiara R (2020) Meshed-memory transformer for image captioning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10578–10587

Luo Y, Ji J, Sun X, Cao L, Wu Y, Huang F, Lin C-W, Ji R (2021) Dual-level collaborative transformer for image captioning. In: Proceedings of the AAAI conference on artificial intelligence, pp 2286–2293

Luo J, Li Y, Pan Y, Yao T, Feng J, Chao H, Mei T (2023) Semantic-conditional diffusion networks for image captioning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 23359–23368

Ma Y, Ji J, Sun X, Zhou Y, Ji R (2023) Towards local visual modeling for image captioning. Pattern Recognit 138:109420

Wang C, Gu X (2023) Learning joint relationship attention network for image captioning. Expert Syst Appl 211:118474

Ren S, He K, Girshick R, Sun J (2015) Faster r-cnn: Towards real-time object detection with region proposal networks. In: Advances in neural information processing systems, pp 91–99

Wei J, Li Z, Zhu J, Ma H (2023) Enhance understanding and reasoning ability for image captioning. Appl Intell 53(3):2706–2722

Radford A, Kim JW, Hallacy C, Ramesh A, Goh G, Agarwal S, Sastry G, Askell A, Mishkin P, Clark J, et al (2021) Learning transferable visual models from natural language supervision. In: Proceedings of the international conference on machine learning, pp 8748–8763. PMLR

Xie S, Girshick R, Dollár P, Tu Z, He K (2017) Aggregated residual transformations for deep neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1492–1500

Vinyals O, Toshev A, Bengio S, Erhan D (2015) Show and tell: A neural image caption generator. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3156–3164

Huang L, Wang W, Chen J, Wei X-Y (2019) Attention on attention for image captioning. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 4634–4643

Li B, Yao Y, Tan J, Zhang G, Yu F, Lu J, Luo Y (2022) Equalized focal loss for dense long-tailed object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6990–6999

Mao J, Xu W, Yang Y, Wang J, Yuille AL (2015) Deep captioning with multimodal recurrent neural networks (m-rnn). In: Proceedings of the 3rd international conference on learning representations

Wei H, Li Z, Zhang C, Ma H (2020) The synergy of double attention: Combine sentence-level and word-level attention for image captioning. Comput Vis Image Understand 201:103068

Karpathy A, Fei-Fei L (2015) Deep visual-semantic alignments for generating image descriptions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3128–3137

Xu K, Ba J, Kiros R, Cho K, Courville A, Salakhudinov R, Zemel R, Bengio Y (2015) Show, attend and tell: Neural image caption generation with visual attention. In: Proceedings of the international conference on machine learning, pp 2048–2057. PMLR

You Q, Jin H, Wang Z, Fang C, Luo J (2016) Image captioning with semantic attention. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4651–4659

Liu C, Mao J, Sha F, Yuille A (2017) Attention correctness in neural image captioning. In: Proceedings of the AAAI conference on artificial intelligence, pp 4176–4182

Lu J, Xiong C, Parikh D, Socher R (2017) Knowing when to look: Adaptive attention via a visual sentinel for image captioning. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 375–383

Li L, Tang S, Deng L, Zhang Y, Tian Q (2017) Image caption with global-local attention. In: Proceedings of the AAAI conference on artificial intelligence, pp 4133–4139

Ranzato M, Chopra S, Auli M, Zaremba W (2016) Sequence level training with recurrent neural networks. In: Proceedings of the 4th international conference on learning representations

Ren Z, Wang X, Zhang N, Lv X, Li L-J (2017) Deep reinforcement learning-based image captioning with embedding reward. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 290–298

Rennie SJ, Marcheret E, Mroueh Y, Ross J, Goel V (2017) Self-critical sequence training for image captioning. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 7008–7024

Qin Y, Du J, Zhang Y, Lu H (2019) Look back and predict forward in image captioning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8367–8375

Wei H, Li Z, Huang F, Zhang C, Ma H, Shi Z (2021) Integrating scene semantic knowledge into image captioning. ACM Trans Multimed Comput Commun Appl (TOMM) 17(2):1–22

Huang F, Li Z, Chen S, Zhang C, Ma H (2020) Image captioning with internal and external knowledge. In: Proceedings of the 29th ACM international conference on information & knowledge management, pp 535–544

Xian T, Li Z, Tang Z, Ma H (2022) Adaptive path selection for dynamic image captioning. Advances in IEEE transactions on circuits and systems for video technology 5762–5775

Pan Y, Yao T, Li Y, Mei T (2020) X-linear attention networks for image captioning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10971–10980

Li J, Li D, Xiong C, Hoi S (2022) Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In: Proceedings of the international conference on machine learning, pp 12888–12900

Kenton JDM-WC, Toutanova LK (2019) Bert: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of naacL-HLT, vol 1, pp 2

Kuo C-W, Kira Z (2022) Beyond a pre-trained object detector: Cross-modal textual and visual context for image captioning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 17969–17979

Yang X, Tang K, Zhang H, Cai J (2019) Auto-encoding scene graphs for image captioning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10685–10694

Hendricks LA, Venugopalan S, Rohrbach M, Mooney R, Saenko K, Darrell T (2016) Deep compositional captioning: Describing novel object categories without paired training data. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–10

Yao T, Pan Y, Li Y, Mei T (2017) Incorporating copying mechanism in image captioning for learning novel objects. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6580–6588

Li Y, Yao T, Pan Y, Chao H, Mei T (2019) Pointing novel objects in image captioning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 12497–12506

Zhou Y, Sun Y, Honavar V (2019) Improving image captioning by leveraging knowledge graphs. In: Proceedings of the 2019 IEEE winter conference on applications of computer vision, pp. 283–293. IEEE

Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, Uszkoreit J, Houlsby N (2021) An image is worth 16x16 words: Transformers for image recognition at scale. In: Proceedings of the 9th international conference on learning representations

Pennington J, Socher R, Manning CD (2014) Glove: Global vectors for word representation. In: Proceedings of the 2014 conference on empirical methods in natural language processing, pp 1532–1543

Mikolov T, Chen K, Corrado G, Dean J (2013) Efficient estimation of word representations in vector space. In: Proceedings of the 1st international conference on learning representations

Krishna R, Zhu Y, Groth O, Johnson J, Hata K, Kravitz J, Chen S, Kalantidis Y, Li L-J, Shamma DA et al (2017) Visual genome: Connecting language and vision using crowdsourced dense image annotations. Int J Comput Vis 123:32–73

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7132–7141

Wang Q, Wu B, Zhu P, Li P, Zuo W, Hu Q (2020) Eca-net: Efficient channel attention for deep convolutional neural networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 11534–11542

Sutskever I, Vinyals O, Le QV (2014) Sequence to sequence learning with neural networks. Advances in neural information processing systems 27

Lin T-Y, Maire M, Belongie S, Hays J, Perona P, Ramanan D, Dollár P, Zitnick CL (2014) Microsoft coco: Common objects in context. In: Computer vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13, pp 740–755. Springer

Karpathy A, Fei-Fei L (2015) Deep visual-semantic alignments for generating image descriptions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3128–3137

Papineni K, Roukos S, Ward T, Zhu W-J (2002) Bleu: a method for automatic evaluation of machine translation. In: Proceedings of the 40th annual meeting of the association for computational linguistics, pp 311–318

Vedantam R, Lawrence Zitnick C, Parikh D (2015) Cider: Consensus-based image description evaluation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4566–4575

Kingma DP, Ba J (2015) Adam: A method for stochastic optimization. In: Proceedings of the 3rd international conference on learning representations

Li X, Yin X, Li C, Zhang P, Hu X, Zhang L, Wang L, Hu H, Dong L, Wei F et al (2020) Oscar: Object-semantics aligned pre-training for vision-language tasks. In: Proceedings of the European conference on computer vision, pp 121–137

Zhang P, Li X, Hu X, Yang J, Zhang L, Wang L, Choi Y, Gao J (2021) Vinvl: Revisiting visual representations in vision-language models. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 5579–5588

Herdade S, Kappeler A, Boakye K, Soares J (2019) Image captioning: Transforming objects into words. In: Advances in neural information processing systems, pp 11135–11145

Yao T, Pan Y, Li Y, Mei T (2018) Exploring visual relationship for image captioning. In: Proceedings of the European conference on computer vision, pp 684–699

Guo L, Liu J, Zhu X, Yao P, Lu S, Lu H (2020) Normalized and geometry-aware self-attention network for image captioning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10327–10336

Mao Y, Chen L, Jiang Z, Zhang D, Zhang Z, Shao J, Xiao J (2022) Rethinking the reference-based distinctive image captioning. In: Proceedings of the 30th ACM international conference on multimedia, pp 4374–4384

Yao T, Pan Y, Li Y, Mei T (2019) Hierarchy parsing for image captioning. In: Proceedings of the IEEE international conference on computer vision, pp 2621–2629

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 62276073, 61966004), the Guangxi Natural Science Foundation (No. 2019GXNSFDA245018), the Innovation Project of Guangxi Graduate Education (No. YCSW2023141), the Guangxi “Bagui Scholar” Teams for Innovation and Research Project, and the Guangxi Collaborative Innovation Center of Multi-source Information Integration and Intelligent Processing.

Author information

Authors and Affiliations

Contributions

Qiang Su, Junbo Hu and Zhixin Li contributed to the research conception and design of the paper, analyzed the data, and writing the paper.

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare there is no conflicts of interest regarding the publication of this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Su, Q., Hu, J. & Li, Z. Visual contextual relationship augmented transformer for image captioning. Appl Intell 54, 4794–4813 (2024). https://doi.org/10.1007/s10489-024-05416-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-024-05416-y