Abstract

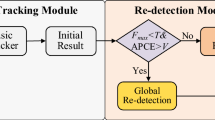

In long-term visual tracking, target occlusion and out-of-view motion are common causes of target loss. A general solution is to add a re-detection module to a short-term tracking algorithm. However, existing re-detection methods suffer from limited accuracy, high computational cost, and severe error accumulation, all of which degrade long-term tracking ability. This paper proposes a flexible and accurate global re-detection module that improves the long-term tracking performance of the algorithm while also speeding up re-detection. First, the proposed method uses three templates for global sampling to improve re-detection accuracy. Then, Gumbel-Softmax is introduced into the re-detection module for accurate sampling, so that fewer target candidate boxes are output, which reduces the amount of computation. Finally, a color feature is added to assist cosine similarity in locating the final target position more accurately. Four tracking algorithms (STMTrack, KeepTrack, SuperDiMP, and DiMP) are selected as baselines. Experimental results on five datasets (UAV123, UAV20L, LaSOT, VOT2018-LT, and VOT2020-LT) show that adding the re-detection module effectively improves the long-term tracking ability of these algorithms. In particular, on UAV20L, the accuracy and success rate of the improved STMTrack increase by 15.6% and 11.6%, respectively.
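The last two steps of the pipeline summarized above (Gumbel-Softmax sampling to keep only a few candidates, then cosine similarity assisted by a color cue to pick the final position) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the temperature `tau`, the fusion weight `alpha`, and the use of histogram intersection as the color measure are all assumptions made for illustration, and the three-template global sampling step is not modeled.

```python
import math
import random


def gumbel_softmax(logits, tau=1.0, rng=random):
    """Draw one relaxed categorical sample: softmax((logits + g) / tau)."""
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise via inverse transform: g = -log(-log(u)).
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        noisy.append((logit - math.log(-math.log(u))) / tau)
    peak = max(noisy)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def select_candidates(logits, k, tau=0.5, rng=random):
    """Keep the indices of the k candidates with the largest sampled weights."""
    weights = gumbel_softmax(logits, tau, rng)
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return order[:k]


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0.0 else 0.0


def histogram_intersection(h1, h2):
    """Overlap of two normalized color histograms, in [0, 1] (assumed color cue)."""
    return sum(min(x, y) for x, y in zip(h1, h2))


def locate_target(template_feat, template_hist, cand_feats, cand_hists,
                  kept, alpha=0.7):
    """Return the kept candidate index with the best fused score.

    alpha is a hypothetical weight between appearance and color similarity.
    """
    def score(i):
        appearance = cosine_similarity(template_feat, cand_feats[i])
        color = histogram_intersection(template_hist, cand_hists[i])
        return alpha * appearance + (1.0 - alpha) * color

    return max(kept, key=score)


if __name__ == "__main__":
    rng = random.Random(0)
    candidate_logits = [2.0, 0.1, 1.5, -0.3, 0.8]  # toy response scores
    kept = select_candidates(candidate_logits, k=3, rng=rng)

    # Toy features: candidate 2 matches the template best in both cues.
    feats = [[0.2, 0.9], [0.9, 0.1], [1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    hists = [[0.9, 0.1], [0.4, 0.6], [0.5, 0.5], [0.1, 0.9], [0.6, 0.4]]
    best = locate_target([1.0, 0.0], [0.5, 0.5], feats, hists, kept)
    print("kept candidates:", kept, "selected:", best)
```

Because the candidates are sampled rather than taken by a plain top-k over the raw scores, a lower-scored box can occasionally survive the pruning step, while the fused similarity is only evaluated on the few boxes that remain.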

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

This work is supported by the National Natural Science Foundation of China under grant no. 62072370.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Hou, Z., Ma, J., Yu, W. et al. Multi-template global re-detection based on Gumbel-Softmax in long-term visual tracking. Appl Intell 53, 20874–20890 (2023). https://doi.org/10.1007/s10489-023-04584-7

DOI: https://doi.org/10.1007/s10489-023-04584-7