Abstract

Employee turnover is one of the most important issues in human resource management, a field that combines soft and hard skills, and this makes it difficult for managers to make decisions. To support better decisions, this article identifies the factors affecting employee turnover using feature selection approaches, namely the Recursive Feature Elimination algorithm and Mutual Information, and meta-heuristic algorithms, namely the Gray Wolf Optimizer and the Genetic Algorithm. Multi-Criteria Decision-Making techniques are the third approach used in this article to identify the factors affecting employee turnover: our expert used the Best-Worst Method to evaluate each of the variables. To check the performance of each method and to identify the factors with the most significant effect on employee turnover, the selected factors are used in several machine learning algorithms, and their accuracy in predicting employee turnover is compared. The three approaches were applied to the human resources dataset of a company, and the results show that the factors identified by the Mutual Information algorithm give better results in predicting employee turnover. The results also confirm that managers need a decision support tool, because the likelihood of error in their decisions is high. This approach can serve as such a tool, helping managers and organizations gain a correct insight into why their employees leave and adopt policies to retain them and make the best use of them.


1 Introduction

Human resources constitute the most complex social system that any organization has to manage, and one that is capable of developing itself. Managing them combines decision-making and planning. That is why decisions about allocating people, hiring, turnover, labor analysis, talent analysis, rewards, and human resource optimization are recognized as some of the most challenging issues managers face in achieving the desired performance. Human resource management is a complex process that involves subjective and unpredictable factors and measurements [33]. Managing this process well not only increases employee efficiency but also controls the organization's costs, which in turn increases the organization's productivity.

On the other hand, human resources are a source of value creation and of the organization's strategic policies, and the long-term success and competitive advantage of organizations depend on them. The human resources of any organization are significant and valuable talents that are very difficult to replace, especially in organizations that rely on knowledge workers. Maintaining and developing qualified employees is therefore very important, and organizations are obliged to retain their human resources because hiring, training, and socializing new people is costly and time-consuming. The experience and skills that employees gain in an organization are fundamental, hidden investments for that organization. In other words, losing scarce, valuable, and inimitable resources such as human resources amounts to a loss of capital.

Employee turnover is often the result of poor hiring decisions and poor management, and it directly affects the organization's strategic plans. In particular, poor management and gaps in managerial decision-making can increase the employee turnover rate [9]. According to Zhu et al. [38], many companies have had to adjust, improve, and upgrade their management systems to reduce their turnover rate, and private companies and governments spend billions of dollars annually to manage this problem. Maintaining and optimizing expensive and scarce resources such as human resources is therefore one of managers' most important concerns, and it requires more advanced and innovative tools to improve decisions. This is why identifying the factors affecting employee turnover is so valuable: it can lead to corrective measures that prevent turnover and help managers make better decisions.

Therefore, to address this research gap and improve managers' decisions, more advanced approaches are needed to determine which features have the most significant effect on employee turnover, how employee turnover can be prevented, and how well managers can identify the factors influencing it. To this end, this study applies three different approaches to the IBM dataset obtained from Kaggle and identifies the most effective factors on employee turnover with each of them. The approaches are then compared by using the factors each one selects in machine learning algorithms that predict employee turnover. These three approaches are Feature Selection algorithms in machine learning, Meta-heuristic algorithms and Multi-Criteria Decision-Making techniques.

With the increasing progress of science and technology and the use of artificial intelligence in data analysis, data-driven methods can play a significant role in solving managers' problems and increasing organizations' profitability. The best companies compete by analyzing their people and the abilities and talents of their employees, and by trying to maximize the performance, participation, and retention of top talent [16]. Data mining is one practical approach for extracting useful information from large amounts of data; it has a wide range of real-world applications and can be carried out with machine learning [15]. One of the most significant steps before designing machine learning models is Feature Selection, which is very important for producing an optimal model.

Feature Selection means selecting the variables in a dataset that have the most influence on the target variable. This step is very effective before building predictive models with machine learning algorithms, because every machine learning algorithm generates its output from input data. The input data consist of a set of features, usually arranged as columns, that affect the target variable. Meta-heuristic algorithms are another approach to finding the optimal features. Many real-world feature selection problems for machine learning models are large and complex, and reaching a provably optimal solution for them is very time-consuming. Meta-heuristic algorithms make the search space smaller. Although they do not guarantee finding the exact optimum, they can approach the optimal solution to an acceptable degree.
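To make the size of this search space concrete: an exhaustive wrapper method would have to score every non-empty subset of the candidate features, and there are 2^n − 1 of them. The snippet below is only an arithmetic illustration; the feature counts are arbitrary illustrative values, not settings from this study.

```python
def n_subsets(n_features: int) -> int:
    """Number of non-empty subsets of n_features candidate features (2**n - 1)."""
    return 2 ** n_features - 1

# Illustrative feature counts (assumed values, not from the study).
for n in (10, 20, 30):
    print(n, n_subsets(n))
# 10 1023
# 20 1048575
# 30 1073741823
```

With about 30 features, more than a billion subsets would each need a model fit, which is why heuristic search is attractive here.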

Human resource management is always influenced by managers' decisions, and poor decisions can impose large costs on the organization and increase employee turnover. For this reason, in addition to feature selection approaches in machine learning and Meta-heuristic algorithms, this study also uses the opinions of managers (decision-makers) to identify the factors affecting employee turnover, by means of the Best-Worst Method (BWM), one of the Multi-Criteria Decision-Making (MCDM) techniques. Multi-Criteria Decision-Making techniques are popular tools among managers for choosing the best options: decision-makers can use these mathematical tools to evaluate and rank potential options in complex situations [4]. The steps of this approach are determining the decision criteria, selecting the best and worst criteria, prioritizing all criteria, comparing the criteria with the best and worst criteria, and calculating the optimal weight of each criterion by solving a mathematical programming model.

This study presents a new combined approach to identifying the factors affecting employee turnover using feature selection algorithms in machine learning, Meta-heuristic algorithms, and Multi-Criteria Decision-Making techniques. In doing so, we compare the results of these approaches with each other and identify the most important factors for employee turnover. The results of these methods are also used in several machine learning algorithms, namely Logistic Regression, Decision Tree, Random Forest, Support Vector Machine, Gradient Boosting, and Adaptive Boosting, to predict employee turnover.

With all this in mind, human resources analysis must be done in a way that facilitates complex modeling and experiments to understand cause and effect, and its results should be usable by managers for decision-making. Accordingly, identifying the most influential factors on employee turnover can help predict the organization's future turnover rate and lead to more accurate models. Using feature selection, Meta-heuristic algorithms, and Multi-Criteria Decision-Making techniques, which are very practical tools for managers, this article seeks to identify the factors influencing employee turnover. To check the performance of the proposed models, several machine learning algorithms are then used to predict employee turnover, so that the features selected by each method can be compared through the accuracy these algorithms achieve. The identified factors can serve as a decision support tool that helps managers make the best decisions for the strategic planning of their workforce and take the corrective measures needed to reduce turnover, which in turn increases the productivity of organizations.

The rest of this article is organized as follows. Section 2 reviews the literature in two parts: the data mining approach in human resource problems, and Multi-Criteria Decision-Making techniques in human resource problems. Section 3 presents the problem description and the data description. Section 4 presents the methodology. Section 5 is dedicated to performance evaluation. Section 6 presents the results, and Section 7 provides the discussion. Finally, Section 8 concludes the article.

2 Literature review

In this section, we review the literature on the use of the data mining approach and Multi-Criteria Decision-Making techniques in human resource management.

2.1 Data mining approach in human resource problems

Over the years, human resource management has undergone significant changes, and topics such as data mining [10] and artificial intelligence [19] have become very popular. The drive to improve human resource management is likely to become even more widespread, and the use of data analysis in the field will keep growing. Esmaieeli Sikaroudi, RouzbehGhousi, and Sikaroudi [6] identified the factors affecting employee turnover in order to predict which employees are likely to leave and to use the results to reduce the organization's obvious and hidden costs. By analyzing the organization's historical data, they also found that the recruitment strategy should be improved. In the same period, other researchers used linear and multivariate regression to analyze data such as organizational financial performance, employee turnover rate, employee age, organizational performance, and employee participation rate [22], with the aim of examining the impact of employee turnover rates on organizational performance.

Zhu et al. [38] used time-series data to predict the employee turnover rate, improving the ability to predict employee turnover without considering the impact of economic indicators. Y. Zhao, Hryniewicki, Cheng, Fu, and Zhu [35] predicted and evaluated employee turnover by applying machine learning algorithms such as Decision Tree, Random Forest, and Gradient Boosting to human resource datasets. In 2019, other researchers examined data such as employee age, organizational role, and job nature to find ways to influence employee decision-making and performance in marketing, finance, human resources, and research and development [26]. Other researchers used standardized test data on the relocation of schoolteachers in New York to determine the effect of teacher relocation on student performance, and showed that organizational performance was negatively related to subsequent employee turnover [27].

W. Zhao, Pu, and Jiang [34] identified team member errors as one of the problems in human resource allocation and, to solve it, analyzed characteristics of resources from the perspectives of demographics and business process analysis. In the same year, Lyons and Bandura [13] used statistical analysis to examine the factors affecting employee turnover. Their results show that multiple motivations or triggers encourage an employee to leave an organization, and that these are much more complex than issues of job satisfaction, rewards, or an unfavorable boss. Nagpal and Mishra [18] analyzed factors such as recruitment, training, and performance management to improve decision-makers' ability to make informed decisions about human resources. Given the variety of factors affecting employee turnover, the lack of comprehensive data, and the availability of machine learning models, X. Wang and Zhi [28] proposed an analytical framework based on machine learning to predict employee turnover. Recently, researchers investigated the effect of the strength of COVID-19 on the turnover intention of hotel employees, taking into account perceived operational performance and job insecurity [30].

2.2 Multi-criteria decision-making techniques and human resource problems

Human resource management and related issues involve soft and hard constraints that make them more complicated. Multi-criteria decision-making techniques, which are practical tools for managers, therefore have many applications in this field. One such problem is personnel selection. In 2016, researchers in China sought to use human resources to promote the sustainable development of society and the economy; to evaluate and classify overseas talents in China under an intuitionistic relations environment, they used the BWM method [29].

Using BWM and TOPSIS, Gupta [7] identified important actions and practices of Green Human Resource Management (GHRM) and evaluated its performance with a three-phase methodology. Because soft (human-resource-related) dimensions affect Green Supply Chain Management (GSCM), Kumar, Mangla, Luthra, and Ishizaka [11] used BWM and the Decision-Making Trial and Evaluation Laboratory (DEMATEL) to minimize the negative environmental effect of the value chain. Chou, Yen, Dang, and Sun [3] used FAHP and the fuzzy technique for order of preference by similarity to ideal solution (FTOPSIS) to evaluate the performance of human resources in science and technology (HRST) in Southeast Asian countries. Their results show that Singapore, South Korea, and Taiwan have similarly desirable levels of HRST performance and perform better than the other Southeast Asian countries. Soba, Ersoy, Tarakcioğlu Altınay, Erkan, and Şik [25] used multi-criteria decision-making techniques such as the gray relational analysis (GRA) method and the PROMETHEE grading and ranking method for personnel selection. Esangbedo, Bai, Mirjalili, and Wang [5] evaluated the human resource information systems of different vendors using two new hybrid Multi-Criteria Decision-Making techniques that take ordinal data as inputs.

Many studies have used inferential statistics to find relationships between variables in human resource management, and Multi-Criteria Decision-Making techniques have been used in various fields. However, no article in human resource management has used Meta-heuristic and feature selection algorithms, which are very useful and accurate for discovering patterns and relationships between variables. Likewise, the use of Multi-Criteria Decision-Making techniques to determine the importance of variables is not seen in the literature. Since human resources involve many qualitative issues, these methods can also be very practical for solving its problems. Moreover, searching deeply for relationships between variables with Meta-heuristic and machine learning approaches can lead to correct use and modeling. To evaluate the resulting models, we then use machine learning algorithms to predict employee turnover and check how practical the above results are. Although many researchers have applied data mining and Multi-Criteria Decision-Making techniques to various human resource topics, combining and comparing these approaches remains a research gap that this study tries to cover.

3 Problem description and data description

From the organization's point of view, employee turnover takes two forms: voluntary turnover and involuntary turnover. Involuntary turnover occurs when the employee wants to stay in the organization but the organization ends the employment, in various forms such as retirement. Voluntary turnover occurs when the organization wants to keep the employee, especially its talented and knowledgeable workers, but the employee wants to leave [1]. Both kinds of turnover cost the organization dearly, but voluntary exits are a constant concern for managers. One of the best things an organization can do is retain its valuable employees. Regardless of the economic situation, companies are always at risk of losing their best employees to competitors. A tightening labor market and economic growth have created competition among companies to attract labor, so organizations are in fierce competition for talent. This competition makes it difficult for any organization to retain a talented employee, which increases employee turnover rates.

When talented employees leave their jobs, the company loses knowledge and skills that it acquired with difficulty and often at great expense: the expertise of these talented and efficient employees, which underpinned the company's success, leaves with them. Reducing employee turnover does not mean keeping every employee indefinitely; it means keeping qualified employees as long as their performance is in line with the organization's goals. Although some voluntary turnover is inevitable, it can be minimized by identifying the factors affecting employee turnover and then applying the right management approaches. For this purpose, this study uses Mutual Information, Recursive Feature Elimination, the Best-Worst Method, the Genetic Algorithm, and the Gray Wolf Optimizer to identify the factors affecting employee turnover. We then use the variables suggested by each of these algorithms in several machine learning algorithms and assess the validity of each model by the accuracy those algorithms achieve in predicting employee turnover.

Many factors can have a direct impact on employee turnover. We therefore used the IBM dataset obtained from Kaggle to determine the impact of important parameters such as years since the last promotion, work-life balance, percentage salary increase, years of service, overtime, and number of trainings on employee turnover. The IBM dataset includes 1470 employees and 38 features; of these employees, 237 have left their jobs. During data cleaning, every feature that took the same value for all employees was removed, leaving 1470 employees with 31 characteristics. The features used in the analysis fall into three types: continuous variables, discrete ordinal variables, and nominal categorical variables such as gender. The target variable is binary and divides employees into two classes: those who left the organization and those who did not. Figure 1 shows the number of employees in each class.

The features we used are Age, employee turnover, Business Travel, Daily Rate, Department, Distance From Home, Education, Education Field, Employee Number, Environment Satisfaction, Gender, Hourly Rate, Job Involvement, Job Level, Job Role, Job Satisfaction, Marital Status, Monthly Income, Monthly Rate, Number of Companies Worked, Over18, Overtime, Percent Salary Hike, Performance Rating, Relationship Satisfaction, Standard Hours, Stock Option Level, Total Working Years, Training Times Last Year, Work Life Balance, Years At Company, Years In Current Role, Years Since Last Promotion, and Years With Current Manager. Some features, such as Over18 and Standard Hours, were excluded from the analysis because they had no variance.
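The cleaning rule above (dropping features with no variance) can be sketched in a few lines. This is an illustration, not the authors' preprocessing code: records are assumed to be plain dictionaries, the column names imitate the Kaggle file's style, and the values are invented. With pandas, the same filter is commonly written as `df.loc[:, df.nunique() > 1]`.

```python
def drop_constant_features(rows):
    """Remove features whose value is identical for every record.

    rows: list of dicts mapping feature name -> value (one dict per employee).
    Returns (filtered_rows, dropped_feature_names).
    """
    if not rows:
        return [], []
    names = list(rows[0].keys())
    # A feature has no variance if it takes a single distinct value.
    dropped = [name for name in names if len({row[name] for row in rows}) == 1]
    kept = [name for name in names if name not in dropped]
    filtered = [{name: row[name] for name in kept} for row in rows]
    return filtered, dropped


# Toy records with invented values.
employees = [
    {"Age": 41, "Over18": "Y", "StandardHours": 80, "OverTime": "Yes", "Attrition": "Yes"},
    {"Age": 49, "Over18": "Y", "StandardHours": 80, "OverTime": "No", "Attrition": "No"},
    {"Age": 37, "Over18": "Y", "StandardHours": 80, "OverTime": "Yes", "Attrition": "Yes"},
]
cleaned, dropped = drop_constant_features(employees)
print(dropped)  # ['Over18', 'StandardHours']
```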

4 Methodology

In this article, Meta-heuristic algorithms, Feature Selection algorithms, and Multi-Criteria Decision-Making techniques, which are very practical tools for managers, are used to identify the factors affecting employee turnover. These methods are Mutual Information, Recursive Feature Elimination, the Best-Worst Method, the Genetic Algorithm, and the Gray Wolf Optimizer. Each approach identifies a set of features as the main factors affecting employee turnover. To check the performance and validity of these methods, their results are used in several machine learning algorithms: Logistic Regression, Decision Tree, Random Forest, Gradient Boosting, Support Vector Machine, and Adaptive Boosting.

Classification is one of the most widely used techniques in supervised learning. It is a classic data mining approach to data analysis based on machine learning. Classification relies on a training set, from which the system learns to divide the data into the right groups with the least error. The training set contains data whose categories are known; each pattern or category has a label, and data with the same target label form a group. The goal is to learn a function that maps input patterns (feature vectors) to their corresponding labels. Finally, to make the results of Mutual Information, Recursive Feature Elimination, the Best-Worst Method, the Genetic Algorithm, and the Gray Wolf Optimizer more practical, we use classification algorithms to predict employee turnover from the features each method selects, so that the results can be examined. The higher the accuracy of a prediction model, the stronger the evidence that the variables proposed by the corresponding algorithm influence employee turnover. The machine learning algorithms are compared using the F1-score. Figure 2 shows the steps and the application of these methods.
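The text does not state why the F1-score is used instead of plain accuracy; one plausible reason is class imbalance. The sketch below uses only the class counts reported for the dataset (237 leavers out of 1470) and a hypothetical trivial model that predicts "did not leave" for everyone: its accuracy looks high while its F1-score for the "left" class is zero. The helper functions are written from the standard definitions, not taken from the study.

```python
def f1_score_binary(y_true, y_pred, positive=1):
    """F1 score for the positive class (here: employees who left)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp == 0:
        return 0.0  # precision or recall is zero (or undefined): report 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Class balance of the dataset: 237 leavers (label 1) out of 1470 employees.
y_true = [1] * 237 + [0] * (1470 - 237)
always_stay = [0] * 1470  # hypothetical trivial model

print(round(accuracy(y_true, always_stay), 3))  # 0.839
print(f1_score_binary(y_true, always_stay))     # 0.0
```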

4.1 Mutual information

Mutual Information is an information-theoretic measure of how much information one random variable contains about another, that is, how much knowing one variable reduces the uncertainty of the other. For two random variables X and Y, the Mutual Information between X and Y is calculated as in Eq. 1:

\( I(X;Y)=\sum_{x}\sum_{y}p(x,y)\log\frac{p(x,y)}{p(x)\,p(y)} \) (1)

where p(x, y) is the joint probability of X and Y, and p(x) and p(y) are their marginal probabilities. If X and Y are independent, then p(x, y) = p(x)p(y) and I(X;Y) = 0. Because Mutual Information is computed from estimated probability densities, it can capture nonlinear dependence, which makes it particularly useful for nonlinear systems [37].
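For discrete variables, the double sum over p(x, y) can be estimated directly from counts. The sketch below is a plug-in estimator written for illustration; the toy columns and their values are invented. For continuous features such as Monthly Income, libraries usually use a nearest-neighbour estimator instead (for example scikit-learn's `mutual_info_classif`), and this section does not say which estimator the study used.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in nats for two equal-length discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # p(x,y) * log(p(x,y) / (p(x) p(y))), probabilities estimated from counts
        mi += p_xy * math.log(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


# Invented toy columns: a binary attrition label and two candidate features.
attrition = [1, 1, 0, 0, 0, 0, 1, 0]
overtime = [1, 1, 0, 0, 0, 1, 1, 0]
noise_flag = [0, 1, 0, 1, 0, 1, 0, 1]
scores = {"OverTime": mutual_information(overtime, attrition),
          "NoiseFlag": mutual_information(noise_flag, attrition)}
print(sorted(scores, key=scores.get, reverse=True))  # ['OverTime', 'NoiseFlag']
```

Ranking features by their score against the target, and keeping the top ones, is the usual way such scores are turned into a feature subset.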

4.2 Recursive feature elimination

Feature Selection is one of the most important concepts in machine learning, and feature selection methods play a significant role in the performance of learning models. One of these methods is Recursive Feature Elimination (RFE). The features a machine learning engineer or data scientist uses to train a model have a large impact on the performance and accuracy of the resulting system, and irrelevant or weakly relevant features can degrade it.

Recursive Feature Elimination is a greedy feature selection method. It works backwards, fitting a model on progressively smaller sets of features and removing the least important feature at each step; features are ranked by the order in which they are eliminated. RFE requires the number of features to keep to be specified, although it is often not clear in advance how many features are optimal. As with the other methods, we selected the nine variables with the most influence on employee turnover.
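A minimal sketch of the elimination loop follows, assuming NumPy is available. The ranking model here is an ordinary least-squares fit on standardized features, chosen only to keep the example dependency-light; it is not necessarily the estimator used in the study, and for a binary target a classifier such as logistic regression would be more usual (scikit-learn's `RFE(estimator, n_features_to_select=9)` wraps the same idea). The data are synthetic.

```python
import numpy as np


def rfe(X, y, n_keep):
    """Recursive feature elimination with a least-squares linear model as ranker.

    Each round refits on the surviving features and drops the one with the
    smallest absolute standardized coefficient.
    Returns (kept_indices, eliminated_in_order).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Standardize so coefficient magnitudes are comparable across features.
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    remaining = list(range(X.shape[1]))
    eliminated = []
    while len(remaining) > n_keep:
        A = np.column_stack([np.ones(len(y)), Xs[:, remaining]])  # intercept + features
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        weakest = int(np.argmin(np.abs(coef[1:])))  # ignore the intercept
        eliminated.append(remaining.pop(weakest))
    return remaining, eliminated


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
# Synthetic target in which only features 0 and 3 matter.
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + 0.1 * rng.normal(size=200)
kept, order = rfe(X, y, n_keep=2)
print(sorted(kept))  # [0, 3]
```

Refitting after every removal is what distinguishes RFE from a one-shot ranking: coefficients of correlated features can change once a neighbour is gone.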

4.3 Best-worst method

The Best-Worst Method (BWM) is a Multi-Criteria Decision-Making technique based on pairwise comparisons. It has been used in various fields, such as green innovation [8], the evaluation and selection of technology [20, 24], and the evaluation of research performance [23]. BWM can reduce inconsistencies in the results and reduces the number of pairwise comparisons required [12].

The concept and objectives of this method are described below.

Suppose we have n criteria and want to build an n × n pairwise comparison matrix \( A=(a_{ij}) \); obtaining the weight of each criterion starts here. Each element of this matrix gives the relative preference of one criterion over another, and the decision-maker (DM) fills the matrix using numbers on a 1 to 9 scale. For instance, aij is the relative preference of criterion i over criterion j. If aij = 1, criteria i and j are equally important; if aij > 1, criterion i is more important than criterion j; and aij = 9 indicates the extreme importance of i over j [21].

Because the matrix is reciprocal (aii = 1 and aji = 1/aij), n(n − 1)/2 pairwise comparisons are needed to complete it. In the literature, a pairwise comparison matrix is called fully consistent when

\( a_{ik}\times a_{kj}=a_{ij}\quad \text{for all } i, j, k \)
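The reciprocity and full-consistency conditions can be checked mechanically. The sketch below uses two invented 3 × 3 judgment matrices, one consistent and one not, and also contrasts the n(n − 1)/2 comparisons of a full matrix with the 2n − 3 comparisons that BWM needs (the best-to-others and others-to-worst vectors share the best-versus-worst judgment).

```python
def is_reciprocal(A, tol=1e-9):
    """True if a_ij * a_ji == 1 for all i, j (within tol)."""
    n = len(A)
    return all(abs(A[i][j] * A[j][i] - 1.0) <= tol for i in range(n) for j in range(n))


def is_fully_consistent(A, tol=1e-9):
    """True if a_ik * a_kj == a_ij for all i, j, k (within tol)."""
    n = len(A)
    return all(abs(A[i][k] * A[k][j] - A[i][j]) <= tol
               for i in range(n) for j in range(n) for k in range(n))


def full_matrix_comparisons(n):
    # Only the upper triangle must be elicited; the rest follows from reciprocity.
    return n * (n - 1) // 2


def bwm_comparisons(n):
    return 2 * n - 3


# Invented judgments for three criteria.
consistent = [[1, 2, 4],
              [1 / 2, 1, 2],
              [1 / 4, 1 / 2, 1]]
inconsistent = [[1, 2, 9],
                [1 / 2, 1, 2],
                [1 / 9, 1 / 2, 1]]
print(is_fully_consistent(consistent), is_fully_consistent(inconsistent))  # True False
print(full_matrix_comparisons(9), bwm_comparisons(9))  # 36 15
```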

The discussion above covers the basics of the pairwise comparison matrix, but one question remains: how reliable is this matrix? To use experts' opinions, we need to be sure that they are not biased and that they express the strength of criterion i relative to criterion j appropriately. The Best-Worst Method, introduced in [21], obtains the weight of each criterion by comparing the best criterion with the other criteria and the other criteria with the worst one. This makes it easier for the DM to express preferences and simplifies the comparison process.

In this section, we describe the BWM steps for extracting the weight of criteria [21].

- Step 1. Determine the decision criteria {c1, c2, c3, …, cn}.

- Step 2. Determine the best criterion and the worst criterion: the DM specifies the most important (most desirable) and the least important criterion.

- Step 3. Express the preference of the best criterion over each of the other criteria with a number between 1 and 9. The result is the best-to-others vector \( A_B=(a_{B1},a_{B2},\dots,a_{Bn}) \).

- Step 4. Express the preference of each criterion over the worst criterion with a number between 1 and 9. The result is the others-to-worst vector \( A_W=(a_{1W},a_{2W},\dots,a_{nW})^{T} \).

- Step 5. Find the optimal weights (w1, w2, w3, …, wn). The optimal weight of each criterion is the one for which \( \frac{w_B}{w_j}=a_{Bj} \) and \( \frac{w_j}{w_W}=a_{jW} \) for every j.

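To satisfy these conditions for all j as closely as possible, the maximum of the absolute differences \( \left|\frac{w_B}{w_j}-a_{Bj}\right| \) and \( \left|\frac{w_j}{w_W}-a_{jW}\right| \) over all j is minimized. Hence the model is shown in Eq. 5:

\( \min_{w}\ \max_{j}\left\{\left|\frac{w_B}{w_j}-a_{Bj}\right|,\ \left|\frac{w_j}{w_W}-a_{jW}\right|\right\}\quad \text{s.t.}\ \sum_{j}w_j=1,\ w_j\ge 0\ \text{for all } j \) (5)

The linear form is also obtained as:

\( \min\ \xi^{L}\quad \text{s.t.}\ \left|w_B-a_{Bj}\,w_j\right|\le \xi^{L},\ \left|w_j-a_{jW}\,w_W\right|\le \xi^{L}\ \text{for all } j,\ \sum_{j}w_j=1,\ w_j\ge 0\ \text{for all } j \)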

4.4 Genetic algorithm

The Genetic Algorithm is a Meta-heuristic algorithm used to find optimal solutions to prediction and pattern-matching problems. It borrows biological mechanisms such as inheritance, mutation, and Darwinian natural selection. Genetic Algorithms are often described as function optimizers, since they are used to optimize the objective functions of various problems. A genetic algorithm usually begins by generating a population of chromosomes, typically at random. These data structures (chromosomes) are then evaluated, and chromosomes that better represent the optimal solution to the problem are given a better chance of reproduction than weaker ones. The quality of a solution is usually measured relative to the current population of candidate solutions.

Chromosomes change during reproduction. The parents' chromosomes are exchanged randomly through a process called crossover, so that children inherit some traits of the father and some of the mother. A second process, mutation, changes the properties of living things; it occurs only rarely, for example when an error occurs while chromosomes are copied during cell division (mitosis). Mutations can lead to new and fitter species.

To solve the feature selection problem with a genetic algorithm, each individual encodes a feature subspace (for example, as a binary string indicating which features are included), and its fitness is calculated from how well the classes can be separated in that subspace. In each experiment, the genetic algorithm is run several times with different initial populations, and after these runs the best individual is selected. Compared with other methods, the genetic algorithm has several distinctive properties.

It works not with the decision variables themselves but with their encoding, and it starts not from a single point but from a set of points. It also uses probabilistic transition rules rather than deterministic ones. Finally, the genetic algorithm uses only the values of the fitness function and does not need derivatives or other auxiliary information.
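The procedure above can be sketched as a binary-coded GA. Everything specific here is an assumption for illustration: tournament selection, one-point crossover, bit-flip mutation, elitism, the parameter values, and especially the toy fitness function. In a real feature selection run the fitness would presumably be a classifier's score on the selected columns; this section does not specify the operators or fitness the study used.

```python
import random


def ga_feature_selection(fitness, n_features, pop_size=20, generations=50,
                         crossover_rate=0.9, mutation_rate=0.05, seed=0):
    """Binary-coded GA: each chromosome is a 0/1 mask over the features.

    fitness(mask) -> float, higher is better.
    """
    rng = random.Random(seed)

    def tournament(pop):
        # Binary tournament: the fitter of two random individuals wins.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    pop = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    for _ in range(generations):
        children = [best[:]]  # elitism: carry the best mask over unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop), tournament(pop)
            if rng.random() < crossover_rate:
                cut = rng.randint(1, n_features - 1)  # one-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Bit-flip mutation: each gene flips with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = children
        best = max(pop, key=fitness)
    return best


# Hypothetical fitness: number of genes matching a known "useful" subset.
target = [1, 0, 1, 1, 0, 0, 1, 0]


def toy_fitness(mask):
    return sum(m == t for m, t in zip(mask, target))


# Several runs with different initial populations; keep the best individual.
runs = [ga_feature_selection(toy_fitness, len(target), seed=s) for s in range(5)]
best_mask = max(runs, key=toy_fitness)
print(best_mask, toy_fitness(best_mask))
```

Elitism keeps the best mask from being lost, so the best fitness never decreases from one generation to the next.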

4.5 Gray wolf optimizer

The Gray Wolf Optimizer (GWO) is a Meta-heuristic algorithm first introduced in 2014 [14, 17]. It is inspired by the hierarchical leadership structure and social behavior of gray wolves. GWO is population-based, has a simple procedure, and can easily be extended to large-scale problems. Gray wolves prefer to live in packs, with 5–12 members on average, and each pack has four levels. The leader is called the alpha (the fittest solution). The beta (the second-best solution) follows the alpha and is the most likely candidate to replace it in decision-making. The delta (the third-best solution) follows the alpha and the beta. All remaining wolves are omegas (the rest of the candidate solutions). In the GWO algorithm, these wolves are denoted α, β, δ, and ω.

GWO consists of three main steps: tracking and approaching the prey, pursuing and encircling it, and attacking it.

According to Yu, Xu, Wu, and Wang [31], when the prey is surrounded and stops moving, the wolves attack under the lead of the alpha. This process is modeled by decreasing \( \overrightarrow{a} \). Since \( \overrightarrow{A} \) is a random vector in the interval [−a, a], the range of \( \overrightarrow{A} \) shrinks as a decreases. If |A| < 1, a wolf moves toward the prey (and toward the rest of the wolves); if |A| > 1, it moves away from the prey (and from the rest of the wolves). GWO requires all wolves to update their positions according to the positions of the alpha, beta, and delta wolves. During the hunt, gray wolves encircle the prey; the mathematical model of this encircling behavior is given in Eqs. 8 and 9. In these equations, t is the current iteration, \( \overrightarrow{A} \) and \( \overrightarrow{C} \) are coefficient vectors, \( \overrightarrow{X_p} \) is the prey position vector, and \( \overrightarrow{X} \) is the gray wolf position vector.

\( \overrightarrow{A} \) and \( \overrightarrow{C} \) are calculated as \( \overrightarrow{A}=2\overrightarrow{a}\cdot{\overrightarrow{r}}_1-\overrightarrow{a} \) and \( \overrightarrow{C}=2\cdot{\overrightarrow{r}}_2 \), where the components of \( \overrightarrow{a} \) decrease linearly from 2 to 0 over the iterations and \( {\overrightarrow{r}}_1 \), \( {\overrightarrow{r}}_2 \) are random vectors in [0, 1]. Hunting is usually led by the alpha; beta and delta may occasionally participate. In the mathematical model of the hunting behavior, it is assumed that alpha, beta, and delta have better knowledge of the potential location of the prey. Therefore, the three best solutions obtained so far are saved, and the other search agents update their positions according to the positions of these best search agents, as given in Eqs. 10, 11, and 12.
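For reference, the standard GWO update rules from the original 2014 formulation are written out below in the notation used above. We assume that Eqs. 8–12 follow this standard form; \( \cdot \) denotes element-wise multiplication, \( |\cdot| \) the element-wise absolute value, and each \( \overrightarrow{A_i} \), \( \overrightarrow{C_i} \) is drawn with the formulas for \( \overrightarrow{A} \) and \( \overrightarrow{C} \) given above.

```latex
\begin{aligned}
\overrightarrow{D} &= \left|\overrightarrow{C}\cdot\overrightarrow{X_p}(t)-\overrightarrow{X}(t)\right| && (8)\\
\overrightarrow{X}(t+1) &= \overrightarrow{X_p}(t)-\overrightarrow{A}\cdot\overrightarrow{D} && (9)\\
\overrightarrow{D_\alpha} &= \left|\overrightarrow{C_1}\cdot\overrightarrow{X_\alpha}-\overrightarrow{X}\right|,\quad
\overrightarrow{D_\beta} = \left|\overrightarrow{C_2}\cdot\overrightarrow{X_\beta}-\overrightarrow{X}\right|,\quad
\overrightarrow{D_\delta} = \left|\overrightarrow{C_3}\cdot\overrightarrow{X_\delta}-\overrightarrow{X}\right| && (10)\\
\overrightarrow{X_1} &= \overrightarrow{X_\alpha}-\overrightarrow{A_1}\cdot\overrightarrow{D_\alpha},\quad
\overrightarrow{X_2} = \overrightarrow{X_\beta}-\overrightarrow{A_2}\cdot\overrightarrow{D_\beta},\quad
\overrightarrow{X_3} = \overrightarrow{X_\delta}-\overrightarrow{A_3}\cdot\overrightarrow{D_\delta} && (11)\\
\overrightarrow{X}(t+1) &= \frac{\overrightarrow{X_1}+\overrightarrow{X_2}+\overrightarrow{X_3}}{3} && (12)
\end{aligned}
```

Because \( \overrightarrow{A}=2\overrightarrow{a}\cdot{\overrightarrow{r}}_1-\overrightarrow{a} \) with \( {\overrightarrow{r}}_1 \in [0,1] \), each component of \( \overrightarrow{A} \) lies in [−a, a], so the step in Eq. 11 shrinks toward the leaders as a decreases.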

\( \overrightarrow{C} \) can be viewed as modeling natural obstacles that slow wolves approaching the prey: it assigns a random weight to the prey, making it harder (or easier) for the wolves to reach. Unlike \( \overrightarrow{a} \), \( \overrightarrow{C} \) does not decrease linearly from 2 to 0; its components remain random in [0, 2] throughout the run.
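The update rules of GWO translate into a short loop. The sketch below is a minimal continuous GWO that minimizes a test function; it assumes clamping to the search bounds, keeps the three best solutions seen so far as α, β, and δ, and uses names and defaults of our own choosing. For feature selection, as in this study, positions would additionally have to be mapped to binary masks (for example, by thresholding), which is an extra design choice not shown here.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def gwo_minimize(
    objective: Callable[[Vector], float],
    dim: int,
    lower: float,
    upper: float,
    n_wolves: int = 20,
    iterations: int = 200,
    seed: int = 0,
) -> Tuple[Vector, float]:
    rng = random.Random(seed)
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_wolves)]
    # alpha, beta, delta: the three best solutions found so far
    leaders = sorted(wolves, key=objective)[:3]
    for t in range(iterations):
        a = 2.0 - 2.0 * t / iterations  # decreases linearly from 2 toward 0
        new_wolves = []
        for x in wolves:
            new_x = []
            for j in range(dim):
                estimates = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a  # A = 2a*r1 - a, in [-a, a]
                    C = 2.0 * rng.random()  # C = 2*r2, in [0, 2]
                    D = abs(C * leader[j] - x[j])  # distance to this leader
                    estimates.append(leader[j] - A * D)  # X1, X2, X3 in this dimension
                pos = sum(estimates) / 3.0  # average of the three estimates
                new_x.append(min(max(pos, lower), upper))  # keep inside the bounds
            new_wolves.append(new_x)
        wolves = new_wolves
        leaders = sorted(wolves + leaders, key=objective)[:3]
    return leaders[0], objective(leaders[0])


def sphere(x: Vector) -> float:
    # Simple test function with its minimum 0 at the origin.
    return sum(v * v for v in x)


best, value = gwo_minimize(sphere, dim=5, lower=-10.0, upper=10.0)
print(value)
```

Keeping the leaders across iterations (rather than re-ranking only the current pack) makes the best value non-increasing, which mirrors the "three best solutions obtained so far are saved" rule above.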

5 Performance evaluation

In this section, several machine learning algorithms (Logistic Regression, Decision Tree, Random Forest, Gradient Boosting, Support Vector Machine, and Adaptive Boosting) are used to evaluate the features obtained from Mutual Information, Recursive Feature Elimination, the Best-Worst Method, the Genetic Algorithm, and the Gray Wolf Optimizer. The prediction models are evaluated with the F1-score.

5.1 Logistic regression

Logistic Regression is a classification algorithm: a type of regression used for problems with a binary target variable. Equation 13 gives the logistic regression formulation, in which y is the estimated value of the dependent variable given by Eq. 14. Equation 14 is a simple linear regression equation, in which b is the intercept and b1 is the slope of the line.
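A minimal sketch of Eqs. 13 and 14, assuming the usual forms \( p = 1/(1+e^{-y}) \) and \( y = b + b_1 x \) for a single predictor. The coefficient values in the usage lines are hypothetical, not fitted to the study’s data. The sigmoid is written in a numerically stable way so that large negative scores do not overflow.

```python
import math


def linear_score(x: float, b: float, b1: float) -> float:
    """Eq. 14 (assumed form): y = b + b1 * x, with b the intercept and b1 the slope."""
    return b + b1 * x


def logistic(y: float) -> float:
    """Eq. 13 (assumed form): p = 1 / (1 + e^(-y)), computed without overflow."""
    if y >= 0:
        return 1.0 / (1.0 + math.exp(-y))
    z = math.exp(y)  # y < 0, so exp(y) cannot overflow
    return z / (1.0 + z)


def predict_class(x: float, b: float, b1: float, threshold: float = 0.5) -> int:
    """Return 1 (e.g. 'leaves') if the estimated probability reaches the threshold."""
    return 1 if logistic(linear_score(x, b, b1)) >= threshold else 0


# Hypothetical coefficients b = -3.0, b1 = 0.5.
print(logistic(linear_score(10, -3.0, 0.5)))  # y = 2, p is about 0.88
print(predict_class(2, -3.0, 0.5), predict_class(10, -3.0, 0.5))  # 0 1
```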

5.2 Decision tree

The Decision Tree is one of the most widely used data mining techniques and can be applied to both classification and regression problems. Project managers and business analysts use it widely because it is a practical decision-making tool that represents complex relationships between variables well. It can also process large volumes of data quickly by eliminating unnecessary comparisons. In general, each tree consists of a root, internal nodes, branches, and leaves. The topmost node, the root, represents the set of all samples. Internal nodes represent subsets of samples and define how the data are split. The branches represent the possible outcomes of each test, and the leaves represent the classes or target values.

The size of a decision tree is determined by factors such as the number of nodes, the number of leaves, the number of attributes considered, and the depth of the tree. Smaller trees are usually easier to understand, and tree size is typically controlled by pruning.

Decision Tree algorithms start by selecting the test that best separates the classes, and the procedure is repeated for every subsequent node. A decision tree can be built with several algorithms; here we used entropy as the splitting criterion. If the members of a set are classified into k categories and \( p_k \) is the probability that a member belongs to category k, the expected information needed to assign members to the correct category is called entropy (Eq. 15). If the sample is completely homogeneous, the entropy is zero; if a two-class sample is split equally, the entropy is one. In other words, entropy is highest when membership in all categories is equally likely. Building a decision tree requires two kinds of entropy: the entropy computed from the frequency table of a single attribute (the target) and the entropy computed from the frequency table of two attributes (the target and a candidate splitting attribute). Equation 16 gives the Gini Index, a statistical measure of the likelihood that a randomly chosen sample would be misclassified if it were labeled at random according to the class distribution.
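The entropy (Eq. 15), the Gini Index (Eq. 16), and the information gain obtained from the one- and two-attribute frequency tables can be computed as follows. This is a minimal sketch assuming the standard definitions \( H = -\sum_k p_k \log_2 p_k \) and \( G = 1 - \sum_k p_k^2 \); the function names and the toy labels are illustrative.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Eq. 15 (assumed form): H = -sum_k p_k * log2(p_k)."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def gini(labels: Sequence[Hashable]) -> float:
    """Eq. 16 (assumed form): G = 1 - sum_k p_k^2."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def information_gain(labels: Sequence[Hashable], attribute: Sequence[Hashable]) -> float:
    """Entropy of the target (one-attribute table) minus the weighted entropy
    after splitting on `attribute` (two-attribute table)."""
    n = len(labels)
    groups: Dict[Hashable, List[Hashable]] = {}
    for value, label in zip(attribute, labels):
        groups.setdefault(value, []).append(label)
    split_entropy = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - split_entropy


target = ["left", "left", "stayed", "stayed"]  # hypothetical labels
print(entropy(target), gini(target))  # 1.0 0.5 (equal split)
print(information_gain(target, ["yes", "yes", "no", "no"]))  # 1.0 (perfect split)
print(information_gain(target, ["a", "b", "a", "b"]))  # 0.0 (useless split)
```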

5.3 Random Forest

Random Forest is a versatile machine learning algorithm that can perform both classification and regression. It consists of many Decision Trees, which together form a forest. Each tree in the forest is different, which reduces the overall variance of the Random Forest classifier, so a Random Forest usually makes better decisions than a single Decision Tree: its decisions are more stable and reliable, especially when the trees are weakly correlated with each other. The forest combines the decisions of the individual trees through a voting scheme such as majority vote: for each observation, every tree votes for a class, and the class with the most votes is selected. Random Forest has several tuning parameters, including the number of trees, the number of predictor variables randomly selected at each node, the fraction of observations sampled for each tree, and the minimum number of observations in a terminal node. Like the Decision Tree, Random Forest relies on the splitting criteria given in Eqs. 15 and 16.
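The ingredients described above (bootstrap samples, random feature subsets, and majority voting) can be shown with a deliberately tiny forest whose trees are one-split stumps chosen by the Gini Index. This is an illustrative sketch, not a production Random Forest: real implementations grow full trees, and the stump depth, the `max_features` parameter, and the toy data are our assumptions.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence

Row = Sequence[float]


def majority(labels: Sequence[int]) -> int:
    """Most frequent label (ties resolved by first occurrence)."""
    return Counter(labels).most_common(1)[0][0]


def gini(labels: Sequence[int]) -> float:
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def fit_stump(X: List[Row], y: List[int], feature_ids: List[int]) -> Callable[[Row], int]:
    """One-split tree: pick the feature/threshold with the lowest weighted Gini."""
    best = None
    for f in feature_ids:
        for threshold in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= threshold]
            right = [lab for row, lab in zip(X, y) if row[f] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, threshold, majority(left), majority(right or y))
    _, f, threshold, left_label, right_label = best
    return lambda row: left_label if row[f] <= threshold else right_label


def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample (with replacement)
        Xb, yb = [X[i] for i in idx], [y[i] for i in idx]
        features = sorted(rng.sample(range(d), max_features))  # random feature subset
        trees.append(fit_stump(Xb, yb, features))
    return trees


def predict_forest(trees, row: Row) -> int:
    """Each tree votes; the forest returns the majority class."""
    return majority([tree(row) for tree in trees])


# Toy data: feature 0 separates the classes, feature 1 is noise.
X = [[1, 5], [2, 3], [3, 4], [7, 5], [8, 3], [9, 4]]
y = [0, 0, 0, 1, 1, 1]
trees = fit_forest(X, y, max_features=2)  # = d here, so only bootstrapping varies the trees
print(predict_forest(trees, [0.5, 4]), predict_forest(trees, [10, 4]))
```

On wider data, setting `max_features` below the number of features gives each tree a different random view of the predictors, which is what decorrelates the trees.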

5.4 Gradient boosting

Gradient Boosting is an ensemble learning technique for both regression and classification. In its stochastic form, the base model is fitted at each iteration to a randomly selected subsample of the training set. Random sub-sampling of the training data can improve both the speed and the accuracy of gradient boosting, and it can also help prevent overfitting. Using a smaller fraction of the training data speeds up training because the model has to fit less data at each iteration.

Gradient Boosting requires tuning two parameters: ntrees and the shrinkage rate. ntrees is the number of trees to be grown and should not be set too small. The shrinkage parameter, often called the learning rate, is applied to each tree in the expansion. Tree-based gradient boosting is invariant to the scaling of inputs and can learn higher-order interactions between features. Unlike other tree ensemble methods, gradient tree boosting is trained in an additive manner: at each step t, a new tree is grown to minimize the residual of the current model [2]. Formally, the objective function is given in Eq. 17, where L is the loss function that measures the difference between the label \( y_i \) of the i-th instance and the prediction of the previous step plus the output of the current tree, and \( \Omega(f_t) \) is a regularization term that penalizes the complexity of the new tree.
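The additive training described above can be illustrated with a one-dimensional sketch that uses squared loss, for which the negative gradient is simply the residual \( y_i - \hat{y}_i^{(t-1)} \). The base learners are regression stumps, `ntrees` and `shrinkage` play the roles described above, and `subsample` implements the random sub-sampling of stochastic gradient boosting. The regularization term \( \Omega(f_t) \) of Eq. 17 is omitted, so this is a simplified sketch rather than the exact objective.

```python
import random
from typing import Callable, List, Sequence


def fit_regression_stump(xs: Sequence[float], targets: Sequence[float]) -> Callable[[float], float]:
    """Least-squares stump: one threshold, a constant prediction on each side."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the max so both sides are non-empty
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # all inputs identical: fall back to the mean
        mean = sum(targets) / len(targets)
        return lambda x: mean
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def gradient_boost(xs, ys, ntrees=100, shrinkage=0.1, subsample=1.0, seed=0):
    rng = random.Random(seed)
    base = sum(ys) / len(ys)  # initial constant model
    trees: List[Callable[[float], float]] = []

    def predict(x: float) -> float:
        return base + shrinkage * sum(tree(x) for tree in trees)

    for _ in range(ntrees):
        # Stochastic variant: fit each tree on a random fraction of the data.
        idx = [i for i in range(len(xs)) if rng.random() < subsample] or list(range(len(xs)))
        residuals = [ys[i] - predict(xs[i]) for i in idx]  # negative gradient of squared loss
        trees.append(fit_regression_stump([xs[i] for i in idx], residuals))
    return predict


model = gradient_boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])
print(round(model(1.0), 3), round(model(6.0), 3))
```

With shrinkage 0.1, each tree removes only 10% of the remaining residual, which is why ntrees must not be too small: a smaller learning rate needs more trees to reach the same fit.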

5.5 Support vector machine

Support Vector Machine (SVM) is a supervised Machine Learning algorithm used for both regression and classification problems. Combined with random forests or other machine learning tools, it can provide a very strong model for data classification, and it is a good option when high predictive power is required. In the training phase, SVM selects the decision boundary (separator) so that its minimum distance to the samples of each class, the margin, is maximized; a larger margin makes the model more reliable. According to Zhang, Zheng, Pang, and Zhou [32], SVM builds a Hyperplane H, given in Eq. 18, where y is the targeted application or class, x is the input from net flow data, a is the vector of weights for the flow features, b is the bias term, and \( \phi(\cdot) \) is a fixed feature-space transformation.

The problem is thus to find the Hyperplane H that separates the classes; in its basic form, SVM assumes that the classes are linearly separable in the transformed feature space.
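A minimal sketch of the decision rule in Eq. 18, assuming \( \phi \) is the identity (a linear SVM) and writing \( y = a^\top \phi(x) + b \). It does not train an SVM; it shows how a class is predicted from the sign of the decision value and how the geometric margin of a given hyperplane is measured, which is the quantity SVM training maximizes. The two hyperplanes and the points are hypothetical.

```python
import math
from typing import List, Sequence


def phi(x: Sequence[float]) -> List[float]:
    """Fixed feature-space transformation; the identity here (assumption: linear SVM)."""
    return list(x)


def decision(a: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Eq. 18 (assumed form): y = a^T phi(x) + b."""
    return sum(ai * fi for ai, fi in zip(a, phi(x))) + b


def predict(a: Sequence[float], b: float, x: Sequence[float]) -> int:
    return 1 if decision(a, b, x) >= 0 else -1


def geometric_margin(a, b, X, y) -> float:
    """Smallest signed distance from any sample to H (positive iff H separates the data)."""
    norm = math.sqrt(sum(ai * ai for ai in a))
    return min(yi * decision(a, b, xi) for xi, yi in zip(X, y)) / norm


X = [(1.0, 1.0), (2.0, 1.0), (4.0, 4.0), (5.0, 3.0)]
y = [-1, -1, 1, 1]
m1 = geometric_margin((1.0, 1.0), -5.0, X, y)  # hypothetical hyperplane x1 + x2 = 5
m2 = geometric_margin((1.0, 0.0), -3.0, X, y)  # hypothetical hyperplane x1 = 3
print(m1, m2)  # both separate the data; SVM would prefer the larger margin (m1)
```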

5.6 Adaptive boosting

Adaptive Boosting (AdaBoost) is one of the most successful boosting algorithms for binary classification and is generally used with small Decision Trees. The training dataset is represented as in Eq. 19, where n is the size of the training set, \( x_i \in T \), and \( y_i \in \{-1, 1\} \). Each training sample can be viewed as a point in a multidimensional feature space.

AdaBoost maintains a probability distribution over all training samples, and this distribution is updated at each iteration after a new weak classifier is fitted to the data. \( D_t(x_i) \) denotes the distribution at iteration t, where t = 1, …, T. \( h_t \) is the weak classifier chosen at iteration t, and \( h_t(x_i) \) is the class label it assigns to \( x_i \). Comparing \( h_t(x_i) \) with \( y_i \) for i = 1, 2, …, n gives the classification error rate \( \epsilon_t \) of \( h_t \). At each iteration, each candidate classifier is trained on a subsample of the training data drawn according to \( D_t(x) \): the higher \( D_t(x) \) is for a sample x, the more likely that sample is to be used to train the candidate classifier \( h_t \). \( h_t \) must be chosen to minimize the misclassification rate \( \epsilon_t \). The key quantity in AdaBoost is \( \alpha_t \), the level of trust placed in the weak classifier \( h_t \): the larger its error \( \epsilon_t \), the lower the trust should be. Eq. 20 gives the relationship between \( \alpha_t \) and \( \epsilon_t \).

As \( \epsilon_t \) increases from 0 to 1, the trust level \( \alpha_t \) of the candidate classifier \( h_t \) decreases from +∞ to −∞. When \( \epsilon_t \) is close to 1, the weak classifier fails on almost the entire training dataset; when \( \epsilon_t \) is close to 0, the weak classifier is strong. After T iterations, the final AdaBoost classifier H is obtained as shown in Eq. 21.

In this equation, x is a new data element to be classified. For example, in binary classification, if H(x) is positive the predicted class is 1; otherwise it is −1.
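The AdaBoost loop can be sketched with one-dimensional threshold stumps as weak classifiers, assuming the standard forms \( \alpha_t = \tfrac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t} \) for Eq. 20 and \( H(x) = \operatorname{sign}\left(\sum_t \alpha_t h_t(x)\right) \) for Eq. 21. One simplification: instead of drawing a subsample according to \( D_t \), the sketch reweights the samples and computes a weighted error, a common alternative to resampling. The stump family, the clamping of \( \epsilon_t \), and the toy data are our assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Stump = Callable[[float], int]


def make_stump(threshold: float, polarity: int) -> Stump:
    """Weak classifier h(x) = polarity if x > threshold else -polarity."""
    return lambda x: polarity if x > threshold else -polarity


def adaboost(xs: Sequence[float], ys: Sequence[int], T: int = 10):
    n = len(xs)
    D = [1.0 / n] * n  # D_1: uniform distribution over training samples
    thresholds = [min(xs) - 1.0] + sorted(set(xs))
    ensemble: List[Tuple[float, Stump]] = []
    for _ in range(T):
        # Choose h_t with the smallest weighted error eps_t under D_t.
        best = None
        for thr in thresholds:
            for polarity in (1, -1):
                h = make_stump(thr, polarity)
                eps = sum(d for d, x, y in zip(D, xs, ys) if h(x) != y)
                if best is None or eps < best[0]:
                    best = (eps, h)
        eps, h = best
        eps = min(max(eps, 1e-10), 1.0 - 1e-10)  # keep alpha_t finite
        alpha = 0.5 * math.log((1.0 - eps) / eps)  # Eq. 20: trust in h_t
        ensemble.append((alpha, h))
        # Raise the weight of misclassified samples, lower the rest, renormalize.
        D = [d * math.exp(-alpha * y * h(x)) for d, x, y in zip(D, xs, ys)]
        z = sum(D)
        D = [d / z for d in D]

    def H(x: float) -> int:  # Eq. 21: sign of the weighted vote
        return 1 if sum(a * h(x) for a, h in ensemble) >= 0 else -1

    return H, ensemble


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # no single stump can separate these labels
H, ensemble = adaboost(xs, ys, T=3)
print([H(x) for x in xs], [round(a, 3) for a, _ in ensemble])
```

No single threshold classifies this toy data correctly, but after three rounds the weighted vote does, which illustrates how boosting combines weak classifiers into a stronger one.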

5.7 Evaluation of machine learning models

The F1-score is a common metric for classification models. Among the many available metrics, it is widely used because it is simple and remains informative when the classes are imbalanced. Accuracy, another common criterion, works well only for balanced data without class bias. We evaluate the machine learning models with the F1-score for two reasons. First, our data are imbalanced: only 237 employees have left their jobs, so the numbers of 1s and 0s in the target variable are not equal. Second, our goal is to correctly identify the people who left the organization, and accuracy cannot distinguish between false negative and false positive errors. The F1-score combines Precision and Recall, both of which matter in this problem, into a single balanced measure, and it can be used in cases where the costs of false positives and false negatives differ [36].

In this study, we seek to correctly identify the people who leave the organization. The higher the number of False Negatives (employees who left but were predicted to stay) relative to True Positives (correctly predicted departures), the lower the Recall. Likewise, the higher the number of False Positives (employees the model incorrectly predicted would leave) relative to True Positives, the lower the Precision. In other words, the more departures the model misses, the lower its Recall, and the more false alarms it raises, the lower its Precision. The F1-score is the harmonic mean of these two criteria. It is a suitable criterion for our models because it considers Precision and Recall together and, given the size of our dataset, a single train–test split suffices, so each model needs to be trained only once. The k-fold method can be very useful, but it is usually applied to small datasets, because increasing k requires training more models and makes training costly and time-consuming.

The classification process has two phases, training and testing. In the training phase, part of the dataset is used as training data; in the testing phase, the remaining data are used to test and validate the model: samples whose labels are treated as unknown are given to the system, which predicts their labels with the learned function. The data in this article are divided into training and test sets with a ratio of 25–75. The features obtained from the MCDM technique, the Meta-heuristic algorithms, and the Feature Selection methods were evaluated by the F1-score of the prediction models, which is calculated from Recall and Precision. True positives (Tp) count the positive instances that are classified correctly, and true negatives (Tn) count the negative instances that are classified correctly. False positives (Fp) are negative instances classified as positive, and false negatives (Fn) are positive instances classified as negative. Our objective is to find the classifier operating point at which the number of true positives is maximal and the number of false negatives is minimal. Recall measures the fraction of actual positive instances that the classifier identifies. Precision, Recall, and F1-score are calculated as in Eqs. 22, 23, and 24.
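Eqs. 22–24 presumably correspond to the standard definitions \( P = T_p/(T_p+F_p) \), \( R = T_p/(T_p+F_n) \), and \( F_1 = 2PR/(P+R) \). The sketch below computes them from label lists; the ten labels are hypothetical and only show why a model that never predicts a departure can look acceptable on accuracy yet score zero F1.

```python
def confusion_counts(y_true, y_pred, positive=1):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = len(y_true) - tp - fp - fn
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_pred, positive=1):
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]  # hypothetical: 1 = employee left
y_model = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
y_never = [0] * 10  # a model that never predicts a departure
print(precision_recall_f1(y_true, y_model))  # (0.75, 0.75, 0.75)
print(accuracy(y_true, y_never), precision_recall_f1(y_true, y_never))  # 0.6 (0.0, 0.0, 0.0)
```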

6 Results

6.1 Mutual information

According to Mutual Information, the most influential variable on employee turnover is years with the current manager. The nine most influential variables, which we also used in the prediction models, are years with the current manager, gender, distance from home, hourly rate, total working years, years at the company, work-life balance, environmental satisfaction, and job level. Figure 3 shows the feature importance from Mutual Information; the more important a feature, the higher its value in the chart. As Fig. 3 shows, some features, such as business travel and department, do not affect employee turnover.

6.2 Recursive feature elimination

Mutual Information gives a score for each feature: the higher the score, the more important the feature. Recursive Feature Elimination, by contrast, produces a ranking of features, and the features ranked one are the most influential on employee turnover. According to this method, age, business travel, daily rate, distance from home, education, gender, work-life balance, job environment, and monthly rate are the most influential variables on employee turnover. The feature importance from Recursive Feature Elimination is shown in Fig. 4.
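To make the idea of a mutual-information score concrete, the sketch below uses the plug-in estimate \( I(X;Y) = \sum_{x,y} p(x,y)\ln\frac{p(x,y)}{p(x)p(y)} \) for discrete features and ranks features by it. This is an assumption for illustration: the estimator used in the study is not specified here, continuous features would need binning or a different estimator, and the toy columns are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Tuple


def mutual_information(feature: Sequence[Hashable], target: Sequence[Hashable]) -> float:
    """Plug-in MI estimate in nats: sum p(x,y) * ln[p(x,y) / (p(x) p(y))]."""
    n = len(feature)
    joint = Counter(zip(feature, target))
    px, py = Counter(feature), Counter(target)
    return sum(
        (c / n) * math.log((c / n) / ((px[x] / n) * (py[t] / n)))
        for (x, t), c in joint.items()
    )


def rank_by_mi(
    columns: Dict[str, Sequence[Hashable]], target: Sequence[Hashable]
) -> Tuple[List[str], Dict[str, float]]:
    """Higher score = more important feature, as described for Fig. 3."""
    scores = {name: mutual_information(col, target) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True), scores


left = [1, 1, 0, 0]  # hypothetical target: 1 = employee left
columns = {"informative": [1, 1, 0, 0], "noise": [1, 0, 1, 0]}  # hypothetical columns
ranking, scores = rank_by_mi(columns, left)
print(ranking, scores)  # 'informative' scores ln 2, 'noise' scores 0
```

Turning such scores into a ranking is what makes them comparable with Recursive Feature Elimination, which outputs ranks directly.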

6.3 Best-worst method

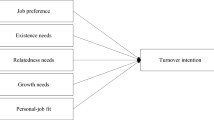

Among 31 features, our expert selected the nine most significant features affecting employee turnover and identified among them the most influential criterion (best criterion) and the least influential criterion (worst criterion). Next, using a 1–9 scale, the expert rated the preference of the best criterion over each of the other criteria and of each criterion over the worst criterion. Table 1 shows the comparisons with respect to the best criterion, and Table 2 shows the comparisons with respect to the worst criterion. The criteria used in the BWM are job level, job satisfaction, monthly income, relationship satisfaction, total working years, work-life balance, years at the company, years since last promotion, and years with the current manager. The best criterion is job satisfaction and the worst is years with the current manager. The results show that job satisfaction, with a weight of 0.30658026, has the greatest effect on employee turnover.

Table 3 shows the standard effectiveness coefficient for employee turnover and the weight obtained by the BWM for each criterion.

6.4 Meta-heuristic algorithms

The Genetic Algorithm and the Gray Wolf Optimizer are two Meta-heuristic algorithms used to identify the factors affecting employee turnover. According to the Genetic Algorithm, age, department, education field, job level, job role, job satisfaction, monthly income, overtime, and years in the current role are the most significant factors. According to the Gray Wolf Optimizer, distance from home, job role, monthly income, number of companies worked, overtime, years at the company, years in the current role, years since last promotion, and years with the current manager are the most significant factors. The GWO results show that employees whose homes are 4–6 miles from the company account for 10% of all employee turnover. Among job roles, managers and human resources staff have the highest turnover in the organization; managers account for 4% and human resources staff for 23% of the organization’s employee turnover. Also, people who have worked with their current manager for 1 or 6 years and employees who work overtime are more likely to leave their jobs.

Some features are selected by both algorithms. One of them is monthly income. Employees with a monthly income of $2000–3000 have a high turnover rate and account for 40% of all employee turnover in the company. The $1000–2000 income band also has high turnover, at 54.5%. In general, the turnover rate decreases as monthly income increases; however, turnover rises again in the $900–11,000 band. Table 4 shows statistical information on employee monthly income.

Overtime is another feature selected by both algorithms. Of the employees who work overtime, 30.5% left their jobs, and they account for 53.5% of all employee turnover in the company. Only 10% of employees who do not work overtime left, yet they account for 46% of all employee turnover, because this group is larger. Comparing the two groups, employees who work overtime are much more likely to leave the company. Table 5 shows statistical information on employee overtime.

7 Discussion

As mentioned before, we used Mutual Information, Recursive Feature Elimination, the Best-Worst Method, the Genetic Algorithm, and the Gray Wolf Optimizer to identify the factors affecting employee turnover. This section compares the results of these methods. Table 6 summarizes the factors affecting employee turnover identified by each of them.

We used several classification algorithms to evaluate the features selected by MCDM, the Genetic Algorithm, the Gray Wolf Optimizer, Mutual Information, and Recursive Feature Elimination: Logistic Regression, Decision Tree, Random Forest, Support Vector Machine, Gradient Boosting, and Adaptive Boosting. From these predictions, we obtained the performance of each method’s features in predicting employee turnover and compared the methods. The results of these predictions are shown in Table 7.

As Table 7 shows, we first predicted employee turnover using the entire dataset and then using the features selected by BWM, the Genetic Algorithm, the Gray Wolf Optimizer, Mutual Information, and Recursive Feature Elimination. The last column shows the Total Improvement of each prediction model obtained with the best method. For example, in the Decision Tree model, using the nine features that Mutual Information identifies as the most influential on employee turnover gave better performance than using all the features; its Total Improvement is 0.0494%. Among the BWM, Genetic Algorithm, Mutual Information, and Recursive Feature Elimination results, the method with the best results in most algorithms is Mutual Information. Figure 5 compares the results of the prediction models.

As shown in Fig. 5, the most accurate of the proposed approaches is the Mutual Information algorithm. The criteria it selected are years with the current manager, gender, distance from home, hourly rate, total working years, years at the company, work-life balance, environmental satisfaction, and job level. When these features are used in the machine learning models, the F1-score of most algorithms, such as Logistic Regression, Decision Tree, Random Forest, and Support Vector Machine, increases compared with using all features. Figure 6 shows these results.

Across all machine learning algorithms, the variables suggested by Mutual Information predict employee turnover better than those suggested by the other methods. This holds even for Gradient Boosting and Adaptive Boosting, where the Mutual Information features performed worse than the full feature set. In Adaptive Boosting, the results of Mutual Information and Recursive Feature Elimination are almost equal. Compared with BWM, Mutual Information gives much better results in all machine learning models. Figure 7 compares the results of these two methods.

In the machine learning models, the Mutual Information features also gave much better results than those of the Genetic Algorithm and GWO. Figure 8 compares the results of Mutual Information, the Genetic Algorithm, and GWO.

Some of these features are shared by several algorithms. Work-life balance in this company has four categories: bad, good, better, and best. Across the company, work-life balance is generally satisfactory, yet the "better" category has the highest number and share of departures: 127 of the 893 employees in this category have left the organization. Thus, 14% of the employees with a better work-life balance left the organization, and they account for 53.58% of all people who left. Notably, 31.25% of employees with a bad work-life balance left the organization, which is a significant percentage. Table 8 shows statistical information on work-life balance in the company. Employee turnover by work-life balance category and the impact of work-life balance on employee turnover are shown in Fig. 9.

The company has five job levels. At job level 5, 5 of 69 employees have left their jobs: 7.2% of the employees at this level, accounting for 2.1% of all employee turnover in the company. As job level increases, the number of departures decreases. As the results in Fig. 5 and Table 6 show, the highest turnover is observed at job level 1: 143 employees at this level, accounting for 60.3% of all employee turnover, left the company. Table 9 shows statistical information on employee job levels. Employee turnover by job level and the impact of job level on employee turnover are shown in Fig. 10.
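The percentages in this section mix two different quantities: the turnover rate within a group (departures divided by group size) and the group’s share of all departures (departures divided by the 237 employees who left). The short calculation below, using only counts reported in the text, reproduces the quoted figures up to rounding; the function names are illustrative.

```python
TOTAL_LEFT = 237  # employees who left (reported in Section 5.7)


def turnover_rate(left_in_group: int, group_size: int) -> float:
    """Percentage of a group's own employees who left."""
    return 100.0 * left_in_group / group_size


def share_of_turnover(left_in_group: int, total_left: int = TOTAL_LEFT) -> float:
    """Percentage of all departures that came from this group."""
    return 100.0 * left_in_group / total_left


# Job level 5: 5 of 69 employees left.
print(round(turnover_rate(5, 69), 1), round(share_of_turnover(5), 1))  # 7.2 2.1
# Job level 1: 143 departures.
print(round(share_of_turnover(143), 1))  # 60.3
# "Better" work-life balance: 127 of 893 employees left.
print(round(turnover_rate(127, 893), 1), round(share_of_turnover(127), 1))  # 14.2 53.6
```

A group can therefore have a low turnover rate but a large share of all departures simply because it is large, which is the situation of the "better" work-life balance category.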

Employees who have not yet completed their first year in their current role are more likely to leave the company; they account for 31.6% of all employee turnover. The Genetic Algorithm also confirms this result. Employees with 2–5 years of experience account for the largest share of turnover, 36.7% of all employee turnover at the company. Moreover, after many years in the current position, employees again become ready to leave the company. Table 9 shows statistical information on years at the company. Table 10 shows statistical information on years at the company. Employee turnover by years at the company and the impact of years at the company on employee turnover are shown in Fig. 11.

Most employees who leave do so before completing their first year with their current manager; they account for 35.8% of all employee turnover at the company. Next are employees who have worked two years with their current manager, who account for 21% of all employee turnover. Employees who have worked seven years with their current manager account for 13% of all employee turnover. Table 11 shows statistical information on years with the current manager. Employee turnover by years with the current manager and the impact of years with the current manager on employee turnover are shown in Fig. 12.

7.1 Managerial insight

As the results indicate, four main factors affect employee turnover in the company: work-life balance, job level, years at the company, and years with the current manager.

Taken together, these results highlight that the early stages of an employee’s engagement with the company are vital in several ways. Employees are more likely to leave in their early years, which makes their satisfaction and commitment during those years a crucial factor in retaining them. The authors therefore suggest that companies invest in increasing satisfaction and commitment through productive onboarding programs and employer branding. An effective onboarding program, especially in an era in which the nature of the workplace is changing, is a tailored and authentic experience that shapes employees’ sense of purpose and elevates their energy and performance. After all, employees seek purpose, trust, and social bonds in their careers.

It is also worth noting that, according to the results, employees are less likely to leave after five years of work. This underscores the importance of employees’ early experiences with the company: how the employer has created a unique workplace experience for them, reinforced and multiplied by its employer brand in the market.

Job level and years with the current manager are further vital factors affecting employee turnover, which highlights the importance of succession planning and career progress. The more employees feel they are progressing in their careers at the company, the less likely they are to leave. This also suggests that finding meaning in their everyday jobs and living their purpose through their work is an underlying factor that builds commitment and reduces turnover. However, since employees’ generation appears to influence how they perceive the purpose and meaning of their jobs, the authors suggest examining this factor in separate, complementary research.

To make employees’ progress fruitful and meaningful, companies should also invest in leadership development, running leadership development programs that reduce the risk of employees’ dissatisfaction with their current managers. Managers also need a crystal-clear plan for their employees’ career paths and growth journeys, which can produce more satisfied employees in their early stages as they progress through job levels. Such leadership development programs are offered by many training and consulting firms and cover a variety of concepts, from results-driven leadership to conscious leadership and self-realization; the right choice depends on the nature of the work, the company culture, and, most importantly, the employees themselves. In addition, a well-defined and structured talent journey plan, based on the competency model, helps engage multiple layers of employees in different development programs and clearly communicates their growth roadmap.

Finally, as the results indicate, work-life balance has a considerable effect on turnover. At first glance, the results might suggest that the better the work-life balance, the more likely employees are to leave; however, 31.25% of employees with a bad work-life balance left the company. This suggests that companies need to invest in creating and maintaining a culture that safeguards work-life balance and to reinforce it through organization-wide communication and rituals.

In conclusion, investing in stronger employer brands, more effective onboarding programs, clearer career paths and succession planning for employees in their early years, and sustaining all of these by enriching everyday experience through better leadership and work-life balance rituals appears to reduce the risk of turnover in employees’ early years with the company. This would lead to a better company culture and employer brand and, in the long run, reduce what companies spend on retaining the right talent.

7.2 Contributions, limitations, and future works

Many factors directly and indirectly affect employee turnover and can impose substantial costs on organizations. Moreover, in some cases, employees leave as a result of managers' wrong decisions. Identifying the factors affecting employee turnover has therefore been one of the most important challenges for managers, so that they can adopt appropriate management approaches and reduce the rate at which employees leave their organizations. To identify these factors, this article has used three approaches: Meta-heuristic algorithms, Multi-Criteria Decision-Making techniques, and Feature Selection algorithms from machine learning.

Multi-Criteria Decision-Making is an advanced field of operations research dedicated to developing and implementing decision support tools and methods. These methods are practical tools that managers can use to solve complex decision-making problems, for example problems with several criteria, goals, or objectives that conflict with one another. In this study, the Best-Worst Method has been used to identify the most influential factors on employee turnover. Many problems across the sciences are optimization problems: among a set of possible solutions, we seek the one that maximizes or minimizes an objective function, and such problems are often very large and complex. In these cases, reaching the exact optimal solution can take a long time, whereas Meta-heuristic algorithms can approach the optimal solution to an acceptable degree. In this article, the Genetic Algorithm and Gray Wolf Optimizer have been used to optimize and identify the factors influencing employee turnover.
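
In the Best-Worst Method as formulated by Rezaei (2015), the expert names a best and a worst criterion and gives two vectors of preferences on a 1 to 9 scale: best-to-others (a_Bj) and others-to-worst (a_jW). The weights are then chosen to minimize the largest deviation ξ = max_j max(|w_B/w_j − a_Bj|, |w_j/w_W − a_jW|), subject to the weights summing to one. The Python sketch below evaluates ξ and, purely for illustration, minimizes it by brute-force grid search over three criteria. The criteria and judgements are hypothetical, the grid search is an assumption made to keep the example dependency-free, and a real application would hand the minimization to a proper optimizer.

```python
def bwm_deviation(weights, best, worst, a_best, a_worst):
    """Largest deviation xi of `weights` from the expert's comparisons
    in the original (non-linear) Best-Worst Method formulation.

    a_best[j]  : preference of the best criterion over criterion j (1-9)
    a_worst[j] : preference of criterion j over the worst criterion (1-9)
    """
    xi = 0.0
    for j, w_j in enumerate(weights):
        xi = max(xi,
                 abs(weights[best] / w_j - a_best[j]),
                 abs(w_j / weights[worst] - a_worst[j]))
    return xi


def bwm_grid_search(best, worst, a_best, a_worst, steps=100):
    """Brute-force minimizer of xi on a grid over the 3-criterion simplex.

    Only practical for tiny illustrative problems.
    """
    best_w, best_xi = None, float("inf")
    for i in range(1, steps):
        for j in range(1, steps - i):
            k = steps - i - j  # remaining share, always >= 1, so no zero weights
            w = (i / steps, j / steps, k / steps)
            xi = bwm_deviation(w, best, worst, a_best, a_worst)
            if xi < best_xi:
                best_w, best_xi = w, xi
    return best_w, best_xi


# Hypothetical, fully consistent judgements: criterion 0 is best, 2 is worst.
a_best = [1, 2, 6]   # best-to-others vector
a_worst = [6, 3, 1]  # others-to-worst vector
weights, xi = bwm_grid_search(best=0, worst=2, a_best=a_best, a_worst=a_worst)
print(weights, round(xi, 6))
```

Because these hypothetical judgements are fully consistent (a_Bj × a_jW = a_BW = 6 for every j), the search recovers the weights (0.6, 0.3, 0.1) with ξ close to zero; inconsistent judgements would leave a positive ξ.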

The other methods used are Mutual Information and Recursive Feature Elimination, both of which are feature selection methods. They help analysts gain a better view of the variables by indicating which features are more important and how the features are related to each other. All three approaches above are practical methods for identifying and optimizing the factors affecting employee turnover, whereas past research was limited to statistical charts. To evaluate the performance of these methods, the selected features have been used in several machine learning algorithms. The machine learning models have been compared with each other using the F1-score, and the most influential factors on employee turnover have been identified. Some of these variables were selected by several different models, as discussed in detail.
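
Mutual Information scores a feature by how much knowing it reduces uncertainty about the turnover label, I(X;Y) = Σ p(x,y) log(p(x,y) / (p(x)p(y))). The minimal Python sketch below is a plug-in estimate for discrete features only, with a hypothetical target and three toy features; it is not necessarily the estimator used in the study. For continuous features, scikit-learn's `mutual_info_classif` uses a nearest-neighbour estimator instead.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y), in nats, for two discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    mi = 0.0
    for (x, y), n_xy in joint.items():
        # p(x,y) * log(p(x,y) / (p(x) p(y))), written with raw counts
        mi += (n_xy / n) * math.log(n_xy * n / (px[x] * py[y]))
    return mi


def rank_features(features, target):
    """Sort feature names by estimated MI with the target, highest first."""
    scores = {name: mutual_information(col, target) for name, col in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical binary target (1 = employee left) and three toy features.
target = [0, 0, 0, 0, 1, 1, 1, 1]
features = {
    "unrelated": [0, 1, 0, 1, 0, 1, 0, 1],
    "partly_informative": [0, 0, 0, 1, 1, 1, 1, 1],
    "copy_of_target": [0, 0, 0, 0, 1, 1, 1, 1],
}
ranking = rank_features(features, target)
for name, score in ranking:
    print(f"{name}: {score:.4f}")
```

A feature identical to the target reaches the maximum, the target's entropy (ln 2 here), while a feature statistically independent of it scores zero; keeping the top-ranked features is the selection step.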

However, when applying the Best-Worst Method, our expert selected 9 of the features and then evaluated and ranked them. We suggest that future researchers first group the criteria into clusters. This adds one level to the hierarchy of the problem; after pairwise comparisons within and among the clusters, a global weight can be obtained for every variable. The Meta-heuristic methods used in this article are limited to the Genetic Algorithm and Gray Wolf Optimizer, and they do not indicate the priority of the selected features. Researchers are encouraged to report the results of other Meta-heuristic algorithms together with a prioritization of the selected features. Researchers can also focus on improving the results by optimizing the hyper-parameters of the models. Finally, we suggest applying these methods to data from other organizations and comparing the results with this study.
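
For the clustering suggestion, once each cluster and the criteria inside it have been weighted (for example with separate Best-Worst comparisons), a criterion's global weight is commonly taken as its cluster weight multiplied by its local weight. The Python sketch below assumes that multiplicative aggregation; the cluster names, the grouping of features, and all numbers are hypothetical.

```python
def global_weights(cluster_weights, local_weights):
    """Combine cluster-level and within-cluster weights into global weights.

    cluster_weights: {cluster: weight}, summing to 1
    local_weights:   {cluster: {criterion: weight}}, each inner dict summing to 1
    """
    result = {}
    for cluster, w_cluster in cluster_weights.items():
        for criterion, w_local in local_weights[cluster].items():
            result[criterion] = w_cluster * w_local
    return result


# Hypothetical two-level hierarchy; every number here is illustrative only.
cluster_weights = {"career": 0.5, "satisfaction": 0.3, "tenure": 0.2}
local_weights = {
    "career": {"job level": 0.6, "monthly income": 0.4},
    "satisfaction": {"job satisfaction": 0.5, "work-life balance": 0.5},
    "tenure": {"years at the company": 0.7, "years with the current manager": 0.3},
}
weights = global_weights(cluster_weights, local_weights)
for criterion, w in sorted(weights.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{criterion}: {w:.3f}")
```

Because both levels sum to one, the global weights also sum to one and can be ranked directly, so a single ordering of all features is still obtained.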

The data used in this study is not a time series, so we suggest implementing these methods on time-series data and with deep learning algorithms. Fuzzy logic could also be incorporated into the models or the data. Identifying the factors affecting employee turnover can improve organizations' macro-level human resource planning policies, so that the organization does not face a shortage of human resources when they are needed. The results of this study can be used as a decision support tool to improve organizational strategies and managers' performance, and they can also feed into mathematical models that optimize human resources.

8 Conclusion

Employee turnover is always one of managers' main concerns because it is a form of capital loss, is often the result of poor hiring and management decisions, and directly affects the organization's strategic plans. Identifying the factors that influence employee turnover is therefore a very practical issue for organizations, yet previous work has been limited to statistical charts. To identify the most influential factors on employee turnover, this article uses three approaches: Feature Selection, Multi-Criteria Decision-Making techniques, and Meta-heuristic algorithms. Each of these is a practical technique that can be made available to managers as a tool, yet their use in organizations has been neglected.

The Best-Worst Method is one of the most practical Multi-Criteria Decision-Making techniques and is used in this article. Our expert weighted the factors influencing employee turnover using the Best-Worst Method. The results of this method show that job level, job satisfaction, monthly income, relationship satisfaction, total working years, work-life balance, years at the company, years since the last promotion, and years with the current manager are the most influential factors, respectively. The Genetic Algorithm is a Meta-heuristic algorithm used for optimization; its results show that age, department, education field, job level, job role, job satisfaction, monthly income, overtime, and years in the current role are the most significant factors. The Gray Wolf Optimizer shows that distance from home, job role, monthly income, number of companies worked, overtime, years at the company, years in the current role, years since the last promotion, and years with the current manager are the most significant factors.
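
A Genetic Algorithm can select features by evolving 0/1 masks, where each bit says whether a feature is kept. The Python sketch below shows one common design (tournament selection, one-point crossover, bit-flip mutation, elitism); it is an assumed configuration, not the study's. In practice the fitness would be a model's validation score (for example the F1-score) on the selected columns; here a hypothetical per-feature relevance score minus a size penalty stands in so that the example runs on its own.

```python
import random


def genetic_feature_selection(fitness, n_features, pop_size=30, generations=50,
                              crossover_rate=0.9, mutation_rate=0.05, seed=0):
    """Binary GA: each individual is a 0/1 mask saying which features are kept.

    `fitness(mask)` returns a score to maximize. Uses tournament selection,
    one-point crossover, bit-flip mutation and elitism (the best mask survives).
    """
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_features)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)

    def tournament(scored):
        # Draw two (score, mask) pairs at random and keep the fitter mask.
        (s1, a), (s2, b) = rng.sample(scored, 2)
        return a if s1 >= s2 else b

    for _ in range(generations):
        scored = [(fitness(ind), ind) for ind in population]
        children = [best[:]]  # elitism: carry the best mask over unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(scored), tournament(scored)
            if rng.random() < crossover_rate:
                cut = rng.randint(1, n_features - 1)  # one-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Bit-flip mutation: each gene flips with probability mutation_rate.
            child = [1 - bit if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
        best = max(population, key=fitness)
    return best


# Hypothetical relevance scores standing in for a model's validation score;
# the 0.1 penalty per kept feature discourages large subsets.
relevance = [0.9, 0.05, 0.7, 0.0, 0.4, 0.02]


def toy_fitness(mask):
    return sum(r for r, bit in zip(relevance, mask) if bit) - 0.1 * sum(mask)


best_mask = genetic_feature_selection(toy_fitness, n_features=len(relevance))
print(best_mask)
```

With this toy fitness the optimum keeps exactly the features whose relevance exceeds the penalty. Like the study's Meta-heuristic results, the output is a subset rather than a ranking of features.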

The other approach is Feature Selection, which is one of the main steps in applying machine learning. We examined the factors affecting employee turnover using two feature selection methods, Mutual Information and Recursive Feature Elimination. With Mutual Information, the most influential factors are years with the current manager, gender, distance from home, hourly rate, total working years, years at the company, work-life balance, environment satisfaction, and job level. With Recursive Feature Elimination, the most influential factors are age, business travel, daily rate, education, gender, work-life balance, distance from home, job environment, and monthly rate.
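
Recursive Feature Elimination repeatedly fits a model, ranks the features by the magnitude of their coefficients (or importances), and discards the weakest one until the requested number remains. The estimator used in the study is not stated in this section, so the dependency-free Python sketch below assumes ordinary least squares on standardized features and a hypothetical continuous target that depends only on `x0` and `x2`. For a binary turnover label one would typically rank features with a classifier instead, for example scikit-learn's `RFE` wrapped around `LogisticRegression`.

```python
import random


def standardize(column):
    """Scale a column to zero mean and unit (population) standard deviation."""
    mean = sum(column) / len(column)
    std = (sum((v - mean) ** 2 for v in column) / len(column)) ** 0.5
    return [(v - mean) / std for v in column]


def fit_linear(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y.

    Assumes standardized columns and a centered y, so no intercept is needed.
    """
    p = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * t for row, t in zip(X, y))] for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]


def recursive_feature_elimination(columns, y, n_keep):
    """Refit and drop the feature with the smallest |coefficient| until n_keep remain."""
    remaining = list(columns)
    std_cols = {name: standardize(values) for name, values in columns.items()}
    y_mean = sum(y) / len(y)
    y_centered = [v - y_mean for v in y]
    while len(remaining) > n_keep:
        X = [list(row) for row in zip(*(std_cols[name] for name in remaining))]
        coefs = fit_linear(X, y_centered)
        weakest = min(range(len(remaining)), key=lambda i: abs(coefs[i]))
        remaining.pop(weakest)
    return remaining


# Hypothetical data: five random features, target driven by x0 and x2 only.
rng = random.Random(42)
n = 200
columns = {f"x{i}": [rng.gauss(0, 1) for _ in range(n)] for i in range(5)}
y = [3 * a - 2 * c + rng.gauss(0, 0.1) for a, c in zip(columns["x0"], columns["x2"])]
selected = recursive_feature_elimination(columns, y, n_keep=2)
print(selected)
```

Refitting after every removal, rather than ranking once, is what distinguishes RFE from a single importance ranking: a feature's coefficient can change once correlated features are removed.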

Next, the features selected by Mutual Information, Recursive Feature Elimination, the Best-Worst Method, the Genetic Algorithm, and the Gray Wolf Optimizer were used in machine learning algorithms so that the results can be applied to predicting the departure of employees, and the resulting models were compared. The prediction algorithms used are logistic regression, decision tree, random forest, support vector machine, gradient boosting, and adaptive boosting. Prediction models using all features were also implemented and compared with the others. The prediction results show that the features selected by Mutual Information yield better results than using all the features. The results of a case study based on IBM's dataset show that total working years, years with the current manager, and work-life balance are common to the three methods we used to identify the factors affecting employee turnover. Managers should pay more attention to these three factors to better control the rate of employee turnover.
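
The models are compared with the F1-score, the harmonic mean of precision and recall for the positive ("left the company") class, equivalently 2TP / (2TP + FP + FN). It is a common choice when leavers are a minority, because plain accuracy can look high for a model that rarely predicts departures. The Python sketch below computes it directly; the labels and the two prediction vectors are hypothetical and merely illustrate comparing an all-features model with a selected-features model. scikit-learn's `sklearn.metrics.f1_score` gives the same value for binary labels with the default `pos_label=1`.

```python
def f1_score(y_true, y_pred, positive=1):
    """F1 = 2 * precision * recall / (precision + recall) for the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical test labels (1 = left) and predictions from two hypothetical models.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
pred_all_features = [1, 0, 0, 0, 0, 1, 1, 0]
pred_selected_features = [1, 0, 1, 0, 0, 0, 1, 0]

for name, pred in [("all features", pred_all_features),
                   ("selected features", pred_selected_features)]:
    print(f"{name}: F1 = {f1_score(y_true, pred):.3f}")
```

Here the first model finds 2 of the 4 leavers with one false alarm (F1 = 4/7), while the second finds 3 of 4 with none (F1 = 6/7), so the second would be preferred.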

This approach can help managers and organizations build a data-based vision of the future and make the best decisions when planning for their employees. Not every company may be able to collect and record such a dataset and derive these results, so this research can help managers promote a data-driven decision approach in their organizations, especially in human resource management, and reduce the costs of poor decisions. In this way, managers can retain their knowledge workers and prevent unwanted employee turnover in their company. In other words, data mining approaches, Multi-Criteria Decision-Making techniques, and the above results can serve as a decision support tool. These approaches use the organization's historical data to inform future decisions and reduce the costs caused by mistakes. Data is increasingly viewed as a corporate asset that can be used to make informed business decisions, improve marketing activities, optimize business operations, and reduce costs, with the goal of increasing revenue, profit, and organizational productivity.

Most previous articles that used data to identify these factors were limited to charts and graphs. We have used other approaches, such as Multi-Criteria Decision-Making techniques, feature selection algorithms, and Meta-heuristic algorithms. However, this research also has limitations. The Meta-heuristic algorithms used in this article do not show the priority of the selected features, and future researchers are encouraged to address this. In addition, only two Meta-heuristic algorithms are used in this article, and researchers are encouraged to extend this set. We also encourage researchers to increase the accuracy of this model and to add more factors.
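
To make the Meta-heuristic side of these limitations concrete, the Python sketch below shows the canonical continuous Gray Wolf Optimizer update (Mirjalili et al. 2014): the pack is guided by the three best positions found so far (alpha, beta, delta), and the coefficient a shrinks linearly from 2 to 0 to shift from exploration to exploitation. It minimizes a hypothetical test function; the binary variant needed for feature selection (for example mapping positions to 0/1 masks through a transfer function) is not shown and would be an additional assumption.

```python
import random


def grey_wolf_optimizer(objective, dim, lower, upper, n_wolves=20,
                        iterations=100, seed=0):
    """Minimize `objective` over the box [lower, upper]^dim with the canonical GWO update."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(n_wolves)]
    leaders = []  # alpha, beta, delta: the three best positions seen so far
    for t in range(iterations):
        leaders = sorted(wolves + leaders, key=objective)[:3]
        a = 2 - 2 * t / iterations  # decreases linearly from 2 towards 0
        new_wolves = []
        for wolf in wolves:
            position = []
            for d in range(dim):
                moves = []
                for leader in leaders:
                    A = 2 * a * rng.random() - a
                    C = 2 * rng.random()
                    D = abs(C * leader[d] - wolf[d])
                    moves.append(leader[d] - A * D)
                # Average the alpha-, beta- and delta-guided moves, then clamp to the box.
                position.append(min(max(sum(moves) / 3, lower), upper))
            new_wolves.append(position)
        wolves = new_wolves
    return min(wolves + leaders, key=objective)


def sphere(x):
    """Hypothetical test objective with its minimum 0 at the origin."""
    return sum(v * v for v in x)


best = grey_wolf_optimizer(sphere, dim=3, lower=-10.0, upper=10.0)
print(best, sphere(best))
```

As with the Genetic Algorithm, the optimizer returns a single best position rather than a ranking, which matches the limitation noted above that these methods do not prioritize the selected features.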

In this article, the prediction algorithms are not optimized, so we suggest also examining the results after optimizing them. Also, when using the Best-Worst Method, our expert selected 9 of the features and then evaluated and ranked them; we suggest first clustering all the features into a number of groups. For future research, we propose using deep learning models such as Convolutional Neural Networks and Artificial Neural Networks to predict the employee turnover rate from these features, and these results could also be optimized with Meta-heuristic algorithms. Researchers are also advised to use mathematical modeling to minimize the employee turnover rate, for example by predicting the turnover rate and using it to optimize, locate, and allocate human resources. Finally, the features could also be identified using fuzzy logic.

References

Aksu A (2008) Chapter 10 - Employee turnover: calculation of turnover rates and costs. In: Tesone DV (ed) Handbook of Hospitality Human Resources Management. Butterworth-Heinemann, Oxford, pp 195–222

Arora Y, Sikka S (2023) Reviewing fake news classification algorithms. In: Goyal D, Kumar A, Piuri V, Paprzycki M (eds) Proceedings of the Third International Conference on Information Management and Machine Intelligence. Algorithms for Intelligent Systems. Springer, Singapore. https://doi.org/10.1007/978-981-19-2065-3_46

Chou Y-C, Yen H-Y, Dang V, Sun CC (2019) Assessing the human resource in science and Technology for Asian Countries: application of fuzzy AHP and fuzzy TOPSIS. Symmetry 11:251. https://doi.org/10.3390/sym11020251

Dotoli M, Epicoco N, Falagario M (2020) Multi-criteria decision making techniques for the management of public procurement tenders: A case study. Appl Soft Comput 88:106064

Esangbedo MO, Bai S, Mirjalili S, Wang Z (2021) Evaluation of human resource information systems using grey ordinal pairwise comparison MCDM methods. Expert Syst Appl 182:115151. https://doi.org/10.1016/j.eswa.2021.115151

Esmaieeli Sikaroudi A, Ghousi R, Sikaroudi A (2015) A data mining approach to employee turnover prediction (case study: Arak automotive parts manufacturing). J Indust Syst Eng 8:108–121

Gupta H (2018) Assessing organizations performance on the basis of GHRM practices using BWM and fuzzy TOPSIS. J Environ Manag 226:201–216. https://doi.org/10.1016/j.jenvman.2018.08.005

Gupta H, Barua MK (2018) A framework to overcome barriers to green innovation in SMEs using BWM and fuzzy TOPSIS. Sci Total Environ 633:122–139

Han JW (2020) A review of antecedents of employee turnover in the hospitality industry on individual, team and organizational levels. Int Hosp Rev (ahead of print). https://doi.org/10.1108/IHR-09-2020-0050

Kim J, Dibrell C, Kraft E, Marshall D (2021) Data analytics and performance: the moderating role of intuition-based HR management in major league baseball. J Bus Res 122:204–216. https://doi.org/10.1016/j.jbusres.2020.08.057

Kumar A, Mangla SK, Luthra S, Ishizaka A (2019) Evaluating the human resource related soft dimensions in green supply chain management implementation. Prod Plan Control 30(9):699–715. https://doi.org/10.1080/09537287.2018.1555342

Liu P, Zhu B, Wang P (2021) A weighting model based on best–worst method and its application for environmental performance evaluation. Appl Soft Comput 103:107168. https://doi.org/10.1016/j.asoc.2021.107168

Lyons P, Bandura R (2020) Employee turnover: features and perspectives. Dev Learn Organ Int J 34(1):1–4. https://doi.org/10.1108/DLO-02-2019-0048

Mammadova M, Jabrayilova Z (2014) Application of fuzzy optimization method in decision-making for personnel selection. Intell Control Autom 5:190–204. https://doi.org/10.4236/ica.2014.54021

Mamoudan M, Forouzanfar D, Mohammadnazari Z, Aghsami A, Jolai F (2021) Factor identification for insurance pricing mechanism using data mining and multi criteria decision making. J Ambient Intell Humaniz Comput. https://doi.org/10.1007/s12652-021-03585-z

Margherita A (2022) Human resources analytics: A systematization of research topics and directions for future research. Hum Resour Manag Rev 32(2):100795. https://doi.org/10.1016/j.hrmr.2020.100795

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Nagpal T, Mishra M (2021) Analyzing human resource practices for decision making in banking sector using HR analytics. Mater Today Proc. https://doi.org/10.1016/j.matpr.2020.12.460

Qi L, Yao K (2021) Artificial intelligence enterprise human resource management system based on FPGA high performance computer hardware. Microprocess Microsyst 82:103876. https://doi.org/10.1016/j.micpro.2021.103876

Ren J (2018) Technology selection for ballast water treatment by multi-stakeholders: a multi-attribute decision analysis approach based on the combined weights and extension theory. Chemosphere 191:747–760

Rezaei J (2015) Best-worst multi-criteria decision-making method. Omega 53:49–57

Rijamampianina R (2015) Employee turnover rate and organizational performance in South Africa. Probl Perspect Manag 13:240–253

Salimi N, Rezaei J (2018) Evaluating firms’ R&D performance using best worst method. Evaluation Program Plan 66:147–155

Shoji Y, Kim H, Kubo T, Tsuge T, Aikoh T, Kuriyama K (2021) Understanding preferences for pricing policies in Japan’s national parks using the best–worst scaling method. J Nat Conserv 60:125954. https://doi.org/10.1016/j.jnc.2021.125954