Abstract

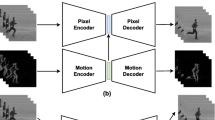

Accurate video prediction is critical for human drivers and self-driving cars to make informed decisions. To overcome the limitations of existing deep learning-based video prediction methods in driving scenes, such as inaccurate relative position prediction, high latency, and blurred multi-frame image prediction, we propose a Memory Differential Motion Network (MDMNet) model with a convolutional auto-encoder and a spatiotemporal information learning module, based on an improved Memory In Memory Network (MIM). One prominent characteristic of this newly constructed model is that in each time step, it can learn richer spatiotemporal representation features in each frame, and each layer computes the attention weight of the global spatiotemporal information collected, recalling and focusing on the crucial component. First, inspired by the notion of inter-frame differentiation, the network model incorporates motion filters and differential operations inside the unit, which makes it easier to extract the complex relative motion changes and trends of multiple objects in the scene. Second, an innovative global attention module between pictures and states is implemented to ensure global motion information integrity. Finally, to improve the quality of generated pictures, an adaptive convolutional auto-encoder is created. The MDMNet model outperforms other baseline prediction models in Mean Squared Error (MSE), Mean Absolute Error (MAE), Structural Similarity (SSIM), and Peak Signal to Noise Ratio (PSNR) when tested on D2-city and BDD100K datasets.

Similar content being viewed by others

References

Lu W, Cui J, Chang YS, Zhang L (2021) A video prediction method based on optical flow estimation and pixel generation. IEEE Access 9:100395–100406

Brand F, Seiler J, Kaup A (2020) Intra-frame coding using a conditional autoencoder. IEEE Journal of Selected Topics in Signal Processing 15:354–365

Chen L, Guan Q, Feng B, Yue H, Wang J, Zhang F (2019) A multi-convolutional autoencoder approach to multivariate geochemical anomaly recognition. Minerals 9:270–270

Arif S, Wang J, Hassan T, Fei Z (2019) 3D-CNN-based fused feature maps with lstm applied to action recognition. Future Internet 11:1–17

Horn B, Schunck B (1981) Determining optical flow. Artif Intell 17:185–203

Wren C, Azarbayejani A (1997) Pfinder: real-time tracking of the human body. IEEE Trans Pattern Anal Mach Intell 19:780–785

Shi X, Chen Z, Wang H, Yeung D, Wong W, Woo W (2015) Convolutional lstm network: a machine learning approach for precipitation nowcasting. Adv Neural Inf Proces Syst 1:802–810

Wang Y, Long M, Wang J, Gao Z, Yu P (2017) PredRNN: recurrent neural networks for predictive learning using spatiotemporal lstms. Adv Neural Inf Proces Syst 30:879–888

Wang Y, Gao Z, Long M, Wang J, Yu PS (2018) PredRNN++:towards a resolution of the deep-in-time dilemma in spatiotemporal predictive learning. In: Proceedings of the 35thInternational Conference On International Conference Onmachine Learning (ICML), pp 5110–5119

Wang Y, Zhang J, Zhu H, Long M, Wang J, Yu PS (2019) Memory in memory: a predictive neural network for learning higher-order non-stationarity from spatiotemporal dynamics. In: 2019 IEEE/CVF Conference On Computer Vision and Pattern Recognition (CVPR), pp 9146–9154

Lotter W, Kreiman G, Cox D (2017) Deep predictive coding networks for video prediction and unsupervised learning. In: 5th International Conference On Learning Representations(ICLR), pp 1–19

Wang Y, Jiang L, Yang M-H, Li L-J, Long M, Fei-Fei L (2018) Eidetic 3D LSTM: A model for video prediction and beyond. In: International Conference On Learning Representations (ICLR), pp 1–14

Lin Z, Li M, Zheng Z, Cheng Y, Yuan C (2020) Self-attention ConvLSTM for spatiotemporal prediction. Proceedings of the AAAI Conference on Artificial Intelligence 34:11531–11538

Wu H, Yao Z, Wang J, Long M (2021) MotionRNN: A flexible model for video prediction with spacetime-varying motions. In: 2021 IEEE/CVF Conference On Computer Vision and Pattern Recognition (CVPR), pp 15430–15439

Wang S, Zhao P, Yu B, Huang W, Liang H (2020) Vehicle trajectory prediction by knowledge-driven lstm network in urban environments. J Adv Transp 2020:1–20

Wolfe B, Fridman L, Kosovicheva A, Seppelt B, Mehler B, Reimer B, Rosenholtz R (2019) Predicting road scenes from brief views of driving video. J Vis 19(5):8–8

Jeong Y, Yi K (2020) Bidirectional long shot-term memory-based interactive motion prediction of cut-in vehicles in urban environments. IEEE Access 8:106183–106197

Xue J, Fang J, Zhang P (2018) A survey of scene understanding by event reasoning in autonomous driving. Int J Autom Comput 15:249–266

Li S, Fang J, Xu H, Xue J (2020) Video frame prediction by deep multi-branch mask network. IEEE Transactions on Circuits and Systems for Video Technology 31:1283–1295

Yuan M, Dai Q (2021) A novel deep pixel restoration video prediction algorithm integrating attention mechanism. Appl Intell 52:5015–5033

Jing B, Ding H, Yang Z, Li B, Bao L (2021) Video prediction: a step-by-step improvement of a video synthesis network. Appl Intell 52:3640–3652

Lee S, Kim HG, Hwi Choi D, Kim H-I, Ro YM (2021) Video prediction recalling long-term motion context via memory alignment learning. In: 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 3053–3062

Fang Y, Zhang C, Min X, Huang H, Yi Y, Zhai G, Lin C-W (2020) DevsNet: deep video saliency network using short-term and long-term cues. Pattern Recogn 103:107294

Xu J, Ni B, Yang X (2021) Progressive multi-granularity analysis for video prediction. Int J Comput Vis 129:601–618

Meng X, Jia C, Zhang X, Wang S, Ma S (2021) Spatio-temporal correlation guided geometric partitioning for versatile video coding. IEEE Trans Image Process 31:30–42

Chen D, Wang P, Yue L, Zhang Y, Jia T (2020) Anomaly detection in surveillance video based on bidirectional prediction. Image Vis Comput 98:103915

Ryoo MS (2011) Human activity prediction: early recognition of ongoing activities from streaming videos. In: 2011 international conference on computer vision, pp 1036–1043

Vu T-H, Olsson C, Laptev I, Oliva A, Sivic J (2014) Predicting actions from static scenes. In: European Conference on Computer Vision. Springer, pp 421–436

Huang D-A, Kitani KM (2014) Action-reaction: forecasting the dynamics of human interaction. In: European conference on computer vision. Springer, pp 489–504

Pickup LC, Zheng P, Wei D, Shih YC, Freeman WT (2014) Seeing the arrow of time. In: 2014 IEEE conference on computer vision and pattern recognition, pp 2043–2050

Lampert CH (2015) Predicting the future behavior of a time-varying probability distribution. In: 2015 IEEE Conference On Computer Vision and Pattern Recognition (CVPR), pp 942–950

Pintea SL, van Gemert JC, Smeulders AWM (2014) Deja vu: Motion prediction in static images. In: European conference on computer vision. Springer, pp 172–187

Vondrick C, Pirsiavash H, Torralba A (2016) Anticipating visual representations from unlabeled video. In: 2016 IEEE Conference On Computer Vision and Pattern Recognition (CVPR), pp 98–106

Yan X, Chang H, Shan S, Chen X (2014) Modeling video dynamics with deep dynencoder. In: European conference on computer vision. Springer, pp 215–230

Liu Z, Yeh RA, Tang X, Liu Y, Agarwala A (2017) Video frame synthesis using deep voxel flow. In: 2017 IEEE International Conference On Computer Vision (ICCV), pp 4473–4481

Finn C, Goodfellow I, Levine S (2016) Unsupervised learning for physical interaction through video prediction. Adv Neural Inf Proces Syst 29:64–72

Kwon Y-H, Park M-G (2019) Predicting future frames using retrospective cycle GAN. In: 2019 IEEE/CVF Conference On Computer Vision and Pattern Recognition (CVPR), pp 1811–1820

Liang X, Lee L, Dai W, Xing EP (2017) Dual motion GAN for future-flow embedded video prediction. In: 2017 IEEE International Conference On Computer Vision (ICCV), pp 1762–1770

Jie H, Li S, Gang S, Albanie S (2017) Squeeze-and-excitation networks. IEEE Trans Pattern Anal Mach Intell 42(8):2011–2013

Fu J, Liu J, Tian H, Li Y, Bao Y, Fang Z, Lu H (2019) Dual attention network for scene segmentation. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 3141–3149

Alom M, Yakopcic C, Hasan M, Taha T, Asari V (2019) Recurrent residual U-net for medical image segmentation. J Med Image 6(1):014006

Zhang H, Goodfellow I, Metaxas D, Odena A (2019) Self-attention generative adversarial networks. In: International conference on machine learning. PMLR, pp 7354–7363

Ji Y, Zhang H, Jie Z, Ma L, Wu Q (2021) CASNet: a cross-attention siamese network for video salient object detection. IEEE Trans Neural Netw Learn Syst 32(6):2676–2690

Fu H, Huang X (2011) Automatic detection and elimination of specular reflection components in leaf images. Computer Engineering and Design 32:87–89

Gu Z, Cheng J, Fu H, Zhou K, Hao H, Zhao Y, Zhang T, Gao S, Liu J (2019) CE-net: context encoder network for 2D medical image segmentation. IEEE Trans Med Imaging 38(10):2281–2292

Shen H, Cai Q (2009) Simple and efficient method for specularity removal in an image. Appl Opt 48(14):2711–2719

Xie P, Li X, Ji X, Chen X, Chen Y, Liu J, Ye Y (2020) An energy-based generative adversarial forecaster for radar echo map extrapolation. IEEE Geosci Remote Sens Lett 19:1–5

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, GomezAN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. Adv Neural Inf Proces Syst 30:5998–6008

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Li, C., Chen, X. Video prediction for driving scenes with a memory differential motion network model. Appl Intell 53, 4784–4800 (2023). https://doi.org/10.1007/s10489-022-03813-9

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-022-03813-9