Abstract

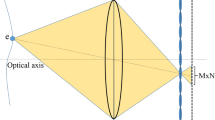

Light fields contain a wealth of information about real-world scenes and can be easily acquired by commercial light field cameras. However, these cameras face an inevitable trade-off between angular and spatial resolution. To mitigate this problem, this paper proposes an end-to-end light field angular super-resolution network that exploits structure and scene information. First, a Light Field ResBlock is proposed to explicitly extract epipolar plane image (EPI) features and scene features. The EPI features, which exhibit clear directionality, represent light field structure information, that is, the correlations among different views, such as intensity and depth consistency. The scene features express scene information: they depict local details and global information and describe the individual objects in a scene. Exploiting both structure and scene information captures the intrinsic characteristics of light fields. Then, 4D deconvolution is employed to upsample the angular resolution from the extracted structure and scene features. Using 4D deconvolution preserves the connections among the sparse input views and generates new views with high inter-view correlation. Finally, a refinement network that further exploits light field structure and scene information is employed to alleviate the artifacts caused by the scarce angular information available in the early stage of the model. Experiments on two different angular super-resolution tasks demonstrate that our method achieves the best angular resolution enhancement performance on different datasets.
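The abstract mentions EPIs and 4D deconvolution only at a high level. The sketch below is not the authors' network. It assumes a single-channel light field stored as a NumPy array indexed `lf[u, v, y, x]` (angular axes first) and illustrates two ideas: how horizontal and vertical EPIs are sliced out of such an array, and what a 4D deconvolution (transposed convolution) with angular stride 2 does to a sparse set of views. The helper names `horizontal_epi`, `vertical_epi`, and `deconv4d` are hypothetical. The fixed bilinear kernel is an illustrative stand-in for the learned kernels a network would use.

```python
import numpy as np


def horizontal_epi(lf: np.ndarray, u: int, y: int) -> np.ndarray:
    """Horizontal EPI: fix angular row u and spatial row y -> (V, X) slice."""
    return lf[u, :, y, :]


def vertical_epi(lf: np.ndarray, v: int, x: int) -> np.ndarray:
    """Vertical EPI: fix angular column v and spatial column x -> (U, Y) slice."""
    return lf[:, v, :, x]


def deconv4d(lf: np.ndarray, kernel: np.ndarray, stride=(2, 2, 1, 1)) -> np.ndarray:
    """Single-channel 4D transposed convolution (no padding, 'full' output).

    Each input sample lf[u, v, y, x] scatters kernel * lf[u, v, y, x] into the
    output, starting at position (u*su, v*sv, y*sy, x*sx).
    """
    U, V, Y, X = lf.shape
    Ku, Kv, Ky, Kx = kernel.shape
    su, sv, sy, sx = stride
    out = np.zeros(((U - 1) * su + Ku, (V - 1) * sv + Kv,
                    (Y - 1) * sy + Ky, (X - 1) * sx + Kx), dtype=np.float64)
    # Loop over kernel taps; each tap adds a scaled, strided copy of the input.
    for ku in range(Ku):
        for kv in range(Kv):
            for ky in range(Ky):
                for kx in range(Kx):
                    out[ku:ku + su * U:su,
                        kv:kv + sv * V:sv,
                        ky:ky + sy * Y:sy,
                        kx:kx + sx * X:sx] += kernel[ku, kv, ky, kx] * lf
    return out


# Toy sparse light field: 2x2 views of 4x5 pixels (random values, illustration only).
rng = np.random.default_rng(0)
sparse = rng.random((2, 2, 4, 5))

# Separable kernel: linear-interpolation weights along u and v, identity in space.
tri = np.array([0.5, 1.0, 0.5])
kernel = np.outer(tri, tri)[:, :, None, None]  # shape (3, 3, 1, 1)

full = deconv4d(sparse, kernel)  # shape (5, 5, 4, 5)
dense = full[1:4, 1:4]           # crop the angular border -> 3x3 views

print(dense.shape)                          # (3, 3, 4, 5)
print(horizontal_epi(sparse, 0, 2).shape)   # (2, 5)
print(vertical_epi(dense, 1, 3).shape)      # (3, 4)
```

With this fixed kernel, the four input views reappear at the corners of the 3×3 output and each new view is a linear blend of its angular neighbours (the centre view is the mean of all four). In a trained network the deconvolution weights would instead be learned from the extracted features and applied across many channels.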

Funding

This work was supported in part by the National Key Research and Development Program of China (2020YFB1711400) and the National Natural Science Foundation of China (52075485).

Ethics declarations

Competing interests

The authors declare that they have no known competing interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Yang, J., Wang, L., Ren, L. et al. Light field angular super-resolution based on structure and scene information. Appl Intell 53, 4767–4783 (2023). https://doi.org/10.1007/s10489-022-03759-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-022-03759-y