Abstract

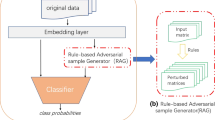

In this paper, we propose a method for detecting adversarial examples using a text modification module. The method modifies a sample by replacing a specific word with a similar word and detects an adversarial example from the resulting change in the classification result. It exploits the fact that an adversarial example is more sensitive to changes in specific words than the original sample is. Experiments were conducted on three datasets (AG’s News, a movie review dataset, and the IMDB Large Movie Review Dataset), with TensorFlow as the machine learning library. In these experiments, the proposed method detected an average of 71.7% of the adversarial sentences while limiting the change in the model’s results on the original sentences to an average of 2.9%.
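The detection rule described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the keyword-counting `toy_classifier`, the hand-written `SIMILAR` table, and the choice to try every word that has an entry in that table are all assumptions made for illustration. The paper itself uses trained models and a text modification module whose details are not reproduced here.

```python
from typing import Callable, Dict, List

Classifier = Callable[[List[str]], int]

# Hypothetical similar-word table, written by hand for this example only.
SIMILAR: Dict[str, str] = {
    "terrible": "awful",
    "dreadful": "awful",
    "great": "excellent",
}

# Vocabulary known to the stand-in model (assumption, not from the paper).
NEGATIVE = {"terrible", "awful", "bad"}
POSITIVE = {"great", "excellent", "good"}


def toy_classifier(tokens: List[str]) -> int:
    """Stand-in sentiment model: returns 1 (positive) or 0 (negative)."""
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    return 1 if score >= 0 else 0


def is_adversarial(
    tokens: List[str],
    classify: Classifier,
    similar: Dict[str, str] = SIMILAR,
) -> bool:
    """Flag the input if swapping one word for a similar word changes the label."""
    base = classify(tokens)
    for i, tok in enumerate(tokens):
        if tok in similar:
            # Replace only the word at position i and re-classify.
            modified = tokens[:i] + [similar[tok]] + tokens[i + 1:]
            if classify(modified) != base:
                return True
    return False


original = "the movie was terrible".split()
# "dreadful" is unknown to the toy model, so this sentence is misclassified.
adversarial = "the movie was dreadful".split()

print(is_adversarial(original, toy_classifier))     # False
print(is_adversarial(adversarial, toy_classifier))  # True
```

In this toy setting the original sentence keeps its label after modification, whereas the adversarial sentence flips back to the negative class. That asymmetry is the sensitivity difference the method relies on.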

Data Availability

The data used to support the findings of this study will be available from the corresponding author upon request after acceptance.

Acknowledgments

This study was supported by the Basic Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2021R1I1A1A01040308) and the Hwarang-Dae Research Institute of Korea Military Academy.

Ethics declarations

Conflict of Interests

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

In this appendix, we present five sample cases for each dataset (Figs. 13–18). As these cases show, the adversarial sentence, created by changing an important word in the original sentence to a similar word, is misclassified by the model, yet humans recognize it as belonging to the same class as the original sentence. However, after the text modification module changes an important word in the adversarial sentence, the model again classifies the modified sentence correctly.

Appendix B

In this appendix, we analyze the failed cases (counterexamples) for each dataset (Figs. 19–24); no specific patterns were identified. Three counterexamples are shown for each dataset.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kwon, H., Lee, S. Detecting textual adversarial examples through text modification on text classification systems. Appl Intell 53, 19161–19185 (2023). https://doi.org/10.1007/s10489-022-03313-w

DOI: https://doi.org/10.1007/s10489-022-03313-w