Abstract

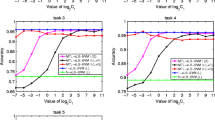

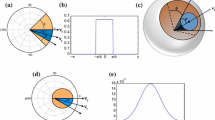

Multi-task learning (MTL) obtains a better classifier than single-task learning (STL) by sharing information between tasks within a multi-task model. Most existing multi-task learning models focus only on the data of the target tasks during training and ignore the non-target task data that may be contained in the target tasks. Such data, which do not belong to any of the indicated categories, are known as Universum data and can be added to classifier training as prior knowledge. In this paper, we address the problem of multi-task learning with Universum data, which improves the utilization of non-target task data. We introduce Universum learning so that non-target task data act as prior knowledge, and we propose a novel multi-task support vector machine with Universum data (U-MTLSVM). Based on the characteristics of MTL, each task has its own corresponding Universum data to provide prior knowledge. We then use the Lagrange method to solve the optimization problem and obtain the multi-task classifiers. Finally, we conduct experiments comparing the proposed method with several baselines on different data sets. The experimental results demonstrate the effectiveness of the proposed method for multi-task classification.


Notes

http://people.csail.mit.edu/jrennie/20Newsgroups/

http://www.daviddlewis.com/resources/testcollections/

http://www.cs.cmu.edu/afs/cs.cmu.edu/project/theo-20/www/data/webkb-data.gtar.gz

http://people.ee.duke.edu/~lcarin/LandmineData.zip


Acknowledgments

The authors would like to thank the reviewers for their very useful comments and suggestions. This work was supported in part by the Natural Science Foundation of China under Grants 61876044, 62076074, and 61672169, in part by the Guangdong Natural Science Foundation under Grants 2020A1515010670 and 2020A1515011501, and in part by the Science and Technology Planning Project of Guangzhou under Grant 202002030141.


Cite this article

Xiao, Y., Wen, J. & Liu, B. A new multi-task learning method with universum data. Appl Intell 51, 3421–3434 (2021). https://doi.org/10.1007/s10489-020-01954-3
