Abstract

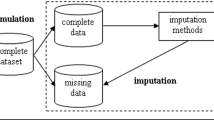

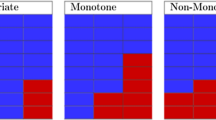

Imputation is a popular technique for handling missing data. We study nonparametric imputation using regularized M-estimation in a reproducing kernel Hilbert space. Specifically, we first use kernel ridge regression to develop an imputation method for handling item nonresponse. Although this nonparametric approach is promising for imputation, its statistical properties have not been investigated in the literature. Under some conditions on the order of the tuning parameter, we establish the root-n consistency of the kernel ridge regression imputation estimator and show that it achieves the lower bound of the semiparametric asymptotic variance. A nonparametric propensity score estimator using the reproducing kernel Hilbert space is also developed through a linear expression of the projection estimator. We show that the resulting propensity score estimator is asymptotically equivalent to the kernel ridge regression imputation estimator. Results from a limited simulation study are presented to confirm our theory. The proposed method is applied to analyze air pollution data measured in Beijing, China.
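To make the idea of kernel ridge regression imputation concrete, the following is a minimal sketch rather than the authors' implementation. It assumes a Gaussian kernel, a penalty of the form sum of squared residuals over respondents plus `lam` times the squared RKHS norm, and simulated data with response depending only on the covariate. The function names, the bandwidth, and the value of `lam` are illustrative choices, not taken from the paper.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth=1.0):
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b."""
    sq_dist = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-sq_dist / (2.0 * bandwidth ** 2))


def krr_imputation_mean(x, y, delta, lam=1e-2, bandwidth=1.0):
    """Estimate E[Y] by imputing missing outcomes with kernel ridge regression.

    x:     (n, d) covariates, observed for every unit
    y:     (n,) outcomes; entries with delta == 0 are never read
    delta: (n,) response indicators (1 = observed, 0 = missing)
    """
    obs = delta.astype(bool)
    k_rr = gaussian_kernel(x[obs], x[obs], bandwidth)
    # Representer form: m_hat(x) = sum_j alpha_j K(x, x_j) over respondents j.
    # Assumed scaling: minimize sum (y_j - m(x_j))^2 + lam * ||m||_H^2.
    alpha = np.linalg.solve(k_rr + lam * np.eye(int(obs.sum())), y[obs])
    m_hat = gaussian_kernel(x, x[obs], bandwidth) @ alpha
    # Keep observed outcomes; use fitted values for nonrespondents.
    y_imputed = np.where(obs, y, m_hat)
    return y_imputed.mean(), m_hat


# Simulated example (hypothetical data-generating process, true mean is 1).
rng = np.random.default_rng(0)
n = 500
x = rng.uniform(-1.0, 1.0, size=(n, 1))
y = np.sin(np.pi * x[:, 0]) + 1.0 + 0.3 * rng.standard_normal(n)
# Response probability depends on x only (missing at random).
pi = 1.0 / (1.0 + np.exp(-(0.5 + x[:, 0])))
delta = rng.binomial(1, pi)
y_obs = np.where(delta == 1, y, np.nan)  # hide the missing outcomes

theta_hat, m_hat = krr_imputation_mean(x, y_obs, delta, lam=0.1, bandwidth=0.3)
print(f"imputation estimate of the mean: {theta_hat:.3f}")
```

In practice the tuning parameter and bandwidth would be chosen by a data-driven rule such as cross-validation; the fixed values here only keep the sketch short.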

Acknowledgments

The authors thank the associate editor and two anonymous referees for very constructive comments. The research of the first author is supported by the Fujian Provincial Department of Education (JAT210059). The research of the second author is partially supported by a grant from the National Science Foundation (OAC-1931380) and a grant from the Iowa Agriculture and Home Economics Experiment Station, Ames, Iowa.

Appendix

This Appendix contains the technical proof of Theorem 1 and a proof sketch for (14).

A Proof for Theorem 1

To prove our main theorem, we write

Therefore, as long as we show

then the main theorem automatically holds.

To show (10), note that

where \(\widehat{{\varvec{m}}} = (\widehat{m}(\varvec{x}_{1}), \ldots , \widehat{m}(\varvec{x}_{n}))^\textrm{T}\). Letting \(\varvec{S}_{\lambda } = ( \varvec{I}_{n} + \lambda \textbf{K}^{-1} )^{-1}\), we have

where

By Lemma 2, we obtain

where \(\varvec{\Pi }= \text{ diag }(\pi (\varvec{x}_{1}), \ldots , \pi (\varvec{x}_{n}))\) and \(\gamma (\lambda )\) is the effective dimension of kernel K. Similarly, we have

Now, writing

and using (19) and

by Taylor expansion, we have

where the last equality holds because

Therefore, we have

For a Sobolev space of order \(\ell \), we have

Additionally,

which implies that, as long as \(n\lambda \rightarrow 0\) and \(n\lambda ^{1/2\ell }\rightarrow \infty \), we have \(n^{-1}\varvec{1}_{n}^\textrm{T}\varvec{a}_{n} = o_{p}(n^{-1/2})\) and (10) is established. \(\square \)
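As a side remark on the smoother matrix \(\varvec{S}_{\lambda }\) defined in the proof above, the following short derivation shows an equivalent form that avoids \(\textbf{K}^{-1}\). It assumes only that \(\textbf{K}\) is symmetric and positive definite (which the definition already requires) and that \(\lambda > 0\); the eigenvalue notation \(\mu _{j}\) is introduced here for illustration.

```latex
\begin{align*}
\varvec{S}_{\lambda}
  &= ( \varvec{I}_{n} + \lambda \textbf{K}^{-1} )^{-1}
   = \{ \textbf{K}^{-1} ( \textbf{K} + \lambda \varvec{I}_{n} ) \}^{-1}
   = ( \textbf{K} + \lambda \varvec{I}_{n} )^{-1} \textbf{K}
   = \textbf{K} ( \textbf{K} + \lambda \varvec{I}_{n} )^{-1} .
\end{align*}
% The last equality holds because K and (K + \lambda I_n)^{-1} commute.
% If K has eigenvalues \mu_1, \ldots, \mu_n > 0, then S_\lambda has
% eigenvalues \mu_j / (\mu_j + \lambda), each lying in (0, 1).
```

This is the familiar kernel ridge regression hat-matrix form, and it shows that \(\varvec{S}_{\lambda }\) shrinks each eigen-direction of \(\textbf{K}\) by a factor strictly between 0 and 1.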

B Justification of equation (14)

Note that

where the third equality holds by Lemma 2. \(\square \)

About this article

Cite this article

Wang, H., Kim, J.K. Statistical inference using regularized M-estimation in the reproducing kernel Hilbert space for handling missing data. Ann Inst Stat Math 75, 911–929 (2023). https://doi.org/10.1007/s10463-023-00872-8
